COMS4111 Intro to Databases

- Week 1 - Logistics and Intro

- Week 2

- Week 3

- SQL Queries

- Advanced SQL Constraints

- Advanced SQL Queries

- Security and Authorization

- Database Application Development

- Triggers - Server Programming

- Normal Forms

- Final

- Beyond CS4111

- Useful Tricks

Week 1 - Logistics and Intro

- Course Website http://www.cs.columbia.edu/~biliris/4111/21s/index.html

- Exams are open book

Introduction

Some terms to know:

Definitions:

- Database

- a very large, integrated (relational) collection of shared data

- DBMS - database management system

- software that can manage database systems, with the advantage of reducing application development time, concurrent accesses, recovery from crashes, etc.

- Data Models

- a collection of concepts/structure for describing the data

- for example, is it a graph (social network data)? A tree? A table?

- Schema

- a description of a particular collection of data, using the data model

- It defines how the data is organized and how the relations among them are associated. It formulates all the constraints that are to be applied on the data.

- Relational Data Model

- basically a set of inter-related tables containing your data

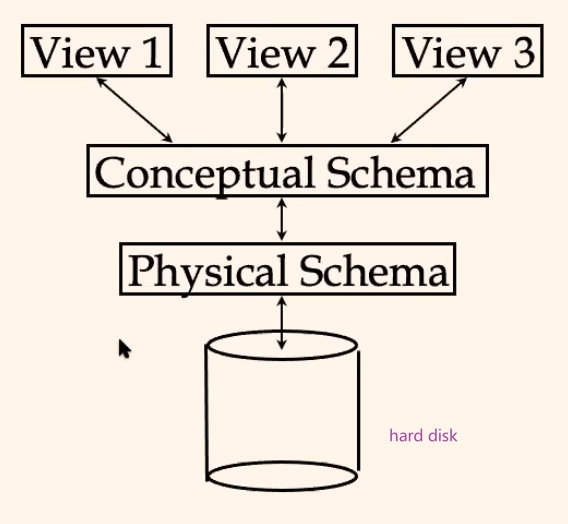

The levels of abstraction used in this course:

where:

- Physical Database Schema − This schema pertains to the actual storage of data and its form of storage like files, indices, etc. It defines how the data will be stored in a secondary storage.

- Conceptual/Logical Database Schema − This schema defines all the logical constraints that need to be applied on the data stored. It defines tables, views, and integrity constraints.

For example:

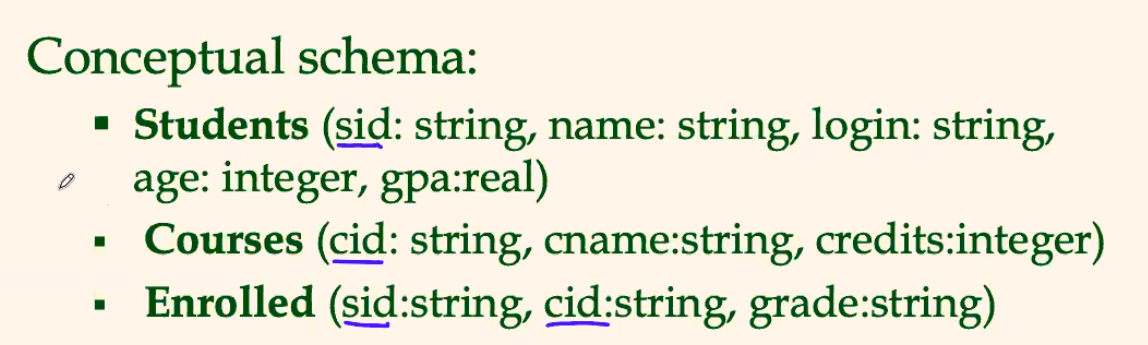

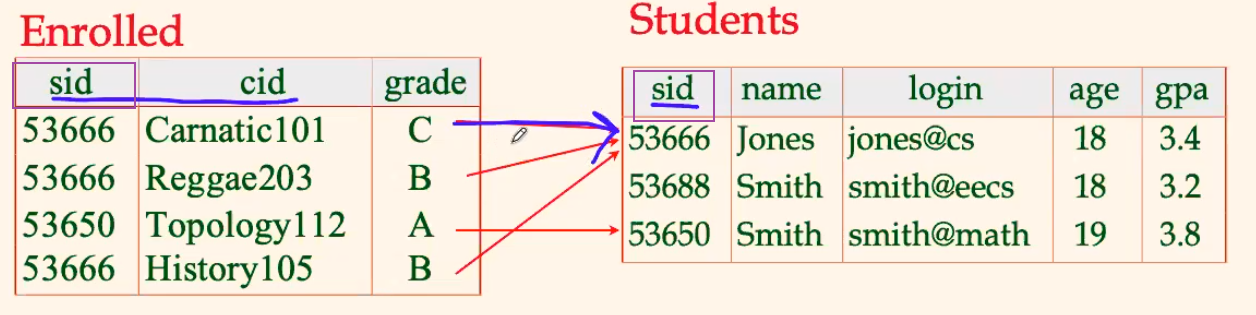

Consider a simple university database:

where:

- the Enrolled table connects the Students and Courses table

- so the conceptual schema tells you the organization of the table

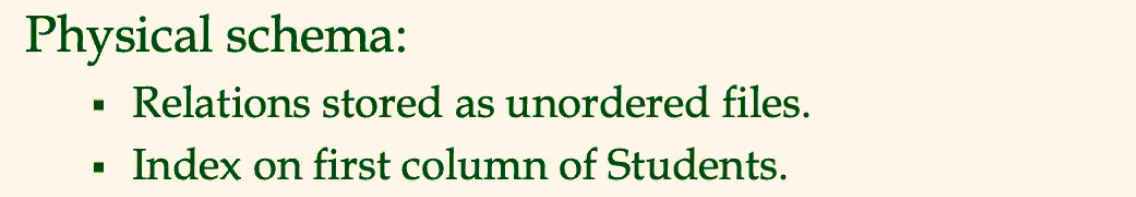

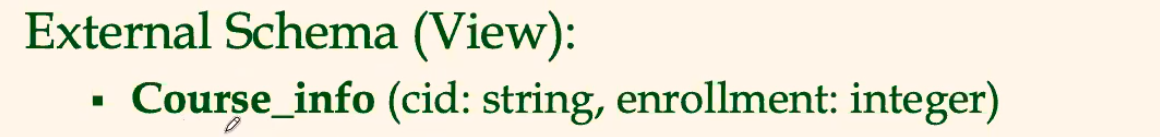

and:

where:

- this would be a computed table based on the actual tables we have (defined in the conceptual schema)

Definition: Data Independence

- From the above, it can be implied that we need our application to be insulated from how the data is structured, so that the structure/organization should be intact

- logical data independence - protection from changes in logical structure of data

- physical data independence - protection from changes in physical structure of data

Definition: Transactions in Database

- Transactions (a query/insert/… in database) is either committed or aborted (all-or-none)

- This means it is important for having a concurrency control

- Lock based protocol

- Time-stamp protocol

- Validation based protocol

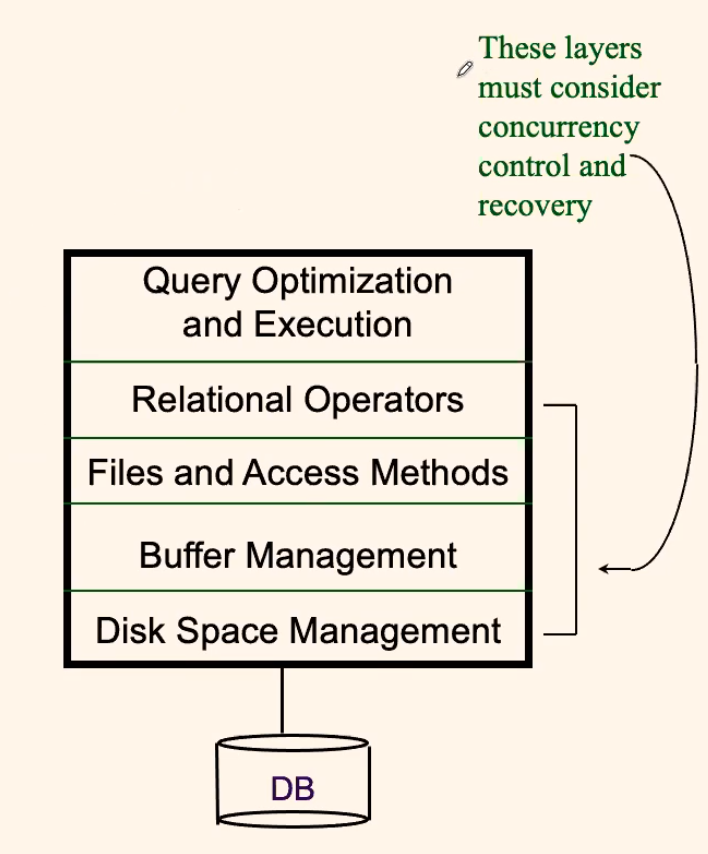

This means that we will have the following structure for a DBMS:

Overview of Database Design

Conceptual Design (using ER Model)

- What are the entities and relationships in the enterprise?

- What are the integrity constraints or business rules that hold?

- Can map an ER diagram into a relational schema?

Physical Database Design and Tuning

- Considering typical workloads and further refine the database design

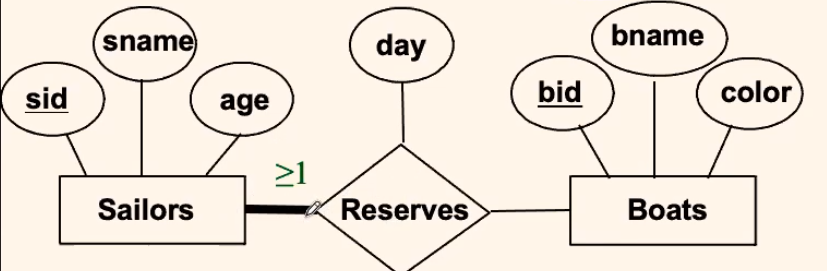

ER Diagram Basics

What is an ER Model? Why do we need it?

- in short, it provides an abstract way of understanding the model without touching the data

Definition

- Entity

- real-world object, described using a set of attributes

- Entity Set

- a collection of entities that have the same attribute fields

- each entity set has a key (could be a composite key as well)

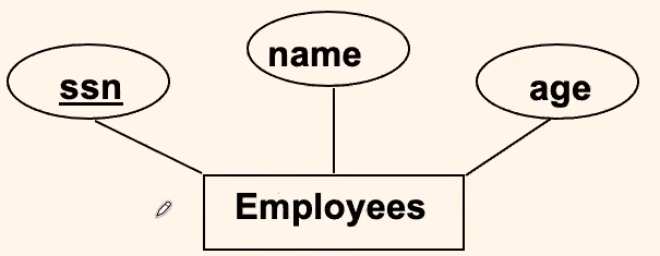

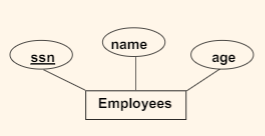

For example:

where:

- employee would be an entity

-

employees would be a table containing the entity employee

- the primary key would be the ssn

Definition

- Relationship

- Association among two or more entities.

- Relationship Set

- A collection of similar relationships

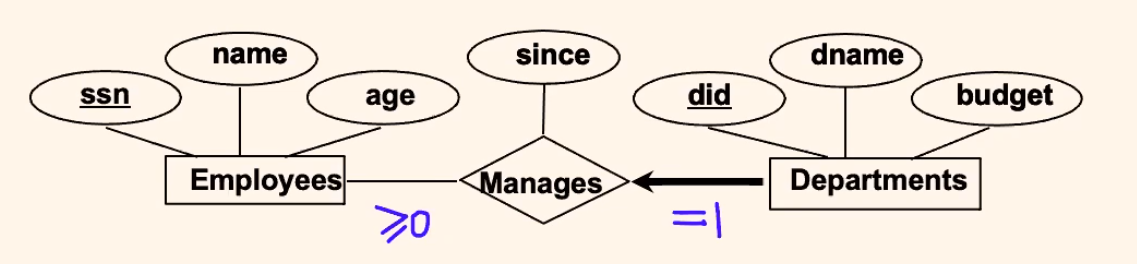

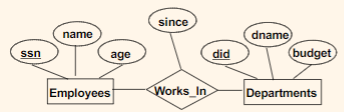

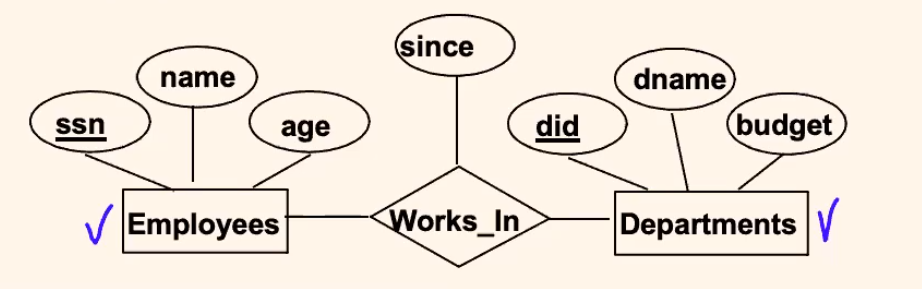

For example:

where:

- Entity would be in square

- Relationship would be in a diamond

- Attributes would be in circle

Key and Participation Constraints

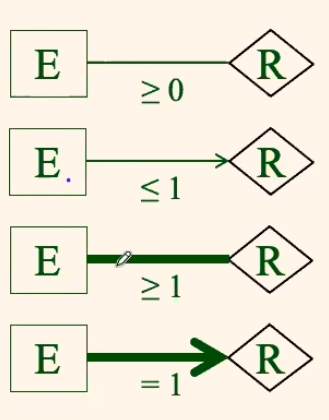

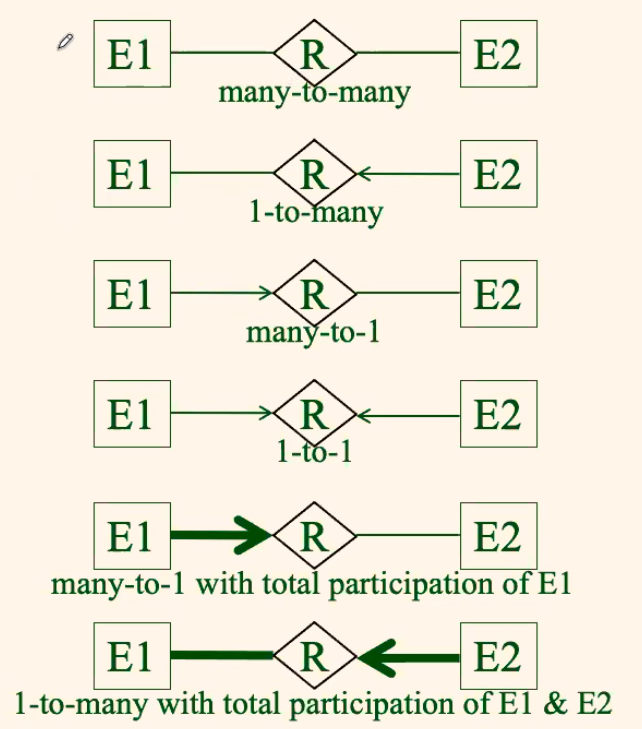

Before going to the details, first we talk about some notations:

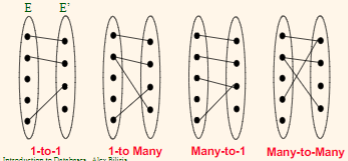

The following relations are possible between one entity E another entity E’:

and in a more abstracted way:

where:

- an arrow with head means upper-bounded with the 1-1 relationship

- a bold line means a strong requirement that each entity must have a relation

For Example:

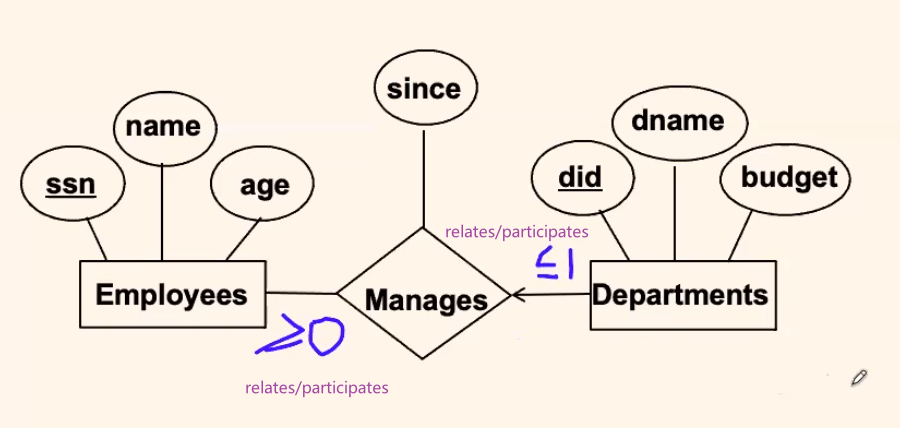

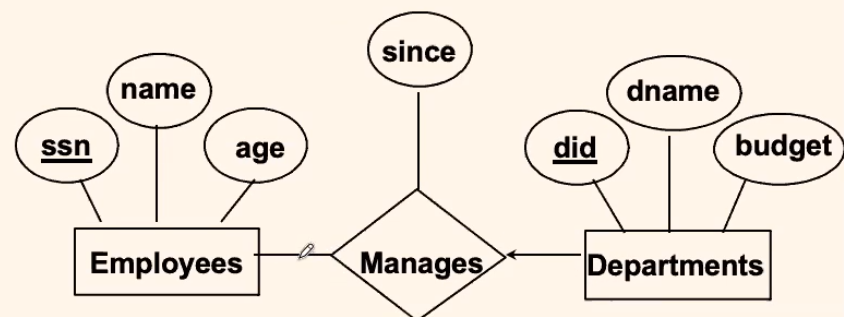

Now, we can go to key and participation constraints:

Key Constraint

the above diagram actually is a key constraint:

where:

- since each department can participate at most one time in the relationship, each department has most one manager (or zero manager) = key constraint

- each employee can manage zero or more departments

Participation Constraint:

where we see it means:

- Each department must have a manager, because it participates exactly one = participation constraint

- The participation constraint of Departments in Manages is said to be total (vs. partial).

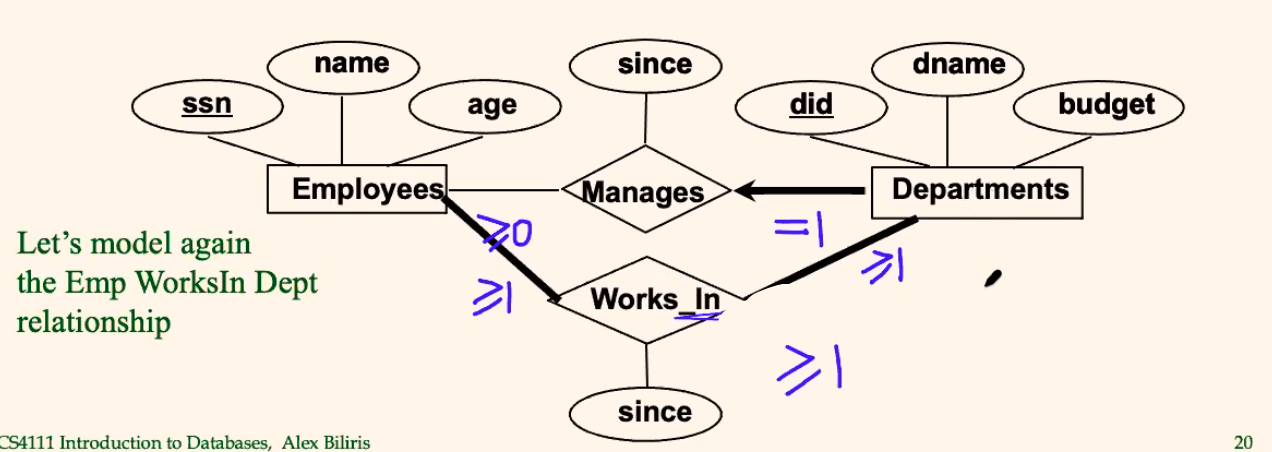

For example:

Consider a more complicated case:

where, for the works_in relationship:

- each Department participates at least once, meaning each department has at least one employee

- each Employee works in at least one Department

Some summaries:

Weak Entities

Definition

- A weak entity can be identified uniquely only by considering the primary key of another (owner) entity.

- so that the weak entity does not “exist” automatically if the owner is deleted

- Owner entity set and weak entity set must participate in a one-to-one or one-to–many relationship set (one owner, one or many weak entities; each weak entity has a single owner).

- Weak entity set must have total participation in this identifying relationship set.

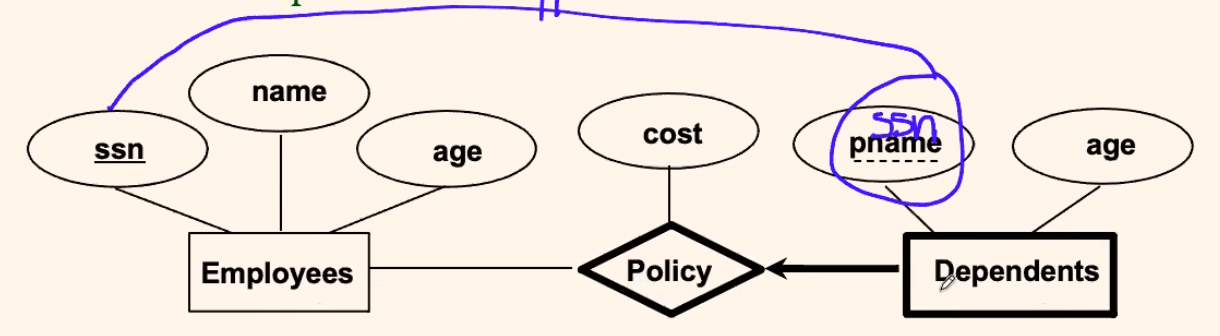

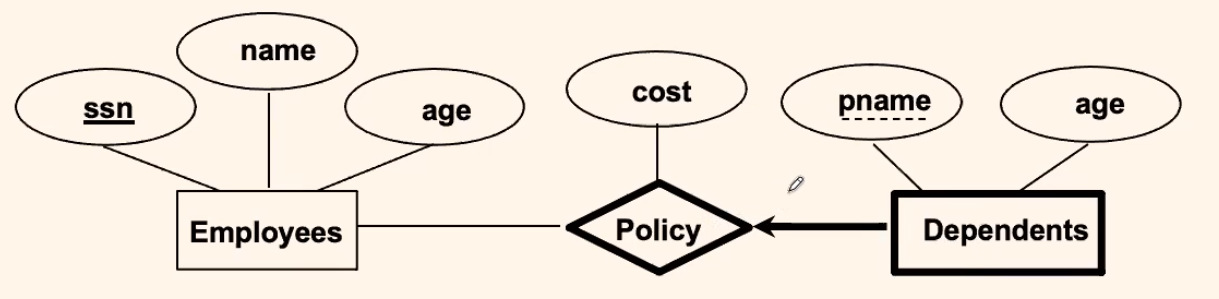

For example:

where:

- the entity Dependent (weak entity) depends completely on the existence of an Employee

- once a Policy/Employee is deleted, the Dependents of that policy/employee will automatically be disregarded

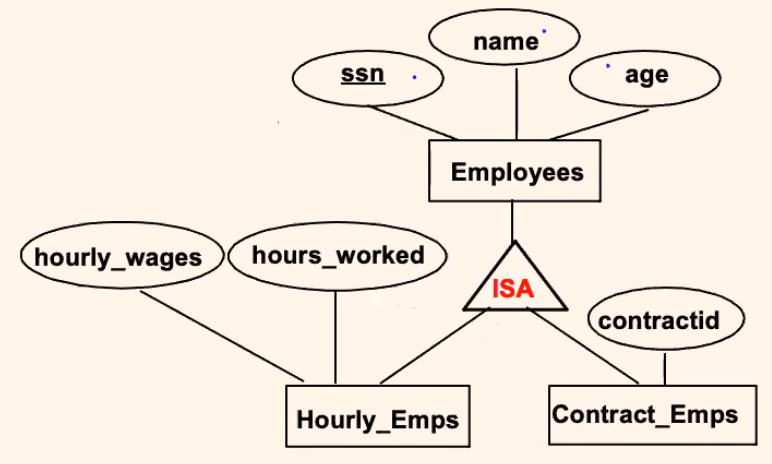

“Is A” Hierarchy

This is basically the same concept in OOP programming, in the context that:

- children inherits the attributes of the parent

However, if using this design, you need to consider the following:

Overlap constraints:

- Can Joe be an

Hourly_Empsas well as aContract_Empsentity? (Allowed/disallowed)

Covering constraints:

- Does every

Employeesentity also have to be anHourly_Empsor aContract_Empsentity?(Yes/no)

Reasons for using ISA:

- To add descriptive attributes specific to a subclass easily.

- e.g. easily look up an

Hourly_Empinformation (name) in theEmployeetable

- e.g. easily look up an

- To identify entities that participate in a relationship.

Week 2

ER Diagram Continued

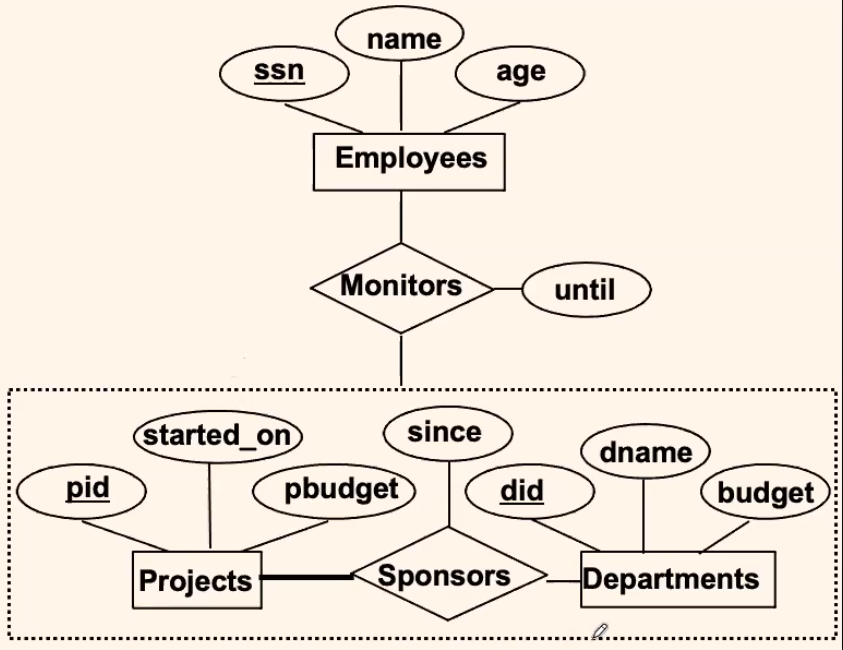

Aggregation

General Constraint:

- Usually, you should not relate relationships, you should only relate entities.

But what if you kind of need to?

For Example:

where:

- we want the

Employeeto monitor not only the project, but also how the budget flow in this sponsorship. This means theEmployeemonitorspbudget+since+budget- Practically, you could just have an aggregate entity consists of

pid,did,since

- Practically, you could just have an aggregate entity consists of

- so what we do is to view/aggregate the entire

Project+Sponsors+Departmentas one entity

Definition: Aggregation

- Allows us to treat a relationship set as an entity set for purposes of participation in (other) relationships.

Conceptual Design Using ER Model

Design choices:

- Should a concept be modeled as an entity or an attribute or a relationship?

- Identifying relationships: Binary or ternary? Aggregation?

Constraints in the ER Model:

- A lot of data semantics can (and should) be captured.

- But some constraints cannot be captured in ER diagrams.

Entity vs. Attribute

For Example: Design Choice

Should address be an attribute of Employees or an entity (connected to Employees by a relationship)?

Ans: depends upon the use we want to make of address information, and the semantics of the data:

- If we have several addresses per employee,

addressmust be an entity - If the structure/components of it (city, street, etc.) is important, e.g., we want to retrieve employees in a given city, address must be modeled as an entity (since attribute values are atomic).

- If all you need it to lookup/retrieve its entirety, then an attribute would suffice

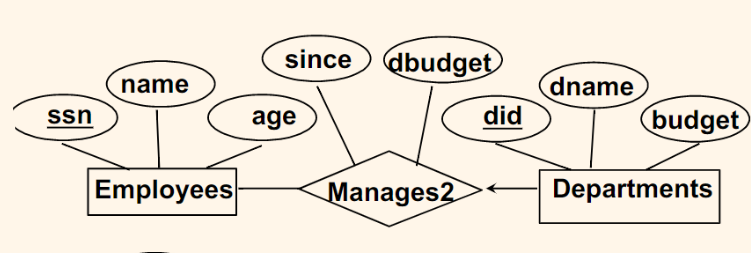

Entity vs. Relationship

For Example

Consider the case: A manager gets a separate discretionary budget for each dept

- First ER Diagram

- works

Note

- the relational attribute

dbudget(discretionary budget) depends on both theEmployeeand theDepartment. Hence, it is not an attribute ofDepartment(e.g. a department might not have a manager, then there will be nobudget)

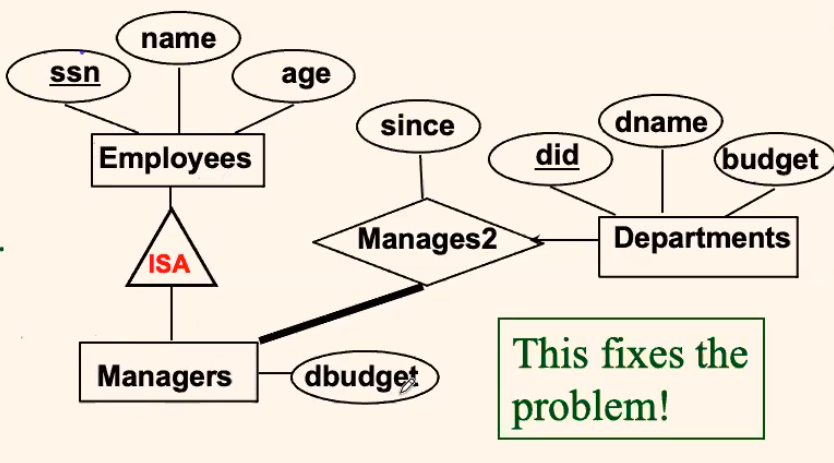

Consider the case: A manager gets a discretionary budget that covers all his/her managed depts

- then, the above diagram is bad, because:

- Redundancy:

dbudgetstored for each dept managed by manager. - Misleading: Suggests

dbudgetassociated with department-mgr combination.

- Redundancy:

- In short, the

dbugetdepends on only theEmployeewho manages at least one departments

where:

- the constraint above is: each

Managermanages at least oneDepartment. Hence, we have a thick line.

Binary vs. Ternary Relationship

In most of the time

- A relationship would be no more than ternary, unless it IS EXTREMELY complicated

For Example: Binary Relationship

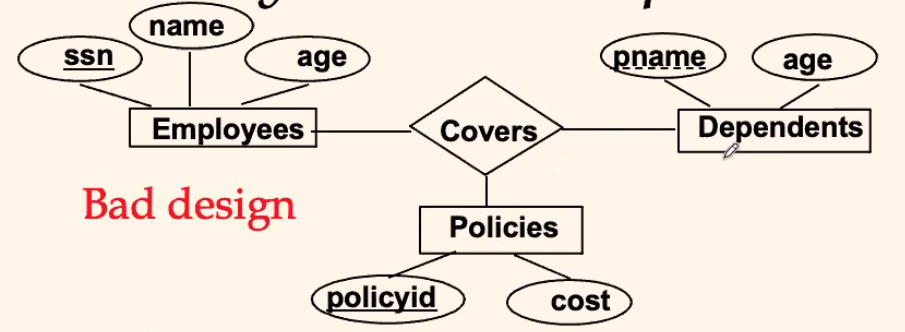

Consider: If each policy is owned by just 1 employee, and each dependent is tied to the covering policy

- This diagram would be inaccurate

- we cannot constraint both:

policycan only be owned by oneemployeeandpolicycan have multipledependents, i.e. the constraint is different for the relationships

- we cannot constraint both:

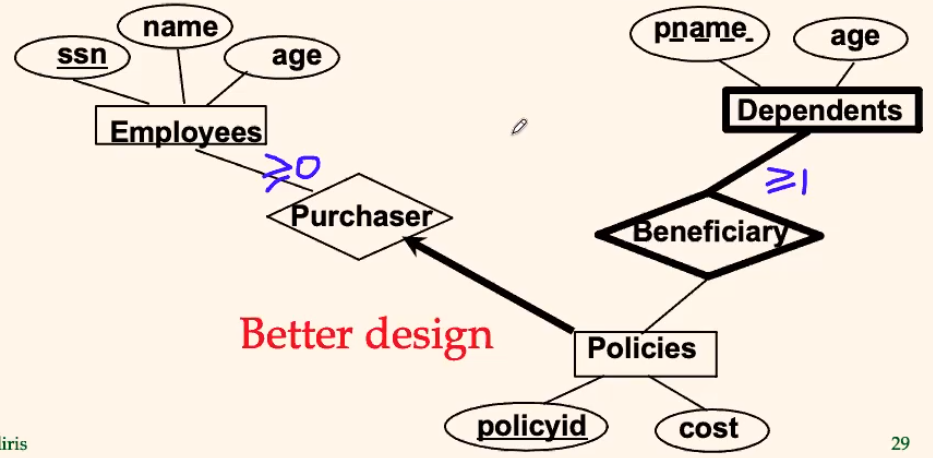

- This diagram would work:

- now, since the constraint is different, we separated them into:

- each

policyis owned by onepurchaser policycan have multipledependents

- each

- now, since the constraint is different, we separated them into:

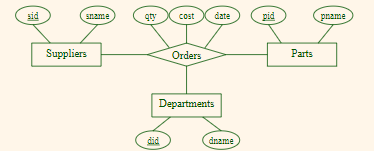

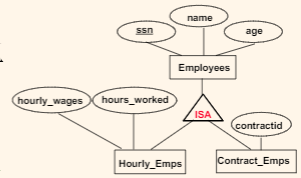

For Example: Ternary Relationship

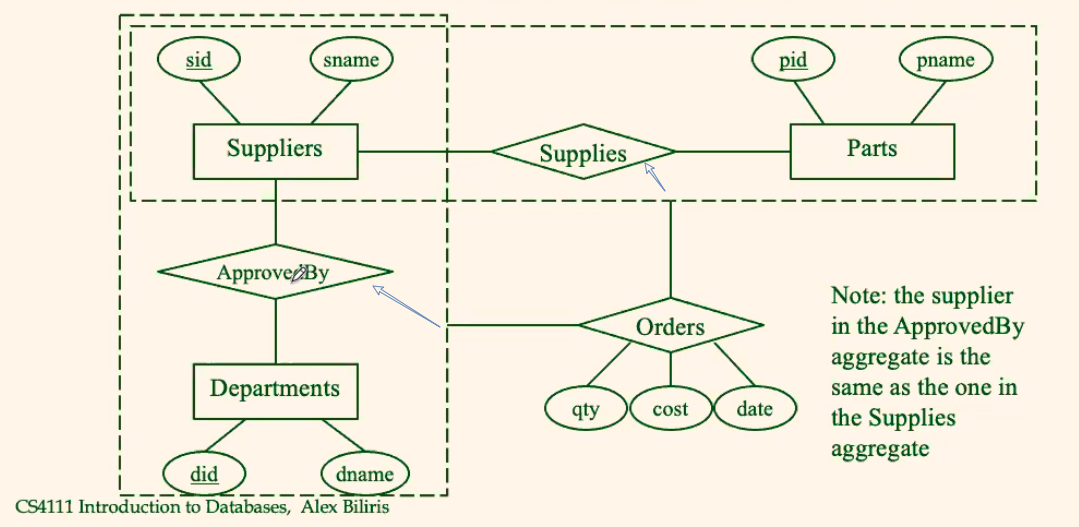

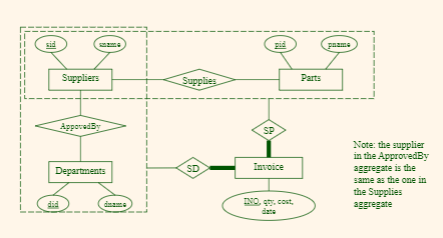

Consider: Orders relates entity sets Suppliers, Departments and Parts

- An order

<S,D,P>indicates: a supplierSsupplies to a departmentDqtyofa partPat a certaincoston a certaindate. - All of them have no constraints

Then this would work:

Summary:

- When the relationships involved have the same constraint, then ternary relationship would work

- Otherwise, you need to separate the relationships

For Example: Additional Constraints

Consider: Orders relates entity sets Suppliers, Departments and Parts, with these additional constraints

- A

departmentmaintains a list of approved suppliers who are the only ones that can supply parts to the department - Each

suppliermaintains a list of parts that can supply

where:

- again, since you should not relate relationships, you have the aggregates

- however, a diagram cannot represent: the

supplierof thedepartmentand thesupplierof thepartsis the same in one order. Therefore, for that you can only write a note in your diagram

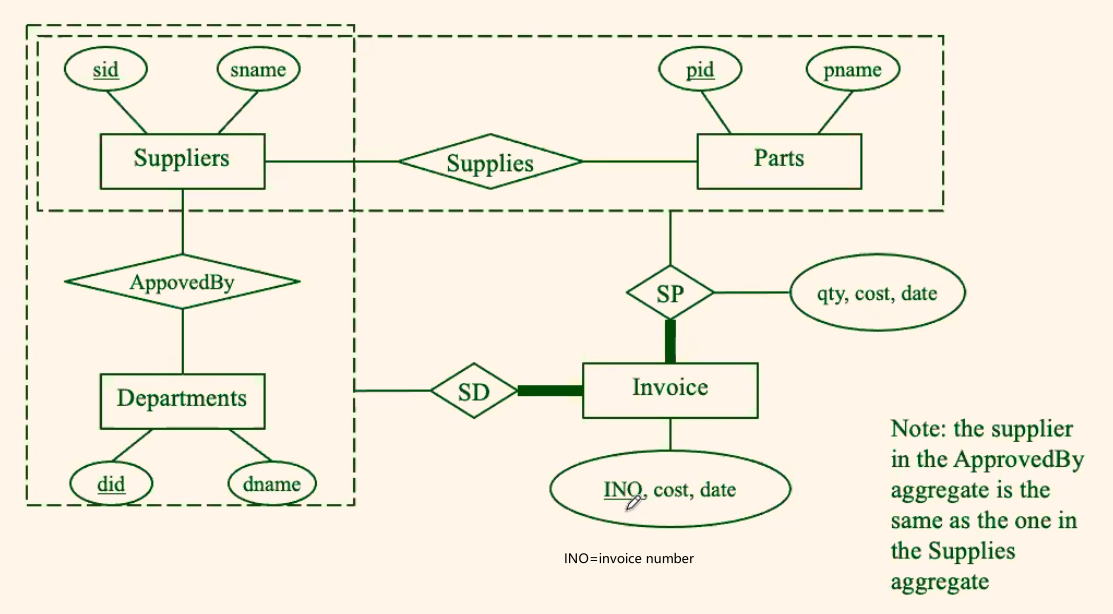

Or alternatively:

where:

- an

INOwould be useful for retrieving - each

Invoicehere can include more than one “orders”, so you can have multipleSD+SPassociated with oneinvoice

Summaries on ER Model

- Conceptual design follows requirements analysis

- Yields a high-level description of data to be stored

- ER model popular for conceptual design

- Constructs are expressive, close to the way people think about their applications

- Basic constructs: entities, relationships, and attributes (of entities and relationships).

- Think about constraints

- Some additional constructs: weak entities, ISA hierarchies, and aggregation.

Note:

- There are many possible variations on ER model.

Relational Model and Database

By far, the relational model/database is the most widely used.

Major vendors:

- Oracle, 44% market share (source: Gartner)

- IBM (DB2 and Informix), 22%

- Microsoft (SQL Server), 18%

- SAP (bought Sybase in 2010), 4.1%

- Teradata, 3.3%

A major strength of the relational model:

- supports simple, powerful querying of data.

- Queries can be written intuitively, and the DBMS is responsible for efficient evaluation

Definitions

Relational database:

- a set of relations (tables)

A relation (table)

- is a set of tuples (rows)

- Schema: name of relation, name and type of each column, constraints.

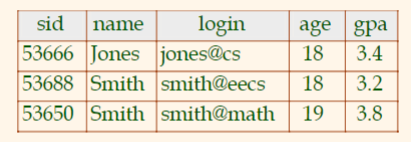

- e.g.

Students(sidstring, namestring, loginstring, ageinteger, gpareal).- #columns= degree / arity

- Instance: the actual table (i.e., its rows) at a particular point in time.

- All rows in a table are distinct.

- columns could be the same

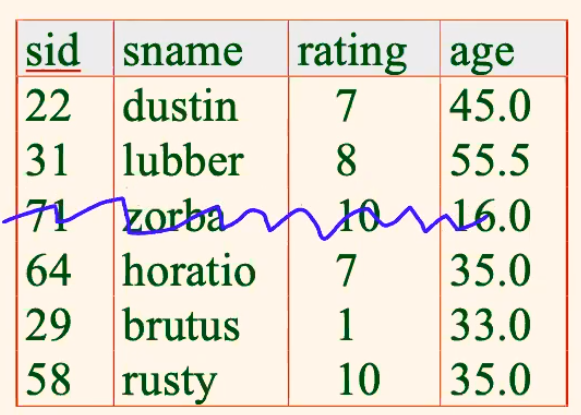

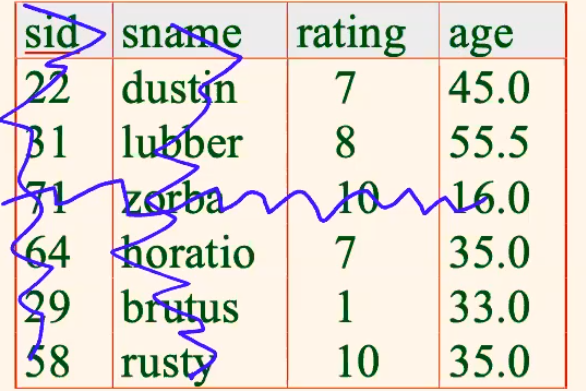

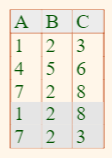

For Example

Basic SQL Query Language

For Example:

To find all 18 year old students, we write:

SELECT * FROM Students WHERE age=18

or

SELECT * FROM Students S WHERE S.age=18

Sis used when you need to avoid ambiguities when querying multiple tables

CRUD Relations/Tables in SQL

To create a table:

CREATE TABLE Students(sid CHAR(20), name CHAR(20), login CHAR(10),age INTEGER,gpa REAL)

To delete a table

DROP TABLE Students

To alter a table design:

ALTER TABLE Students ADD COLUMN firstYear: integer

Note

- The schema of

Studentsis altered if you change the structure- In the above, the structure is changed by adding a new field

- every tuple in the current instance is extended with a

nullvalue in the new field.

To update data into a table:

INSERT INTO Students (sid, name, login, age, gpa) VALUES (53688, ‘Smith’, ‘smith@ee’, 18, 3.2)

or

DELETE FROM Students S WHERE S.name = ‘Smith’

Integrity Constraints

You can specify constraints in your table, such that:

- IC condition that must be true for any instance of the database

- ICs are specified when schema is defined

- ICs are checked when relations are modified

- A legal instance of a relation is one that satisfies all specified ICs

Primary Key Constraint

Key

- A set of fields is a key for a relation if:

- No two distinct tuples can have same values in all key fields, and

- This is any subset of the key becomes non-distinct, i.e. cannot be further decomposed

- e.g.

uniwould be a key

Superkey

- superkey is basically a union/superset of keys

- e.g.

uni+lastnamewould be a superkey

Candidate and Primary Key

- Candidate key: any of the keys

- there can be multiple candidate keys in a table

- Primary key: one of the keys chosen DBA

- there can only be one Primary key in a table

Primary/Unique/Not null

Syntaxes:

PRIMARY KEY (att1, att2, ...)

UNIQUE (att1, att2, ...)

att NOT NULL

For example: Primary Key

CREATE TABLE Enrolled (

sid CHAR(20)

cid CHAR(20),

grade CHAR(2),

PRIMARY KEY (sid,cid)

)

means

- For a given student and course, there is a single grade, or:

- A student can only get one grade in an enrolled course

For example: Unique

CREATE TABLE Enrolled (

sid CHAR(20),

cid CHAR(20),

grade CHAR(2),

PRIMARY KEY (sid),

UNIQUE(cid, grade)

)

means

- A student can only take one course, and

- No two students can have the same grade in a course

For Example: Not Null

CREATE TABLE Students (

age INTEGER NOT NULL

)

Foreign Keys

Foreign keys keep referential integrity

Foreign Key

- Fields in one tuple that is used to refer to another tuple’s

PRIMARY KEYorUNIQUE.

- Like a logical pointer, and it must exists. Otherwise, some optional actions will be taken (see below)

- Referential integrity is achieved if all foreign key constraints are enforced, i.e., no dangling references.

For example

Consider the following referential integrity needed

CREATE TABLE Enrolled(

sid CHAR(20),

cid CHAR(20),

grade CHAR(2),

PRIMARY KEY (sid,cid),

FOREIGN KEY (sid) REFERENCES Students

)

where:

- the last line written in full is

FOREIGN KEY (sid) REFERENCES Students (sid)

Now, to enforce referential integrity, consider:

- What should be done if an

Enrolledtuple with a non-existent student id is inserted? - What should be done if a

Studentstuple is deleted?

SQL/99 supports all 4 options on deletes and updates.

- Default is NO ACTION (delete/update is rejected)

- e.g. you cannot delete

53666ofEnrolledorStudent

- e.g. you cannot delete

- CASCADE (also delete all tuples that refer to deleted tuple)

- e.g. deleting

53666inEnrolledwill also delete53666inStudent

- e.g. deleting

- SET NULL /SET DEFAULT (sets foreign key value of referencing tuple)

CREATE TABLEEnrolled(

sid CHAR(20),

cid CHAR(20),

grade CHAR(2),

PRIMARY KEY (sid,cid),

FOREIGN KEY (sid)

REFERENCES Students

ON DELETE CASCADE

ON UPDATE SET DEFAULT

)

Views

View

- A view is basically a ‘fictitious table’, such that

- all of its data are computed at real time from another table at the time it is accessed

- hence, it only stores definitions

Syntaxes

- Creating a

view

CREATE VIEW YoungGoodStudents (sid, name, gpa) AS(

SELECT S.sid, S.name, S.gpa

FROM Students S

WHERE S.age < 20 and S.gpa >= 3.0

)

- Deleting a

view

DROP VIEW YoungGoodStudents

Since view dependent on actual tables:

- How to handle

DROP TABLEif there’s a view on the table?DROP TABLEcommand has options to let the user specify this.

Week 3

ER to Relational Database

How to we map what we have done in ER Diagram into Database?

Entity Sets to Tables

with:

CREATE TABLE Employees (

ssn CHAR(11),

name CHAR(20),

age INTEGER,

PRIMARY KEY (ssn)

)

Relationship Sets to Tables

Now, relationships are basically tables with mostly foreign keys.

- but now, we need to think about how to map the 1-to-many/many-to-1.,,, relationship

Many-to-Many Relationship:

with:

CREATE TABLE Works_In(

ssn CHAR(11),

did INTEGER,

since DATE,

PRIMARY KEY (ssn, did),

FOREIGN KEY (ssn) REFERENCES Employees,

FOREIGN KEY (did) REFERENCES Departments

)

where:

PRIMARY KEY (ssn, did)essentially implements the many-to-many constraint

Key Constraint/ At Most 1 Participation

So that Departments can participate at most once

with:

-

three-table solution

CREATE TABLE Manages ( did INTEGER, ssn CHAR(11) NOT NULL, // ok if NULL? No. Then the Manages table does not make sense since DATE, PRIMARY KEY (did), FOREIGN KEY (ssn) REFERENCES Employees, FOREIGN KEY (did) REFERENCES Departments )so that if it appears on this table, there is one manager. If a department does not have a manager, it is not in this table (this is the sole purpose of a relationship table)

-

two-table solution

CREATE TABLE Dept_Mngr( did INTEGER, dnameCHAR(20), budget REAL, ssn CHAR(11), // ok if NULL. Represents a Dept without Manager since DATE, PRIMARY KEY (did), FOREIGN KEY (ssn) REFERENCES Employees )since each

Depthas a unique manager, combineManagesandDeptsinto one table.- now, a

Deptcan at most have one manager since it is anattributethat isnullable

- now, a

Total Participation Constraint (=1/One to One)

Now, using the Dept_Mngr table is simply:

-

CREATE TABLE Dept_Mngr( did INTEGER, dname CHAR(20), budget REAL, ssn CHAR(11) NOT NULL, since DATE, PRIMARY KEY (did), FOREIGN KEY (ssn) REFERENCES Employees, ON DELETE NO ACTION )now, we have

ssnbeingNOT NULL, so that each department has exactly one manager- if it is one-to-one, then it is basically an attribute

-

This means a relationship table would not capture the constraint anymore:

CREATE TABLE Manages ( ssn CHAR(11) NOT NULL, did INTEGER, since DATE, PRIMARY KEY (did), FOREIGN KEY (ssn) REFERENCES Employees, FOREIGN KEY (did) REFERENCES Departments ) // Does not workthere is no constraint that forces ALL entries of

Deptto appear here

Total Participation Constraint ($\ge$1)

Without Check constraint, this is not possible.

-

Consider the following:

CREATE TABLE Dept_Mngr( did INTEGER, dname CHAR(20), budget REAL, ssn CHAR(11), // ssn is a PK, so no need to NOT NULL since DATE, PRIMARY KEY (did, ssn), FOREIGN KEY (ssn) REFERENCES Employees, ON DELETE NO ACTION )this would work physically, but there would be redundancy, which would be a source of error for CRUD

dname CHAR(20)andbudget REALwould be repeated

-

Similarily:

//3 tables (Emp, Dept, Manages) CREATE TABLE Manages ( ssn CHAR(11), did INTEGER, since DATE, PRIMARY KEY (did, ssn), FOREIGN KEY (ssn) REFERENCES Employees, FOREIGN KEY (did) REFERENCES Departments )which would not work since a relationship table cannot force total participation

Weak Entity to Tables

Reminder

- A weak entity should only make sense by considering the PK of the owner

- depends entirely on the existence of an owner

Consider:

with:

// Dependents and Policy are translated into ONE TABLE

CREATE TABLE Dep_Policy (

pname CHAR(20),

age INTEGER,

cost REAL,

ssn CHAR(11),

PRIMARY KEY (pname, ssn),

FOREIGN KEY (ssn) REFERENCES Employees

ON DELETE CASCADE

)

where:

- Weak entity set and identifying relationship set are translated into a single table.

- so that entries in

Dependentswill also be deleted when the owner is gone

- so that entries in

- When the owner entity is deleted, all owned weak entities must also be deleted.

Alternatively:

if you want to keep the three table structure:

CREATE TABLE Policies (

policyid INTEGER,

cost REAL,

ssn CHAR(11) NOT NULL,

PRIMARY KEY (policyid),

FOREIGN KEY (ssn) REFERENCES Employees,

ON DELETE CASCADE

)

CREATE TABLE Dependents(

pname CHAR(20),

age INTEGER,

policyid INTEGER,

PRIMARY KEY (pname, policyid),

FOREIGN KEY (policyid) REFERENCES Policies,

ON DELETE CASCADE

)

so that:

- When the owner entity is deleted, all owned weak entities together with policy are also be deleted.

Compact SQL Notation

This is only for writing to quickly clarify the design. This will not compile.

Examples include:

Note:

- Never omit the always crucial

PK,UNIQUE,NOT NULLandFKconstraints

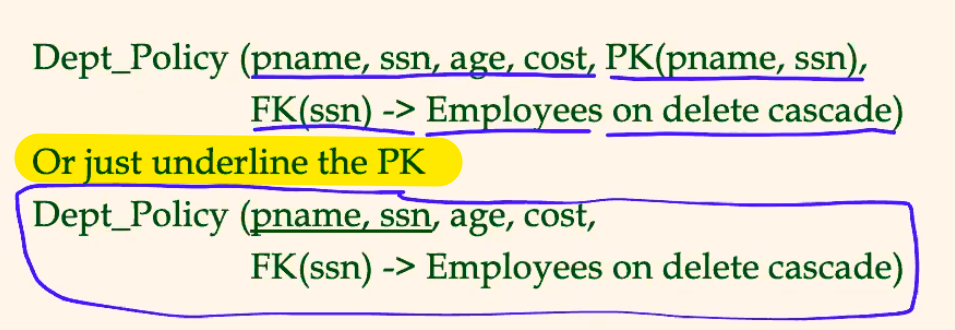

ISA Hierarchies

Reminder

- This is basically the OOP concept of inheritance

- Additionally, some constraints here include:

- Overlap constraints: can an entry go into both children?

- Covering constraints: does an entry in parent forces an entry in at least one of the children?

In general, this is not supported naturally by relational database, but there are some approaches:

-

Emps(ssn, name, age, PK(ssn))Hourly_Emps(h_wages, h_worked, ssn, PK(ssn), FK(ssn) -> Empson delete cascade)Contract_Emps(c_id, ssn, PK(ssn), FK(ssn) -> Emps on delete cascade)so that you basically manually creates the ISA relationship

-

Hourly_Emps(ssn, name, age, h_wages, h_worked)Contract_Emps(ssn, name, age, c_id)this makes queries easier, but there may be redundancy

However, the above design all depends on:

- Overlap constraint: being both

Hourly_EmpsandContract_Emps- redundancy for the second design

- if not, no redundancy

ER Design Examples

Orders example #1

Consider the following ER:

and we need that:

- the supplier in the

ApprovedByaggregate is the same as the one in theSuppliesaggregate

then the SQL tables would be:

-

Entities:

Suppliers (sid, sname)Parts (pid, pname)Departments (did, dname) -

Relationships:

ApprovedBy(sid, did, FK(sid) -> Suppliers, FK(did) -> Departments)Supplies (sid, pid, FK(sid) -> Suppliers, FK(pid) -> Parts) -

Aggregate

``Orders (sid, did, pid, qty, cost, date,`

PK(sid, did, pid, qty, cost, date)FK(sid, did) -> ApprovedBy,FK(sid, pid) -> Supplies)where:

- there is only one

sid Ordersrelates to bothApprovedByaggregate andSuppliesaggregate by the twoFK

- there is only one

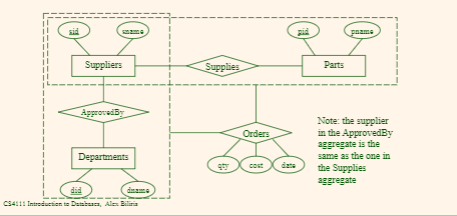

Orders example #2

Consider this example:

where:

-

Entities: same as above

-

Relationship: same as above

-

Aggregate:

Invoice(INO,sid NOT NULL, did NOT NULL, pid NOT NULL, qty, cost, date,PK(INO),FK(sid, did) -> ApprovedBy,FK(sid, pid) -> Supplies)where:

- the idea is basically the same as before, the only advantage is that it only requires

PK(INO)to get an entry, as compared to the previousPK(sid, did, pid, qty, cost, date)

- the idea is basically the same as before, the only advantage is that it only requires

Relational Query Languages

Query Languages

- Query languages: Allow manipulation and retrieval of data from a database.

Query

- Queries are applied to tables, and they output tables

- i.e. the schema of the input and the output of a query is always the same, but the data inside might be different depending on data changes in the actual schema

Relational Algebra

In short, all tables are essentially sets, since each entry/row is unique.

Therefore, we have the following operations in SQL:

Basic operations:

- Selection ($\sigma$): Selects a subset of rows from relation.

- Projection ($\pi$): Deletes unwanted columns from relation.

- Cross-product ($\times$): Allows us to combine two relations.

- Set-difference ($\setminus$ or $-$): Tuples in reln. 1, but not in reln. 2.

- Union ($\cup$): Tuples in reln. 1 and in reln. 2.

Additional operations:

- Intersection $\cap$, join, division, renaming: Not essential, but (very!) useful.

Since each operation returns a relation, operations can be composed! (Algebra is “closed”.)

Operators Definitions

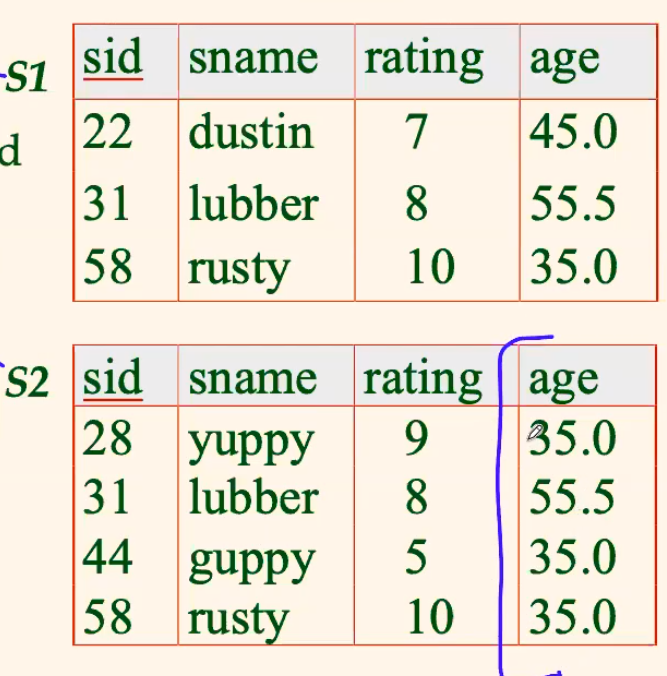

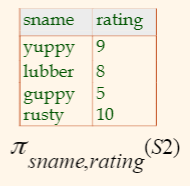

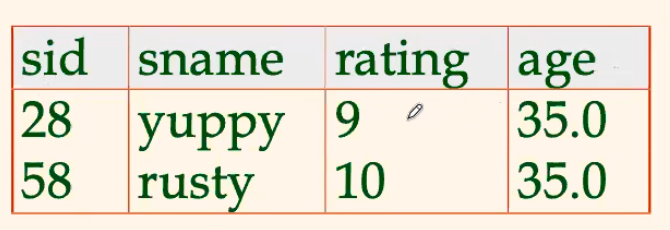

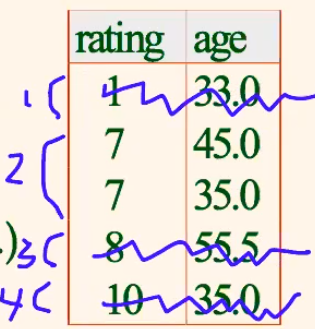

Projection

-

\[\pi_{sname, rating} (S2)\]

gives:

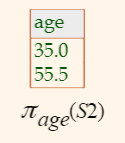

-

\[\pi_{age}(S2)\]

gives:

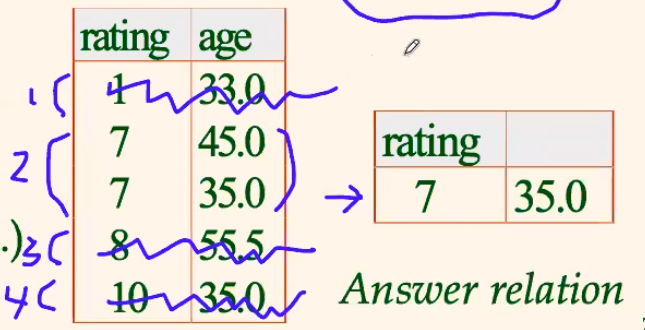

Selection

-

\[\sigma_{rating>8}(S2)\]

gives:

-

\[\pi_{sname, rating}(\sigma_{rating>8}(S2))\]

gives:

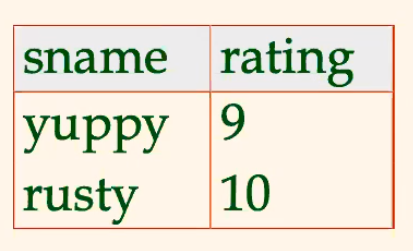

Set Operations are trivial

but note:

- the only thing you need to make sure is that all entries are unique

- tables need to be union-compatible

- contains the same number of attributes

- attributes are of the compatible type

- so that each row can be compared

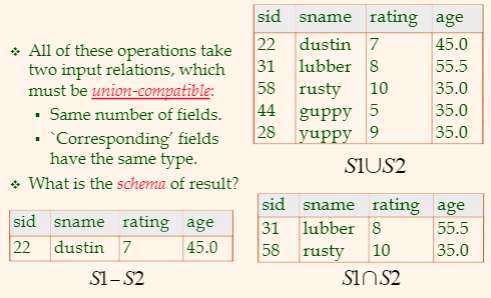

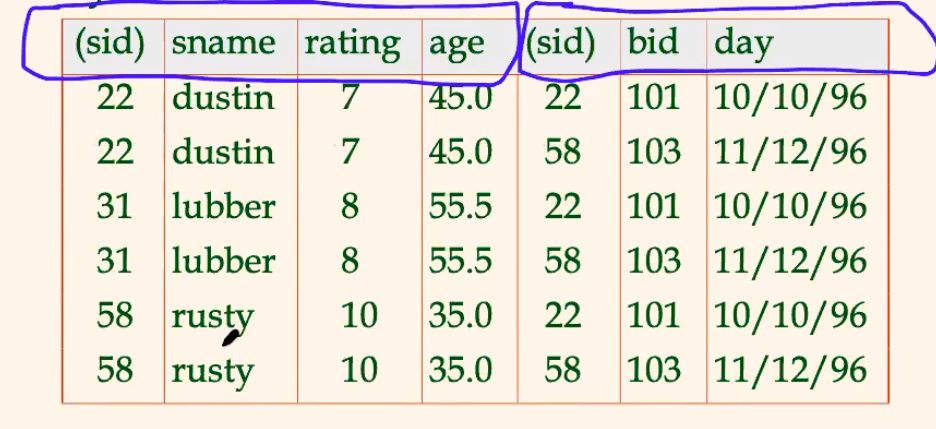

Cartesian Product

Consider:

-

\[S1 \times R1\]

gives:

where:

-

Conflict: Both

S1andR1have a field calledsid. (but the cartesian product does not care)-

usually here you would rename: $$

\rho(C(1\to sid1, 5\to sid2),S1\times R1)

$$

-

-

the cartesian product itself is usually useless. You will need to do some additionally operations.

-

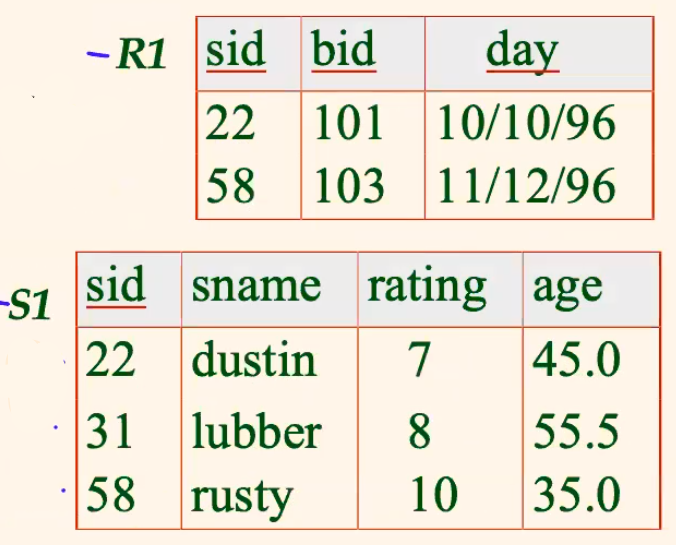

Joins

-

Condition join is a combination of cartesian product and selection $$

\sigma_{condition}(R \times S) \equiv R \bowtie_{condition} S

$$

-

for example: $$

S1 \bowtie_{S1.sid < R1.sid}R1

$$ gives:

Note

- This might be able to compute more efficiently

-

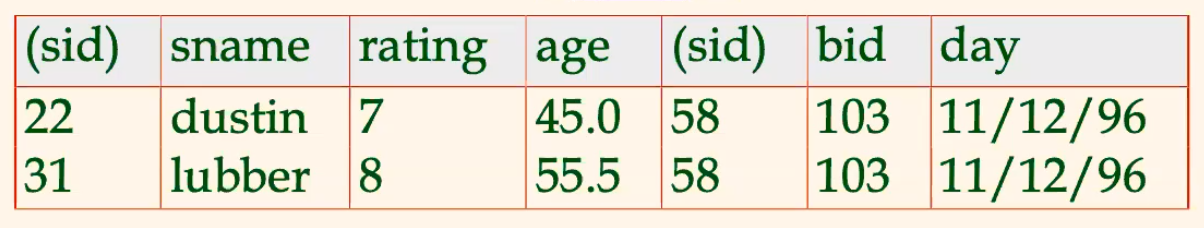

Equi-Join is a selection based on some equality

-

for example: $$

S1 \bowtie_{sid}R1

$$ gives:

-

Natural Join Equijoin on all common fields (fields with the same name and the same type)

-

for example: $$

S1 \bowtie R1

$$ gives the same:

since

sidis the only common field for tableS1and tableR1

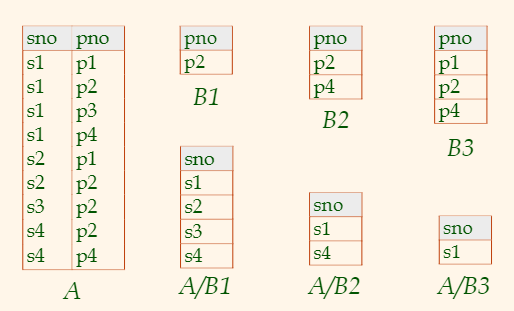

Division

-

Let

Ahave 2 fields,xandy;Bhave only fieldy: $$A/B = { \lang x\rang\, \, \exist\lang x,y\rang \in A,\,\forall \lang y\rang \in B} $$

-

i.e.,

A/Bcontains allxtuples (sailors) such that for everyytuple (boat) in B, there is anxytuple in A. -

i.e. returns

xinAif and only if it has/tuples with allyinB -

Not supported as a primitive operator. To express this as standard operation we had before:

Idea: For A/B,

xvalue is disqualified if by attachingyvalue from B, we obtain anxytuple that is not in A. $$\pi_x(A) - \pi_x(\,(\pi_x(A)\times B)-A \,)

$$ where:

- $\pi_x(\,(\pi_x(A)\times B)-A \,)$ signifies the disqualified

xvalues (xytuple that is not in A.)

- $\pi_x(\,(\pi_x(A)\times B)-A \,)$ signifies the disqualified

-

for example:

Example

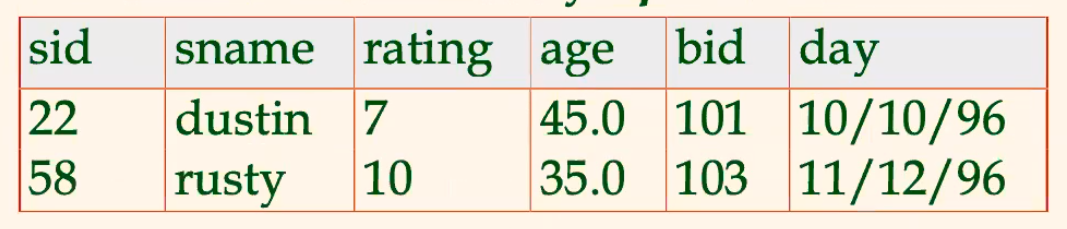

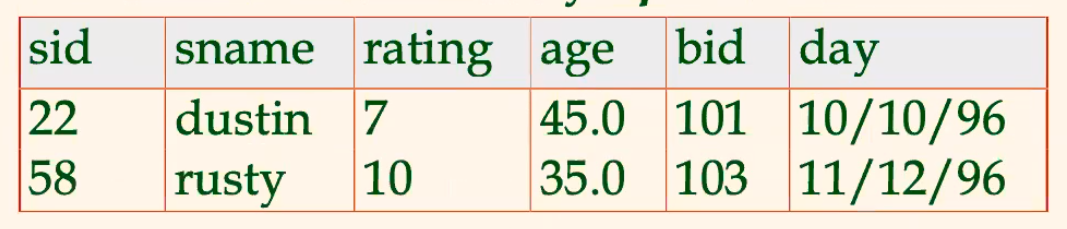

For Example: Find names of sailors who’ve reserved boat #103

- so we have a

Sailorsand aReservestable

Solution:

-

The straight-forward approach $$

\pi_{sname}((\sigma_{bid=103}Reserves)\bowtie Sailors)

$$

-

Splitting the above into individual steps: $$

\begin{align} &\rho(Temp1,\,\sigma_{bid=103}Reserves))

&\rho(Temp2,\,Temp1 \bowtie Sailors)

&\pi_{sname}(Temp2) \end{align}$$

-

The “formula” approach (since many times, you simply joins first): $$

\pi_{sanme}(\sigma_{bid=103}(Reserves \bowtie Sailors))

$$

Choosing Which Query to Use

- The expression itself must be correct

- in the above case, all of them

- The expression must be easy to read/understand for yourself

- so that it there is an error, you can see it immediately

- Do not care about performance yet

- DBMS has internal query optimization made for you

For Example: Find names of sailors who’ve reserved a red boat.

- So we have three tables,

Sailors,Reserves, andBoat

Solution:

-

The straight-forward approach: $$

\pi_{sname}( (\sigma_{color=red}Boats)\bowtie Reserves \bowtie Sailors )

$$

-

The “formula” approach $$

\pi_{sname}(\sigma_{color=red}(Sailors \bowtie_{sid}Reserves \bowtie_{bid} Boats))

$$

-

etc.

For Example: Find names of sailors who’ve reserved a red or a green boat.

Solution:

-

The straight-forward approach: $$

\pi_{sname}( (\sigma_{color=red\,\lor\,color=green}Boats)\bowtie Reserves \bowtie Sailors )

$$

-

The “formula” approach $$

\pi_{sname}(\sigma_{color=red\,\lor\,color=green}(Sailors \bowtie_{sid}Reserves \bowtie_{bid} Boats))

$$

-

Computing the red and green boat separately, and then union the names

For Example: Find names of sailors who’ve reserved a red and a green boat.

Solution:

-

if this question is changed to and instead of or, then:

- changing $\lor$ to $\land$ for solution 1 and 2 would not work, because

coloris a singular value

- changing $\lor$ to $\land$ for solution 1 and 2 would not work, because

-

changing the union to intersect would not work either, because:

- if there is

sname=Mike, uid=1who borrowedredboat, and asname=Mike, uid=2who borrowedgreenboat, then the intersection will also giveMikeas the result, which is wrong - therefore, you should use primary keys before the last step

where:

- $\pi_{sname,sid} (\sigma_{red} (S \bowtie R \bowtie B))$ selects correctly unique sailors who have reserved red boat

- $\pi_{sname,sid} (\sigma_{green} (S \bowtie R \bowtie B))$ selects correctly unique sailors who have reserved green boat

- if there is

For Example: Find the names of sailors who’ve reserved all boats

Solution:

-

Using division: $$

\begin{align} &\rho(Tempsids, (\pi_{sid, bid} Reserves)/(\pi_{bid} Boats)) \end{align}

\[which gets all `sid` who has *reserved all boats*, since: - $(\pi_{sid, bid} Reserves)/(\pi_{bid} Boats)$ returns a tuple $\lang sid, bid\rang$ $\iff$ a $\lang sid \rang$ in $Reserves$ contains *columns* $\lang sid, bid\rang$ for all $\lang bid \rang$ in $Boats$ lastly, we do:\]\pi_{sanme}(Tempsids \bowtie Sailors)

$$ to fetch the names.

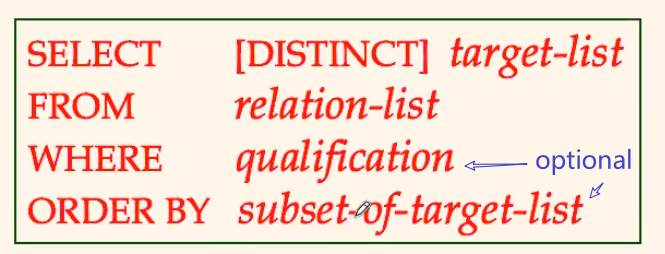

SQL Queries

Basic SQL Structures

Basic Select Queries

It has the following structure

where:

- by default, SQL will not remove duplicates from the query result. Therefore, sometimes you need to specify

[DISTINCT]flag.

Basic Select Query Mechanism

Fromrelation-list could be a list of relation/table names- in that case, it computes the cartesian product of the given tables

WHEREqualification are comparisons using operators such as $>,<.=,etc$, connected withAND/OR/NOT- in this case, it performs selection

SELECTtarget-list is a list of attributes of relations you wanted to have from the relation-list- performs the projection

[DISTINCT]removes duplicate rowsORDER BYjust alters the sequence of the result presented

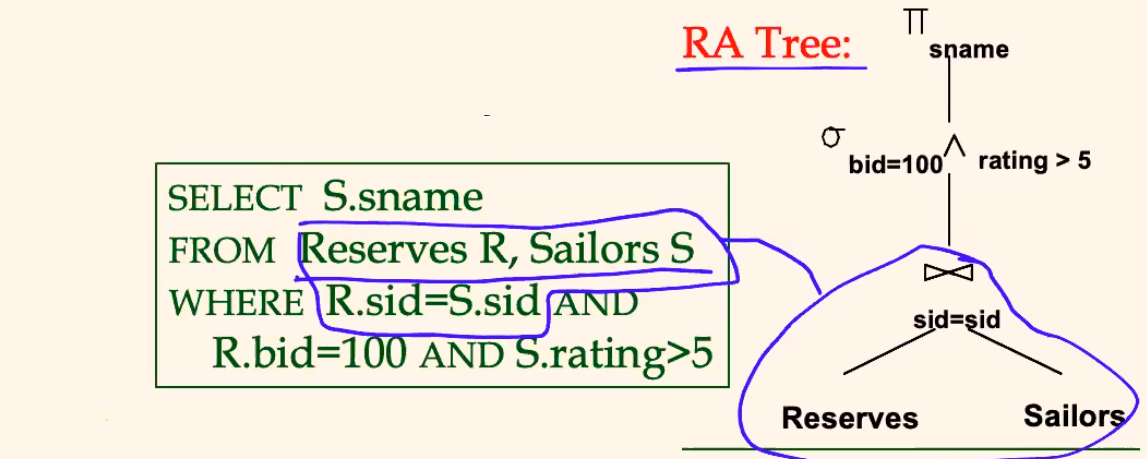

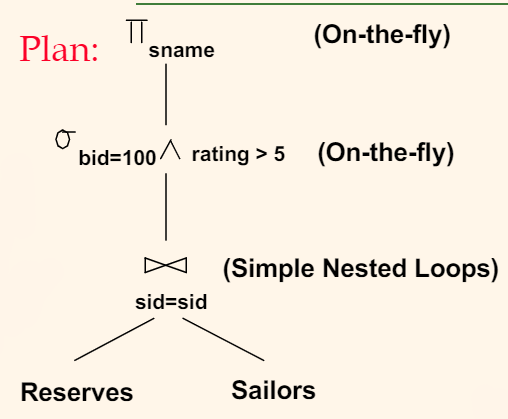

For Example:

Find the name of sailor who has rent the boat 103.

SELECT S.sname

FROM Sailors S, Reserves R

WHERE S.sid=R.sid AND R.bid=103

For Example:

Consider the following query:

SELECT S.sid

FROM Sailors S, Reserves R

WHERE S.sid=R.sid

will the result contain duplicate sid?

- Answer: yes, because a sailor could have reserved multiple boats, and by default

SELECTstatements do not remove duplicate results

Join Syntax

Inner Join

The below are all equivalent

SELECT S.sname

FROM Sailors S, Reserves R

WHERE S.sid=R.sid AND R.bid=103

SELECT S.sname

FROM Sailors S JOIN Reserves R ON S.sid=R.sid

WHERE R.bid=103

SELECT S.sname

FROM Sailors S INNER JOIN Reserves R ON S.sid=R.sid

WHERE R.bid=103

Expression and Strings

For Example:

SELECT age1=S.age-5,

2*S.age AS age2,

S.sid || ‘@columbia.edu’ AS email

FROM Sailors S

WHERE S.sname LIKE ‘B_%B’

where:

age1=S.age-5computesS.age-5and renames the column toage12*S.age AS age2computes2*S.ageand renames the column toage2-

S.sid||‘@columbia.edu’ AS emailconcatenatesS.sidwith@columbia.eduand renames the column toemail LIKEis used for string matching._stands for any one character and%stands for 0 or more arbitrary characters.

CASTis used for type castingCAST (S.rating as float)

Set Operations

Basic Syntax of Set Operations

query1 UNION [ALL] query2 query1 INTERSECT [ALL] query2 query1 EXCEPT [ALL] query2where:

- by default, duplicates are eliminated unless

ALLis specified!

- in this the only operation in SQL that automatically eliminates duplicates

- of course, set operations work only when they are union compatible (same columns and types)

For Example

Find sids of sailors who’ve reserved a red or a green boat.

SELECT R.sid

FROM Boats B, Reserves R

WHERE R.bid=B.bid AND B.color=‘red’

UNION

SELECT R.sid

FROM Boats B, Reserves R

WHERE R.bid=B.bid AND B.color=‘green’

alternatively, only using WHERE:

SELECT R.sid

FROM Boats B, Reserves R

WHERE R.bid=B.bid AND (B.color=‘red’ OR B.color=‘green’)

For Example

Find sids of sailors who’ve reserved a red boat but not a green boat.

SELECT R.sid

FROM Boats B, Reserves R

WHERE R.bid=B.bid AND B.color=‘red’

EXCEPT

SELECT R.sid

FROM Boats B, Reserves R

WHERE R.bid=B.bid AND B.color=‘green’

alternatively, only using WHERE

SELECT R1.sid

FROM Boats B1, Reserves R1,Boats B2, Reserves R2

WHERE R1.sid=R2.sid AND R1.bid=B1.bid AND R2.bid=B2.bid

AND (B1.color=‘red’ AND NOT B2.color=‘green’)

note that we needed two pairs to tables, Boats B1, Reserves R1,Boats B2, Reserves R2, since for a table there is only one value for color

- i.e. each record can only capture one

color. Therefore, we need two tables and combine the result usingsidandbid. (R1.sid=R2.sid AND R1.bid=B1.bid AND R2.bid=B2.bid)

Nested Queries

Nested Queries can happen in basically any where, including:

SELECTFROMWHERE- etc.

Note

In general, since we have a nested query, the scope of variables look like.

SOME QUERY with Var(S, T)For example:

SELECT S.sname /* only S is visiable */ FROM Sailors S WHERE S.sid IN (SELECT R.sid /* S and R is visible */ FROM Reserves R WHERE R.bid IN (SELECT B.bid /* S, R, B are all visible */ FROM Boats B WHERE B.color=red)where:

again, we are doing:

WHERE S.sid IN (SOME OTHER QUERY RESULT)and that the return type of

SOME OTHER QUERY RESULThas to match the type/format ofS.sid

Nested Queries and IN

IN

- essentially,

xxx IN (some query)means only keeping values ofxxxif it is the set ofsome query

- and since this is basically a set operation, the types of

xxxandsome queryhas to be union compatible- the negation will be

NOT IN

Consider the problem:

- Find names of sailors who’ve reserved boat

#103:

SELECT S.sname

FROM Sailors S

WHERE S.sid IN (

SELECT s.sid

FROM Reserves R

WHERE R.bid = 103

)

Rewriting INTERSECT with IN

Consider:

- Find id/name of sailors who’ve reserved both a red and a green boat:

SELECT S.sid, S.sname

FROM Sailors S

WHERE S.sid IN (SELECT R2.sid

FROM Boats B2, Reserves R2

WHERE R2.bid=B2.bid AND B2.color=‘red’)

AND

S.sid IN (SELECT R2.sid

FROM Boats B2, Reserves R2

WHERE R2.bid=B2.bid AND B2.color=‘green’)

where:

-

basically you are doing

S.sidis in both the sets (results of the inner queries) -

Similarly,

EXCEPT/``MINUSqueries re-written usingNOT IN`.

Nested Queries and FROM

Consider the same problem

- Find names of sailors who’ve reserved boat ``#103`:

SELECT S.sname

FROM Sailors S, (SELECT sid FROM Reserves WHERE bid=103) T

WHERE S.sid=T.sid

notice that:

-

the line:

FROM Sailors S, (SELECT sid FROM Reserves WHERE bid=103) T WHERE S.sid=T.siddid a natural join on

sidfrom the two tablesS,T

Note

if and only if the inner query

SELECT sid FROM Reserves WHERE bid=103results in only one row/result, then it would be also correct to say:SELECT S.sname FROM Sailors S, (SELECT sid FROM Reserves WHERE bid=103) T WHERE S.sid=T

Multi-level Nested Queries

Consider:

SELECT S.sname

FROM Sailors S

WHERE S.sid IN (SELECT R.sid

FROM Reserves R

WHERE R.bid IN (SELECT B.bid

FROM Boats B

WHERE B.color=red)

and it basically does “Find names of sailors who have reserved a red boat”.

Nested Queries with Correlation

Exists and Unique

EXISTSchecks for empty set

- returns true if table is not empty

UNIQUEchecks for duplicate tuples

- returns true if there are no duplicate tuples or the table is empty

Consider the problem

- Find names of sailors who’ve reserved boat

#103:

SELECT S.sname

FROM Sailors S

WHERE EXISTS (SELECT *

FROM Reserves R

WHERE R.bid=103 AND S.sid=R.sid)

where:

-

this is nested query with correlation, because the inner query

WHERE R.bid=103 AND S.sid=R.sidcorrelates/usesS.sidwhich is of the outer query -

therefore, since it used

S.sid=R.sid, this is what happened:SELECT S.sname FROM Sailors S WHERE "If each S.sid EXISTS IN (SELECT * FROM Reserves R WHERE R.bid=103 AND S.sid=R.sid)"

Similarly:

SELECT S.sname

FROM Sailors S

WHERE UNIQUE (SELECT R.bid

FROM Reserves R

WHERE R.bid=103 AND S.sid=R.sid)

Finds sailors with at most one reservation for boat ``#103. Since UNIQUE returns TRUE` if and only if the row is unique or empty.

-

and the correlation

S.sid=R.sidcan be translated to:SELECT S.sname FROM Sailors S WHERE "If each S.sid has UNIQUE data of (SELECT R.bid FROM Reserves R WHERE R.bid=103 AND S.sid=R.sid)"

Nested Queries with Set Comparison

ANY,ALLOperations

<op> ANYreturns true to the row if any of the comparison is true<op> ALLreturns true to the row if all of the comparison is true

For example:

- Find sailors whose rating is greater than that of some sailor called “Mike”

SELECT *

FROM Sailors S

WHERE S.rating > ANY (SELECT S2.rating

FROM Sailors S2

WHERE S2.sname=‘Mike’)

where:

S.rating > ANY (SELECT S2.rating ...)returnstrueto a row ofSailors SifS.ratingis larger than anyS2.ratingentry in the subquery’s table.

Division in SQL

In short, division is not supported naturally. Therefore, you need to do complicated queries.

For Example

- Find sailors who’ve reserved all boats.

SELECT S.sname

FROM Sailors S

WHERE NOT EXISTS

((SELECT B.bid

FROM Boats B)

EXCEPT

(SELECT R.bid

FROM Reserves R

WHERE R.sid=S.sid))

which basically does:

-

SELECT S.sname FROM Sailors S WHERE NOT EXISTS ( ALL_BOAT_IDs EXCEPT (Minus) ALL_BOAT_IDs_RESERVED_BY_A_SAILOR) -

then

NOT EXISTSdoes:SELECT S.sname FROM Sailors S WHERE TRUE IF_BELOW_IS_EMPTY ( ALL_BOAT_IDs EXCEPT (Minus) ALL_BOAT_IDs_RESERVED_BY_A_SAILOR)

Note

- usually, when a query involves the ALL condition, the natural thinking shout be using

DIVISION- However, since

DIVISIONis not supported naturally, when need to often compute the opposite, and minus it.

Aggregate Operators

Supported Aggregates

COUNT(*), COUNT( [DISTINCT] A)

- counts the number of rows/distinct rows of a all columns/column A

SUM( [DISTINCT] A)AVG( [DISTINCT] A)MAX(A), MIN(A)

Reminder:

- the

SELECTpart of a query is executed the last.

For Example

-

Number of sailors

SELECT COUNT (*) FROM Sailors -

Average age of all sailors whose rating is 10:

SELECT AVG (age) FROM Sailors WHERE rating=10 -

Name of sailors with maximum/highest rating

SELECT S.sname FROM Sailors S WHERE S.rating = (SELECT MAX(S2.rating) FROM Sailors S2)

Advanced SQL Constraints

Some basic SQL constraints have been covered in Integrity Constraints.

CHECK Constraints

Check Constraint

- Attribute-based

CHECKconstraints involve a single attribute

- it is basically a boolean expression, and it must evaluate to true for all rows of an attribute that has this constraint.

- If the constraint does not mention any attribute, it applies to the entire table

- e.g. if you use things like

CHECK((SELECT COUNT(*) FROM Sailors) < 100)- If any table in the

CHECKconstraint is empty (i.e. just created with no data yet), thenCHECKconstraints automatically evaluate to true

- e.g.

CHECK((SELECT COUNT(*) FROM Sailors) > 100)is true for empty table

For Example

CREATE TABLE Sailors (

sid INTEGER,

rating INTEGER,

age REAL CHECK(age >= 17), /* all rows must have age >= 17 */

PRIMARY KEY (sid),

CONSTRAINT valid_ratings

CHECK(rating >= 1 AND rating <= 10)

)

where:

-

CHECKconstraints can optionally be named. For example:CONSTRAINT valid_ratings CHECK(rating >= 1 AND rating <= 10) -

the position of

CHECKconstraints does not matter. It can be at the end as well.

For Example:

You can also check tuples:

CREATE TABLE Sailors (

sid INTEGER,

rating INTEGER CHECK (rating >= 1 AND rating <= 10),

age REAL CHECK(age >= 17), /* all rows must have age >= 17 */

PRIMARY KEY (sid),

CHECK( NOT (rating > 5 AND age < 20) ),

CHECK( NOT (rating > 7 AND age < 25) )

)

CHECK with SQL Queries

For Example

CREATE TABLE Sailors (

sid INTEGER,

rating INTEGER CHECK (rating >= 1 AND rating <= 10),

age REAL CHECK(age >= 17), /* all rows must have age >= 17 */

PRIMARY KEY (sid),

CHECK( NOT (rating > 5 AND age < 20) ),

CHECK( NOT (rating > 7 AND age < 25) ),

CHECK((SELECT COUNT(*) FROM Sailors) < 100)

)

which constraints the number of sailors to 100.

Note:

By the definition of

CHECK, the below will be true for empty tableCREATE TABLE Sailors ( sid INTEGER, rating INTEGER CHECK (rating >= 1 AND rating <= 10), age REAL CHECK(age >= 17), /* all rows must have age >= 17 */ PRIMARY KEY (sid), CHECK( NOT (rating > 5 AND age < 20) ), CHECK( NOT (rating > 7 AND age < 25) ), CHECK((SELECT COUNT(*) FROM Sailors) > 100) /* true for empty table */ )

For Example

CREATE TABLE Reserves (

sid INTEGER,

bid INTEGER,

day DATE,

PRIMARY KEY (sid, bid,day),

FOREIGN KEY (sid) REFERENCES Sailors,

FOREIGN KEY (bid) REFERENCES Boats,

CONSTRAINT noInterlakeRes /* this checks for each row of Reserves table */

CHECK( 'Interlake' <> (SELECT B.bnameFROM Boats B WHERE B.bid=bid) )

)

which basically means you cannot reserve the boat ‘Interlake’

- note that

(SELECT B.bnameFROM Boats B WHERE B.bid=bid)returns only a single row for eachbid, so you can directly compare with a string ‘Interlake’.

CHECK with Assertion

In short, when you have a CHECK constraining multiple tables, the problem comes:

- in which table should you put the constraint?

- If any table in the

CHECKconstraint is empty, thenCHECKconstraints automatically evaluate to true. This will cause buggy behavior

Assertion

- Often used for creating

CHECKconstraints for multiple tables- Does not automatically evaluate to

trueif any table is empty

- hence fixes the bug

For Example: The Wrong Approach

I want to create a small club, which that number of sailors + boat $<$ 100

-

the Wrong Approach:

CREATE TABLE Sailors ( sid INTEGER, rating INTEGER, age REAL, PRIMARY KEY (sid), CHECK ( (SELECT COUNT (*) FROM Sailors) + (SELECT COUNT (*) FROM Boats) < 100) ) )which will be buggy if one of the table

Sailors/Boatsis empty, this will be always true -

the Correct Approach:

CREATE ASSERTION smallClub CHECK ( (SELECT COUNT (*) FROM Sailors) + (SELECT COUNT (*) FROM Boats) < 100 )

Using Assertion for Total Participation

For Example

where we need:

- each sailor reserves at least one boat (total participation)

CREATE ASSERTION BusySailors (

CHECK (

NOT EXISTS (SELECT sid

FROM Sailors

WHERE sid NOT IN (SELECT sid

FROM Reserves ))

)

)

which basically means:

-

CREATE ASSERTION BusySailors ( CHECK ( NOT EXISTS (SAILOR_SID_WHO_HAS_NOT_NOT_RESERVED_A_BOAT) ) ) -

then the

NOT EXISTSmeans:CREATE ASSERTION BusySailors ( CHECK ( IS_EMPTY (SAILOR_SID_WHO_HAS_NOT_NOT_RESERVED_A_BOAT) ) )

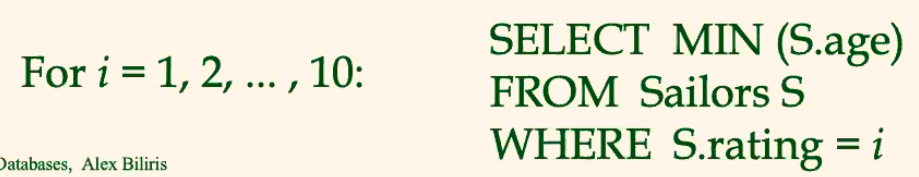

Advanced SQL Queries

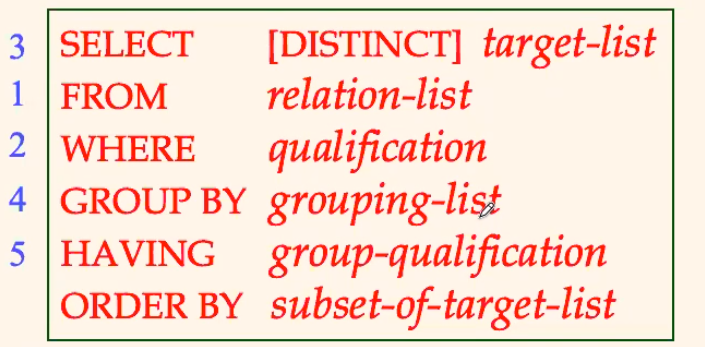

Group By

So far, we’ve applied aggregate operators to all (qualifying) tuples. Sometimes, we want to apply the aggregate operators to each of several groups of tuples.

- e.g.

AVERAGEGPA for students based on different ages (group)

For Example

- Task: Find the age of the youngest sailor for each rating level.

You would like to do this (but you cannot):

where:

- it does not work for all times because:

- often, we do not know what range of $i$ would this take

- often, the values of $i$ might not be integers/equally spaced

Therefore, we need to solve it by using GROUP BY

Queries with Group By

Now, it looks like this:

and it evaluates from:

FROM(trivial)WHERE(trivial)SELECT, basically a projection, but it must make sense for groups. Target-list/Result list here must contains/returns either:

- attribute names mentioned in

grouping-list, or- terms with aggregate operations (e.g.,

MIN (S.age)).

- aggregates are actually evaluated in the end

GROUP BYsort the result from the previous 3 steps into groups

- if you have multiple qualifications, then it sorts qualification iteratively (e.g. first sort by

qualif_1, then inside those groups, sort byqualif_2, … etc)HAVINGuses group qualification, which is basically qualification expression evaluated inside EACH GROUP

- such as the average age of sailors in each group must be > 25

- therefore, this also must use attributes in the

GROUP BYor aggregates- (optionally)

ORDER BYorders the output table from the above

For Example

Task: Find the average rating for each sailor age

Solution:

-

when you see the term for each, very probably you need to create groups

SELECT S.age, AVG(S.rating) FROM Sailors S GROUP BY S.agenote that:

- the select looks for

S.age, AVG(S.rating), which is exactly- attribute names mentioned in

grouping-list, or - terms with aggregate operations (e.g.,

MIN (S.age)`).

- attribute names mentioned in

- the select looks for

For Example

Task: Find the average rating for each sailor age greater than 25

Solution:

-

simply:

SELECT S.age, AVG(S.rating) FROM Sailors S WHERE S.age> 25 GROUP BY S.age

For Example

Task: Find the average rating for each sailor age with more than 4 sailors of that age

Solution:

-

Basically, we need each group have more than 4 members:

SELECT S.age, AVG(S.rating) FROM Sailors S GROUP BY S.age HAVING COUNT(*) > 4where notice that:

- the

HAVING COUNT(*) > 4will be discarding GROUPS fromGROUP BYthat has total member less than 4

- the

For Example

Task: Find the age of the youngest sailor with age > 18, for each rating with at least 2 such sailors (age older than 18).

Solution:

-

the trick is to filter

age>18early:SELECT S.rating, MIN(S.age) FROM Sailors S WHERE S.age > 18 GROUP BY S.rating HAVING COUNT(*) > 1

Under the hood, this is what happens:

-

the

FROMstep is skipped -

WHERE S.age > 18

-

SELECT S.rating, MIN(S.age)

notice that the aggregate operators are not evaluated at this point

-

GROUP BY S.rating

-

HAVING COUNT(*) > 1which eliminates by GROUPS

-

(Book-keeping) the aggregate operator is executed ` MIN(S.age)`:

For Example:

Task: For each red boat, print the bid and the number of reservations for this boat

Solution:

SELECT B.bid, COUNT(*) AS num_of_reservations

FROM Boats B, Reserves R

WHERE R.bid=B.bid AND B.color=‘red’

GROUP BY B.bid

Alternatively:

-

SELECT B.bid, COUNT(*) AS num_of_reservations FROM Boats B, Reserves R WHERE R.bid=B.bid GROUP BY B.bid, B.color HAVING B.color='red'which does:

GROUP BY B.bid, B.colorfirst, and then filter it usingHAVING

Group By With Correlated Queries

Reminder:

HAVINGuses group qualification, which is basically qualification expression evaluated inside EACH GROUP

- such as the average age of sailors in each group must be > 25

For Example:

Task: Find the age of the youngest sailor with age > 18, for each rating with at least 2 sailors (of any age)

Solution:

-

SELECT S.rating, MIN(S.age) FROM Sailors S WHERE S.age > 18 GROUP BY S.rating HAVING 1 < (SELECT COUNT (*) FROM Sailors S2 WHERE S.rating=S2.rating)where we have to use nested correlated SQL queries:

-

SELECT S.rating, MIN(S.age) FROM Sailors S WHERE S.age > 18 GROUP BY S.rating HAVING "FOR EACH GROUP, 1 < (SELECT COUNT (*) FROM Sailors S2 WHERE S.rating_of_each_group =S2.rating)"

-

Alternatively:

SELECT S.rating, MIN (S.age)

FROM Sailors S, (SELECT rating

FROM Sailors

GROUP BY rating

HAVING COUNT(*) >= 2) ValidRatings

WHERE S.rating=ValidRatings.rating AND S.age > 18

GROUP BY S.rating

where you are basically:

- computing ratings with at least 2 sailors first

- filtering the ages to $>$ 18

- group them by each rating, and find minimum

Null Values

Basically, since we have NULL values in SQL, we need to do logical comparisons with 3-values.

AND

| T | F | N | |

|---|---|---|---|

| T | T | F | N |

| F | F | F | F |

| N | N | F | N |

Other Joins

Outer Joins

Outer Joins

- rows included even if there is no match. This row is also indicated with

Nullfields

Left Outer Join

- rows in the left table that do not match any row in the right table appear exactly once, with column from the right table assigned

NULLvalues.

- if there is a match, then there could be multiple values since it is just a cartesian product + selection

- same as Left Join

Reminder

- In the end,

JOINs are just doing a cartesian product and a $\sigma$

SELECT S.sid, R.bid

FROM Sailors S LEFT OUTER JOIN Reserves R ON S.sid = R.sid

so that:

- every row of the left table appears in the result

- if rows from the left table did not match any in the join, then it still appears but has

null- regular join will not have this functionality

RIGHT OUTER JOIN:

- the reverse

FULL OUTER JOIN:

- both, left and right

Examples

Task: For each boat color, print the number of reservations for boats of that color (e.g., (red, 10), (blue, 3), (pink, 0))

Solution

SELECT B.color, COUNT(R.sid)

FROM Boats B LEFT OUTER JOIN Reserves R ON B.bid=R.bid

GROUP BY B.color

where:

- remember that each boat can only have one color

Task: Print the sid and name of every sailor and the number of reservations the sailor has made.

Solution:

SELECT S.sid, S.sname, COUNT(R.bid)

FROM Sailors S LEFT OUTER JOIN Reserves R ON S.sid=R.sid

GROUP BY S.sid, S.sname

Note that

- whenever you have

GROUP BY some_id, no matter how many attributes you add afterwards, it will not affect the result

Task: Print the sid of every sailor and the number of different boats the sailor has reserved.

Solution

SELECT SR.sid, COUNT(DISTINCT SR.bid)

FROM (SELECT S.sid, R.bid

FROM Sailors S LEFT OUTER JOIN Reserves R ON S.sid=R.sid) SR

GROUP BY SR.sid

Values

Basically this is used to create a constant table

For Example:

Creates a constant table

VALUES (1, 'one'), (2, 'two'), (3, 'three')

Insert into:

INSERT INTO Sailors

VALUES (22, ‘dustin’, 7, 30)

Some trick other tricks:

CREATE VIEW TopSailors AS (

SELECT sid, sname

FROM Sailors

WHERE ratings >= 10

UNION

VALUES (2264, ’Alex Biliris’)

)

Building up Complicated SQL Statements

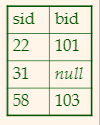

Consider the statement:

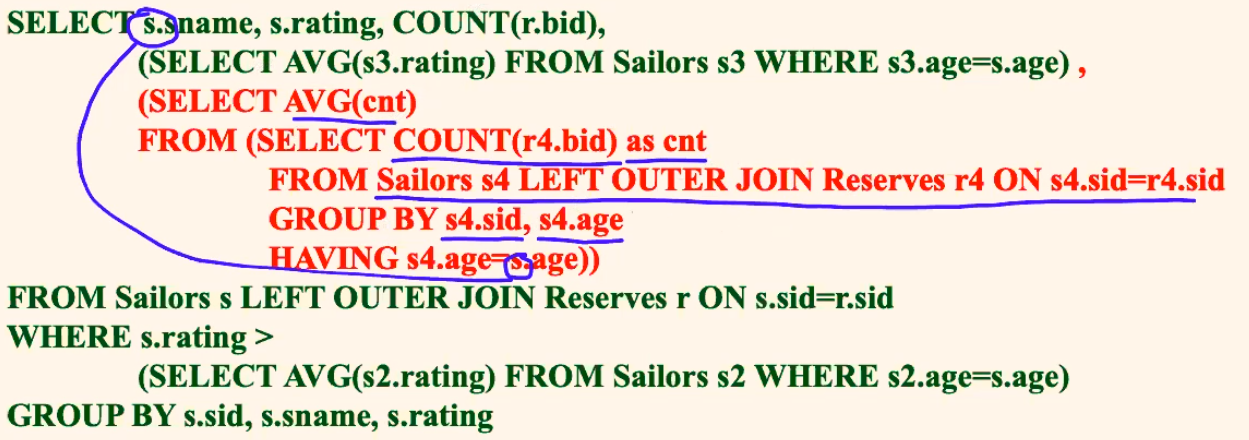

SELECT s.sname, s.rating, COUNT(r.bid),

(SELECT AVG(s3.rating) FROM Sailors s3 WHERE s3.age=s.age) ,

(SELECT AVG(cnt)

FROM (SELECT COUNT(r4.bid) as cnt

FROM Sailors s4 LEFT OUTER JOIN Reserves r4 ON s4.sid=r4.sid

GROUP BY s4.sid, s4.age

HAVING s4.age=s.age))

FROM Sailors s LEFT OUTER JOIN Reserves r ON s.sid=r.sid

WHERE s.rating>

(SELECT AVG(s2.rating) FROM Sailors s2 WHERE s2.age=s.age)

GROUP BY s.sid, s.sname, s.rating

To parse it, we should break it into parts

Part 1:

For each sailor with rating higher than the average rating of all sailors, print the name, rating, number of boats she has reserved

SELECT s.sname, s.rating, COUNT(r.bid)

FROM Sailors s LEFT OUTER JOIN Reserves r ON s.sid=r.sid

WHERE s.rating >

(SELECT AVG(s2.rating) FROM Sailors s2)

GROUP BY s.sid, s.sname, s.rating

Part 2:

For each sailor with rating higher than the average rating of all sailors of the same age, print the name, rating, number of boats she has reserved

SELECT s.sname, s.rating, COUNT(r.bid)

FROM Sailors s LEFT OUTER JOIN Reserves r ON s.sid=r.sid

WHERE s.rating >

(SELECT AVG(s2.rating) FROM Sailors s2 WHERE s2.age=s.age) /* added here */

GROUP BY s.sid, s.sname, s.rating

notice the correlated query:

(SELECT AVG(s2.rating) FROM Sailors s2 WHERE s2.age=s.age)

Part 3

For each sailor with rating higher than the average rating of all sailors of the same age, print the name, rating, number of boats she has reserved, and the average rating of sailors of the same age

SELECT s.sname, s.rating, COUNT(r.bid),

(SELECT AVG(s3.rating) FROM Sailors s3 WHERE s3.age=s.age) /* A COMPUTED COLUMN */

FROM Sailors s LEFT OUTER JOIN Reserves r ON s.sid=r.sid

WHERE s.rating >

(SELECT AVG(s2.rating) FROM Sailors s2 WHERE s2.age=s.age)

GROUP BY s.sid, s.sname, s.rating

notice that the correlated query is now at SELECT

(SELECT AVG(s3.rating) FROM Sailors s3 WHERE s3.age=s.age)which computes the value for each matchings3.age=s.age

Part 4

For each sailor with rating higher than the average rating of all sailors of the same age, print the name, rating, number of boats she has reserved, and the average rating of sailors of the same age and average number of reserved boats by sailors of the same age

SELECT s.sname, s.rating, COUNT(r.bid),

(SELECT AVG(s3.rating) FROM Sailors s3 WHERE s3.age=s.age) ,

(SELECT AVG(cnt) /* added nested query */

FROM (SELECT COUNT(r4.bid) as cnt

FROM Sailors s4 LEFT OUTER JOIN Reserves r4 ON s4.sid=r4.sid

GROUP BY s4.sid, s4.age

HAVING s4.age=s.age))

FROM Sailors s LEFT OUTER JOIN Reserves r ON s.sid=r.sid

WHERE s.rating>

(SELECT AVG(s2.rating) FROM Sailors s2 WHERE s2.age=s.age)

GROUP BY s.sid, s.sname, s.rating

With - Auxiliary SQL

This is a trick so that you can give names to temporary tables AND use them!

Basically, you use a subquery, and name it:

WITH RedBoats AS (

SELECT *

FROM Boats WHERE color = ‘red’

), /* comma, additional WITH query */

RedBoatReservations AS (

SELECT bid, COUNT(*) AS cnt

FROM Reserves

WHERE bid IN (SELECT bid FROM RedBoats)

GROUP BY bid) /* no comma, one main SQL expected */

SELECT bid FROM RedBoatReservations

WHERE cnt> (SELECT AVG(cnt) FROM RedBoatReservations)

Note

- Not all systems support

WITH, but this is obviously very useful since it can:

- make your SQL look easier to understand

- avoid repetitive queries

Security and Authorization

Basically the goal is to make sure:

- Secrecy: Users should not be able to see things they are not supposed to.

- E.g., A student can’t see other students’grades.

- Integrity: Users should not be able to modify things they are not supposed to.

- E.g., Only instructors can assign grades.

- Availability: Users should be able to see and modify things they are allowed to.

Access Control

Access Control

- Based on the concept of access rights or privileges for objects

- e.g. privileges to

select/updatea table, etc.- this is achieved by the

GRANTcommand- Creator of a table or a view automatically gets all privileges on it.

GRANT and Revoke

Grant Command

The command looks like:

GRANT privileges ON object TO users [WITH GRANT OPTION]where:

privilegescan be the following:

SELECTINSERT/UPDATE (col-name)

- can insert/update tuples with non-null or non-default values in this column.

DELETEREFERENCES (col-name)

- can define foreign keys (to the

col-nameof other tables). This is actually powerful, in that the foreign table might not be able to delete/update is there isON DELET NO ACTION, or equivalent.- this also means that by default, you can only foreign key in our own granted access tables

ALLall privileges the issuer ofGRANThasobjectcan be a table, a view, or an entire databaseuserswill be the logged inusernamein the DBMSGRANT OPTION:

- If a user has a privilege with the

GRANT OPTION, the user can pass/propagate privilege on to other users (with or without passing on the GRANT OPTION).However, only owner can execute

CREATE,ALTER, andDROP.

For Example:

GRANT INSERT, SELECT ON Sailors TO Horatio

where Horatio can query Sailors or insert tuples into it.

GRANT DELETE ON Sailors TO Yuppy WITH GRANT OPTION

where Yuppy can delete tuples, and also authorize others to do so.

GRANT UPDATE (rating) ON Sailors TO Dustin, Yuppy

where Dustin and Yuppy can update (only) the rating field of Sailors table.

Revoke

- When a privilege is revoked from

X, it is also revoked from all users who got it solely fromX.

Grant and Revoke on Views

Since Views have some dependencies of the underlying table:

- If the creator of a view loses the

SELECTprivilege on an underlying table, the view is dropped - If the creator of a view loses a privilege held with the

grant optionon an underlying table, (s)he loses the privilege on the view as well; so do users who were granted that privilege on the view (it will cascade/propagate)!

Views and Security

Advantages of using Views

- Views can be used to present necessary information (or a summary), while hiding details in underlying relation(s).

- Creator of view has a privilege on the view if (s)he has at least the

SELECTprivilege on all underlying tables.

Together with GRANT/``REVOKE` commands, views are a very powerful access control tool.

Authorization Graph

This is how the system actually keeps track of all the authorizations.

Authorization Graph

- Authorization Graph Components:

- Nodes are users

- Arcs/Edges are privileges passed from one user to the other

- Labels are the privileges

- The system starts with a “``system

” node as a “user`” granting privileges.

- And for all times, all edges/privileges must be reachable from the

systemnode. If not, the unreachable privilege will be removed as well.- Cycles are possible

Role-Based Authorization

The idea is simple:

- privileges are assigned to roles.

- which can then be assigned to a single user or a group of users.

This means that

- Roles can then be granted to users and to other roles (propagation).

- Reflects how real organizations work.

Database Application Development

This section will talk about:

- SQL in application code

- Embedded SQL

- Cursors

- Dynamic SQL

- Via drivers– API calls (eg, JDBC, SQLJ)

- Stored procedures

The aim is to talk about: how to build an application from databases

SQL in Application Code

In general, there are two approaches:

- Embed SQL in the host language (Embedded SQL, SQLJ)

- in the end, there will be a preprocessor that converts your related code to SQL statements

- Create special API to call SQL commands (JDBC)

- queries using APIs

However, this might be a problem:

Impedance Mismatch

- SQL relations are (multi-) sets of records, with no a priori bound on the number of records.

- No such data structure exist traditionally in procedural programming languages such as C++.

- (Though now: STL)

- SQL supports a mechanism, called cursor, to handle this.

- i.e. we are retrieving the data in steps

Embedded SQL

Approach: Embed SQL in the host language.

- A preprocessor converts the SQL statements into special API calls.

- Then a regular compiler is used to compile the code.

Language constructs:

-

Connecting to a database:

EXEC SQL CONNECT -

Declaring variables

EXEC SQL BEGIN (END) DECLARE SECTION -

Statements

EXEC SQL Statement

For Example: C Application Code

EXEC SQL BEGIN DECLARE SECTION

char c_sname[20];

long c_sid;

short c_rating;

float c_age;

EXEC SQL END DECLARE SECTION

so that the SQL interpreter/convertor knows that:

-

the following variables

char c_sname[20]; long c_sid; short c_rating; float c_age;can be used in queries

Then, you can do:

EXEC SQL SELECT

SELECT S.name, S.age INTO :c_sname, :c_age

FROM Sailors S

WHERE S.Sid = :c_sid

where the INTO keywords stores the queried data into host/code variables.

Note

- For

C, there are two special “error” variables:

SQLCODE(long, is negative if an error has occurred)SQLSTATE(char[6], predefined codes for common errors)- However, a problem is that you sometimes don’t know how many queried data will be returned

- therefore, you need Cursors

Cursor

The idea is simple:

- Declare a cursor on a relation or query statement

- Open/Call that cursor, and repeatedly fetch a tuple then move the cursor, until all tuples have been retrieved.

- basically, you will have an

iterator - Can also modify/delete tuple pointed to by a cursor.

- basically, you will have an

For Example

Cursor that gets names of sailors who’ve reserved a red boat, in alphabetical order

EXEC SQL DECLARE sinfo CURSOR FOR

SELECT S.sname

FROM Sailors S, Boats B, Reserves R

WHERE S.sid = R.sid AND R.bid = B.bid AND B.color='red'

ORDER BY S.sname

/* some other code */

EXEC SQL CLOSE sinfo

In terms of full code:

char SQLSTATE[6];

EXEC SQL BEGIN DECLARE SECTION

char c_sname[20];

short c_minrating;

float c_age;

EXEC SQL END DECLARE SECTION

c_minrating = random();

EXEC SQL DECLARE sinfo CURSOR FOR

SELECT S.sname, S.age

FROM Sailors S

WHERE S.rating > :c_minrating

ORDER BY S.sname

do{

EXEC SQL FETCH sinfo INTO :c_sname, :c_age, /* does the data iteration */

printf("%s is %d years old\n", c_sname, c_age);

} while (SQLSTATE != '02000');

EXEC SQL CLOSE sinfo

where:

SQLSTATE == '02000'means the end of input/cursor

Dynamic SQL

The idea is that:

- the SQL query strings are now dynamic in compile time, based on some of your coding logic

- an example would be the

MyBatisdynamic SQL I used in Spring Boot Projects

Drivers and Database APIs

This is the other approach of using Drivers/APIs

- Rather than modify compiler, add library with database calls (API)

- Pass SQL strings from language, presents result sets in a language-friendly way (CBC, JDBC, and others)

- Supposedly DBMS-neutral

- a “driver” traps the calls and translates them into DBMS-specific code, by their specific Drivers

- e.g. the code you write will be the same, but if you change your DBMS, you need to change your drivers

Stored Procedures

This is actually the third approach of doing this. The idea of a stored procedure is:

- the application related query is stored and executed in the DBMS directly

- of course, they can still have parameters passed in from your code

- therefore, this is executed in the process space of the server

For Example

First, we create and store the needed query in DBMS

CREATE PROCEDURE ShowNumReservations

SELECT S.sid, S.sname, COUNT(*)

FROM Sailors S, Reserves R

WHERE S.sid = R.sid

GROUP BY S.sid, S.sname

where this is just a plain SQL. The more interesting case would be:

CREATE PROCEDURE IncreaseRating(IN sailor_sid INTEGER, IN increase INTEGER)

UPDATE Sailors

SET rating = rating + increase

WHERE sid = sailor_sid

where:

INmeans input parameter form the caller

In general:

- Stored procedures can have parameters with mode

IN,OUT,INOUT

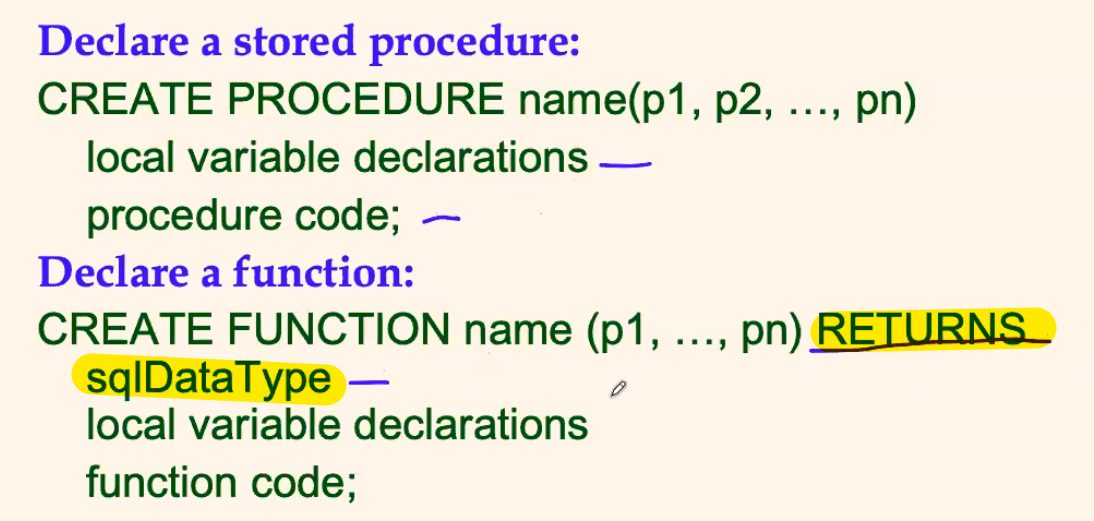

SQL/PSM

Most DBMSs allow users to write stored procedures in a simple, general-purpose language (close to SQL)

- examples would be SQL/PSM standard

The syntax looks like the following:

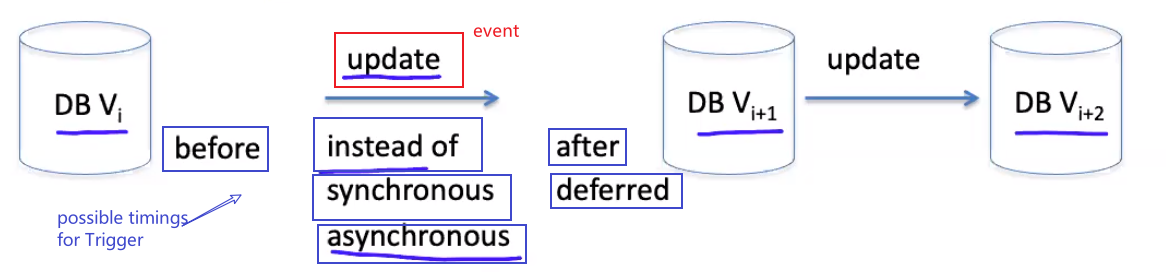

Triggers - Server Programming

A procedure that starts automatically when a specified change occurs

- Changes could be:

INSERT,UPDATE,DELETE(others too)

Triggers

Think of triggers having three parts (ECA Components)

- Event associated with the trigger

- Condition (optional) – should it be fired?

- Action – the trigger procedure/function/what should be executed

Can be attached to both tables and views

Some possible timings that you can specify for a trigger to happen is

where:

beforemeans before the evaluation of theeventaftermeans afterinstead ofmeans, exactly at the point when trigger condition is met, stop the execution of your program, and start triggersynchronousmeans execute the trigger right after your program/eventasynchronousmeans execute the trigger asynchronously when the condition is met. So it does not stop the execution of your program

However, sometimes you might need to consider:

-

Trigger should happen at what granularity of invocation? Record (row) level or statement level?

- A row-level trigger is invoked once for each row affected by the triggering statement

- A statement-level trigger is invoked only once, regardless of the number of rows affected by the triggering statement

-

Hard to determine chain of events

- cascading triggers

- recursive invocation (potentially endless) of the same triggers possible

-

Transactional issues; trigger could be seen as

- part of the triggering statement or

- independent action

i.e. should the trigger be aborted if the transaction is aborted?

Difference between Constraints and Triggers

- Constraints describe what the database’s state is (they have to be

TRUEor else … )

- The effects of constraints are known – no mystery if a constraint is violated (gives error)

- They do not change the state of the database

- Triggers are programs - very flexible mechanism

- theoretically, you might not be entirely sure what happens when a trigger is executed

Order of Execution

Reminder

- Triggers can be configured at many different timings

- The granularity of trigger could be row level of statement level

- Statement-level BEFORE triggers

- fire before the statement starts to do anything

- Statement-level AFTER triggers

- fire at the very end of the statement

- Row-level BEFORE triggers

- fire immediately before a row is operated on

- Row-level AFTER triggers

- fire at the end of the each row operation (before statement-level AFTER triggers)

- Row-level INSTEAD OF triggers (on views only)

- fire immediately as each row in the view is identified as needing to be operated on.

If more than one trigger is defined for the same event/relation

- execute them In alphabetical order by trigger name!

Condition in Triggers

Reminder

- Recall that a triggers having three parts (ECA Components)

- Event associated with the trigger

- Condition (optional) – should it be fired?

- Action – the trigger procedure/function/what should be executed

- Now, we want to look at what conditions can be specified

Commonly:

`WHEN Condition

If it exists, the condition needs to be true for the trigger to fire

- e.g.,

WHEN time-of-day() > 9:30:05Only for row-level

AFTERtriggers, the trigger’s condition can examine the values of the columns of

- the OLD row (before an UPDATE or DELET)

- and the NEW row (after an UPDATE or DELET)

in order words, you can use the parameters

oldandnew:

- e.g.

WHEN new.salary > 10*old.salary.

Examples of Triggers

Syntax:

CREATE TRIGGER name

[BEFORE | AFTER | INSTEAD OF ] event_list ON table

[WHEN trigger_qualifications]

[FOR EACH ROW procedure |

FOR EACH STATEMENT procedure ]

where:

- Event ->

event_list ON table- activates the trigger

- Condition ->

WHEN trigger_qualifications- should it be fired?

- Action ->

[FOR EACH ROW procedure | FOR EACH STATEMENT procedure ]- the actual procedure to run

Then some examples would be:

For Example

CREATE TRIGGER init_count BEFORE INSERT ON Students

DECLARE count INTEGER; /* like a GLOBAL VARIABLE */

BEGIN

count := 0

END

where:

-

the Event is

BEFORE INSERT ON Students -

the Action is:

DECLARE count INTEGER; BEGIN count := 0 END -

the

DECLARE count INTEGER;is like a global variable declared

and then

CREATE TRIGGER incr_count AFTER INSERT ON Students

WHEN (NEW.age < 18)

FOR EACH ROW

BEGIN

count := count + 1;

END

For Example

If we want to populate data into another table automatically:

CREATE TRIGGER stats AFTER INSERT ON Students

REFERENCING NEW TABLE Stats /* if we want to modify/deal with other tables */

FOR EACH STATEMENT

INSERT INTO Stats

SELECT age, AVG(gpa)

FROM Students

GROUP BY age

where:

- now, after you insert new data into

Students, the tableStatswill also be populated

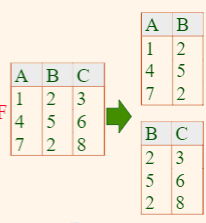

Normal Forms

This section talks about Schema Refinement and Normal Forms.

The Evils of Redundancy

- Redundancy is at the root of several problems associated with relational schemas:

- redundant storage, insert/delete/update anomalies

- Main refinement technique: decomposition (replacing ABCD with, say, AB and BCD, or ACD and ABD).

- in the end, we should have tables with a single, clear functional dependency.

Functional Dependency

Functional Dependency

- A functional dependency $X \to Y$ holds over relation $R$ if, for every instance $r$ of $R$:

- i.e. given two tuples in $r$, if the $X$ values agree, then the $Y$ values must also agree. (X and Y are sets of attributes.). An example would be $X$ being the primary key of a table $r$

- In fact, this means if $K$ is a candidate key of $R$, then $K \to R$

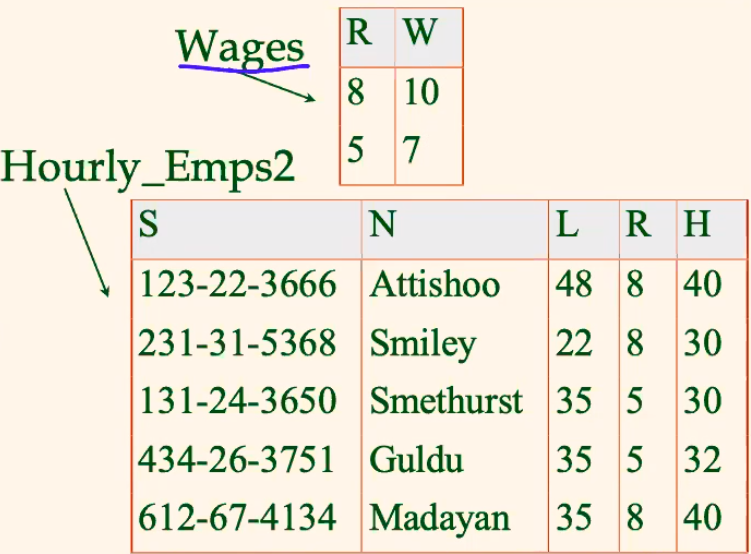

Note that the above notation $X → Y$ is read as:

- $X$ functionally determines $Y$

- or $Y$ functionally depends on $X$

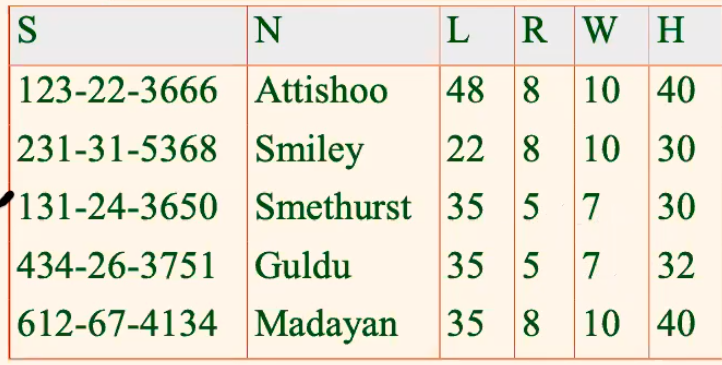

For Example

Consider the schema:

- Consider relation obtained from

Hourly_Emps (ssn, name, lot, rating, hrly_wages, hrs_worked)

We denote this relation schema by listing the attributes: SNLRWH

- This is really the set of attributes

{S,N,L,R,W,H}

Then some functional dependencies include

ssnis the key: $S \to SNLRWH$ratingdetermineshourly_wages: $R \to W$

Note

- the reverse is often not true, i.e. you might not have $W \to R$

- if $R = 10 \to W =100$, and $R=11 \to W=110$

- obviously, reversing does not work

For Example: Functional Dependency

Instead of having:

where:

- $R\to W$, so that $W$ actually does not depend no $S$, but $R$!

then you have the following problem due to redundancy

Update anomaly:

- Can we change $W$ in just the1st tuple of $SNLRWH$? (without messing up the mapping $R\to W$)

Insertion anomaly:

- What if we want to insert an employee and don’t know the hourly wage for his rating?

Deletion anomaly:

- If we delete all employees with rating $5$, we lose the information about the wage for rating $5$!

Therefore:

where now:

-

the table makes it clear that: $S \to R$, and then $R \to W$

-

no redundancy and clear functional dependencies

Checking Functional Decomposition

we have decomposed the schema to better forms. To check if it is correct:

perform a

JOINand see if the table matches the original onethe two tables solution means that it must be

- $S \to R$

- $R \to W$

hence two tables. But if $R \text{ and } H\to W$, then the above design would not work

Properties of Functional Dependency

Some obvious ones are

Armstrong’s Axioms

- Armstrong’s Axioms (X, Y, Z are sets of attributes):

- Reflexivity: If $Y \subseteq X$, then $X \to Y$

- e.g. $Y = BC, X=ABC$, then obviously $ABC \to BC$

- Augmentation: If $X \to Y$, then $XZ \to YZ$ for any Z

- (Note: the opposite is not true!)

- Transitivity: If $X \to Y$ and $Y \to Z$, then $X \to Z$

- Following from the axioms are:

- Union: If $X \to Y$ and $X \to Z$, then $X \to YZ$

- Decomposition: If $X \to YZ$, then $X \to Y$ and $X \to Z$

Closure Property

- the set of all FDs are closed.

- recall that closure means if $F$ is the set of FDs, and I apply functional dependencies on the elements of the set, am I still inside $F$? (not getting anything new).

- e.g. $F = {A \to B, B \to C, C D \to E }$ , can you cannot get $A\to E$. Otherwise it is not closed.

- proof see page 9-10 of PPT

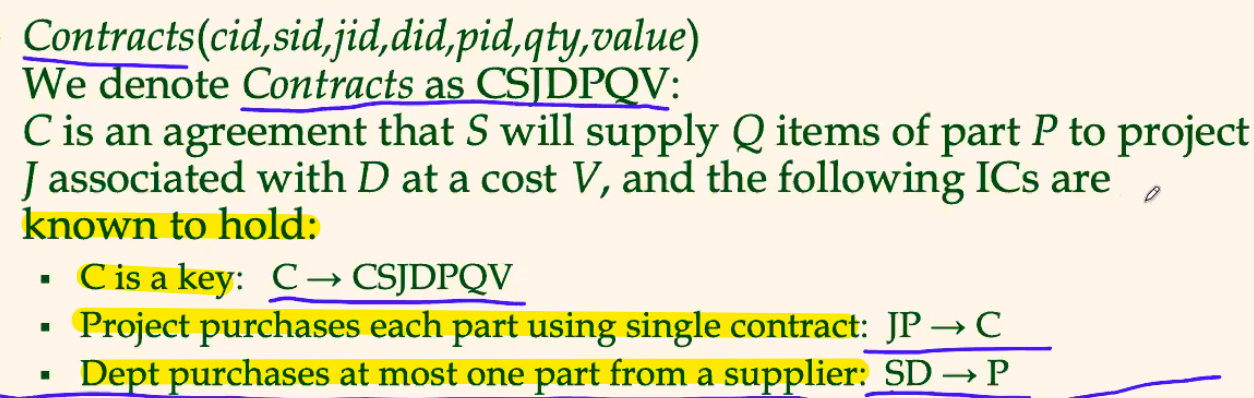

For Example

Then, this means that:

- $JP \to CSJDPQV$

- because $JP \to C$, $C \to CSJDPQV$ using transitivity

- and then $SDJ \to JP$

- because $SD \to P$, using augmentation

- and hence that $SDJ \to CSJDPQV$

- because $SDJ \to JP$, $JP \to CSJDPQV$

So I get two primary keys

- $JP \to CSJDPQV$

- $SDJ \to CSJDPQV$

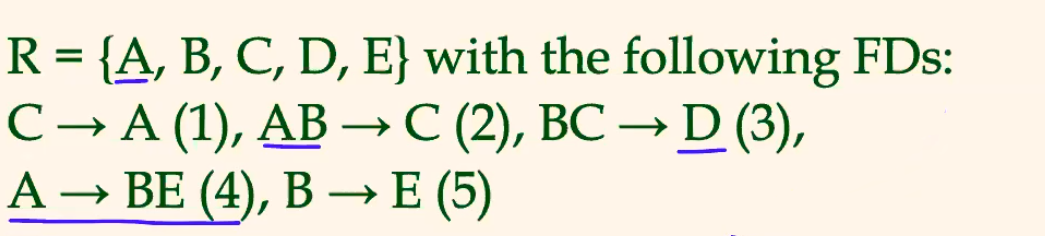

For Example: Closure

Consider:

then we can get: $$

F_A^+ = { A \to A, A\to BE, A\to ABCDE}