COMS4771 Machine Learning

- Logistics

- Basics and MLE

- Nearest Neighbors and Decision Trees

- Perceptron and Kernelization

- Support Vector Machine

- Regression

- Statistical Learning Theory

- Unsupervised Learning

- Future Classes

Logistics

Homework:

- Must type your homework (no handwritten homework).

- Please include your name and UNI.

Exams:

- Exam1 will cover all the material discussed before Exam1

- Exam2 will be comprehensive and will cover all the course material covered during the semester.

- The exams are mainly conceptual, on mathematical models. So there will be no coding questions.

Resources:

- http://www.cs.columbia.edu/~verma/classes/ml/index.html

Basics and MLE

Quick recap on notation.

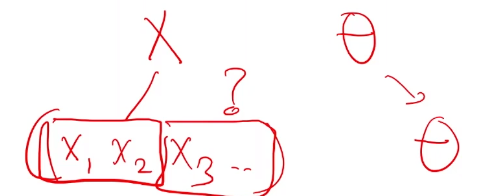

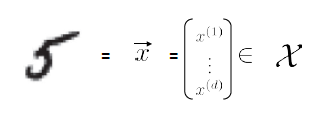

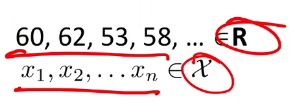

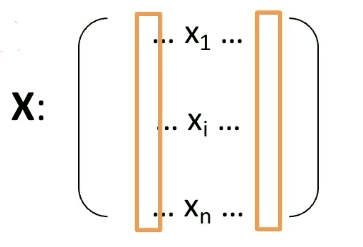

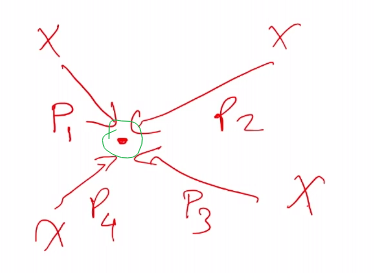

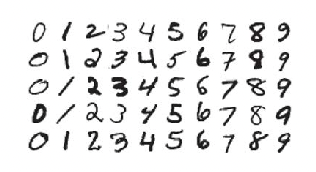

Consider the input of:

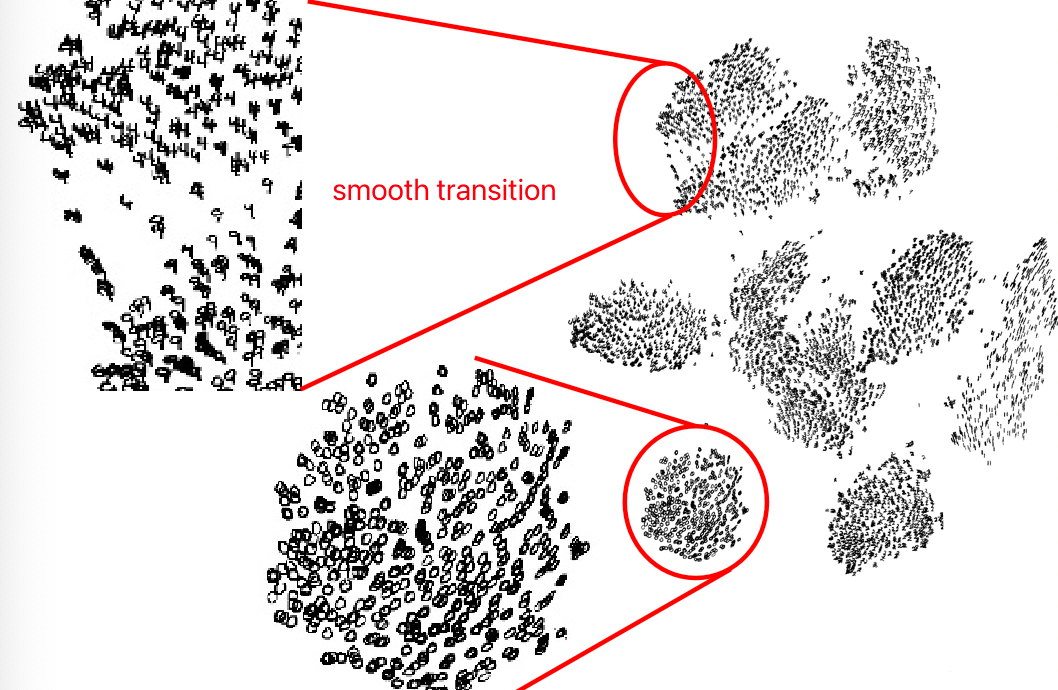

.png)

then basically what we will do is:

where we

- use $d$ to represent that the dimensionality of the input measurement, since $d$=dimension

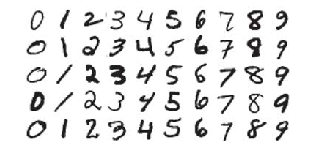

- in this case, we just converted an e.g. $10 \times 10$ sized image to vector with $d=100$

Then, we need some function $f$ that can map that input to the output space $\mathcal{Y}$

where here:

- output space is has only a discrete set of $10$ values

Then obviously, the aim of our task is to figure our $f$.

In summary, for Supervised Learning, we need:

- Data: $(\vec{x}_1, y_1), (\vec{x}_2, y_2), …, (\vec{x}_m, y_m) \in \mathcal{X} \times \mathcal{Y}$

- Assumption: there exists some optimal function $f^* : \mathcal{X} \to \mathcal{Y}$ such that $f^*(\vec{x}_i)=y_i$ for most $i$

- Learning Task: given $n$ examples from the data, find approximation $\hat{f} \approx f^*$ (as close as possible)

- Goal: find $\hat{f}$ that gives the mostly correct prediction in unseen examples

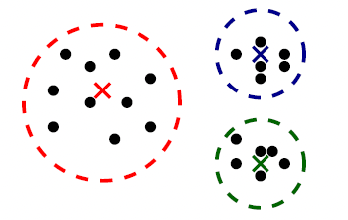

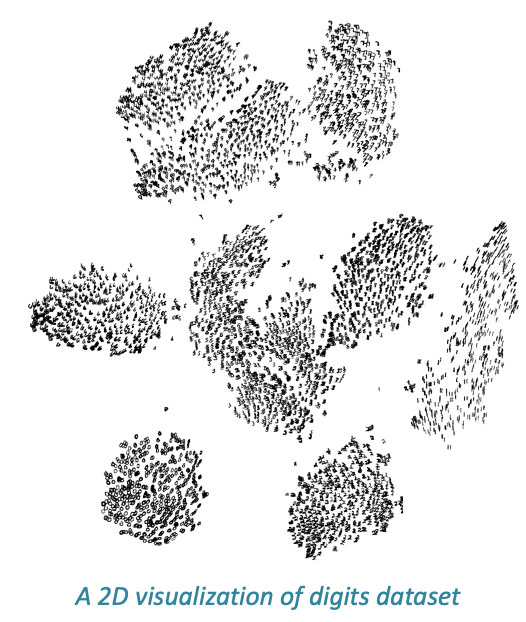

For Unsupervised Learning, the difference is that:

- Data: $\vec{x}_1, \vec{x}_2, …, \vec{x}_m \in \mathcal{X}$

- Learning Task: discover the structure/pattern given $n$ examples from the data

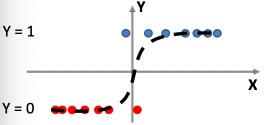

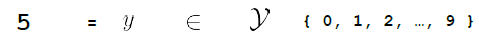

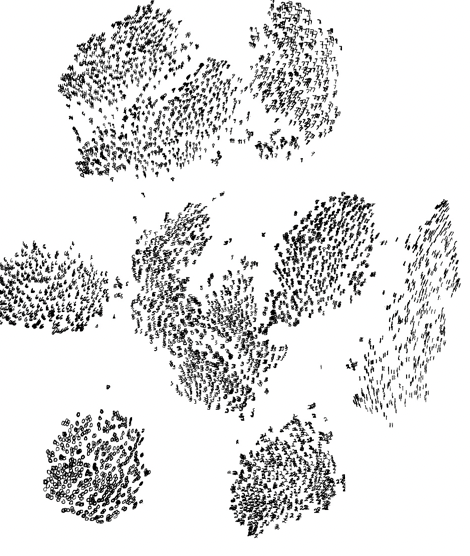

Graphically, we basically do the following:

The key is for models to generalize well, instead of just memorizing the training data. This is basically the hardest part.

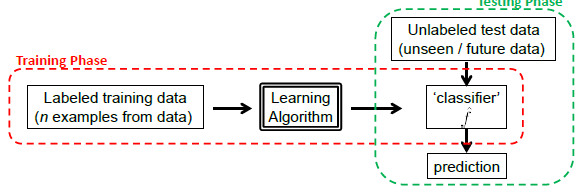

Statistical Modelling Approach

Consider we are given a picture, as shown above as well:

Heuristics:

- let this data be $\vec{x}_1$. Then idea is that the probability of $(\vec{x}_1, y_1=5)$ should be a lot more likely than $\vec{x}_1, y_1=1$, for example. Therefore, we can consider some non-uniform distribution of $(\mathcal{X} \times \mathcal{Y}) \sim D$.

- Then, we basically assume that we have $(\vec{x}_1, y_1), (\vec{x}_2, y_2), …$ drawn IID (Independently and identically distributed) from $\mathcal{D}$.

- independent: drawing data $(\vec{x}_2, y_2)$ does not depend on the value of $(\vec{x}_1, y_1)$

- identically distributed : data are drawn from the same distribution $\mathcal{D}$

Therefore, basically we are doing:

Then, to select $\hat{f} \in \mathcal{F}$, basically there are two approaches:

- Maximum Likelihood Estimation - MLE

- Maximum A Posteriori - MAP

- Optimization of some custom loss criterion

Maximum Likelihood Estimation

Given some data, we consider our model/distribution being defined by some parameters $\theta$, such that:

\[\mathcal{P} = \{ p_\theta | \theta \in \Theta \}\]for instance:

- if you assumed a Gaussian distribution, then $\Theta \in \mathbb{R}^2$ would include any possible combination of $(\mu, \sigma^2)$

- so each model is defined by a $p_\theta$, and the set of all possible models is $\mathcal{P}$

- $p_\theta = p_\theta(\vec{x}_i)$, basically takes in our data and spits out the probability of generating this input

If each model $p_\theta$ is a probability model, then we can find $\theta$ that best fits the data by using the maximum likelihood estimation.

Heuristics

- suppose we have models $p_1, p_2, …$, given my data being $\vec{x}_1, \vec{x}_2, …$, what it the most probable model/distribution that generated my data?

So we define the likelihood to be:

\[\mathcal{L}(\theta | X) := P(X|\theta) = P(\vec{x}_1, ..., \vec{x}_n | \theta)\]where this means that:

- consider we are looking at some model with $\theta$, what is the probability that this model $p_\theta$ generated the data set $X$?

Now, using the IID assumption, we can expand this into:

\[P(\vec{x}_1, ..., \vec{x}_n | \theta) = \prod_{i=1}^n P(\vec{x}_i | \theta) = \prod_{i=1}^n p_\theta(\vec{x}_i)\]The interpretation is simple: How probable(or how likely) is the data $X$ given the model $p_\theta$?

MLE

Therefore, MLE is about:

\[\arg \max_\theta \mathcal{L}(\theta | X) = \arg \max_\theta \prod_{i=1}^n p_\theta (\vec{x}_i)\]

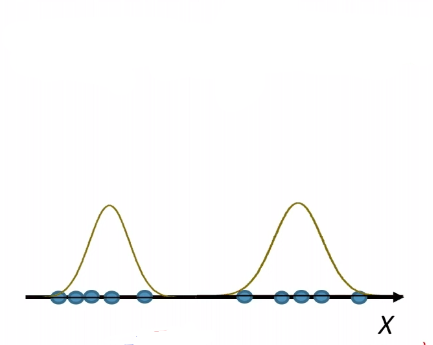

Example: Fitting the best Gaussian

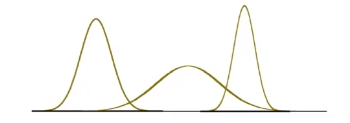

Consider the case where we have some height data:

For now, we ignore the labelling.

- a better way would be to think that all those are having the same label, so we are learning currently $P[X=\vec{x}\vert Y=\text{some label}]$

And suppose that we know this data is generated from a Gaussian, so then we know:

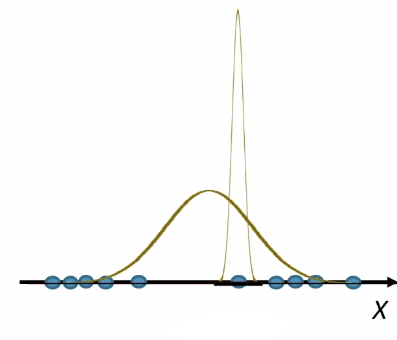

\[p_\theta (x) = p_{\{\mu, \sigma^2\}}(x)L=\frac{1}{\sqrt{2\pi \sigma^2}} \exp(-\frac{(x-\mu)^2}{2\sigma^2})\]So our model space is the set of Gaussians, some of which looks like:

Now, we just need to do the MLE:

\[\begin{align*} \arg \max_\theta \mathcal{L}(\theta | X) &= \arg \max_\theta \prod_{i=1}^n p_\theta (\vec{x}_i)\\ &= \arg \max_\theta \prod_{i=1}^n \frac{1}{\sqrt{2\pi \sigma^2}} \exp(-\frac{(x-\mu)^2}{2\sigma^2}) \end{align*}\]while this may be painful to do, but we have some tricks:

-

Using $\log$ instead, since logarithmic functions are monotonic, hence:

\[\arg \max_\theta \,\,\mathcal{L}(\theta | X) = \arg \max_\theta \,\, \log \mathcal{L}(\theta | X)\] -

To find the maximum, we basically analyze the “stationary points” of the $\mathcal{L}$ by taking derivatives

- for high order parameter, we need to use partial derivatives

Hence we consider:

\[\begin{align*} l(\theta) &= \log L(\theta) \\ &= \log \prod_{i=1}^m \frac{1}{\sqrt{2\pi} \sigma} \exp{\left( - \frac{(x_i - \mu)^2)}{2 \sigma^2} \right)}\\ &= \sum_{i=1}^m \left( \log\frac{1}{\sqrt{2\pi} \sigma} - \frac{(x_i - \mu)^2}{2 \sigma^2} \right)\\ &= m \log\frac{1}{\sqrt{2\pi} \sigma} -\sum_{i=1}^m\left( \frac{(x_i - \mu)^2}{2 \sigma^2} \right)\\ &= m \log\frac{1}{\sqrt{2\pi} \sigma} -\frac{1}{\sigma^2}\cdot \frac{1}{2}\sum_{i=1}^m (x_i - \mu)^2 \end{align*}\]notice here the difference between the other note:

- we had $(y_i - \theta^T x_i)^2$ instead of $(x_i - \mu)^2$, since we are currently ignoring the label.

Now, computing the maximum:

\[\arg \max_{\mu, \sigma^2} \left( m \log\frac{1}{\sqrt{2\pi} \sigma} -\frac{1}{\sigma^2}\cdot \frac{1}{2}\sum_{i=1}^m (x_i - \mu)^2 \right)\]First we compute $\mu$ by partial derivatives:

\[0 = \nabla_\mu \left( m \log\frac{1}{\sqrt{2\pi} \sigma} -\frac{1}{\sigma^2}\cdot \frac{1}{2}\sum_{i=1}^m (x_i - \mu)^2 \right)\]Solving this yields:

\[\mu_{MLE} = \frac{1}{n}\sum_{i=1}^m x_i\]for $m$ samples. This is basically the sample mean.

Similarly, maximizing $\sigma^2$:

\[\sigma^2_{MLE} = \frac{1}{n}\sum_{i=1}^n (x_i - \mu)^2\]Therefore, we have now found our best, MLE, model with Gaussian distribution $p_\theta = p_{{\mu, \sigma^2}}$.

- in other words, MLE is just figuring out the best parameter for the assumed distribution $\mathcal{D}$ for the dataset $X$

Now, for other examples, other models usually used are:

- Bernoulli model (coin tosses)

- Multinomial model (dice rolls)

- Poisson model (rare counting events)

- Multivariate Gaussian Model - most often the case

- Multivariate version of other scale valued models (basically the first three)

Multivariate Gaussian

Basically, we have:

\[p(x;\mu, \Sigma) = \frac{1}{(2\pi)^{d} \det(\Sigma)} \exp\left( -\frac{1}{2}(x-\mu)^T \Sigma^{-1}(x-\mu) \right)\]where:

-

$\mu$ is a vector here in $\mu \in \mathbb{R}^d$

-

$\Sigma \in \mathbb{R}^{d \times d}$ is the variance is now a covariance matrix, which is symmetric and positive semi-definite

MLE to Classification

For labelled data, suppose labels are $y_1, y_2, … \in \mathcal{Y}$.

Then, our ending function should be (this is justified soon):

\[\hat{f}(\vec{x}) = \arg \max_{y \in \mathcal{Y}} P(y \in Y | \vec{x})\]basically, if we have $(y_1=0, y_2=1)$, then we are deciding if $P(y=0\vert \vec{x})$ is larger or $P(y=1\vert \vec{x})$ is larger.

We can simplify this using Bayes Rule:

\[\begin{align*} \hat{f}(\vec{x}) &= \arg \max_{y \in \mathcal{Y}} P(Y=y | X=\vec{x}) \\ &= \arg \max_{y \in \mathcal{Y}} \frac{P(\vec{x} | y)P(y)}{P(\vec{x})}\\ &= \arg \max_{y \in \mathcal{Y}} P(X=\vec{x} | Y=y)\cdot P(y) \end{align*}\]where:

- the third equality comes from the fact that we are looking for $\arg \max_{y \in \mathcal{Y}}$ which is independent of $P(\vec{x})$.

- the probability $P(y)$ is also called the class prior.

- The probability of something happening regardless of your "features/condition".

- the probability $P(\vec{x}\vert y)$ is also called the class conditional/probability model.

- Given a $y$, e.g. being fraud email, how is the feature $\vec{x}$ is distributed over it, e.g. each feature is distributed as a Gaussian

- once we decide on the above, we can plugin an input $\vec{x}^{(1)}$ and compute the probability

Now, we want to use MLE to find out the quantity $P(\vec{x}\vert y)$ and $P(y)$.

Side Note

In statistics, you might see priori and posterior estimation like the follows, though the meaning of them can be applied as the ones above.

Given that the data samples $X=x_1,x_2,…,x_m$ are distributed in some distribution determined by $\mathcal{D}(\theta)$, and that the parameters $\theta$ itself is distributed by $\theta \sim \mathcal{D}(\rho)$, then:

\[P(\theta|X) = \frac{P(\theta)P(X|\theta)}{P(X)}\]where, interpreting it as the following order makes sense:

- $P(X\vert \theta)$ indicates that, if we fixed some parameter $\theta$, then the probability of seeing the data $X$

- $P(\theta)$ indicates the probability of seeing the above fixed $\theta$

and the second one (with $P(\theta)$) is called the a priori estimation, and the posterior estimation is our resulting $P(\theta\vert X)$.

For Example:

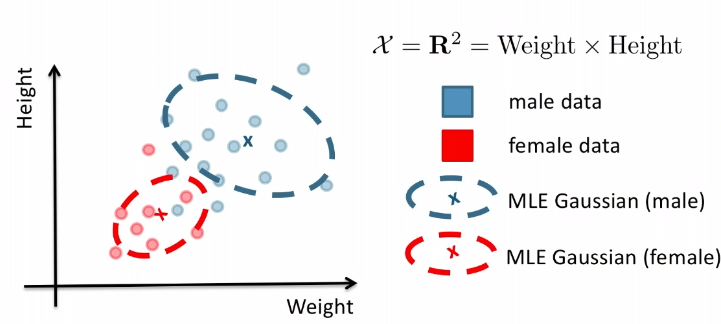

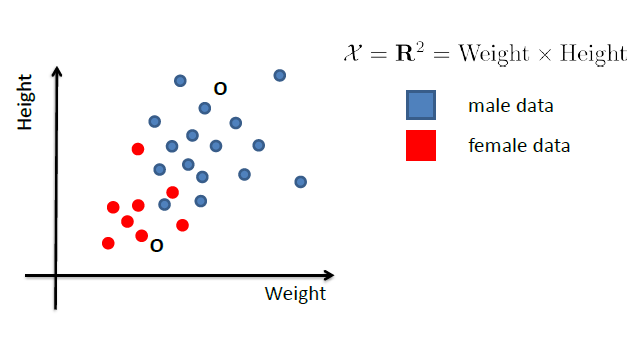

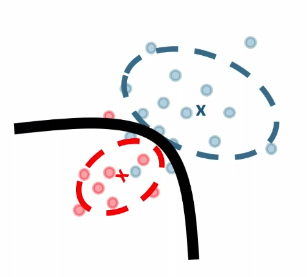

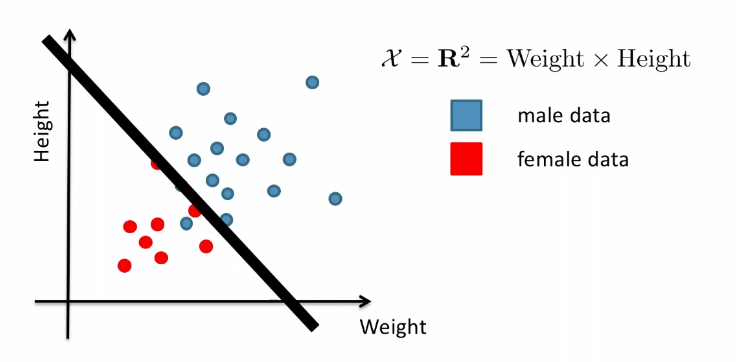

Consider the case of distinguishing between $\text{male}$ and $\text{female}$, based on features such as height and weight.

Given the dataset of $X$.

Then, what we know already would be:

- $P(Y = \text{male})$ = fraction of training data labelled as male

- (note that the TRUE probability is not this, of course, this is just an estimate of what the true probability is)

- $P(Y = \text{female})$ = fraction of training data labelled as female

Finally, to learn the class conditions:

- $P(X\vert Y=\text{male}) = p_{\theta_\text{male}}(X)$

- $P(X\vert Y=\text{female})= p_{\theta_\text{female}}(X)$

This we can do MLE to find out $\theta_{\text{male}}$ and $\theta_{\text{female}}$.

- if it is normally distributed, then we can find the parameter = mean + variance matrix easily

- then we just use MLE to figure out $\mu, \sigma$ for the normal distribution

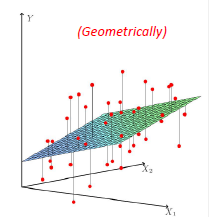

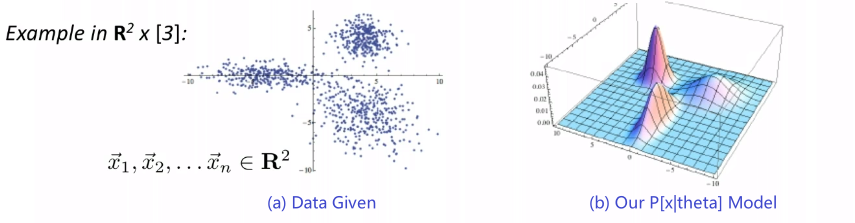

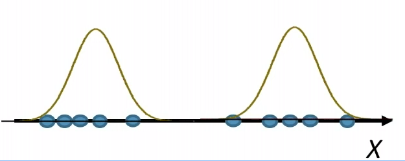

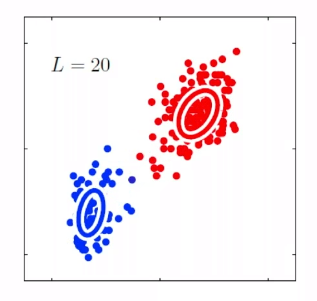

Graphically, the fits might look like:

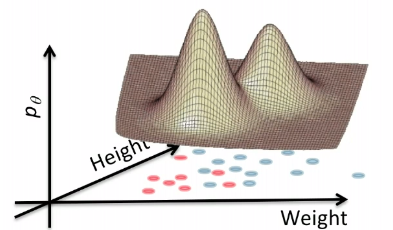

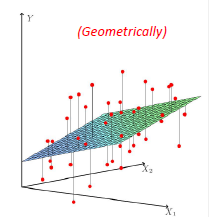

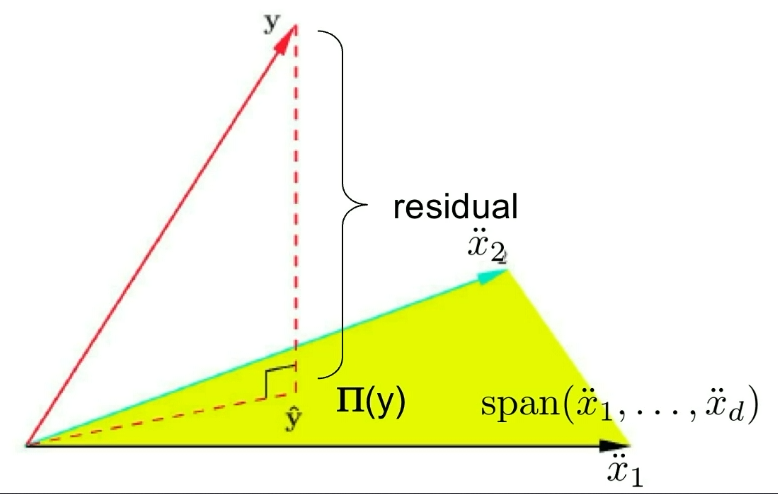

In a 3D fashion:

And basically this is our first predictor!

Now, coming back to the claim that:

\[\hat{f}(\vec{x}) = \arg \max_{y \in \mathcal{Y}} P(y \in Y | \vec{x})\]First, we define the true accuracy of a classifier what have made $f$ to be:

\[P_{\vec{x},y}[f(\vec{x}) = y] = \mathbb{E}_{(\vec{x},y)}[1\{ f(\vec{x}) = y \}]\]note that the notation here is precisely:

-

$\mathbb{E}_{\text{random variable}}[\text{event}]$. So here, we are consider the randomness of $\vec{x},y$ joinly.

-

here, we are assuming this is computed over the true population $(\vec{x},y) \sim \mathcal{D}$, hence equality.

-

recall that the expected value would be calculated by integrating samples from

\[\mathbb{E}_{(\vec{x},y)}[1\{ f(\vec{x}) = y \}] = \int_{(\vec{x},y)}1\{ f(\vec{x}) = y \}\cdot d\mu_{(x,y)}= \int_{(\vec{x},y)}1\{ f(\vec{x}) = y \}\cdot P(\vec{x},y)dV\]so we are integrating over all possible pairs of $(\vec{x},y)$ with a volume element $P(\vec{x},y)dV$ weighted by the probability of each pair, since the probability of each pair of $(\vec{x},y)$ happening is not equal.

Theorem: Optimal Bayes Classifier

Given a space of label $\mathcal{Y}$, consider

\[\begin{align*} \hat{f}(\vec{x}) &= \arg \max_{y \in \mathcal{Y}} P(y | \vec{x}), \quad \text{Bayes Classifier} \\ g(\vec{x}) &= \mathcal{X} \to \{0,1\}, \quad \text{Any Classifier} \end{align*}\]It can be proven that:

\[P_{\vec{x},y}[g(\vec{x}) = y] \le P_{\vec{x},y}[f(\vec{x}) = y]\]i.e. the Bayes Classifier it the optimal if model are evaluated using the above definition of accuracy.

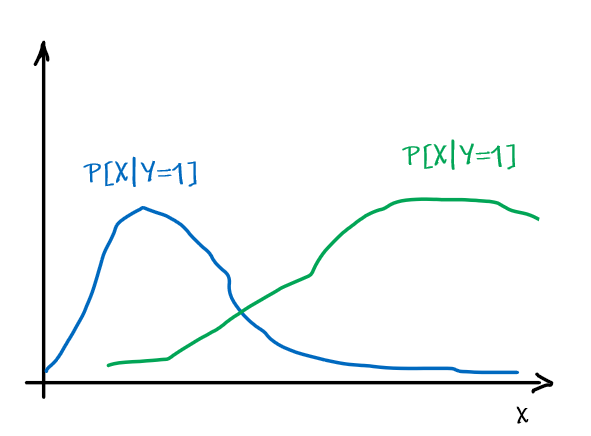

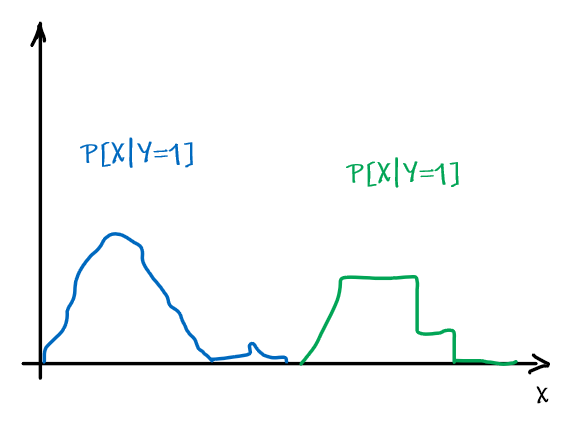

However, note that being optimal does not mean being 100% correct. Suppose we have:

| Optimal Bayes Accuracy = $1-\int \min_{y \in Y}P[ y\vert x]P[x]dx$ | Optimal Bayes Accuracy = $1$ |

|---|---|

|

|

Proof:

Consider any classifier $h$, and for simplicity, assume that the space of labels is ${0,1} = \mathcal{Y}$.

Now, consider some given $\vec{x}$ and its label $y$:

First, we observe that:

\[\begin{align*} P[h(\vec{x})=y|X=\vec{x}] &= P[h(\vec{x})=1, Y=1|X=\vec{x}] + P[h(\vec{x})=0, Y=0|X=\vec{x}] \\ &= 1\{ h(\vec{x})=1 \} \cdot P[Y=1 | X = \vec{x}] + 1\{h(\vec{x} = 0)\} \cdot P[Y=0 | X = \vec{x}] \\ &= 1\{ h(\vec{x})=1 \} \cdot \eta(\vec{x}) + 1\{ h(\vec{x})=0 \} \cdot (1-\eta(\vec{x})) \end{align*}\]where we have:

- the second equality comes from the fact that we have fixed $\vec{x}$, i.e. we have no randomness in $\vec{x}$

- defined $\eta(\vec{x}) \equiv P[Y=1 \vert X = \vec{x}]$

- though we fixed $\vec{x}$, the randomness in $y$ remained (hence the $P$ everywhere) since both $\vec{x},y$ are sampled from some distribution.

Therefore, if we consider:

\[\begin{align*} P[f(\vec{x}) = y | X=\vec{x}] &- P[g(\vec{x})=y|X=\vec{x}] \\ &= \eta(\vec{x})\cdot [1\{ f(\vec{x})=1 \} - 1\{ g(\vec{x})=1 \}] + (1-\eta(\vec{x}))\cdot [1\{ f(\vec{x})=1 \} - 1\{ g(\vec{x})=1 \}] \\ &= (2\eta(\vec{x})-1) [1\{ f(\vec{x})=1 \} - 1\{ g(\vec{x})=1 \}] \end{align*}\]We want to show that this is $\ge 0$. Recall that we have defined:

- $\hat{f}(\vec{x}) = \arg \max_{y \in \mathcal{Y}} P(y \in Y \vert \vec{x})$

So we have two cases:

- if $f(\vec{x})$ and $g(\vec{x})$ gave the same prediction, then it is $0$. Satisfies $\ge 0$

- if $f(\vec{x})$ is different with $g(\vec{x})$, then either:

- $f(\vec{x})=1, [1{ f(\vec{x})=1 } - 1{ g(\vec{x})=1 }] > 0$. Then since $f$ is defined by $\arg \max$, it means that $P[Y=1 \vert X=\vec{x}]\ge P[Y=0 \vert X=\vec{x}]$. Therefore, $P[Y=1 \vert X=\vec{x}] \ge 1/2$. So $(2\eta(\vec{x})-1) \ge 0$.

- $f(\vec{x})=0, [1{ f(\vec{x})=1 } - 1{ g(\vec{x})=1 }] < 0$. By a similar argument, $(2\eta(\vec{x})-1) \le 0$.

- In either case, the product $(2\eta(\vec{x})-1) [1{ f(\vec{x})=1 } - 1{ g(\vec{x})=1 }] \ge 0$

Therefore, this means:

\[P[f(\vec{x}) = y | X=\vec{x}] - P[g(\vec{x})=y|X=\vec{x}] \ge 0\]Integrating over $X$ to remove the condition that we fixed a $X=\vec{x}$, we then complete the proof.

\[P[f(\vec{x})=y] = \int_{\vec{x}}P[f(\vec{x}) = y | X=\vec{x}]\cdot P[X=\vec{x}]d\vec{x}\]Note

We see that the Bayes Classifier is “optimal” for the given criterion of accuracy. But this still imposes three main problems:

- What if the data is extremely unbalanced, such that $99\%$ data is positive? Then a function $f(x)=1$ could achieve $99\%$ accuracy given the definition we had.

- This is optimal if we get the true value of $P[X=\vec{x}\vert Y=y], P[Y=y]$, but we can only estimate it given our data.

- If we don’t know some information of which kind of distribution should be used for $P[X=\vec{x}\vert Y=y]$, we then need to do a non-parametric density estimation, which would require a large amount of data and the convergent rate would be $1/\sqrt{n}^d$, where $d$ is the number of features you have and $n$ the number of sample data.

- this is really bad if we have a lot of features

Next, we will see one example of estimating the quantity:

\[\hat{f}(\vec{x})= \arg \max_{y \in \mathcal{Y}} P[X=\vec{x} | Y=y]\cdot P[Y=y]\]note that

- one known way to estimate $P[X=\vec{x} \vert Y=y]$ would be using MLE, basically fitting the most likely distribution

- we will soon see another way of doing it using some additional assumption (i.e. Navies Bayes)

- other possibilities for $P[X=\vec{x} \vert Y=y]$ could be to use Neural Networks, DL models etc.

Basically, even if we know this is the optimal solution/framework to work under, we need to be able to compute the quantities such as $P[X=\vec{x} \vert Y=y]$. But since we don’t know the true population, all we are doing are the estimates.

In summary

- There are many ways to estimate the class conditional $P[X=\vec{x} \vert Y=y]$, unless you know something specific to the task, we don’t know what is the right way

- Probability density estimation of $P[X=\vec{x} \vert Y=y]$ will degrade as data dimension increases, i.e. the convergent rate $1/\sqrt{n}^d$ requires more data (more $n$).

- note that this assumes that we are imposing a probability density over our training data in our model. We will see that in cases such as Nearest Neighbor, we might need to worry about this.

Naïve Bayes Classifier

If we assume that individual features are independent given the class label. Then we have the Naïve Bayes:

\[\begin{align*} \hat{f}(\vec{x}) &= \arg \max_{y \in \mathcal{Y}} P[X=\vec{x} | Y=y]\cdot P[Y=y]\\ &= \arg \max_{y \in \mathcal{Y}} \prod_{j=1}^d P[X=\vec{x}^{(j)} | Y=y]\cdot P[Y=y] \end{align*}\]where here, we are flipping the notation in our other note, such that:

- superscript $x^{(j)}$ means the $j$-th feature

- subscript $x_i$ means the i-th sample data point

Advantage:

Quick for coding and computation

Maybe fewer samples is needed to figure out the distribution of a particular feature

e.g. if they are dependent, then you need to figure out if it is a positively correlated, negatively correlated, not correlated, etc. So you need to have more samples to know what is happening

e.g. if you are doing a non-parametric fit, you are now estimating $d$ densities, each of which would be $1$-dimensional. This means that you reduced the data needed:

\[\frac{1}{\sqrt{n}^d} \to d\cdot \frac{1}{\sqrt{n}}\]Disadvantage:

The assumption often does not hold, as they might be correlation between features that matters for classification. This will result in giving bad estimates.

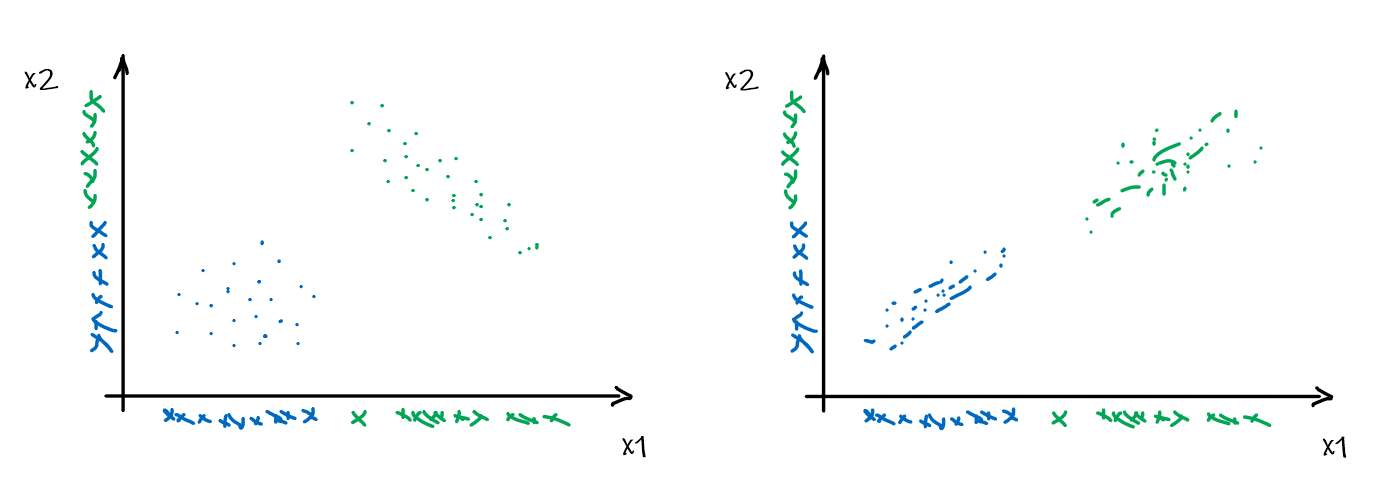

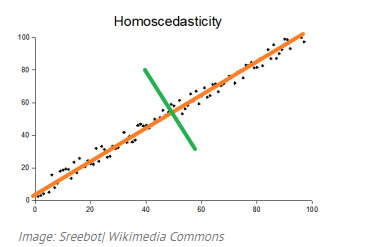

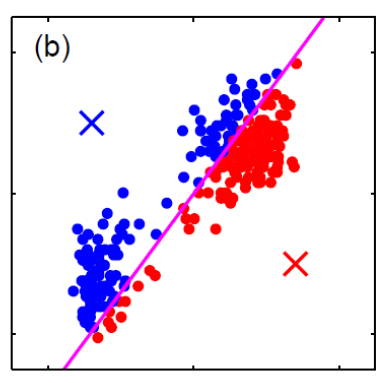

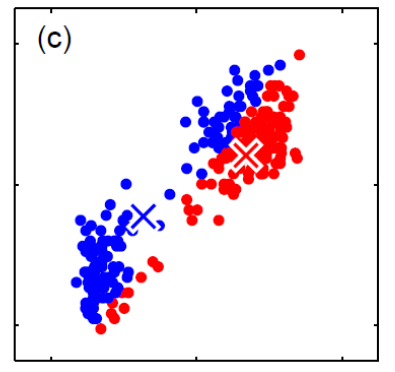

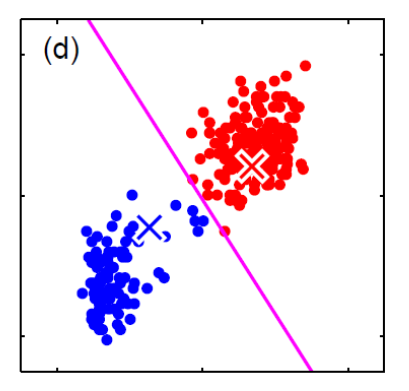

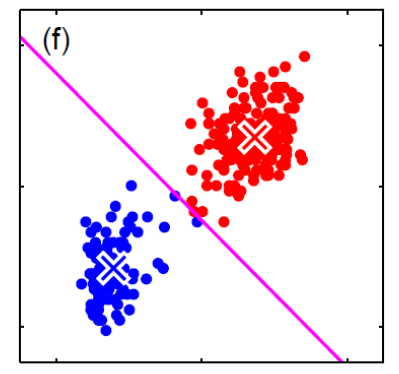

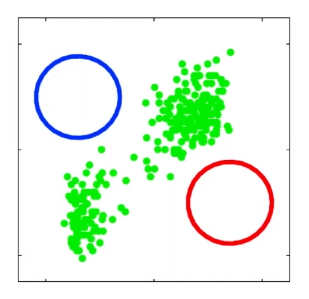

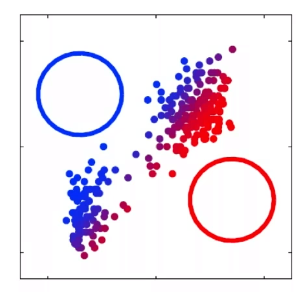

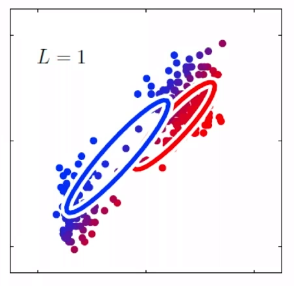

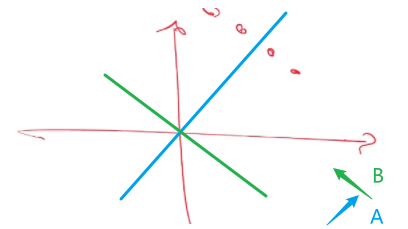

For instance, consider:

where for each green-labeled class, the correlation is difference but projection is the same. So assuming independence might cause some trouble. However, it might not matter for classification since the threshold value is clear/correct even if we used independence.

- at this level, whether if the correlation matters would be tested using trial and error.

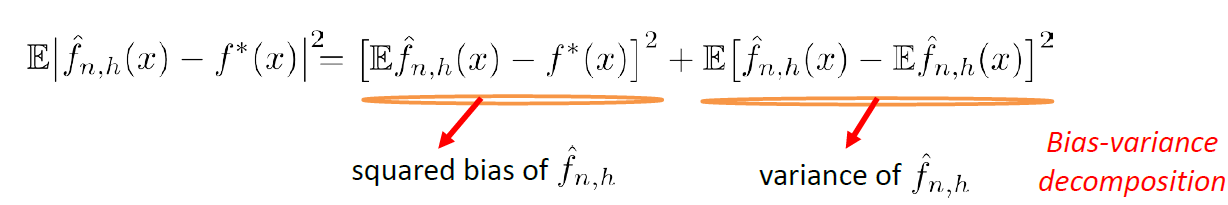

Evaluating Quality of Classifier

Given a classifier $f$, we essentially need to compute the true accuracy:

\[P_{\vec{x},y}[f(\vec{x}) = y] = \mathbb{E}_{(\vec{x},y)}[1\{ f(\vec{x}) = y \}]\]to decide which is a better classifier (this is one and the most common way of doing it), using accuracy.

However, since we don’t know the true population, we can only do an estimate using:

\[\frac{1}{n}\sum_{i=1}^n 1\{ f(\vec{x}_i) = y_i \}\]using the test data.

- if we have used the train data, then the approximation no longer holds because the IID could be falsified. In other words, the data would have been contaminated since we have already seen it. (e.g. think of a classifier being a lookup table)

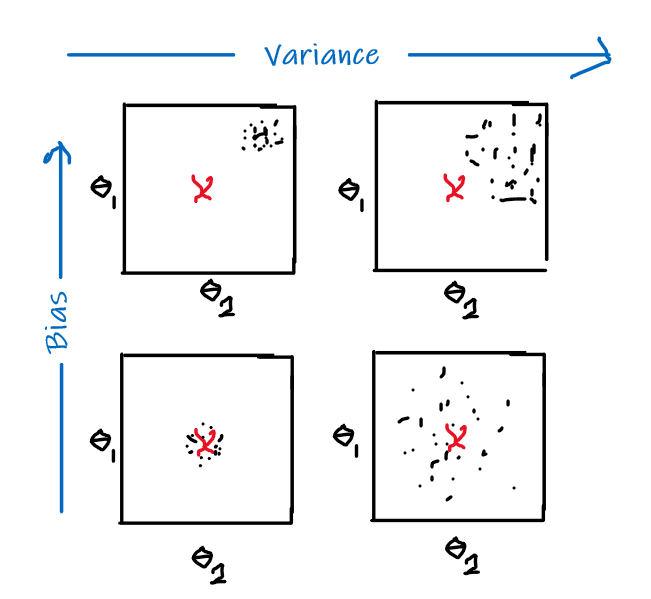

Bias

The idea of bias has also been formalized in the other note, basically we want a low bias to be:

the idea can be formalized by the following.

Bias

- The idea is that, for each time you sample some set of data points from $\mathcal{D}$, your model $\hat{\theta}$ would have trained and split out the learnt parameter. If on average, those parameters are close to the actual parameter $\theta$, then it is unbiased (see bottom left figure as well). Otherwise it is biased.

An unbiased estimator would have:

\[\mathbb{E}_{\vec{x}\sim \mathcal{D}}[\hat{\theta}(\vec{x})] = \lang \hat{\theta}(\vec{x}) \rang = \theta\]where the equation means:

- $\hat{\theta}$ is your estimator/learning model that spits out the learnt parameters given some data point

- $\theta$ is the true/correct parameter for the distribution $\mathcal{D}$, which is unknown (you want to approximate)

- the first equality is because expected value is the same as mean

Notice that again, it is defined upon the true population.

Example:

Suppose your data $X$ is generated from $\vec{x} \sim B(p)$ being a Bernoulli distribution, so $\theta=p$ is the true paramter.

Suppose your estimator then corrected assumed a Bernoulli distribution by giving $\hat{\theta} \to \mathbb{R}$, but stupidly:

\[\hat{\theta}_{\vec{x}\in X}(\vec{x}) = \vec{x}_1\]which basically:

- spits out the first data point in a training set

Then this is actually an unbiased estimator because:

\[\mathbb{E}_{\vec{x} \sim \mathcal{D}}[\hat{\theta}(\vec{x})] = \mathbb{E}_{\vec{x} \sim \mathcal{D}}[\vec{x}] = p = \theta\]since it is a Bernoulli distribution.

Consistency

The other idea is consistency.

Consistency

- The idea is, that if we increase the number of samples, a consistent estimator $\hat{\theta}$ should have spitted out the correct parameter as our number of samples approaches infinity.

We say a model is consistent if:

\[\lim_{n \to \infty} \hat{\theta}_n(\vec{x}) = \theta\]where the equations is similar to the above:

- $\hat{\theta}_n$ means how many sample data $n$ is used in the training process

Example

The same example as above, if we use the estimator

\[\hat{\theta}_{\vec{x}\in X}(\vec{x}) = \vec{x}_1\]for data generated from a Bernoulli $\vec{x} \sim B(p)$, this would be an inconsistent estimator, since:

\[\lim_{n \to \infty} \hat{\theta}_{n}(\vec{x}) = \vec{x}_1 \neq p\]In general, bias usually has nothing to do with consistency, and vice versa.

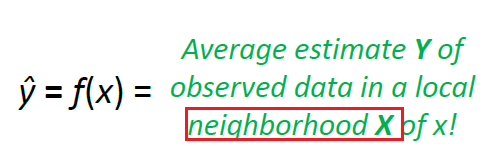

Nearest Neighbors and Decision Trees

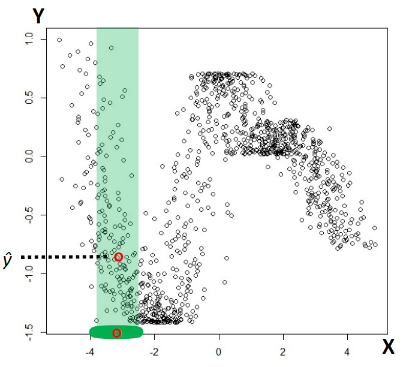

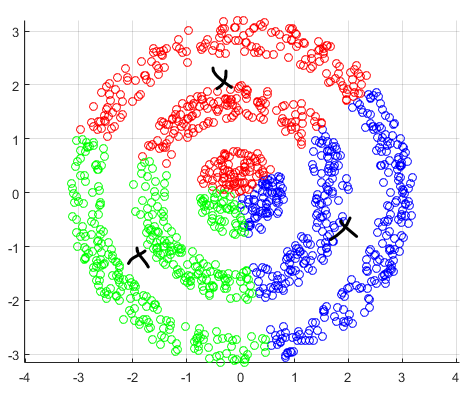

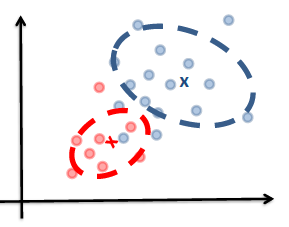

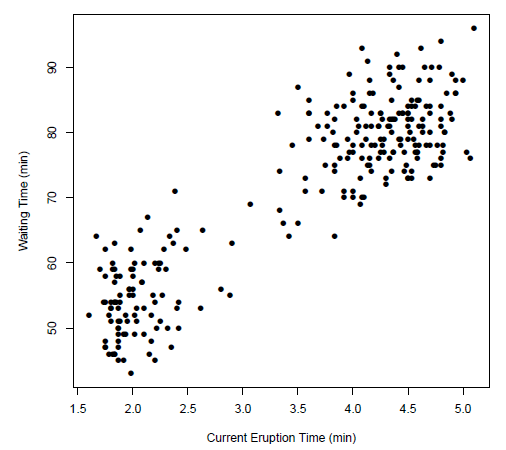

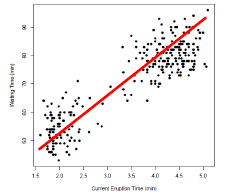

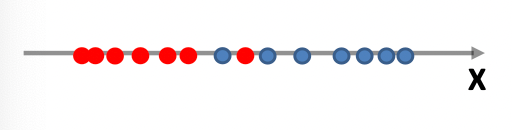

Consider back the data we had before:

instead of trying to fit a probability density for each class of data, one intuitive way would be looking at its neighbors. If its closest neighbor is a $\text{male}$, then it is probably also a $\text{male}$

Nearest Neighbor Classifier

First, we need to define what we mean by distance between two data points. There are in fact many ways to do it:

- Actually compute some sort of distance (smaller the distance, closer the examples)

- Compute some sort of similarity (higher the similarity, closer the examples)

- Can use domain expertise to measure closeness

Once we know how to compute the distance, we are basically done. We just assign the same label to its closest neighbor.

Note

- Since we are assigning the label to the same label as its “closest neighbor”, we are very vulnerable to noise. However, we can reduce this noise by taking the majority of $k$-nearest neighbors.

Computing Distance

Normally we will have $\mathcal{X} = \mathbb{R}^d$, then a few natural ways to computation would be:

Euclidean Distance between to data $\vec{x}_1, \vec{x}_2$:

\[\begin{align*} \rho(\vec{x}_1, \vec{x}_2) &= \left[(x_1^{(1)}-x_2^{(1)})+...+(x_1^{(d)}-x_2^{(d)})\right]^{1/2} \\ &= \left[(\vec{x}_1 - \vec{x}_2)^T(\vec{x}_1 - \vec{x}_2)\right]^{1/2} \\ &= \|\vec{x}_1 - \vec{x}_2\|_2 \end{align*}\]Other normed distance would include the form:

\[\rho(\vec{x}_1, \vec{x}_2) = \left[(x_1^{(1)}-x_2^{(1)})^{p}+...+(x_1^{(d)}-x_2^{(d)})^p\right]^{1/p} = \|\vec{x}_1 - \vec{x}_2\|_p\]where if we have:

- $p=2$ then it is Euclidean Distance

- $p=1$ then it is Manhattan Distance (i.e. we are basically adding up the differences)

- $p=0$ then we are count up the number of differences

- $p=\infty$ then we are finding the maximum distance

the last two needs to be computed by taking a limit.

Computing Similarity

Some typical ways would involve the inverse of a distance:

\[\rho(\vec{x}_1, \vec{x}_2) = \frac{1}{1+\| \vec{x}_1 - \vec{x}_2 \|_2}\]Another one would be the cosine similarly, which depends on the angle $\ang$ between two vector:

\[\rho(\vec{x}_1, \vec{x}_2) = \cos(\ang (\vec{x}_1, \vec{x}_2)) = \frac{\vec{x}_1 \cdot \vec{x}_2}{\| \vec{x}_1\|_\mathrm{2} \,\, \| \vec{x}_2\|_\mathrm{2}}\]Computing Using Domain Expertise

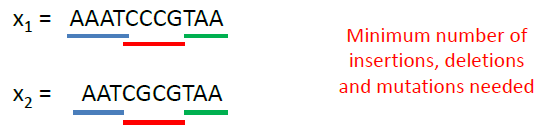

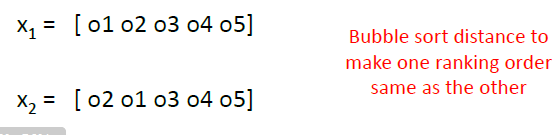

For things related to genome/mutation, we might consider the edit distance:

where here:

- since we only need two edits to make them the same, $\rho(x_1, x_2)=2$.

- this is quite useful for genome related research.

Another example would be the distance between rankings of webpages:

where here:

- bubble sort distance: the number of swaps needed to make one ranking order the same as another.

- so in this case, it will be $\rho(x_1, x_2)=1$ since we only needed one swap

Generative vs Discriminative Approach

We have basically covered both approaches already.

| Generative | Discriminative | |

|---|---|---|

| Idea | we are trying to model the sample population by giving some distribution | we are classifying directly |

| Example |  |

|

| Advantages | A probability model gives interpretation of how data gets generated from population | Typically better classification accuracies since we are doing it directly |

| MLE estimation of the true probability parameter $\rho$ such that true distribution is $D(\rho)$ converges with rate $\vert \rho-\hat{p}_n\vert \le 1/\sqrt[\leftroot{-3}\uproot{3}d]{n}$ where $\hat{p}_n$ is the estimated parameters based on the $n$ training samples | The rate of convergence to optimal bayes classifier is shown in this paper | |

| Disadvantages | Need to pick/assume a probability model, and is doing more work then required to do classification so prone to errors! | Gives no understanding of the population |

- Though it seems that generative approach of assuming a distribution is usually risky, there is an approach of having an non-parametric estimator. Yet the problem is that computing that non-parametric distribution takes too much samples points then we can usually supply.

For instance:

Suppose we are looking at the students who scored 100 on a test (i.e. label $y=100$), and we want to find distribution of the GPA (i.e. a feature) with respect to that label $y=100$.

We might then have the following data.

| GPA | Number of 100 |

|---|---|

| 2.0 | 1 |

| 2.1 | 2 |

| … | … |

| 3.0 | 10 |

| … | … |

| 4.0 | 12 |

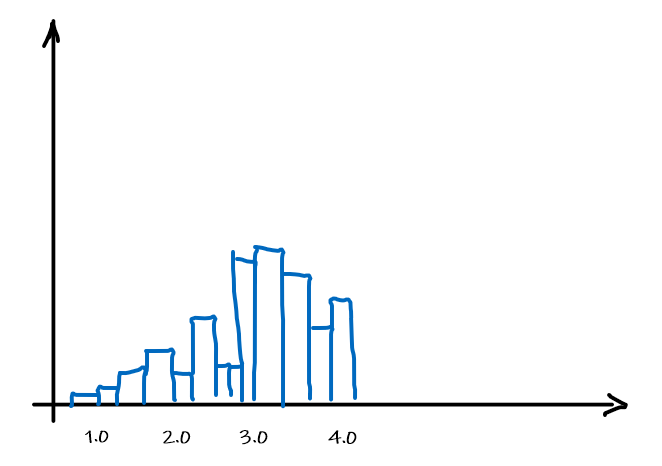

Graphically, we can then fit a distribution by using the data as it is, i.e. the histogram itself

which can be made into a function by basically have a bunch of constant functions.

Now, at this point, it probably make sense that the rate for which this estimator $\hat{\theta}$ approaches correct result would be:

\[|\theta - \hat{\theta}_n| \le \frac{1}{\sqrt{n}}\]But what if we have now two features? Then we need the number of data points squared to make sure all pair of values have some histogram in the $\mathbb{R}^2$. So in general, as the dimension $d$ of data increases:

\[|\theta - \hat{\theta}_n| \le \frac{1}{\sqrt{n}^d}\]which means that if we want the difference to be smaller than $1/2$, we need $n \ge 2^d$ data, which is growing exponentially as we collect more features.

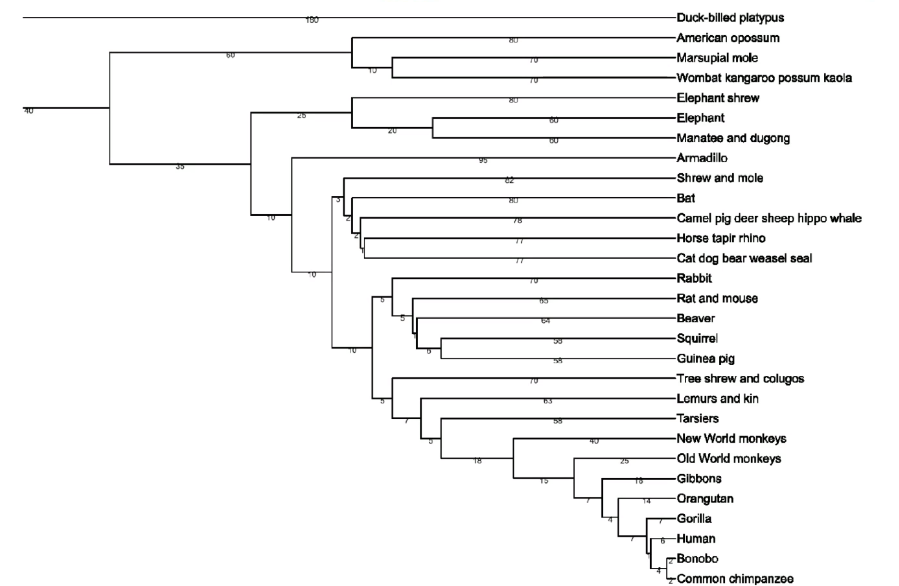

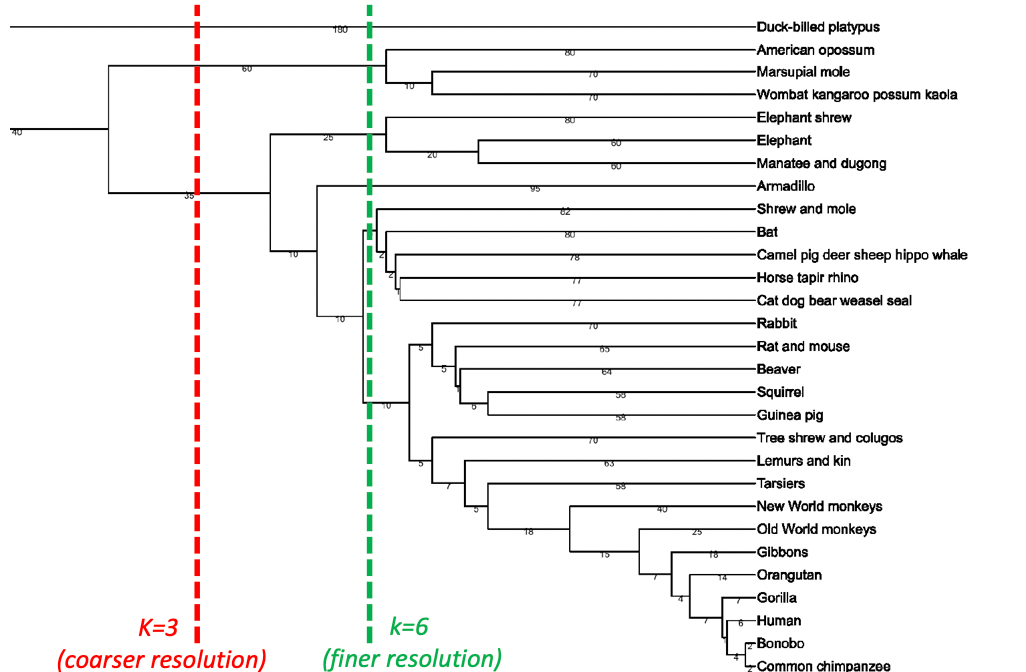

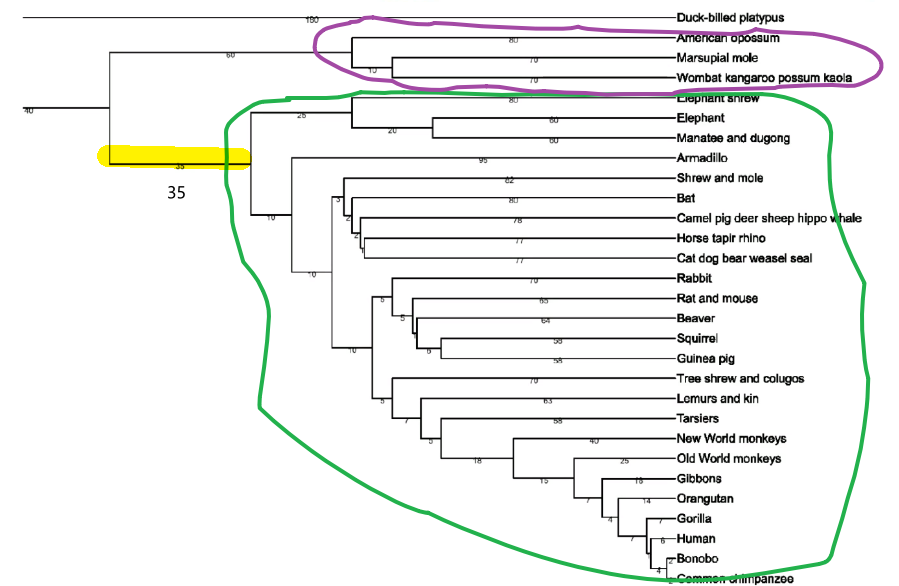

k-NN Optimality

If we are using the $k$-th nearest neighbor, this has some really good advantages.

Theorem:

- For fixed $k$, as and (number of samples) $n \to \infty$ , $k$-NN classifier error converges to no more than twice Bayes classifier error (which is the optimal one).

This will be proved below.

Theorem

- If $k\to \infty$ and $n \to \infty$, but $n$ is growing faster than $k$ such that $k/n\to0$, then $k$-NN classifier converges to Bayes classifier.

This is really technical, so it will not be covered here.

Notice that

- this is “much better” than the MLE with fitting model and estimating $P[X=\vec{x} \vert Y=y]\cdot P[Y=y]$. In that case, we are assuming we know which distribution the data comes from, but if we made a mistake on that, then $P[X=\vec{x} \vert Y=y]$ could be not even close to the optimal Bayes classifier.

Proof Sketch: k-NN with fixed k

First consider with $k=1$. Then consider that we are given a fixed data point $x_{\text{test}}=x_t$, we want to evaluate the error of a given classifier $f$.

Heuristic: What should be the probability of error in this case? Suppose the actual label for $x_t$ is a given $y_t$, then obviously:

\[\text{Error}=1\{ f(x_t) \neq y_t \}\]But since we are not given the $y_t$ but only $x_t$, we need to consider the true distribution of $y_t$ given $x_t$, which gives the probability of error being:

\[\text{Probability of Error}=\mathbb{E}_{y_t}[1\{ f(x_t) \neq y_t \}]\]where basically it is averaging over the distribution of $y_t\vert X=x_t \sim D$.

Now, we need to consider some 1-NN classifier $f_n$ given some arbitrary training dataset $D_n={X_n, Y_n}$ of size $n$. Let the nearest neighbor of a dataset $\mathcal{D}_n$ be $x_n$, and the label of that being $y_n$.

- note that the classifier $f_n$ depends on size $n$ since the nearest point could change

Then the probability of error $P[e]$ for a given $x_t$ for test assumed drawn IID would be:

\[\begin{align*} \lim_{n \to \infty} &P_{y_t, D_n}[e|x_t]\\ &= \lim_{n \to \infty} \int P_{y_t, Y_n}[e|x_tX_n]P[X_n|x_t]dX_n \\ &= \lim_{n \to \infty} \int P_{y_t, y_n}[e|x_t,x_n]P[x_n|x_t]dx_n \\ &= \lim_{n \to \infty} \int \left[1-\sum_{y \in \mathcal{Y}}P[y_t=y,y_n=y|x_t,x_n] \right] P[x_n|x_t] dx_n\\ &= \lim_{n \to \infty} \int \left[1-\sum_{y \in \mathcal{Y}}P[y_t=y|x_t]P[y_n=y|x_n] \right] P[x_n|x_t] dx_n\\ &= 1- \sum_{y \in \mathcal{Y}}P[y_t=y|x_t]^2 \end{align*}\]where:

-

$P_{y_t, D_n}[e\vert x_t]$ means that $y_t, \mathcal{D}_n$ will be randomly drawn/are random variables.

-

the first line of equality comes from the fact that:

\[P[A] = \sum_B P[A,B] = \sum_B P[A|B]P[B]\]and it it is conditioned, then simply add the condition:

\[P[A|C] = \sum_B P[A,B|C] = \sum_B P[A|BC]P[B|C]\] -

the second equality comes from the fact that a 1-NN classifier only depends on 1 nearest neighbor $x_n$. Therefore, it is indepedent of all the others:

\[P[e|X_n] = P[e|x_n]\] -

the third equality comes from evaluation the error. Notice that we are computing over the true distribution of $\mathcal{Y}$

-

the fourth equality comes from that fact that $x_t$ and $x_n$ are drawn independently

-

the last equality assumes a reasonable sample space such that as $n \to \infty$, $x_n \to x_t$ (basically getting really close).

-

in more detailed, this is what happens:

\[\begin{align*} \lim_{n\to\infty}&\int\left[1-\sum_{y \in \mathcal{Y}}P[y_t=y|x_t]P[y_n=y|x_n]\right]P[x_n|x_t]dx_n \\ &= \lim_{n\to\infty}\int P[x_n|x_t]dx_n - \lim_{n\to\infty}\int\left[\sum_{y \in \mathcal{Y}}P[y_t=y|x_t]P[y_n=y|x_n]P[x_n|x_t]\right]dx_n\\ &= 1 - \lim_{n\to\infty}\int\left[\sum_{y \in \mathcal{Y}}P[y_t=y|x_t]P[y_n=y|x_n]P[x_n|x_t]\right]dx_n \\ &= 1 - P[y_t=y|x_t]\lim_{n\to\infty}\int\left[\sum_{y \in \gamma}P[y_n=y|x_n]P[x_n|x_t]\right]dx_n \\ &= 1 - P[y_t=y|x_t]\lim_{n\to\infty}\sum_{y \in \mathcal{Y}}\left[\int P[y_n=y|x_n]P[x_n|x_t]dx_n\right] \\ &= 1 - P[y_t=y|x_t]\lim_{n\to\infty}\sum_{y \in \mathcal{Y}}P[y_n=y|x_t] \\ &= 1 - \sum_{y \in \mathcal{Y}}P[y_t=y|x_t]^2 \end{align*}\] -

the meaning here is that we are sampling twice the $y$ value given that we got $X=x_t$.

-

So now, we get that:

\[\lim_{n \to \infty} P_{y_t, D_n}[e|x_t] = 1- \sum_{y \in \mathcal{Y}}P[y_t=y|x_t]^2\]Now suppose we are comparing this with a Bayes Classifier ($\arg \max_{y \in \mathcal{Y}} P(X=\vec{x} \vert Y=y)\cdot P(y)$), which will assign some label $y^*$ to the data $x_t$. Then hence:

\[\begin{align*} 1- \sum_{y \in \mathcal{Y}}P[y_t=y|x_t]^2 &\le 1 - P^2[y_t=y^*|x_t]\\ &\le 2(1 - P[y_t=y^*|x_t])\\ &= 2P^*[e|x_t] \end{align*}\]where:

- $P^*[e\vert x_t]$ is the error of Bayes Classifier

- the second inequality comes from the fact that $0 \le P[y_t=y^*\vert x_t] \le 1$

then we just need to integrate over $x_t$ (i.e. $P[x_t]dx_t$)for removing the conditional.

Issues with k-NN Classification

In general, three main problems:

- Finding the $k$ closest neighbor takes time! (we need to compute this for every single input $x_t$)

- Most times the ‘closeness’ in raw measurement spaceis not good!

- Need to keep all the training data around during test time!

- as compared with MLE, which only keeps the computed parameter

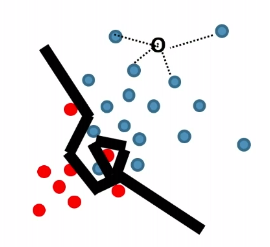

Speed Issue with k-NN

Finding the $k$ closest neighbor takes time! If we are given a $x_t$ for prediction, then we need

\[O(nd)\]where $n$ is the number of training data and $d$ is dimension

Heuristic

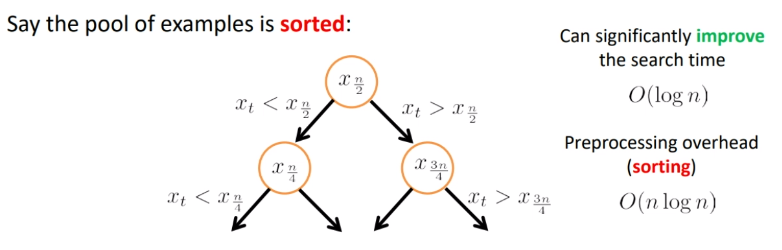

To do it faster, we could do a sorting. H

where the hit is that we need $O(n\log n)$ for preprocessing.

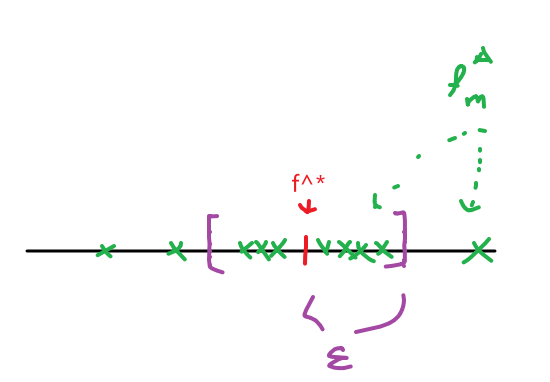

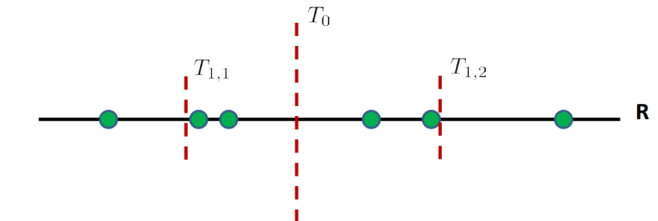

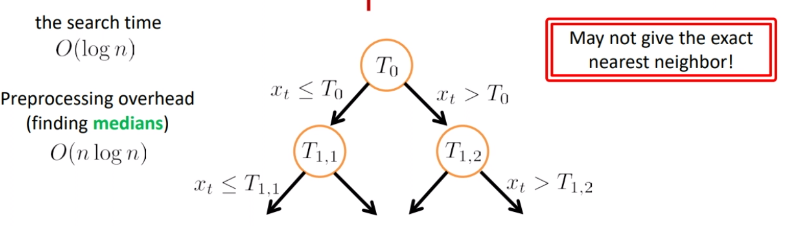

But obviously we cannot compute all the distances and order them, since that takes $O(nd)$. Instead, we can compute the threshold via some binary search:

first, we order the data points. Then, we

- find the first median $T_0$, and see if $x_t$ is larger or smaller than that

- suppose it is larger. Then we look in the right region only

- then we compute the median of the left region of $T_0$, which is $T_{1,2}$, and we compare that to $x_t$

- repeat 1-3 until we just have some constant number of training sample $c$ left in a region

- then compute within that $c$ samples and find the closet neighbor

So basically the search looks like:

where we see the problem is that:

- if the threshold was $T_{1,2}$ and $x_t$ is larger than that, we would have returned the rightest point. Yet the actual nearest neighbor is the one almost on the $T_{1,2}$.

- so we are approximating the closest neighbor (which actually does not reduce the accuracy)

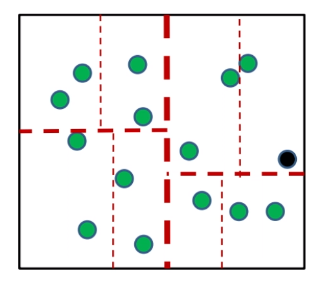

High Dimensional Data

The the idea is that:

given some data points (green):

-

pick a coordinate to work on (the optimal way is to pick the axis with largest variance)

-

e.g. you should pick the green “axis” to split

-

-

split the data in that coordinate by finding the median

-

compare the the component of $x_t$ in that coordinate

-

repeat until the region(cell) has some constant number of training sample $c$ left in a region

- so $c$ is a fixed, tunable parameter

-

find the closet neighbor among those $c$ data points.

This process above is also called the $k$-d trees.

Note

- this split is sensible if the features are sensible/all equally useful.

Other ways to find neighbor fast would be:

Tree-based methods: (recursive)

- k-d trees

- by default it splits on feature axis, but you can do a PCA and split on those eigenvectors. This is another method.

- Cover trees

- Navigation nets

- Ball trees

- Spill trees

- …

Compression-based methods:

- Locality Sensitive Hashing (LSH)

- Vector Quantization (VQ) methods

- Clustering methods

- …

Measurement Space Issue with k-NN

Often times we don’t know what measurements are helpful for classification a priori. So we need to know which features should be more important/useless.

Recall the old task: learn a classifier to distinguish $\text{males}$ from $\text{females}$

But say we don’t know which measurements would be helpful, so we measure a whole bunch:

- height

- weight

- blood type

- eye color

- Income

- Number of friends

- Blood sugar level

- …

Observation:

- Feature measurements not-relevant (noisy) for the classification task simply distorts NN distance computations

- Even highly correlated relevant measurements (signal) distorts the distance comparisons

On idea to solve this would be:

-

Re-weight the contribution of each feature to the distance computation

\[\begin{align*} \rho (\vec{x}_1, \vec{x}_2; \vec{w}) &= \left[w_1 \cdot (\vec{x}_1^{(1)}-\vec{x}_2^{(1)})^2+\cdots + w_d \cdot (\vec{x}_1^{(d)}-\vec{x}_2^{(d)})^2\right]^{1/2}\\ &=\left[ (\vec{x}_1 - \vec{x}_2)^TW (\vec{x}_1 - \vec{x}_2)\right]^{1/2} \end{align*}\]and that $W$ would be a diagonal matrix with $w_i$ terms, assuming we want to use L2 Euclidean distance.

-

essentially, we want to have some linear transformation space

-

in general, even if we include mixing such that $\vec{x} \to L\vec{x}$ where $L$ is non-symmetric nor diagonal. Then, it can be shown that for L2 Euclidian distance, we can show that $W=L^TL$, meaning that $W$ is symmetric and positive semi definite.

-

Our goal is to have $\rho (\vec{x}_1, \vec{x}_2; \vec{w})$:

- data samples from same class yield small values

- data samples from different class yield large values

Then we can create two sets:

- Similar set $S={ (\vec{x}_i,\vec{x}_j)\vert y_i = y_j }$

- Different set $D={ (\vec{x}_i,\vec{x}_j)\vert y_i \neq y_j }$

And we can minimize the cost function $\Psi$

\[\Psi(\vec{w}):=\lambda \sum_{(\vec{x}_i,\vec{x}_j) \in S}\rho(\vec{x}_i,\vec{x}_j;\vec{w}) -(1- \lambda) \sum_{(\vec{x}_i,\vec{x}_j) \in D}\rho(\vec{x}_i,\vec{x}_j;\vec{w})\]where:

- $\lambda\in (0,1)$ is a hyper-parameter that you can pick according to your preference, which side do you place emphasis on

- it might be useful if you picked $\lambda$ relative to the size of $S$ and $D$.

- basically we want to minimize $\sum_{(\vec{x}i,\vec{x}_j) \in S}\rho(\vec{x}_i,\vec{x}_j;\vec{w})$ and maximize $\sum{(\vec{x}_i,\vec{x}_j) \in D}\rho(\vec{x}_i,\vec{x}_j;\vec{w})$.

Note

- we cannot pick values such as $\lambda =1$, because then we will get $\vec{w}=\vec{0}$ since we know the distance is non-negative. A similar argument is for $\lambda = 0$

- since $\Psi(\vec{w})$ is linear in $W$, essentially it is doing a linear transformation of the input space.

Space Issues with k-NN

We need to keep all the data in our trained model. Is there some ways to save some space?

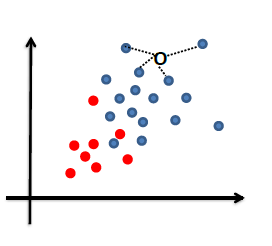

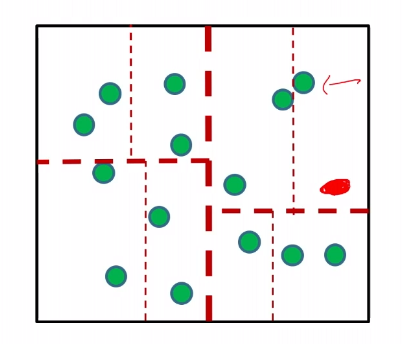

Suppose we are given a red data sample:

then we can just assign a label to each region/cell, by giving the majority label to the region. Then we do not need to keep all the data.

- again, it is about approximation.

So in the end, it looks like this:

Then the space requirement is reduced to:

\[\text{\# cells} = \min \{n, \approx \frac{1}{r^d}\}\]for cells of radius $r$.

k-NN Summary

- A simple and intuitiveway to do classification

- Don’t need to deal with probability modeling

- Care needs to be taken to select the distance metric

- Can improve the basic speed and space requirements for NN

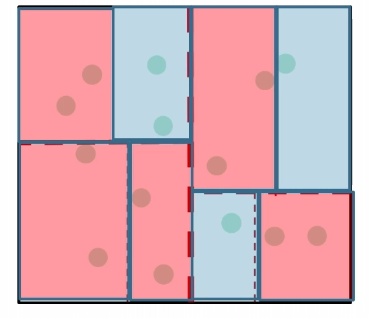

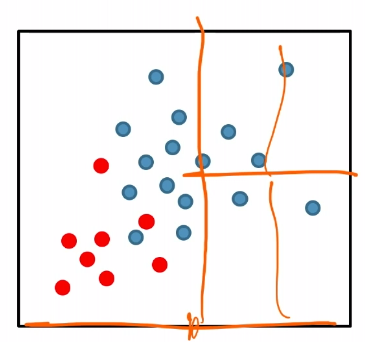

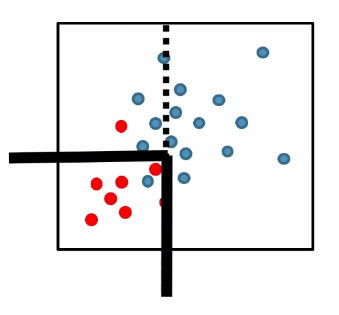

Decision Tree (Tree Based Classification)

$k$-d tree construction was optimizing for:

- come up with the nearest neighbor in a faster and more space efficient way

- but what if two cells are assigned with the same label? Isn’t that a waste of time/space since we could have just kept one label?

For instance:

where we see the splitting in the right is basically useless.

Therefore, $k$-d tree construction was NOT optimizing for for classification accuracy directly.

Heuristic

- Rather than selecting arbitrary feature and splitting at the median (our k-NN model), select the feature and threshold that maximally reduces label uncertainty within that cell!

For instance:

where:

- pick a coordinate and split such that the purity of label would be highest (improved)

- repeat until some given condition is met (e.g. each leaf/cell contains at least 5 data points)

In the end, you just get a bunch of thresholds, which then becomes a tree for input data to be classified.

Therefore, now we need to work out how to measure uncertainty/impurity of labels.

Measuring Label Uncertainty

Let $p_y$ be the fraction of training labelled $y$ in a region $C$.

Some criteria for impurity/uncertainty within a region $C$ would be:

-

classification error:

\[u(C):= 1 - \max_y p_y\] -

Entropy

\[u(C):= \sum_{y \in \mathcal{Y}} p_y \log_2 \frac{1}{p_y}\]the idea behind this is that if an event is unbiased (e.g. coin), then $p_y=0.5$ and you will get entropy $1$. If the coin is perfectly biased, $p_H=1,p_T=0$, you will get no disorder so that entropy is $0$.

-

Gini Index (from economics):

\[u(C):=1- \sum_{y \in \mathcal{Y}}p_y^2\]

Then the idea is to find $C$ such that $u(C)$ is minimized. So we need to find a feature $F$ and the threshold $T$ that maximally reduces the uncertainty of labels:

\[\arg\max_{F,T} [u(C) - (p_L \cdot u(C_L) + p_R \cdot u(C_R))]\]where:

- $u(C)$ was the parent cell’s uncertainty

- $p_L$ is the fraction of the parent cell data in the left cell

- $p_R$ is the fraction of the parent cell data in the right cell

- so $p_L \cdot u(C_L) + p_R \cdot u(C_R)$ is the uncertainty after a split into left and right region

- we want to maximize the reduction in uncertainty after a split, hence the minus sign

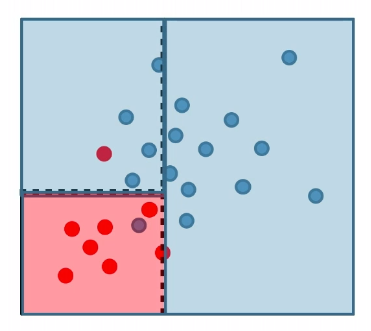

Problems with Decision Tree

Tree complexity is highly dependent on data geometry in the feature space:

where we have basically only rotated the data for 45 degrees.

The problem for a complex classifier is that the more complex your classifier, the poorer it generalizes

- complexity here basically would be the number of nodes/thresholds

On the other hand, this is useful for:

- interpretation. They are more interpretable, explaining why you gave a particular exmaple.

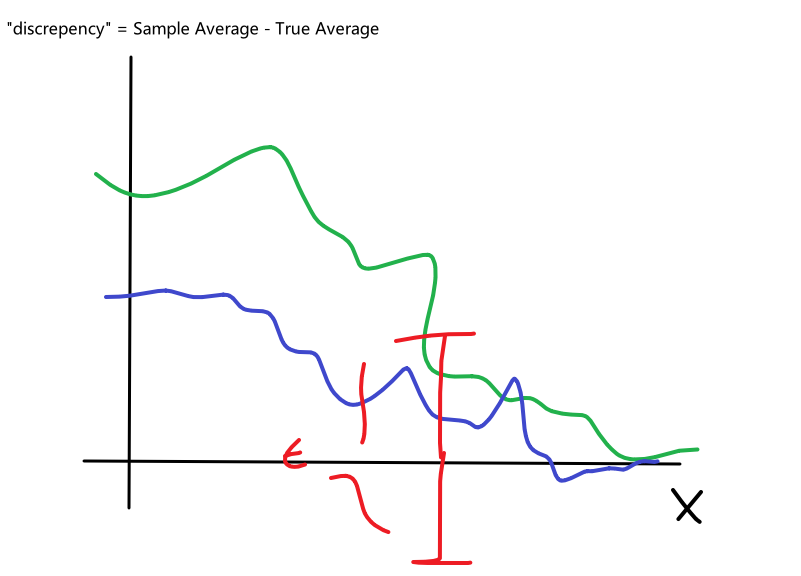

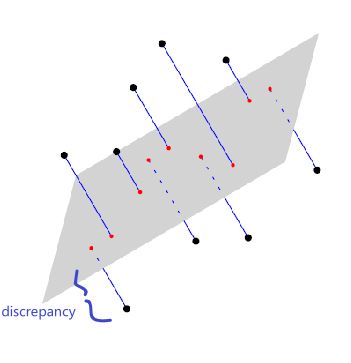

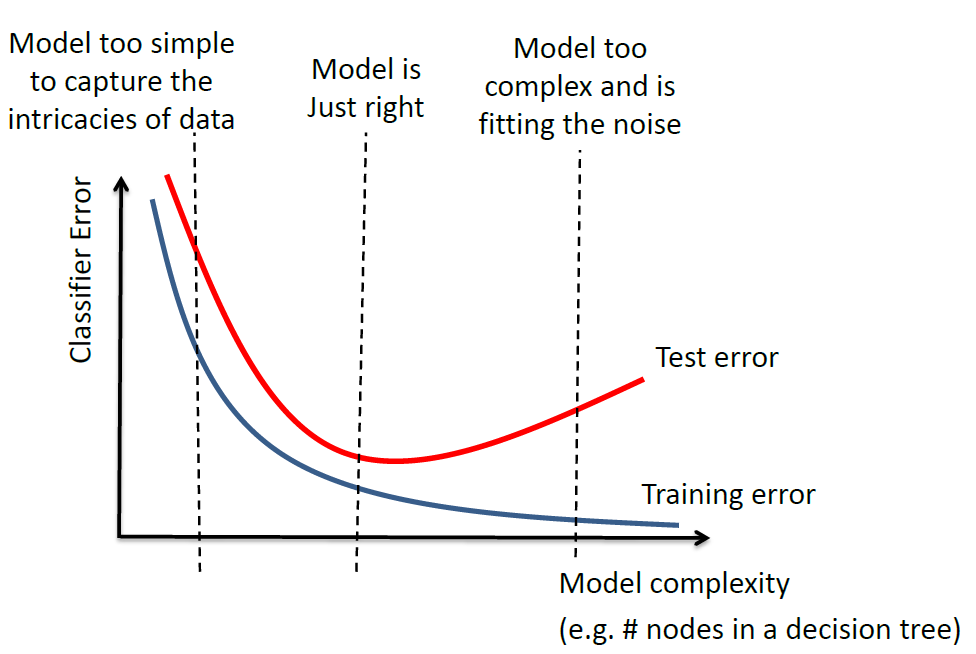

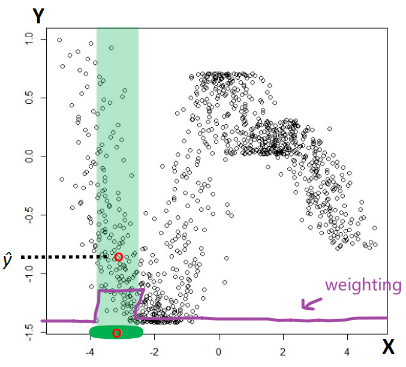

Overfitting

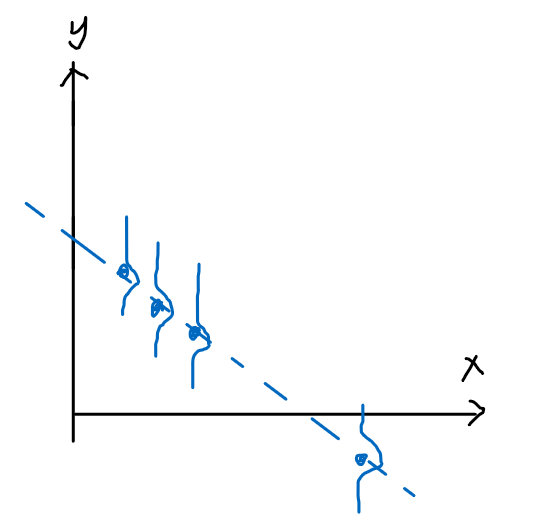

This is the empirical observation.

where:

- model complexity would be measured by the number of parameters in the estimator

- overfitting occurs when you are fitting to the noise (to the right)

- underfitting occurs when your model is too simple (to the left)

note that the gap between the test error and training error depends on the task/data.

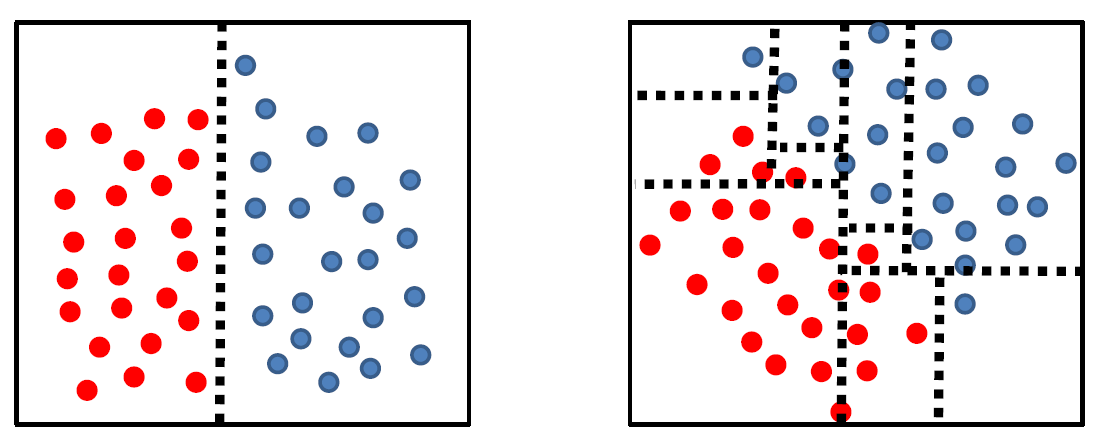

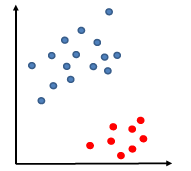

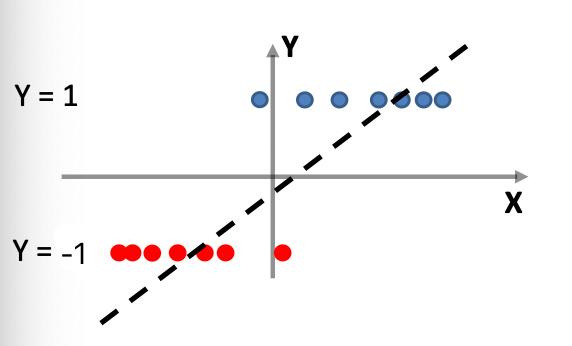

Perceptron and Kernelization

Another way to see the past models is that:

| MLE | k-NN | Decision Tree |

|---|---|---|

|

|

|

where essentially, we are figuring out a boundary that separates the classification.

Heuristic:

Why don’t we find that boundary directly?

- linear decision boundary

- non-linear decision boundary

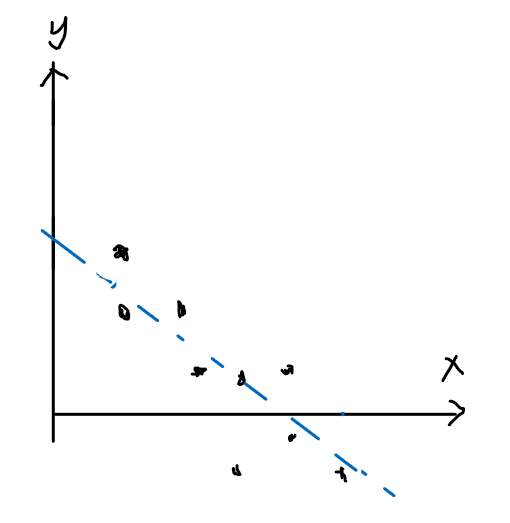

Linear Decision Boundary

Now, given some data, we basically want to come up with:

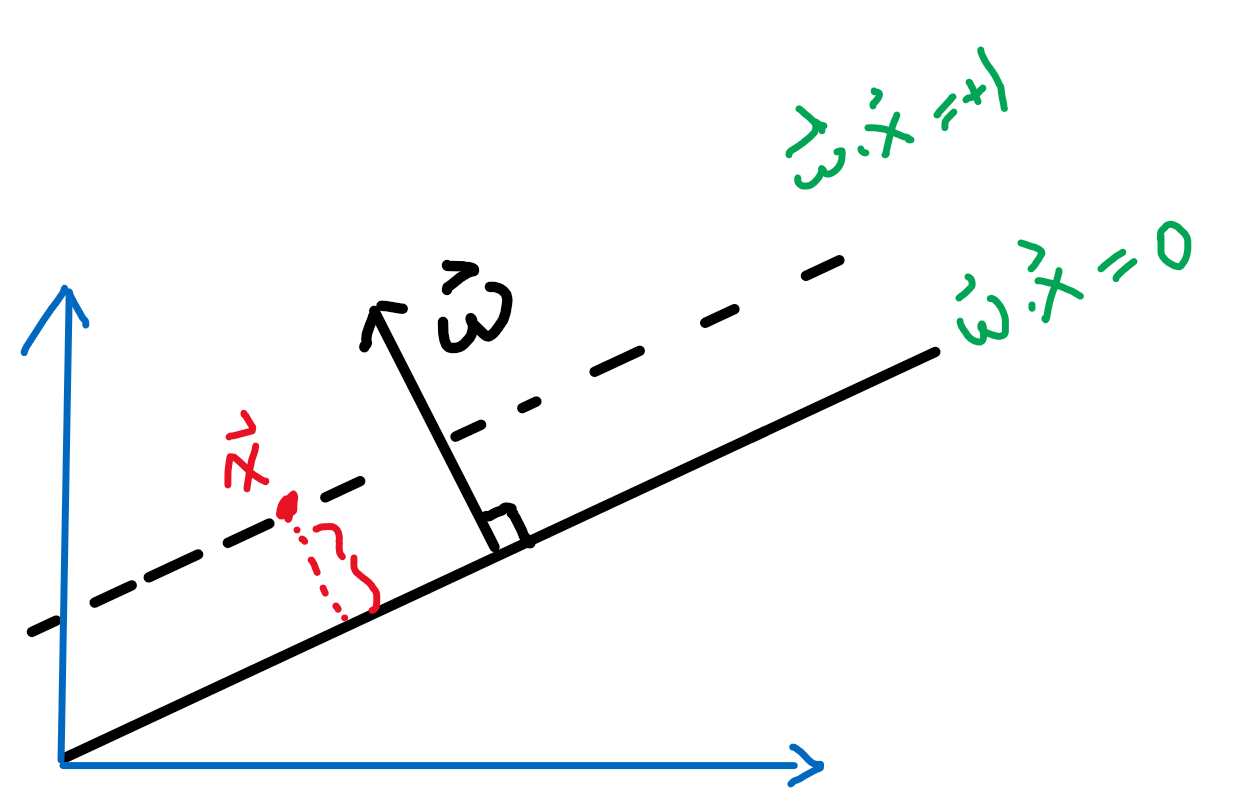

We can describe the plane by (in lower dimension, a line):

\[w^T \vec{x} = w^T\vec{a} = -w_0,\quad \text{or}\quad g(\vec{x}) = w^T\vec{x}+w_0 = 0\]for $\vec{w}$ being the normal vector $\vec{w} \in \mathbb{R}^d$, and $\vec{a}$ being a vector in the plane, $w_0$ being a constant.

-

for example, in $d=1$, we have:

\[g(x) = w_1 x + w_0\]so we have $d+1$ parameters

Hence, our classifier $f(x)$ from the decision boundary would be:

\[f(x):= \begin{cases} +1 & \text{if } g(x) \ge 0\\ -1 & \text{if } g(x) < 0 \end{cases} \quad = \text{sign}(\vec{w}^T\vec{x}+w_0)\]which works because:

-

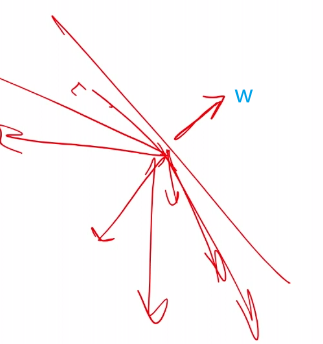

$\vec{w}^T\vec{x}=\vert w\vert \vert x\vert \cos\theta$, and suppose we have $\vec{w}$ looking like this:

so we see that all vectors below that plane will have $g(x) < 0$ due to $\cos \theta < 0$

Dealing with $w_0$

Trick

one trick that we will use would be to rename:

\[g(\vec{x}) = \vec{w}^T\vec{x}+w_0 = \vec{w}^{'T}\vec{x}^{'}\]where we have:

\[\begin{cases} \vec{w}' = \begin{bmatrix} w_0\\ w_1\\ \vdots\\ x_d \end{bmatrix} \\ \vec{x}' = \begin{bmatrix} 1\\ x_1\\ \vdots\\ x_d \end{bmatrix} \\ \end{cases}\]and in fact, $w_0$ is also referred as the bias, since it is shifting things.

So essentially, we lifted the dimension up:

| Original Data | Lifted Data |

|---|---|

|

|

one advantage of this lifted plane is that:

- the lifted plane must have gone through the origin, since $g(\vec{x}) = \vec{w}^{‘T}\vec{x}^{‘}=0$ is homogenous.

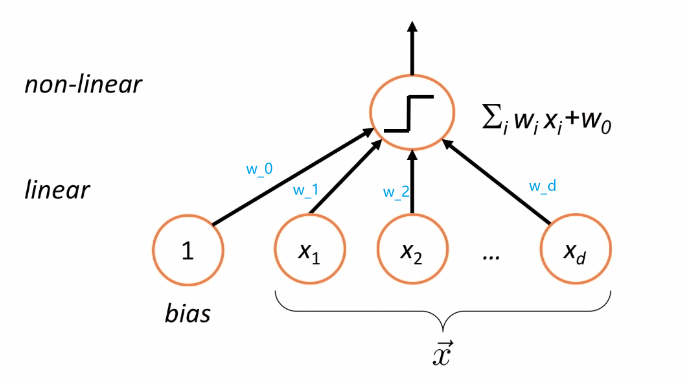

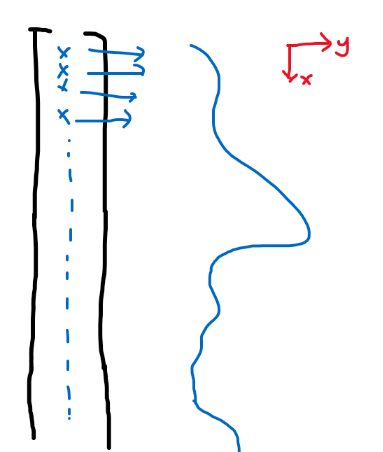

Linear Classifier

Therefore, essentially we are having a model/classifier that does:

where:

- takes a linear combination/weighted sum of the features of a given data $\vec{x}$, i.e. compute $g(\vec{x})$

- pass the result $g(\vec{x})$ to $\text{sign}$ function (nonlinear) to make a classification

So our aim is to find out the optimal value for $\vec{w}$!

Heuristic

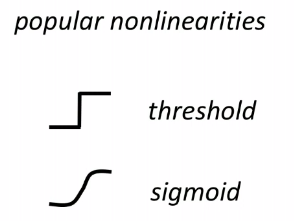

Essentially, our final predictor is $f=f(g(\vec{x}))$, which in turns depend on $\vec{w}$. The last step of our optimization should be taking the computing the minimization of $\text{error}(f)$ , which means we need to take derivatives. It is not good if $f$ is a not differentiable function.

Hence, a reasonable step to do would be to approximate $f$ as a continuous function first, and then the rest should work.

Therefore, we usually use an alternative:

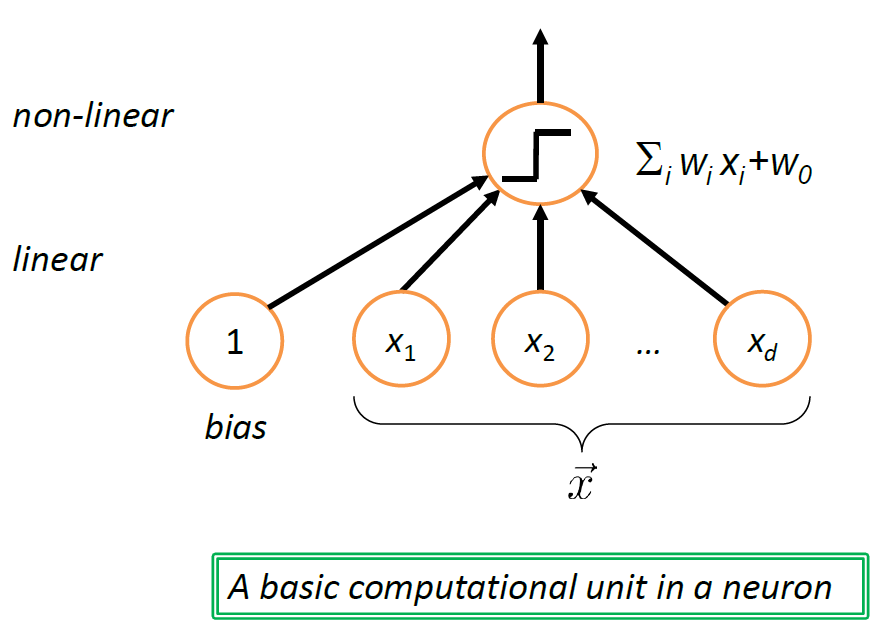

Note: Neural Network

the structure shown above can be interpreted as a unit of neuron (which biologically is triggered based on some activation energy threshold):

where in this case, we get “triggered” if $f(g(\vec{x})) > 0$ for example.

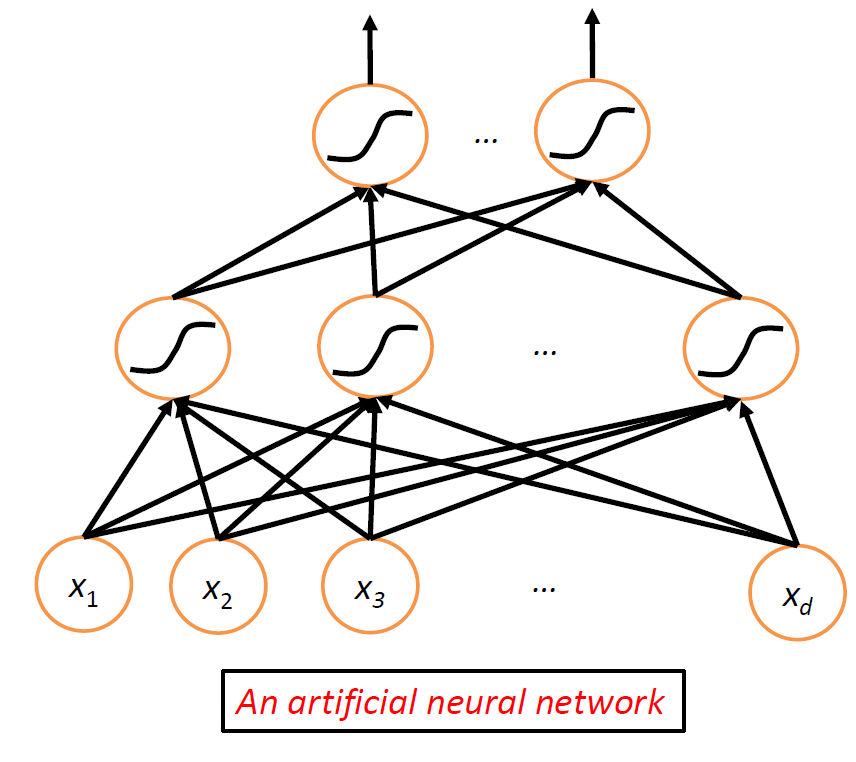

then, essentially we combine a network of neurons to get neuron network:

this is the basic architecture behind a neural network, where nonlinearity comes from the nonlinear functions at the nodes.

The amazing fact here is that:

- this network can approximate any smooth function!

- hence it is very useful

Now, we go back to the single perceptron/neuron case. Essentially, a single linear function $f=\sigma(w^Tx)$, and we need to find $w$.

Learning the Weights

Given some labeled training data, bias included, $(\vec{x}_1,y_1),(\vec{x}_2,y_2),…,(\vec{x}_n,y_n)$, we want to find the optimal $w$ such that the training error is minimized.

\[\arg \min_{\vec{w}} \frac{1}{n} \sum_{i=1}^n 1\{ \text{sign}(\vec{w}^T \vec{x}) \neq y_i \}\]where we are using $\text{sign}$ instead of $\sigma$ to be more exact now. And if you think about this, we cannot compute the derivatives of this. In fact, minimizing this is actually NP-hard or even approximate.

-

For instance, if you used something like:

\[\arg\max \sum y_i\cdot (\sigma(\vec{w}^T \vec{x}_i)-1)\]the problem is that then, (it can be proven) that there exist some dataset where the distance between $\vec{w}^{}$ and the $\vec{w}$ will be very large. Essentially, this *approximation is NOT an approximation since the difference between the optimal solution is unbounded.

For Instance

Consider that the data looks like this:

since we cannot take derivatives of the above eq.53, we basically have to try it out. And this would them be NP-hard.

- maybe we should try to change its representation to some other linearly separable parts first?

So the idea is to consider some assumptions that might simplify the problem: suppose the training data IS linearly separable. Then what can we do?

such that there is a linear decision boundary which can perfectly separate the training data.

- and here, we might want to make the margin $\gamma$ as large as possible

Then, under this assumption, we can then can find $\vec{w}$ such that $y_i (\vec{w} \cdot \vec{x}_i) \ge 0$ for all $i$. Then, we can do a constraint optimization

-

we use $\ge$ instead of $>$ because we are assuming $\gamma \ge 0$ instead of $\gamma > 0$.

-

this is doable, so something like:

\[\DeclareMathOperator*{\argmin}{arg\,min} \argmin_{w\text{ s.t. }y_i (\vec{w} \cdot \vec{x}_i) \ge 0, \forall i}||w||^2\](this is not the only function you can minimize, just an example) Yet, there is a much easier way -> Perceptron Algorithm

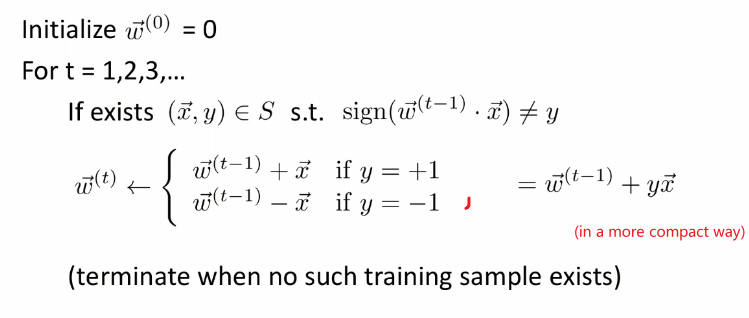

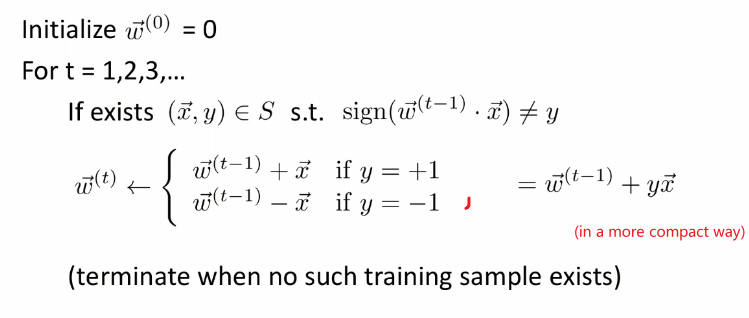

Perceptron Algorithm

The idea is basically do a “gradient descent”. The algorithm is as follows:

where:

- basically, if on $t=k$ step you found an error of prediction, you update $\vec{w} := \vec{w}+y \vec{x}$

Yet some proofs are not clear yet:

- is this guaranteed to terminate?

- can you actually find the correct $\vec{w}$?

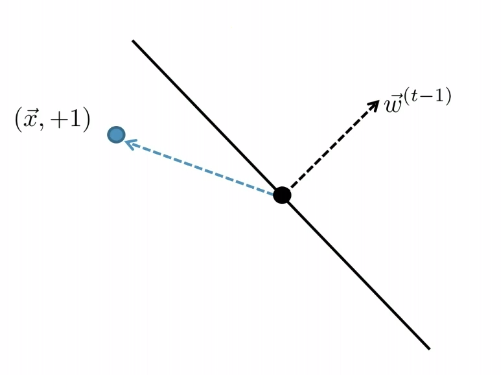

For Example

Consider you started with:

| Started with $t-1$, got an error | Update |

|---|---|

|

|

| error: marked negative but should be positive | though it is not guaranteed you fixed it in one update |

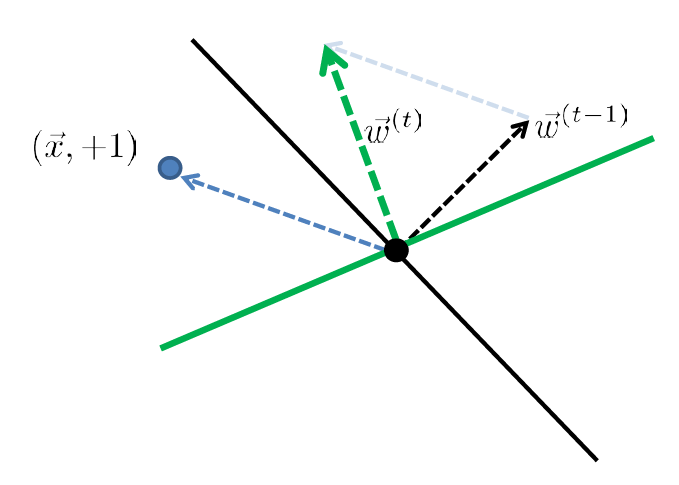

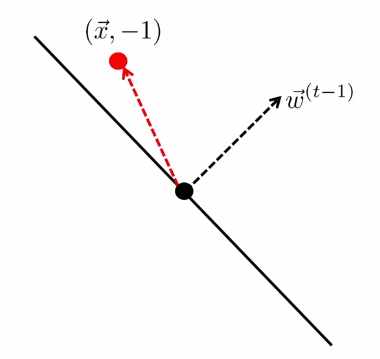

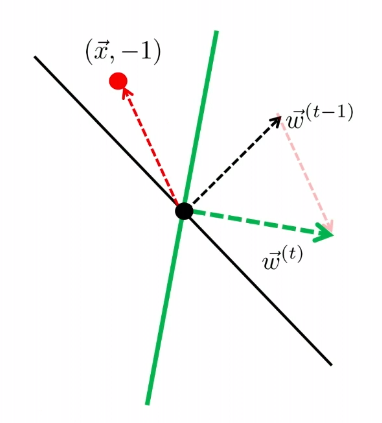

The other case is

| Started with $t-1$, got an error | Update |

|---|---|

|

|

Perceptron Algorithm Guarantees

Theorem (Perceptron mistake bound)

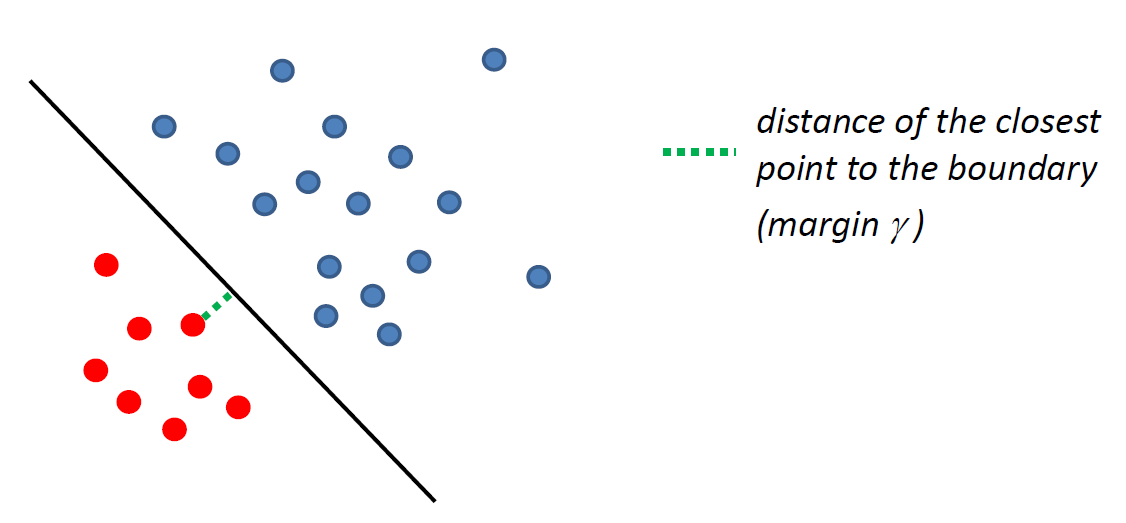

Assume there is a unit length $\vec{w}^*$ that separate the training sample $S$ with margin $\gamma$.

Let $R = \max_{\vec{x}\in S}\vert \vert \vec{x}\vert \vert$ be the radius of your data, for your sample being $S$.

Then we claim that the perceptron algorithm will make at most:

\[T:= \left(\frac{R}{\gamma}\right)^2 \text{ mistakes}\]So that the algorithm will terminate in $T$ rounds, since you only can have $T$ mistakes.

Proof

The key quantity here we think about is: how far away is $\vec{w}^{(t)}$ from $\vec{w}^*$?

- essentially we want to know the angle between the two vector, which then is related to $\vec{w}^{(t)}\cdot \vec{w}^*$.

Suppose the perceptron algorithm makes a mistake in iteration $t$, then (remember we start with $w^{0}$ at $t=1$), then it means a data $\vec{x}$ is classified wrongly. Hence:

\[\begin{align*} \vec{w}^{(t)} \cdot \vec{w}^* &= (\vec{w}^{(t-1)} + y\vec{x}) \cdot \vec{w}^* \\ &\ge \vec{w}^{(t-1)}\cdot \vec{w}^* + \gamma \end{align*}\]because $y\vec{x} \cdot \vec{w}^$ must have been *identified correctly, and that at least $\gamma$ away by definition of $\vec{w}^*$.

Additionally:

\[\begin{align*} ||w^{(t)}||^2 &= || \vec{w}^{(t-1)} + y\vec{x} ||^2 \\ &= ||\vec{w}^{(t-1)}||^2 + 2y(\vec{w}^{(t-1)}\cdot \vec{x}) + ||y\vec{x}||^2 \\ &\le ||\vec{w}^{(t-1)}||^2 + R^2 \end{align*}\]since $y(\vec{w}^{(t-1)}\cdot \vec{x})$ is classified wrongly, so that this must be negative.

Then, since we know that for all iterations $t$, we get:

\[\begin{cases} \vec{w}^{(t)} \cdot \vec{w}^* \ge \vec{w}^{(t-1)}\cdot \vec{w}^* + \gamma \\ ||w^{(t)}||^2 \le ||\vec{w}^{(t-1)}||^2 + R^2 \end{cases}\]from above. Hence, if we made $T$ mistakes/rounds of update, then

\[\begin{cases} \vec{w}^{(T)} \cdot \vec{w}^* \ge T\gamma \\ ||w^{(T)}||||\vec{w}^*|| \le R\sqrt{T} \end{cases}\]because:

- the first inequality comes from considering:

- made a mistake on $t=1$, then $\vec{w}^{(1)} \cdot \vec{w}^* \ge 0 + \gamma$

- made a mistake on $t=2$, then $\vec{w}^{(2)} \cdot \vec{w}^* \ge \vec{w}^{(t-1)}\cdot \vec{w}^* + \gamma \ge 2\gamma$ by substituting from above

- etc.

- the second inequality comes from a similar idea, so that $\vert \vert \vec{w}^{(T)}\vert \vert ^2 \le TR^2$, and since $\vert \vert \vec{w}^*\vert \vert =1$, then the inequality is obvious

Finally, connecting the two inequality:

\[T\gamma \le \vec{w}^{(T)} \cdot \vec{w}^* \le ||w^{(T)}||||\vec{w}^*|| \le R\sqrt{T}\]Hence we get that:

\[T \le \left(\frac{R}{\gamma} \right)^2\]Non-Linear Classifier

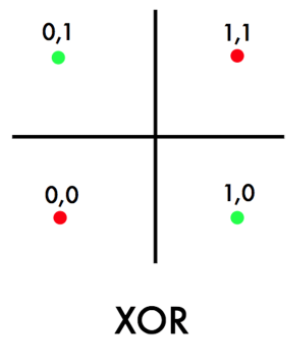

One obvious problem with a linear classifier is that if you have the following data:

where technically, we have:

- ${0,1}^2 = X$, such that the cardinality $\vert X\vert =4$.

- $y=\text{XOR}(x^{(1)}, x^{(2)})$ for $\vec{x} \in X$.

so we see that no linear classifier can classify the above data perfectly correctly.

-

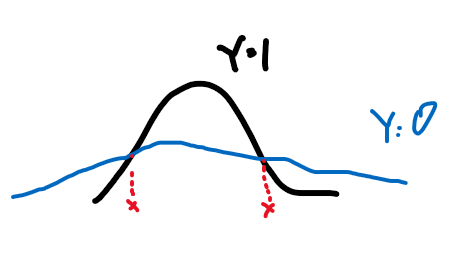

but note that an optimal Bayes classifier would have been able to classify it perfectly. (Anyway Bayes classifier is nonlinear)

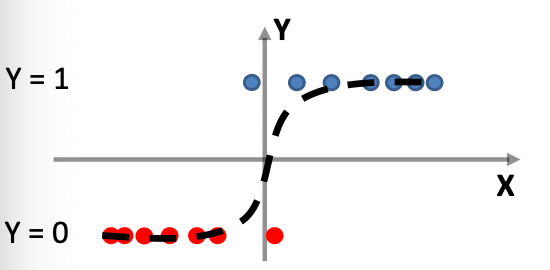

where the boundary even for a 1-D Gaussian can be nonlinear.

Take Away Message

- The idea of a linear classifier is that the decision boundary induced by a linear classifier is LINEAR (AFFINE) in the untransformed input space.

So often the data is not linearly separable, then we need some tricks.

- (Basically applying nonlinear transformation to an input space, so that the data in the end is linearly separable in that space)

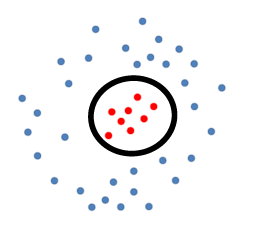

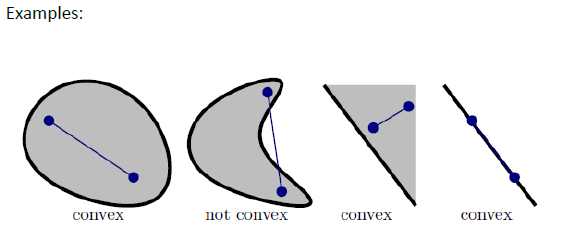

Generalizing Linear Classification

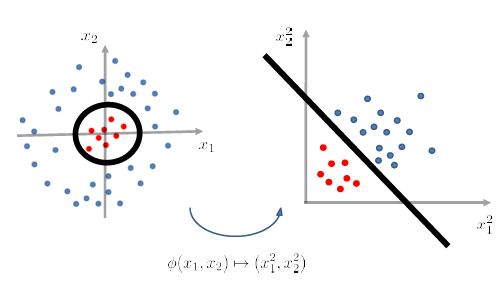

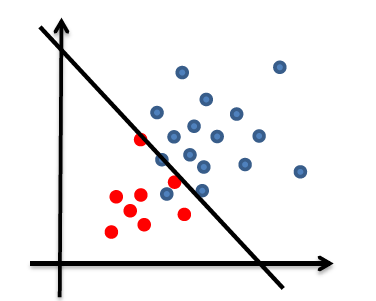

Consider the following data:

where the data is separable, but not linearly separable.

In this case, suppose we knew beforehand the separation boundary is a perfect circle:

\[g(\vec{x}) = w_1 x_1^2 + w_2x_2^2 + w_0 = 0\]which is basically an equation of a circle, parametrized by $\vec{w}$. so basically:

-

$w_1=w_1=1$ and $w_0 = -r^2$ for $r$ being the radius of a unit circle IN THIS CASE.

-

in general, it should then look like:

\[g(\vec{x}) = w_1 x_1^2 + w_2x_2^2 + w_3x_1x_2 + w_4x_1+w_5x_2+w_0 =0\]for ellipses as well.

But we can consider:

\[\begin{align*} w_1 x_1^2 + w_2x_2^2 + w_0 = w_1 \chi_1 + w_2 \chi_2 + w_0 \end{align*}\]such that we have linear/affine in $\chi$ space.

Reminder: Linear Function

linearity test in high dimension input:

\[f(c_1a + c_2b) = c_1f(a) + c_2f(b)\]then $f:\mathbb{R}^d\to \mathbb{R}$.

Take Away Message

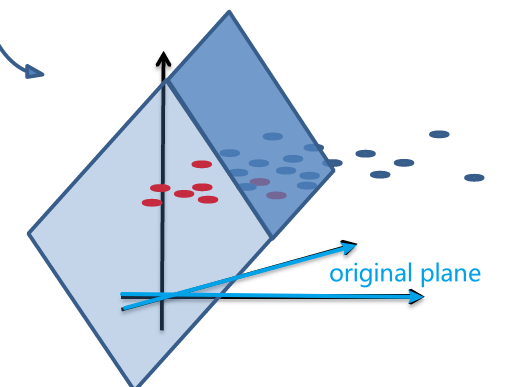

After we have applied a feature transformation $\phi(x_1 , x_2)\mapsto (x_1^2 , x_2^2)$, then $g$ becomes linear in $\phi$-feature space. (i.e. we don’t look at $X$ now, we look at data in $\phi(X)$ instead).

- Then, once in $\phi(X)$ it is linearly separable, we just use Perceptron and we are done.

- feature transformation is sometimes also called the Kernel Transformation

graphically, this is what happened:

yet the problem is that we don’t know what should be $\phi$ beforehand.

- This is why we have soon some kernel tricks which would compute an infinite power of polynomial instead.

Transformation for Quadratic Boundaries

Now, we continue exploring some the general form for quadratic boundaries. (so only up to power of 2)

Suppose we have for only two features, $\vec{x} \in R^2$:

\[g(\vec{x})= w_1 x_1^2 + w_2x_2^2 + w_3x_1x_2 + w_4x_1+w_5x_2+w_0 = \sum_{p+q\le 2} w_{p,q}x_1^px_2^q\]with:

-

$x_i$ being the $i$-th feature of the data point $\vec{x}$

- doing feature transformation of (two features) $\phi(x_1,x_2) \to (x_1^2, x_2^2, x_1x_2, x_1,x_2, 1)$

- so basically $x_1^px_2^q$ are the new $\chi$ dimension

Then, if we consider data of $d$ features, we basically have:

\[g(\vec{x}) = \sum_{i,j}^d\sum_{p+q\le 2} w_{p,q}x_i^px_j^q\]where:

- $x_i$ being the $i$-th feature of the data point $\vec{x}$

- doing feature transformation of ($d$ features) $\phi(x_1, …,x_d) \mapsto (x_1^2,x_2^2,…,x_d^2,x_1x_2,…x_{d-1}x_d,x_1,…,x_d)$

- so you approximately needs $d^p$, where $p$ is the degree of polynomial you want to go up to.

- captures all pairwise interactions between each feature. Note that only pairwise though.

So the problem is that we see, even for only using quadratics, we have too many terms -> too many parameters to fit.

Theorem for Linear Separability

Theorem

Given $n$ distinct points $S=\vec{x}_1,…,\vec{x}_n$

There exists a feature transformation (can be anything, but only looks at feature, not labels) such that for any labelling of $\mathcal{S}$ is linearly separable in that transformed space!

Proof

Given $n$ distinct points, we basically want to show a transformation such that we can find a $\vec{w}$ in that space that perfectly separates the data.

Consider the transformation, for the $i$-th given data point $\vec{x}_i$

\[\phi(\vec{x}_i) = \begin{bmatrix} 0\\ \vdots\\ 1\\ \vdots\\ 0 \end{bmatrix}=\text{only i-th component is 1}\]Then, we need $\vec{w}\cdot \phi(\vec{x}_i)$ to gives us a boundary. This can be easily achieved by:

\[\vec{w}=\begin{bmatrix} y_1\\ \vdots\\ y_n \end{bmatrix}\]where we see that:

- only when we are fitting $\vec{w}$ we look at our labels

- this formation gives $\vec{w}\cdot \phi(\vec{x}_i)=y_i$.

So if we know that $y_i \in {-1,+1}$, then we can have the classifier:

\[\text{sign}(\vec{w}\cdot \phi(\vec{x}_i))\]which is a linear classifier in $\phi(\vec{x})$ space.

Intuitively

If each data point is in its own dimension, then I can build a separating plane by considering in the $\phi(\vec{x})$ space:

- build a plane that classifies the first point $\vec{x}_1$ correctly

- rotate the plane but fix its intersection on $\hat{x}_1$ so that $\vec{x}_2$ can now be classified correctly

- rotate the plane but fix its intersection on $\hat{x}_1, \hat{x}_2$ so that $\vec{x}_3$ can now be classified correctly

- repeat until $\vec{x}_n$ is also classified correctly

And this would work because all data points are now “independent in a new dimension”.

Though this finishes the proof,

- but that transformation cannot be generalized to test data points. So we need to find some reasonable transformations.

- the computational complexity is high (in $\Omega(d’)$ where $d’$ is the new dimension)

- also you have a high chance of overfitting (model complexity)

Kernel Trick

First we discuss how to deal with computation complexity, where even writing down the vector in high dimension will take much time.

Heuristics

Recall that the classifier is computing $\text{sign}(\vec{w}\cdot \vec{x})$. And in the end, you will see that learning $\vec{w}$ can be written as:

\[\vec{w}=\sum_k\alpha_ky_x\vec{x}_k\]

- see section Using Kernel Trick in Perceptron

Then computing $f(\vec{x})=\text{sign}(\vec{w}\cdot \vec{x})$ will only be computing:

\[f(\vec{x})=\text{sign}(\vec{w}\cdot \vec{x}) = \text{sign}\left(\vec{x}\cdot \sum_{k=1}^n\alpha_ky_x\vec{x}_k\right) = \text{sign}\left(\sum_{k=1}^n\alpha_ky_x(\vec{x}_k\cdot \vec{x})\right)\]So we technically just need to make sure $\vec{x}_i \cdot \vec{x}_j$ is fast. And this is doable even if $\vec{x}\to \phi(\vec{x})$ will be large in dimension in the new/transformed dimension, because some kernel transformation can do $\phi(\vec{x}_i)\cdot \phi(\vec{x}_j)$ fast.

An illustration with an example is the fastest.

Consider doing a simple case of mapping to polynomial of degree $2$.

- so we are transforming from input with $d$ dimension to $d’\approx d^2$ dimension.

Note that:

in general, if we are doing polynomial with power of $p$, then we consider each term being:

\[(\_,\_,\_,\_,...,\_)\quad \text{with $p$ blanks}\]for instance if $p=3$, with $\vec{x}=[a,b,c,d]^T$, then one term could be:

\[a*c^2\to (a,c,c)\]so approximately we have the new dimension being $d'=d^p$.

Additionally, suppose our polynomial looks like:

\[\vec{x} \mapsto (x_1^2,...,x_d^2,\sqrt{2}x_1x_2,...,\sqrt{2}x_{d-1}x_d,...,\sqrt{2}x_d,1) = \phi(\vec{x})\]Then obviously computing $\phi(\vec{x}_i) \cdot \phi(\vec{x}_j)$ directly will take $O(d^2)$.

- the fact that it needs to be $\sqrt{2}$ will not affect classification in the end, since the weight is controlled by $\vec{w}$ anyway.

But we know that the above is equivalent to:

\[\phi(\vec{x}_i)\cdot \phi(\vec{x}_j) = (1+\vec{x}_i\cdot \vec{x}_j)^2\]which is only $O(d)$. So for some specific transformation $\phi$, we can make computation efficient.

Proof:

For a 2D case above, let us take $x_1 = (a_1,a_2)$ and $x_2=(b_1, b_2)$. Then the transformation does:

\[\phi(x_1)=\begin{bmatrix} a_1^2\\ a_2^2\\ \sqrt{2}a_1a_2\\ \sqrt{2}a_1\\ \sqrt{2}a_2\\ 1 \end{bmatrix}, \quad \phi(x_2)=\begin{bmatrix} b_1^2\\ b_2^2\\ \sqrt{2}b_1b_2\\ \sqrt{2}b_1\\ \sqrt{2}b_2\\ 1 \end{bmatrix}\]Before computing $\phi(x_1)\cdot \phi(x_2)$ explicitly, let’s look at the trick:

\[(1+x_1\cdot x_2)^2 =\left[ 1+(a_1b_1 + a_2b_2) \right]^2 =1+2a_1b_1+2a_2b_2+2a_1b_1a_2b_2+a_1^2b_1^2+a_2^2b_2^2\]which is exactly $\phi(x_1)\cdot \phi(x_2)$

RBF (Radial Basis Function)

So we have seen one kernel trick, and it turns out that this kernel transformation gets up to infinite dimension.

\[\vec{x} \mapsto \left(\exp(-||\vec{x}-\alpha||^2)\right)_{\alpha \in \mathbb{R}^d},\quad \vec{x}\in \mathbb{R}^d\]this transformed vector even needs infinite space to write down. But the dot products $\phi(\vec{x}_i),\phi(\vec{x}_j)$ is:

\[\phi(\vec{x}_i)\cdot \phi(\vec{x}_j)=\exp(-||\vec{x}_i - \vec{x}_j||^2)\]which again becomes $O(d)$

To understand this, we need to consider another way of visualizing a vector.

First, we can write a vector to be:

\[\vec{x} = \begin{bmatrix} x_1\\ x_2\\ \vdots\\ x_d \end{bmatrix} = (x_i)_{i =1,2,...,d}\]but this only works for a countably infinite dimension. To represent an uncountably infinite dimension, consider:

\[\vec{x}=(x_i)_{i \in \mathbb{R}} = (x_\alpha)_{\alpha \in \mathbb{R}}\]And if we stack the components vertically, then essentially $\vec{x}$ is a function.

where each entry continuously maps to a value. So a $f\to \mathbb{R}$ is like a “1-D vector”.

-

e.g., for a one dimensional data $x=2$, can transform this into infinite dimension (i.e. a “1-D vector”) with:

\[x=2\to\phi(2) =\left(\exp(-(2-\alpha)^2)\right)_{\alpha \in \mathbb{R}}=f(\alpha)=e^{(-(2-\alpha)^2)}\]

Therefore, the dot product becomes integral for functions. For a 1-D case, basically you get:

\[\phi(x_1)\cdot \phi(x_2)= \int_{i\in \mathbb{R}}\phi(x_1)_i\phi(x_2)_idi\]- then for input of $d=2$, we then need to integrate over a surface, so $i\in \mathbb{R}^2$. And this generalizes to $\mathbb{R}^d$

Now, since we know $\phi$ basically is a Gaussian, the actual integral becomes for input of dimension $d$:

\[\phi(\vec{x}_i)\cdot \phi(\vec{x}_j)= \int_{k\in \mathbb{R}^d}\phi(\vec{x}_i)_k\phi(\vec{x}_j)_kd\vec{k} = \exp(-||\vec{x}_i-\vec{x}_j||^2)\]where $\phi(\vec{x}_i)$ as a “vector” has infinite dimensions.

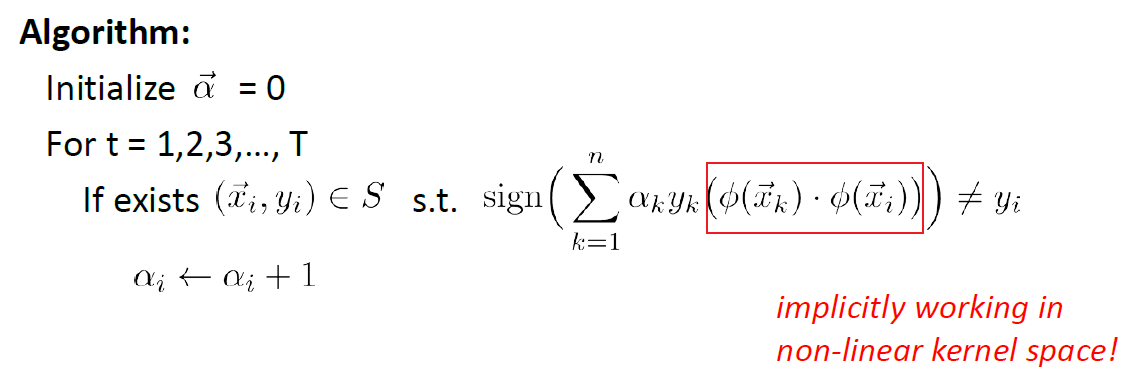

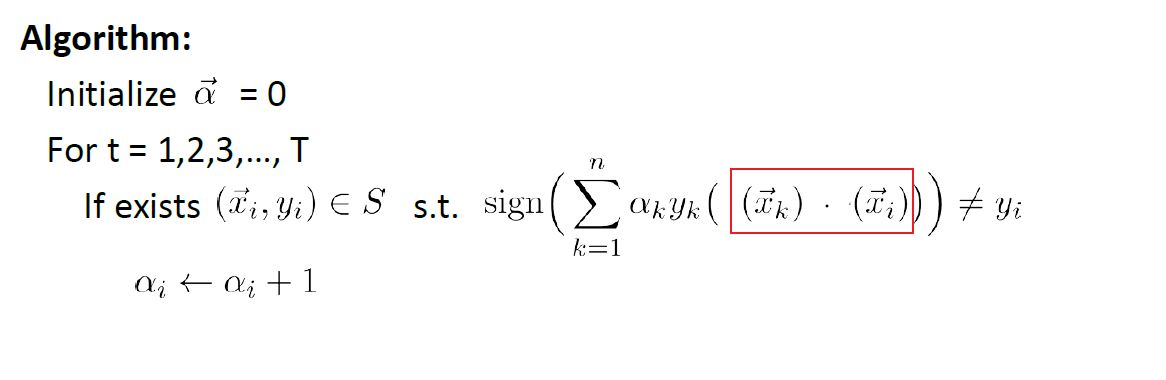

Using Kernel Trick in Perceptron

Now we need to somehow use the trick in our perceptron algorithm. Recall that our algorithm is:

- if we want to apply kernel directly by replacing in $\vec{x} \to \phi(\vec{x})$, we will be stuck at learning $\vec{w}$ because it has $…+y\vec{x}$, which we cannot compute with $\vec{x} \to \phi(\vec{x})$

However, we can rewrite the algorithm. Since each time we we update $\vec{w}$, we are adding a sample data point $\vec{x}_i$. This means that:

\[\vec{w}^{(t)}:=\vec{w}^{(t-1)}+y_k\vec{x}_k,\text{ when $\vec{x}_k$ is wrong}\iff \vec{w}=\sum_k\alpha_ky_x\vec{x}_k\]where:

- $\alpha_k$ is the number of times that we made a mistake on $\vec{x}_k$ (i.e. number of times we added $\vec{x}_k$ as a contribution)

Therefore:

-

The training algorithm becomes:

where basically you fitted all the $\alpha_i$.

-

The testing/prediction functions becomes:

\[f(\vec{x})=\text{sign}(\vec{w}\cdot \vec{x}) = \text{sign}\left(\vec{x}\cdot \sum_{k=1}^n\alpha_ky_x\vec{x}_k\right) = \text{sign}\left(\sum_{k=1}^n\alpha_ky_x(\vec{x}_k\cdot \vec{x})\right)\]

which in both cases are only taking dot products $\vec{x}_i\cdot \vec{x}_j$. So we can replace them with $\phi(\vec{x}_i)\cdot \phi(\vec{x}_j)$.

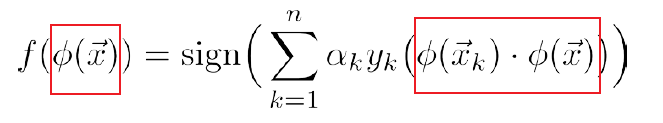

Kernel For Perceptron

During the training phase:

where $\vec{\alpha} \in \mathbb{R}^n$, and that

basically we start with assuming no mistake. Then, when mistake is made on the $i$-th data point, we update $\alpha_i$.

we essentially never compute individually $\phi(\vec{x}_i)$, we only compute the dot product.

During the testing/prediction phase:

so basically in the transformed space:

\[\vec{w} = \sum_{i=1}^n \alpha_i y_i \phi(\vec{x}_i)\](though we never compute it)

So in the end, we can encapsulate $K(\vec{x}_i,\vec{x}_j)$ that performs some kernelization $\phi(\vec{x}_i)\cdot\phi(\vec{x}_j)$ of your choice. But there are still some rules that we need to follow for our choice of $K$:

- For a kernel function $K(x,z)$, there better exists $\phi$ such that $\phi(x)^T\phi(z) = K(x,z)$

- but technically you don’t need to know what is $\phi(x)$. We just need to spit out some number.

- if $x\cdot z \ge x\cdot y$, then $K(x,z) \ge K(x,y)$.

- $K(x,x) = \phi(x)^T\phi(x) \ge 0$

- Kernel Matrix $K$, where $K_{ij}=K(x^{(i)},x^{(j)})=K(x^{(j)}, x^{(i)})=K_{ji}$ is symmetric

- Kernel Matrix $K$ is positive semidefinite (the other direction is also true!!, but not proven here)

- Mercer Kernel Theorem

Disadvantages (of being in an kernel/Hilbert space)

- The classifier needs to hold all the data points around $\vec{x} \in \mathcal{D}$

- The classifier, when predicting needs to iterate through all training data points.

Though in reality, there will be some approximation made.

- for instance, suppose we have training $x_1, x_2$, and test $x$. If $x_1\cdot x_2$ is large, then it is likely that $\phi(x_1) \approx\phi(x_2)$. So we don’t need to compute both $\alpha_1\phi(x_1)\cdot \phi(x)+\alpha_2\phi(x_2)\cdot \phi(x)$ but only a single time.

Note

$R = \max_{\vec{x}\in S}\vert \vert \vec{x}\vert \vert$ becomes $R = \max_{\vec{x}\in S}\vert \vert \phi(\vec{x})\vert \vert$, where recall:

\[||\phi(x)||^2 = \int_{-\infty}^{\infty}\phi^2(x)_idi\]and this is finite (due to Cauchy Schwartz Inequality). Therefore, $R$ is still finite and we can still apply the theorem such that:

\[T \le \left(\frac{R}{\gamma} \right)^2\]

Support Vector Machine

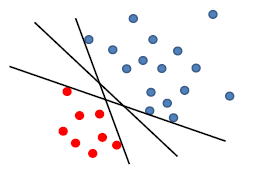

Perceptron and Linear Separability

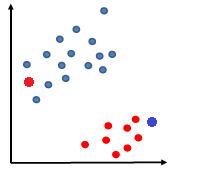

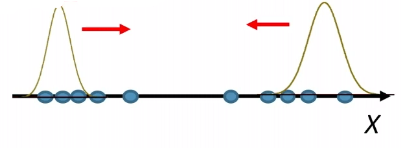

Say there is a linear decision boundary which can perfectly separate the training data.

Now, if we use perceptron:

where any of the above line might be returned from our perceptron algorithm

- e.g. due to the order of us iterating through the data point

- yet in reality, you might want to use the middle line with largest margin $\gamma$, so that your testing phase would perform better (this is the meaning of defining a $\gamma$ anyway)

Motivation

- returns a linear classifier that is stable solution by giving a maximum margin solution (which is not considered in the perceptron algorithm).

- stable: no matter which order of training data ($x_1, x_2,x_3$ vs $x_3,x_1,x_2$) you have, you give the same solution.

- It is kernelizable, so gives an implicit way of yielding non-linear classification.

- maximum margin in the kernel space $\iff$ maximum margin in the original space

- Slight modification to the problem provides a way to deal with non-separable cases (in the original input space)

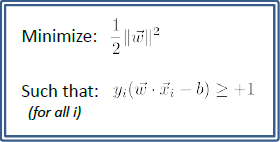

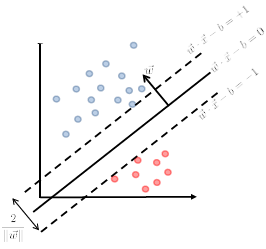

SVM Formulation

Again, let us start with a simple case and move forward.

Say the training data is linearly separable by some margin (but the linear separator does not necessarily passes through the origin).

so that our decision boundary can be:

\[g(\vec{x}) = \vec{w}\cdot \vec{x} - b =0 \to f(\vec{x}) = \text{sign}(\vec{w}\cdot\vec{x}-b)\]Heuristics

- We can try finding two parallel hyperplanes that correctly classify all the points, and maximize the distance between them!

- then, you just need to return the average of the two parallel hyperplanes.

Consider that you get some boundary $\vec{w} \cdot \vec{x}-b=0$ ($\vec{w}$ is not necessarily the best here).

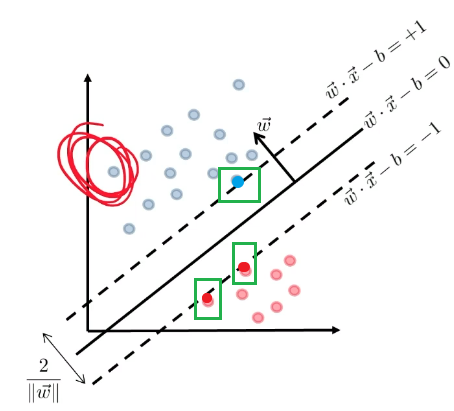

Then we get two planes, being some distance $c$ away such that:

\[\begin{cases} \vec{w}\cdot \vec{x} - b = +c\\ \vec{w}\cdot \vec{x} - b = -c \end{cases}\]but we could divide both side by $c$, such that we get a simpler form (i.e. less parameter to worry about):

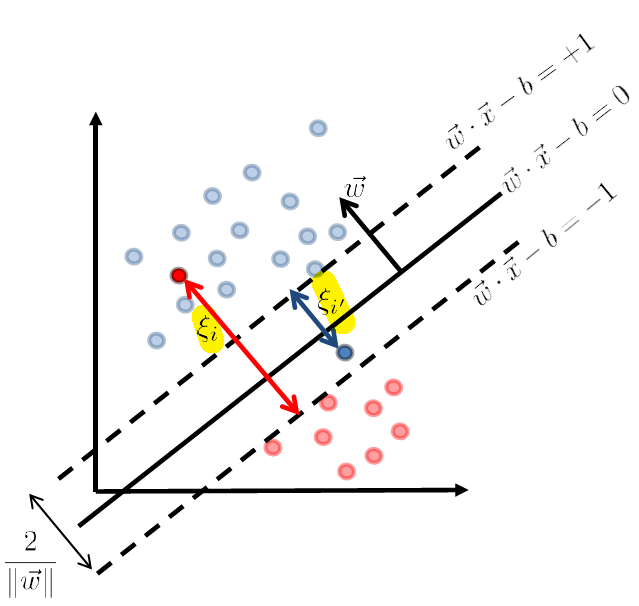

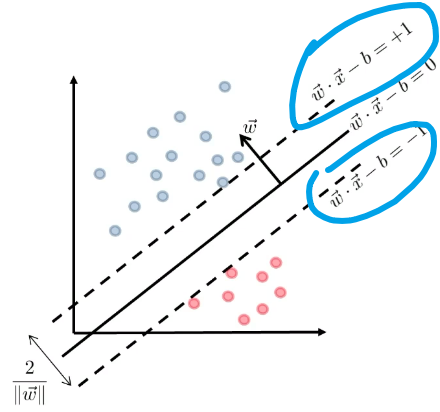

\[\begin{cases} \vec{w}\cdot \vec{x} - b = +1\\ \vec{w}\cdot \vec{x} - b = -1 \end{cases}\]Graphically:

Then we claim that the distance between the two plane is:

\[d=\frac{2}{||\vec{w}||}\]Proof

First, we can shift the entire space such that $b=0$. Then essentially, we consider:

Then obviously the red distance is:

\[\vec{x}\cdot \frac{\vec{w}}{||\vec{w}||} = \frac{1}{||\vec{w}||}\]since the two planes are equidistance apart, hence the distance between the two planes is just multiplied by 2.

Then, since we have two planes, the correct classification means:

\[\begin{cases} \vec{w} \cdot \vec{x}_i - b \ge +1,\quad \text{if $y_i=+1$}\\ \vec{w} \cdot \vec{x}_i - b \le -1,\quad \text{if $y_i=-1$} \end{cases}\]So together, this can be summarized as:

\[y_i(\vec{w} \cdot \vec{x}_i - b) \ge +1,\quad \forall i\]- so basically if we have $n$ data points, this is $n$ constraint equations.

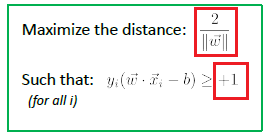

Therefore, our optimization problem is:

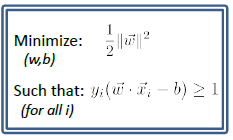

But we would want convexity in the objective function. Therefore, we consider the reciprocal and we get:

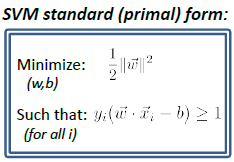

SVM Standard (Primal) Form

where we have converted maximization to minimization of reciprocal.

- note that $(1/2)\vert \vert w\vert \vert ^2$ is convex.

Then, we are left with 3 problems:

- what if the data is not linearly separable (in the raw input space)

- how to actually perform the minimization

- can we make it kernalizable

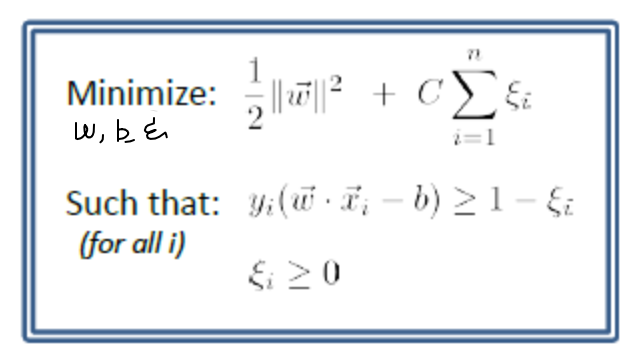

Slacked SVM

We address the first question of how to manage data points that are slightly off:

which made it not linearly separable.

But consider some way to account for the error.

where we denote the error of those points being:

- $\xi_i$ and $\xi_{i’}$ are the error which is the distance $>0$ to the correct (stringent) hyperplane

- $\xi = 0$ if the data is correctly classified

So now the idea is to optimize over $\vec{w}$ AND $\xi_i$ simultaneously. Then we can just associated each training data with a slack/error $x_i \to \xi_i$ such that

where:

-

the constraint:

\[y_i(\vec{w}\cdot \vec{x}_i -b) \ge 1 - \xi_i\]represents us slacking the constraint (due to non-separability)

- this is why $\xi_i$ is the distance. Because if we have some optimal $\vec{w},b$, then eventually $\xi_i$ will be the shortest distance to the correct classification hyperplane.

-

the objective of

\[\frac{1}{2}||w||^2 + C \sum_{i=1}^n\xi_i\]means I want to minimize the slack, if given.

However, also notice that we have attached the $C$ variable, because:

- if we want to just maximize the margin, then minimizing $\frac{1}{2}\vert \vert w\vert \vert ^2$ (do not overfit) would cause $\sum_{i=1}^n\xi_i$ will shoot up

- if we want to just minimize the error, then our margin will become small. This means that $\frac{1}{2}\vert \vert w\vert \vert ^2$ will be large.

Therefore, $C$ is like a hyperparameter telling you which one would you emphasize on.

-

The output is to simultaneously give a combination of $\vec{w}, b, \xi_i$, (if you know one variable, you know everything. But you do NOT know any on the first hand. $\xi_i$ is not the distance prior to the optimization problem).

So the output would be operating on $\vec{w},b,\xi_i$, so dimension $\mathbb{R}^{d+1+n}$

Note

Sometimes you will see the problem be phrased as

\[(1-\lambda)\frac{1}{2}||w||^2 + \lambda \sum_{i=1}^n\xi_i,\quad \lambda \in[0,1]\]which is equivalent to the one with $C$ defined above.

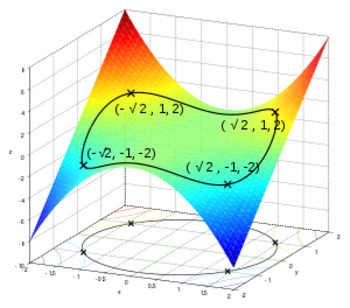

Finding Minimization

Our goal now becomes trying to solve this problem.

note that:

- doing minimization is not the problem, but the $n$ constraint are annoying.

First, let us consider visualization of minimization and functions.

Consider some function $f(y,z) \to \mathbb{R}$. We can visualize this as:

- the input plane/plane we operate on is always y-z plane. The vertical dimension of x is an imagined axis so that we can visualize output.

- the idea is that for a function $f:\mathbb{R}^d \to \mathbb{R}$, we are having base plane (input being $\mathbb{R}^d$), and the output is "imagined to be" perpendicular to the base space.

Therefore, the function

\[f(\vec{w},b,\xi_i)=\frac{1}{2}||\vec{w}||^2 + C \sum_{i=1}^n\xi_i\]is basically attach a “vertical number” to the input space of $\mathbb{R}^{d+1+n}$.

And the constraint of:

\[y_i(\vec{w}_i \cdot \vec{x}_i- b) \ge 1 - \xi_i,\quad \xi_i \ge 0\]is basically constraining the space of $\mathbb{R}^{d+1+n}$ that output can be.

Graphically, it looks like:

where:

- the curves a like constraints, where output in y-z plane should only lie within that region.

Take Away Message

- SVM is basically a problem a constraint optimization problem.

- you cannot do simple gradient descent, because the constraint might not longer be satisfied after you moved.

- the visualization techniques above will help you understand how to solve for the constraint optimization.

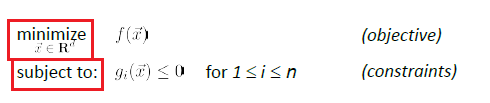

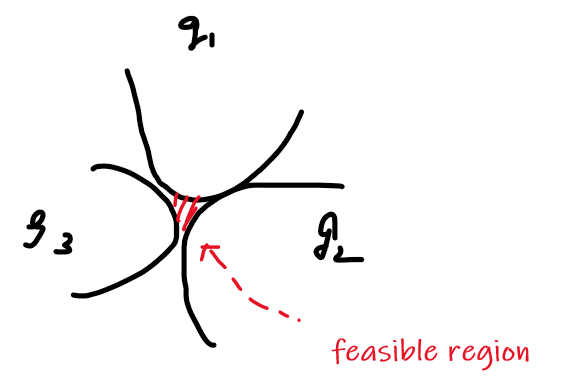

Constrained Optimization

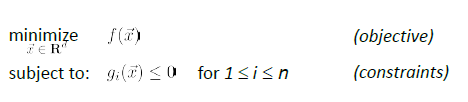

Consider a constraint optimization of:

where basically we are only looking at:

- a finite number of a constraints

- equality constraint can also be made into an inequality constraint. E.g. $x^2=5$ could be transformed to $x^2-5 \ge 0$ and $x^2-5 \le 0$

- and we assume that the problem is feasible.

Some common ways of doing it would be

Projection Methods

- start with a feasible solution $x_0$ (not necessarily minimized)

- find $x_1$ that has slightly lower objective value

- if $x_1$ violates the constraints, project back to the constraints.

- where project back to the constraints is the hard part

- iterate, stop when you cannot find a lower value in step 2

Penalty Methods

-

Use a penalty function to incorporate the constraints into the objective

-

so that you won’t even step into the forbidden region even if you are only looking at the objective function. For instance, the penalty could be infinite once you entered the forbidden region. So you would avoid that.

-

hard to find a working penalty function

-

Lagrange (Penalty) Method

Consider the augmented function

\[L(\vec{x}, \vec{\lambda}) := f(\vec{x}) + \sum_{i=1}^n \lambda_i g_i(\vec{x})\]and recall that :

- our aim was to minimize $f(\vec{x})$ such that $g_i(\vec{x}) \le 0$ is satisfied

- $\vec{x}$ is the original variable, called primal variable as well

- $\lambda_i$ will be some new variable, called Lagrange/Dual Variables.

Observation

-

For any feasible $x$ and all $\lambda_i \ge 0$, then since $g_i(\vec{x}) \le 0$, then obviously:

\[L(\vec{x},\vec{\lambda}) \le f(\vec{x}) \to \max_{\lambda_i \ge 0} L(\vec{x},\vec{\lambda}) \le f(\vec{x})\] -

So the optimal value to the constraint problem is:

\[p^* := \min_{\vec{x}}\max_{\lambda_i \ge 0} L(\vec{x},\vec{\lambda})\]and note that now we have:

- $\max_{\lambda_i \ge 0}$ which is much easier than satisfying entire functions $g_{i}(\vec{x})\le 0$.

- once $\max_{\lambda_i \ge 0}$ this is done, $\min_{\vec{x}}$ has no constraint on $\vec{x}$ anymore

we now show that this $p^*$ actual is the same as our original goal.

Proof

Consider that we landed a $p^*$ that has a $\vec{x}$ being infeasible. Then:

- it means at least one of the $g_i(\vec{x})> 0$ (since $\vec{x}$ is infeasible)

- Therefore, $\max_{\lambda_i \ge 0} L(\vec{x},\vec{\lambda})$ would end up with one $\lambda_i \to \infty$ and $\max_{\lambda_i \ge 0} L(\vec{x},\vec{\lambda}) \to \infty$

- therefore, such a $p^*$ cannot be computed, which is a contradiction.

Consider that we landed a $p^*$ that has a $\vec{x}$ being feasible. Then:

this means all $g_i(\vec{x})\le 0$.

Therefore, $\max_{\lambda_i \ge 0} L(\vec{x},\vec{\lambda})$ would end up with all $\lambda_i = 0$, or $g_i(x)=0$ already. Then, this becomes the same as:

\[\min_\vec{x}\max_{\lambda_i \ge 0} L(\vec{x},\vec{\lambda}) \to \min_{\vec{x}}f(\vec{x})\]which is the same as the original task.

Therefore, this $p^*$ problem is the same as what we wanted to compute, namely:

The problem is that the $\max_{\lambda_i \ge 0} L(\vec{x},\vec{\lambda})$ is difficult to compute on the first hand.

Therefore, we introduce some other thought. Consider

\[\min_{\vec{x}}L(\vec{x},\vec{\lambda}) = \min_{\vec{x}} f(\vec{x}) + \sum_{i=1}^n \lambda_i g_i(\vec{x})\]Then, we observe that:

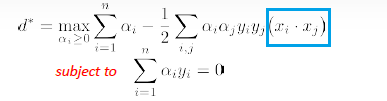

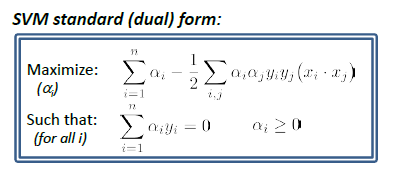

\[p^* =\min_{\vec{x}}\max_{\lambda_i \ge 0} L(\vec{x},\vec{\lambda}) \ge \min_{\vec{x}}L(\vec{x},\vec{\lambda})\]i.e. minimum of a maxi zed function is $\ge$ just minimum of a function. Then, we can define the problem:

\[\min_{\vec{x}}\max_{\lambda_i \ge 0} L(\vec{x},\vec{\lambda}) \ge \max_{\lambda_{i}\ge 0}\min_{\vec{x}}L(\vec{x},\vec{\lambda}) := d^*\]so we get that the dual problem of $d^*$ defined as:

\[d:=\max_{\lambda_{i}\ge 0}\min_{\vec{x}}L(\vec{x},\vec{\lambda}) \le p^*\]Technically, $d^$ is dual problem, and in some cases, $d^ = p^*$. Then this is good because:

- $\min_{\vec{x}}L(\vec{x},\vec{\lambda})$ is unconstraint optimization, and we can compute derivatives

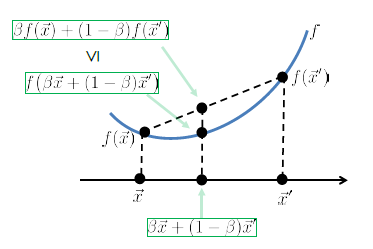

- in fact, $h(\lambda) \equiv \min_{\vec{x}} L(\vec{x},\vec{\lambda})$. Then we can show that every dual problem is concave. This justifies the final maximization procedure.

Proof

Since $L(\vec{x},\vec{\lambda})=f(\vec{x}) + \sum_{i=1}^n \lambda_i g_i(\vec{x})$ is a linear function in $\lambda$ for a particular $\vec{x}$. So graphically:

so we can then draw many $L$ functions for different $\vec{x}$, which will be linear in $\lambda$. Then:

- $h(\lambda) = \min_{\vec{x}} L(\vec{x},\vec{\lambda})$ basically says for each $\lambda$, pick the smallest $\vec{x}_i$. This basically results in the red highlighted line, which is concave.

The general observation is that $h(\lambda)$ is a pointwise minimum of linear functions $L$ in $\lambda$.

- pointwise minimum of linear functions is concave, (pointwise maximum of linear functions is convex)

- so the dual of a problem is concave, and a dual of a dual will be convex.

Therefore, now we have defined two (different yet related) problems

Primal Problem

\[p^* = \min_\vec{x}\max_{\lambda_i \ge 0} L(\vec{x},\vec{\lambda})\]which is the exact problem we needed to solve, but difficult to solve.

Dual Problem

\[d^* = \max_{\lambda_i \ge 0}\min_\vec{x} L(\vec{x},\vec{\lambda})\]which is different from what we need to solve, but much easier to solve. And we know that $d^* \le p^*$

However, the dual problem is useful because under certain conditions, $p^=d^$.

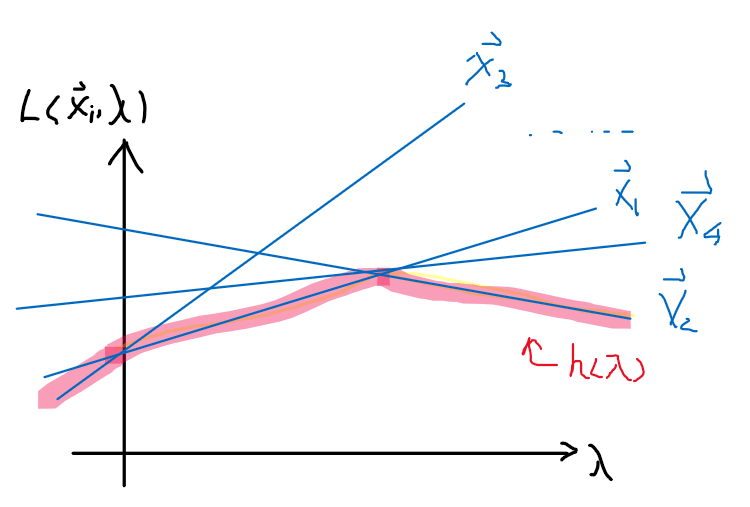

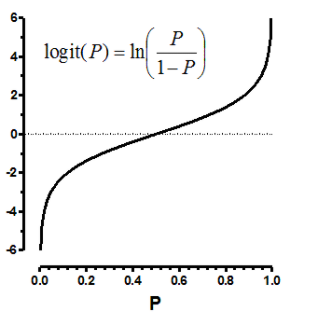

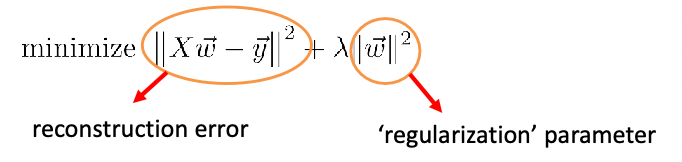

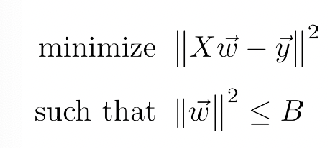

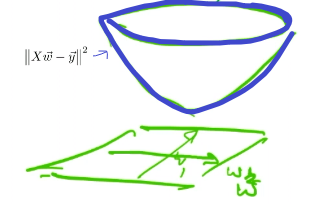

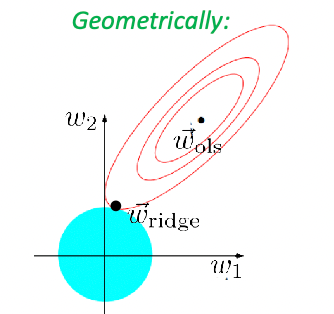

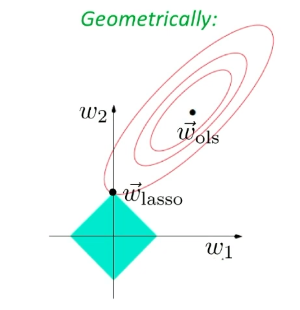

Duality Gap