CSEE4119 Computer Networks

- Chapter 1 Basics

- Chapter 2 Application Layer

- Chapter 3 Transport Layer

- Transport Services and Protocol

- Multiplexing and Demultiplexing

- User Datagram Protocol

- Principle of Reliable Data Transfer

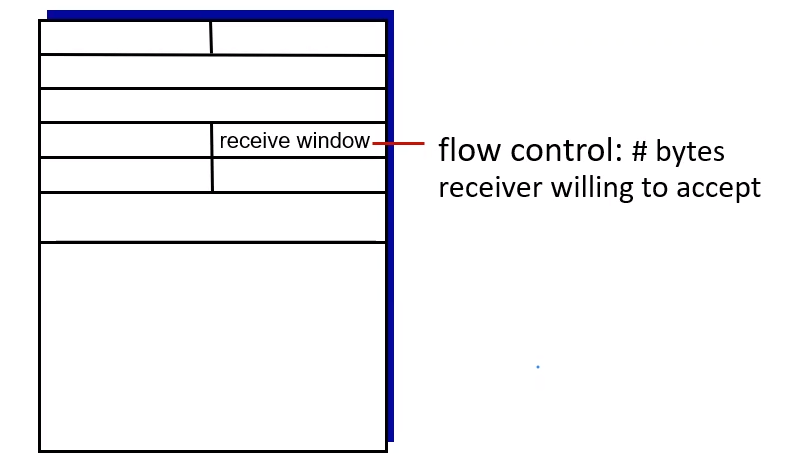

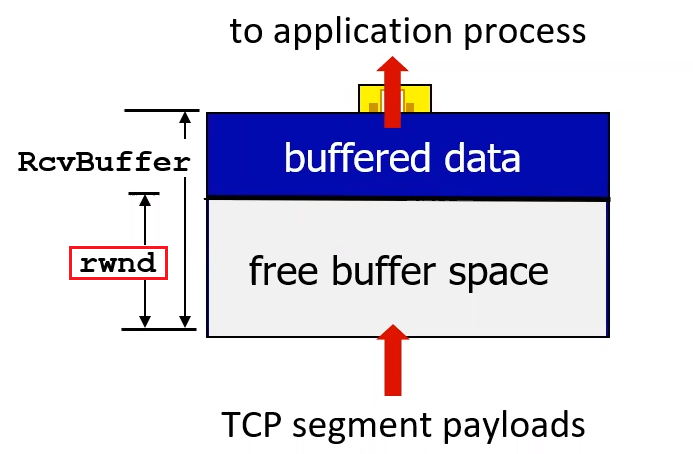

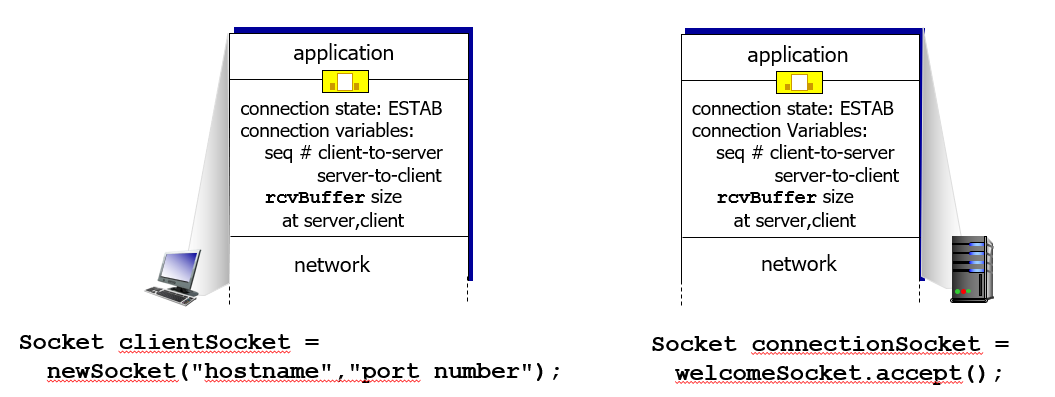

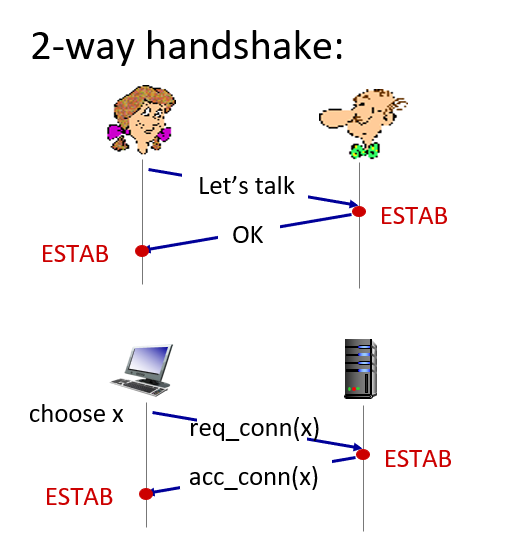

- TCP

- Network Assisted and Delay-Based Algorithm

- Fairness

- Evolving Transport Layer Functionality

- Chapter 4 Network Layer: Data Plane

- Chapter 5 Network Layer: Control Plane

- Chapter 6 Link Layer

- Miscellaneous

Chapter 1 Basics

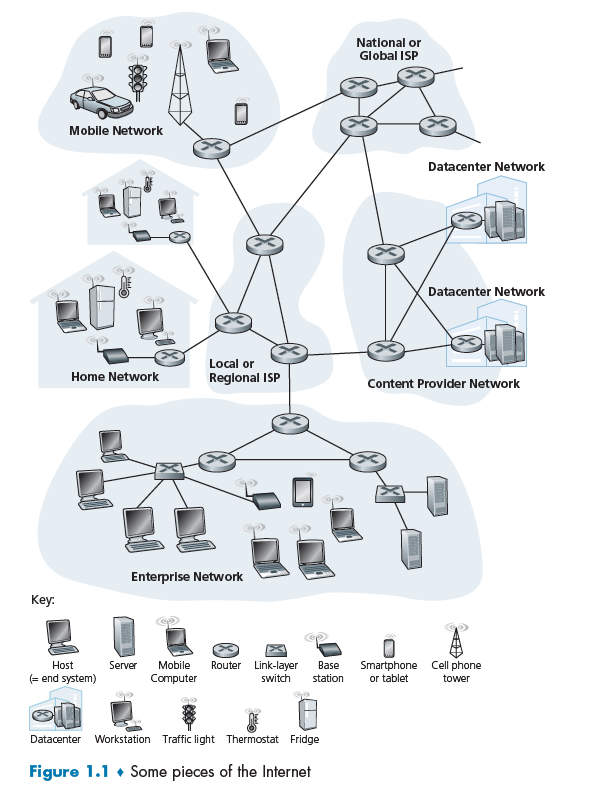

The internet/networking system now evolves into including lots of applications.

Additionally:

- End systems are connected together by a network of communication links (e.g. copper wire) and packet switches

Packet Switch:

A packet switch takes a packet arriving on one of its incoming communication links and forwards that packet on one of its outgoing communication links. e.g. routers and link-layer switches

It might be important to know the difference between a switch and a router.

Router:

Routers are computer networking devices that serve two primary functions:

- create and maintain a local area network

- manage the data entering and leaving the network as well as data moving inside of the network.

- has one connection to the Internet and one connection to your private local network.

Additionally,

- Routers operate at Layer 3 (Network)

- Router store IP address in the routing table

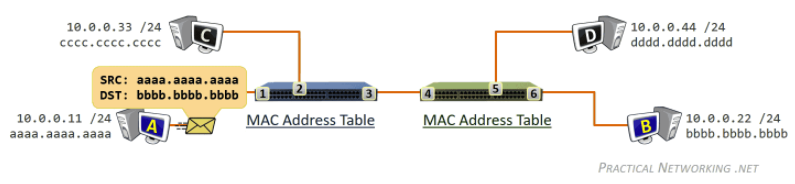

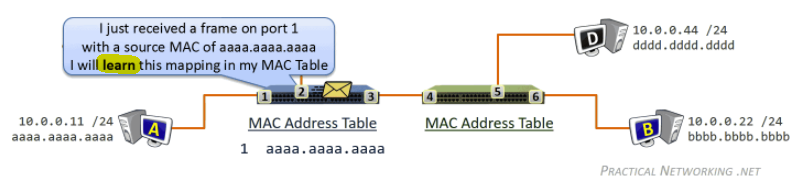

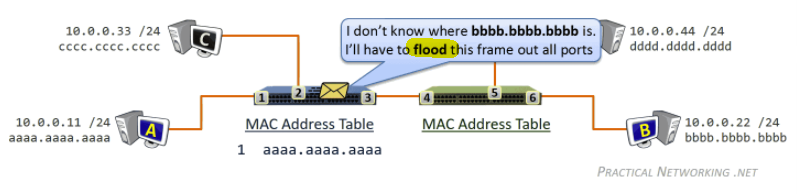

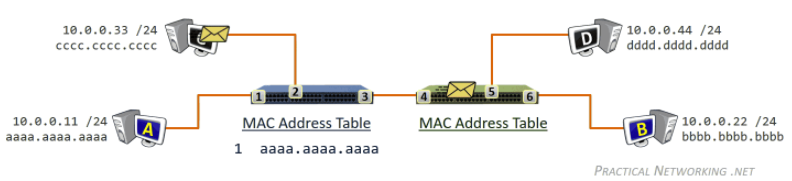

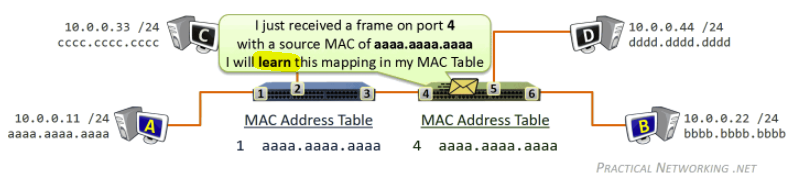

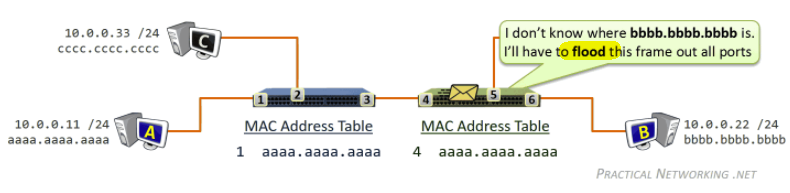

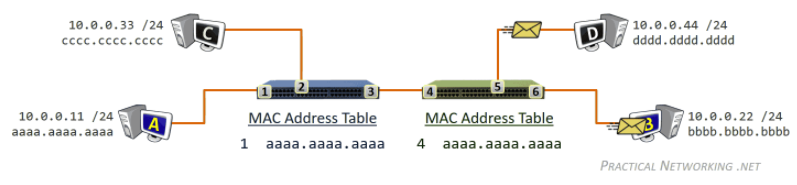

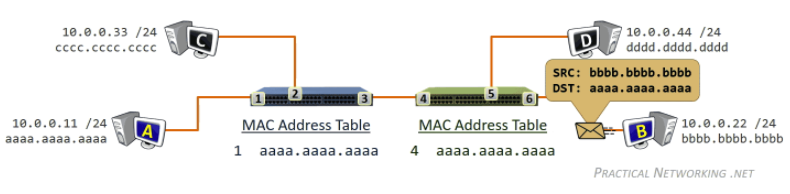

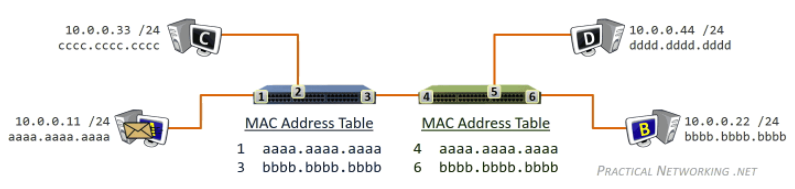

Link-Layer Switch:

A network switch is a computer networking device which connects various devices together on a single computer network. It may also be used to route information in the form of electronic data sent over networks.

So its functions are:

- store MAC address in a lookup table

- decide and forward data to the right destination, i.e. which computer

Additionally,

- Network switches operate at Layer 2 (Data Link Layer)

- Switches store MAC address in a lookup table

Last but not least:

ISP

End systems access the Internet through Internet Service Providers (ISPs), including residential ISPs such as local cable or telephone companies.

To be more detailed, it does:

- Provide a physical network connection to your device (e.g. wireless to your smart phone, or wired to your home router), supporting some basic data communication protocols (e.g. 4G, DSL, DOCSIS, Ethernet, …).

- Providing Internet Protocol (IP) connectivity, i.e. provide your device with an IP address, receive IP traffic from your device and route (send) it onto the Internet, and receive IP traffic from the Internet and route it to your device.

The Network Edge

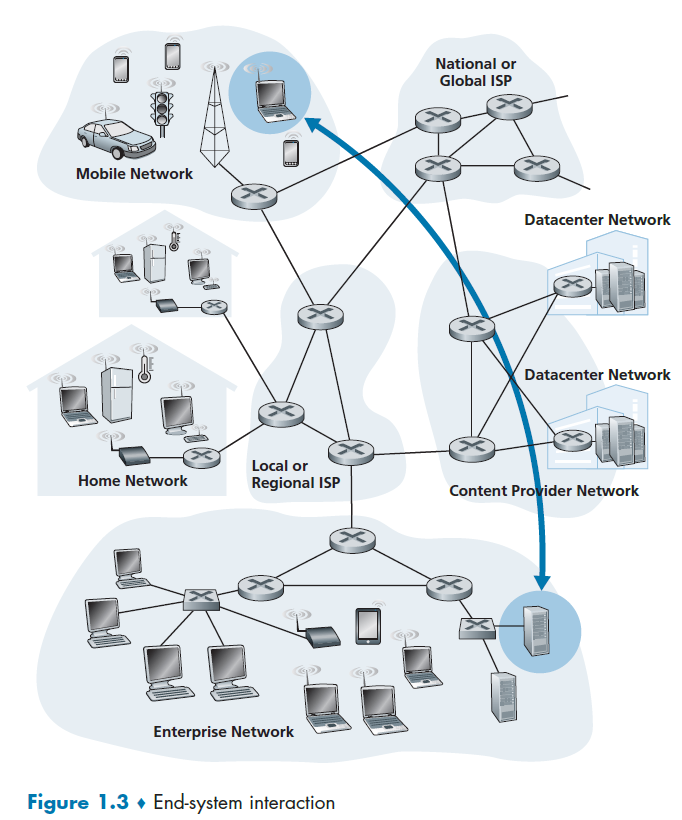

End-system:

Devices sitting at the edge of the Internet. End systems are also referred to as hosts because they host (that is, run) application programs such as a Web browser program, a Web server program, an e-mail client program, or an e-mail server program.

- Hosts are sometimes further divided into two categories: clients and servers. Today, most of the servers from which we receive search results, e-mail, Web pages, videos and mobile app content reside in large data centers.

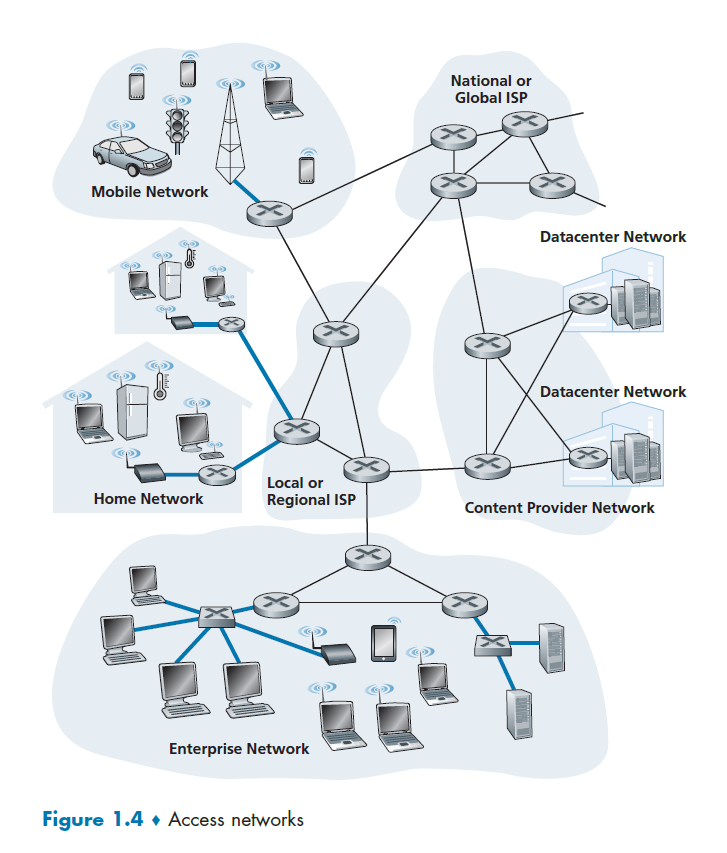

Access Networks

Access Network

The network that physically connects an end system to the first router (also known as the “edge router”)

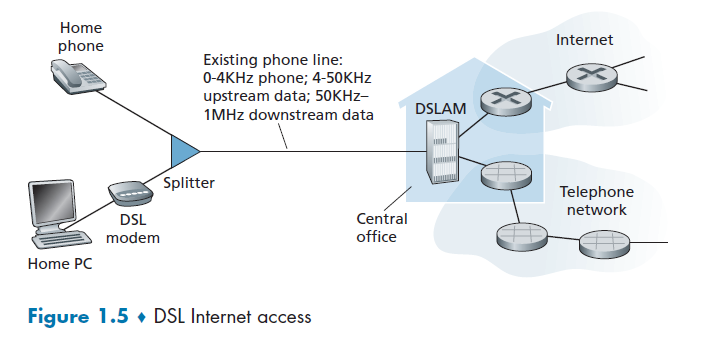

Digital Subscriber Line

A residence typically obtains DSL Internet access from the same local telephone company (telco) that provides its wired local phone access. Thus, when DSL is used, a customer’s telco is also its ISP.

Some components used here are:

DSL Modem

DSL modem uses the existing telephone line exchange data with a digital subscriber line access multiplexer (DSLAM). The home DSL modem takes digital data and translates it to high-frequency tones for transmission over telephone wires to the CO (central office).

This means that the residential telephone line carries both data and traditional telephone signals simultaneously, which are encoded at different frequencies:

- A high-speed downstream channel, in the 50 kHz to 1 MHz band

- A medium-speed upstream channel, in the 4 kHz to 50 kHz band

- An ordinary two-way telephone channel, in the 0 to 4 kHz band

Splitter

Splitter separates the data and telephone signals arriving to the home and forwards the data signal to the DSL modem

DSLAM

DSLAM separates the data and phone signals from home and sends the data into the Internet.

Last but not least:

- this is installed particularly per user (if they have an existing telephone line).

- the DSL standards define multiple transmission rates, including downstream transmission rates of 24 Mbs and 52 Mbs, and upstream rates of 3.5 Mbps and 16 Mbps

Voice Encoding

Since essentially you want to convert a wave (your speech wave -> wave in voltage) to digital bits, you need:

- sample frequency

- number of samples

To decide the quality of your recorded voice. For example, if It captures speech in a range of 300 to 3.4 kHz, samples at 8000 samples/second with 8 bits per sample, then we have it resulting in 64 kbps of voice encoding.

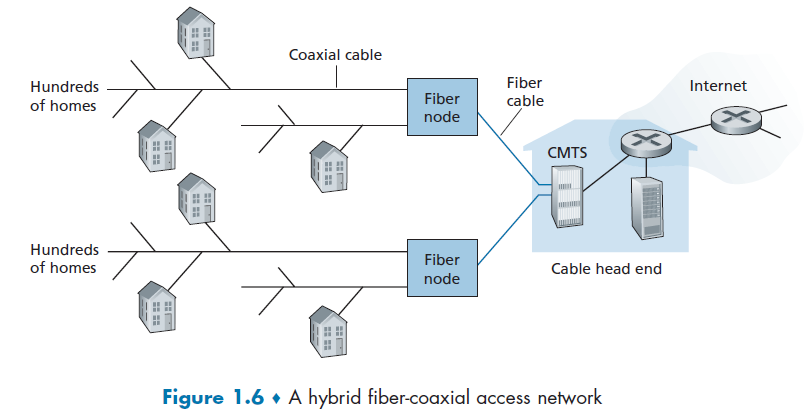

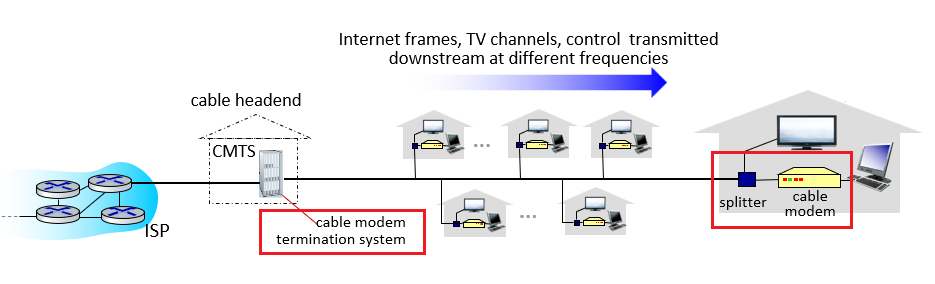

Cable Internet Access

Cable Internet access makes use of the cable television company’s existing cable television infrastructure. Therefore, A residence obtains cable Internet access from the same company that provides its cable television.

some important components here are defined below

HFS

Because both fiber and coaxial cable are employed in this system, it is often referred to as hybrid fiber coax (HFC).

Cable Modems

Cable internet access requires special modems, called cable modems. As with a DSL modem, the cable modem is typically an external device and connects to the home PC through an Ethernet port.

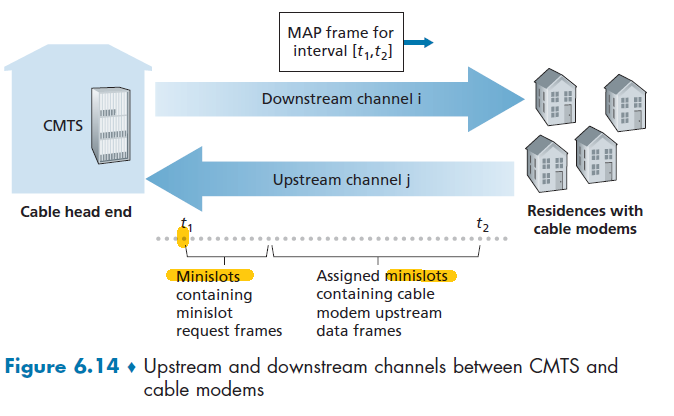

CMTS

At the cable head end, the cable modem termination system (CMTS) serves a similar function as the DSL network’s DSLAM—turning the analog signal sent from the cable modems in many downstream homes back into digital format.

However, the larger difference here is that:

- it is a shared broadcast medium. In particular, every packet sent by the head end travels downstream on every link to every home and every packet sent by a home travels on the upstream channel to the head end. For this reason, download and upload speed are sometimes slowed due to simultaneous uses.

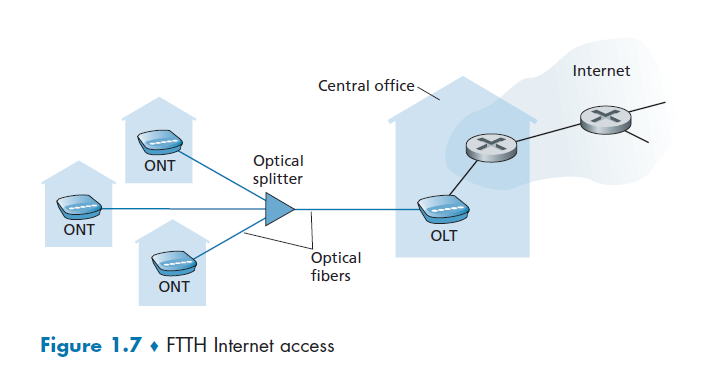

Fiber to Home

Fiber to the home (FTTH). As the name suggests, provides an optical fiber path from the CO directly to the home.

- in the DSL case, telephone line was used

- in the cable internet access case, coaxial cables were shared

where we see that

- it is split into individual customer-specific fibers until the fiber gets relatively close to the homes, but they are all fiber optics

There are two competing optical-distribution network architectures: active optical networks (AONs) and passive optical networks (PONs). AON is essentially switched Ethernet, which is discussed in Chapter 6.

PON, which is used in Verizon’s FiOS service. Figure 1.7 shows FTTH using the PON distribution architecture.

Optical Splitter

The splitter combines a number of homes (typically less than 100, as compared to the cable internet access, which typically has thousands) onto a single, shared optical fiber, which connects to an optical line terminator (OLT) in the telco’s CO

Optical Line Terminator

OLT, providing conversion between optical and electrical signals, connects to the Internet via a telco router.

Optical Network Terminator

At home, users connect a home router (typically a wireless router) to the ONT and access the Internet via this home router. This basically substitutes the role of modems in previous examples.

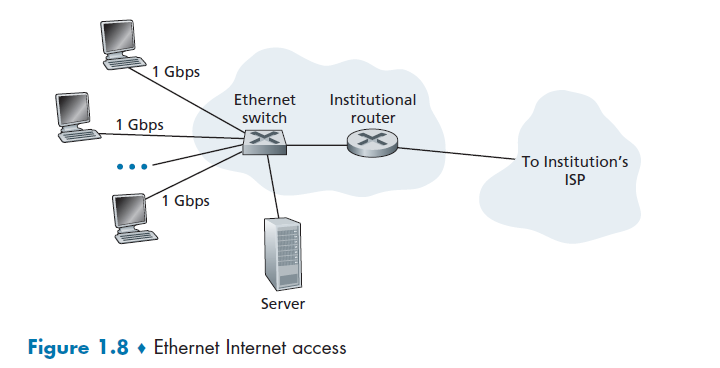

Ethernet and WiFi

Ethernet Switch

Ethernet users use twisted-pair copper wire to connect to an Ethernet switch, and the Ethernet switch, or a network of such interconnected switches, is then in turn connected into the larger Internet.

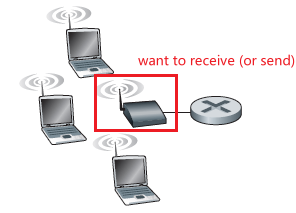

In a wireless LAN setting, wireless users transmit/receive packets to/from an access point that is connected into the enterprise’s network (most likely using wired Ethernet), which in turn is connected to the wired Internet.

Access Point

A networking hardware device that allows other Wi-Fi devices to connect to an existing wired network. Essentially access point (base station) is just a sub-device within the LAN providing another location for devices to connect on the network.

- therefore, access point can create WLANs (Wireless Local Area Networks) while being connected to routers/switches and its functionality is limited to this only. This can be done by routers as well, e.g. embedded in a wireless router, hence you don’t require a separate AP.

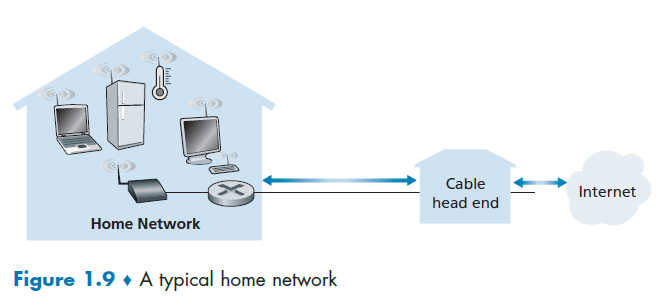

Many homes combine broadband residential access (that is, cable modems or DSL) with these inexpensive wireless LAN technologies. In Figure 1.9 shows a typical home network.

where here:

- base station (the wireless access point), which communicates with the wireless PC and other wireless devices in the home

- home router that connects the wireless access point, and any other wired home devices, to the Internet

Network Core

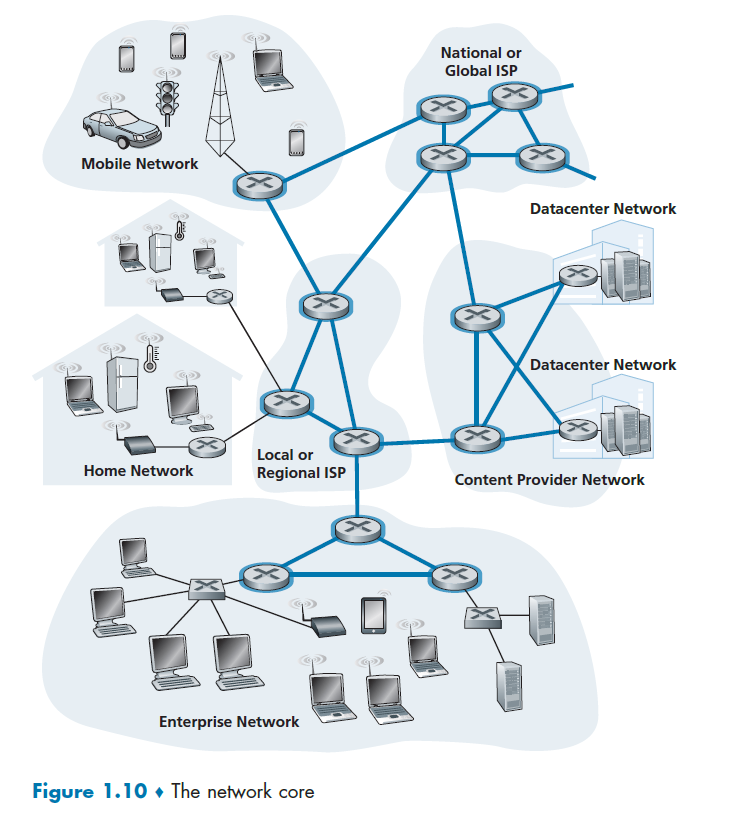

We have covered the edges used in our network. Figure 1.10 highlights the network core with thick, shaded lines.

Performance (Delay, Loss, Throughput)

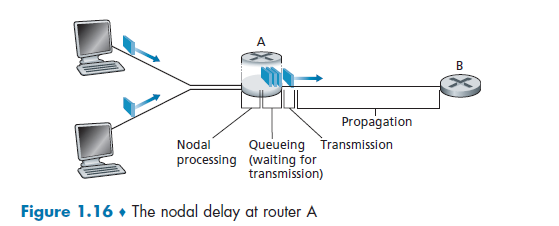

The idea is to get good performance out of switches without guarantee (of delivery). As a packet travels from one node (host or router) to the subsequent node (host or router) along this path, the packet suffers from several types of delays at each node along the path.

First, we need to know how packet loss and delay occurs.

So we get:

- when the packet arrives at router A from the upstream node, router A examines the packet’s header to determine the appropriate outbound link for the packet.

- Then the packet is directed to the queue that precedes the link to router B

- then the packet has to wait on a link until there is no other packet currently being transmitted on the link

- then, it needs to push the bits of the packet onto the link

- finally, the electricity (voltage representing those bits) needs to travel towards B

- at B, we repeat the same step from 1-6

Therefore, the most important delays are

\[d_{\text{total}}=d_{\text{proc}}+d_{\text{queue}}+d_{\text{trans}}+d_{\text{prop}}\]where:

-

$d_{\text{proc}}$ is process delay, required to examine the packet’s header and determine where to direct.

- other usage include time need to check bit errors, etc.

-

$d_{\text{queue}}$ waits to be transmitted onto the link.

- Packet Queue: in general, a switch/router will have a packet queue/buffer, since it could only send one packet at a time.

-

$d_{\text{trans}}$ the amount of time required to push (that is, transmit) all of the packet’s bits into the link. Therefore, it depends on the size of information and the bandwidth

-

this can be computed by:

\[\text{transmission delay} = \frac{L}{R}\]where the length/size of the packet is $L$ bits, and the bandwidth/transmission rate of the route is $R$ bits/sec.

-

-

$d_{\text{prop}}$ time taken to transmit the data over the medium. This then depends on the distance, medium etc.

- e.g. under sea cable would have a larger $d_{\text{prop}}$, but for data centers, this would be small.

Note

- in reality, the routers keep a list of other connected router’s destination. However, the possibility of being congested in some of the router might not be recorded.

- One possible solution would be to measure latency yourself and then decide which route to send to (if you have control of it).

- Though there might be some fine changes of routes within a network, the link/route (i.e. weak tie) across networks will usually be stable.

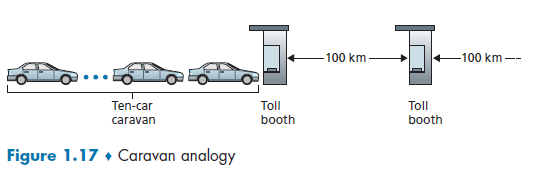

An analogy of the above process would be:

we know that:

- cars (=packet of 1 bit) contain some information

- highway segments between tollbooths (=links)

- tollbooth (=router)

Then, given that cars (=packet) propagates at speed of 100km/hour and each toll booth takes 12 seconds to service car ($d_{\text{trans}}$), we know that, for the last car (bit) to reach the destination

- each car needs to be processed/examined

- each car needs to wait for previous cars

- each car then is pushed on to the highway after 12 seconds of service ($d_{\text{trans}}$)

- each car then travels to the next tollbooth in 1 hour. $(d_{\text{prop}})$

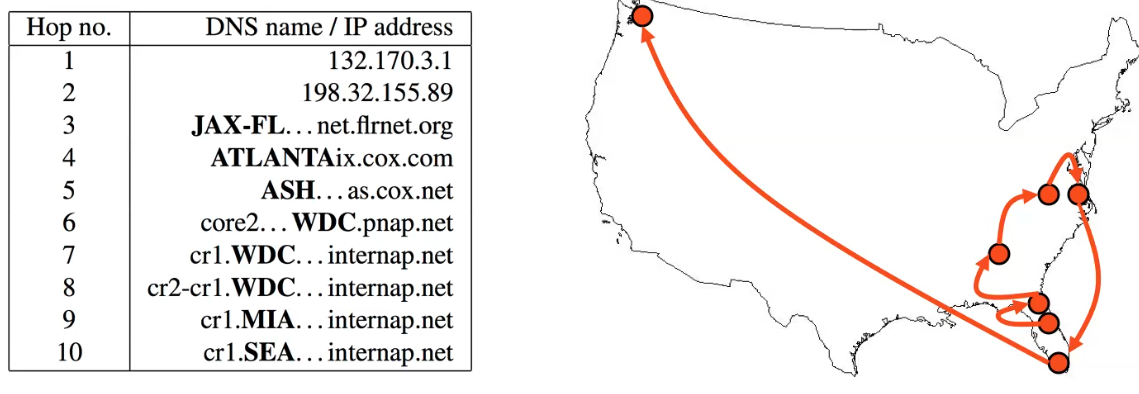

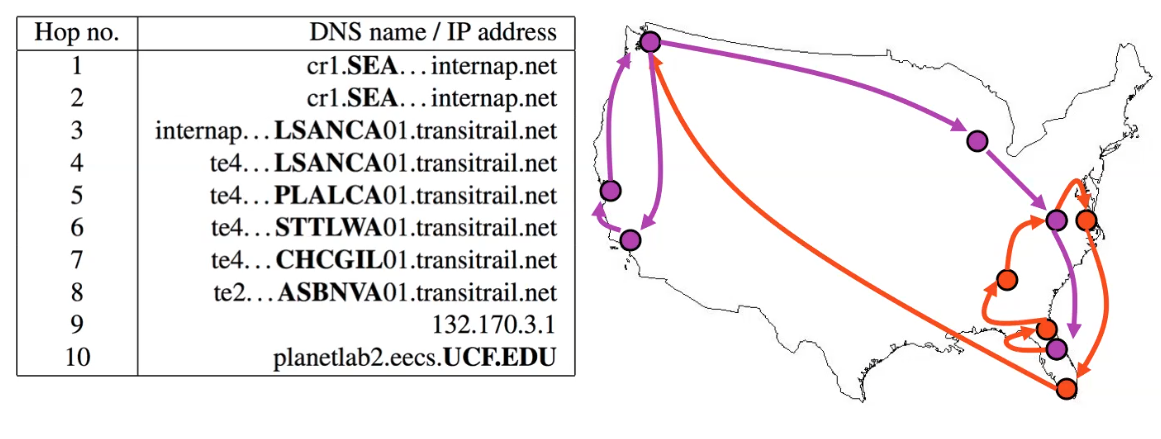

Traceroute

traceroute in Linux:

➜ traceroute nytimes.com

traceroute to nytimes.com (151.101.1.164), 30 hops max, 60 byte packets

1 JASONXYU-NB1.mshome.net (172.27.144.1) 0.347 ms 0.326 ms 0.270 ms

2 cc-wlan-1-vlan3572-1.net.columbia.edu (209.2.216.2) 3.706 ms 3.696 ms 3.681 ms

3 cc-core-1-x-cc-wlan-1.net.columbia.edu (128.59.255.77) 3.673 ms 3.670 ms 3.627 ms

4 nyser32-gw-1-x-cc-core-1.net.columbia.edu (128.59.255.6) 3.658 ms 3.646 ms 3.640 ms

5 199.109.104.13 (199.109.104.13) 5.095 ms 5.087 ms 5.070 ms

6 nyc32-55a1-nyc32-9208.cdn.nysernet.net (199.109.107.202) 5.159 ms 3.572 ms 3.559 ms

...

where basically this is delay in a roundtrip (forth and back).

- the number in the front

1,2,3,4...means the number of TTL defined. In the above, 6 samples are tried, each with TTL 1 up to 6 respectively. - basically this shows you the hops that is needed from your end point to the destination end point, as well as the total latency.

- sometimes there are

*, which indicates that the router did not respond (e.g. configured not to respond to you) cc-wlan-1-vlan3572-1.net.columbia.eduwould be the resolved DNS/host name

Note that:

traceroutein this case would have measured all the four delays for each hop.

TTL (time to live): the number of hops in which the message should reach the destination. This is to prevent the case that your packets will go in a cycle and never reaches the destination. If the TTL gets to zero, then an error message will be sent back to the source

- For example, if the packet doesn’t reach the destination in 20 hops, just give up the packet.

- this is also added in the network layer.

- detailed implementation of

traceroutecan be found in Examples of ICMP Usages- all packets will have this field (defined by the protocol)

For Example

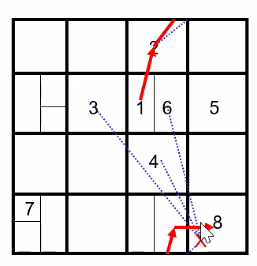

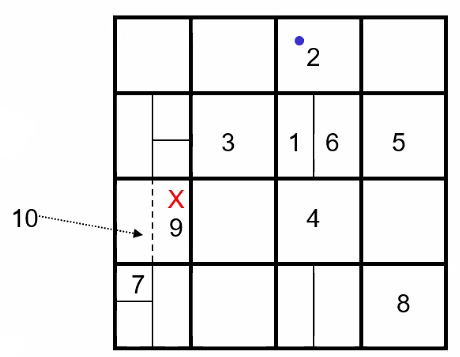

Consider the case when you want to debug the latency from Orlando to Seattle and vice versa.

Suppose you are at Orlando. Upon using traceroute, you see:

so that we have wasted some routes by going back to Florida.

Now, the problem is that routes on the internet are often asymmetric. So that going from Seattle to Orlando, we might have the path in purple:

Therefore, due to the asymmetry:

traceroutefrom a reversed direction might have a different result.

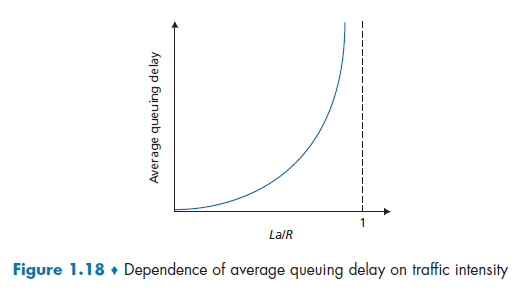

Queuing Delay and Packet Loss

Queuing delay is more complicated than others. Unlike the other delays, this varies from packet to packet.

Therefore, when characterizing queuing delay, one typically uses statistical measures, such as

- average queuing delay

- variance of queuing delay

- probability that the queuing delay exceeds some specified value

Suppose, for simplicity, that all packets consist of $L$ bits, and that on average you get $a$ packets per second. Suppose your transmission rate is $R$ (being pushed off the queue to the link):

Here we notice that:

-

due to variations in packet arrival rate, when the traffic intensity is close to $1$, there will be intervals of time when the arrival rate exceeds the transmission capacity.

- on the other hand, if packets arrive exactly periodically - that is, one packet arrives every $L/R$ seconds - then every packet will arrive at an empty queue and there will be no queuing delay.

-

$La/R$ is also called the traffic intensity.

-

this is assuming there is some distribution of incoming intensity instead of a uniform one, e.g. the Poisson distribution of incoming intensity. As a result, there will be bursts of influxes at times and the queue would build up. The resulting formula would be:

\[d \propto \frac{\rho}{1-\rho}\]where:

- $\rho \equiv La/R$ is the traffic incoming intensity.

However, no loss occurs if we assumes that our router can hold infinitely number of bits. Yet this is often not true.

Packet Loss

- When a packet can arrive to find a full queue, so that there is no place to store such a packet, a router will drop that packet; that is, the packet will be lost.

- In general, the fraction of lost packets increases as the traffic intensity increases

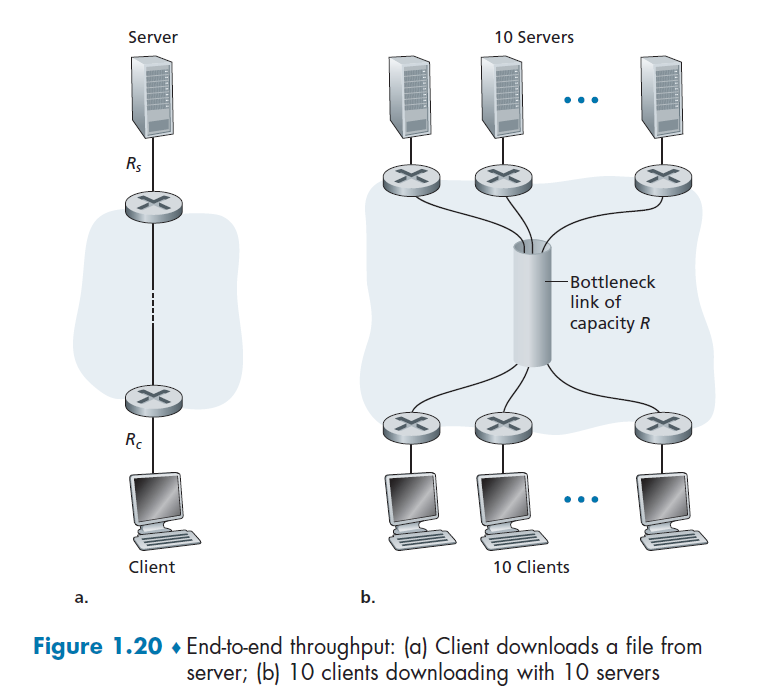

Throughput

Throughput is different against transmission rate/bandwidth, because it is macroscopic.

Throughput

Number of bits per second that a client receives data from a host.

- therefore, it is not able between links. It is more like end-to-end measurement.

Consider the following two cases:

For case a), suppose we have $R_c$ for the bandwidth between router and client, and $R_s$ for the server. Then:

\[\text{Throughput} = \min\{R_c,R_s\}\]due to bottlenecks.

For case b), let all 10 serves have the same $R_s = 2$ Mbps, and all clients have $R_c=1$ Mbps. Assume the shared link is fair to every server, and it has $R=5$ Mbps. Then we have:

\[\text{Throughput} = \min{\{R_s, R/10, R\}} = 5/10 = 500 \text{ Kbps}\]Protocol Layers and Their Service Models

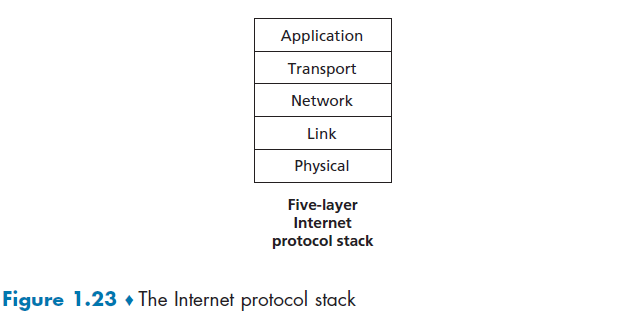

The entire core system of internet is very complex, since we need to organize numerous applications and protocols, various end systems and etc. To manage the complex system, we split them into manageable components (layers) and build them up.

Protocol Layering

The idea is to separating a big task into smaller tasks, and a combination of those small stacks become the protocol stack. This is needed since it needs to go though mediums such as routers, ISP, etc.

Consider the example of sending text massages from me to mom, then some challenges we need to overcome would be:

- security

- data integrity

- routing

- etc.

network designers organize protocols (tasks) - and the network hardware and software that implement the protocols - in layers. When taken together, the protocols of the various layers are called the protocol stack.

where:

- Application Layer: sending data to correct application/software

- Transport: Recovery of lost packets, congestion control, rate control

- Network: Sending packets in the right destination/direction

- Link/MAC: Getting information correctly between 2 physically connected points

- Physical: physical/science needed to send info between 2 physically connected points (i.e. how to physically transmit bit information)

Note

- in each layer, there is some protocols defining what each information received means, and what it should do next.

- in each layer, it assumes the other layer is doing their stuff correctly

Advantage

- Modularity = easier to maintain and update

Disadvantage

- One layer may duplicate lower-layer functionality. For example, many protocol stacks provide error recovery on both a per-link basis and an end-to-end basis

Analogy: Post Office

Consider the example you trying to send a recorded TV show to your friend.

Application: received the recorded disk, knows to use the TV/laptop to play it.

Transport: (e.g. error control) your friend telling you that something went wrong. e.g. you sent me the wrong episode.

Other stuff are all dealt by the post office.

Application Layer

Parsing/forming the packet in the application. So this is application specific, and only implemented in the end points as well.

Some example protocols at this level are:

- HTTP protocol (which provides for Web document request and transfer)

- SMTP (which provides for the transfer of e-mail messages)

- FTP (which provides for the transfer of files between two end systems).

Packets of information on this level will be referred to as a message.

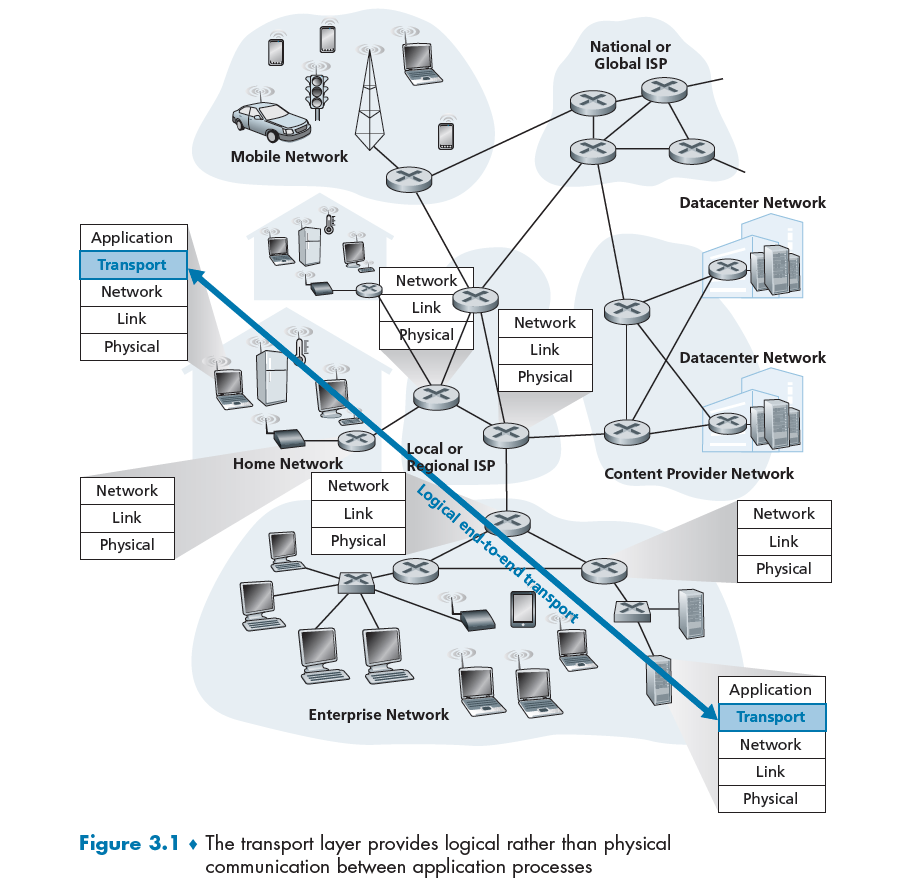

Transport Layer

This is only implemented at end points, and adds information such as your device tells the source to repeat the information if you didn’t “hear it” clearly.

In the Internet, there are two transport protocols:

- TCP (guaranteed delivery of application-layer messages)

- UDP

Some functionalities here include:

- congestion control by having source throttles its transmission rate when the network is congested (TCP).

- breaks long messages into shorter segments

Packets of information on this level will be referred to as a segment.

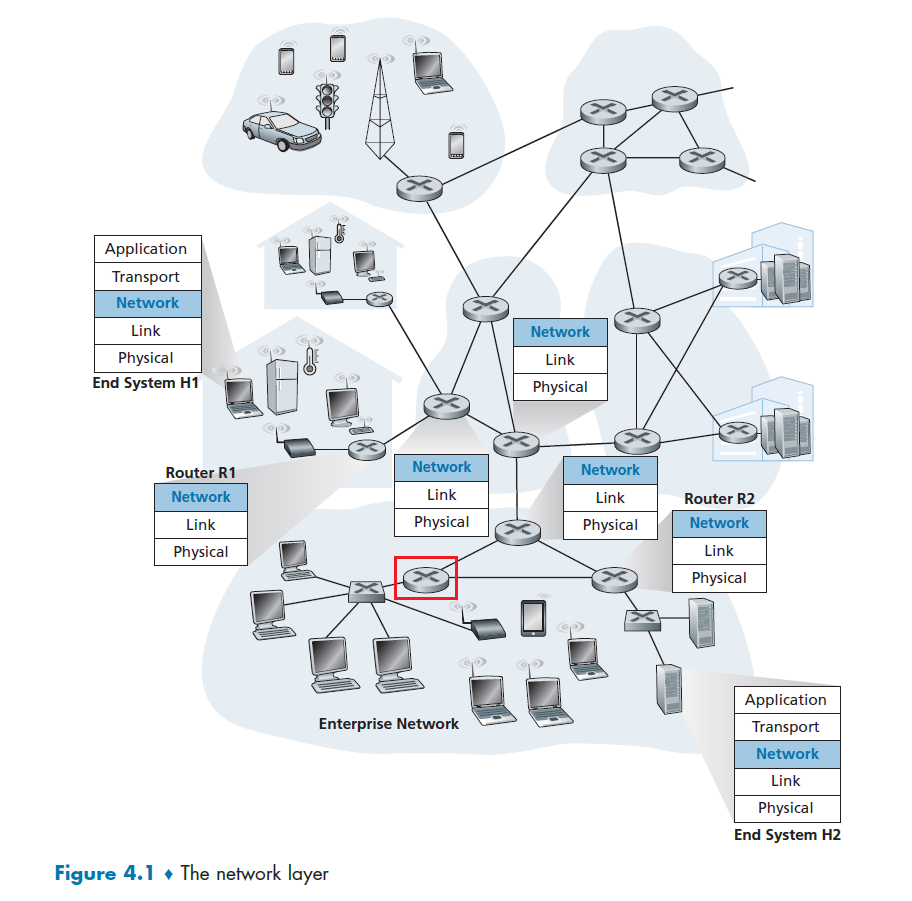

Network Layer

Built upon link layer, assuming we can get a frame across two end points (via lower layers), we need to now consider where to send it. So this layer receives a frame and figures out the next hop.

- moving network-layer packets known as datagrams from one host to another.

The upper level Transport layer passes a segment (packet of information) and a destination address to the network layer (this), so we have the:

- IP protocol (which defines the fields in the datagram as well as how the end systems and routers act on these fields)

- or other routing protocols that determine the routes that datagrams take between sources and destinations

At this level, we say that data sent are datagram/packets.

Link Layer

Sending a frame, i.e. a collection of bits, over the physical layer.

Examples of link-layer protocols include:

- Ethernet

- WiFi

- cable access network DOCSIS protocol

As datagrams typically need to traverse several links to travel from source to destination, a datagram may be handled by different link-layer protocols at different links along its route.

Some link-layer protocols provide reliable delivery, from transmitting node, over one link, to receiving node, which has nothing to do with the reliability of TCP, for example, which guarantees at the application level.

Therefore, this also have additional information added to the packet.

Physical Layer

Having a medium, e.g. a copper wire, to transmit bit information across 2 physically connected points. This is the only and final place where transmission of data happened. All the above protocols are just parsing information and deciding what to do next.

Protocols at this level deal with how bits are moved between the two connected points:

- Ethernet has many physical-layer protocols: one for twisted-pair copper wire, another for coaxial cable, another for fiber, and so on.

Network Interface Card

Physical layer and data link layers are responsible for handling communication over a specific link, they are typically implemented in a network interface card (for example, Ethernet or WiFi interface cards) associated with a given link.

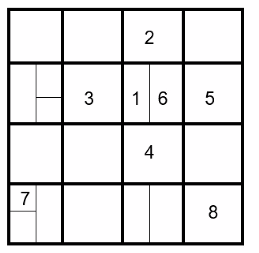

Encapsulation

where:

-

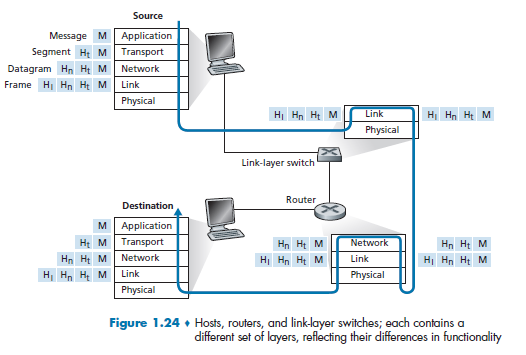

Figure 1.24 shows the physical path that data takes

- we knew that some layers, e.g. Transport and Application, are only implemented at the end points

- what physically connects the two is the edge across physical layers. So in the end, this is the only place where data is actually transmitted.

Note

- that hosts implement all five layers, and must be identical. As otherwise a message from application of user $A$ cannot be understood by the architecture in the computer of $B$.

What actually happens when you send (origin being $A$), e.g. an email would be:

- Email application start with Application Layer forming the message, and ask the Transport Layer to deal with it

- Transport layer then takes the big package and split them into packets (if needed due to large data), and add appends additional information (so-called transport-layer header information) will be used by the receiver-side transport layer

- e.g. ensuring there is destination/source IP, destination/source MAC, packet sequence id, etc. Then, those packets are passed to Network Layer.

- The transport-layer segment thus encapsulates the application-layer message, and pass down to Network Layer

- Network Layer then appends network-layer header information to the segment, such as source and destination end system addresses, creating a network-layer datagram

- figures out where is the next hop, e.g. in the figure, which router/link-layer switch to send to.

- then pass to Link Layer

- Link Layer will add its own link-layer header information and create a link-layer frame, and pass to physical layer

- Physical layer sends the bits to the next hop, a switch in the figure

Now, at the switch:

- receives data from the Physical Layer, parse the data and move up

- parse the data in the Link Layer, recognize the Ethernet addresses and what to send to the next router, so pass down again to physical layer

- Physical Layer then send the frame by bits.

Note

- notice the need to go up and down the stack, if we need to “forward” the data to the next device.

Eventually, repeating the process will let the data reach the destination.

Protocol Stack Variants

Sometimes additional layers could be added in reality, such as:

where here we added a security layer basically.

Note

- This only works if both end-points have the same added layer. Otherwise, it would not be able to process the information correctly.

The more popular ones now is the OSI model:

where:

- this is the most common implemented model now

Chapter 2 Application Layer

In this chapter, we study the conceptual and implementation aspects of network applications.

- for example, we will learn how to create network applications via socket API

Recall that some common network apps include:

- web

- P2P file sharing

- streaming stored video (occupies the highest internet volume)

- etc.

Aim for Application Layer:

- network applications allows you to communicate between end points of different OS! So that you don’t need to write software for network-core devices (abstracted away), for example, a switch.

- i.e. you don’t need to know anything about the network layer when writing code in the application layer

Principles of Network Applications

At the core of network application development is writing programs that run on different end systems and communicate with each other over the network.

- For example, web application there are two distinct programs that communicate with each other: the browser program running in the user’s host (desktop, laptop, tablet, smartphone, and so on); and the Web server program running in the Web server host.

The key idea here is that your don't write and don’t need to write application programs on network-core devices (e.g. switches), as they never include application layer stuff and are already “taken care” of.

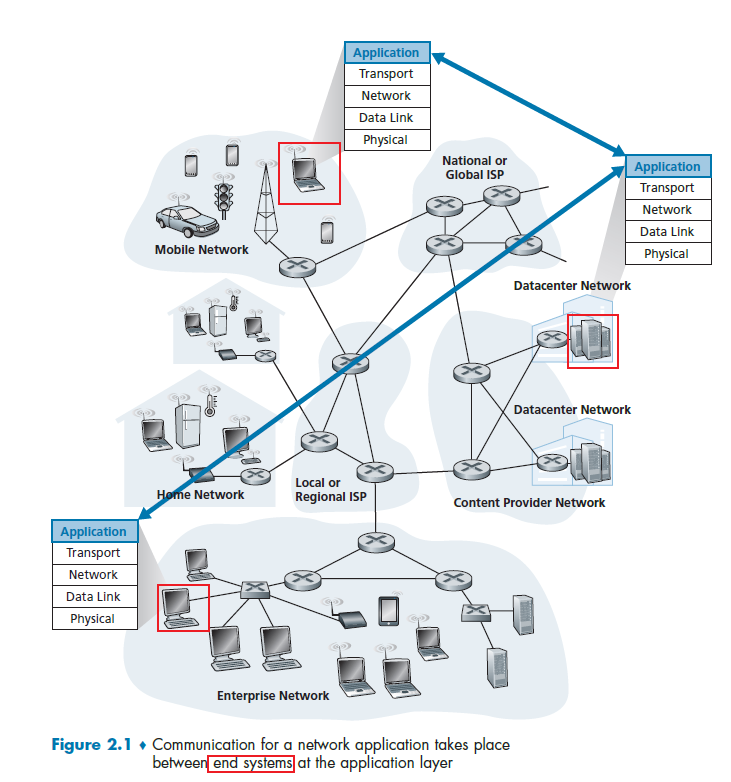

- This basic design- namely, confining application software to the end systems - as shown in Figure 2.1, has facilitated the rapid development and deployment of a vast array of network applications

Application Architecture

Again, the key idea is that, from the application developer’s perspective, the network architecture is fixed and provides a specific set of services to applications (i.e. API’s for them to work). The application architecture, on the other hand, is designed by the application developer and dictates how the application is structured over the various end systems.

Therefore, at this level, we just need to know how end-to-end devices should communicate to each other without worrying about what happens in between.

In modern application architecture (i.e. how end devices talk to other end devices), there are two predominant architecture paradigms:

- client-server architecture

- peer-to-peer (P2P) architecture.

Client-Server Architecture

A common example of network application would be a web browser which uses the following architecture

where basically you have:

- a server

- associated with some address (IP address), (e.g. whatever IP resolved from www.columbia.edu)

- always-on host

- data centers for scaling

- clients

- communicate with server. (the client initiates a connection in this case)

- but clients do not directly communicate with each other

- may have dynamic IP addresses

- essentially we move and connects to different routers, cell towers, etc.

- therefore, packets need to include the client’s IP so that the server can find it

- may be intermittently connected

- communicate with server. (the client initiates a connection in this case)

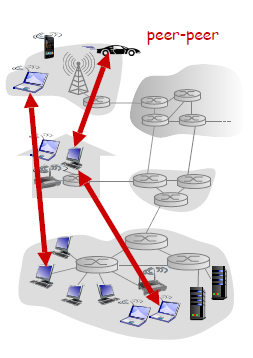

P2P Architecture

Now, there is minimal (or no) reliance on dedicated servers in data centers. Instead, we have application exploits direct communication between pairs of intermittently connected hosts, called peers.

- Basically a client, when joined this kind of network, would be that clients could be a server as well.

the motivation is so that

- resources are distributed between peers/nodes, instead of having a central server holding all the data

- so a peer connects directly with another peer

where each node/device here is:

- no always-on server, since it could be clients

- arbitrary end systems directly communicate

- both servers and clients

- peers request service from other peers, provide service in return to other peers

- self scalability – new peers bring new service capacity, as well as new service demands

- peers are intermittently connected and change IP addresses

- complex management

One example of popular P2P application is the file-sharing application BitTorrent.

For Example

You can think of the following: In a P2P file-sharing system, a file is transferred from a process in one peer to a process in another peer.

Then, this is equivalent of having:

- With P2P file sharing, the peer that is downloading the file is labeled as the client

- the peer that is uploading the file is labeled as the server.

Client vs Server

- In the context of a communication session between a pair of processes, the process that initiates the communication (that is, initially contacts the other process at the beginning of the session) is labeled as the client. The process that waits to be contacted to begin the session is the server.

Processes Communicating

In any application architecture talked about above, we basically have processes on different end points communicating over the network.

- when two processes are on the same device, inter-process communication calls (defined by OS) are used.

- when two processes are on different devices, e.g. a client and a server, then they communicate by exchanging network messages.

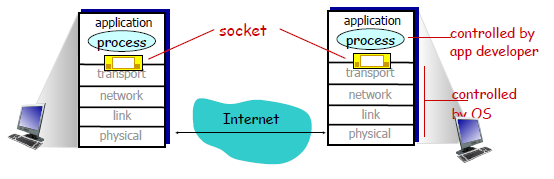

Interface between Process and Computer Network

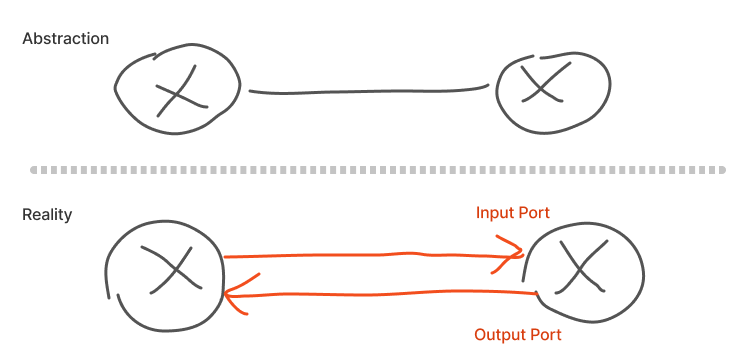

So what is the abstraction of the Transport Layer when you are programming an application?

A process sends messages into, and receives messages from, the network through a software interface called a socket.

- A socket is also referred to the Application Programming Interface (API) between the application and the network

where:

- the Internet in between is the network-core devices, including routes and switches, which is abstracted away from you

The only control that the application developer has on the transport layer is (quite small)

- the choice of transport protocol

- perhaps the ability to fix a few transport-layer parameters such as maximum buffer and maximum segment sizes

Addressing Processes

To receive messages, application processes must have some identifier

To identify the receiving process, two pieces of information need to be specified:

- an IP address: the address of the host

- a port number: an identifier that specifies the receiving process in the destination host.

- identifier includes both IP address and port numbers associated with process on host.

- example port numbers HTTP server: 80 (being also a kind of permanent address)

- in reality, it is common that many different applications ends using

80, which is somewhat now like a directory management server, that tells the application which port to go

Transport Services Available to Applications

On the application layer, we might define each message’s:

- types of messages exchanged, e.g., request, response

- message syntax: what fields in messages & how fields are delineated

-

message semantics: parsing a received message

-

rules for when and how processes send & respond to messages

- open protocols:

- defined in RFCs

- allows for interoperability and discussion/emendation

- e.g., HTTP, SMTP

Recall that the socket is our current interface for Transport-Layer protocol. Since it is an API, we choose the:

- which transport protocol to use

- some parameters available for that protocol

To decide which protocol to use, we can classify each protocol along four dimesions:

- reliable data transfer:

- if a protocol can guarantee that the data sent by one end of the application is delivered correctly and completely to the other end of the application

- so that the sending process can just pass its data into the socket and know with complete confidence that the data will arrive without errors at the receiving process

- on the other hand, you might need to have loss-tolerant applications

- throughput: the rate at which the sending process can deliver bits to the receiving process

- because network bandwidth is shared, available throughput can fluctuate with time

- natural service that a transport-layer protocol could provide, namely, guaranteed available throughput at some specified rate. So that the application could request a guaranteed throughput of $r$ bits/sec

- Applications that have throughput requirements are said to be bandwidth-sensitive applications. Many current multimedia applications are bandwidth sensitive due to encoding.

- timing

- timing guarantees can come in many shapes and forms. For example, every bit that the sender pumps into the socket arrives at the receiver’s socket no more than $100$ msec later (i.e. delay)

- for real-time applications, such as interactive games, this is very important

- security

- a transport protocol can encrypt all data transmitted by the sending process, and in the receiving host, the transport-layer protocol can decrypt the data before delivering the data to the receiving process

Recall that

- The instantaneous throughput at any instant of time is the rate (in bits/sec) at which Host B is receiving the file.

- instantaneous throughput during downloads = download speed

- If the file consists of $F$ bits and the transfer takes $T$ seconds for Host B to receive all F bits, then the average throughput of the file transfer is $F/T$ bits/sec

Note

- in general, the Applications themselves will calculate the delay and throughput of the current network by actively observing it. And in reality, the network usually does not guarantee anything. Therefore, applications needs to have some flexibility in those.

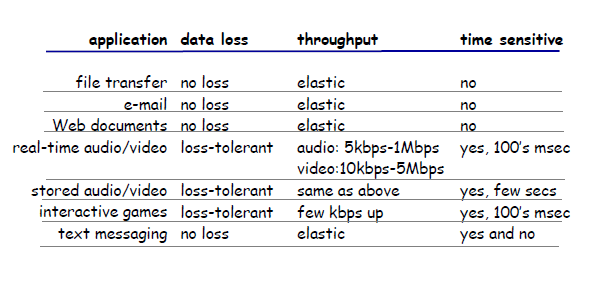

For Example

Transport Services Provided by the Internet

Up until this point, we have been considering transport services that a computer network could provide in general. Let’s now get more specific and examine the type of transport services provided by the Internet.

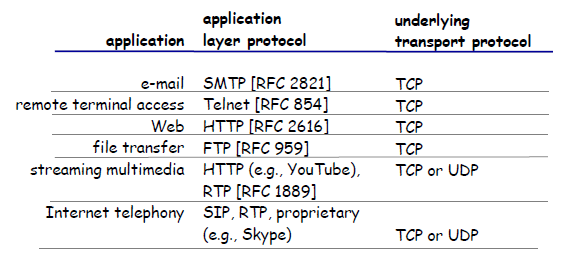

Some common Transport Layer Protocols would be:

- TCP: a connection-oriented service and a reliable data transfer service.

- UDP: simple and straight forward implementation

TCP Services

When an application invokes TCP as its transport protocol, you get (for free):

- Connection-oriented service: TCP has the client and server exchange transport layer control information with each other before the application-level messages begin to flow (i.e. the handshaking procedure)

- Then the connection is established, the connection is a full-duplex connection in that the two processes can send messages to each other over the connection at the same time.

- When the application finishes sending messages, it must tear down the connection

- this provides the basis for flow control and congestion control

- Reliable Data Transfer service: rely on TCP to deliver all data sent without error and in the proper order.

(Additionally, TCP also includes a congestion-control mechanism, a service for the general welfare of the Internet rather than for the direct benefit of the communicating processes.)

- throttles a sending process (client or server) when the network is congested between sender and receiver

UDP Services

UDP is a no-frills, lightweight transport protocol, providing minimal services. UDP is connectionless, so there is no handshaking before the two processes start to communicate. Therefore:

- unreliable data transfer service, so messages that do arrive at the receiving process may arrive out of order

TCP vs UDP

Below summarizes features of the two protocols.

| TCP | UDP |

|---|---|

| reliable transport between sending and receiving process | Nothing, but low overhead. |

| flow control: sender won’t overwhelm receiver | |

| congestion control: throttle sender when network overloaded | |

| connection-oriented: setup required between client and server processes (this is required for flow control or congestion control) | |

| does not provide: timing, minimum throughput guarantee, security | does not provide: reliable or in order delivery, flow control, congestion control, timing, throughput guarantee, security, or connection setup |

Note

- TCP itself does not provide security. In reality, it is usually the TLS = Transport Layer Security that provides encryption.

- In UDP, though packets might arrive out of order, you (application layer) can check and request the sender to sending again the missing packet.

So UDP might be advantageous when the data to transfer is small (e.g. DNS), or when you really need a small overhead for transfer:

Securing TCP

SSL/TLS

- provides encrypted TCP connection

- data integrity

- end-point authentication

- SSL is older, deprecated

TLS is at app layer

- apps use SSL libraries, that “talk” to TCP

- Client/server negotiate use of TLS

TLS socket API

- if you send a cleartext password into socket, it traverses Internet encrypted

Socket Programming

The goal is to learn how to build client/server applications that communicate using sockets.

Recall that there are two socket types for two transport services:

- UDP: unreliable datagram but low overhead

- TCP: reliable, byte stream-oriented

For Example

Application Example:

- client reads a line of characters (data) from its keyboard and sends data to server

- server receives the data and converts characters to uppercase

- server sends modified data to client

- client receives modified data and displays line on its screen

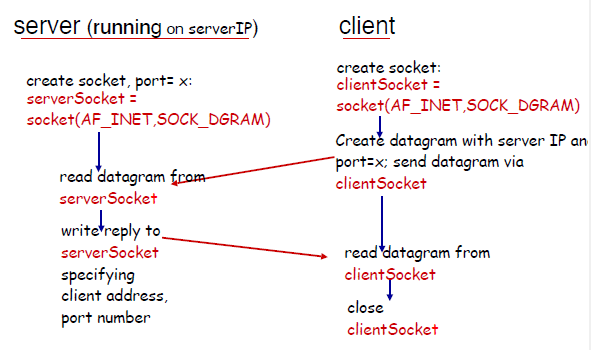

For UDP:

- recall that this is connectionless. Therefore, every packet needs to know its destination. As compared to TCP. which just needs to dump the packet in the established connection and you are done

- no handshaking before sending data

- sender explicitly attaches IP and port destination address for every packet

- transmitted data may be lost or received out-of-order

- (e.g. packet 1 sent on 10 MBPS link and packet 2 on 100MBPS, different routes)

- therefore, the application layer might need to anticipate and check this

In Python, you then get for the client:

from socket import *

serverName = ‘hostname’

serverPort = 12000

# creates socket

clientSocket = socket(AF_INET, SOCK_DGRAM)

message = raw_input(’Input lowercase sentence:’)

clientSocket.sendto(message.encode(), (serverName, serverPort)) # sends to a queue

# receive a message

modifiedMessage, serverAddress = clientSocket.recvfrom(2048)

print modifiedMessage.decode()

clientSocket.close()

- notice that

clientSocket.sendto(message.encode(), (serverName, serverPort))shows that we are specifying destination for every packet

Then for the server:

from socket import *

serverPort = 12000

# create socket

serverSocket = socket(AF_INET, SOCK_DGRAM)

serverSocket.bind(('', serverPort))

print (“The server is ready to receive”)

# listening and receiving

while True:

message, clientAddress = serverSocket.recvfrom(2048) # reads from a queue

modifiedMessage = message.decode().upper()

serverSocket.sendto(modifiedMessage.encode(), clientAddress)

- notice that there is only one socket

- since it is UDP, it also means that if the server if full in queue, the client will have no idea and still sends the packets (TCP would have notified the client)

UDP Takeaway

- the idea is that at every single time, there is only a one-way connection

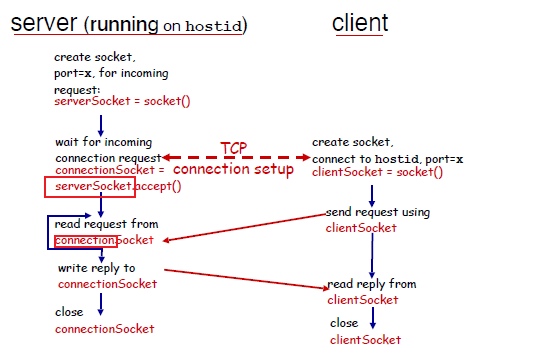

On the other hand, when we have a TCP protocol:

- client needs to be creating TCP socket, specifying IP address, port number of server process at socket creation

- when contacted by client, server TCP creates new socket for server process to communicate with that particular client

- i.e. you

forkthat socket - allows server to talk with multiple clients

- source port numbers used to distinguish clients (more in Chap 3)

- i.e. you

Therefore, the abstraction is:

where we notice that:

- on the server, when connection is established, you will get a listening

serverSocketand aconnectionSocketwhich you would use for sending/receiving

In the client side, you get:

from socket import *

serverName = ’servername’

serverPort = 12000

# creating socket

clientSocket = socket(AF_INET, SOCK_STREAM)

clientSocket.connect((serverName,serverPort)) # server destination specified at CREATION time

# sending/receiving message

sentence = raw_input(‘Input lowercase sentence:’)

clientSocket.send(sentence.encode()) # convert to INTERNET BYTE ORDER (inet_aton)

modifiedSentence = clientSocket.recv(1024)

print (‘From Server:’, modifiedSentence.decode()) # convert back to

clientSocket.close()

- as compared to UDP, which has

clientSocket = socket(AF_INET, SOCK_DGRAM)

The server then does:

from socket import *

serverPort = 12000

# create

serverSocket = socket(AF_INET,SOCK_STREAM)

# bind and listen

serverSocket.bind((‘’,serverPort))

serverSocket.listen(1)

print ‘The server is ready to receive’

# listenning/blocking

while True:

connectionSocket, addr = serverSocket.accept() # gets a new socket with fork

sentence = connectionSocket.recv(1024).decode() # convert to OS endianness

capitalizedSentence = sentence.upper()

connectionSocket.send(capitalizedSentence.encode())

connectionSocket.close() # done

so the extra things we need to do is:

- listen to the socket after

bind- the UDP does not need to

listen

- the UDP does not need to

- accept connection (and fork) to get a connection

- the UDP just needs to have a

serverSocket.recvfrom(2048)from the samebindsocket

- the UDP just needs to have a

Web and HTTP

Here we discuss two protocols that is common in application layer.

We assume that you know

-

how

htmlfile (“a markup language”) works with browsers, including how the images/videos/scripts works -

as well as how path names work

HTTP Overview

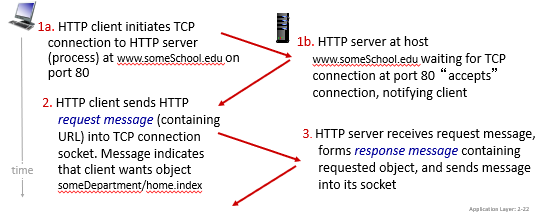

Because we need the browser to render the page correctly, so we need reliable data transfer. Hence, HTTP protocols chooses to use TCP, with port 80

Recall: how we implemented the TCP for sockets:

- client initiates TCP connection (creates socket) to server, port 80

- server accepts TCP connection from client

- HTTP messages (application-layer protocol messages) exchanged between browser (HTTP client) and Web server (HTTP server)

- TCP connection closed

Additionally, by DEFAULT, the protocol of HTTP is “stateless”

- yet we MADE IT stateful by using other tools such as Cookies

Disadvantage of Stateful Connections

- past history (state) must be maintained

- if server/client crashes, their views of “state” may be inconsistent, must be reconciled

- security issues!

HTTP Connections

In generally we had two types:

- Non-persistent HTTP: sending only ONE object (e.g. an image) over one connection

- then if your html has 3 images, 3

css, 1 html, then it needs 7 connections - connection terminates over each single message exchanges

- then if your html has 3 images, 3

- Persistent HTTP: multiple objects can be sent over a single TCP connection

- less overhead, better performances

- connection terminates after multiple exchanges

Non-persistent HTTP: example

Consider the case when you entered www.someSchool.edu/someDepartment/home.index

So basically this is very wasteful.

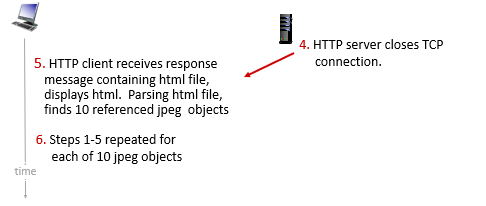

RTT (definition)

- time for a small packet to travel from client to server and back

Persistent HTTP

In this case:

- server leaves connection open after sending response

- the connection to the host, has nothing to do with your path such as

/index.html(remember it is just asocket)

- the connection to the host, has nothing to do with your path such as

- subsequent HTTP messages between same client/server sent over open connection

- here we have the request, i.e.

GET /index.html blablabla

- here we have the request, i.e.

- client sends requests as soon as it encounters a referenced object

- as little as one RTT for all the referenced objects (cutting response time in half)

- the initial connection for (e.g.)

index.htmlwill still take 2 RTT, but for all other (e.g.) 10 objects on thatindex.htmlpage, we just need $10 \times 1$ RTT.

- the initial connection for (e.g.)

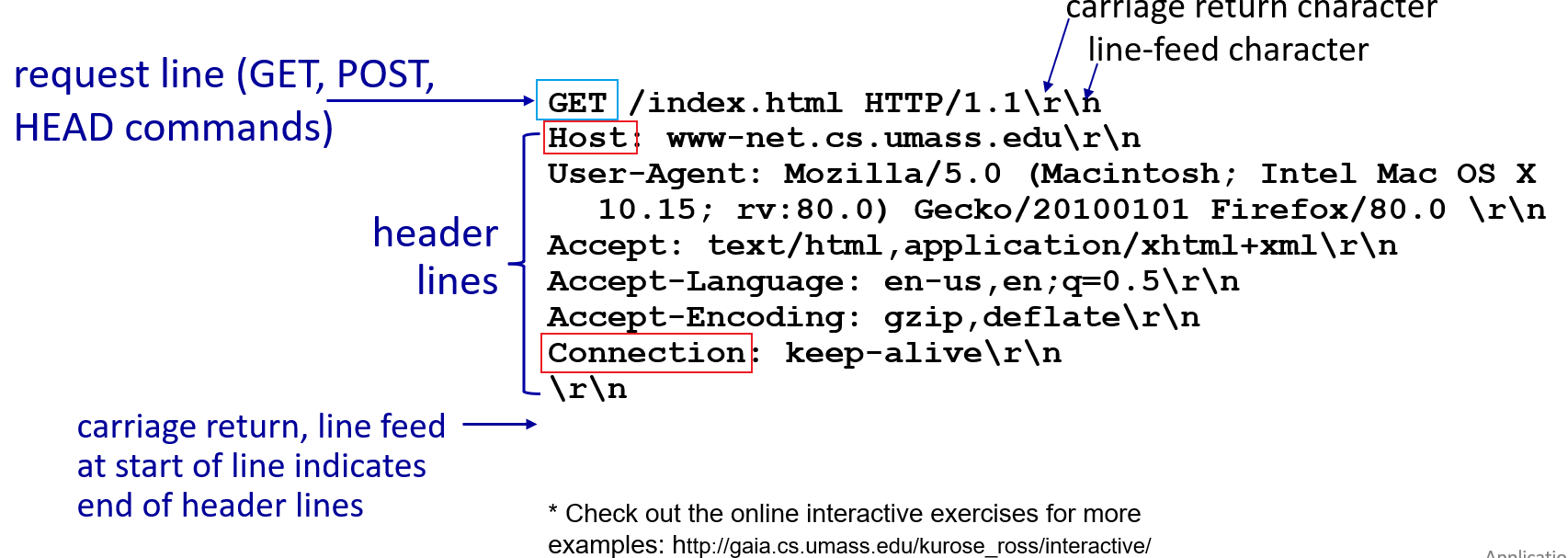

HTTP Message Format

HTTP Request Message

We have two types: request and response.

Each request message looks like

notice that:

- the connection goes to the

HOSTfield - in the connection, the

pathis queried - content is in ASCII

Moreover, remember that we can have user parameters specified with login?a=b&c=d etc.

- fundamentally this is agreed upon in the application side how to parse the stuff after

?

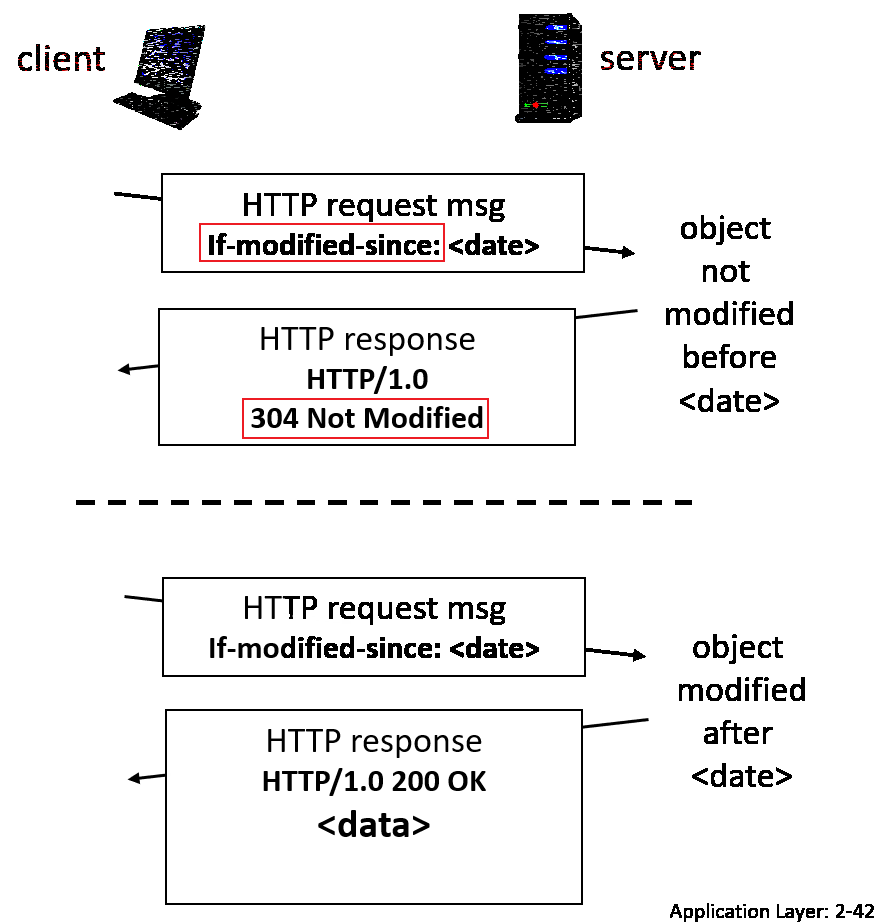

Conditional GET

Goal: don’t send object if cache has up-to-date cached version

where if you get 304, then no data is actually sent over.

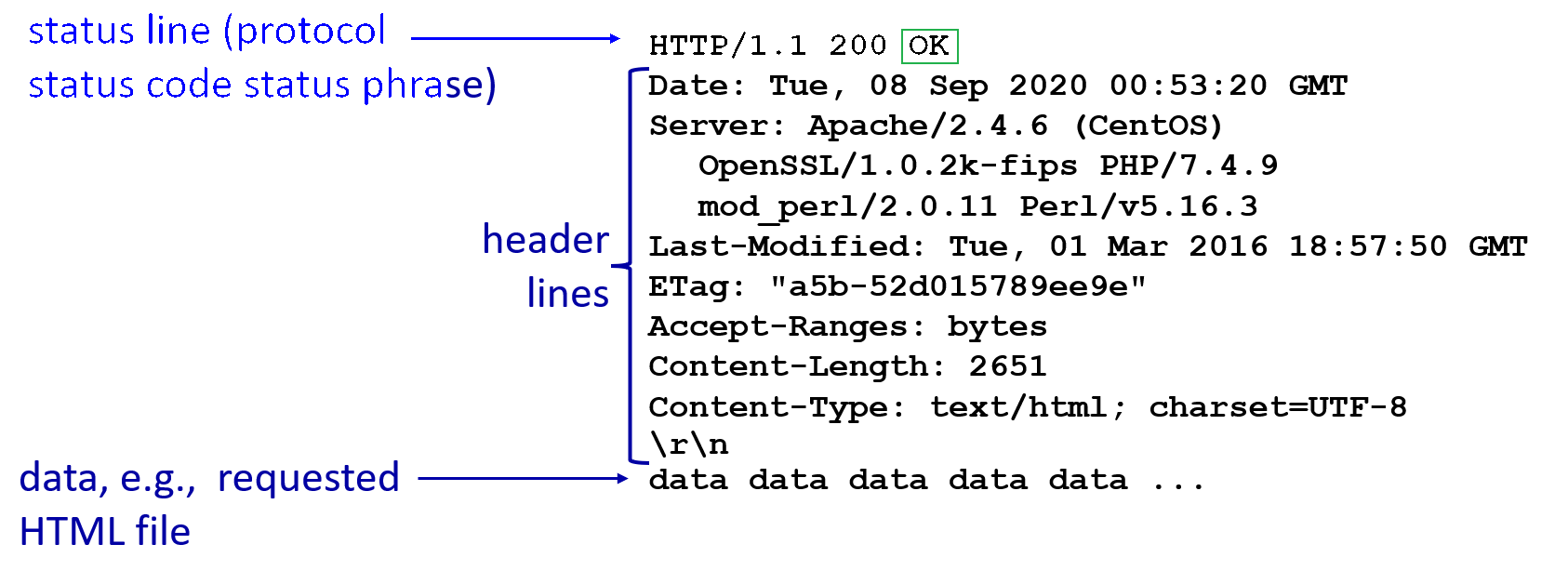

HTTP Response Message

An response message looks like:

where:

- notice the

Last-Modifiedfield. This is used later by the client browser, so that the next time it requests the same resource, it will send a “send if modified” version of a get request, with this date attached. - notice that

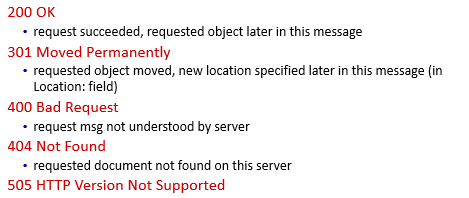

200 OKstatus code

Additionally, some other status codes:

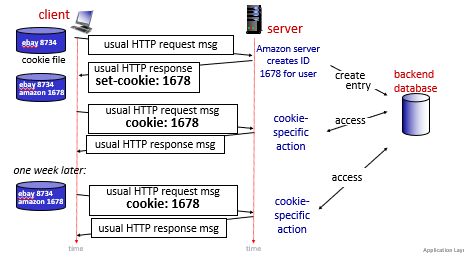

Maintaining State: Cookies

Recall that HTTP protocol is stateless, which means each request is independent of each other.

Then, to emulate a STATEFUL request, we use cookies.

Usually, we have four components interacting when using/managing cookies:

- cookie received from header line of HTTP response message

- cookie sent (from user’s browser) header line in next HTTP request message

- cookie file kept on user’s host, managed by user’s browser

- back-end database at Web site (used for managing what to do with those cookies)

For Example

where:

- the server creates cookies and

- subsequent HTTP requests to this site will contain the cookie ID value

- the above is an example of a persistent cookie

Note

- we have session specific cookies, and persistent cookies

- session specific cookies are erased after you close your session (e.g. browser window)

- persistent cookies has an expiration date, which expires after that but stays there after you close your session

- third party persistent cookies (tracking cookies) allow common identity (cookie value) to be tracked across multiple web sites

- These are usually used for online-advertising purposes and placed on a website through a script or tag. A third-party cookie is accessible on any website that loads the third-party server’s code.

- e.g. once you shopped on Amazon for (e.g.) a pair of brown shoes, it might create some third party cookie. Then future other websites might look at that and provide you relevant ads.

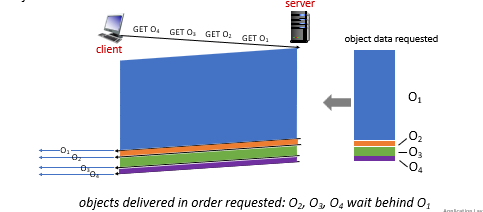

HTTP/2

Goal: Solve the head of the line issue:

- what if the first request is a huge file, and subsequent are small files? The first will block the other requests.

HTTP 1.1: introduced multiple, pipelined GETs over single TCP connection

- server responds in-order (FCFS: first-come-first-served scheduling) to

GETrequests in a pipe- e.g. if you requested for 10 images, they are responded/served in a FIFO

- therefore, may developers tend to still open multiple parallel connections to resolve this

However, the problem with that is:

-

with FCFS, small object may have to wait for transmission (head-of-line (HOL) blocking) behind large object(s)

-

loss recovery (retransmitting lost TCP segments) stalls object transmission

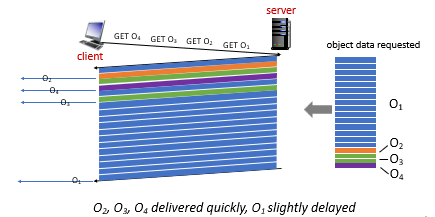

Aim of HTTP/2

One of the primary goals of HTTP/2 is to get rid of (or at least reduce the number of) parallel TCP connections for transporting a single Web page (as a resolution to Head-Of-Line blocking problem)

- TCP congestion control can also operate as intended

The HTTP/2 solution for HOL blocking is to break each message into small frames, and interleave the request and response messages on the same TCP connection.

- of course we need to then reassemble them on the other end. So this protocol has to be made clear on that.

Of course besides that, HTTP/2 also has the following features:

- Response Message Prioritization:

- the client can give a weight to each request for its priority. Numbers range from $1\to 256$. Then the server cans send first the frames for the responses with the highest priority.

- You can also states each message’s dependency on other messages by specifying the ID of the message on which it depends.

- Server Pushing: a server to send multiple responses for a single client request.

- for a single page, there is usually a lot of objects (e.g. images). Instead of waiting for the HTTP requests for these objects, the server can analyze the HTML page, identify the objects that are needed, and send them to the client before receiving explicit requests for these objects.

For Example:

In HTTP 1.1, this is the order of data sent:

For HTTP 2:

where basically you do a Round-Robin.

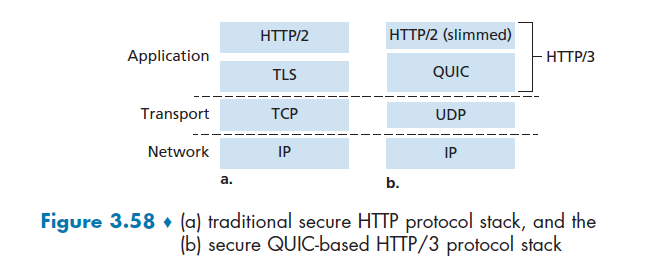

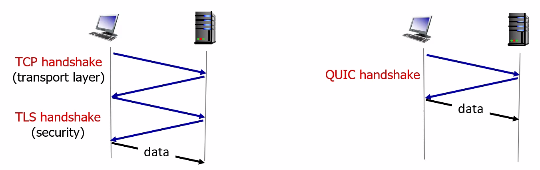

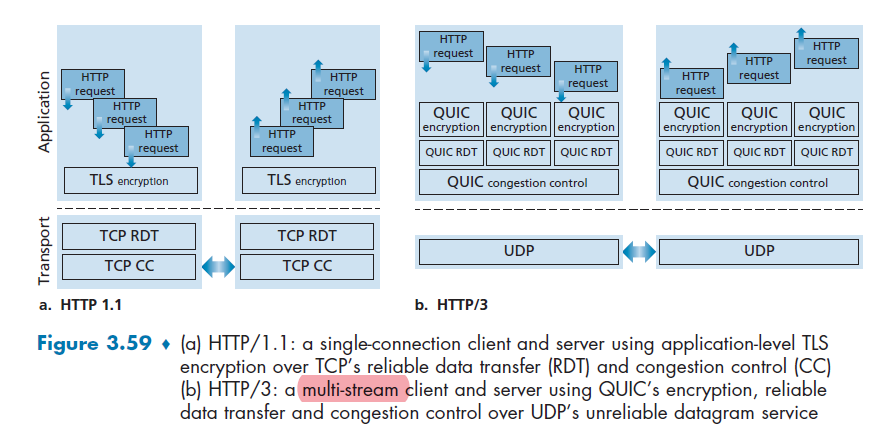

HTTP/3

Thought HTTP/2 is the most common now, it still has some problems. HTTP/2 over single TCP connection means:

- recovery from packet loss still stalls all object transmissions

- no security over vanilla TCP connection

HTTP/3 is yet a new HTTP protocol that is designed to operate over QUIC. As of 2020, HTTP/3 is described in Internet drafts and has not yet been fully standardized.

- QUIC (Quick UDP Internet Connections, pronounced quick) is an experimental transport layer network protocol designed by Google

- Think of QUIC as being similar to TCP+TLS+HTTP/2 implemented on UDP

HTTP/3

HTTP/3: adds security, per object error- and congestion-control (more pipelining) over QUIC (which is UDP)

- QUIC was chosen because many of the HTTP/2 features (such as message interleaving) are subsumed by QUIC

- since it is on UDP, it would be faster as well

More on HTTP/3 in transport layer chapter.

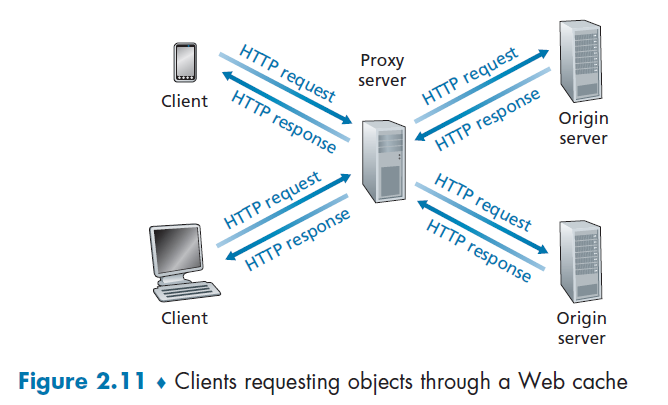

Web Caching

There are two caches:

- the caching of website made by your application

- the caching of website in a Web Cache/proxy server. Here we talk about this one.

Web Caching Server

A Web cache—also called a proxy server—is a network entity that satisfies HTTP requests on the behalf of an origin Web server.

- therefore, the Web cache has its own disk storage and keeps copies of recently requested objects in this storage

The basic diagram looks as follows, and the flow is simple:

Suppose you want to get www.someschool.edu/campus.gif. (You need to configure your web browser so that all of the user’s HTTP requests are first directed to the Web cache first).

Then:

- The browser establishes a TCP connection to the Web cache and sends an HTTP request for the object to the Web cache.

- The Web cache checks to see if it has a copy of the object stored locally.

- If it does, the Web cache returns the object within an HTTP response message to the client browser.

- If the Web cache does not have the object, the Web cache opens a TCP connection to the origin server, that is, to www.someschool.edu. then sends an HTTP request

- When the Web cache receives the object, it stores a copy in its local storage and sends a copy to the client (over the existing TCP connection between the client browser and the Web cache).

So basically a cache is both a server and a client at the same time.

In reality:

- Typically a Web cache is purchased and installed by an ISP. For example, a university might install a cache on its campus network and configure all of the campus browsers to point to the cache

DNS: Domain Name System

The idea is that:

- an internet host is identified by an IP address

- an IP address assigned per network interface. So a device could have multiple IP addresses

- but we use readable formats such as

cs.umass.edu, we need to map them to IP addresses

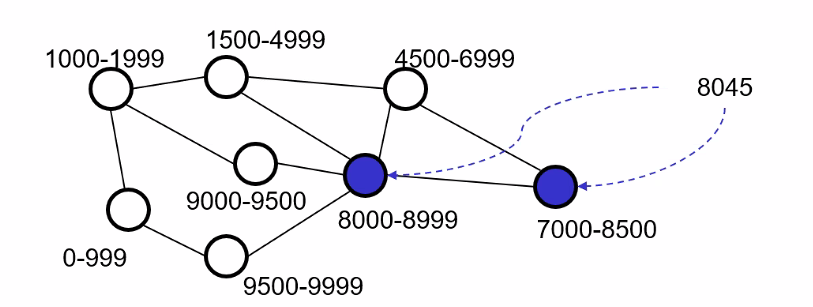

Domain Name System

- distributed database implemented in hierarchy of many name servers

- distributed database = each database contains partial information; synchronization issue is saved

- application-layer protocol: hosts, DNS servers communicate to resolve names (address/name translation)

Disadvantage of Centralized DNS

- single point of failure

- traffic volume

- distant centralized database

- maintenance

DNS Services

Some services provided by the DNS server is:

- hostname-to-IP-address translation

- host aliasing

- Basically you can have two or more domain names that take you to a single site.

- e.g. a hostname such as

relay1.west-coast.enterprise.comcould have, say, two aliases such as www.enterprise1.com and www.enterprise.com. In this case, the hostnamerelay1.west-coast.enterprise.comis said to be a canonical hostname

- mail server aliasing

- all organization has a dedicated mail server

- e.g. Bob has an account with Yahoo Mail, which might be as simple as bob@yahoo.com. However, the hostname of the Yahoo mail server is more complicated and much less mnemonic than simply

yahoo.com. So internally there is some aliasing done.

- load distribution

- there are some replicated Web Servers: many IP addresses correspond to one name

- load balancing: depending on where the request comes from, the service can respond different IP addresses so we can load balance

For Example: Using nslookup

➜ nslookup

> www.columbia.edu

Server: 172.18.48.1

Address: 172.18.48.1#53

Non-authoritative answer:

www.columbia.edu canonical name = www.a.columbia.edu.

www.a.columbia.edu canonical name = www.wwwr53.cc.columbia.edu.

Name: www.wwwr53.cc.columbia.edu

Address: 128.59.105.24

In general, DNS are used:

- trillions of queries/day

- so performance matters!

- databases contains billion records

- decentralized databases

- millions of different organizations responsible for their records

Overview of How DNS Works

where:

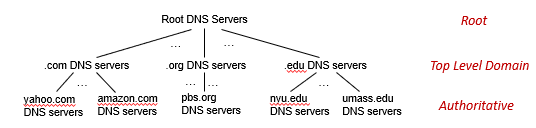

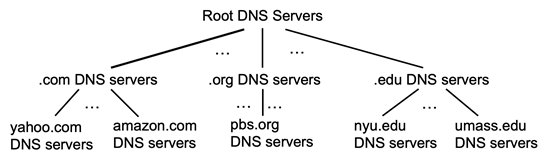

-

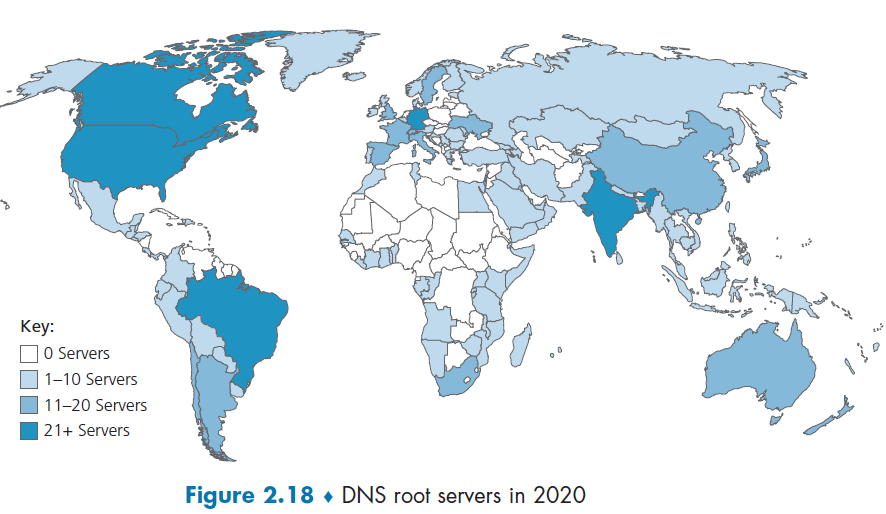

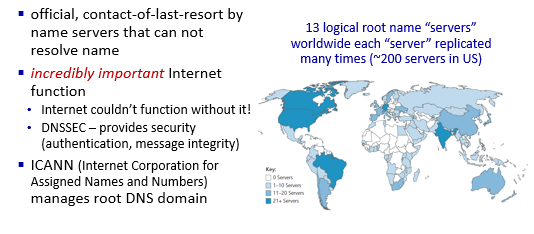

the root server stores IPs for the Top Level Domain servers (TLD)

- There are more than 1000 root servers instances scattered all over the world, as shown in Figure 2.18. These root servers are copies of 13 different root servers, managed by 12 different organizations.

-

the top level domain servers knows about lower level/authoritative DNS servers

- For each of the top-level domains—top-level domains such as com, org, net, edu, and gov, and all of the country top-level domains such as uk, fr, ca, and jp—there is TLD server (or server cluster).

-

the authoritative DNS server basically keeps the actual mapping (so it is managed by the actual organization such as Amazon)

- Every organization with publicly accessible hosts (such as Web servers and mail servers) on the Internet must provide publicly accessible DNS records that map the names of those hosts to IP addresses

-

basically each parent node knows IP address of their child node

Advantages

- the benefit is that if some IP-DNS needs to be changed, only one node needs to be updated.

For Example

Client wants IP address for www.amazon.com; 1st approximation:

- client queries local DNS server first (covered in Local Name Server)

- client queries root server to find

.comDNS server - client queries

.comDNS server to getamazon.comDNS server - client queries

amazon.comDNS server to get IP address for www.amazon.com

However, in reality:

- once a name gets resolved, it will get cached locally (e.g. in your organization DNS server). This means that queries to the root DNS server is actually not a lot

- each cached name will have an expiration time. Before that name expires, you cannot load balance using CDN obviously.

Root Name Server

Top Level Domain Server

Knows about authoritative DNS servers, who stores the actual mapping you need.

Authoritative DNS servers:

- organization’s own DNS server(s), providing authoritative hostname to IP mappings for organization’s named hosts can be maintained by organization or service provider

Local Name Server

This is where things get cached and can be reducing the number of requests to the root server.

So technically, when host makes DNS query, it is first sent to its *local* DNS server

- Local DNS server returns reply if it has been cached

- Forwarding request into DNS hierarchy for resolution if it doesn’t know

local DNS server doesn’t strictly belong to hierarchy

ipconfigWhen you type that command in Windows, you get something like:

DNS 服务器 . . . . . . . . . . . : 128.59.1.3 128.59.1.4and those would be your local name server address

- those are configured once you connect to the WiFi/network

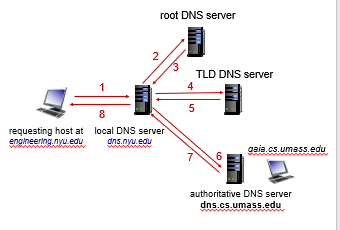

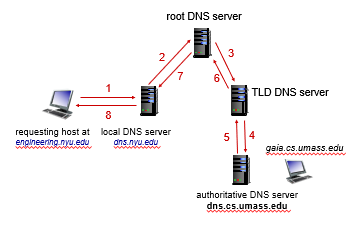

Iterative Query

Now we talk about how DNS actually works.

the advantage of this is:

- your local server can cache also Top Level DNS Server IP, Authoritative DNS Server IP

Recursive Query

this is rarely used due to its problem of

- heavy load on root DNS server

- caching less stuff

In reality, it is more often to see a combination of iterative and recursive approaches.

DNS Records and Messages

DNS distributed database store resource records (RRs), which contains mappings from Domain to IP.

Resource Record

A single record is a four tuple

where:

TTL is the time to live of the resource record; it determines when a resource should be removed from a cache.

- we ignore this field for examples below

Types assign meanings to Name and Value

Type Name Value Example A Hostname IP Address (relay1.bar.foo.com, 145.37.93.126, A)NS Domain Hostname of an authoritative DNS server that knows the IP of this domain (used for build DNS route) (foo.com, dns.foo.com, NS)CNAME alias hostname canonical hostname (foo.com, relay1.bar.foo.com, CNAME)MX alias hostname canonical name of a mail server (foo.com, mail.bar.foo.com, MX)

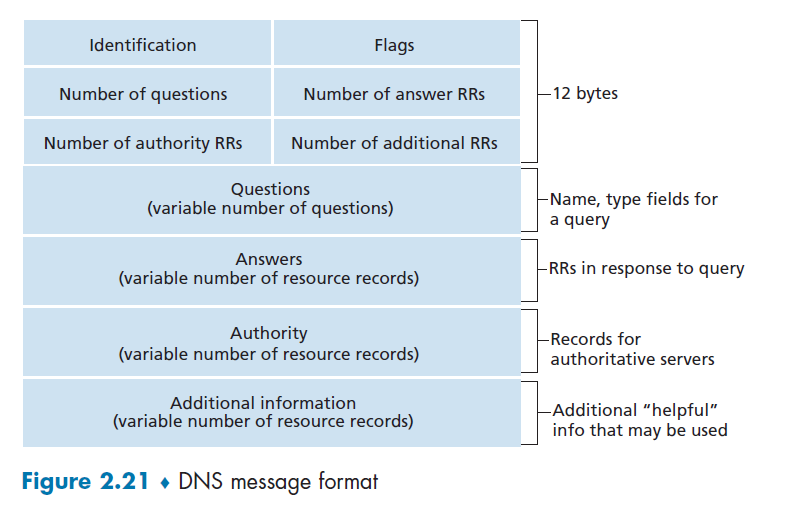

DNS Messages

Therefore, each DNS reply message carries one or more resource records.

In general, we have two types:

- DNS query messages

- DNS reply messages

But both query and reply messages have the same format

where:

- Identification: 16-bit number that identifies the query. This identifier is copied into the reply message to a query, allowing the client to match received replies with sent queries.

- Flags: Basically a bitmap, with each bit:

- 1-bit query/reply flag indicates whether the message is a query (0) or a reply (1).

- 1-bit authoritative flag is set in a reply message when a DNS server is an authoritative server for a queried name.

- 1-bit recursion-desired flag is set when a client (host or DNS server) desires that the DNS server perform recursion

- 1-bit recursion-available field is set in a reply if the DNS server supports recursion.

- Number of xxx: number of occurrences of the four types of data sections that follow the header

- Questions: this section contains information about the query that is being made. This section includes

- a name field that contains the name that is being queried

- type field that indicates the type of question (e.g. type

MX)

- Answer : contains the resource records (i.e. four tuples) for the name that was originally queried

- The rest is labelled

Inserting Records into DNS Database

Suppose you have just created an exciting new startup company called Network Utopia. Then you might want to have your own authoritative DNS running so you can deliver content.

-

register the domain name networkutopia.com at a registrar (i.e. buy it from somewhere)

- A registrar is a commercial entity that verifies the uniqueness of the domain name, enters the domain name into its DNS database (as discussed below), and collects a small fee from you for its services.

-

provide the registrar with the names and IP addresses of your primary and secondary authoritative DNS servers

-

e.g.

dns1.networkutopia.com,dns2.networkutopia.com,212.2.212.1, and212.212.212.2. -

then the registrar would then make sure that a Type NS and a Type A record are entered into the TLD com server

(networkutopia.com, dns1.networkutopia.com, NS) (dns1.networkutopia.com, 212.212.212.1, A)

-

-

Then, in your authoritative DNS server:

- Type A resource record for your Web server www.networkutopia.com and the Type MX resource record for your mail server

mail.networkutopia.comare entered into your authoritative DNS servers.

- Type A resource record for your Web server www.networkutopia.com and the Type MX resource record for your mail server

-

Once all of these steps are completed, people will be able to visit your Web site at www.networkutopia.com and send e-mail to the employees at your company

Then, one someone wanted to visit, the following would actually happen:

- host will first send a DNS query to her local DNS server.

- The local DNS server will then contact a TLD com server. (The local DNS server will also have to contact a root DNS server if the address of a TLD com server is not cached.)

- The TLD com server sends a reply to Alice’s local DNS server, with the reply containing the two resource records of TYPE A and TYPE NS.

- The local DNS server then sends a DNS query to

212.212.212.1, asking for the Type A record corresponding to www.networkutopia.com - Then your authoritative DNS server needs to respond, say

212.212.71.4, which is the IP of your webserver - Alice’s browser can now initiate a TCP connection to the host 212.212.71.4 and send an HTTP request over the connection

DNS Security

In reality, there were two types of attacks on DNS servers.

- DDoS attacks

- bombard root servers with traffic

- not successful to date, since we can do traffic filtering

- bombard TLD servers

- bombard root servers with traffic

- Spoofing attacks

- intercept DNS queries, returning bogus replies

- DNS cache poisoning

Facebook DNS Outrage

Recall that in the end, we will get an IP address. But we still needs to get to that IP address physically.

- each router needs to tell other routers which destination it can reach, by some announcement.

Facebook DNS Outrage

- Some configuration were mistaken, so Facebook routers starts to withdrawn their connection to every ISP. As a result, physically no routing exists to reach a Facebook DNS Server

- Then, due to the absence of DNS requests, those DNS server thought there is an error so they shutted down

- In the end, now there is both NO ROUTES and NO ALIVE DNS. So nobody can actually reach Facebook’s servers.

Video Streaming and Content Distribution Networks

By many estimates, streaming video—including Netflix, YouTube and Amazon Prime—account for about 80% of Internet traffic in 2020. Things to take into account:

- heterogeneity of uses (e.g. bandwidth available)

- need to scale to 1 billion + users

This section discusses how popular video streaming services are implemented in today’s Internet.

- a distributed, application-level infrastructure

Internet Video.

Basically, for pre-recorded videos

- servers have a copy of them

- users send requests to the servers to view the videos on demand

Bit Rate

Bit rate is the number of bits that are conveyed or processed per unit of time.,

- each video is a sequence of images with certain FPS (frame per second)

- each frame/image has a number of pixels

- each pixel is represented by some bits

Then some algorithm can compress those bits per second by utilizing redundancy within and between images (a like

zipcompression techniques). So we need less bits per image = less bits rate.

- spatial compression + temporal compression

But obviously, the higher the bit rate, the better the quality = the higher the bandwidth requirement.

In reality, we then have:

- CBR (constant bit rate), such that video encoding rate is fized

- VBR (variable bit rate), video encoding rate changes depending on amount of spatial and temporal compression we have

- i.e. when a scene changes to a new one, compression changes.

- so to preserve high quality, this is the way

HTTP Streaming and DASH

Now, we know how video is “played”. Here we talked about how to store and deliver the video such that it can be played as mentioned before.

Consider for the simple case

the main problem we are facing is therefore:

- clients tend to have variable bandwidth

- clients might have various packet loss, delay, congestion

Then, in this model, we have:

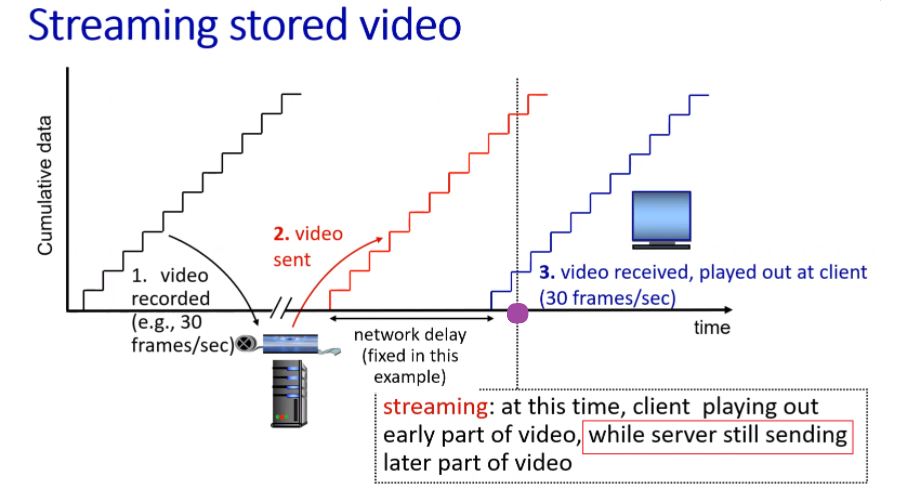

where:

- we assumed some CBR

- due to some network delay, video plays at line 3

- at the time of play, we stored (about) 1 second of received video. So essentially we are still receiving data while playing.

Playback

Once the number of bytes (for the video) in client’s buffer buffer exceeds a predetermined threshold, the client application begins playback — specifically, starts playing by grabbing images from the buffer.

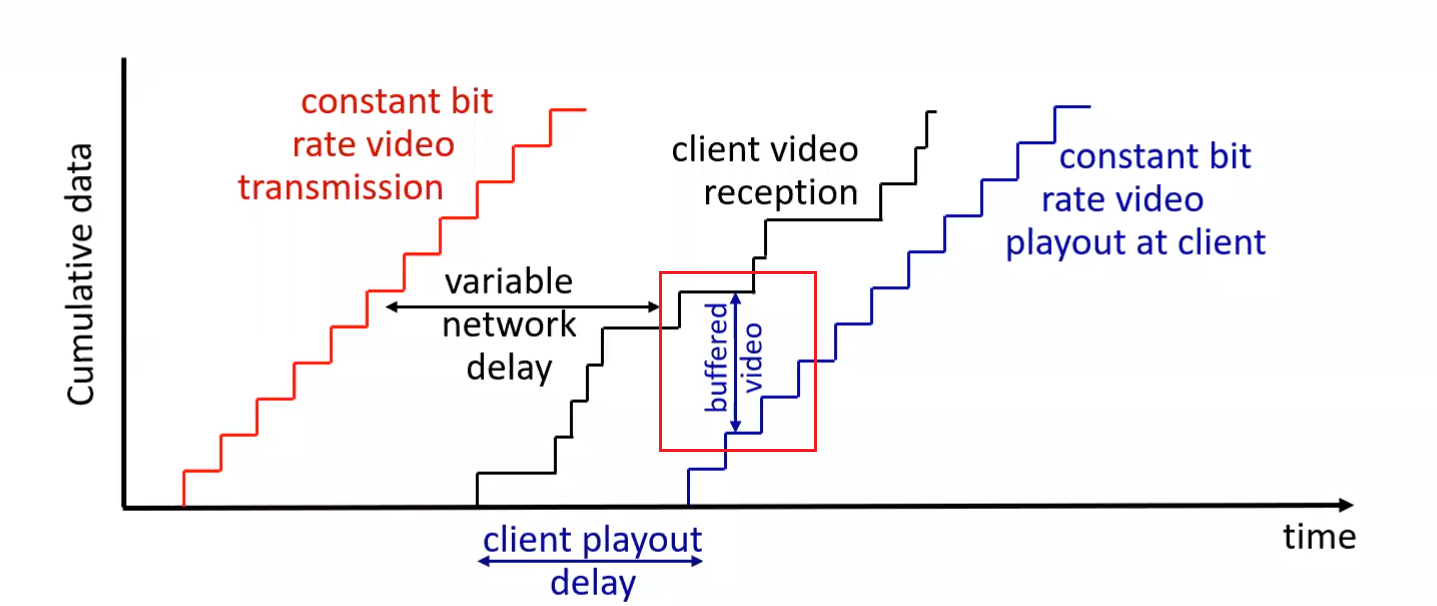

What happens yet in reality is:

where:

- due to the variable network condition, we might need to buffer quite some so that client won’t need to “re-buffer”/pause in the video

- if the black curve intersects with blue curve, we need “re-buffering/pausing” on client side = bad experience

Need Variable Bit Rate

Therefore, when client detects that the two curves are about to intersect, it will lower the bit rate of requested video, so that it can buffer = make sure smooth client experience.

- client experience include fast forward, rewind, etc.

To realize this, this is done by DASH.

Problem with HTTP:

- All clients receive the same encoding of the video, despite the large variations in the amount of bandwidth available to a client, both across different clients and also over time for the same client

DASH - Dynamic Adaptative Streaming over HTTP

In DASH, the video is

- divided in to multiple chunks

- each chunk is encoded into several different versions, with each version having a different bit rate

Therefore, the idea is that:

Server

- holds all those different chunks + versions

- holds a

manifestfile, with a mapping ofurlfor each different chunk - all those chunks=files are then replicated in CDN nodes

Client

- request the

manifestfile- note that this file is the same for all users for the same resource

- periodically estimate the bandwidth

- decide to request for which chunk of video depending on observer bandwidth, and consults the

manifestfor actual request- so we can have different bit rates over time if needed

- so all the intelligence is at client, decides when to request, what bit rate to request, and where to request (

manifest+ closest CDN node)

- use the

urlon themanifestand get the chunk from a CDN node- recall that DNS server is the one that decides which CDN node your data will be from, by look at the

source ip.

- recall that DNS server is the one that decides which CDN node your data will be from, by look at the

Note: when actually sending each chunk, it is done by HTTP

GETrequest messages.

CDN - Content Distribution Networks

Now, the idea is that we prefer having receiving file from geographically close sites.

CDNs typically adopt one of two different server placement philosophies:

- Enter Deep. One philosophy, pioneered by Akamai, is to enter deep into the access networks of Internet Service Providers, by deploying server clusters in access ISPs all over the world

- so we get really close to end users, which means there are clusters in thousands of locations

- smaller storage size

- Bring Home. A second design philosophy, taken by Limelight and many other CDN companies, is to bring the ISPs home by building large clusters at a smaller number (10s)

- lower maintenance and management overhead, but a bit larger delay

- larger storage size

Then, once its clusters are physically in place, the CDN replicates content across its clusters.

Pulling Contents

- The CDN may not want to place a copy of every video in each cluster, since some videos are rarely viewed or are only popular in some countries. In fact, many CDNs do not push videos to their clusters but instead use a simple pull strategy: if request file is not stored in the CDN node, it will retrieve it and store the copy.

- When cluster’s storage is full, it removes the least frequently requested video

Note:

- Above are third-party CDNs, which distribute contents for other content providers.

- There is also private CDN, that is, owned by the content provider itself; for example, Google’s CDN distributes YouTube videos and other types of content.

Load Balancing with DNS

The trick to load balance between CDN nodes is that:

- each

urltoiptranslation is essentially done by a DNS server. So we can store a shortexpirationtime such that it will frequently go to the authoritative DNS server- then authoritative DNS server can then tell you which CDN cluster to use for load balancing purposes.

- then even inside the CDN cluster, you could have some load balancing for which CDN node to use

CDN Operation

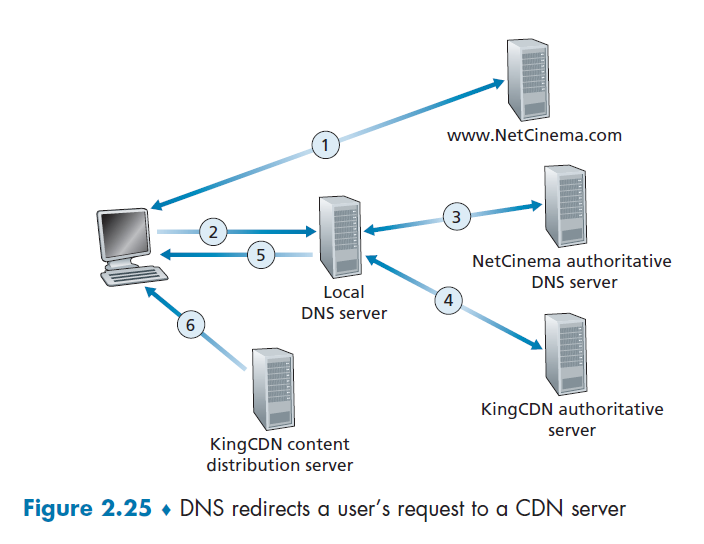

Here we demonstrate how DNS + CDN cluster works.

Suppose that you wanted to watch the film Transformers 7, which on NetCinema.com has the url of http://video.netcinema.com/6Y7B23V. Then, the following would happen:

- The user visited the Web page at

netcinema.comand got http://video.netcinema.com/6Y7B23V - The user clicked http://video.netcinema.com/6Y7B23V to watch the film, which then sends a DNS query for

video.netcinema.com - First it goes to the Local DNS server (LDNS), which then handles the query to the authoritative DNS of NetCinema

- now, instead of returning an IP address, the NetCinema authoritative DNS server returns to the LDNS a hostname in the KingCDN’s domain:

a1105.kingcdn.com

- now, instead of returning an IP address, the NetCinema authoritative DNS server returns to the LDNS a hostname in the KingCDN’s domain:

- Then, the DNS query enters into KingCDN’s private DNS infrastructure. Now, for

a1105.kingcdn.com.- here, it can decide which CDN node to use.

- KingCDN’s DNS system eventually returns the IP addresses of a KingCDN content server node to the LDNS, and LDNS forwards the IP address of the content-serving CDN node to the user’s host

- The users received an

ipfrom the KingCDN node, and will establish the TCP connection there and request for the data.- here, if DASH is used, the server will first respond with a

manifestand etc.

- here, if DASH is used, the server will first respond with a

Peer-to-Peer File Distribution

The applications described in this chapter thus far—including the Web, e-mail, and DNS—all employ client-server architectures with significant reliance on always-on infrastructure servers.

There is minimal (or no) reliance on always-on infrastructure servers. Instead, pairs of intermittently connected hosts, called peers, communicate directly with each other.

- e.g. file distribution (BitTorrent): each torrent address will be a “directory” to the specific peer-to-peer network

- you can download any part of the file first (e.g. the last part might comes first)

- e.g. Streaming (KanKan): we need to download from the first part

- e.g. VoIP (Skype, originally)

Basically the advantage is that your P2P will be self-scaling since each host is also an uploader/server

Scalability of P2P Architecture

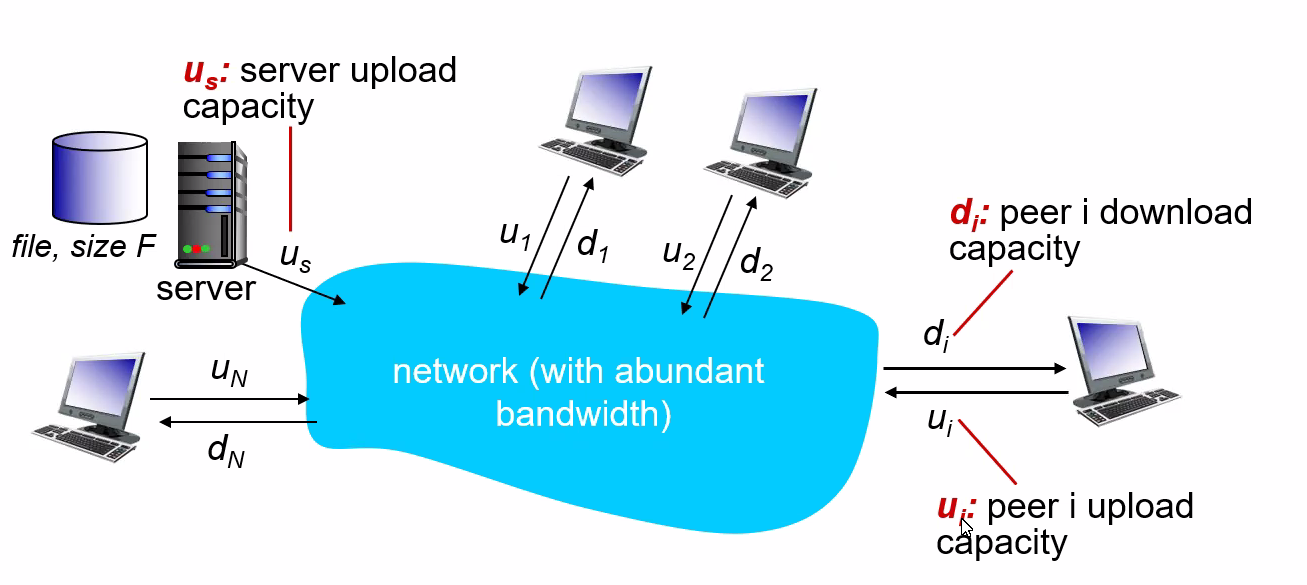

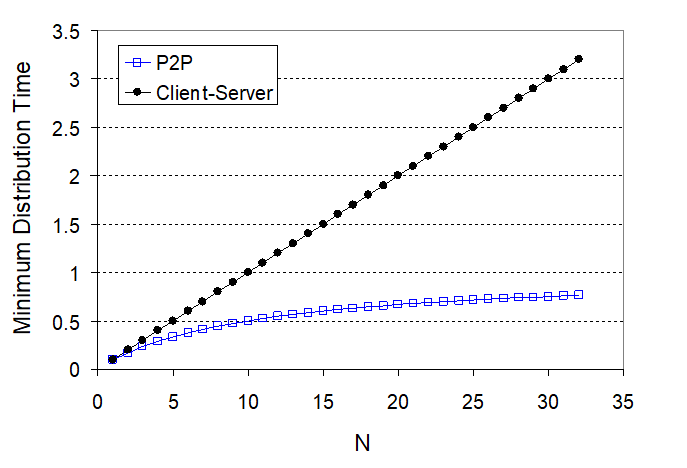

We want to compare the ideal performance of client-server and p2p.

Consider the case when we need to deliver a large file to a larger number of hosts. You will see that:

- In client-server file distribution, the server must send a copy of the file to each of the peers

- In p2p, each peer can redistribute any portion of the file it has received to any other peers.

Let there be $F$ bits of a file to be send, and there are $N$ users. Let the upload speed be $u$, and download speed be $d$. We want to compare the distribution time - the time it takes to get a copy of the file to all N peers:

- (assumption that the Internet core has abundant bandwidth, implying that all of the bottlenecks are in access networks)

Then, we can compute that

-

Distribution time for client-server model $D_{cs}$:

The idea is that we either have server uploading the file into the client or client downloading from server.

-

The server must transmit one copy of the file to each of the $N$ peers. Thus, the server must transmit $NF$ bits. Since the server’s upload rate is $u_s$, the time to distribute the file must be at least $NF/u_s$.

-

the slowest client will have $d_{min}=\min{d_1,…,d_N}$. So if everyone downloads, we have $F/d_{min}$

so distribution time is:

-

notice that $NF/u_s$ increases with $N$ linearly

-

Distribution time for P2P.

The main difference is that when a peer receives some file data, it can use its own upload capacity to redistribute the data to other peers. Therefore:

- If only server has the file, and we assume that each client starts redistributing immediately when they get a bit, then the minimum distribution time is at least $F/u_s$

- The worst case for downloading is that all bits goes to the same host with $d_{min}$. Then in that case, we have $F/d_{min}$.

- The last case is when each host has some share of the file, then to make sure everyone has all $F$ bits, we need to have uploaded $NF$ bits (to each other) with no faster than $u_{\text{total}} =u_s + \sum u_i$. Therefore, in this case, the distribution time is $NF/(u_s + \sum u_i)$.

so performance is:

\[D_{P2P} \ge \max \left\{ \frac{F}{u_s}, \frac{F}{d_{min}},\frac{NF}{u_s + \sum u_i}\right\}\]

notice that the term $NF/(u_s + \sum u_i)$ means increasing $N$ increases both $NF$ and $\sum u_i$. So eventually, this term flattens out even when $N$ is large.

P2P File Distribution

In reality, we would first divide the file into 256Kb chunk, and each host will own some of the chunks.

- $NF/(u_s + \sum u_i)$ in the P2P means we want the peer to become a server as soon as possible

- if you waited for the entire file to be a server, that will take quite a long time/wasted

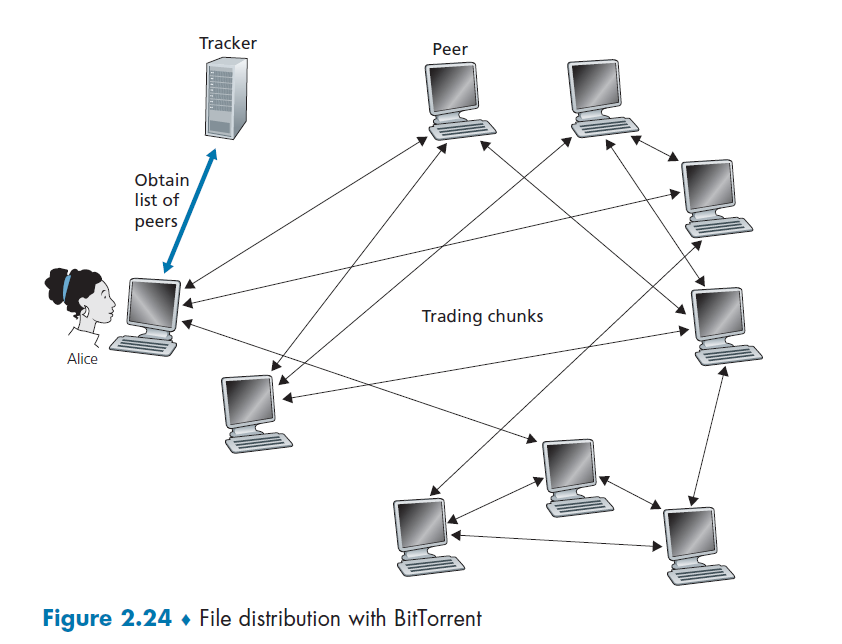

Tracker

- Each torrent has an infrastructure node called a tracker. When a peer joins a torrent, it registers itself with the tracker and periodically informs the tracker that it is still in the torrent.

- Therefore, the tracker keeps track of the peers that are participating in the torrent.

Then, how P2P works is:

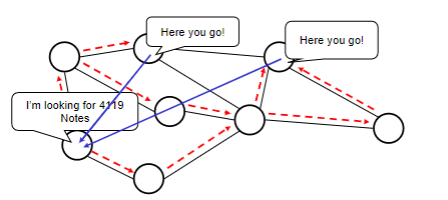

where basically when Alice clicked the magnet link:

-

obtains a list of peers from tracker, and ask the peers for a list of chunks of the file they have

- basically gives you the IP of the peers, then each peer gives you a list of what they have

- let there be $L$ neighbors. Then you get $L$ lists of chunks

-

register yourself to tracker

-

requests missing chunks from peers, rarest first. (the chunks that are the rarest/fewest copies among her neighbors)

-

compute from the $L$ list of chunks which one is the rarest

-

the rarest first is the key, with the aim of maintaining high availability

-

-

while gathering chunks, Alice also sends chunks to some of other peers (algorithm deciding who to send: Tit-for-Tat)

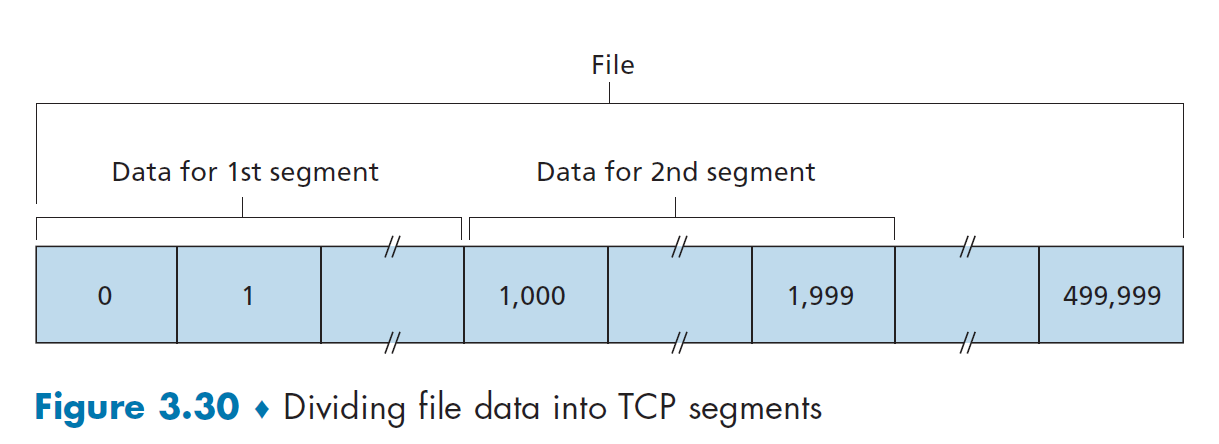

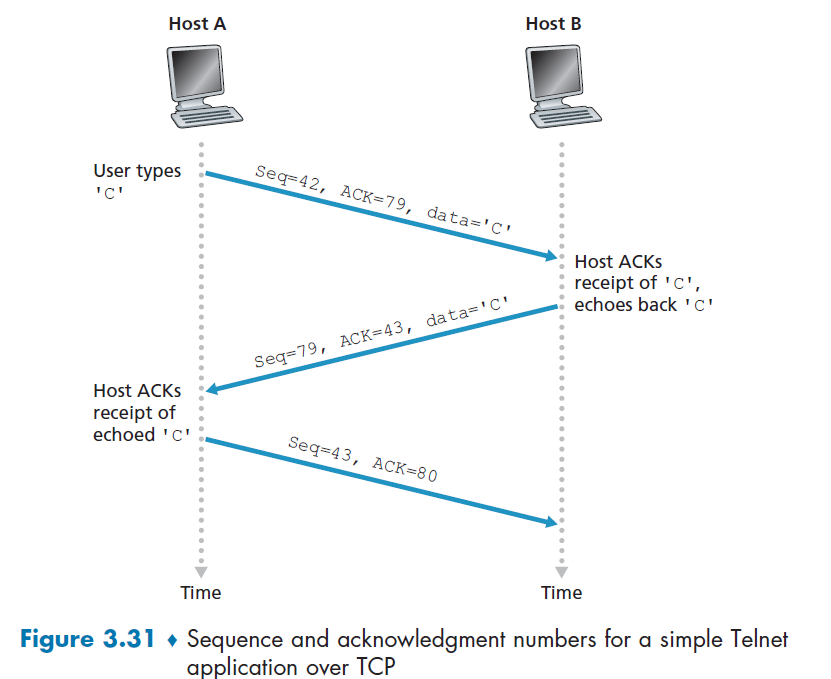

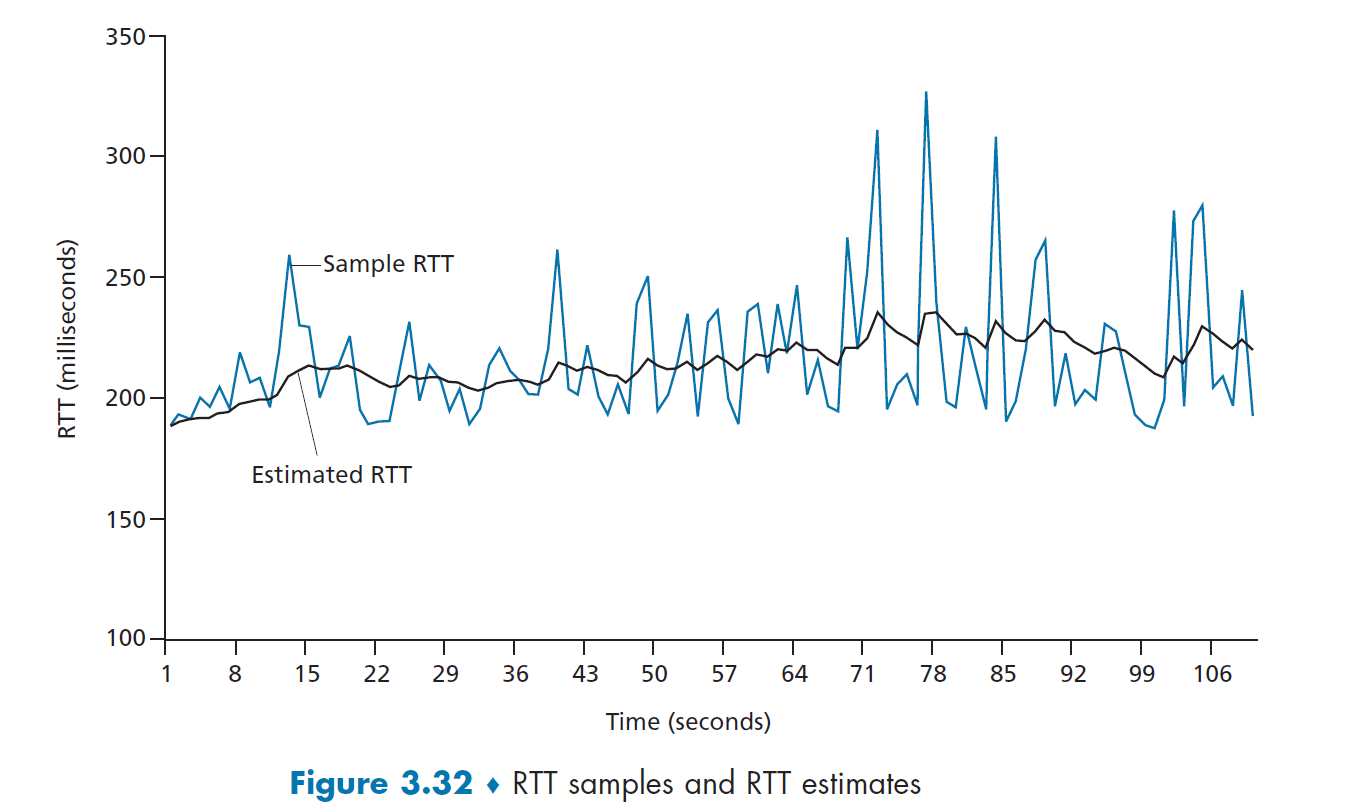

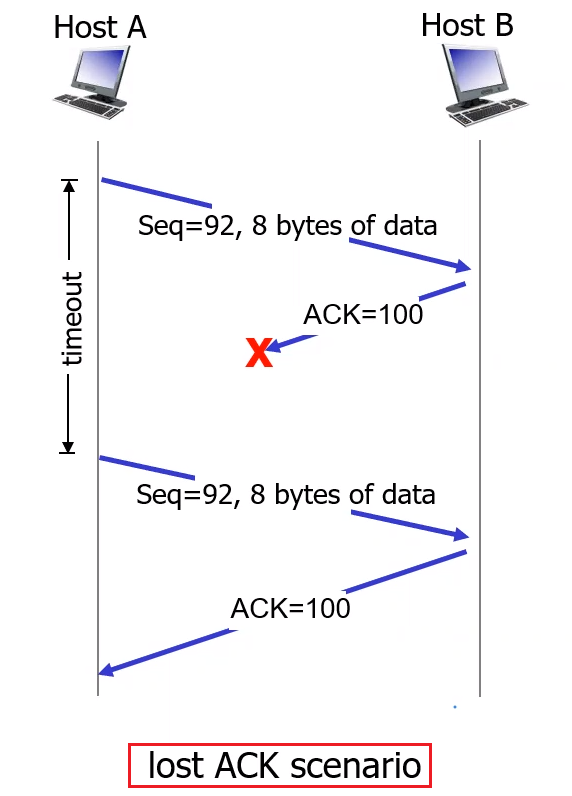

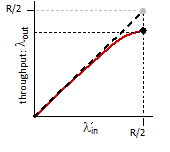

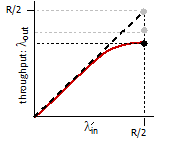

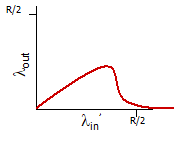

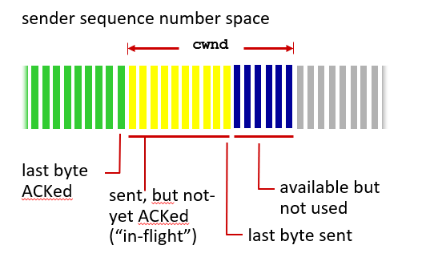

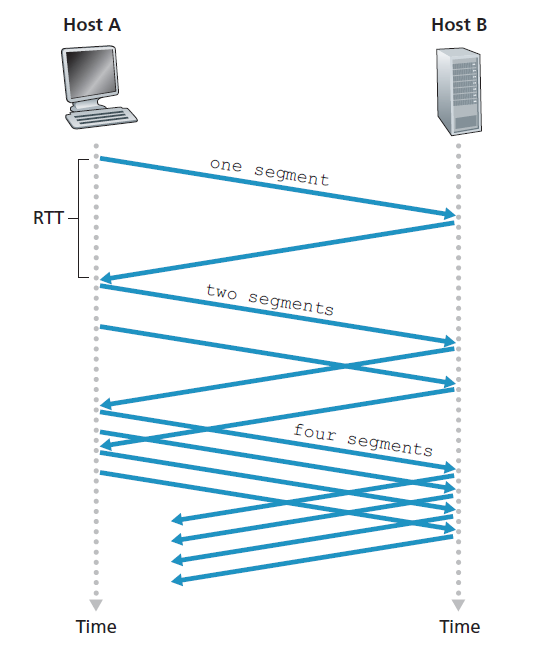

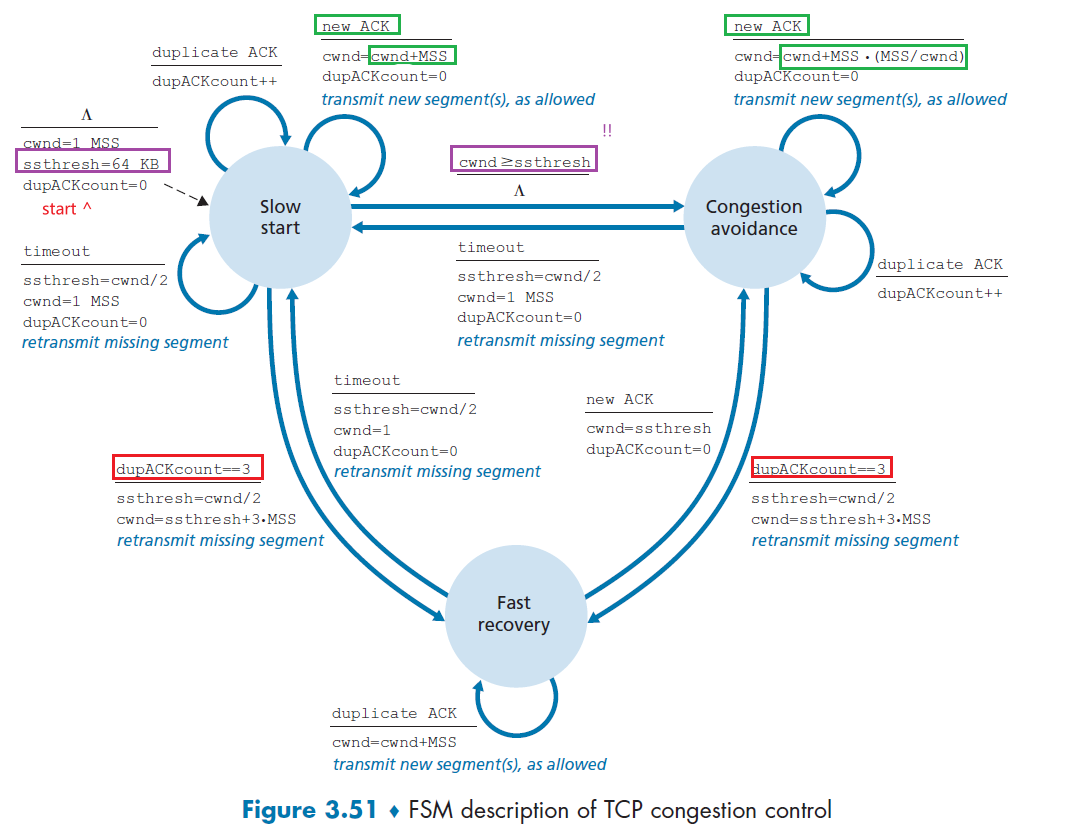

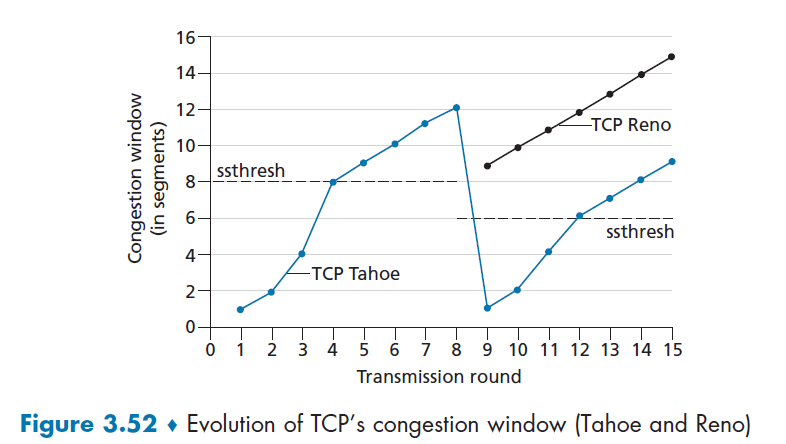

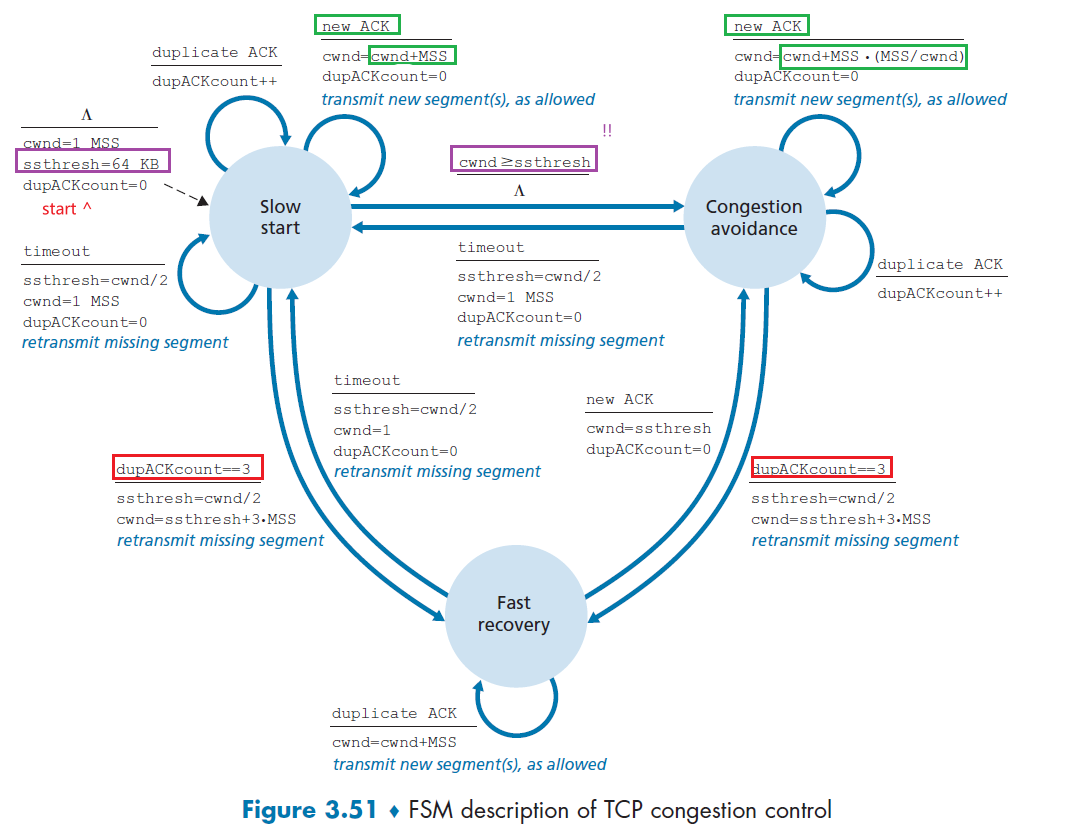

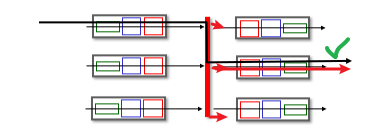

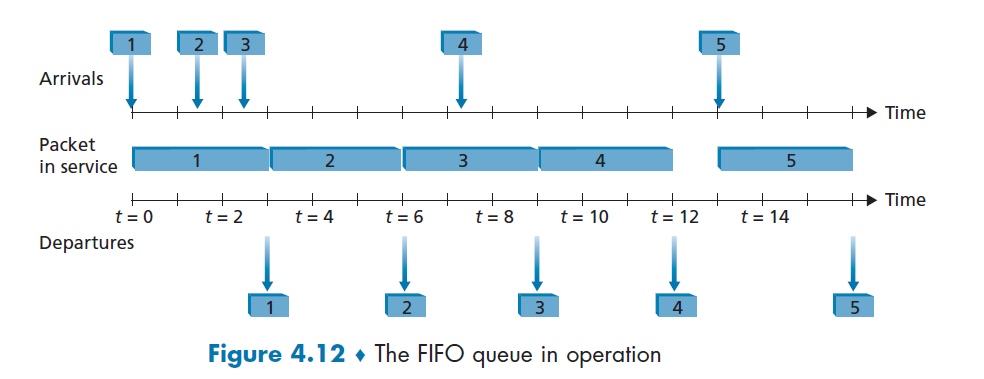

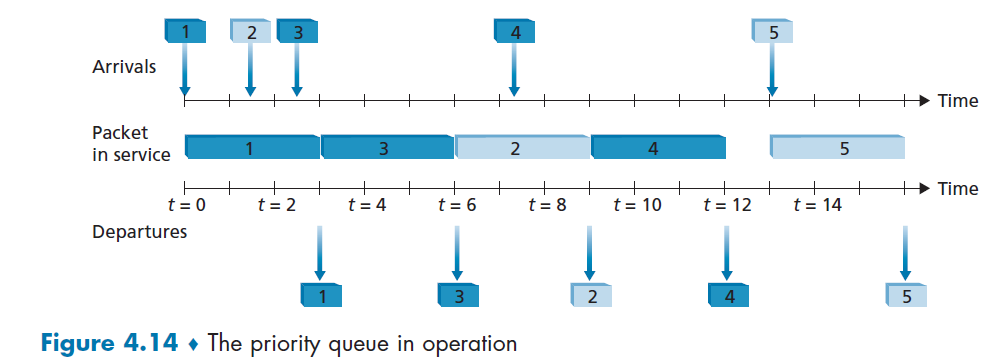

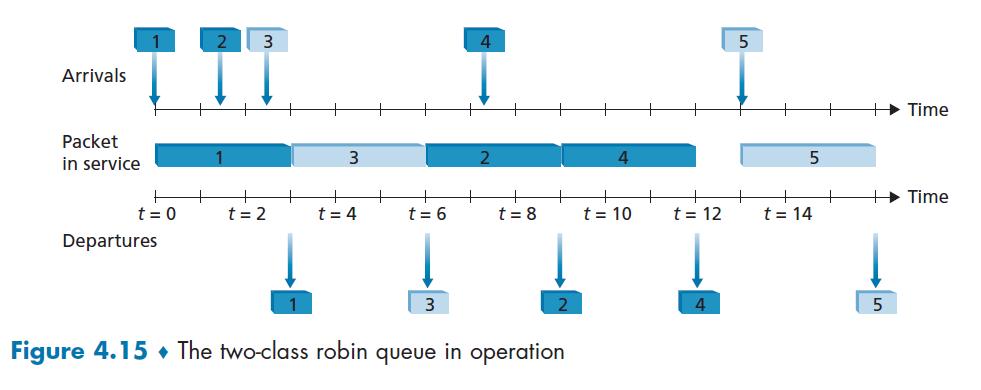

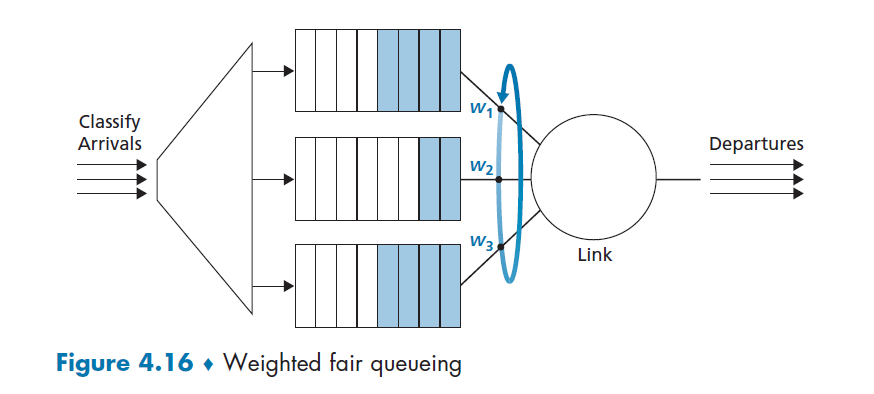

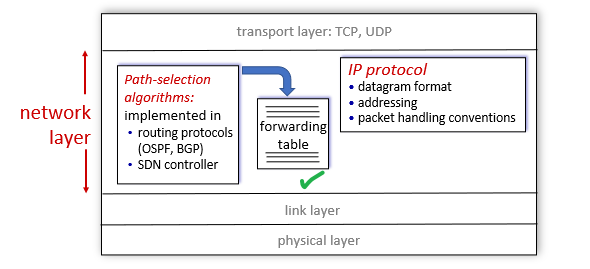

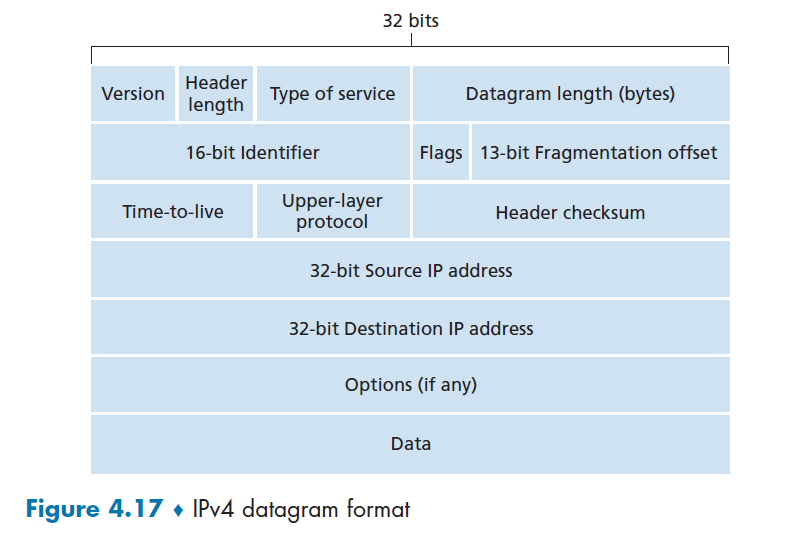

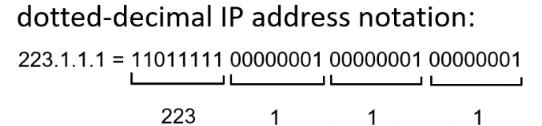

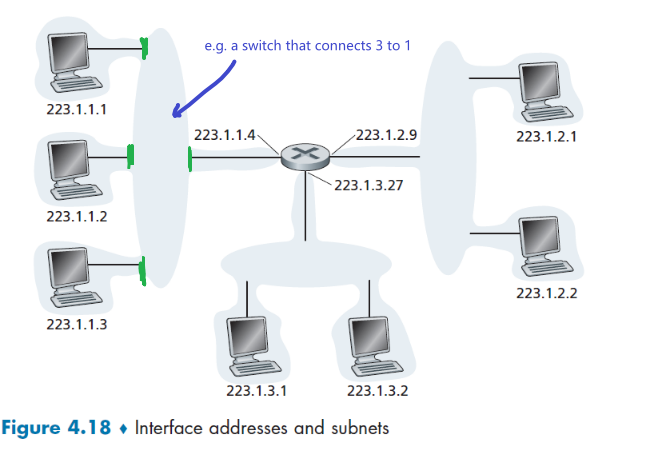

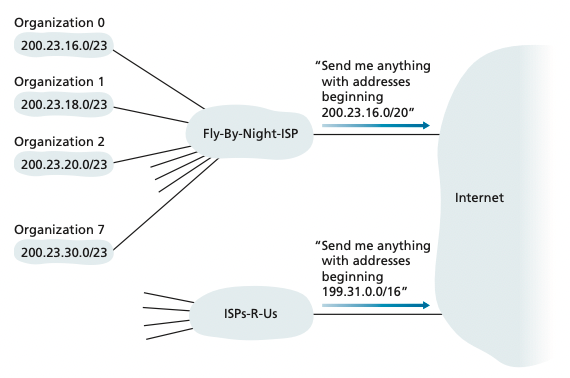

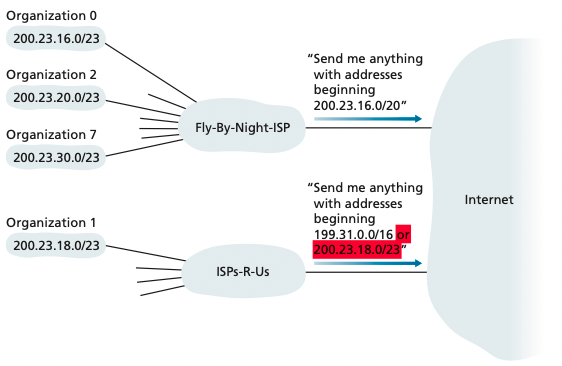

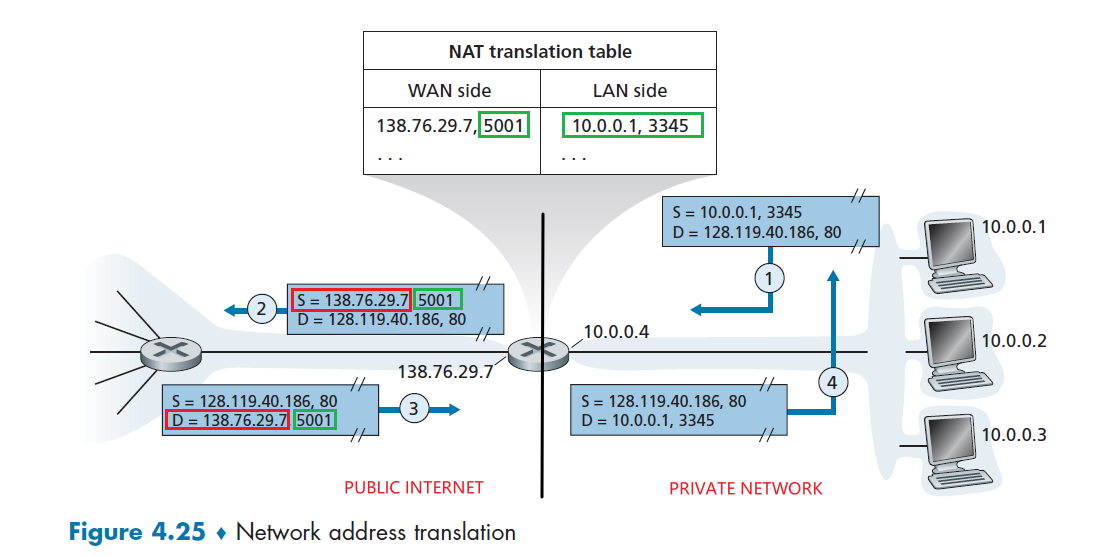

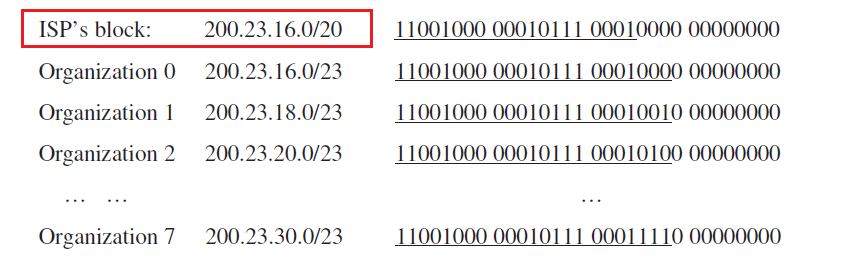

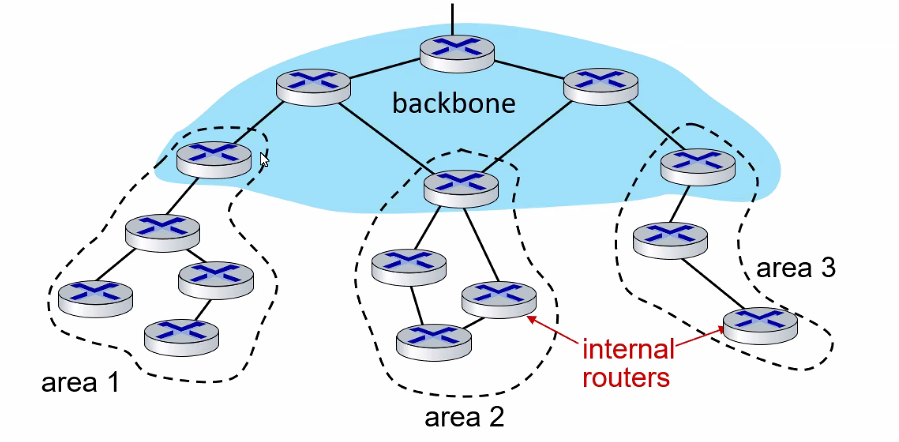

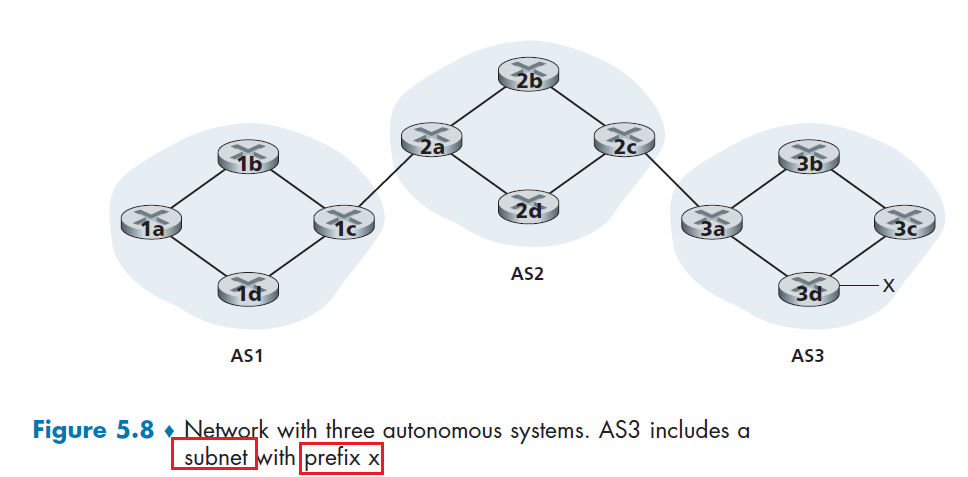

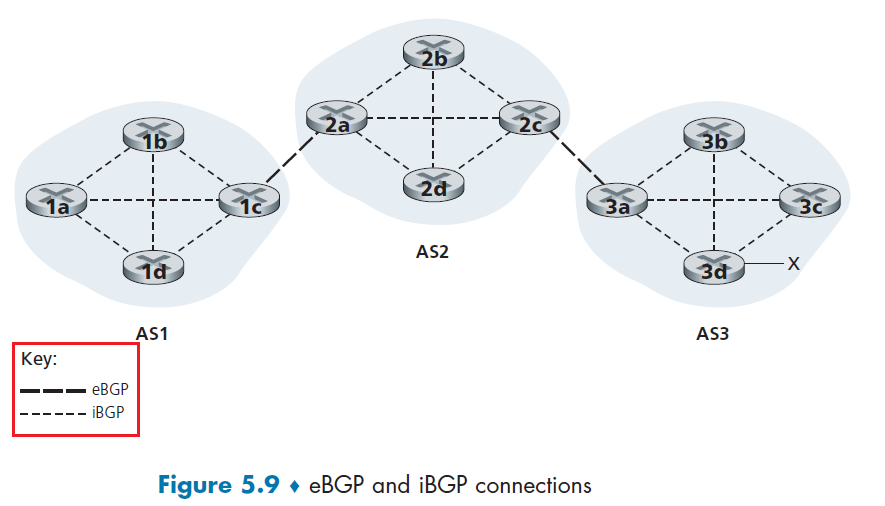

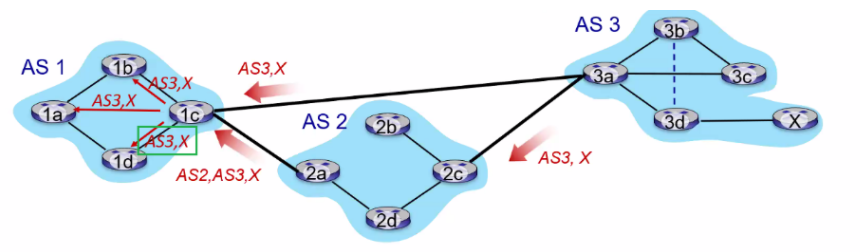

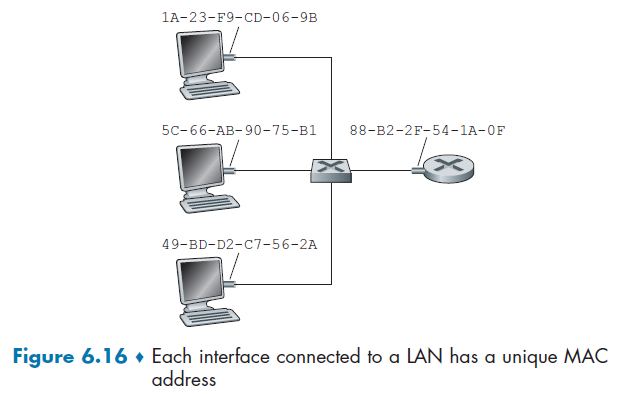

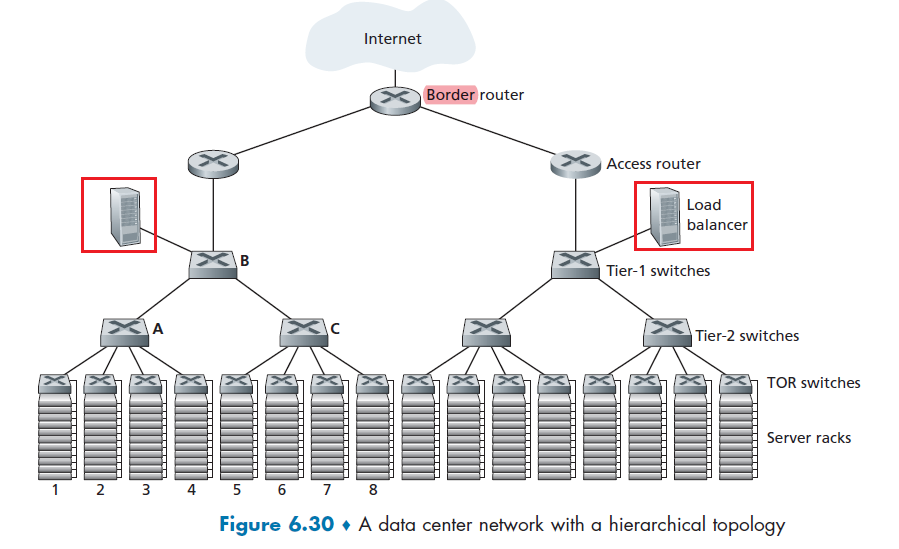

- unchoked peers - send to four peers who are sending Alice the data at the highest rate (reevaluate top 4 every 10 secs)