STFCS229 Machine Learning

- Introduction and Fundamentals

- Machine Learning Techniques

- Linear Regression

- Classification and Logistic Regression

- Generalized Linear Models

- Generative Learning Algorithms

- Support Vector Machines

- Learning Theory

- Regularization and Model Selection

- Decision Tree and Ensembles

- Introduction to Neural Network

Machine Learning - MIT6.036

- https://www.youtube.com/watch?v=0xaLT4Svzgo&list=PLxC_ffO4q_rW0bqQB80_vcQB09HOA3ClV

- full course time

- http://people.csail.mit.edu/tbroderick//ml.html

- Lecture PDFs ^

Stanford CS229

- http://cs229.stanford.edu/syllabus-autumn2018.html

Introduction and Fundamentals

Machine Learning is about, on a high level, making decisions or predictions based on data. So the conceptual basis of learning from data is the problem of induction.

- Great examples are face detection and speech recognition and many kinds of language-processing tasks.

Some general steps that you will have to take involve:

- acquire and organize data

- design a space of possible solutions

- select a learning algorithm and its parameters

- apply the algorithm to the data

- validate the resulting solution to decide whether it’s good enough to use, etc.

The goal is usually to find

- generalization: How can we predict results of a situation or experiment that we have never encountered before in our data set?

In a more concrete manner, we can describe problems and their solutions using six characteristics, three of which characterize the problem and three of which characterize the solution:

- Problem class: What is the nature of the training data and what kinds of queries will be made at testing time?

- Assumptions: What do we know about the source of the data or the form of the solution?

- Evaluation criteria: What is the goal of the prediction or estimation system? How will the answers to individual queries be evaluated? How will the overall performance of the system be measured?

- Model type: Will an intermediate model be made? What aspects of the data will be modeled? How will the model be used to make predictions?

- Model class: What particular parametric class of models will be used? What criterion will we use to pick a particular model from the model class?

- Algorithm: What computational process will be used to fit the model to the data and/or to make predictions?

Problem Class

There are many different problem classes in machine learning. They vary according to what kind of data is provided and what kind of conclusions are to be drawn from it. Here we discuss the most typical:

Supervised Learning

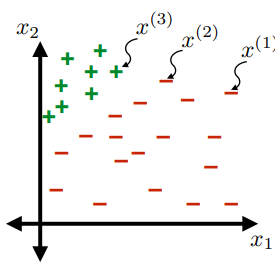

Supervised Learning

The learning system is given both inputs and (the correct) outputs. The goal is then to predict an output for a future, unknown input.

In other words, the training dataset $D_n$ is the form of pairs:

\[D_n = \{ (x^{(1)}, y^{(1)}), (x^{(2)}, y^{(2)}), ..., (x^{(n)}, y^{(n)}) \}\]where:

- $x^{(i)}$ are vectors in $d$ dimensions.

- $y^{(i)}$ are elements of a discrete set of values.

- $x^{(i)}$ represents an input/object to be classified

- $y^{(i)}$ represents an output/target values

The goal in is ultimately, given a new input value $x^{(n+1)}$, to predict the value of $y^{(n+1)}$.

Additionally, we divide up supervised learning based on whether:

- the outputs are drawn from a small finite set (classification)

- A classification problem is binary or two-class if $y^{(i)}$ is drawn from a set of two possible values, $y^{(i)} \in {+1,-1}$ .Otherwise, it is called multi-class.

- or the outputs are a large finite or continuous set (regression).

- Regression is similar, except that $y^{(i)} \in \mathbb{R}^k$

Note:

-

In reality, we might be dealing with inputs such as an actual song. So to abstract those entities to vectors, we use something called a feature mapping:

\[x \mapsto \varphi(x) \in \mathbb{R}^d\]where:

- $x$ is your actual entity, such as the actual song

- $\varphi(x)$ is a function that extracts information/feature from the data to a vector

Unsupervised Learning

Unsupervised Learning

- Here, one is given a data set and generally expected to find some patterns or structure inherent in it.

Common examples include:

- Density estimation: Given samples $x^{(1)}, …, x^{(n)} \in \mathbb{R}^D$ drawn IDD from some distribution $\text{Pr}(X)$, and predict the probability $\text{Pr}(x^{(n+1)})$ of an element drawn from the same distribution.

- IID stands for independent and identically distributed, which means that the elements in the set are related in the sense that they all come from the same underlying probability distribution, but not in any other ways.

- Dimensionality Reduction: Given samples $x^{(1)}, …, x^{(n)} \in \mathbb{R}^D$, the problem is to re-represent them as points in a $d$-dimensional space, where $d<D$. The goal is typically to retain information in the data set that will, e.g., allow elements of one class to be discriminated from another.

Reinforcement Learning

Reinforcement Learning

- The goal is to learn a mapping from input values $x$ to output values $y$, but without a direct supervision signal to specify which output values $y$ are best for a particular input.

- In a sense there is no training set specified a priori.

- Example:

- fantastic can playing games, e.g. AlphaGo

This reinforcement is basically similar to the positive/negative reinforcement in psychology, such that the general setting is:

- The agent observes the current state $x^{(0)}$

- The agent selects an action $y^{(0)}$

- The agent receives a reward, $r^{(0)}$, which is based on $x^{(0)}$ and possibly $y^{(0)}$

- The environment transitions probabilistically to a new state, $x^{(1)}$, with a distribution that depends only on $x^{(0)}, y^{(0)}$

- i.e. the set of possible states after your action is a distribution

- The agent observes the current state $x^{(1)}$

- repeats

The goal is to find a policy $\pi$, mapping $x$ to $y$, (that is, states to actions) such that some long-term sum or average of rewards $r$ is maximized.

Sequence Learning

Sequence Learning

- In sequence learning, the goal is to learn a mapping from input sequences $x_0,…,x_n$ to output sequences $y_1,…,y_m$.

- The mapping is typically represented as a state machine:

- with one function $f$ used to compute the next hidden internal state given the input

- and another function $g$ used to compute the output given the current hidden state.

ML Example

Consider an example of predicting whether if a newborn baby in the hospital will have a seizure.

We are provided beforehand with:

-

$n$ training data points

\[\mathcal{D}_n = \{ (x^{(1)}, y^{(1)}), ..., (x^{(n)}, y^{(n)}) \}\]- each data point, $x^{(i)}, i \in {1,…,n}$ is a feature vector, so that $x^{(i)} \in \mathbb{R}^d$

- for example, $x^{(i)}_1$ could represent the amount of oxygen the baby is breathing, and $x^{(i)}_2$ could represent the amount of movement the baby has

- each data point, $x^{(i)}$ has a corresponding label, $y^{(i)} \in {-1,+1}$, so that

- $-1$ means newborn baby $i$ did not have a seizure

- and $+1$ means newborn baby $i$ did have a seizure

- each data point, $x^{(i)}, i \in {1,…,n}$ is a feature vector, so that $x^{(i)} \in \mathbb{R}^d$

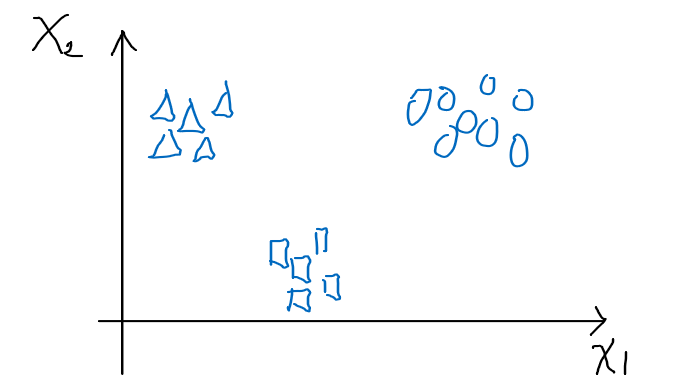

So that we have the following:

- in this simple example, $d=2$

Questions to Answer

- How can we label those points?

- What are good ways to label them?

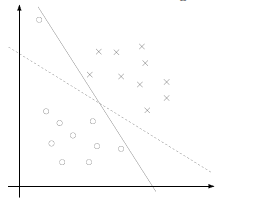

Possible Solutions

-

Labeling Hypothesis: we need a “function” $h$ that does:

\[h: \mathbb{R}^d \mapsto \{-1, +1\}\] -

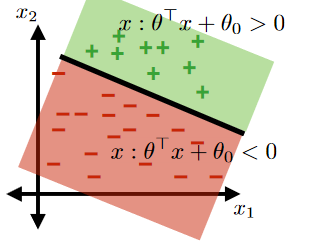

Linear Classifiers is a good set of hypothesis to use.

- e.g. the hypothesis class $\mathcal{H}: \text{set of }h \in \mathcal{H}$ such that:

- labels $+1$ on one side of a line, and $-1$ on the other side of the line

- e.g. the hypothesis class $\mathcal{H}: \text{set of }h \in \mathcal{H}$ such that:

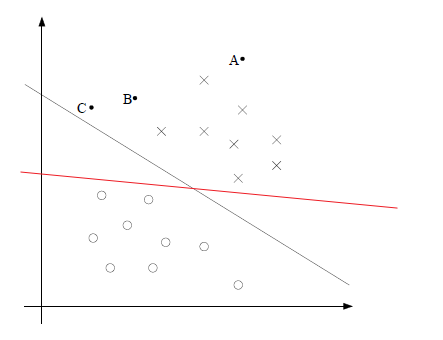

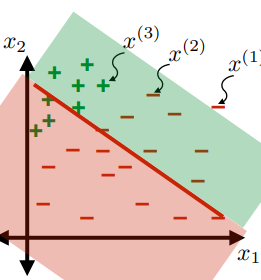

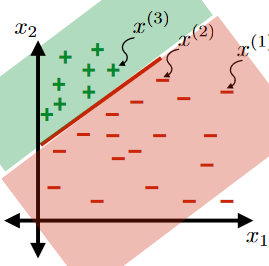

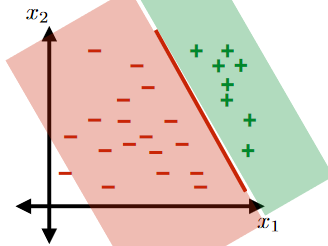

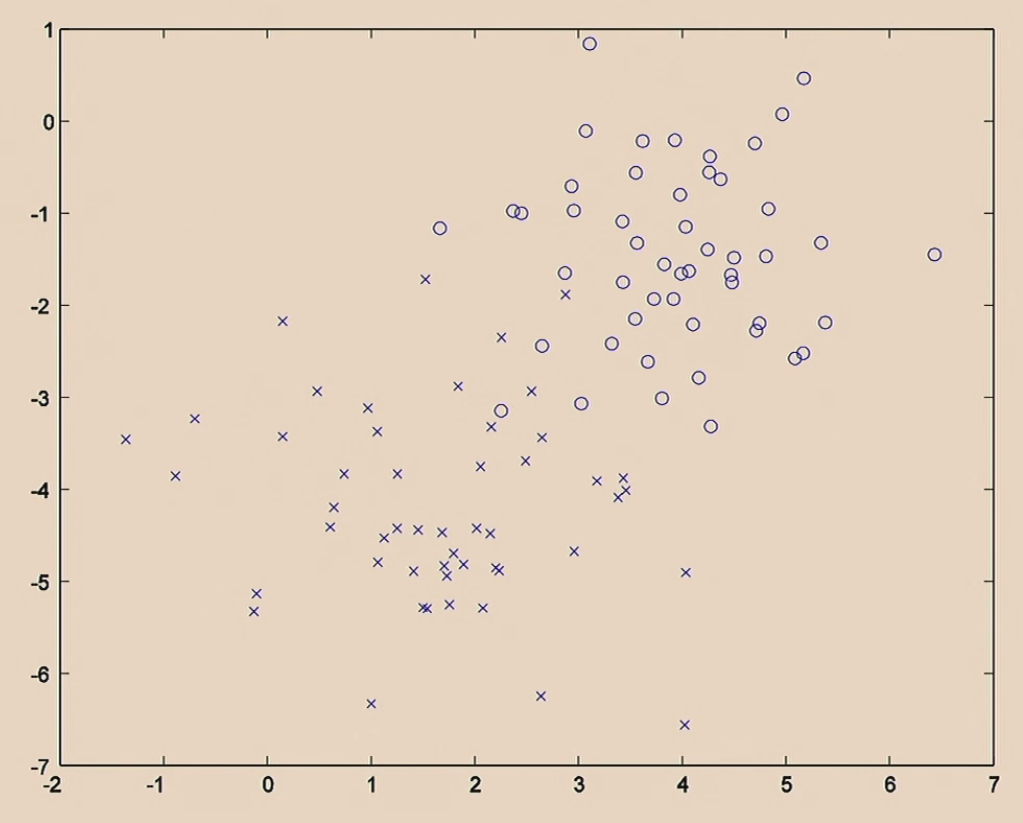

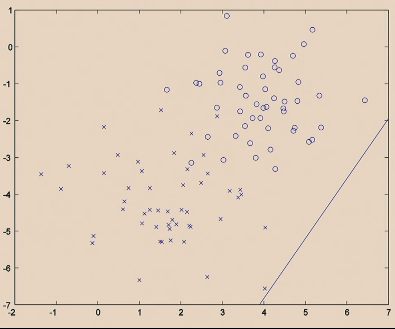

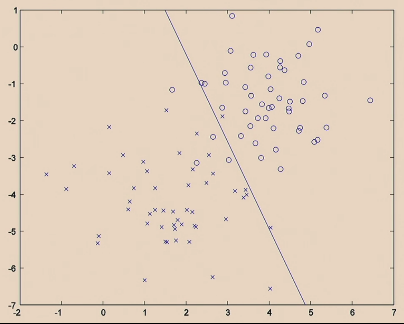

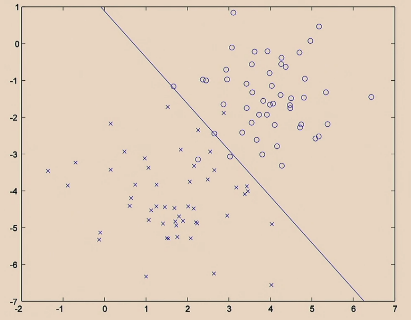

Linear Classifiers

For the same given (training) data:

Consider the hypothesis class $\mathcal{H}= \text{set of }h \in \mathcal{H}$ such that:

- labels $+1$ on one side of a line, and $-1$ on the other side of the line

- below are some hypothesis in this class $\mathcal{H}$

|  |

|  |

| ———————————————————— | ———————————————————— |

|

| ———————————————————— | ———————————————————— |

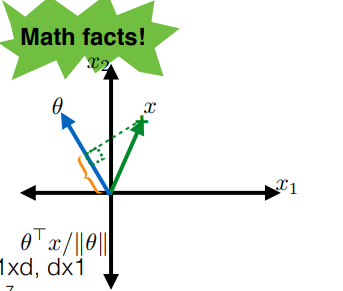

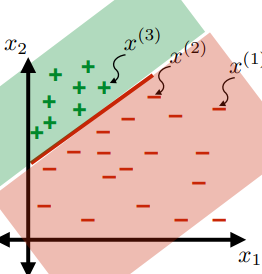

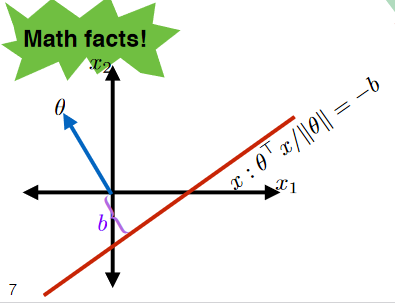

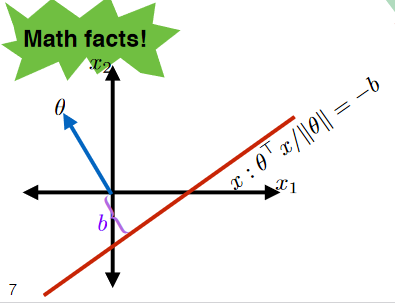

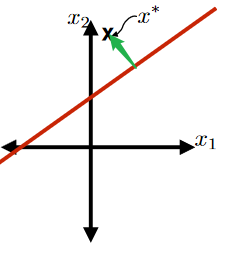

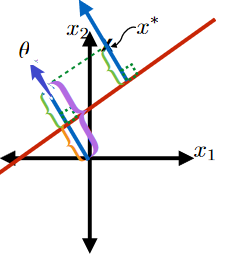

Review

Recall that if we have the two vectors $\theta, x$:

then the dot product:

\[\frac{x \cdot \theta}{||\theta||} = \frac{x^T \theta }{||\theta||}\]represents the projection of $x$ onto $\theta$.

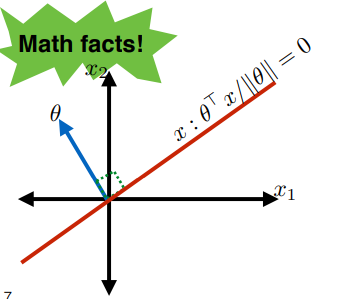

Therefore, an important point is that:

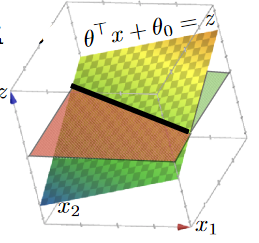

where the red line (set of $x$) can be represented by:

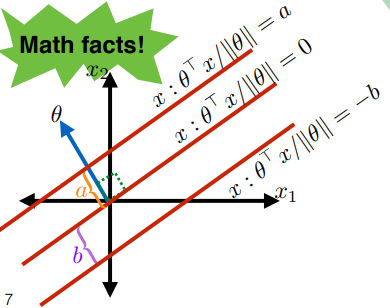

\[x : \frac{\theta \cdot x}{||\theta||} = 0\]and that:

\[\begin{align*} x : \frac{\theta \cdot x}{||\theta||} &= a \\ x : \frac{\theta \cdot x}{||\theta||} &= -b \end{align*}\]defines the following additional two lines:

where the advantage of defining lines here is that:

- all we used are data points

Therefore, we can say that for we can describe any line in 2 dimension as:

\[\begin{align*} \frac{\theta^T x}{||\theta||} &= -b \\ \theta^T x + b ||\theta|| &= 0\\ \theta^T x + \theta_0 &= 0 \end{align*}\]

So the linear classifier can just look at the sign of $x$ relative to the line

\[h(x) = h(x; \theta, \theta_0)=\text{sign}(\theta^T x + \theta_0) = \begin{cases} +1, & \text{if } \theta^T x + \theta_0 > 0\\ -1, & \text{if } \theta^T x + \theta_0 \le 0\\ \end{cases}\]where:

- the equality $\le$ is arbitrary. It could be applied on $>$ alternatively.

- $h(x; \theta, \theta_0)$ means $h$ is a function of input $x$, with parameter $\theta, \theta_0$ that has nothing to do with input

Now, we can define the linear classifier class to be:

\[\mathcal{H} = \{h(x;\theta, \theta_0), \forall \theta, \theta_0\}\]where again the input is $x$

Note:

At this point, all that we have to do is:

- be able to evaluate how good a hypothesis $h$ is

- be able to find the best $h$ in the class

Evaluating a Classifier

Note:

- This discussion applies to the general case of any classifier

To be able to determine if a hypothesis is good or not, we can look at the loss function:

\[L(g,a)\]where:

- $g$ means the our guess from hypothesis

- $a$ means the actual value

- note that it does not depend on input, since this basically just describes how bad it is if we guessed incorrectly

For Example:

Symmetric Loss

\[L(g,a) = \begin{cases} 0, & \text{if }g =a \text{, i.e. guessed correctly}\\ 1, &\text{otherwise} \end{cases}\]however, a better loss might be:

\[L(g,a) = \begin{cases} 0, & \text{if }g =a \text{, i.e. guessed correctly}\\ 1, & \text{if }g=1,a=-1\\ 100, &\text{if }g=-1,a=1 \end{cases}\]where:

- $g=-1,a=1$ represents we predicting newborn NOT having a seizure, but they actually had. Therefore, it is detrimental and weighted more.

Therefore, we can define:

Test Error

For $n’$ points of new data, the test error of a hypothesis $h$ is:

\[\mathcal{E}(h) = \frac{1}{n'} \sum_{i=n+1}^{n+n'}L(h(x^{(i)}), y^{(i)})\]where basically:

- we are averaging over the loss function, and the guess comes from the hypothesis $h$

Training Error

For $n$ points of old/given data, the training error of a hypothesis $h$ is:

\[\mathcal{E}_n(h) = \frac{1}{n} \sum_{i=1}^{n}L(h(x^{(i)}), y^{(i)})\]where basically:

- we are using existing data as input for $h$

Then, we can say I prefer hypothesis $h$ over $\bar{h}$ if (for example):

\[\mathcal{E}_n(h) < \mathcal{E}_n(\bar{h})\]i.e. $h$ has a smaller training error (common) than $\bar{h}$

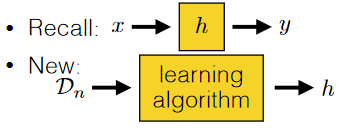

Picking a Classifier

Obviously we cannot just try out every classifier and compute the $\mathcal{E}$. Therefore, we think about

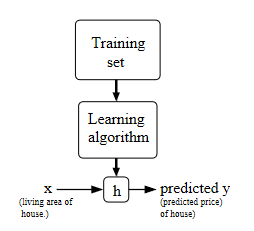

So in general, this is what is happening:

where:

- the output of your algorithm is a hypothesis, which is basically a function that can give you predicted output based on some input

where we can use a learning algorithm to spit out a *good* classifier by:

-

taking in an entire dataset $\mathcal{D_n}$

e.g.

|

|

|  |

| :———————————————————-: | :———————————————————-: |

|

| :———————————————————-: | :———————————————————-: |

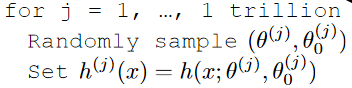

For Example

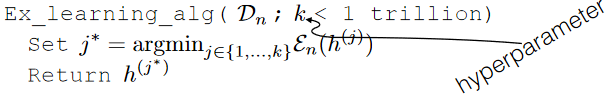

Your hypothesis class is generated by your friend to be:

where basically you get a trillion hypothesis.

A simple learning algorithm would be:

where:

- $k$ is a hyperparameter, which does not change the logic of algorithm at all. It is just a parameter we can choose as programmer

- we basically found $h^{(j^*)}$ such that it has the smallest training error among $k$ classifiers

Note:

- notice that it means $\text{Ex_learning_alg}(\mathcal{D}_n;2)$ must output an algorithm that is at least as good as $\text{Ex_learning_alg}(\mathcal{D}_n;1)$.

- this means that as $k$ increases, we will get better classifiers

In practice, if we took:

\[L(g,a) = \begin{cases} 0, & \text{if }g =a \text{, i.e. guessed correctly}\\ 1, &\text{otherwise} \end{cases}\]and that we use training error to decide upon the better hypothesis.

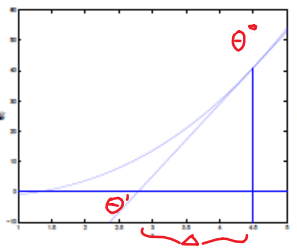

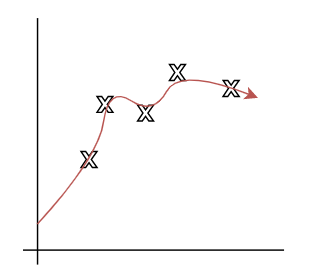

Then the process of $\text{Ex_learning_alg}(\mathcal{D}_n;k)$ looks like:

-

On the first run with $h^{1}$

where the top graph has:

- a dotted line indicating our hypothesis $h^{(1)}$

- current training error set to $1$ which is the maximum

- $h^{(1)}$ is having a training error of $0.47$

the bottom graph is the plot of training errors with respect to $k$-th hypothesis

- therefore $j^*=1$ currently

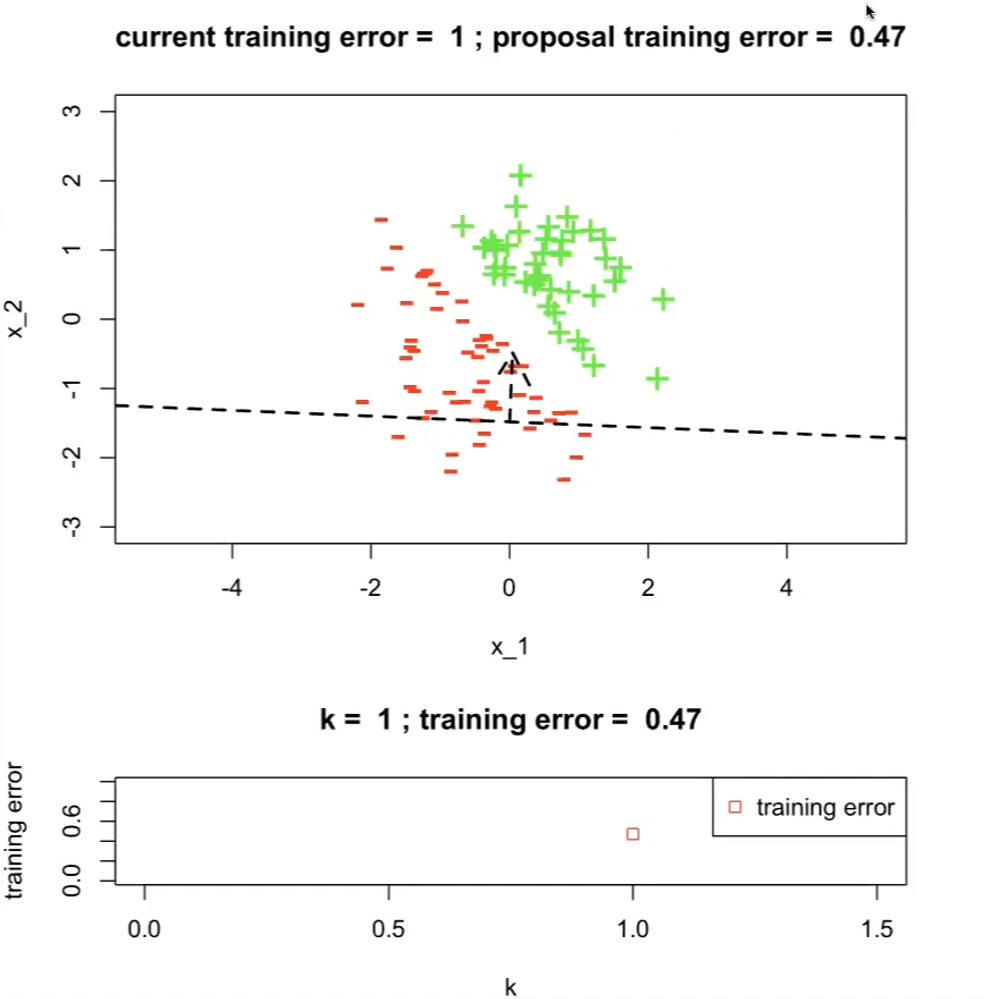

-

The next proposal/hypothesis $h^{(2)}$ has a better training error:

now $j^*=2$

-

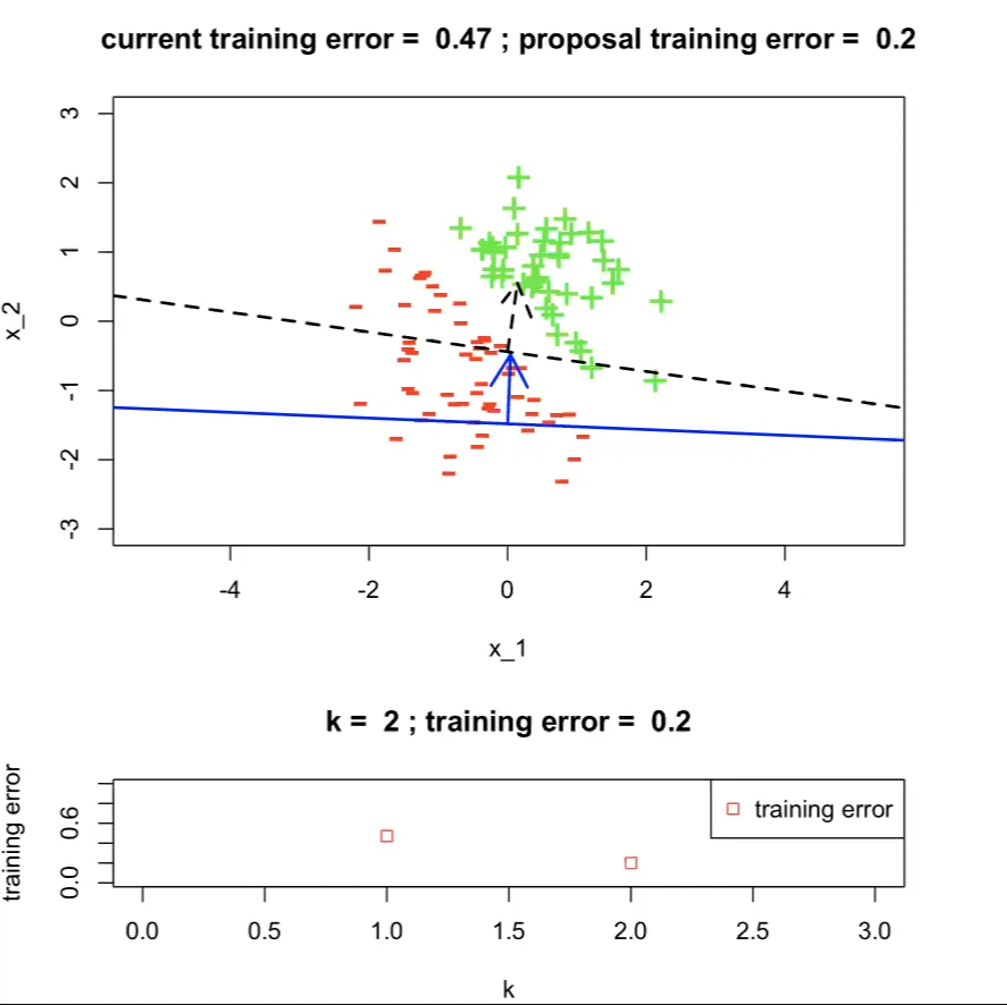

….

-

Over 413 runs, we get up to $0.01$ for error. But obviously there is one that would give $\mathcal{E}(h)=0$

Therefore, we kind of need a better learning algorithm

Machine Learning Techniques

The examples in the last lecture talks about problem of classification, to be specific, a binary classification.

classDiagram

Supervised_Learning <|-- Regression

Supervised_Learning <|-- Classification

Unsupervised_Learning <|-- _

Classification <|-- Binary_Classification

Classification <|-- Multi_Class_Classification

Classification:

Learning an input mapping to a discrete set

\[\mathbb{R}^d \mapsto \{...\}\]where:

- input is $\mathbb{R}^d$

- output is ${…}$

In specific, what we have met is the binary classification:

Binary Classification

Learning an input mapping to a discrete set of two values

\[\mathbb{R}^d \mapsto \{-1,+1\}\]for example, we can have a linear classifier (i.e. a hyperplane) to solve such problem

Multi-class Classification

Learning an input mapping to a discrete set of more than two values

e.g.

usually in this case, a linear classifier would not work properly

In other cases, we might want get some continuous output. For example, the real-time temperature in a room. Then we usually use regression

Regression

Learning an input mapping to continuous values

\[\mathbb{R}^d \mapsto \mathbb{R}^k\]e.g.

Lastly, unsupervised learning talks about:

Unsupervised Learning

- No labels for input. The aim is to find patterns in a given dataset.

General Steps for ML Analysis

In general, you would have needed to:

-

Establish a goal & find data

- Example goal: diagnose whether people have heart disease based on their available information

-

Encode data in useful form for the ML algorithm

- some sort of transformation (i.e. feature function) to the dataset

-

Run the ML algorithm & return a classifier (i.e. a function/hypothesis $h$)

-

if the problem is supervised learning

-

Example algorithms include perceptron algorithm, average perceptron algorithm, etc.

-

-

Interpretation and Evaluation

Feature:

A function that takes the input/raw data and converts it to some ML usable form for later processing

\[x \mapsto \phi(x)\]for example, one hot encoding (see section below)

Note:

- sometimes, feature functions $\phi(x)$ are just referred to as features $\phi$, since it is usually the resulting value/vector that we care about in ML

- Those feature functions does not have to be reversible

Example

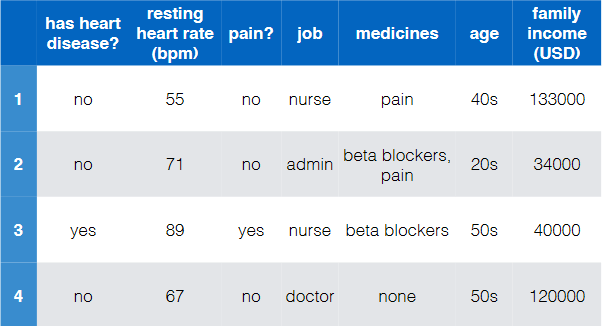

Consider the task of diagnose whether people have heart disease based on their available information

- First, need goal & data

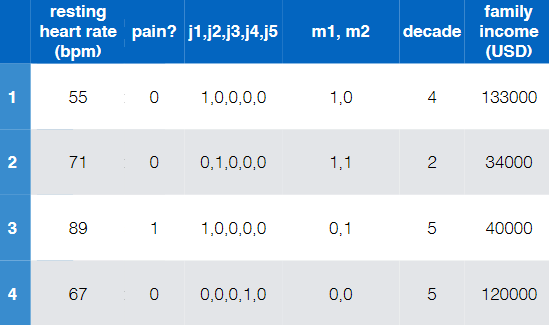

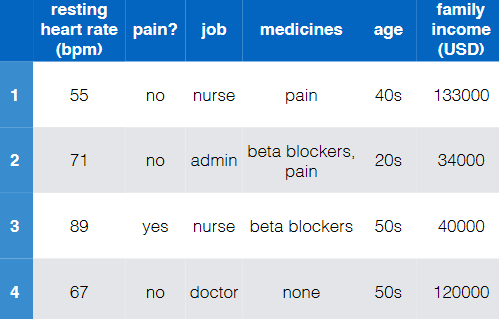

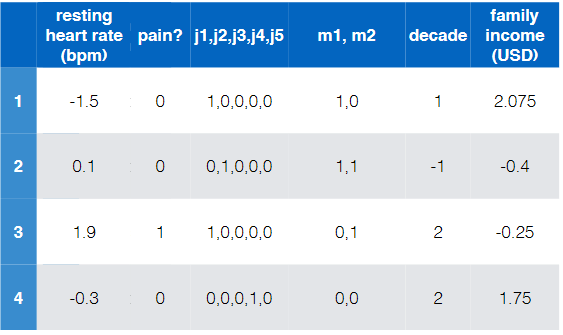

e.g.

where notice that:

- there are of course more data to these four

- this is labelled and is binary (has heart disease?)

- so this is a binary/two-class classification

- the input features are basically all the rest

Note that:

- Even if we have the input features ready, we need to find some way to convert string (non-numeric) information to numbers. Otherwise we cannot use our established models

-

Encode data in a usable form

-

We can easily convert the labels=”has heart disease” to:

\[\{\text{'yes'}, \text{'no'}\} \iff \{ +1,-1 \}\] -

The data/inputs needs some work. We need to use some feature $\phi$ that:

\[x \mapsto \phi(x)\]where:

- old/raw features is $x$

- new feature for ML is $\phi(x)$

e.g.

-

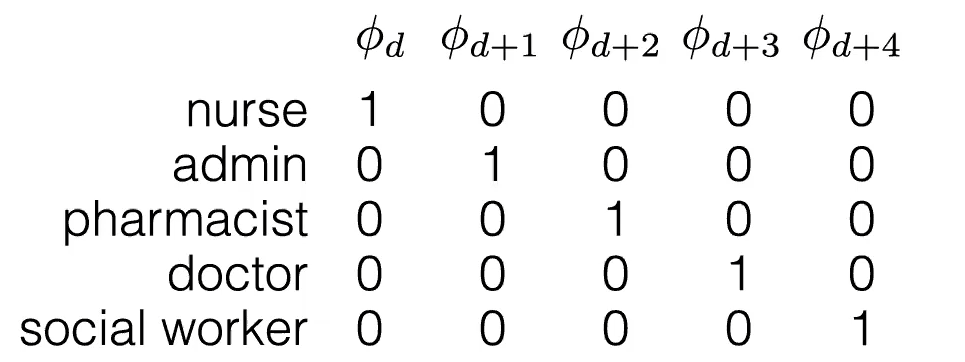

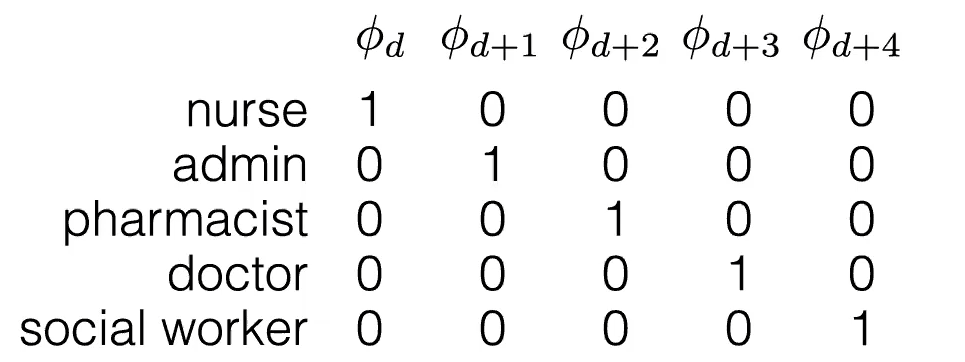

some interesting encoding to see would be:

where:

- this is also called a one hot encoding

-

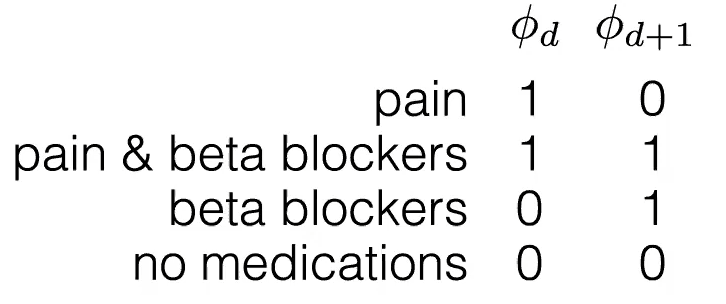

and factored encoding (since there are overlaps):

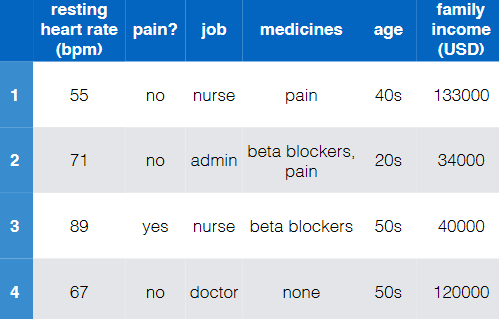

Overall:

$x$, old data/feature $\phi(x)$, new data/feature

-

Note

-

In step 2, some other ideas could be:

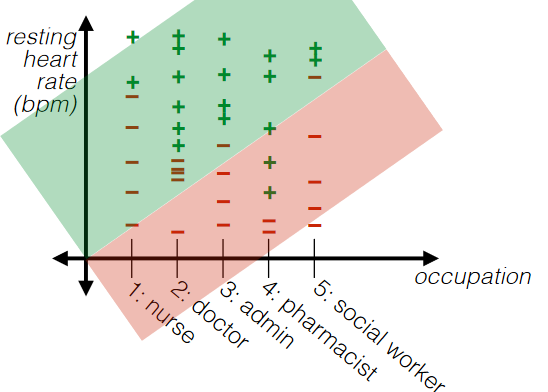

For categorial jobs:

-

if we turned categorial information into some unique natural numbers:

then we are imposing some sort of ORDERINGS to the categories. This sometimes is not a good idea.

-

A good idea is to turn each category into a unique feature. For example:

then the advantage is that you can always "separate/group" those categories as they are always “apart”

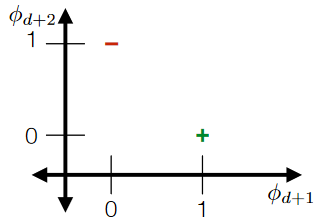

For overlapping “categories”:

-

Notice that since there are overlaps, something like this would be nice:

such that it should still be separable (think about cases when it is not):

Note

- this is strictly different from a binary encoding, because we kind of chose some useful orderings/groupings to show those overlaps.

-

Encode Ordinal Data

In general, you would meet three kinds of data to go through your feature (function):

-

Numerical Data: order on data values, and differences in value are meaningful

- e.g. heart rate = 56, 67, 60, etc

- e.g. filtering useless ones; standardization

-

Categorical Data: no order information

- e.g. job = doctor, nurse, pharmacist

- e.g. one hot encoding

-

Ordinal Data: order on data values, but differences not meaningful without some processing

-

e.g. Likert Scale

is the difference between each state exactly the same?

-

For Example

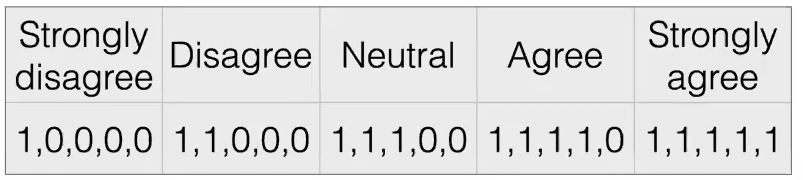

Unary/Thermometer Code

| $x$ | $\phi(x)$ |

|---|---|

|

|

where notice that the advantage is:

- order information still exists

- difference information is “removed”

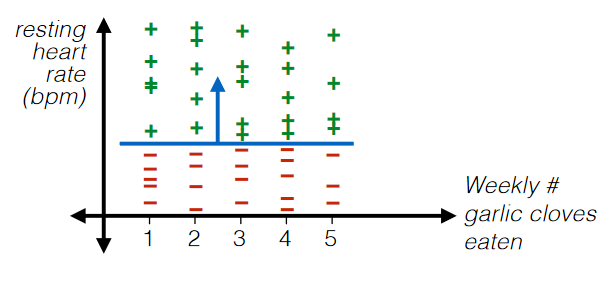

For Example: Filtering

Even numerical data could be useless. Consider

where:

- in this case, “Weekly # Garlic Clove Eaten” is useless

- maybe ask some experts on this. This might be important unless you are sure.

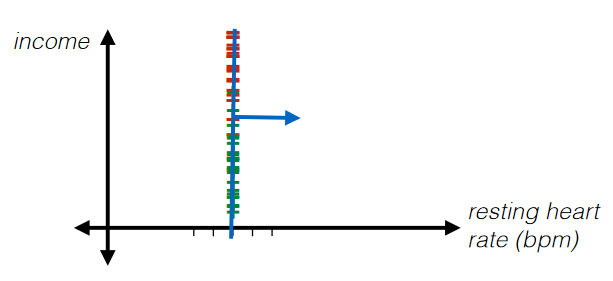

For Example: Standardization

| $x$ | $\phi(x)$ |

|---|---|

|

|

where basically each data point has gone through:

Standardization

Given some data point $x^{(d)} \in \mathcal{D}_n$:

\[\phi_d^{(k)} = \frac{x_d^{(k)}-\text{mean}_d}{\text{stddev}_d}\]where:

- doing $\frac{1}{\text{stddev}_d}$ basically zooms in the data

where the advantage of this is that:

- such classifier would be independent of the scale of axis that you chose to plot at

- also, it would sometimes be easier to interpret the result/classifier

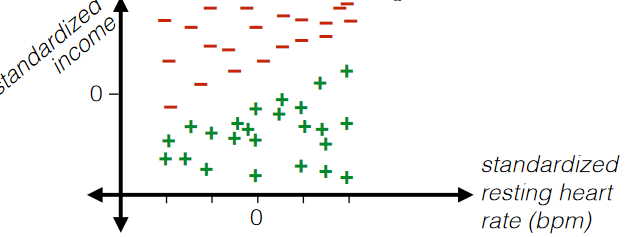

Therefore, we could further encode the example in the previous lecture to be:

| $x$ | $\phi(x)$ |

|---|---|

|

|

where notice that:

- “resting heart rate” and “family income (USD)” has now been standardized

Nonlinear Boundaries

Review

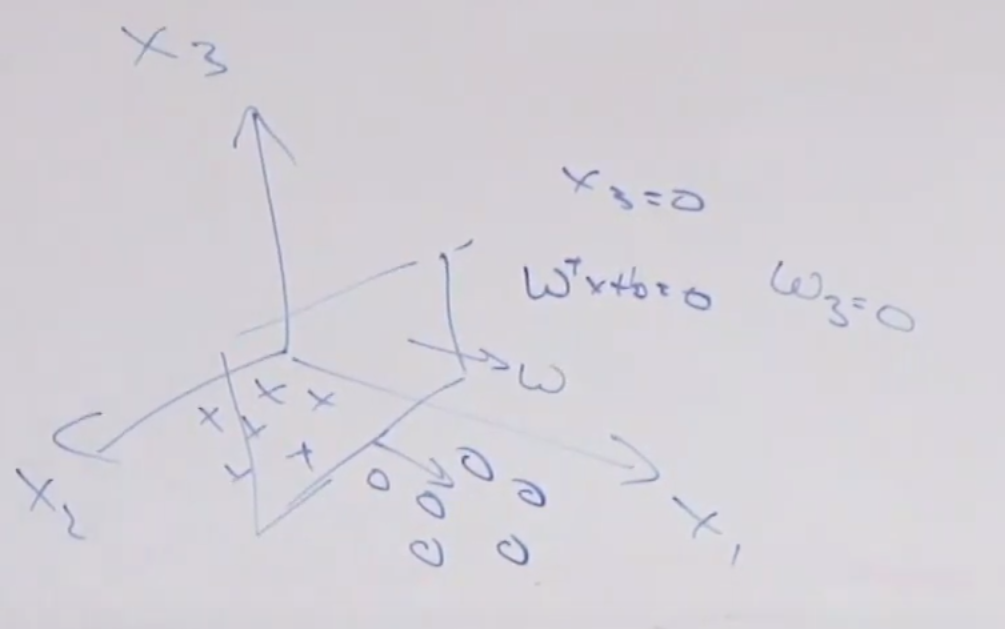

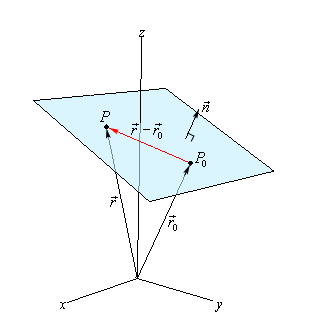

-

Recall that a plane can be defined a point $\vec{r}_0$ in the plane AND its normal vector $\vec{n}$:

\[\begin{align*} \vec{n} \cdot (\vec{r}-\vec{r}_0) &= 0 \\ \vec{n} \cdot \vec{r} &= \vec{n} \cdot \vec{r}_0 \end{align*}\]

where:

- $\vec{r}$ basically is “input”

- $\vec{n}\cdot \vec{r}_0$ basically is a constant

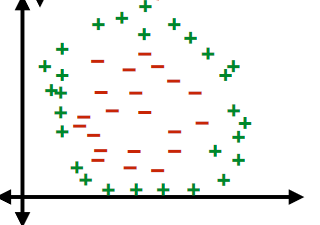

Therefore, consider classifying:

where now your “hyperplane classifier” is:

- $\theta^T x + \theta_0 = 0$, $x \in \mathbb{R}^2$

By adding a new dimension $z$, and then you have

now your “hyperplane classifier” becomes:

- $\theta^T x + \theta_0 = z$, $x \in \mathbb{R}^2$

An advantage of this idea is to classify:

| dataset | hyperplane/classifier: $f(x)=z,x\in \mathbb{R}^2$ |

|---|---|

|

|

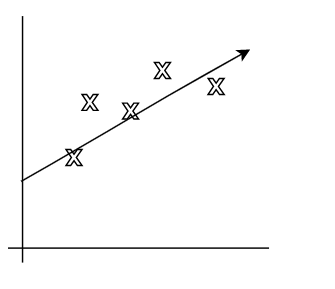

Linear Regression

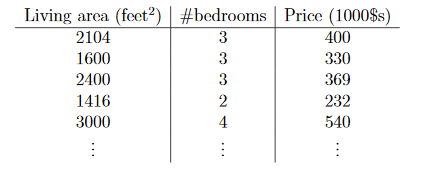

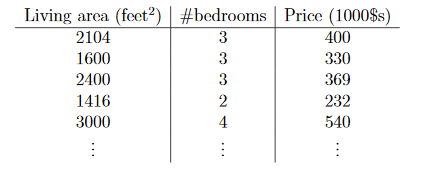

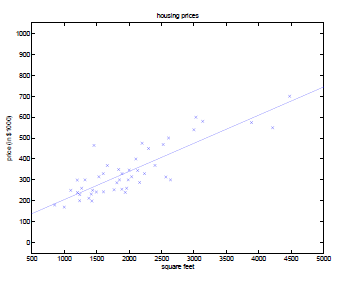

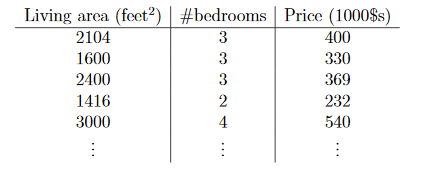

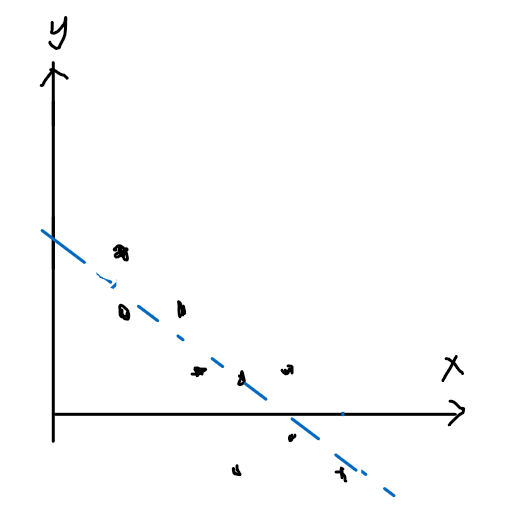

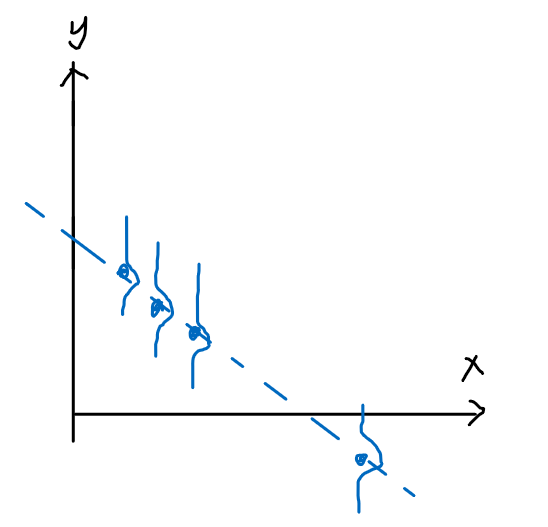

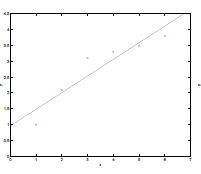

Consider the following data:

where:

- $x^{(i)}_1$is the living area of the $i$-th house in the training set, and $x^{(i)}_2$ is its number of bedrooms.

- $y$ is a continuous (assuming continuous price) output

- since output/labels are given, this is supervised learning

Then, an example would be to use linear regression

\[h(x;\theta)=h_\theta(x) = \theta_0 + \theta_1 x_1 + \theta_2 x_2\]Alternatively, letting $x_0\equiv 1$, we can have a compacter form:

\[h(x) = \sum_{i=0}^n \theta_i x_i = \theta^T x\]where now we basically have:

-

input/feature $x=\begin{bmatrix} 1 \x_1 \x_2 \ \end{bmatrix}=\begin{bmatrix} x_0 \x_1 \x_2 \ \end{bmatrix}$

-

parameter $\theta = \begin{bmatrix} \theta_0 \\theta_1 \\theta_2 \ \end{bmatrix}$ would be the job of Learning Algorithm to choose

Therefore, the task of Learning Algorithm is to decide $\theta$ such that $h(x) \approx y$ for $x \in \mathcal{D}_m$ being the training dataset consisting of $m$ data pairs.

Some sensible idea would be to minimize the square of the error:

- Gradient Descent Algorithm

- Normal Equation

LMS Algorithm

The aim of Learning Algorithm is to decide $\theta$ such that $h(x) \approx y$ for $x \in \mathcal{D}_m$ being the training dataset consisting of $m$ data pairs.

LMS Algorithm

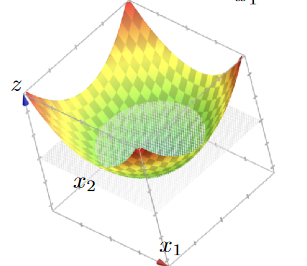

We first define the cost function $J$ to be:

\[J(\theta) = \frac{1}{2} \sum_{i=1}^{m}\left( h_\theta(x^{(i)})-y^{(i)} \right)^2\]where:

- the $\frac{1}{2}$ there is just so that taking derivatives later would look nicer

Therefore, now the aim is to choose $\theta$ to minimize $J(\theta)$

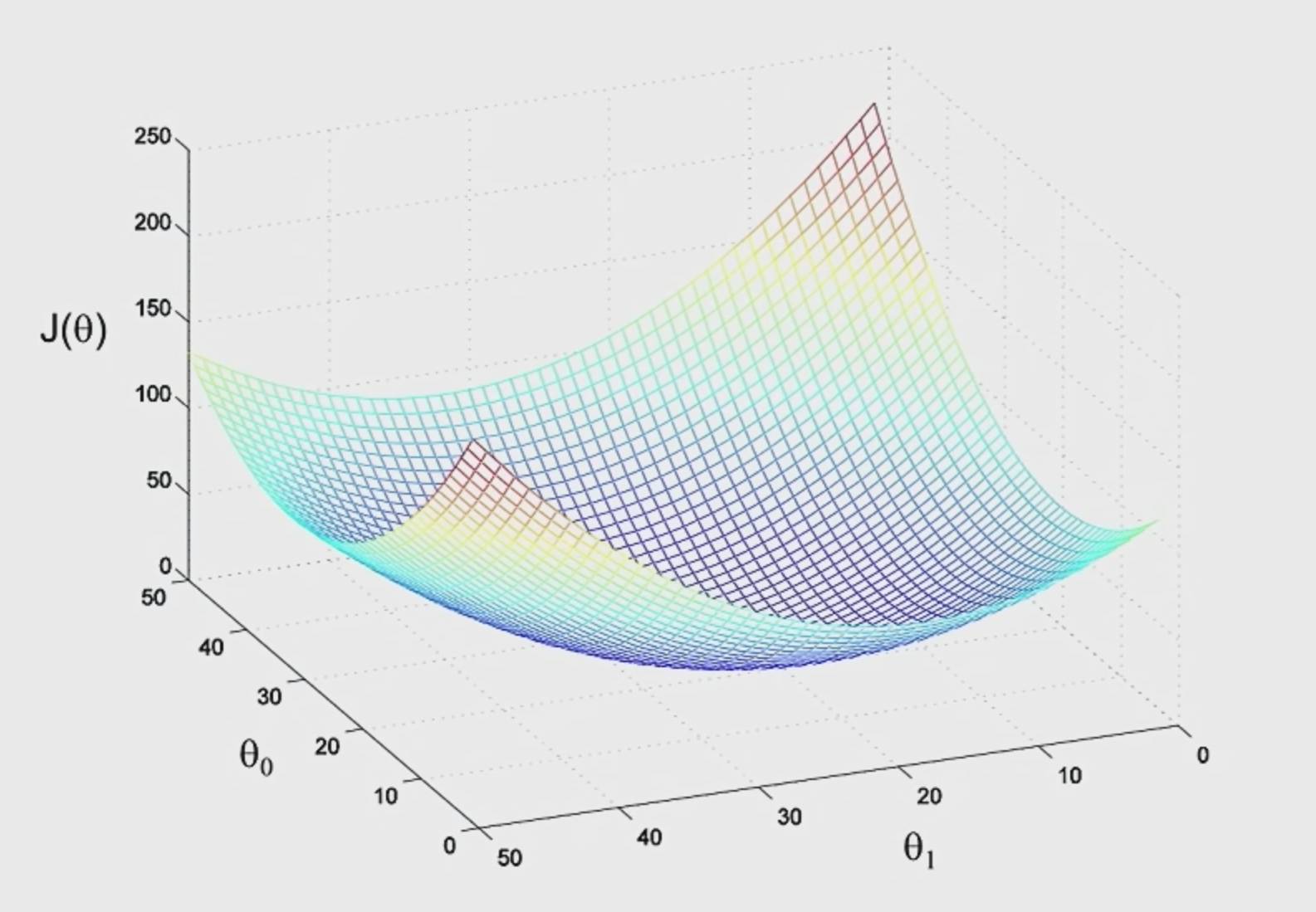

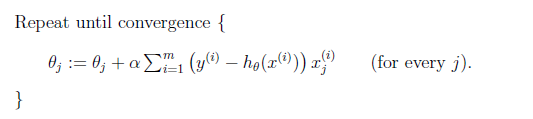

Batch Gradient Descent Algorithm

To achieve this, one way is to use (Batch) Gradient Descent Algorithm.

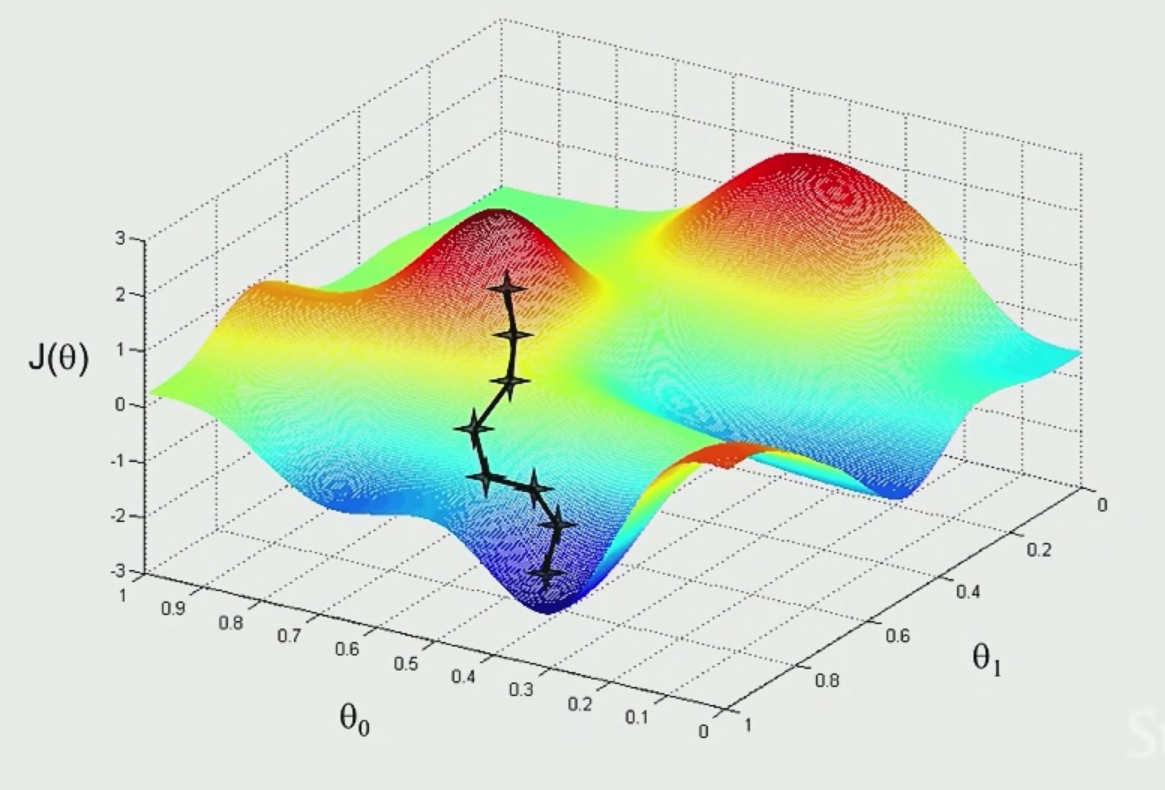

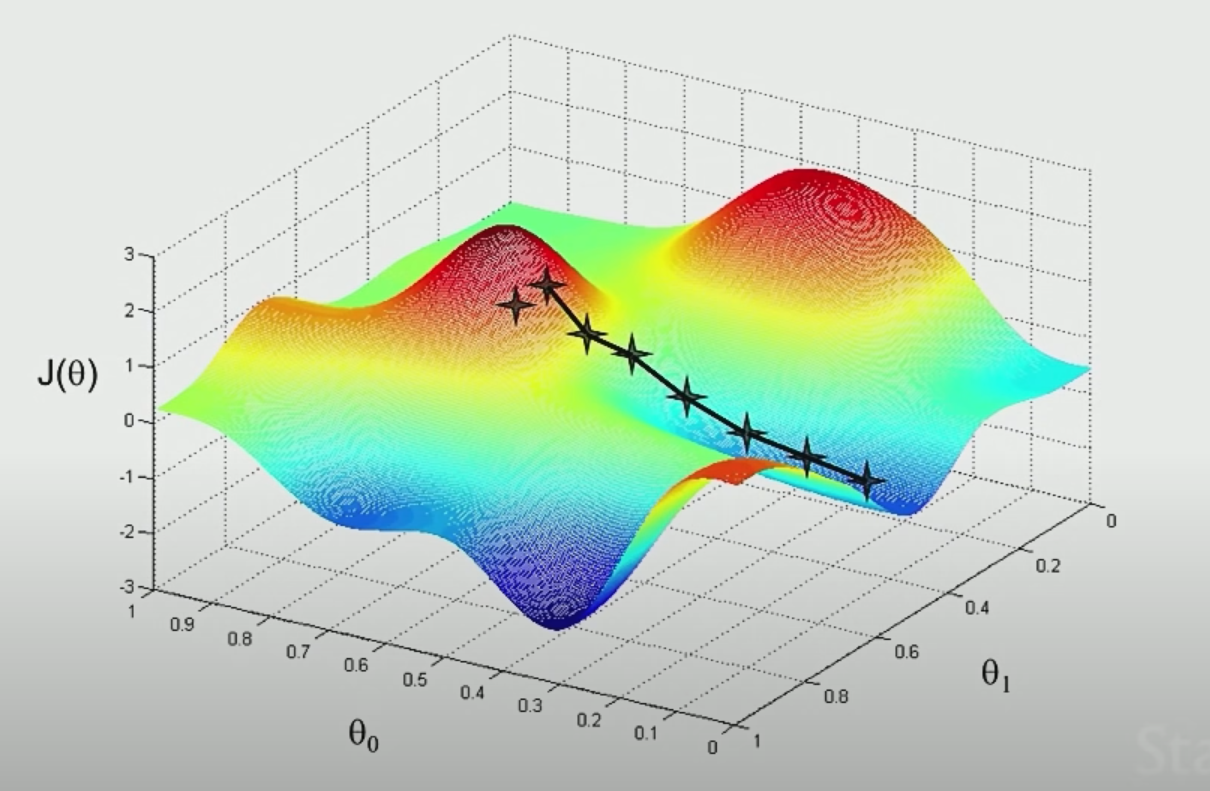

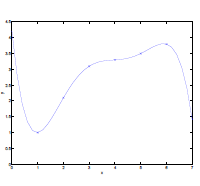

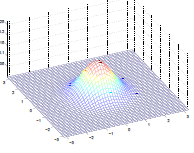

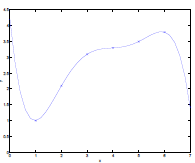

The idea is that if we plot $\theta_1,\theta_2$ and $J$ (not the $J$ defined above, which should be a convex function), we see something like:

|  |

|  |

| :———————————————————-: | :———————————————————-: |

|

| :———————————————————-: | :———————————————————-: |

where the aim is to find the minima point:

- pick some point to start with

- for each point, decide where to move down next based on gradient vector

- move in that direction and repeat step 2

However, notice that the final local minima sometimes depends on where you start with.

Gradient Descent Algorithm

Basically start with some initial $\theta=\begin{bmatrix}\theta_0 \ \theta_1 \ …\end{bmatrix}$, and then repeatedly move, $\forall j$:

\[\begin{align*} \theta_j &:=\theta_j - \alpha \frac{\partial}{\partial \theta_j} J(\theta) \\ &=\theta_j - \alpha \sum_{i=1}^m \left[ \left(h_\theta(x^{(i)})-y^{(i)}\right)x_j^{(i)} \right]\\ \end{align*}\]where:

- $:=$ is the assignment, which takes value from the right and assign it to the left

- $\alpha$ is called the learning rate, usually set to $0.01$ and manipulate around

- the $-\alpha$ indicates that we are moving DOWN the slope to minima.

- This method looks at every data point in the entire training set for each step (of computing the next $\theta_j$ value), and is called batch gradient descent (i.e. so a batch here would mean the entire dataset $\mathcal{D}_m$).

Then you repeat until convergence

notice that the definition of $J$ would cause our function to only have one global minimum. Therefore with appropriate $\alpha$, you should always get your data to converge

\[J(\theta) = \frac{1}{2} \sum_{i=1}^{m}\left( h_\theta(x^{(i)})-y^{(i)} \right)^2\]

- note that this is a convex function. This is because the sum of convex function $\left( h_\theta(x^{(i)})-y^{(i)} \right)^2$ is still a convex function. (for a simple case, consider the sum of quadratics still being a quadratic)

Therefore $J$ typically looks like:

So in summary, this algorithm does:

Note:

-

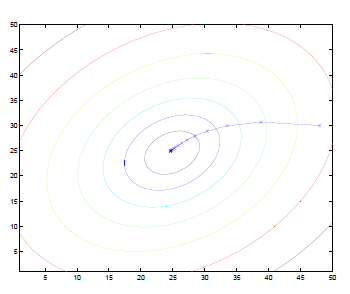

If we plot the contour, then the gradient will always look like:

where:

- basically we are moving perpendicular (gradient) to the contours

-

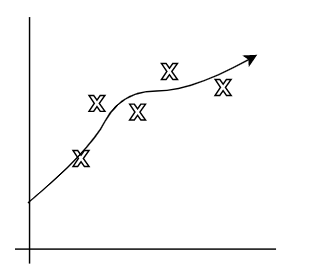

This also implies some choice of $\alpha$

- if you choose your $\alpha$ to be too large, then the last steps might overshoot pass the minima

- if you choose your $\alpha$ to be too small, then you need a lot of iterations to get to the minima

Proof

Consider the case when there is only one data point in $\mathcal{D}_m$, then:

\[\begin{align*} \theta_j &:=\theta_j - \alpha \frac{\partial}{\partial \theta_j} J(\theta) \\ &:=\theta_j - \alpha \frac{\partial}{\partial \theta_j} \left( \frac{1}{2} (h_\theta(x) - y)^2\right)\\ &:=\theta_j - \alpha (h_\theta (x) - y)\frac{\partial}{\partial\theta_j}(h_\theta(x)-y)\\ &:=\theta_j - \alpha (h_\theta (x) - y)\frac{\partial}{\partial\theta_j}(\theta^Tx-y)\\ &:=\theta_j - \alpha (h_\theta (x) - y)\frac{\partial}{\partial\theta_j}\left[ (\theta_0 x_0 + \theta_1x_1+...) -y\right]\\ &:=\theta_j - \alpha (h_\theta (x) - y)x_j \end{align*}\]Now, if you take all the data points, recall that:

\[\begin{align*} \frac{\partial}{\partial \theta_j} \left( \sum_{i=1}^m \frac{1}{2} (h_\theta(x^{(i)}) - y^{(i)})^2\right) &=\sum_{i=1}^m\frac{\partial}{\partial \theta_j} \left( \frac{1}{2} (h_\theta(x^{(i)}) - y^{(i)})^2\right)\\ &=\sum_{i=1}^m \left[ \left(h_\theta(x^{(i)})-y^{(i)}\right)x_j^{(i)} \right]\\ \end{align*}\]Therefore you get:

\[\begin{align*} \theta_j &:=\theta_j - \alpha \frac{\partial}{\partial \theta_j} J(\theta) \\ &=\theta_j - \alpha \sum_{i=1}^m \left[ \left(h_\theta(x^{(i)})-y^{(i)}\right)x_j^{(i)} \right]\\ \end{align*}\]In the example of:

The gradient descent algorithm will result in a $\theta$ such that our line looks like:

Problem of Gradient Descent

- This method of Gradient Descent looks at every data point in the entire training set for each step (i.e. so a batch here would mean the entire dataset $\mathcal{D}_m$). This means we for each step, we need to compute for all data points, which is very expensive if there are a lot of data points.

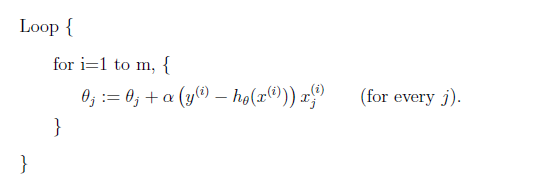

Stochastic Gradient Descent Algorithm

Stochastic Gradient Descent Algorithm

Instead of making one update for every scan through dataset, we can make one update per data point. Therefore, the algorithm looks like:

where:

- in practice, this does make you move faster towards the minima

However, the upshot of this is that it may never converge, since you are now only updating per each data point

- this could be “minimized” by continuously decreasing the learning rate $\alpha$, so that the oscillation would be slower

The Normal Equation

Gradient descent gives one way of minimizing $J$. A second way of doing so is to perform the minimization explicitly and without resorting to an iterative algorithm.

- therefore, this is much faster than the algorithms above

- but this works iff there is a solution

Recall/Notations

-

if you have $J(\theta)$, where input $\theta \in \mathbb{R}^{m+1}$, then, if for example $m=2$, we have:

\[\nabla_\theta J(\theta) = \begin{bmatrix} \frac{\partial }{\partial \theta_1}J\\ \frac{\partial }{\partial \theta_2}J\\ \frac{\partial }{\partial \theta_3}J \end{bmatrix}\] -

now, you can also have input as matrix, e.g. input being $A \in \mathbb{R}^{2 \times 2}$ sand that a function $f$ maps $f:\mathbb{R}^{2\times 2} \to \mathbb{R}$

\[\nabla_A f(A) = \begin{bmatrix} \frac{\partial f}{\partial A_{11}} & \frac{\partial f}{\partial A_{12}}\\ \frac{\partial f}{\partial A_{21}} & \frac{\partial f}{\partial A_{22}} \end{bmatrix}\] -

If we have a square matrix $A \in \mathbb{R}^{n \times n}$, then the trace of $A$ $\text{tr}(A)$ is:

\[\text{tr}(A) = \sum_{i=1}^n A_{ii}\]some common properties:

-

$\text{tr}ABC=\text{tr}CAB=\text{tr}BCA$

-

$\nabla_A \text{tr}AB = B^T$

-

$\nabla_{A^T}f(A) = (\nabla_A f(A))^T$

-

$\nabla_A \text{tr} AA^TC = CA+C^TA$

-

$\nabla_A \text{tr}ABA^TC = CAB + C^TAB^T$

- from 2 and 3 above

- $\nabla_A\vert A\vert = \vert A\vert (A^{-1})^T$

-

The aim is to achieve:

\[\nabla_\theta J(\theta) = 0\]but:

- computing it directly might be expensive since $J(\theta)$ needs to look through all data

Then consider the design matrix, which is $X$ by stacking input data point $x^{(i)} \in \mathbb{R}^{n+1}$ to be:

\[X = \begin{bmatrix} -(x^{(1)})^T- \\ -(x^{(2)})^T-\\ \vdots \\ -(x^{(m)})^T- \end{bmatrix}\]where:

- $X \in \mathbb{R}^{m \times (n+1)}$

Similarly, define the labels to be:

\[\vec{y} = \begin{bmatrix} y^{(1)} \\ y^{(2)}\\ \vdots \\ y^{(m)} \end{bmatrix}\]Therefore, we have:

\[\begin{align*} X\theta &=\begin{bmatrix} -(x^{(1)})^T- \\ -(x^{(2)})^T-\\ \vdots \\ -(x^{(m)})^T- \end{bmatrix}\begin{bmatrix} \theta_1 \\ \theta_2\\ \vdots \\ \theta_n \end{bmatrix} = \begin{bmatrix} (x^{(1)})^T \theta \\ (x^{(2)})^T \theta \\ \vdots\\ (x^{(m)})^T \theta \end{bmatrix} = \begin{bmatrix} h_\theta(x^{(1)})\\ h_\theta(x^{(2)}) \\ \vdots\\ h_\theta(x^{(m)}) \end{bmatrix}\\ \end{align*}\]And more importantly:

\[\begin{align*} J(\theta) &= \frac{1}{2} \sum_{i=1}^m (h_\theta(x^{(i)})-y^{(i)})^2 \\ &= \frac{1}{2} (X\theta -\vec{y})^T(X\theta -\vec{y}) \end{align*}\]Normal Equation

Therefore, since we are finding the minimum point, we basically just do:

\[\begin{align*} \nabla_\theta J(\theta) &= 0\\ X^T (X\theta - \vec{y}) &= 0 \end{align*}\]where:

- $X^T (X\theta - \vec{y}) = 0$ is the also called the normal equation

The solution $\theta$ therefore satisfies:

\[\theta = (X^TX)^{-1}X^T \vec{y}\]

Proof

we already know that:

\[\begin{align*} J(\theta) &= \frac{1}{2} \sum_{i=1}^m (h_\theta(x^{(i)})-y^{(i)})^2 \\ &= \frac{1}{2} (X\theta -\vec{y})^T(X\theta -\vec{y}) \end{align*}\]Then:

\[\begin{align*} \nabla_\theta J(\theta) &= \nabla_\theta\frac{1}{2} (X\theta -\vec{y})^T(X\theta -\vec{y}) \\ &= \frac{1}{2}\nabla_\theta (\theta^TX^T -\vec{y}^T)(X\theta -\vec{y}) \\ &= \frac{1}{2}\nabla_\theta (\theta^TX^T X\theta -\vec{y}^T X\theta -\theta^TX^T \vec{y} + \vec{y}^T\vec{y}) \\ &= \frac{1}{2}\nabla_\theta \text{tr}(\theta^TX^T X\theta -\vec{y}^T X\theta -\theta^TX^T \vec{y} + \vec{y}^T\vec{y}) \\ &= \frac{1}{2}\nabla_\theta (\text{tr }\theta^TX^T X\theta -2\text{tr }\vec{y}^TX\theta) \\ &= \frac{1}{2}\left( X^TX\theta + X^TX\theta -2X^T \vec{y} \right)\\ &= X^TX \theta - X^T \vec{y} \end{align*}\]Therefore, setting it to zero gives:

\[\begin{align*} \nabla_\theta J(\theta) = X^TX \theta - X^T \vec{y} &= 0\\ X^T (X\theta - \vec{y}) &= 0 \end{align*}\]Therefore, the optimum value for $\theta$ becomes:

\[\begin{align*} X^T X\theta &= X^T \vec{y}\\ \theta &= (X^TX)^{-1}X^T \vec{y} \end{align*}\]Nonlinear Fits

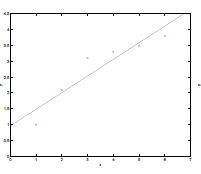

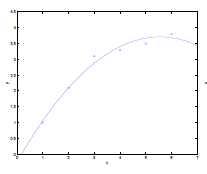

Now suppose you want to fit some of your data in a nonlinear form.

Or even:

\[\theta_0 + \theta_1 x^{(i)}_1 + \theta_2 \sqrt{x^{(i)}_2} + \theta_3 \log x^{(i)}_3\]What you can do is simply:

- let $x^{(i)}_2 := \sqrt{x^{(i)}_2}$, let $x^{(i)}_3 := \log x^{(i)}_3$

- then you are backed to a linear regression problem, and can apply the same techniques as above

Problem

- the problem with this is that you need to know which equation in advance. e.g. are you sure it is square root for a feature $x^{(i)}_2$? Logarithmic for $x^{(i)}_3$? etc.

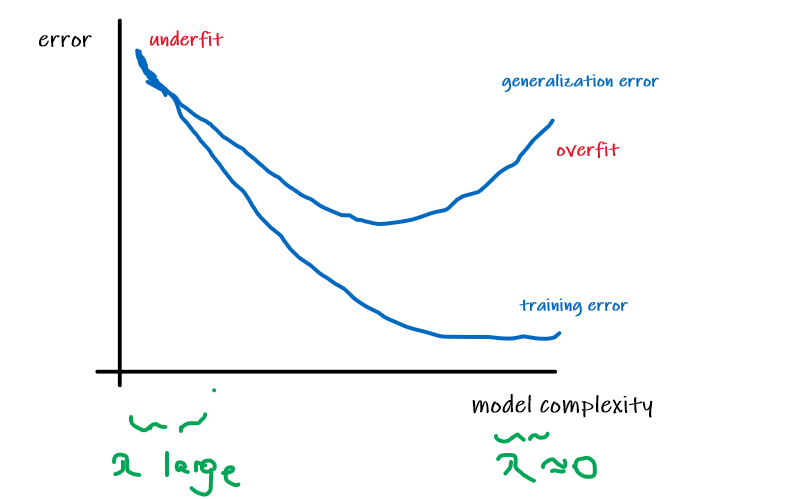

Overfitting and Underfitting

The idea for both is common but straight-forward

- Underfitting: model has not captured the structure for data in the training set

- Overfitting: model imitated the data but NOT the structure. i.e. the data is distributed in $x^2$, but $x^3$, or $x^4$ is used.

For Example:

Consider the data being actually distributed in $x \mapsto O(x^2)$, such that we have:

| Underfitting | Actual | Overfitting |

|---|---|---|

|

|

|

where:

- first figure is underfitted as we only imagined $y = \theta_0 + \theta_1 x$.

- third figure is overfitting as we imaged $y=\theta_0 + \theta_1 x + \theta_2 x^2 + … + \theta_5 x^5$

- we could interpret this as having five features, $x_1 = x,\,x_2 = x^2,\,x_3=x^3,\,…\,x_5=x^5$,and fitting them all in a linear manner

- These notions will be formalized later.

Take-away Message

- the choice of features/hypothesis is important to ensuring good performance of a learning algorithm. (When we talk about model selection, we’ll also see algorithms for automatically choosing a good set of features.)

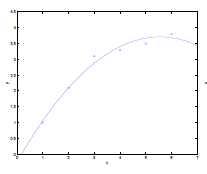

Locally Weighted Linear Regression

Advantage for Overfitting

- One advantage of locally weighted linear regression (LWR) algorithm is, assuming there is sufficient training data, makes the choice of features less critical. (as it is a local model)

The Linear Regression discussed in section Linear Regression is a type of “Parametric” Learning Algorithm, because the output/aim is a fixed size of parameters $\theta = \begin{bmatrix} \theta_0 \\\vdots\\\theta_n \\ \end{bmatrix}$ to a dataset

- this means that the output ($\theta$) is constant in size for any dataset

However, the LWR (Locally Weighted Linear Regression) would be “Non-parametric” Learning Algorithm, because the output/aim are parameters that grows/increases linearly with size of dataset

- this means that you can easily fit nonlinear dataset, but the output might be large for large dataset

The idea of LWR is as follows.

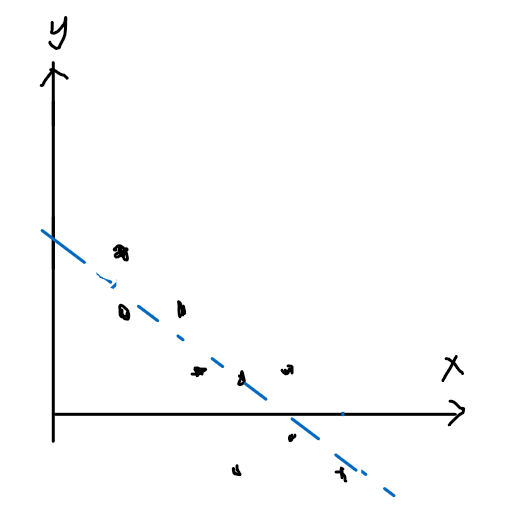

Consider the following dataset:

where, for each desired output of an input $x$, we consider:

- mostly importantly the neighborhood around/near the input $x$

- slightly consider (less weight) on the data far away from the input $x$

- Fit $\theta$ that minimizes $J$ using the above two steps

LWR

Instead, we have the error function $J$ to be:

\[J(\theta) = \frac{1}{2} \sum_{i=1}^{m} w^{(i)}\left( h_\theta(x^{(i)})-y^{(i)} \right)^2\]where:

- basically we are adding weight $w^{(i)}$ to each data point,

A common $w$ to use is:

\[w^{(i)} = \exp{\left(-\frac{(x^{(i)}-x)^2}{2\tau^2}\right)}\]so that:

- $x$ is the input which we want to get the prediction of

- so if $x$ is close to some points $x^{(i)}$, then the weight is close to $1$. Otherwise, far away points have weight close to $0$

- $\tau$ specifies the width of the bell shaped curve, and is called the bandwidth parameter. This will be a hyper-parameter.

Therefore, performing $J(\theta)$ means:

- we are “only” computing prediction errors near input point $x$

Probabilistic Interpretation

This section aims to answer questions such as:

- Why using Least Squares for Error?

Consider the case for housing data:

And assuming the actual distribution of data is:

\[y^{(i)} = \theta^T x^{(i)} + \epsilon^{(i)}\]where:

- so housing prices is a linear function on the features

- $\epsilon^{(i)}$ means the error term that captures either unmodeled effects or random noise.

- e.g. the seller’s mood on that day

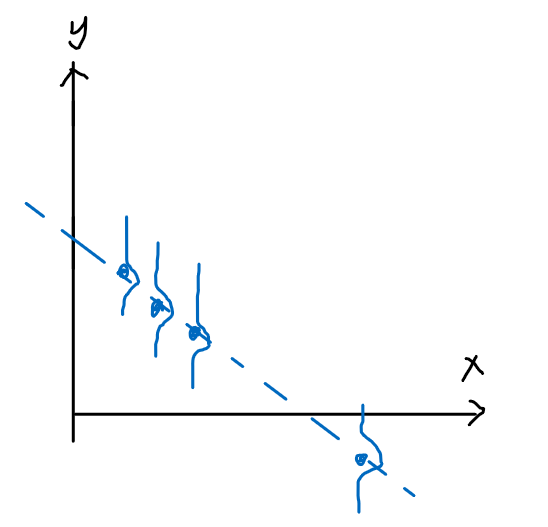

Pictorially

| Model: | Actual Data: |

|---|---|

|

|

And that we assume the error term $\epsilon^{(i)}$ is distributed IID (independently and identically distributed according to a Gaussian Distribution, also called a Normal Distribution):

\[\epsilon^{(i)} \sim \mathcal{N}(0, \sigma^2)\]where this normal distribution has:

- mean being $0$

- standard deviation of $\sigma$

- in general, for those types of data, the distributions would be Gaussian due to the Central Limit Theorem

So that this means the density of $\epsilon^{(i)}$ is given by:

\[p(\epsilon^{(i)}) = \frac{1}{\sqrt{2\pi} \sigma} \exp{\left( - \frac{(\epsilon^{(i)})^2}{2 \sigma^2} \right)}\]Therefore, since now we know the density of $\epsilon^{(i)}$ and we know $y^{(i)} = \theta^T x^{(i)} + \epsilon^{(i)}$, this gives:

\[p(y^{(i)} | x^{(i)}; \theta) = \frac{1}{\sqrt{2\pi} \sigma} \exp{\left( - \frac{(y^{(i)} - \theta^T x^{(i)})^2}{2 \sigma^2} \right)}\]where:

-

$p(y^{(i)} \vert x^{(i)}; \theta)$ means the distribution of $y^{(i)}$ given $x^{(i)}$ and parametrized by $\theta$

- so only $x^{(i)}$ is the random variable

-

alternatively, you can write it as:

\[y^{(i)} | x^{(i)}; \theta \sim \mathcal{N}(\theta^T x^{(i)}, \sigma^2)\]

Now, the important step is to ask: given the input/design matrix $X$, what is the distribution of $y^{(i)}$? i.e. How probable is each of the $y^{(i)}$ in your dataset?

-

By definition, this quantity is typically viewed a function of $\vec{y}$ (and perhaps $X$), for a fixed value of $\theta$.

-

However, remember that our aim is to get $\theta$, so we want to view it as a function of $\theta$, i.e. the likelihood function $L(\theta)$:

\[L(\theta) = L(\theta; X, \vec{y}) = p(\vec{y}|X;\theta)\]

Hence, using the independent assumption (IID), we get:

\[\begin{align*} L(\theta) =p(\vec{y}|X;\theta) &= \prod_{i=1}^m p(y^{(i)}|x^{(i)};\theta) \\ &= \prod_{i=1}^m \frac{1}{\sqrt{2\pi} \sigma} \exp{\left( - \frac{(y^{(i)} - \theta^T x^{(i)})^2}{2 \sigma^2} \right)} \end{align*}\]Therefore, all we need to do it to get the maximum likelihood for $\theta$.

-

Instead of doing it for $L(\theta)$, it is easier to first consider $l(\theta)=\log L(\theta)$

\[\begin{align*} l(\theta) &= \log L(\theta) \\ &= \log \prod_{i=1}^m \frac{1}{\sqrt{2\pi} \sigma} \exp{\left( - \frac{(y^{(i)} - \theta^T x^{(i)})^2}{2 \sigma^2} \right)}\\ &= \sum_{i=1}^m \left( \log\frac{1}{\sqrt{2\pi} \sigma} - \frac{(y^{(i)} - \theta^T x^{(i)})^2}{2 \sigma^2} \right)\\ &= m \log\frac{1}{\sqrt{2\pi} \sigma} -\sum_{i=1}^m\left( \frac{(y^{(i)} - \theta^T x^{(i)})^2}{2 \sigma^2} \right)\\ &= m \log\frac{1}{\sqrt{2\pi} \sigma} -\frac{1}{\sigma^2}\cdot \frac{1}{2}\sum_{i=1}^m (y^{(i)} - \theta^T x^{(i)})^2 \end{align*}\] -

Therefore, to maximize $l(\theta)$ is the same as minimizing:

\[\frac{1}{2}\sum_{i=1}^m (y^{(i)} - \theta^T x^{(i)})^2 = J(\theta)\]

Therefore, the take away message is that:

- the Least Square Algorithm defining $J(\theta)=\frac{1}{2}\sum_{i=1}^m (y^{(i)} - \theta^T x^{(i)})^2$ is equivalent as saying that the error term $\epsilon$ from $y^{(i)} = \theta^T x^{(i)} + \epsilon^{(i)}$ is distributed IDD

- therefore, the aim of minimizing $J(\theta)$ is the same as maximizing likelihood $L(\theta)$ given $X, \vec{y}$

Classification and Logistic Regression

Let’s now talk about the classification problem. This is just like the regression problem, except that the values $y$ we now want to predict take on only a small set of discrete values.

For now, we will focus on the binary classification problem in which $y$ can take on only two values, 0 and 1.

- However, most of what we say here will also generalize to the multiple-class case.

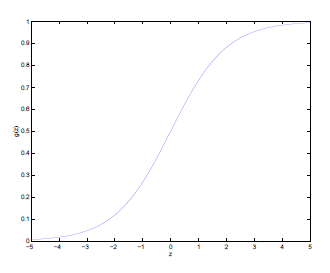

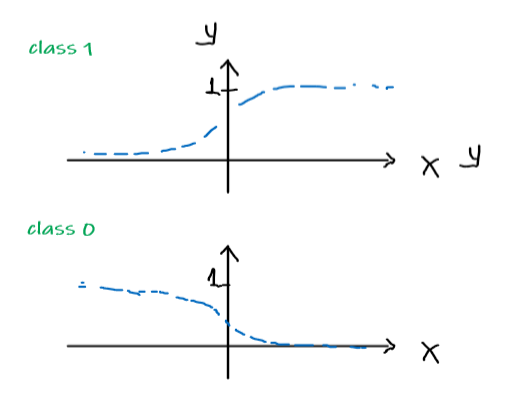

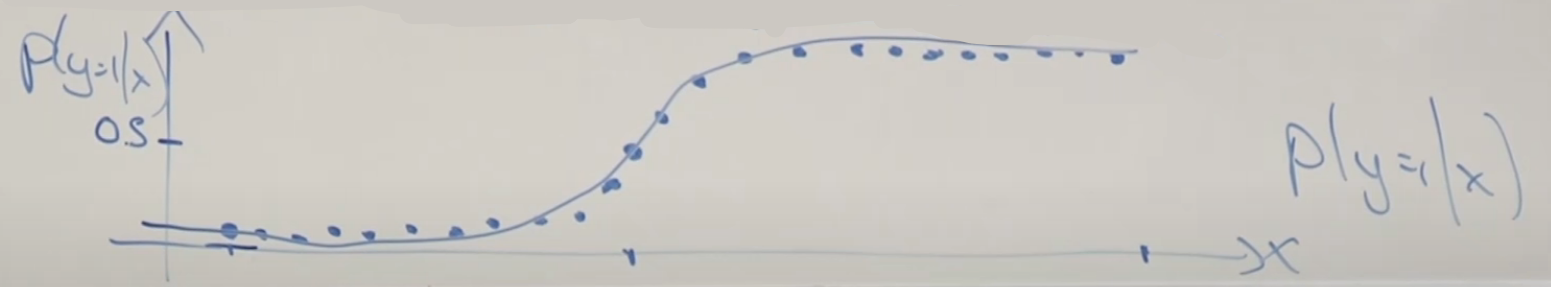

Logistic Regression

The idea is that, if we are given some binary data, such that a linear fit would be bad because:

- gradient changes if we add more data on both ends

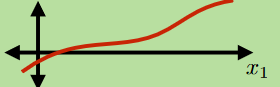

Therefore, we consider functions that looks like:

which is the logistic function or sigmoid function

\[g(z) = \frac{1}{1+e^{-z}}\]which basically:

- outputs result between $0,1$

- we chose this function specifically because this is actually the special case of the Generalized Linear Model

Useful Properties of Sigmoid Function

-

Consider the derivative of the function $g$:

\[\begin{align*} g'(z) &= \frac{d}{dz} \frac{1}{1+e^{-z}} \\ &= \frac{1}{(1+e^{-z})^2}(-e^{-z}) \\ &= \frac{1}{1+e^{-z}} \frac{-e^{-z}}{1+e^{-z}} \\ &= \frac{1}{1+e^{-z}}\left( 1 - \frac{1}{1+e^{-z}} \right) \\ &= g(z)\left(1-g(z)\right) \end{align*}\]

Therefore, now we consider the hypothesis:

\[h_\theta(x)=g(\theta^T x)=\frac{1}{1+e^{-\theta^T x}}\]And the task now is to find out a good cost function.

Heuristics

Recall that for linear regression, we used least squares error which could be derived using the probabilistic model as the maximum likelihood estimator under a set of assumptions, by the following setups:

In the linear regression model, assume the distribution data follows the form of our hypothesis

\[y^{(i)} = \theta^T x^{(i)} + \epsilon^{(i)}\]where $\epsilon$ is the perturbation which basically introduces the second step

Assuming the perturbation being IID, consider the probability of actually getting $y$ from some $x$:

\[p(y^{(i)} | x^{(i)}; \theta) = \frac{1}{\sqrt{2\pi} \sigma} \exp{\left( - \frac{(y^{(i)} - \theta^T x^{(i)})^2}{2 \sigma^2} \right)}\]Maximize the probability of $p(y^{(i)}\vert x^{(i)};\theta)$ by optimizing $\theta$, which reaches the conclusion of minimizing:

\[\frac{1}{2}\sum_{i=1}^m (y^{(i)} - \theta^T x^{(i)})^2 = J(\theta)\]which becomes the error function

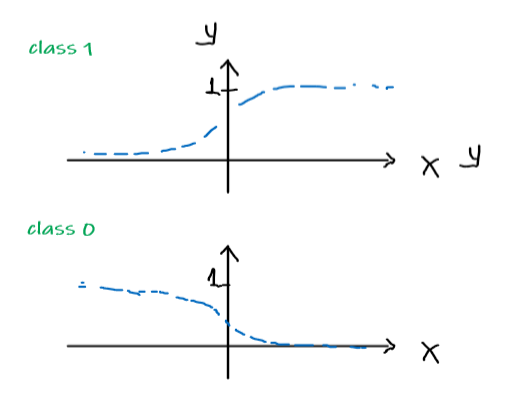

Therefore, first we do a similar step of:

-

Considering a single point, and assume that the distribution of data follows the form of the hypothesis:

\[\begin{align*} P(y = 1 | x;\theta) &= h_\theta (x) \\ P(y = 0 | x; \theta)&= 1 - h_\theta(x) \end{align*}\]Pictorially

Model: Actual Data:

or compactly, since $y \in {0,1}$:

\[p(y | x; \theta) = (h_\theta(x))^y(1-h_\theta(x))^{1-y}\]which is also called the Bernoulli Distribution, which has

- the probability mass function being $f(k;\phi) = \phi^k(1-\phi)^{1-k}$ for $k \in {0,1}$

- https://en.wikipedia.org/wiki/Bernoulli_distribution

-

Assuming that the training samples are generated IID, such that we have the likelihood of parameter/probability of data being given by:

\[\begin{align*} L(\theta) &= p(\vec{y} | X; \theta) \\ &= \prod_{i=1}^m p(y^{(i)}| x^{(i)}; \theta) \\ &= \prod_{i=1}^m (h_\theta(x^{(i)}))^{y^{(i)}}(1-h_\theta(x^{(i)}))^{1-y^{(i)}} \end{align*}\]Again, to convert the product to easier sums, we consider the log likelihood which is monotonic (i.e. if $f(x)$ increases/decreases, then $\log(f(x))$ also increases/decreases)

\[l(\theta) = \log(L(\theta)) = \sum_{i=0}^m y^{(i)}\log h_\theta(x^{(i)}) + (1-y^{(i)}) \log \left( 1-h_\theta(x^{(i)}) \right)\]Note

- This function turns out to be a concave function, in other words, there is only one maxima

-

Now, to maximize the (log) likelihood:

-

as compared to the normal equation in linear regression, doing it directly seems non-trivial. It turns out that there is no “normal equation equivalent” for logistic regression

-

another “brute-force” away is to consider the (batch) gradient ascent method, where we want to do:

\[\theta := \theta + \alpha \nabla_\theta l(\theta)\]Or written in the form of individual components:

\[\theta_j := \theta_j + \alpha\frac{\partial}{\partial \theta_j}l(\theta)\]Differences against Linear Regression

-

For linear regression, we had batch gradient Descent, which involved: \(\theta_j := \theta_j - \alpha\frac{\partial}{\partial \theta_j}J(\theta)\) so we see that the differences are:

- the “cost” function is now $l(\theta)$

- we are ascending with a plus sign in $+ \frac{\partial}{\partial \theta_j}l(\theta)$, instead of descending with a minus sign in $- \frac{\partial}{\partial \theta_j}J(\theta)$. Even though both are maximizing likelihood

-

-

Batch Gradient Ascent Algorithm

Batch Gradient Ascent

The aim is to compute

\[\theta_j := \theta_j + \alpha\frac{\partial}{\partial \theta_j}l(\theta)\]And below we computed the derivative, so in the end we are computing:

\[\theta_j := \theta_j + \alpha \sum_{i=1}^m (y^{(i)} - h_\theta(x^{(i)})) x^{(i)}_j\]which again does it by batches (of the entire dataset)

Proof for Derivative of $l(\theta)$:

The gradient ascent algorithm needs the $\frac{\partial}{\partial \theta_j} l(\theta)$ quantity. Like before, we first consider having on one training sample $x^{(1)}$

\[\begin{align*} \frac{\partial}{\partial \theta_j} l(\theta) &= \left( y \frac{1}{g(\theta^T x)} - (1-y)\frac{1}{1-g(\theta^T x)} \right)\frac{\partial}{\partial \theta_j}g(\theta^T x)\\ &= \left( y \frac{1}{g(\theta^T x)} - (1-y)\frac{1}{1-g(\theta^T x)} \right)\left( g'(\theta^Tx) \cdot \frac{\partial}{\partial \theta_j} \theta^T x \right)\\ &= \left( y \frac{1}{g(\theta^T x)} - (1-y)\frac{1}{1-g(\theta^T x)} \right)\left( g(\theta^T x)(1-g(\theta^T x)) \cdot \frac{\partial}{\partial \theta_j} \theta^T x \right)\\ &= \left[ y (1-g(\theta^T x)) - (1-y)g(\theta^Tx)\right] x_j \\ &= (y - h_\theta(x)) x_j \end{align*}\]where:

-

obviously $h_\theta(x)=g(\theta^T x)=\frac{1}{1+e^{-\theta^T x}}$

-

from step 2 to step 3 we used the fact $g’(z) = g(z)(1-g(z))$ from above (property 1 of sigmoid function)

Therefore, for $m$ training samples, we have:

\[\frac{\partial}{\partial \theta_j} l(\theta) = \sum_{i=1}^m (y^{(i)} - h_\theta(x^{(i)})) x^{(i)}_j\]Perceptron Algorithm

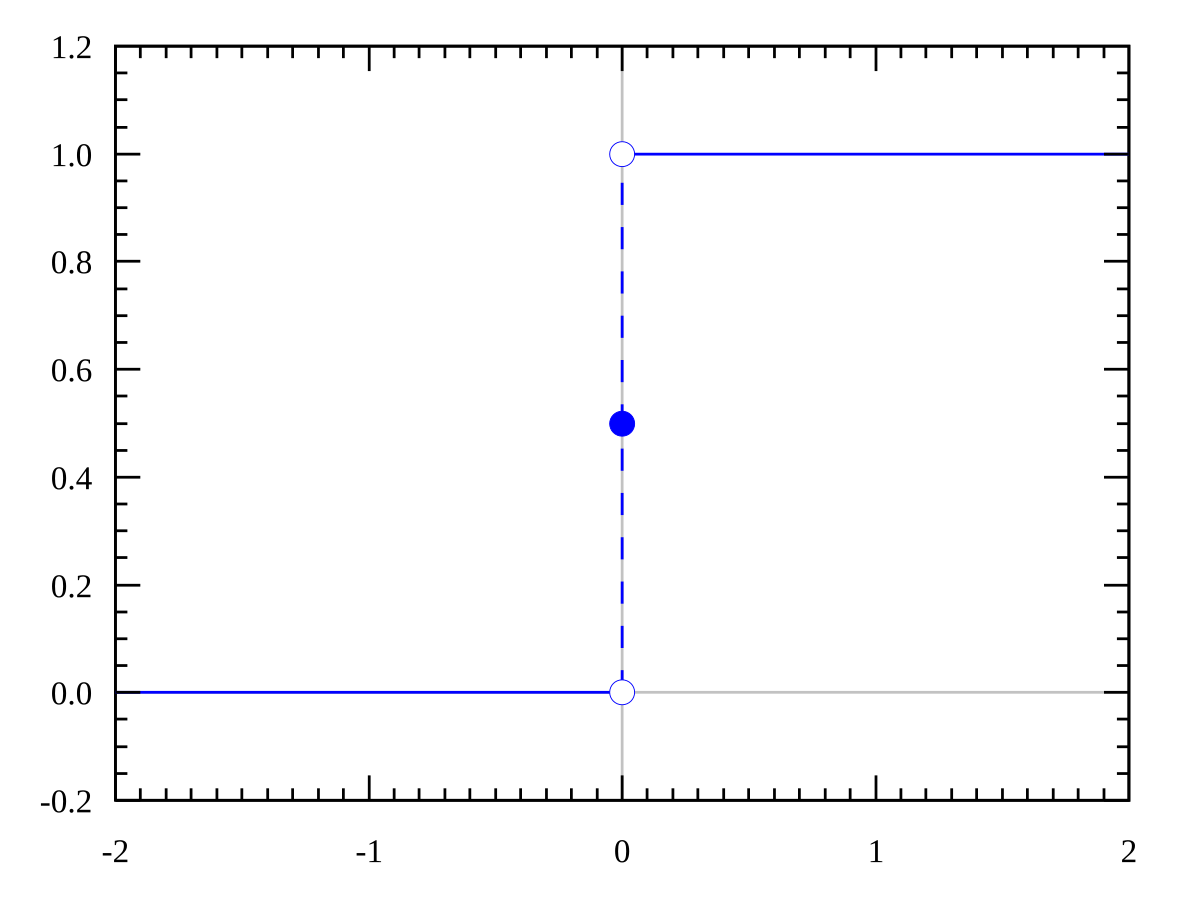

Consider modifying the logistic regression method to be stricter such that it outputs 0 or 1 exactly:

\[g(z) = \begin{cases} 1, & \text{if $z \ge 0$}\\ 0, & \text{if $z < 0$} \end{cases}\]

Therefore, we can see this as the stricter version of the sigmoid function:

And more importantly:

Perceptron Algorithm

using this modified definition of $g$, and we use the update rule

\[\theta_j := \theta_j + \alpha (y^{(i)} - h_\theta(x^{(i)})) x^{(i)}_j\]however, the problem with this is that there turns out to have no obvious probabilistic interpretation of this

Perceptron Algorithm implementation

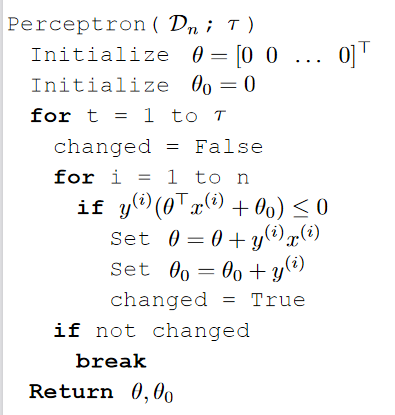

The perceptron algorithm $\text{Perceptron}(\mathcal{D}_n; \tau)$:

where notice that:

- $n$ is the number of data points in your data set

$\tau$ is again a hyperparameter (nothing to do with the logic of algorithm) that you can adjust

$\theta$ is initialized to be a vector in $d$ dimension (same as data points $x$)

$\theta_0$ initialized to be $0$

changedindicates whether if our prediction on each data point is correct or not.for example, $y^{(i)}\cdot (\theta^T x^{(i)} + \theta_0) \le 0$, if

- a data point is not on the line AND current prediction is wrong

- a data point is on the line

- initial step/loop of iteration

Therefore this actually specifies incorrect prediction of a data point

The part that updates $\theta, \theta_0$ basically does aims to improve $y^{(i)}\cdot (\theta^T x^{(i)} + \theta_0) \le 0$. So that the next iteration looks like:

\[\begin{align*} y^{(i)}\cdot \left((\theta + y^{(i)}x^{(i)})^Tx^{(i)} +(\theta_0 + y^{(i)})\right) &= y^{(i)} (\theta^T x^{(i)} + \theta_0)+(y^{(i)})^2(x^{(i)^T} x^{(i)} + 1)\\ &= y^{(i)} (\theta^T x^{(i)} + \theta_0) + (||x^{(i)}||^2+1) \end{align*}\]where:

- basically we moved the original prediction $y^{(i)} (\theta^T x^{(i)}+\theta_0)$ by some positive value $\vert \vert x^{(i)}\vert \vert ^2+1$

- we assumed that $y^{(i)} \in {-1, +1}$ in this case

- we return the best line by return the parameter $\theta, \theta_0$

Note

-

recall first that:

\[\begin{align*} \frac{\theta^T x}{||\theta||} &= -b \\ \theta^T x + b ||\theta|| &= 0\\ \theta^T x + \theta_0 &= 0 \end{align*}\]

so that the more positive the $\theta_0$, the lower/downwards we are moving the line

-

notice the sign on $\theta_0$ update:

\[\theta_0 = \theta_0 + y^{(i)}\]this means that if we predicted wrong and that

- $y^{(i)} > 0$, then we need to move the line downwards

- $y^{(i)} < 0$, then we need to move the line upwards

-

the $\theta$ update:

- rotate the current vector $\theta$ towards the correct/actual result

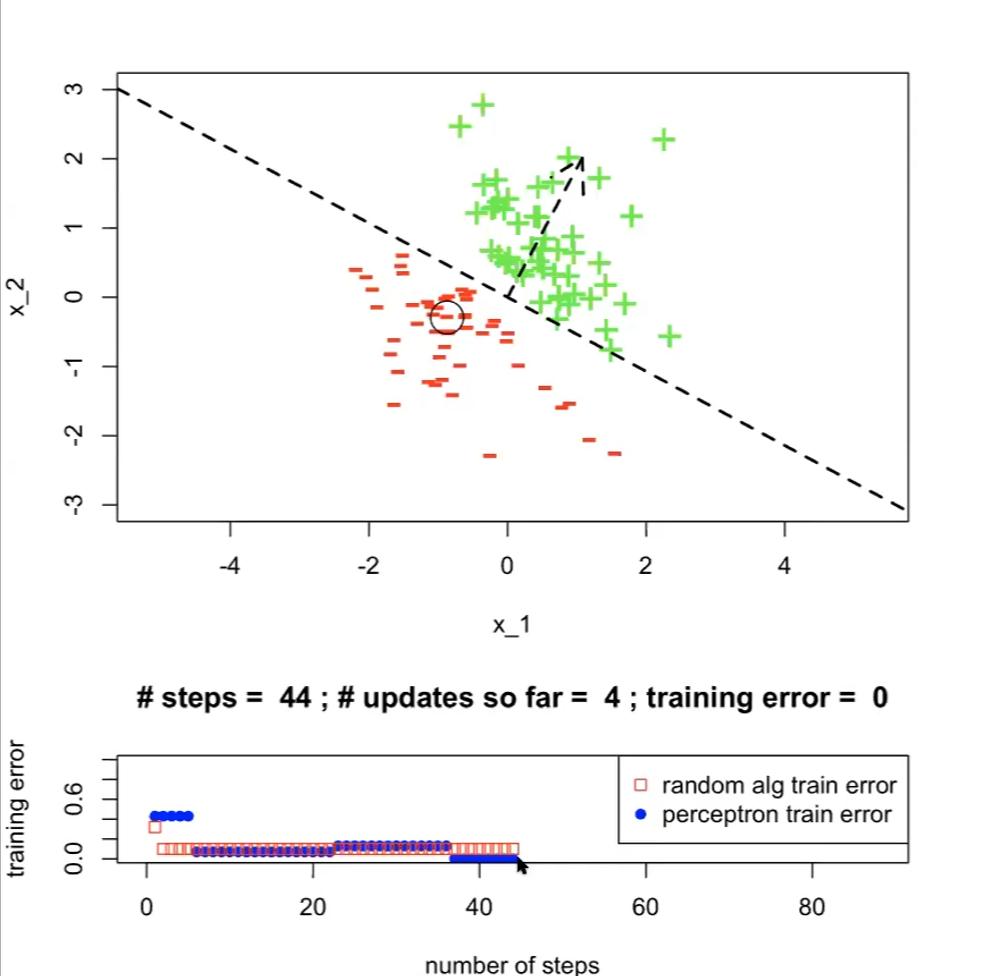

For Example

Running the above perceptron algorithm would look like:

where:

- we finished when $t=1, i=44$ (#steps=44)

- the “random alg train” in the bottom plot is the $\mathrm{ExLearningAlg}(\mathcal{D}_n;k)$ mentioned before

- note that having more steps might increase train error, since

- $\text{ExLearningAlg}(\mathcal{D}_n;k)$ is iterating a step per hypothesis

- $\text{Perceptron}(\mathcal{D}_n; \tau)$ here is iterating over $\tau$ and each point in dataset, and that the adjustment in $\theta, \theta_0$ only moves more towards the current data point

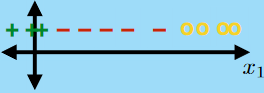

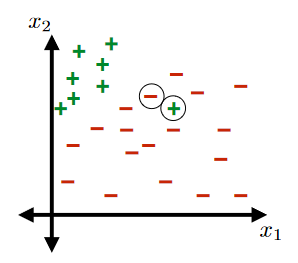

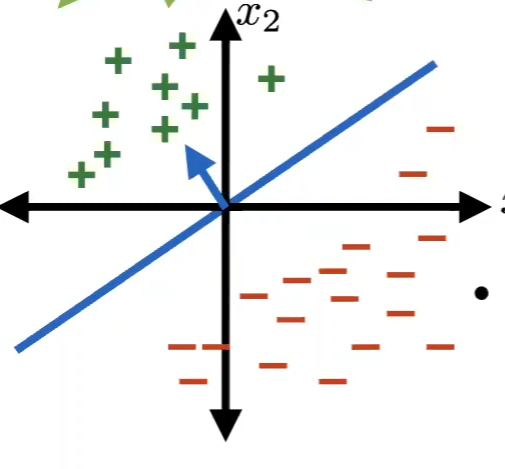

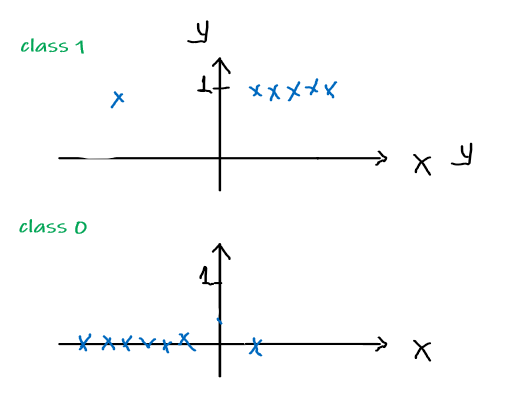

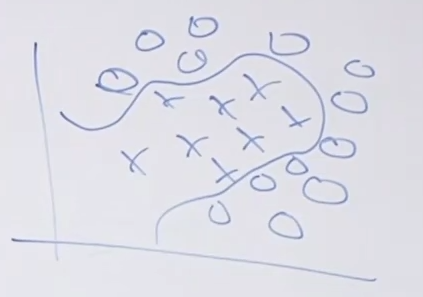

Linearly Separable Dataset

Notice that the above $\text{Perceptron}(\mathcal{D}_n, \tau)$ works if and only if there exists a line that can distinctly separate the binary dataset.

Linearly Separable

A training set $\mathcal{D}_n$ is linearly separable if there exist $\theta, \theta_0$ such that, for every point index $i \in {1,…,n}$, we have:

\[y^{(i)}\cdot (\theta^T x^{(i)} + \theta_0) > 0\]i.e. correct prediction for all data points

TODO: How do we prove that if a training set is NOT linearly separable?

For Example:

This would not be a linearly separable data set:

Margin of Training Set

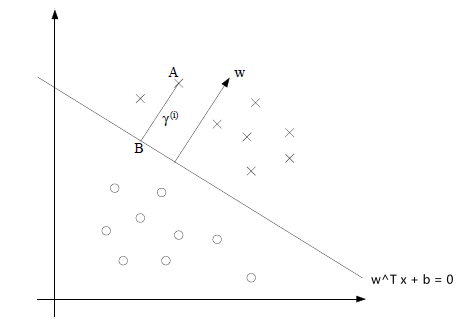

Review:

-

Consider the signed distance (green part) from a hyperplane defined by $\theta, \theta_0$ to a point $x^*$ below:

This green part is equivalent to the purple part minus the orange part:

where the

- purple part would be $\frac{\theta^T x^*}{\vert \vert \theta\vert \vert }$

- orange part be $-\frac{\theta_0}{\vert \vert \theta\vert \vert }$

so this is easily:

\[\text{Signed Distance of $x^*$ from Hyperplane}=\frac{\theta^T x^* + \theta_0}{||\theta||}\]

Margin of a Labelled Point

Consider now a labelled point $(x^, y^)$, where $y^{} \in {-1,+1}$. Then the margin of the labelled point $x^, y^*$ with respect to the hyperplane defined by $\theta, \theta_0$ is:

\[\text{Margin of $(x^*, y^*)$}=y^* \left( \frac{\theta^T x^* + \theta_0}{||\theta||} \right)\]where:

- notice the part $\theta^T x^* + \theta_0$ is basically the our prediction of $h(x;\theta, \theta_0)$

- $\text{margin}$ of a labelled point would therefore be positive if your hypothesis guessed correctly.

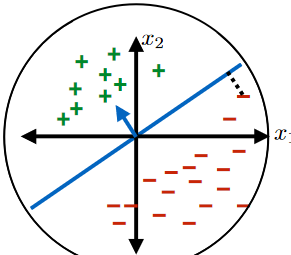

For Example

The hyperplane (blue line) of a specific $\theta, \theta_0$ in the dataset below should have a large margin:

Margin of a Training Set

The margin of a training set $\mathcal{D}_n$ with respect to a hyperplane defined by $\theta, \theta_0$ is:

\[\min_{i \in \{1,...,n\}}y^{(i)}\left(\frac{\theta^T x^* + \theta_0}{||\theta||}\right)\]where notice that:

- if any data point is misclassified, then the margin would be negative

- if all data points are classified correctly, then the margin would be positive

The more positive the margin, the easier the classifier is/more separable the dataset

Perceptron Algorithm Performance

Perceptron Performance

The perceptron algorithm will make at most $(R/\gamma)^2$ updates to $\theta$ (i.e. rotate the hyperplane at most $(R/\gamma)^2$ times) util it hits the solution hypothesis, if and only if the following assumptions holds true:

The Hypothesis Class is the set of all linear classifiers with separating hyperplanes that pass through the origin.

- this means that $\theta_0=0$ and the hyperplane is completely determined by $\theta$

There exists a $\theta^*$ and $\gamma$ such that $\gamma > 0$ and for every $i \in {1,…,n}$ we have:

\[\text{Margin of $(x^{(i)}, y^{(i)})$}=y^{(i)} \left( \frac{\theta^T x^{(i)} + \theta_0}{||\theta||} \right) > \gamma\]

- this means that the dataset is linearly separable

There exists a radius $R$ such that for every $i \in {1,…,n}$, we have $\vert \vert x^{(i)}\vert \vert \le R$

Proof of Assumption 1

Consider a classifier with offset described by $\theta, \theta_0$:

\[x:\theta^Tx + \theta_0 = 0, \quad \text{so that } x\in \mathbb{R}^d,\theta\in \mathbb{R}^d,\theta_0\in \mathbb{R}\]Then we can convert this equation and eliminate $\theta_0$ by considering:

\[x_\text{new}=[x_1,...,x_d,1]^T, \theta_\text{new}=[\theta_1, ..., \theta_d, \theta_0]^T, \quad \text{so that }x_\text{new} \in \mathbb{R}^{d+1}, \theta_{\text{new}} \in \mathbb{R}^{d+1}\]Then we have the following being equivalent:

\[\begin{align*} x:\theta^Tx + \theta_0 &= 0 \\ x_{\text{new},1:d}:\theta^T_{\text{new}}x_{\text{new}}&=0 \end{align*}\]where:

- $x_{\text{new},1:d}$ means the first $d$ dimension of the vector $x_\text{new}$

- $\theta_0$ has been included in the expanded feature space of $\theta_\text{new}$

Newton’s Method

Comparison against Batch Gradient Ascent

- one advantage of this over Batch Gradient Ascent is that it will take less iterations (quadratic convergence) to achieve a good result

- one disadvantage is that it is more computationally expensive. See section Higher Dimensional Newton’s Method

Newton’s Method

Task: given a function $f$, the idea is to find $\theta$ such that $f(\theta) = 0$

The idea is to approximate the function near $\theta$ to be a straight line, therefore the next guess of zero is located at:

\[\theta := \theta - \frac{f(\theta)}{f'(\theta)}\]Usage:

Since we want to maximize $l(\theta)$, we can see it as figuring at $l’(\theta) = 0$ since there is only one global maximum, hence:

\[\theta := \theta - \frac{\ell'(\theta)}{\ell''(\theta)}\]

Proof:

The idea is simple:

where we want to perform the update of:

\[\theta_1 := \theta_0 - \Delta\]And we know that:

\[\begin{align*} f'(\theta_0) &= \frac{f(\theta_0)}{\Delta}\\ \Delta &= \frac{f(\theta_0)}{f'(\theta_0)}\\ \end{align*}\]Therefore, we arrive simply at:

\[\theta := \theta - \frac{f(\theta)}{f'(\theta)}\]Example

| Steps | Graphical Illustration |

|---|---|

| Step 0: given $f$, and a $\theta$ to start with |  |

| Step 1: Assume that the $f(\theta’)=0$ can be found by going down linearly |  |

| Step 2: Repeat step 1 until $f(\theta’)=0$ |  |

Quadratic Convergence

- The idea (informally) is that: if newton’s method on the first iteration has error $0.01$ (away from the true zero), then:

- second iteration error=$0.0001$

- third iteration error=$0.00000001$

- etc.

Higher Dimensional Newton’s Method

Since in some cases we have $\theta$ being a vector in $\mathbb{R}^{n+1}$, then:

Newton’s Method in Higher Dimension

The generalization of Newtons method to this multidimensional setting is:

\[\theta := \theta - H^{-1} \nabla_\theta \ell (\theta)\]as compared to the one dimensional $\theta := \theta - \frac{1}{\ell’’(\theta)}\ell’(\theta)$, where you now have:

Hessian $H$ being a $\mathbb{R}^{(n+1)\times(n+1)}$ matrix such that

\[H_{ij} = \frac{\partial^2 \ell(\theta)}{\partial \theta_i \partial \theta_j}\]

However, obviously now it becomes computationally difficult to compute $H^{-1}$ if $n+1$ is large for your dataset, i.e. you have lots of features.

Generalized Linear Models

So far, we’ve seen a regression example, and a classification example. In the regression example, we had $y\vert x; \theta ∼ N(\mu, \sigma^2)$, and in the classification one, $y\vert x; \theta ∼ \text{Bernoulli}(\phi)$, for some appropriate definitions of $\mu$ and $\phi$ as functions of $x$ and $\theta$.

In this section, we will show that both of these methods are special cases of a broader family of models, called Generalized Linear Models (GLMs).

Terminologies

- Probability Mass Function: distribution for discrete values.

- e.g. Poisson Distribution, Bernoulli Distribution

- Probability Density Function (PDF): distribution for continuous values.

- e.g. Normal Distribution

Exponential Family

To work our way up to GLMs, first we need to define the exponential family distributions.

Exponential Family Distribution

We say that a class of distributions is in the exponential family if it can be written in the form:

\[p(y; \eta) = b(y) \exp(\eta \, T(y) - a(\eta))\]where:

- $y$ means the labels/target in your dataset

- $\eta$ is the natural parameter (also called the canonical parameter) of the distribution

- $b(y)$ is the base measure

- $T(y)$ is the sufficient statistic (see later examples, you often see $T(y)=y$)

- $a(\eta)$ is the log partition function, which basically has $e^{-a(\eta)}$ playing the role of normalization constant

so basically you can expression some distribution with the above form with any choice of $b(y), T(y), a(\eta)$, then that expression is in the exponential family.

We now show that the Bernoulli and the Gaussian distributions are examples of exponential family distributions.

Example: Bernoulli Distribution

For $y \in {0,1}$, we have the Bernoulli Distribution (recall that we used $h_\theta(x)=\phi$):

\[p(y ; \phi) = \phi^y(1-\phi)^{1-y}\]We can convert this to the exponential family by:

\[\begin{align*} \phi^y(1-\phi)^{1-y} &= \exp\left( \log(\phi^y(1-\phi)^{1-y}) \right) \\ &= \exp\left(y \log \phi + (1-y)\log (1-\phi)\right)\\ &= 1 \cdot \exp\left( \log\left( \frac{\phi}{1-\phi} \right) y + \log(1-\phi)\right)\\ \end{align*}\]Therefore, this is in the exponential family with:

- $b(y)$ = 1

- $T(y) = y$

- $\eta = \log\left( \frac{\phi}{1- \phi} \right)$, so that we get $\phi = \frac{1}{1+e^{-\eta}}$

- recall that $\phi = h_\theta(x)$, and that $h_\theta(x)= \frac{1}{1+e^{-\theta^T x}}$ in a similar form!

- $a(\eta) = -\log(1-\phi) = \log(1+e^\eta)$

Example: Gaussian/Normal Distribution

For algebraic simplicity, assume that $\sigma^2 = 1$ (recall that when deriving linear regression, the value of $\sigma$ had no effect on our final choice of $\theta$. Therefore, this is also “justified”)

Then we have:

\[p(y; \mu) = \frac{1}{\sqrt{2 \pi}} \exp\left( - \frac{(y-\mu)^2}{2} \right)\]and doing a similar step as above:

\[\begin{align*} \frac{1}{\sqrt{2 \pi}} \exp\left( - \frac{(y-\mu)^2}{2} \right) &= \frac{1}{\sqrt{2 \pi}} e^{-y^2/2} \cdot \exp\left( \mu y - \frac{1}{2}\mu^2 \right) \end{align*}\]where now this is matched easily:

- $b(y) = \frac{1}{\sqrt{2 \pi}} e^{-y^2/2}$

- $T(y) = y$

- $\eta = \mu$ which is the natural parameter

- $a(\eta) = \mu^2/2 = \eta^2/2$

Properties of Exponential Family

We use the GLM because they have some very nice properties:

- Maximum Likelihood Estimate (MLE) with respect to $\eta$ is concave, and the Negative Log Likelihood (NLL) with respect to $\eta$ is convex

- The expected value $E[y; \eta] = \frac{\partial}{\partial \eta} a(\eta)$

- The variance $\text{Var}[y;\eta] = \frac{\partial^2}{\partial \eta^2} a(\eta)$

Note that the expected value and the variance do not involve integrals now.

Constructing the GLMs

More generally, consider a classification or regression problem where we would like to predict the value of some random variable $y$ as a function of $x$.

- e.g. you would like to build a model to estimate the number $y$ of customers arriving in your store (or number of page-views on your website) in any given hour, based on certain features $x$ such as store promotions, recent advertising, weather, day-of-week, etc. (you might think about Poisson Distribution, but that is also a GLM)

To derive a GLM for a problem, we will make the following three assumptions about the conditional distribution of $y$ given $x$ and about our model:

- The distribution is in the exponential family, such that $y\vert x;\theta \sim \text{Exponential Family}(\eta)$

- The goal is to predict $T(y)$, (in most of the time, $T(y)=y$), so we want to compute $h(x)$. But that $h(x)$ needs to be $h_\theta(x)=E[y\vert x;\theta]$

- The natural parameter $\eta$ and inputs $x$ are related linearly, so that $\eta = \theta^T x$. (if $\eta$ is a vector, $\eta_i = \theta^T_i x$)

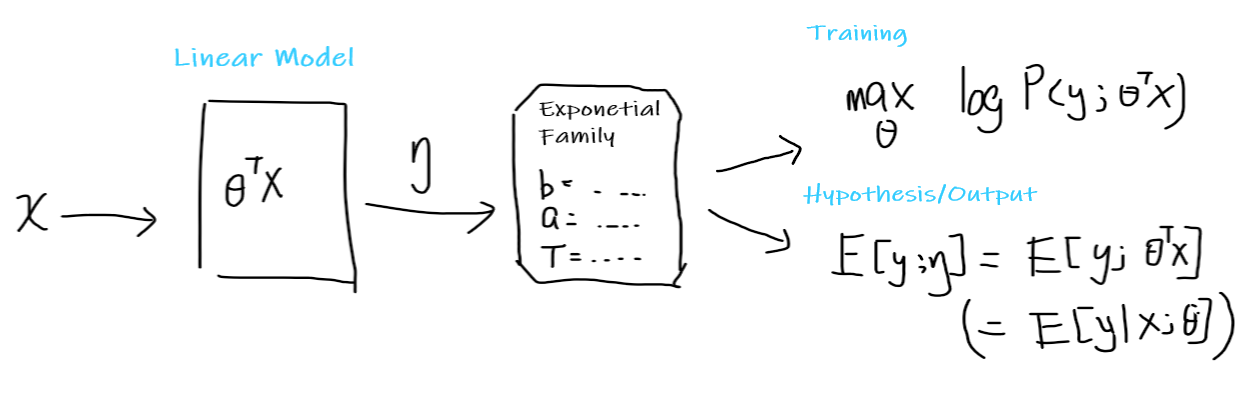

Pictorially, you think of the following:

where:

-

you start with some input $x$

-

you assume that it is a linear model, so that there exists some learnable parameter $\theta$

- this is the most important assumption here

-

you convert your input to $\theta^Tx = \eta$

-

$\eta$ now will give some distribution in the exponential family, by then choosing some appropriate $b(y),a(\eta), T(y)$

- basically your task will give you some hint on what distribution to choose. e.g. modelling website clicks/counts -> use Poisson Distribution, etc.

-

Using the exponential family:

-

your hypothesis function is then simply $h_\theta(x) = E[y;\eta] = E[y;\theta^Tx]=E[y\vert x;\theta]$

-

you want to train it to find $\theta$ by maximize likelihood, using the learning update rule

\[\theta_j := \theta_j + \alpha (y^{(i)} - h_\theta(x^{(i)})) x^{(i)}_j\]note that this holds for any distribution in exponential family (or you could use the Batch Gradient Descent, which adds a summation, or the Newton’s Method that we covered above)

-

Basically, if you your data can be modelled by some distribution in the Exponential Family, you can use GLM above and do the learning.

Terminologies

- $\mu = E[y; \eta]= g(\eta)$ is called the canonical response function

- recall that $g(\eta) = \frac{\partial}{\partial \eta} a(\eta)$

- $\eta = g^{-1}(\mu)$ is called the canonical link function

- Here, we have three types of parameters involved in the GLM,:

- model parameter $\theta$, which we learn in model

- natural parameter $\eta$, which is assumed $\eta = \theta^T x$ to be linear

- canonical parameter, $\phi$ for Bernoulli, $\mu,\sigma$ for Gaussian, $\lambda$ for Poisson, …

- use $g(\eta)$ to get those canonical parameters from natural parameter

- use $g^{-1}$ to swap back to natural parameter from canonical parameter

Linear Regression as GLM

Recall that we assumed in Linear Regression:

| Model: | Actual Data: |

|---|---|

|

|

where we model the conditional distribution of $y$ given x as as a Gaussian $N(\mu, \sigma^2)$.

Since we have proven that the Gaussian is in Exponential Family, and that we have proven:

- $\mu = \eta$

Therefore, we get from the second assumption in Constructing the GLMs:

\[\begin{align*} h_\theta(x) &= E[y|x; \theta]\\ &= \mu\\ &= \eta \\ &= \theta^T x \end{align*}\]where we get back the linear hypothesis:

- the second equality comes from the fact that $y\vert x;\theta \sim \mathcal{N}(\mu, \sigma^2)$

- the last equality comes from the third assumption in Constructing the GLMs:

Logistic Regression as GLM

Recall that for logistic regression:

Pictorially

| Model: | Actual Data: |

|---|---|

|

|

where we model the conditional distribution of $y$ given x as as the Bernoulli Distribution $y\vert x;\theta \sim \text{Bernoulli}(\phi)$.

Since we have also proven that Bernoulli Distribution is in the Exponential Family, and that:

- $\phi = 1/(1+e^{-\eta})$

Following a similar derivation from above:

\[\begin{align*} h_\theta(x) &= E[y|x; \theta]\\ &= \phi\\ &= 1/(1+e^{-\eta}) \\ &= 1/(1+e^{-\theta^T x}) \end{align*}\]which gives back the hypothesis for logistic regression.

- once we assume that $y$ conditioned on $x$ is Bernoulli, the sigmoid function arises as a consequence of the definition of GLMs and exponential family distributions.

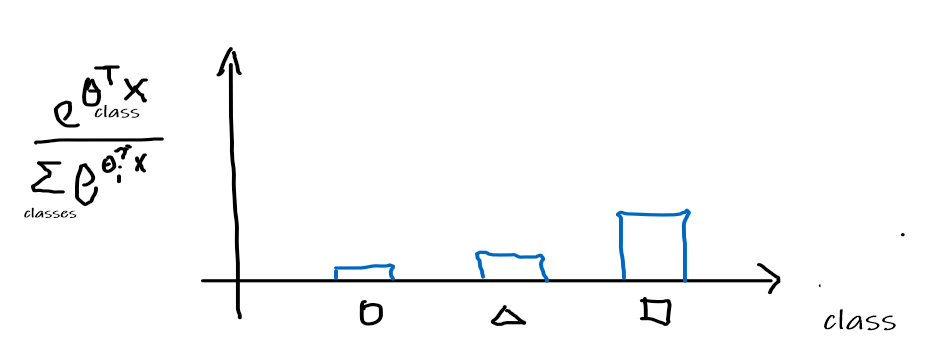

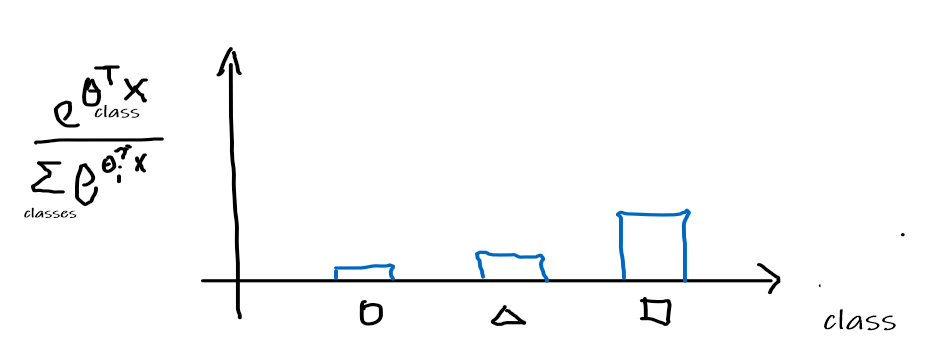

Softmax Regression

Here we cover the cross entropy interpretation of this method. For the graphical method please refer to the note.

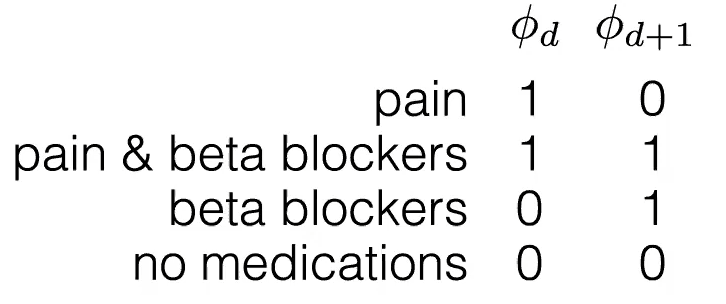

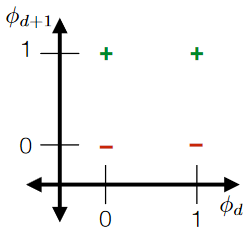

Consider the case when we have to classify more than one class

Then we consider the following representation of this task:

- we have $k$ classes

- input $x^{(i)} \in \mathbb{R}^n$

- label $y$ is a one hot vector, such that $y={0,1}^k$

- e.g. if there are 3 classes, then being in class 1 means $y=[1,0,0]^T$

- each class has its own parameter $\theta_1,\theta_2,…,\theta_k$, or basically $\phi_1, \phi_2, … \phi_k$ (since $\phi = \theta^T x$)

- technically we only need $k-1$ of those parameters, since it must be that $\sum_{i=1}^k \phi_i = 1$

Then the idea is as follows:

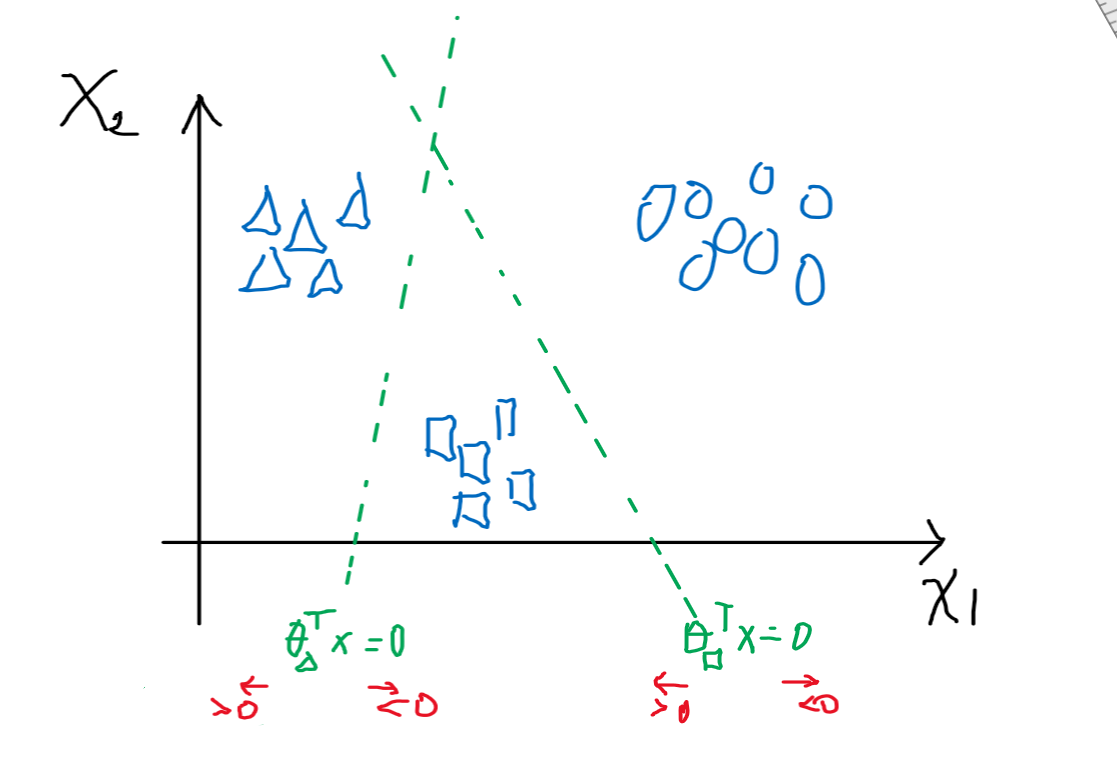

-

Consider that we have already solved the $\theta_1$ for triangle class, $\theta_2$ for square class, etc

where:

- recall that $\theta^T x \mapsto 1/(1+e^{-\theta^Tx})$, which basically gives the probability of being in the class or not

- i.e. $\theta^Tx > 0$ means being in the class

- recall that $\theta^T x \mapsto 1/(1+e^{-\theta^Tx})$, which basically gives the probability of being in the class or not

-

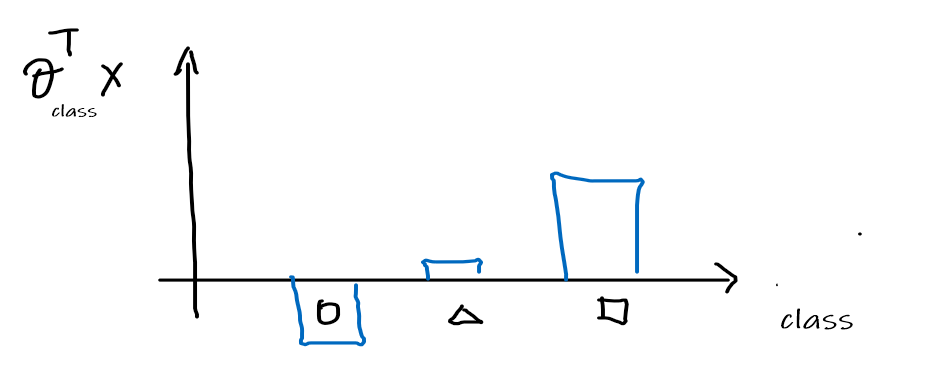

Then this means that for any given $x$, we can get compute the $\theta_{\text{class}}^T x$:

-

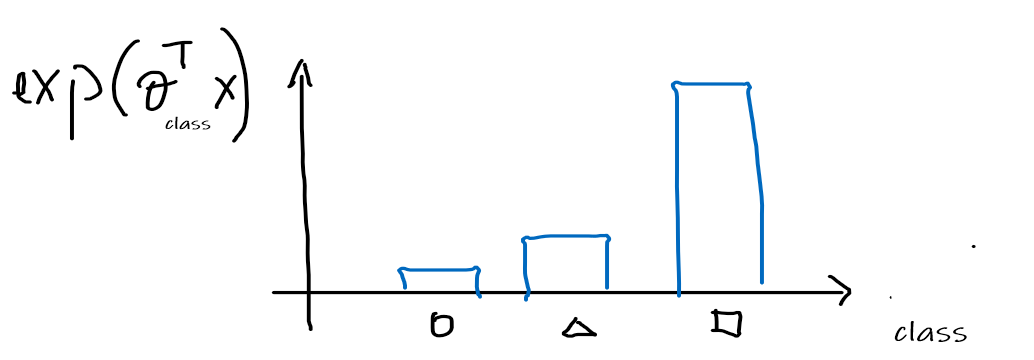

Then we can convert this to distribution like values by first making them all positive, applying $\exp$

-

Normalize the distribution to be a probability distribution

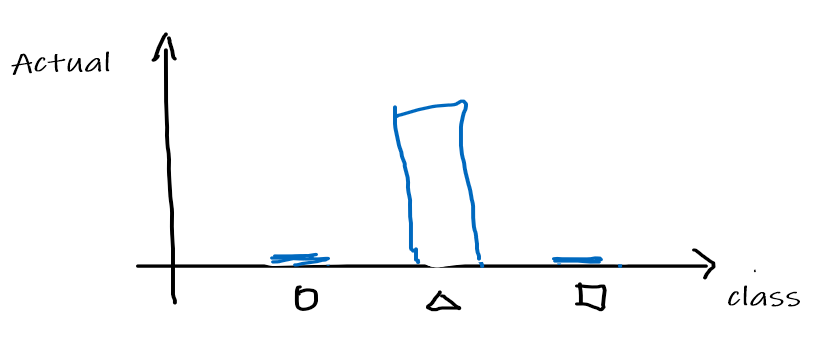

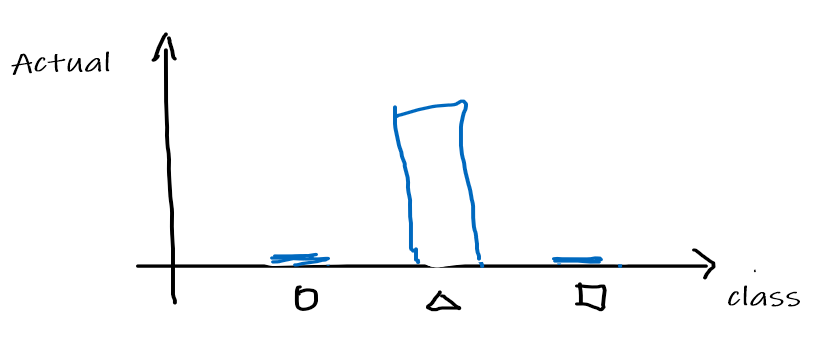

Therefore, this means that given an input $x$, we are outputting a probability distribution

-

Now, suppose that the actual answer for that $x$ is a triangle, so that $[0,1,0]^T$ is the correct answer. We can convert the answer/label into a distribution as:

Therefore, then the task/learning algorithm needs to minimize the "difference/distance"=cross entropy between the following two:

Current Model Target

and then output the optimal $\theta_i$ for each class

Therefore, then we need to define:

Cross Entropy

The cross entropy between a prediction $\hat{y}$ and the actual class $y \in {\text{classes}}$

\[\begin{align*} \text{Cross Entropy}(p,\hat{p}|x) &= -\sum_{y \in \text{classes}}p(y) \log \hat{p}(y) \\ &= -\log \hat{p}(y_{\text{correct class}}) \\ &= -\log \frac{e^{\theta_{\text{correct class}}^T x}}{\sum_{i \in \text{classes}}e^{\theta_i^T x}} \end{align*}\]where:

- notice that iff we guessed it right, then this quantity will be zero

- otherwise, this will be positive due to the $-$ sign

Our goal is to minimize this quantity, and then we just use gradient descent

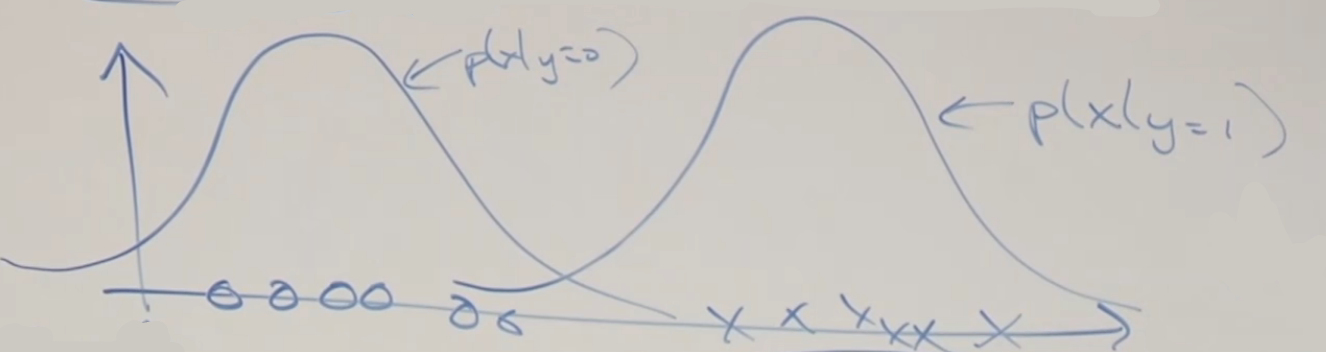

Generative Learning Algorithms

Algorithms that try to learn $p(y\vert x)$ directly (such as logistic regression), or algorithms that try to learn mappings directly from the space of inputs $X$ to the labels ${0, 1}$, (such as the perceptron algorithm) are called discriminative learning algorithms. Here, we’ll talk about algorithms that instead try to model $p(x\vert y)$ (and $p(y)$). These algorithms are called generative learning algorithms.

- for example, consider the case of classifying between elephants $y=1$ and dogs $y=0$ . A discriminative algorithm would learn the mapping from input features to the animals. A different approach is to build a feature model for each class, which is called the generative learning algorithm

The idea is as follows. If we know $p(x\vert y)$ and $p(y)$, then we can use Bayes’ Rule:

\[p(y|x) = \frac{p(x|y)p(y)}{p(x)} = \frac{p(x|y)p(y)}{p(x|y=1)p(y=1)+p(x|y=0)p(y=0)}\]For instance, given an input $x$, the probability of it being an elephant $y=1$ is:

\[p(y=1|x) = \frac{p(x|y=1)p(y=1)}{p(x)}\]where:

- $p(y)$ is called the class priors. Basically the probability of something happening regardless of your “features/condition”

- $p(x\vert y)$ is your modelled feature

- $p(x)$ basically indicates the probability of this set of feature $x$ occurring at all in your sample space

Gaussian Discriminative Analysis

The big assumptions in this model is that $p(x\vert y)$ are distributed as a Gaussian. In other words, if you have many features for $x \in \mathbb{R}^n$ (notice we dropped $x_0=1$ since we are now learning $x$ from $y$), then we assume that $p(x\vert y)$ is distributed as a Multivariate Gaussian.

The Multivariate Normal Distribution

This is basically converting the 1-D Gaussian into something like this:

Multivariable Normal Distribution

This distribution $\mathcal{N}(\mu, \Sigma)$ is parametrized by a mean vector $\mu \in \mathbb{R}^n$ and a covariance matrix $\Sigma \in \mathbb{R}^{n \times n}$, where $\Sigma \ge 0$ and is symmetric and positive semi-definite.

The density function is then given by:

\[p(x;\mu, \Sigma) = \frac{1}{(2\pi)^{n/2} |\Sigma|^{1/2}} \exp\left( -\frac{1}{2}(x-\mu)^T \Sigma^{-1}(x-\mu) \right)\]where obviously now $x \in \mathbb{R}^n$ is a vector, and that:

- $\vert \Sigma\vert$ is the discriminant of $\Sigma$

The mean of a random variable $X \sim \mathcal{N}(\mu, \Sigma)$ is given by:

\[E[X] = \int_x x \,p(x;\mu, \Sigma)dx = \mu\]The covariance of a vector-valued random variable $Z$ is defined as $\text{Cov}(Z)$:

\[\text{Cov}(Z) = E[\,(Z-E[Z])(Z-E[Z])^T\,] = E[ZZ^T] - (E[Z])(E[Z])^T = \Sigma\]

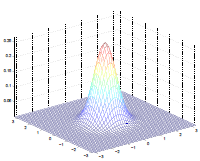

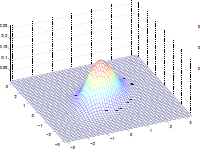

Examples:

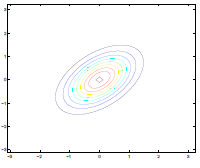

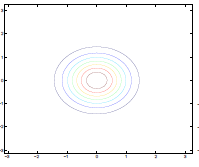

The by varying the covariance $\Sigma$, we could:

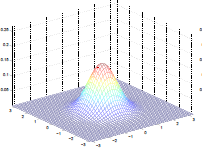

| $\Sigma=\begin{bmatrix} 1& 0 \ 0 & 1\end{bmatrix}$ | $\Sigma=\begin{bmatrix} 0.6& 0 \ 0 & 0.6\end{bmatrix}$ | $\Sigma=\begin{bmatrix} 2& 0 \ 0 & 2\end{bmatrix}$ |

|---|---|---|

|

|

|

which makes sense because:

- as covariance $\Sigma \to 0.6\Sigma$, the variation becomes smaller and hence a higher peak

- as covariance $\Sigma \to 2\Sigma$, the variation becomes larger and hence a more spread out shape

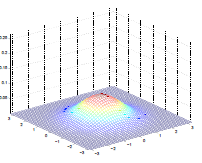

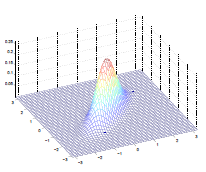

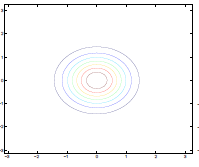

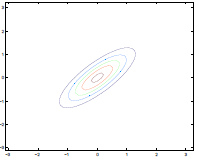

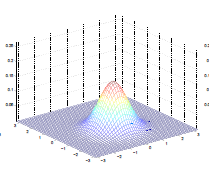

Additionally, we could have non-zero terms to off-diagonal entries

| $\Sigma=\begin{bmatrix} 1& 0 \ 0 & 1\end{bmatrix}$ | $\Sigma=\begin{bmatrix} 1& 0.5 \ 0.5 & 1\end{bmatrix}$ | $\Sigma=\begin{bmatrix} 1& 0.8 \ 0.8 & 1\end{bmatrix}$ |

|---|---|---|

|

|

|

|

|

|

where we basically see that:

- increasing off-diagonal entries makes the shape more compressed along $x_1=x_2$ $\to$ increases the positive correlation between $x_1, x_2$

- technically bottom left contour plots should be circles. Due to aspect ratio problems, they are rendered as ellipses

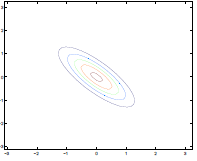

On the contrary, having negative entries creates puts $x_1, x_2$ to have negative correlation

| $\Sigma=\begin{bmatrix} 1& 0 \ 0 & 1\end{bmatrix}$ | $\Sigma=\begin{bmatrix} 1& -0.8 \ -0.8 & 1\end{bmatrix}$ |

|---|---|

|

|

Lastly, varying $\mu$ basically shifts the distribution

| $\mu = \begin{bmatrix}1\0\end{bmatrix}$ | $\mu = \begin{bmatrix}-0.5\0\end{bmatrix}$ | $\mu = \begin{bmatrix}-1\-1.5\end{bmatrix}$ |

|---|---|---|

|

|

|

The Gaussian Discriminative Analysis Model

Consider the case when we have classification for benign tumors $y=0$ and malignant tumors $y=1$ based on a set of features $x$.

Then if the input features are continuous variables, we start by assuming the following distribution:

\[\begin{align*} y &\sim \text{Bernoulli}(\phi) \\ x|y =0 &\sim \mathcal{N}(\mu_0, \Sigma) \\ x|y =1 &\sim \mathcal{N}(\mu_1, \Sigma) \end{align*}\]or equivalently:

\[\begin{align*} p(y) &= \phi^y (1-\phi)^{1-y} \\ p(x|y=0) &= \frac{1}{(2\pi)^{n/2} |\Sigma|^{1/2}} \exp\left( -\frac{1}{2}(x-\mu_0)^T \Sigma^{-1}(x-\mu_0) \right) \\ p(x|y=1) &= \frac{1}{(2\pi)^{n/2} |\Sigma|^{1/2}} \exp\left( -\frac{1}{2}(x-\mu_1)^T \Sigma^{-1}(x-\mu_1) \right) \\ \end{align*}\]where notice that the parameters of the models are:

- $\phi, \Sigma, \mu_0, \mu_1$, so that they share the same covariance matrix

- this is assumed that $\Sigma_0=\Sigma_1=\Sigma$, which is usually done, but it does not have to be.

Therefore, given a training set ${(x^{(i)}, (y^{(i)})}_{i=1}^m$, we consider maximizing the (log) Joint Likelihood

\[\begin{align*} L(\phi, \Sigma, \mu_0, \mu_1) &= \prod_{i=1}^m p(x^{(i)}, y^{(i)} ; \phi, \mu_0, \mu_1, \Sigma) \\ &= \prod_{i=1}^m p(x^{(i)}|y^{(i)} ; \phi, \mu_0, \mu_1, \Sigma)p(y^{(i)};\Sigma) \end{align*}\]where notice that:

- now we consider $p(x^{(i)}, y^{(i)} ; \phi, \mu_0, \mu_1, \Sigma)$, which is the probability of $x^{(i)}$ AND $y^{(i)}$ happening at the same time, instead of the linear models which maximizes the conditional probability $p(y\vert x)$

GDA Model

Consider the case when we have a classification problem where features $x$ are all continuous values. Then we can consider the following distribution:

\[\begin{align*} y &\sim \text{Bernoulli}(\phi) \\ x|y =0 &\sim \mathcal{N}(\mu_0, \Sigma) \\ x|y =1 &\sim \mathcal{N}(\mu_1, \Sigma) \end{align*}\]And then maximize the log of the Joint Likelihood

\[\begin{align*} l(\phi, \Sigma, \mu_0, \mu_1) =\log L(\phi, \Sigma, \mu_0, \mu_1) = \log \prod_{i=1}^m p(x^{(i)}|y^{(i)} ; \phi, \mu_0, \mu_1, \Sigma)p(y^{(i)};\Sigma) \end{align*}\]By maximizing the log Joint Likelihood (taking derivatives and setting it to zero), you will find that:

\[\begin{align*} \phi &= \frac{1}{m} \sum_{i=0}^m y^{(i)} = \frac{1}{m} \sum_{i=0}^m 1\{y^{(i)}=1\} \\ \mu_0 &= \frac{\sum_{i=1}^m 1\{y^{(i)}=0\}x^{(i)}}{\sum_{i=1}^m 1\{y^{(i)}=0\}} \\ \mu_1 &= \frac{\sum_{i=1}^m 1\{y^{(i)}=1\}x^{(i)}}{\sum_{i=1}^m 1\{y^{(i)}=1\}} \\ \Sigma &= \frac{1}{m} \sum_{i=1}^m (x^{(i)} - \mu_{y^{(i)}})(x^{(i)} - \mu_{y^{(i)}})^T \end{align*}\]where:

- the indicator function behaves as $1{\text{true}}=1$, and $1{\text{false}}=0$

- the $\phi$ quantity just computes the average number of patients with malignant tumor

- the $\mu_0$ computes the mean of all feature vectors $x$ that corresponds to a benign tumor $y=0$

- the $\mu_1$ computes the mean of all feature vectors $x$ that corresponds to a malignant tumor $y=1$

- the $\Sigma$ computes the covariance of all feature vectors from their corresponding $\mu_{y^{(i)}}$

Therefore, to make a prediction given some $x$, we compute the quantity:

\[\arg\max_y p(y|x) = \arg\max_y \frac{p(x|y)p(y)}{p(x)} = \arg\max_y p(x|y)p(y)\]where:

- since $p(x)$ is a constant given a $x$, in $\arg \max$ it does not matter

Reminder:

$\arg \max$ or $\arg \min$ works as follows:

\[\begin{align*} \min (z-5)^2 &= 0 \\ \arg \min_z (z-5)^2 &= 5 \end{align*}\]where basically you care about the argument instead of the output

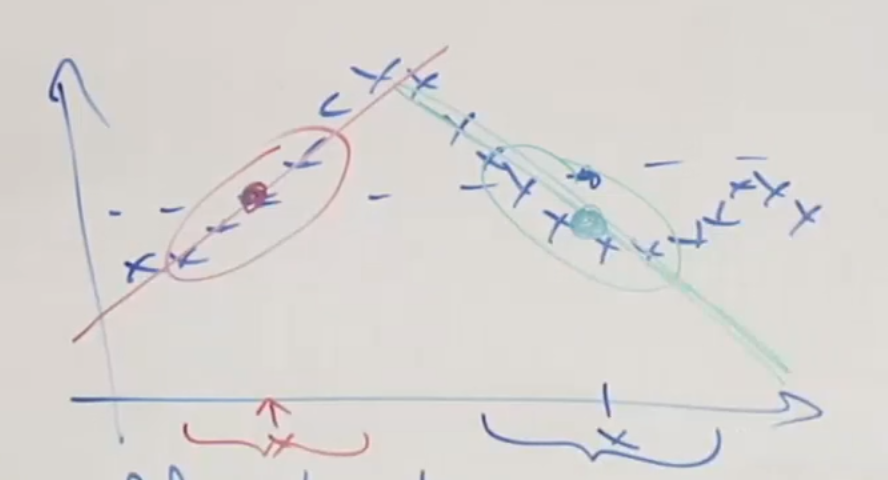

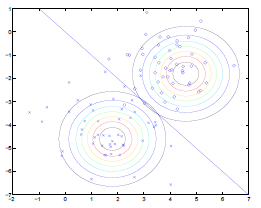

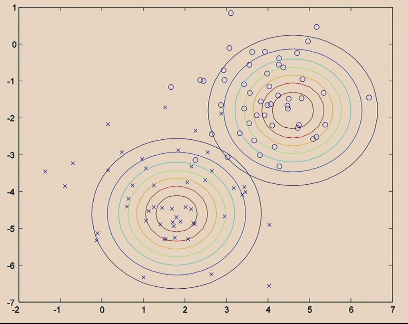

Pictorially, the computation for $\phi, \mu_0, \mu_1, \Sigma$ is doing the following:

where:

- $\Sigma$ fits the contours/shapes of the two Gaussian

- made the two Gaussians have contours that are the same shape and orientation

- one outcome is that the decision boundary will be linear

- $\mu_0, \mu_1$ shifts the Gaussian to the “best” place

- $\phi$ draws the decision boundary

- technically it is unknown already once the Gaussians are in place, i.e. you know which $y$ is more probable given an $x$

GDA and Logistics Regression

First let’s recall what each algorithm does.

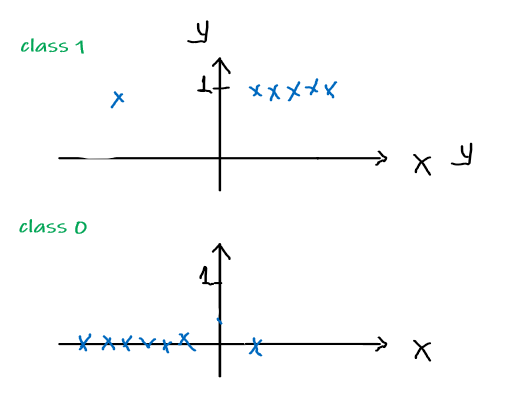

Beginning with the data:

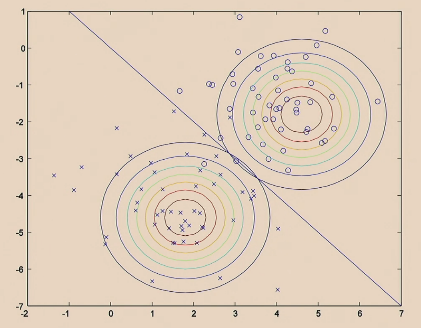

Then logistic regression does:

| Iteration 1. initialize randomly |  |

|---|---|

| Iteration 2. |  |

| Iteration … | |

| Iteration 20. |  |

The GDA Model does:

| Step 1. Fit Gaussian for each label |  |

|---|---|

| Step 2. Decision boundary is implied by the probability for $y$ of each $x$ |  |

Comparison of the two algorithms:

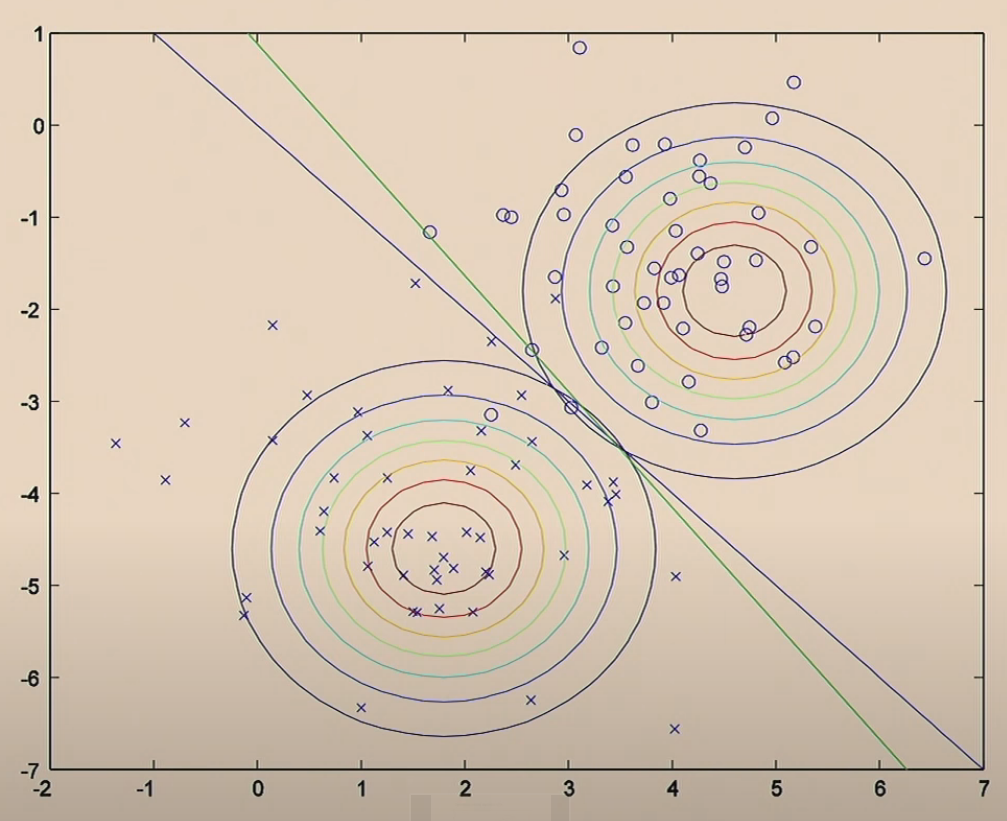

On the other hand, if we consider the function from GDA, which considers $p(x\vert y)$, and the Bayes Rule:

\[p(y|x) = \frac{p(x|y)p(y)}{p(x)}\]Consider viewing the quantity $p(y=1\vert x; \Sigma,\mu_0, \mu_1, \phi)$ as a function of $x$, then the following data:

would compute to:

where:

- basically points to the left has a very low probability of being $y=1$

- points to the far right is almost certainly $y=1$

In fact, it can be proven that this is exactly a sigmoid function

\[p(y=1|x,\phi, \Sigma, \mu_0, \mu_1) = \frac{1}{1+\exp(-\theta^T x)}\]for $\theta$ being some appropriate function of $\phi, \Sigma, \mu_0, \mu_1$.

Take Away Message

The above basically argues that if $p(x\vert y)$ is multivariate gaussian (with shared $\Sigma$), i.e.

\[\begin{align*} y &\sim \text{Bernoulli}(\phi) \\ x|y =0 &\sim \mathcal{N}(\mu_0, \Sigma) \\ x|y =1 &\sim \mathcal{N}(\mu_1, \Sigma) \end{align*}\]then $p(y\vert x)$ necessarily follows a logistic function:

\[\begin{align*} p(y=1|x) &= \frac{1}{1+e^{-\theta^T x}}\\ p(y=0|x) &=1- \frac{1}{1+e^{-\theta^T x}} \end{align*}\](in fact, this is true for any pair of distributions from the exponential family)

The converse, however, is not true. So in a way the GDA algorithm makes a stronger set of assumptions.

- but this means that if assumptions in GDA are correct, then GDA does better than logistic regression since you are telling it more information.

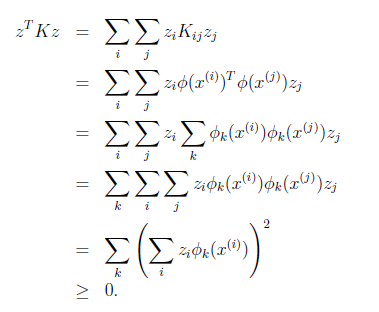

Naïve Bayes

In GDA, the feature vectors $x$ were continuous, real-valued vectors. Let’s now talk about a different learning algorithm in which the $x$’s are discrete valued.

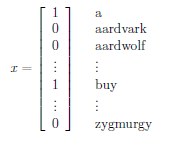

For instance, consider the case of building a email span detector. First you will need to encode text-data into feature vectors. One way to do this is to represent an email by a vector whose length is the number of words in a dictionary:

- if an email contains the $i$-th word of the dictionary, then we will set $x_i = 1$. e.g.:

- so that $x \in {0,1}^n$

The task is to model $p(x\vert y)$ and $p(y)$ for this generative algorithms.

- note that if the size of vocabulary (number of words encoded in the feature vector) is $10,000$, then other models might not work well since you have excessive number of parameters.

Reminder

-

by the chain rule of probability, we know that:

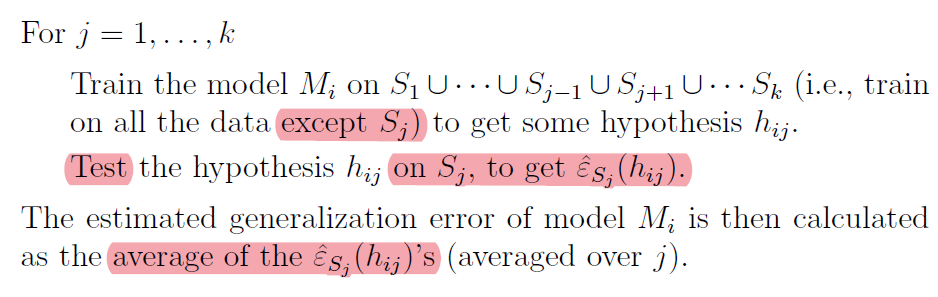

\[p(x_1, x_2, ...,x_{10,000} | y) = p(x_1|y)\,p(x_2|x_1,y)\,p(x_3|x_1,x_2,y)...p(x_{10,000}|...)\]