COMS4995 Deep Learning part1

- Logistics and Introduction

- Forward and Back Propagation

- Optimization

- Regularization

- Convolutional Neural Networks

- Sequence Models

- Graph Neural Network

- Transformers

- Generative Adversarial Network

- Deep Variational Inference

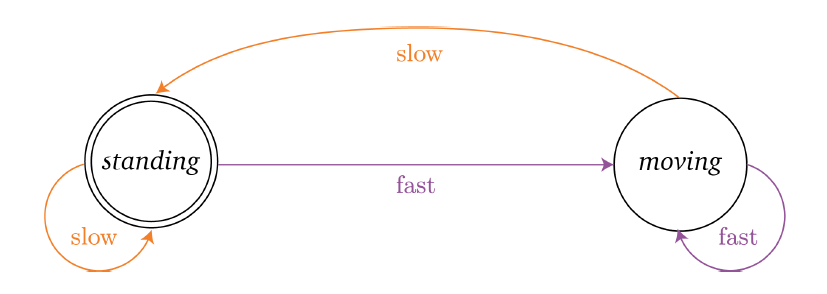

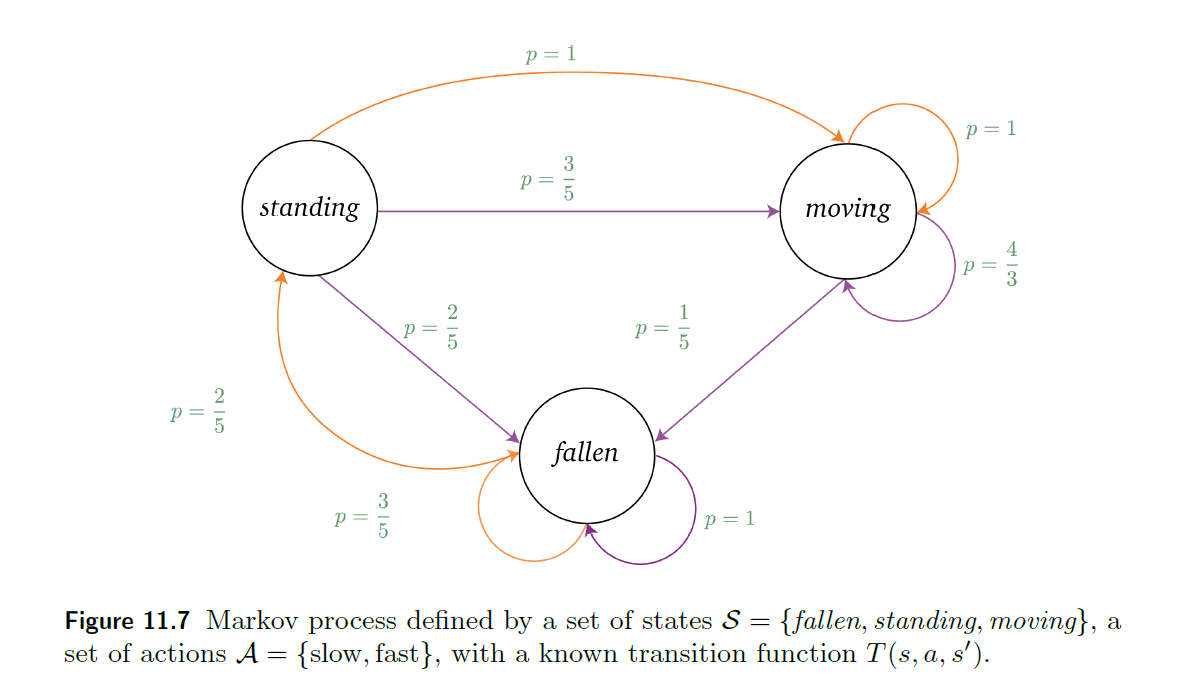

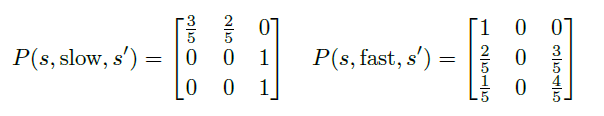

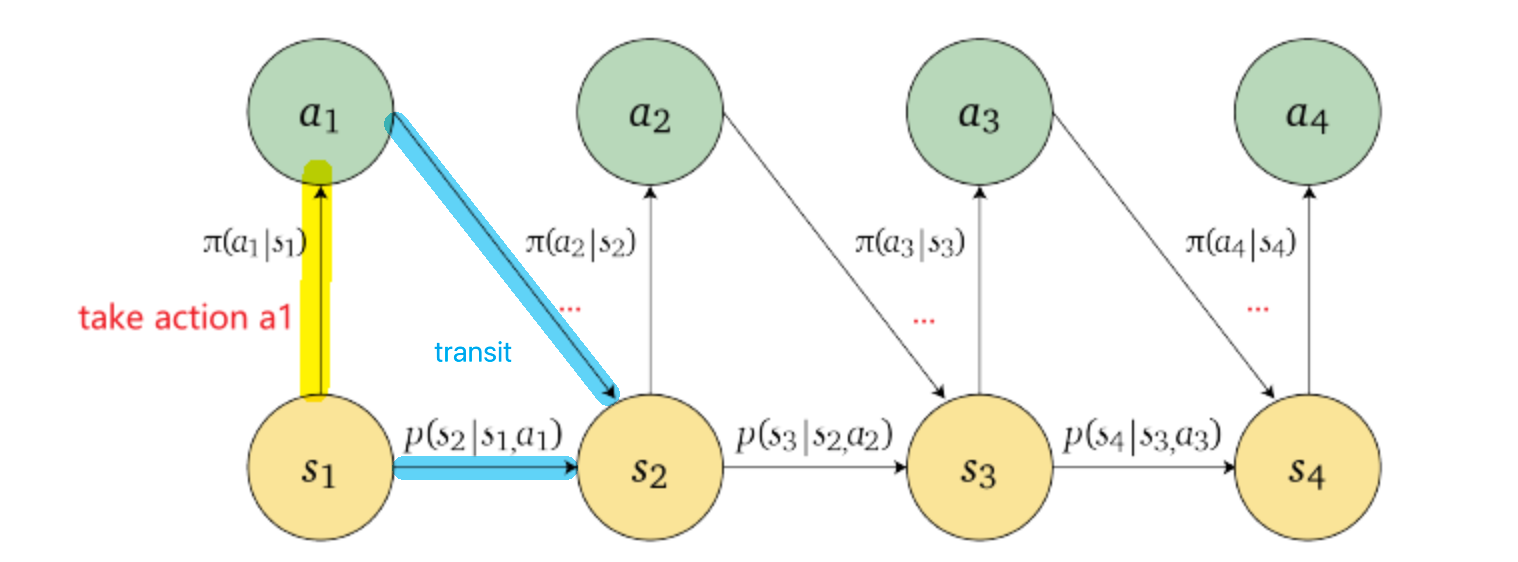

- Reinforcement Learning

- Deep Reinforcement Learning

- Optional

- Tutorials

- Competitions

Logistics and Introduction

Office hours

- Lecturer, Iddo Drori (idrori@cs.columbia.edu), Tuesday 2:30pm, Zoom (Links to an external site.)

- CA, Anusha Misra, Wednesday 3:30-4:30pm, Zoom (Links to an external site.)

- CA, Vaibhav Goyal, Friday 3-4pm, Zoom (Links to an external site.)

- CA, Chaewon Park (cp3227@columbia.edu), Thursday 3:30-4:30PM, Zoom (Links to an external site.)

- CA, Vibhas Naik (vn2302@columbia.edu), Monday 11AM-12PM, Zoom

Grades:

- 9 Exercises (30%, 3% each, individual, quizzes on Canvas)

- quizzes will timed in some of the live lectures. So attend lectures!

- Competition (30%, in pairs)

- Projects (40%, in teams of 3 students)

Projects Timeline

- Feb 18: Form Teams and Signup

- Feb 25: Select project

- Mar 10-11: Project Kick-off and proposal meetings

- Mar 31 - Apr 1: Milestone Meetings

- Apr 21-11: Final Project Meetings

- Apr 28: Project poster session

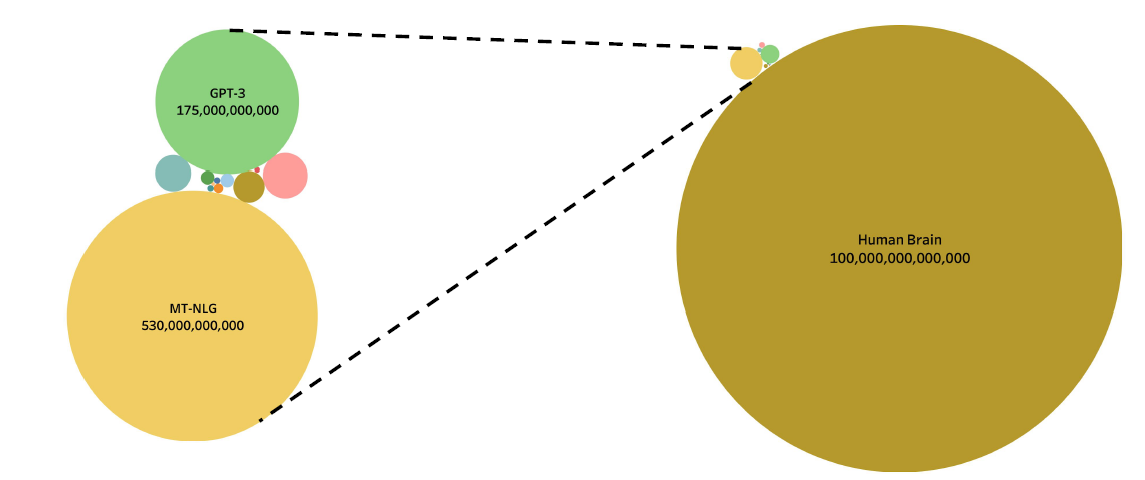

Human Brain Deep Learning

In comparison of sizes of number of neurons:

where the left hand size are the deep learning models and the right hand size the human brain

- however, it is also said that humans are “generalized”, where machines are “specialized”

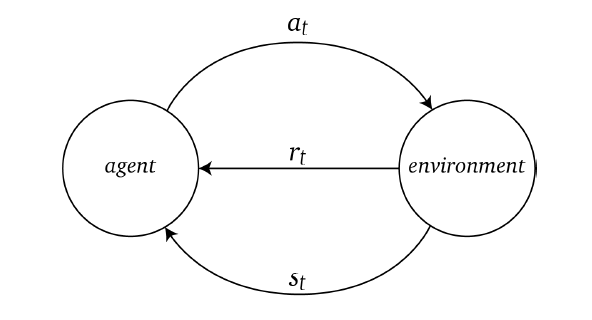

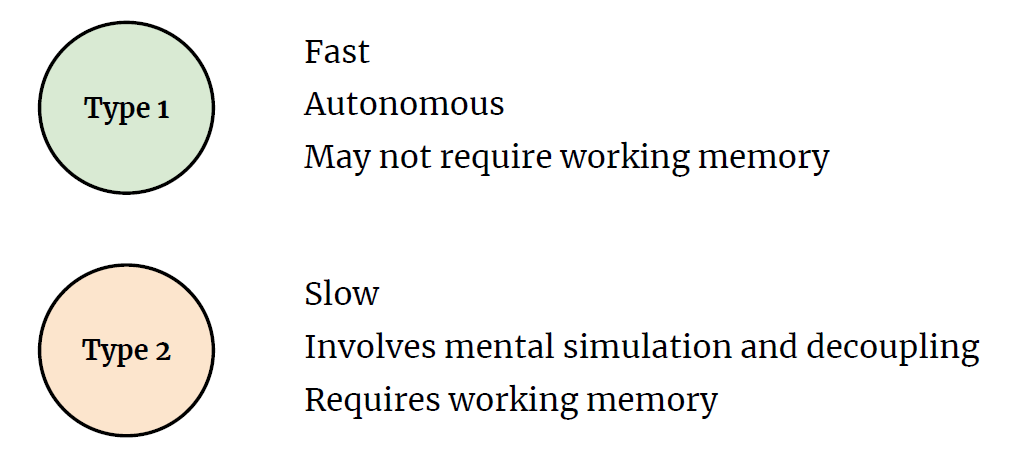

What happens

|  |

|  |

| ———————————————————— | ———————————————————— |

|

| ———————————————————— | ———————————————————— |

- Type 2 process, your pupil will dilate (slow)

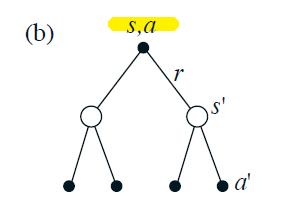

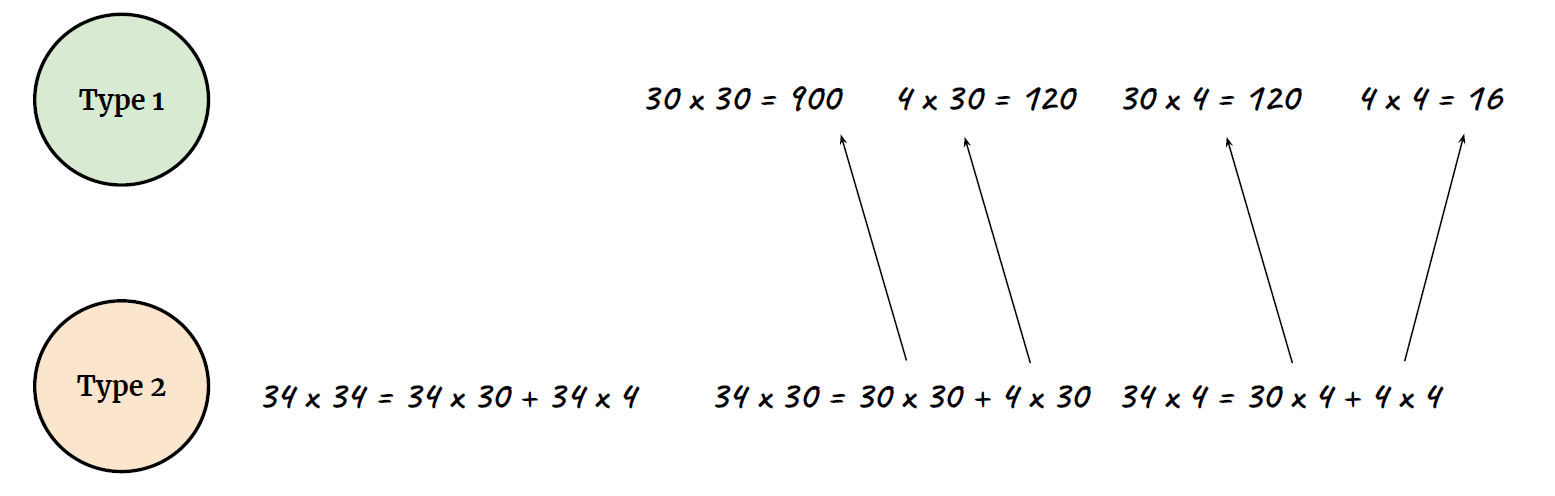

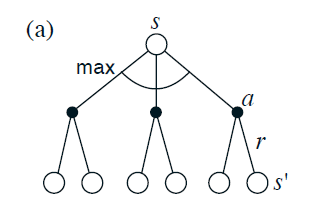

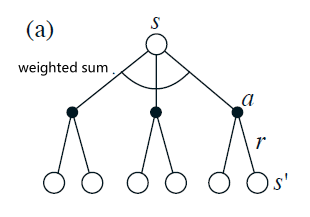

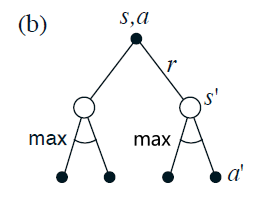

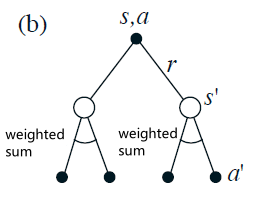

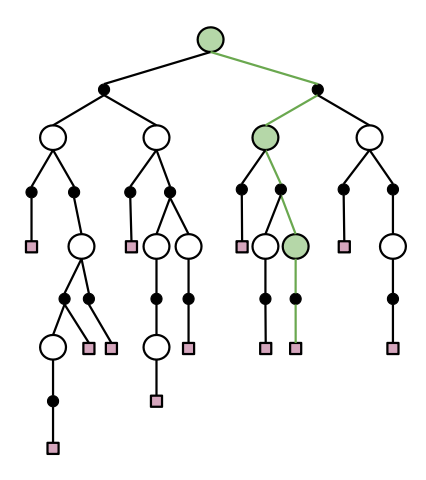

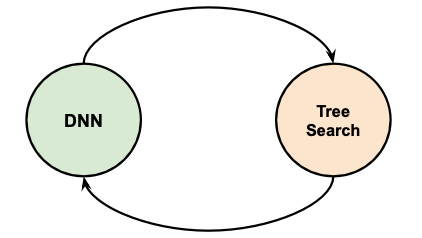

Then, in AlphaGo, as well as other models, essentially it is doing:

where the

- neural networks DNN are doing Type 1 processes

- tree search doing Type 2. (e.g. Monte Carlo Tree Search)

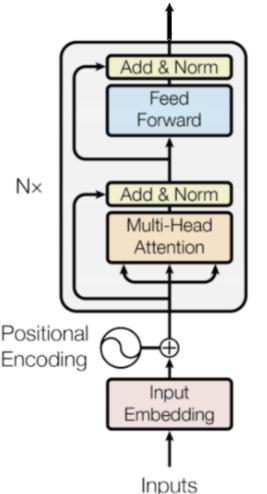

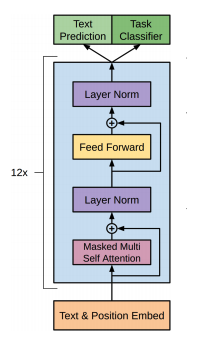

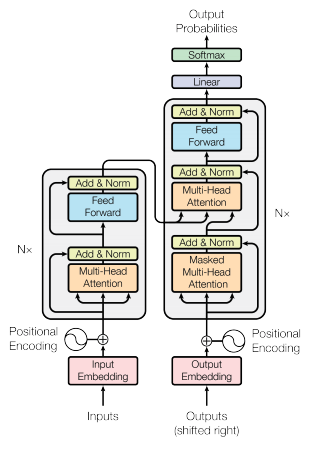

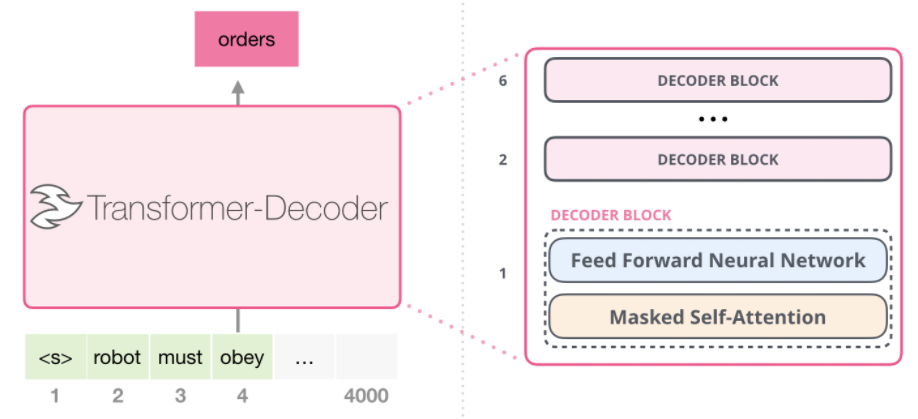

Intro: Transformers

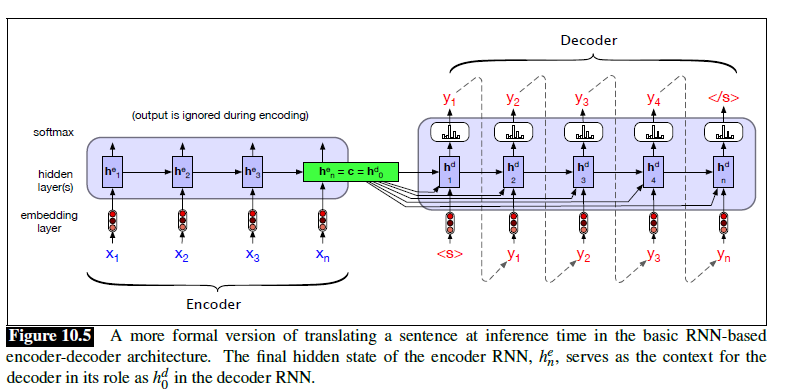

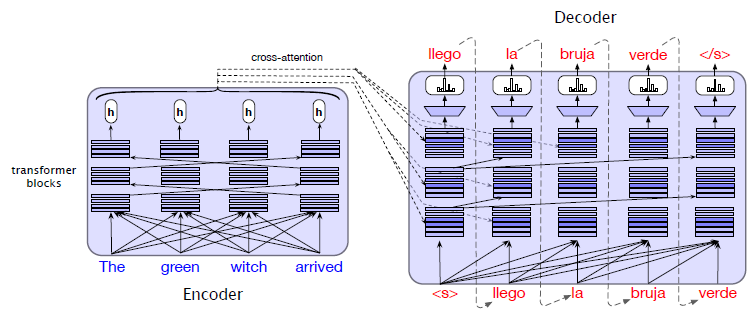

The paper ‘Attention Is All You Need’ describes transformers and what is called a sequence-to-sequence architecture. Sequence-to-Sequence (or Seq2Seq) is a neural net that transforms a given sequence of elements, such as the sequence of words in a sentence, into another sequence. (Well, this might not surprise you considering the name.)

Seq2Seq models are particularly good at translation, where the sequence of words from one language is transformed into a sequence of different words in another language. A brief sketch of how it works would be:

- Input (e.g. in English) passes through an encoder

- Encoder takes the input sequence and maps it into a higher dimensional space (imagine translating it to some imaginary language $A$)

- Decoder takes in the sequence in the imaginary language $A$ and turns it into an output sequence (e.g. French)

Initially, neither the Encoder or the Decoder is very fluent in the imaginary language. To learn it, we train them (the model) on a lot of examples.

- A very basic choice for the Encoder and the Decoder of the Seq2Seq model is a single LSTM for each of them.

More details:

-

https://medium.com/inside-machine-learning/what-is-a-transformer-d07dd1fbec04

-

https://towardsdatascience.com/transformers-141e32e69591

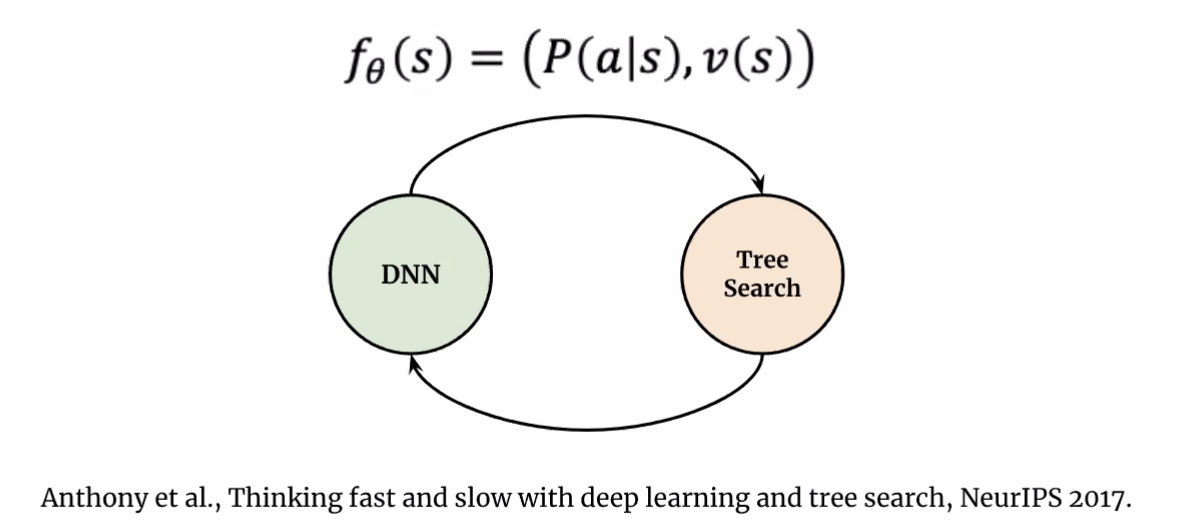

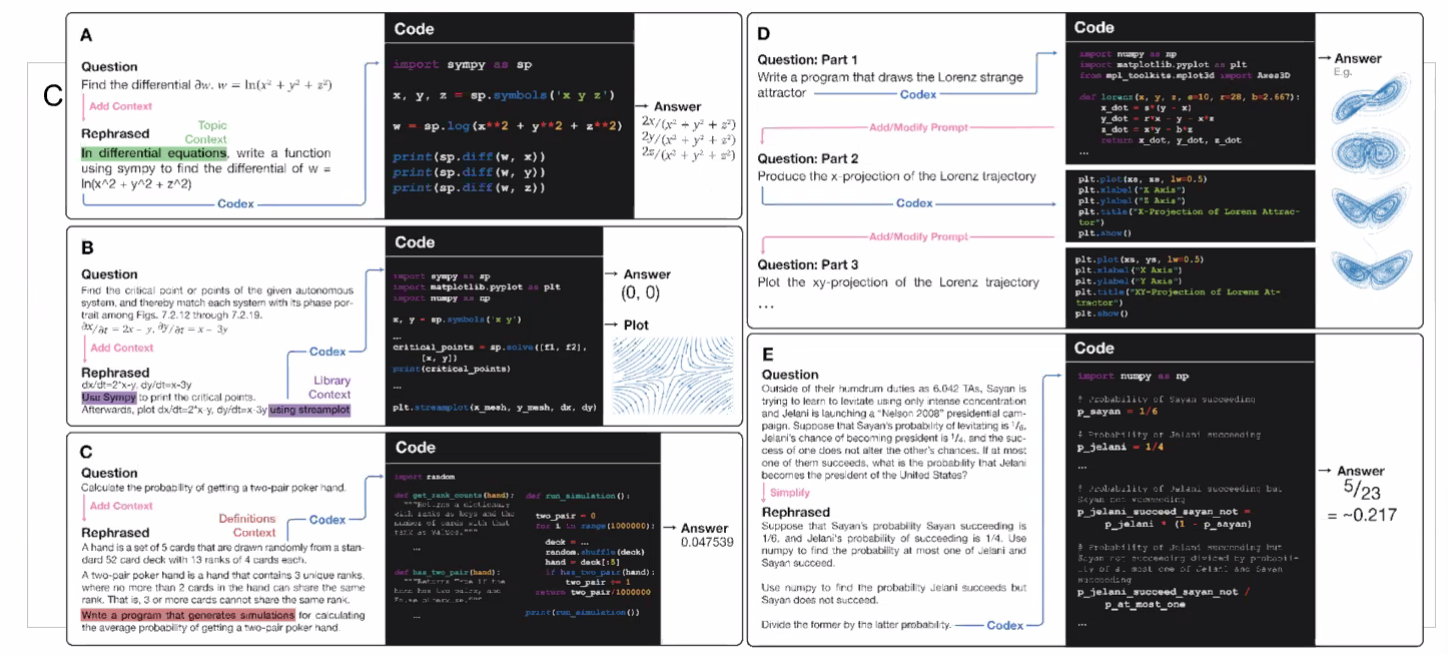

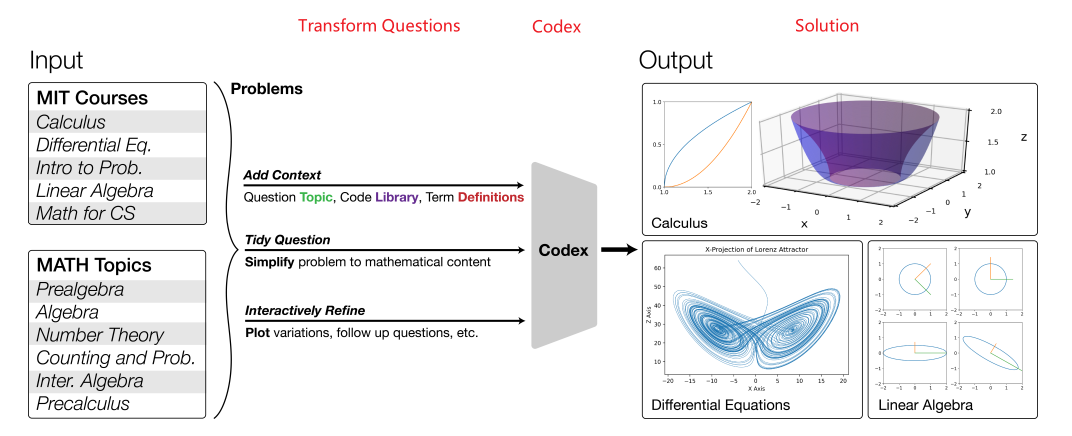

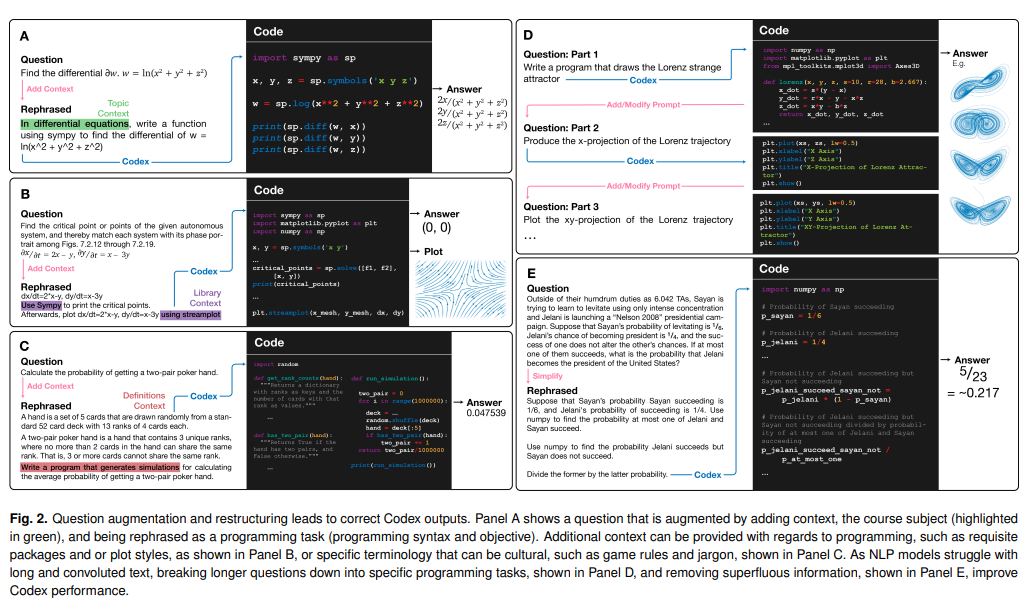

Example Application

Consider the task of trying to use AI to solve math problems (e.g. written in English)

It turns out that using language models, it doesn’t work if you want to do those math questions. However, if you turn math questions into programs, then it worked!

Then, some example output of this using Codex would be:

notice that questions will need to be able to rephrased/transformed so that it is clearly a programming task, before putting into learning models.

- this also means a full automation would be difficult

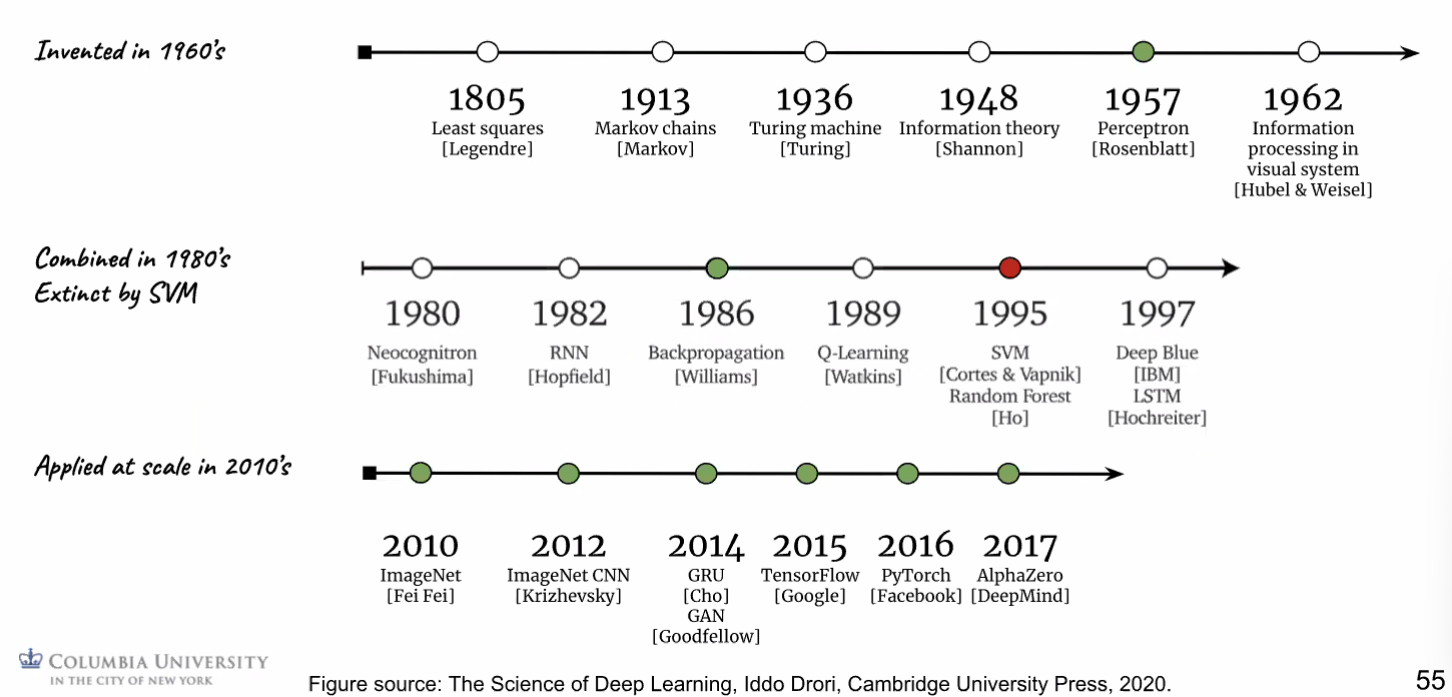

DL Timeline

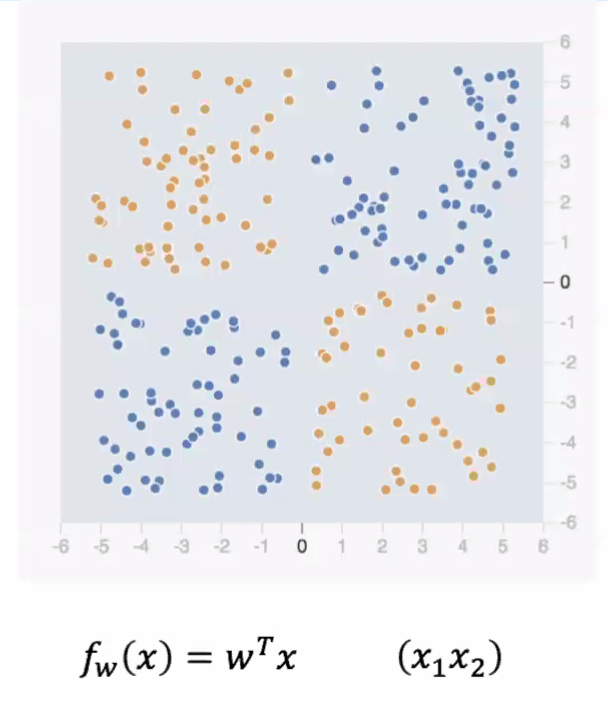

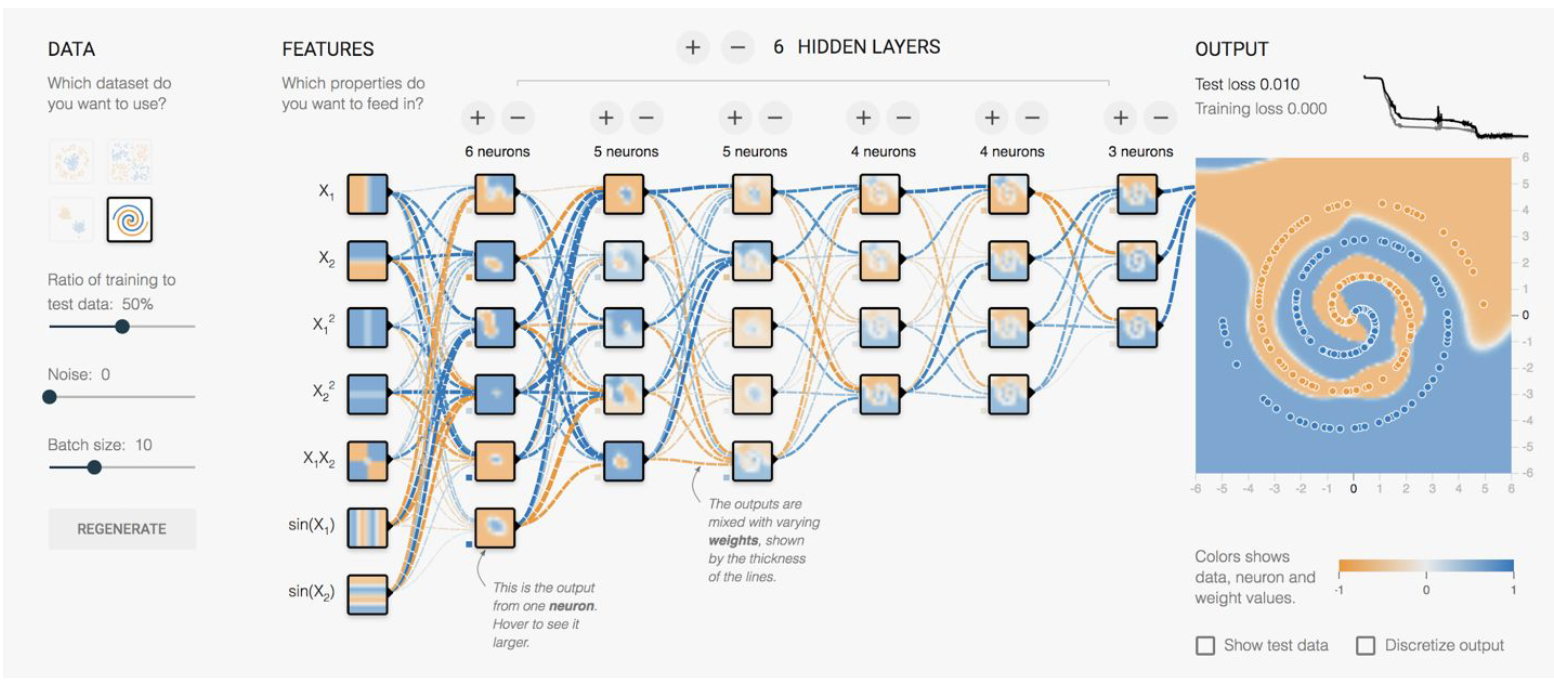

Supervised Deep Learning Example

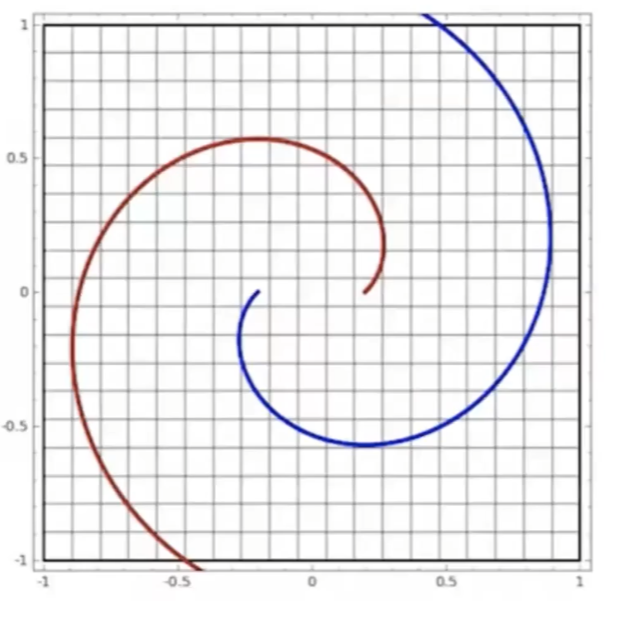

Consider the following data, and we want to use a linear model to separate the data

notice that by default, a linear transformer does not work. Hence we need to consider $x_1x_2$ as a feature

- the idea is that we may want to consider feature extraction/processing before putting them into the network

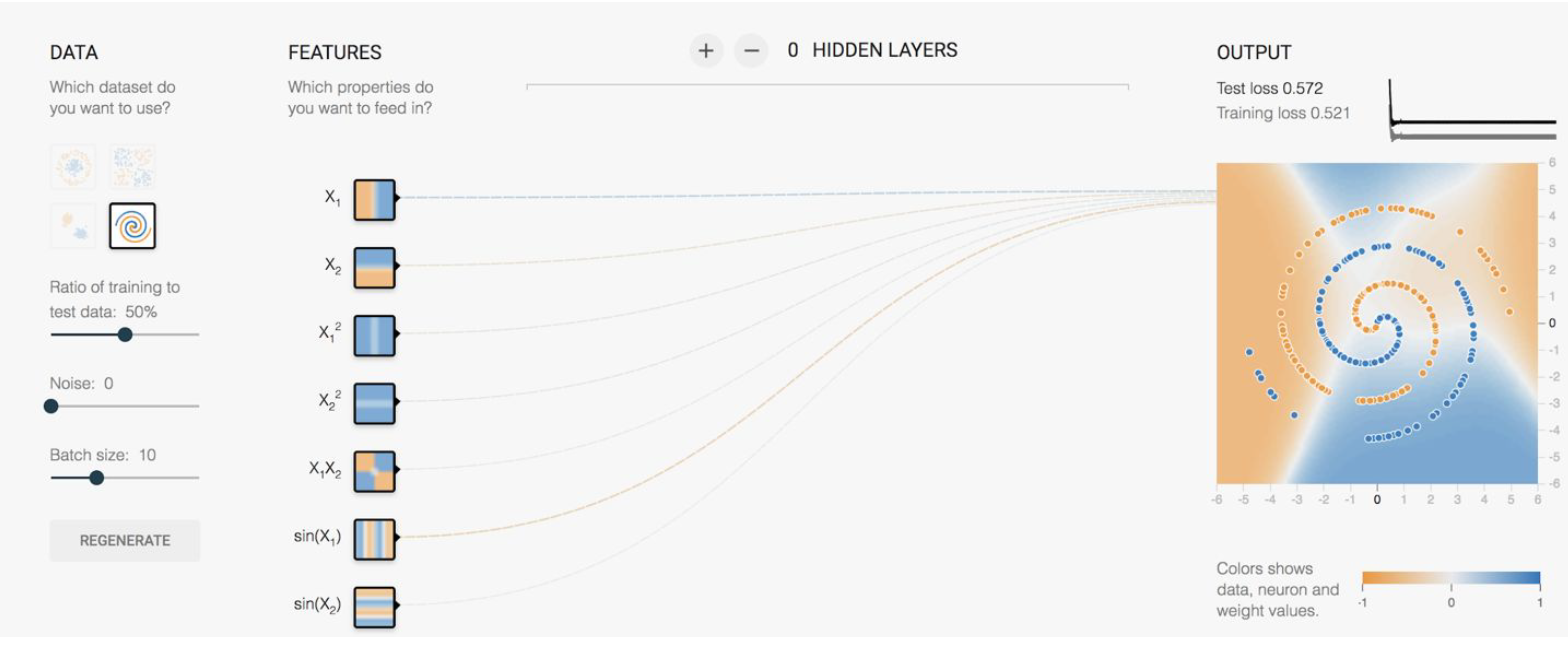

However, what if we are given the following data:

where there doesn’t seem to be a clear/easy solution if we stick with a linear classifier even with some single layer feature transformation. As a result, in this case you will have to use a neural network:

the upshot is that we really need to consider extracting features and use linear classifiers before using deep neural network, which is necessary only in some cases like the one above.

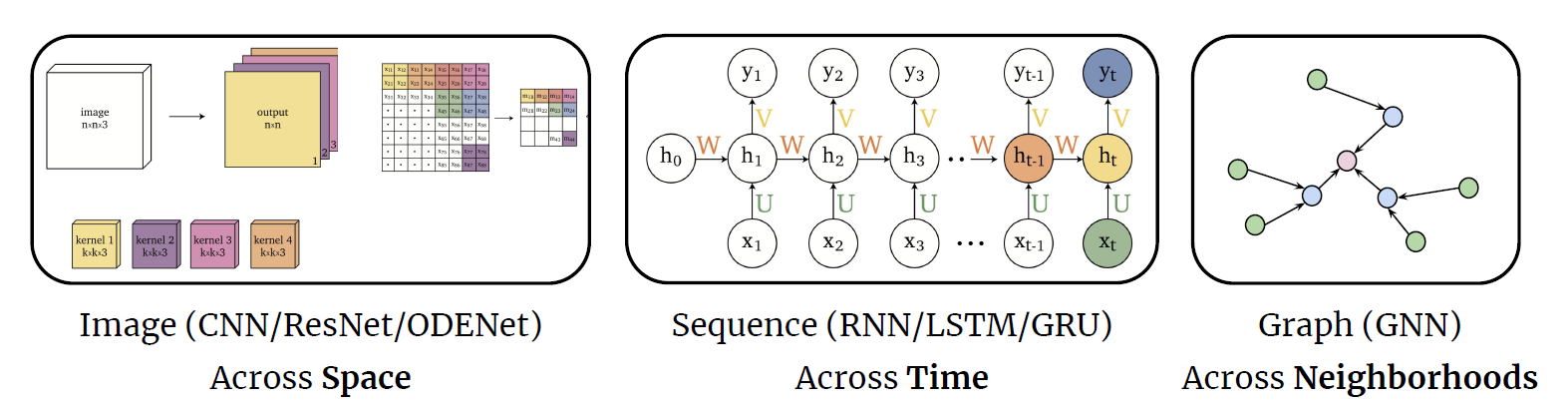

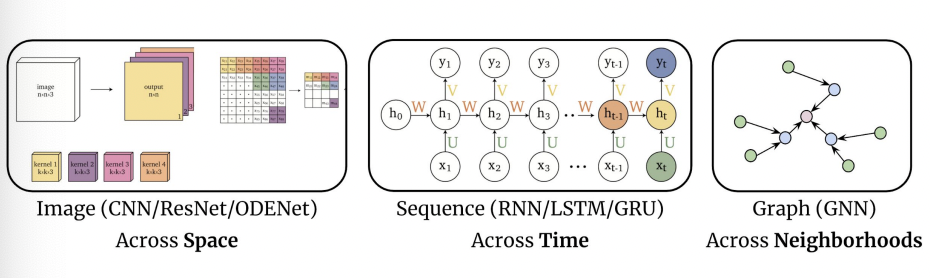

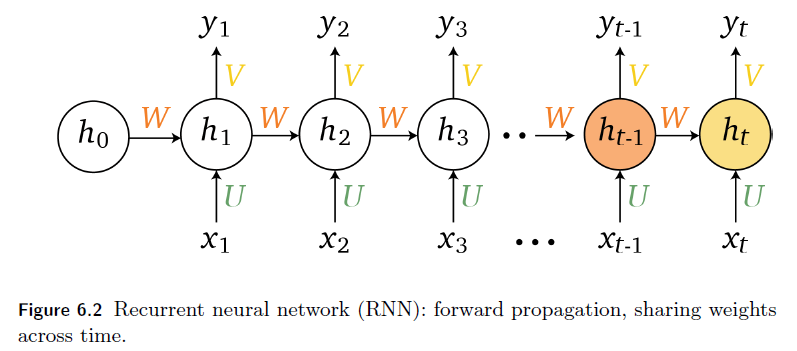

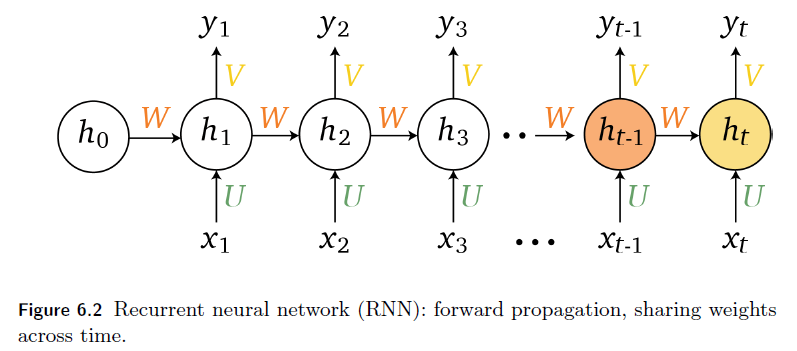

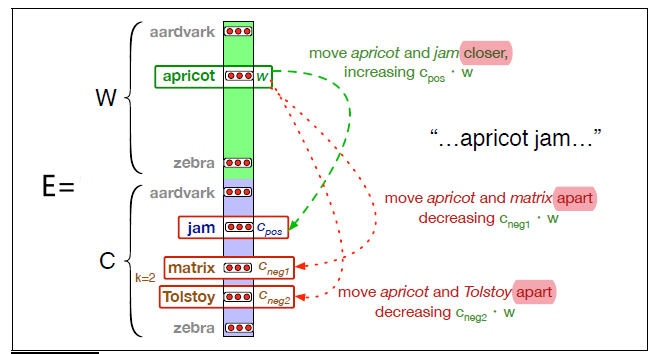

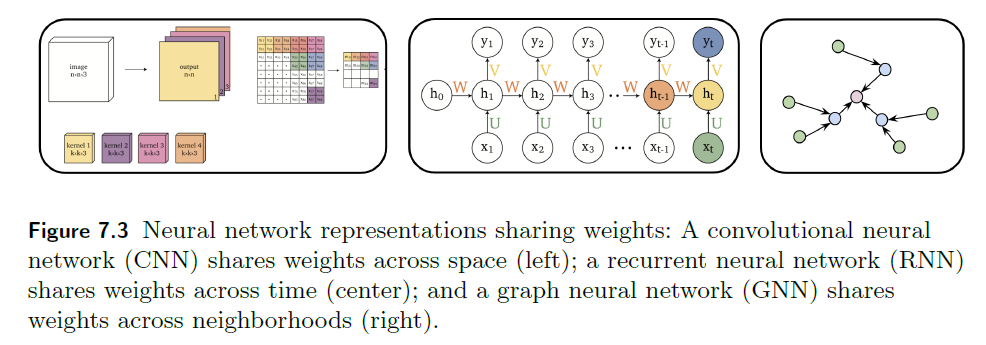

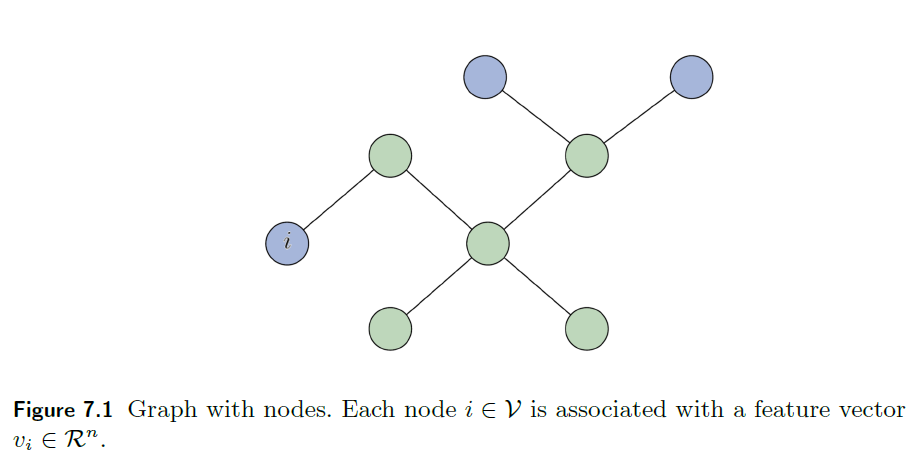

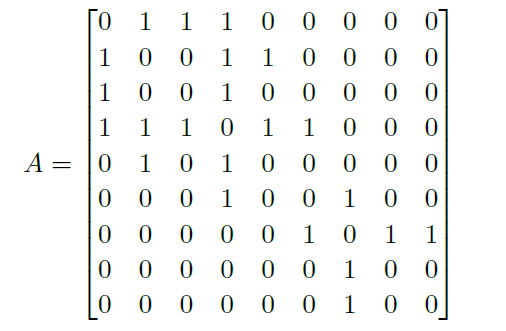

Representations Sharing Weights

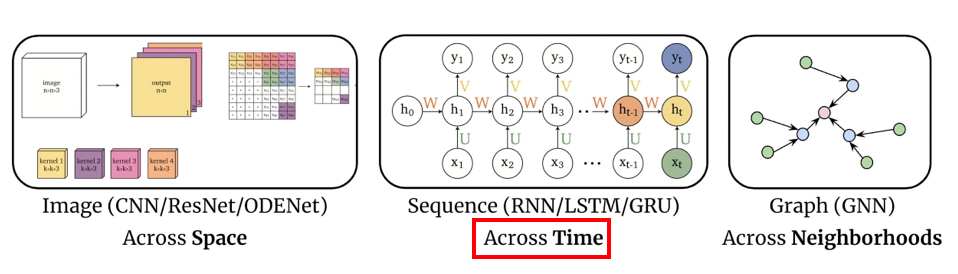

Some nowadays popular NN architectures are:

where notice that one common feature that made them successful is to share weights $W$ between layers/neurons:

-

CNN share $W$ through space

-

RNN share $W$ through time

-

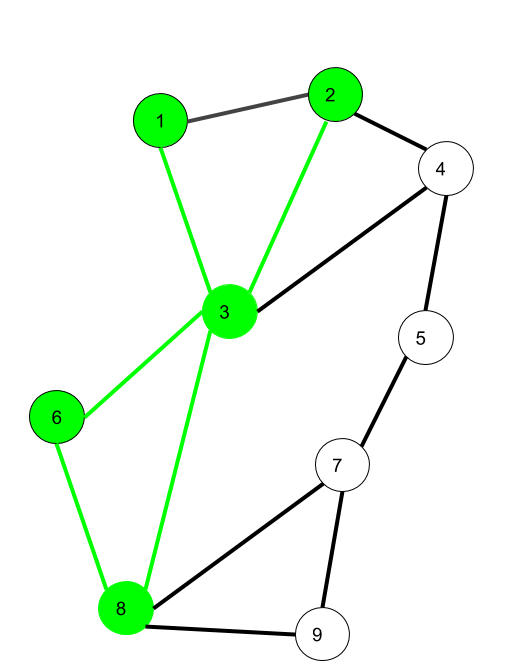

GNN share $W$ across neighborhoods

Forward and Back Propagation

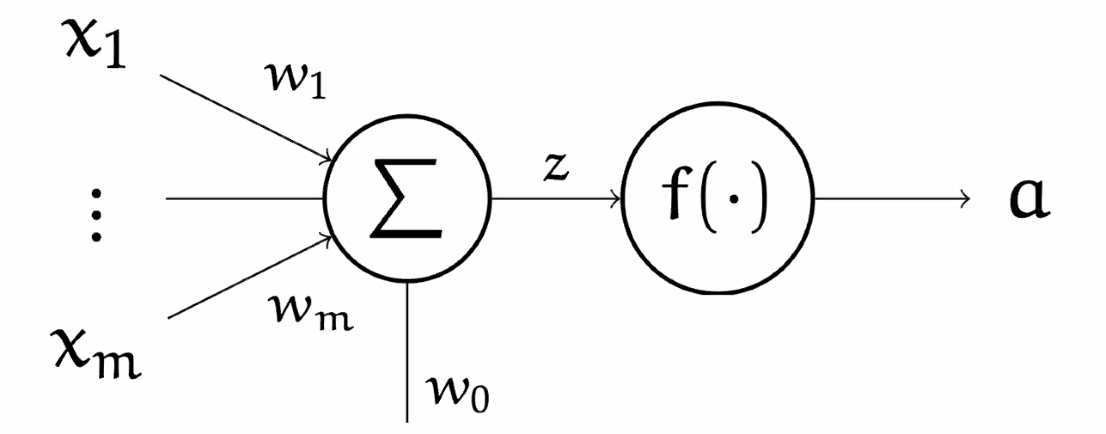

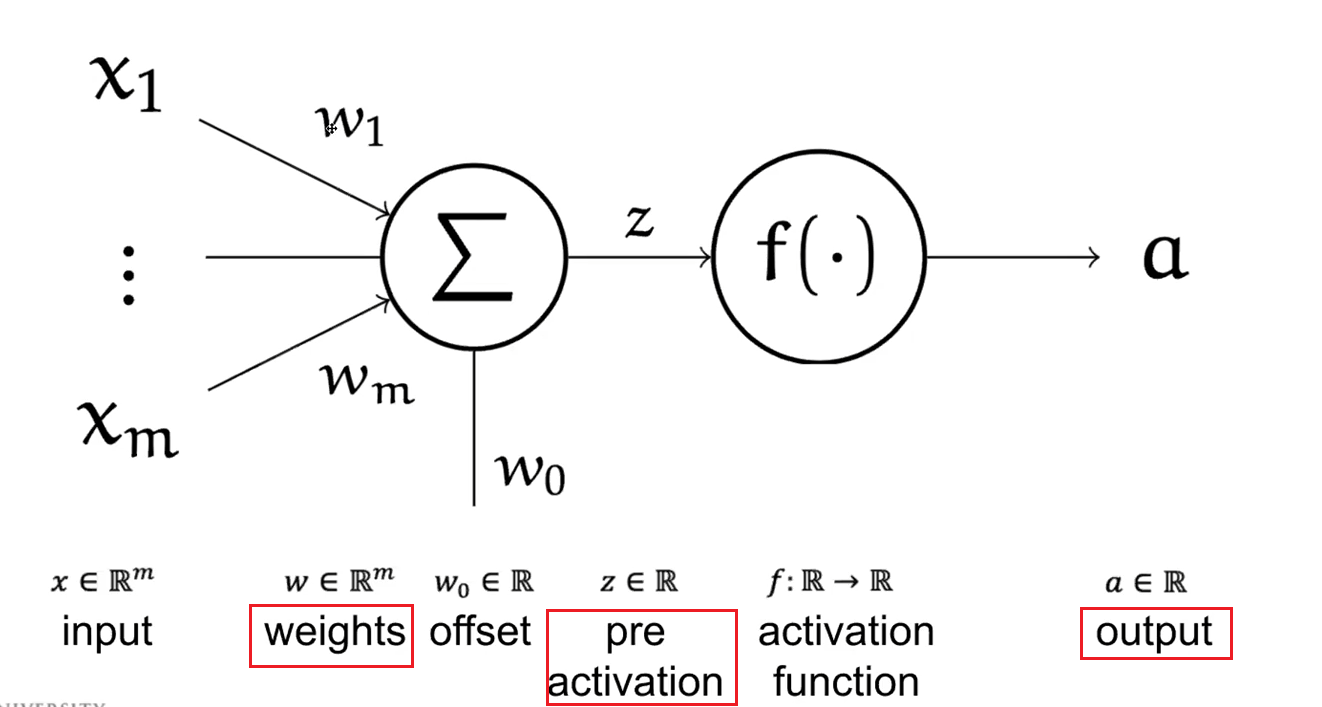

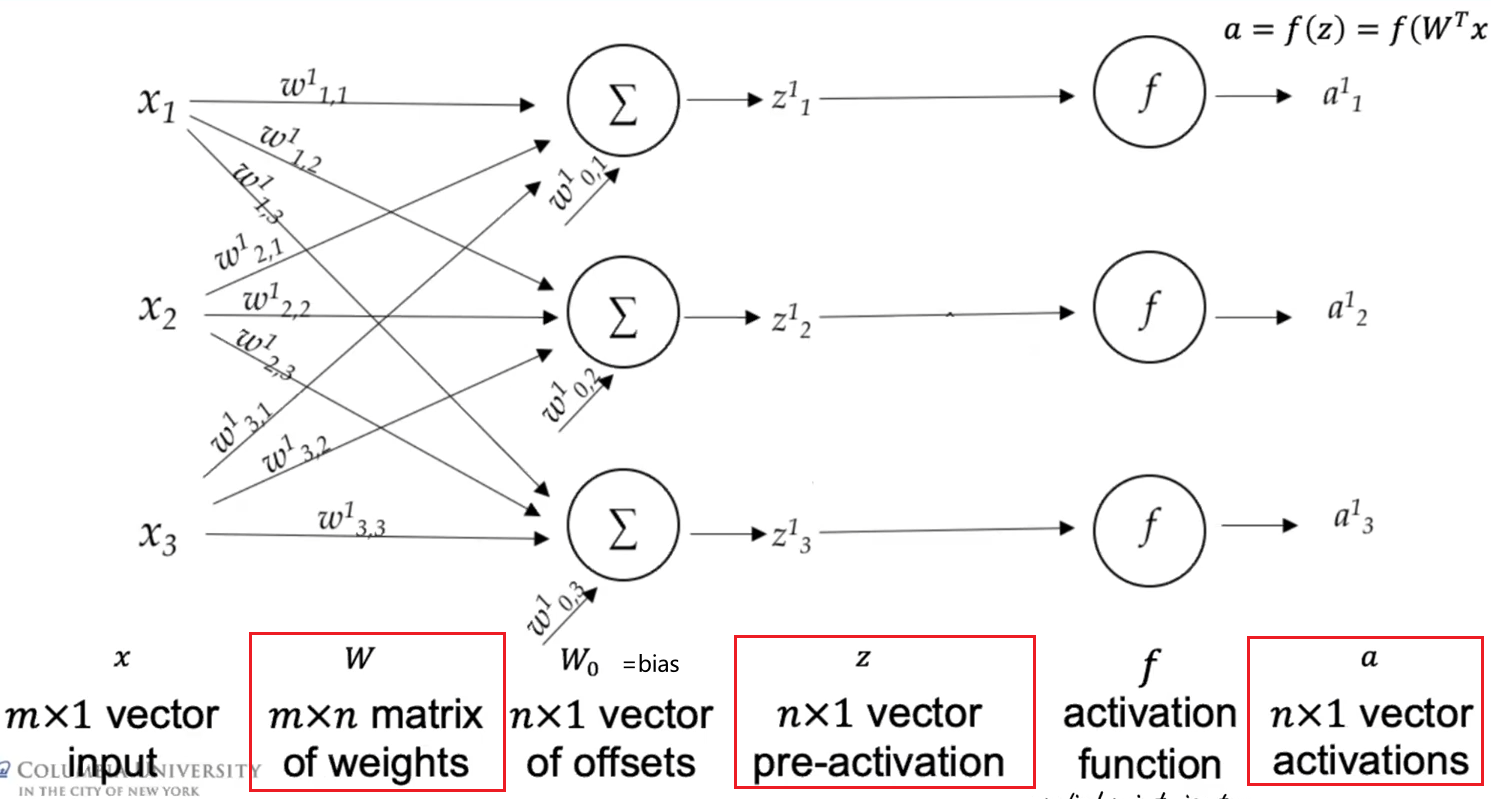

The basics of all the NN is a Neuron/Perceptron

where:

- input is a single vector

- the $\Sigma$ represents we are summing the components of $\vec{x}$ ($w_0$ is a scalar representing bias)

- then the scalar is passed into an activation function $f$, often non-linear

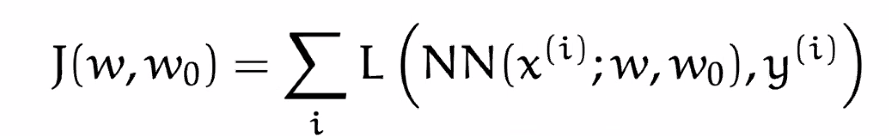

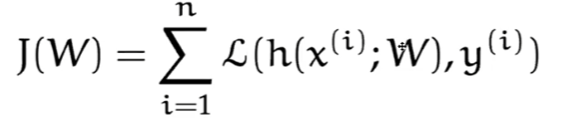

Now, remember that our aim is to minimize loss with a given training label:

where $\mathcal{L}$ is a loss function of our choice:

- this is summed over all the training $m$ samples

- $w_0$ will represent the bias, often/later absorbed into $W$

- our objective is to minimize this $J$

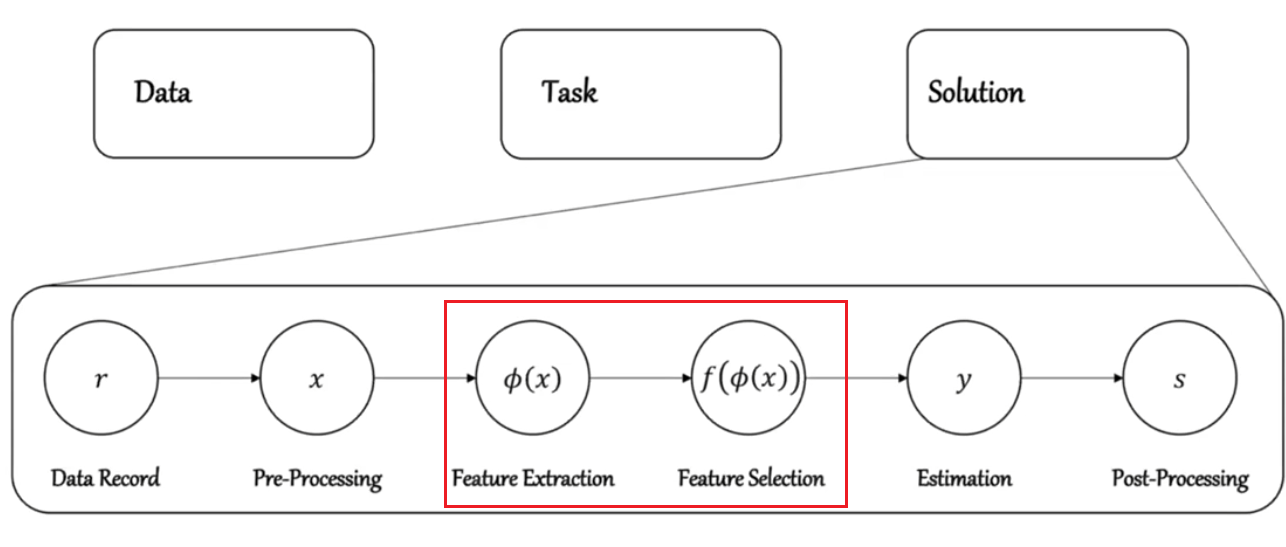

Note

Remember that for any Learning task

Before the step of feature extraction and selection, you may want to pay careful attention on how to do feature extraction/selection to improve your model.

Neural Networks

The simplest component is a Neuron/Perceptron, whose mathematical model was basically finding:

\[g(\vec{x}) = w^T\vec{x}+w_0 = 0\]and doing the following for classification:

\[f(x):= \begin{cases} +1 & \text{if } g(x) \ge 0\\ -1 & \text{if } g(x) < 0 \end{cases} \quad = \text{sign}(\vec{w}^T\vec{x}+w_0)\]However, since the output is $\text{sign}$ function which is not differentiable, in Neural Network we will use $\sigma$ sigmoid instead. This also means that, instead of using Perceptron Algorithm to learn, we can use backpropagation (basically a taking derivatives using chain rule backwards).

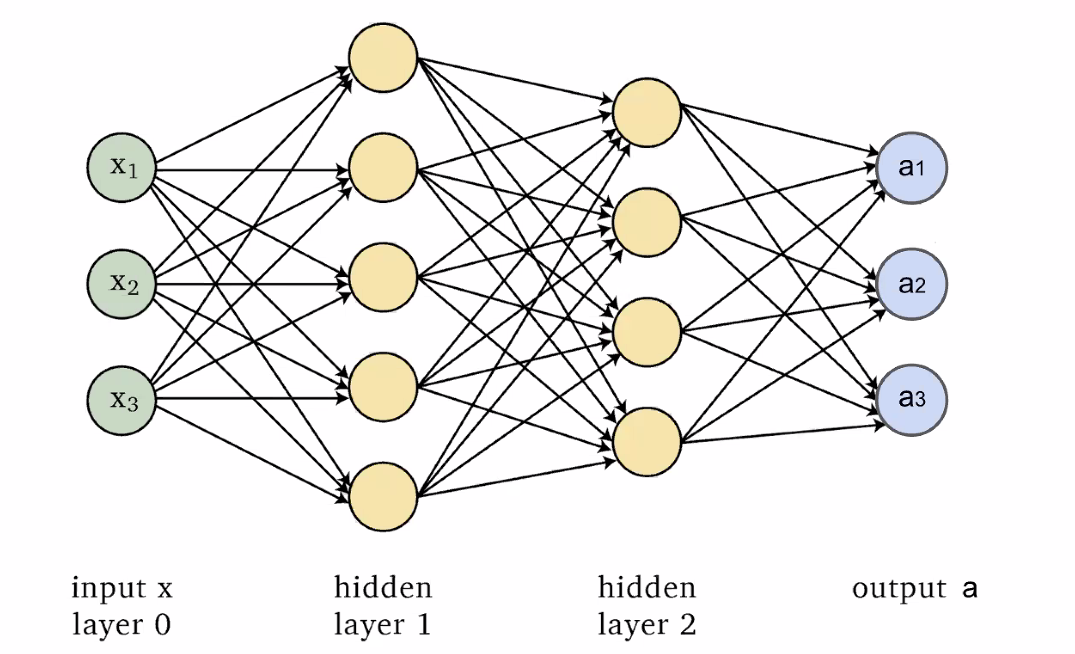

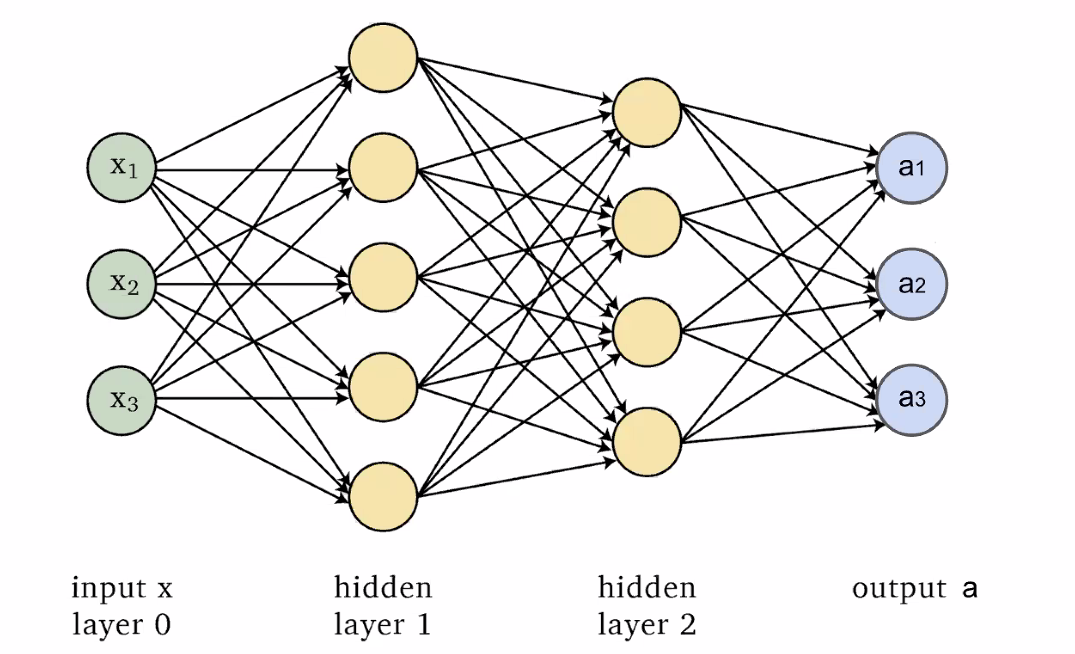

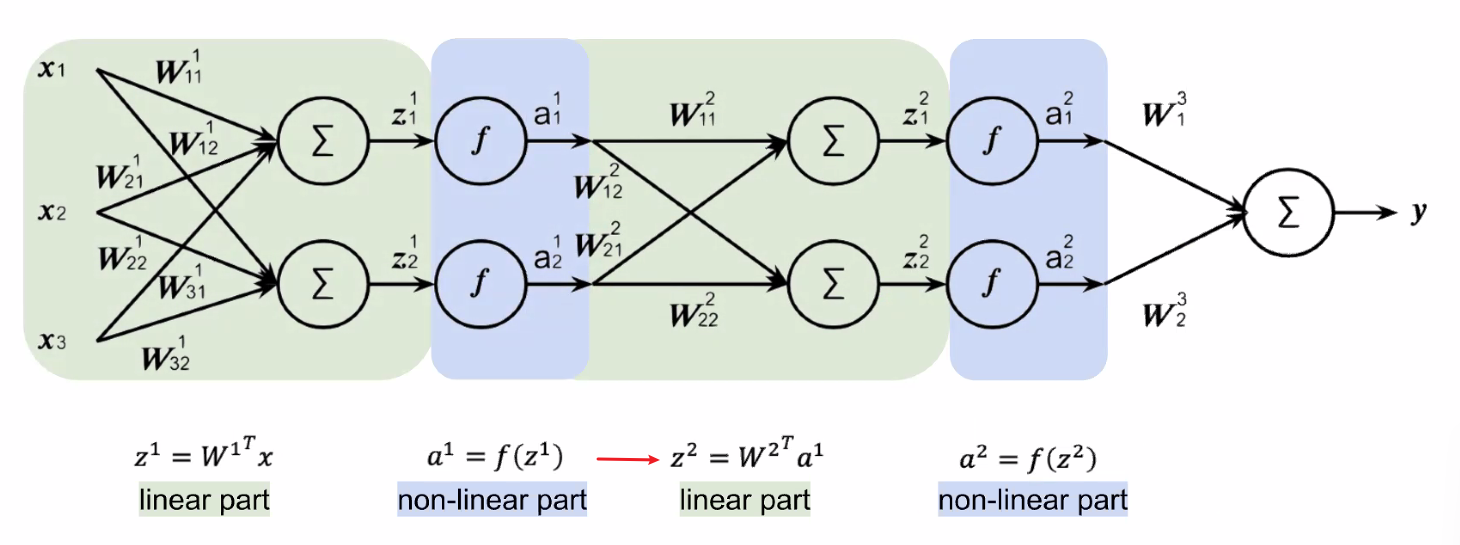

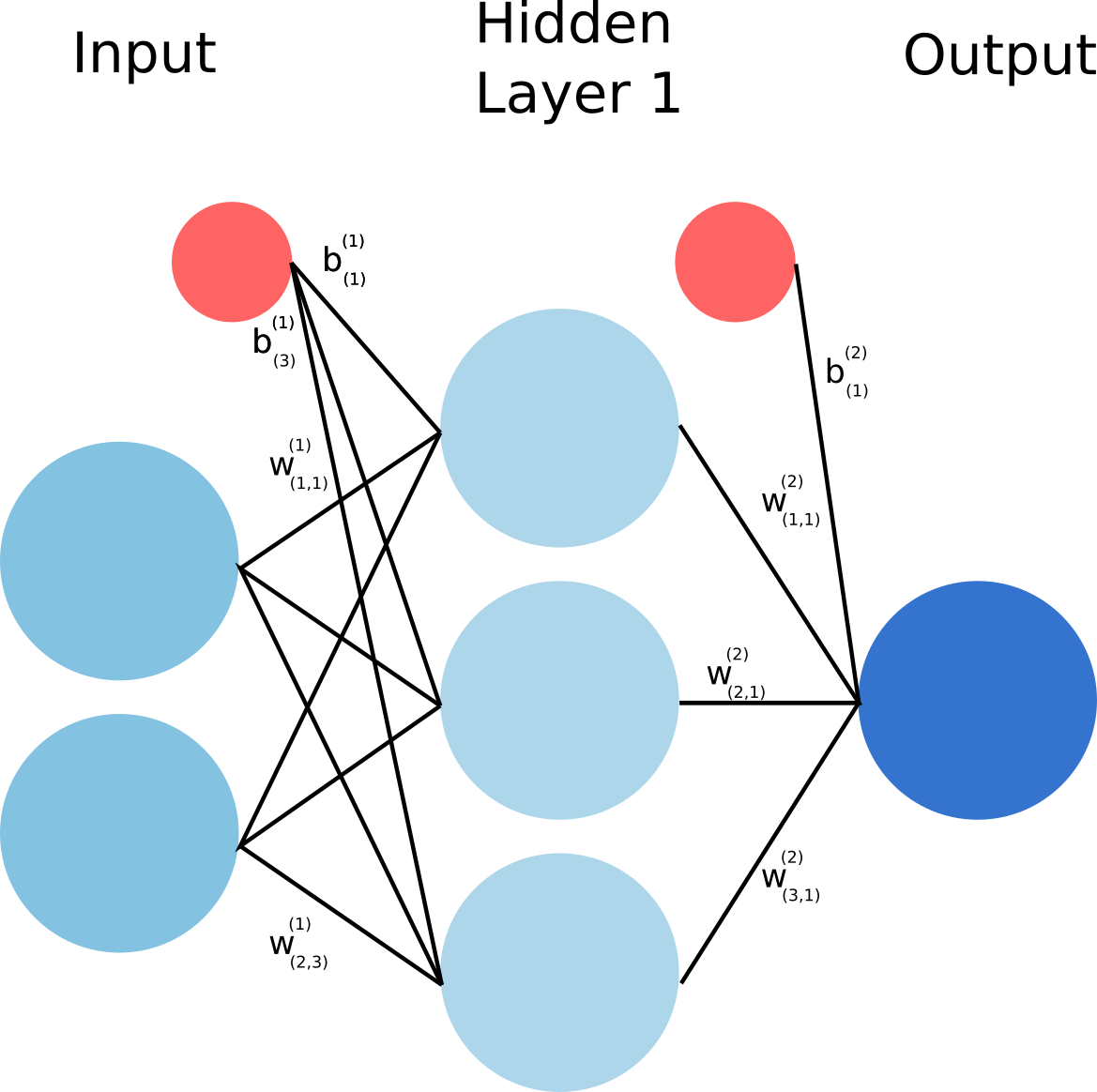

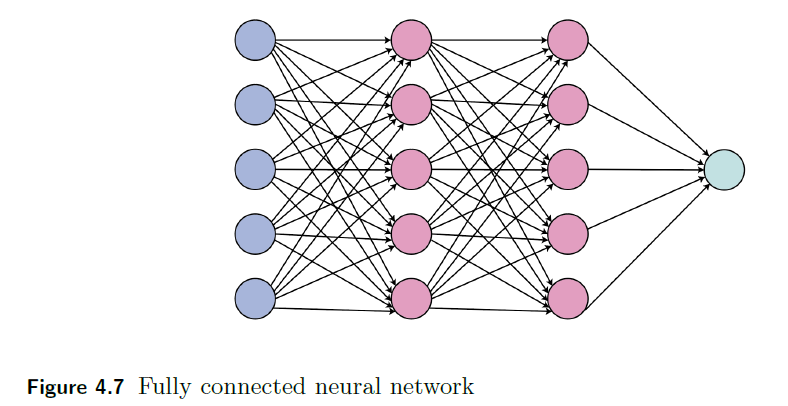

That said, a Neural Network basically involves connecting a number of neurons:

where since at $\Sigma$ we are just doing $\vec{w}^T \vec{x}$, which is a linear combination, we used the $\Sigma$ symbol.

Then, combining those neurons in a fully connected network:

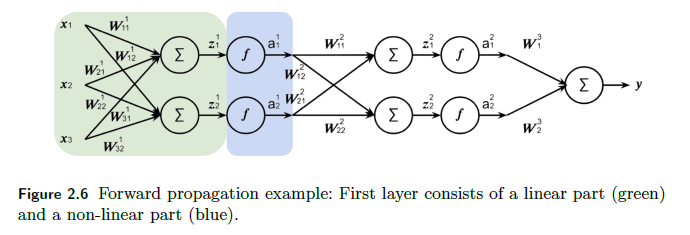

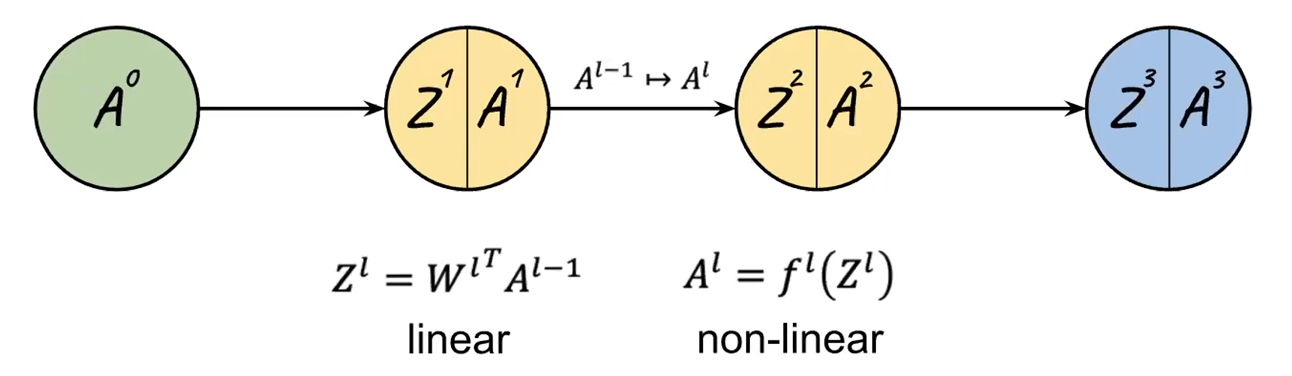

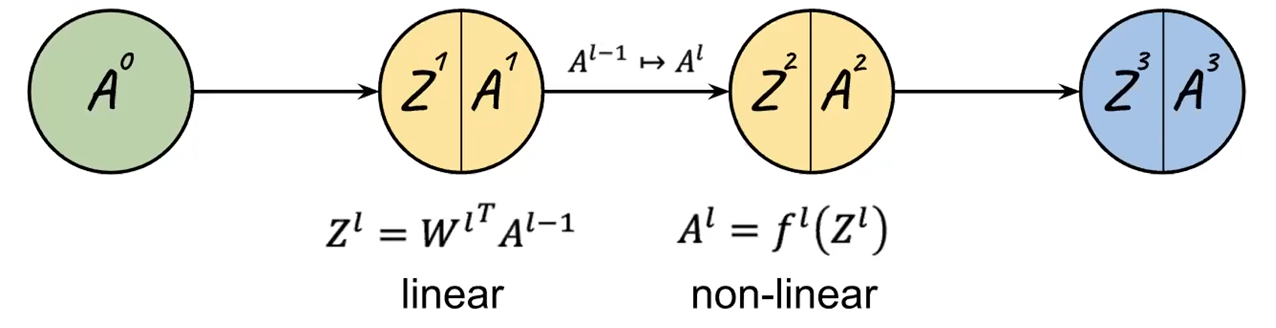

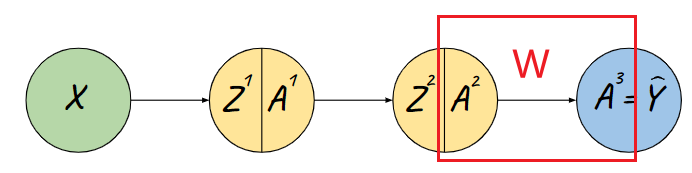

Conceptually, since each neuron/layer $l$ basically does two things, from the book:

Hence each layer can be expressed as:

\[z^l = W^l \vec{a}^{l-1},\quad \vec{a}^l = f(z^l)\]where we assumed a single data point input (i.e. a vector instead of a matrix), but note that:

-

$Z^l$ is the linear transformation, $a^l$ is the $\sigma$ applied to each element if activation is $\sigma$.

Therefore, since we are just doing a bunch of linear transformation (stretchy) and passing to a sigmoid (squash):

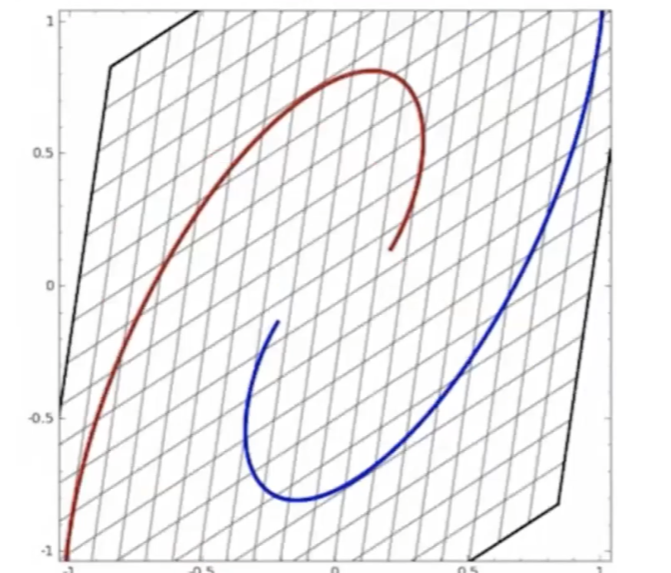

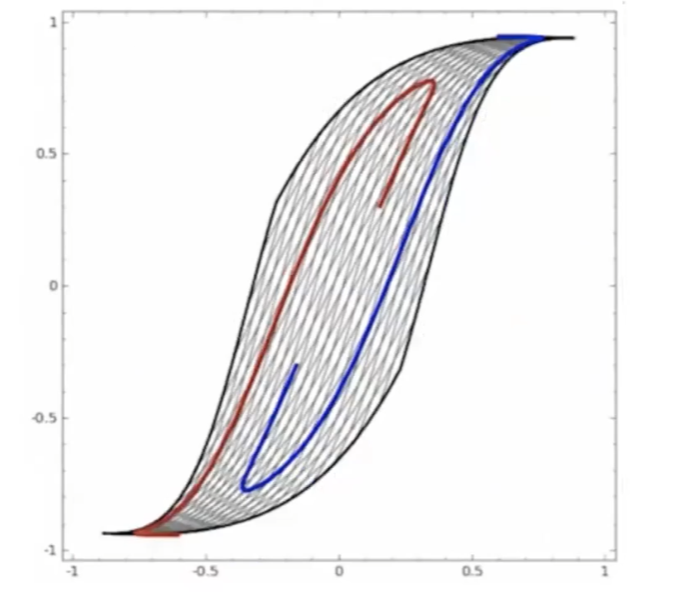

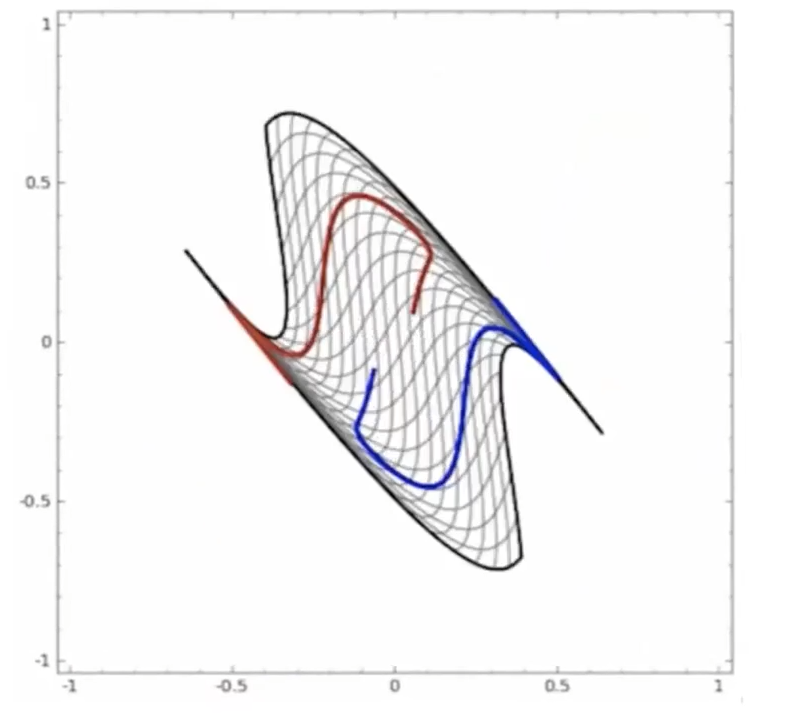

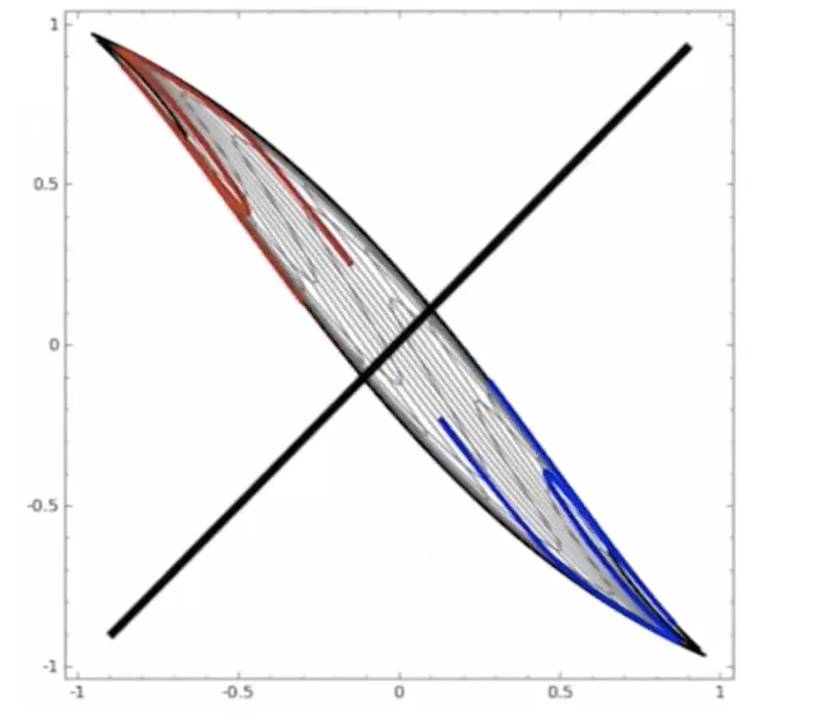

Start

where:

- at the start, the red will be labeled as $+1$, and blue $-1$ in a space perpendicular to the graph

- the last one looks separable.

-

This can be easily gerealized when you have $n$ data points, so that:

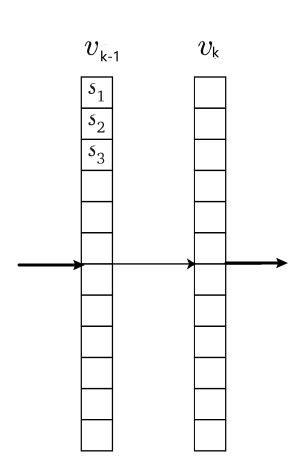

\[Z^l = (W^{l})^T A^{l-1},\quad A^l = f(Z^l)\]basically you have now matrices as input and output, weights are still the same. To be more precise, a layer then looks like

so that you are doing $z^l = (W^{l})^T a^{l-1}+W_0$, if again, we have a single data point input of $m$ dimension. Yet often we would have absorbed the $W_0$ by lifting, so that we have:

\[\vec{a} = \begin{bmatrix} a_1\\ \vdots\\ a_m\\ 1 \end{bmatrix} \in \mathbb{R}^{m+1}, \quad W^T \leftarrow [W^T, W_0] \in \mathbb{R}^{n \times (m+1)}\]

In general, if there are $n_l$ neurons in the $l$-th layer, then at the $l$-th layer:

\[A^{l-1} = \text{input} \in \mathbb{R}^{(n_{l-1}+1)) \times m},\quad (W^l)^T \in \mathbb{R}^{n_l \times (n_{l-1} + 1)}\]where $n_0 = m$ is the dimension of the data, and we absorbed in the bias.

- a quick check is to make sure that $Z^l = (W^l)^T A^{l-1}$ works

- a visualization is that data points are now aligned vertically in the matrix

Neuron Layer

To make it easier to digest mathematically, we can think of each layer as a single operation. Graphically, we convert:

To this, simply three “neurons” (excluding the input):

where notice that:

-

$F(x) = f(W^Tx)$ is a shorthand for notating the entire linear and nonlinear operation. This would be nonlinear.

-

therefore, each layer $l$ basically just does:

\[F^l(A^{l-1}) = A^l\]outputting $A^l$. Hence we can do a “Markov chain” view of the NN as just doing:

\[F(x) = F^3 (F^2 (F^1(x)))\] -

if $f$ are identities, then the output is just a linear transformation since $F$ would be linear.

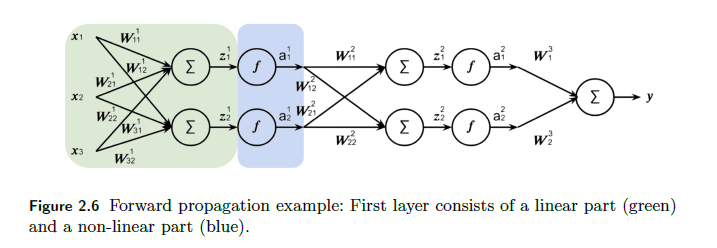

For Example:

Consider the following NN. For brevity, I only cover the first layer:

First we do the pre-activation $z^1$ for the input of a single data point of dimension $3$:

\[z^1 = (W^1)^T a^{0} = \begin{bmatrix} w_{11} & w_{21} & w_{31}\\ w_{12} & w_{22} & w_{32} \\ \end{bmatrix}\begin{bmatrix} x_{1}\\ x_2\\ x_3 \end{bmatrix} = \begin{bmatrix} z_{1}^1\\ z_2^1\\ \end{bmatrix}\]where bias would be ignored for now (otherwise there will be one more column of $[b_1, b_2^T$ for $(W^1)^T$ and a row of $1$ for $a^0$. Then the activation does:

\[a^1 = \begin{bmatrix} f(z_{1}^1)\\ f(z_{2}^1) \end{bmatrix}= \begin{bmatrix} a_1^1\\ a_2^1 \end{bmatrix}\]which will be input of the second layer. Eventually:

the last part does not have an activation since we are doing a regression instead of classification.

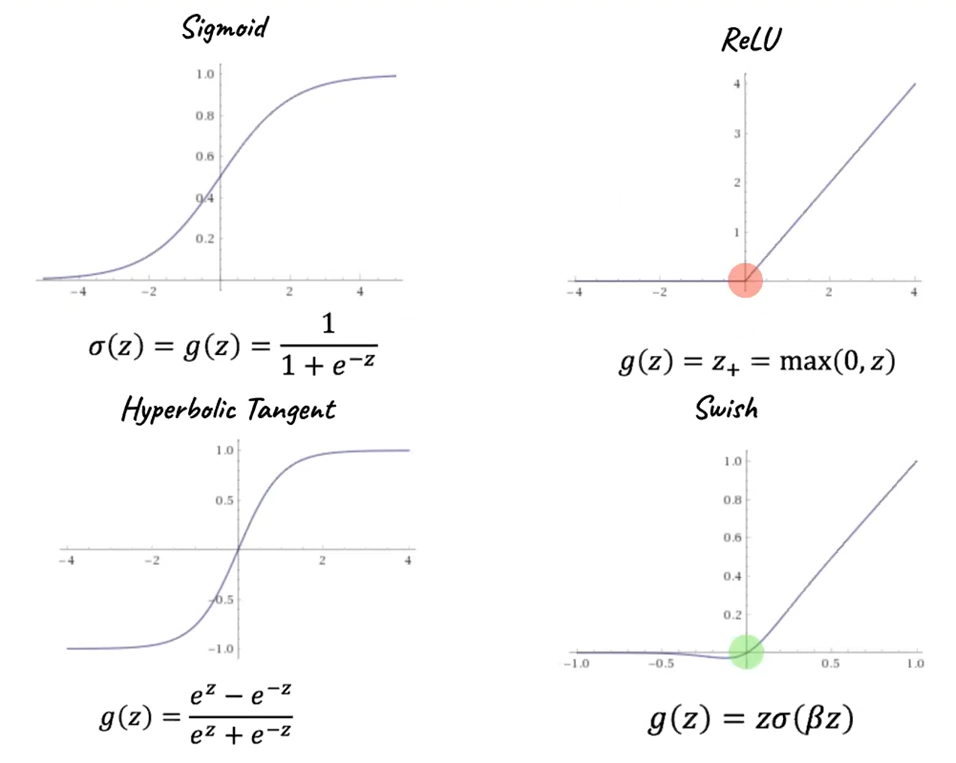

Activation Functions

The activation function always does a mapping from $f: \mathbb{R} \to \mathbb{R}$. Hence they are applied element-wise.

Common examples of activation functions include:

where:

-

a difference between Swish and ReLU is that Switch has a defined derivative at $z=0$

-

In the case of ReLU, consider an input space of dimension $2$. Now fold it along an axis, returning two separately smooth planes intersecting at a “fold”. At this point, one of them is flat, and the other is angled at $45$ degrees. Since the input dimension is $2$, we will be folding again at the y-axis.

- By repeating this to create many ‘folds’, we can use the ReLU function to generate an “n-fold hyperplane” from the smooth 2D input plane.

Therefore, combined with linear transformation, the folds will be slanted:

in the end we just have a sharded space from many folds.

-

Swish: notice that $\lim_{\beta \to \infty}$ Swish becomes ReLU

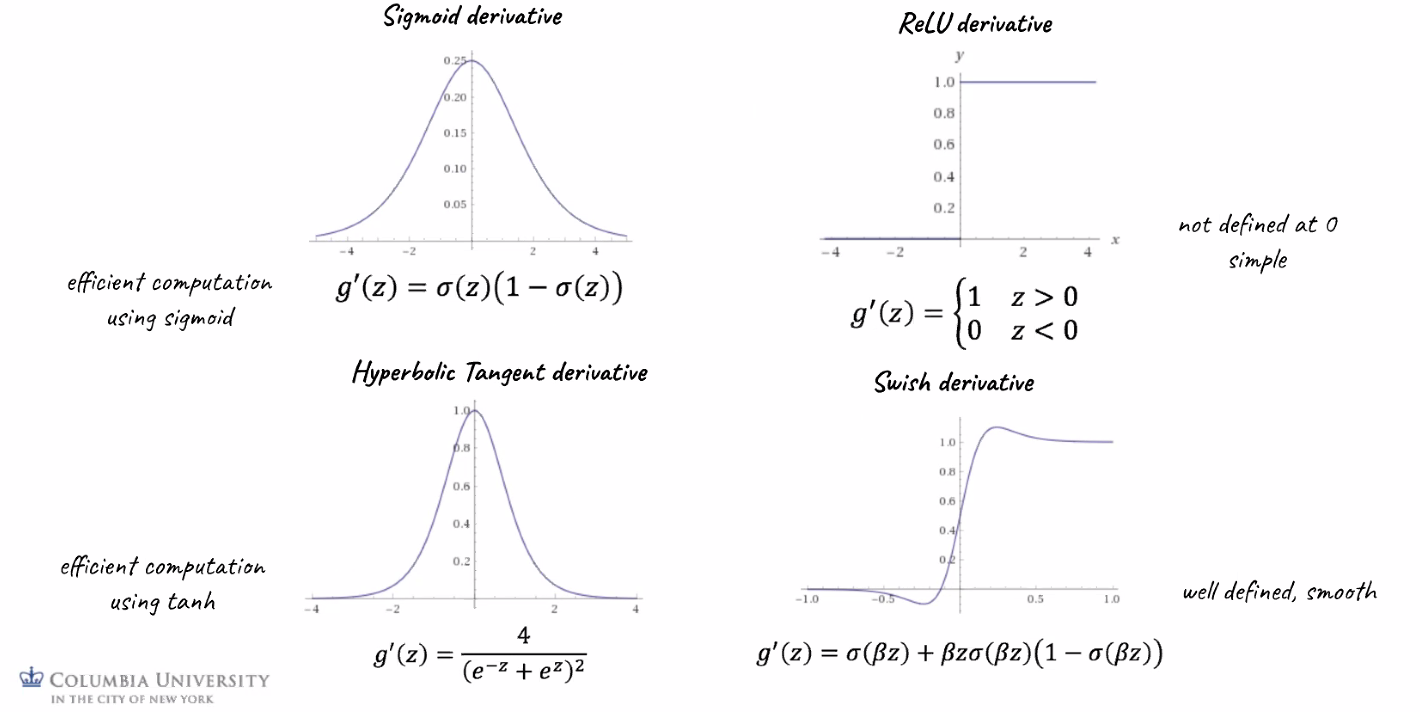

Their derivatives are important to know since we will use them when taking derivatives during backpropagation:

where notice that

- Sigmoid: $\sigma’$ depends on $\sigma$ means we would have already computed this in the forward pass. This will save computational effort!

- ReLU: the simplest derivative among all. Saves computational effort.

Last but not least, for multiclass classification, often the last layer uses SoftMax, which maps $\mathbb{R}^d \to \mathbb{R}^d$. This is often used only for the last layer if we are doing multiclass:

\[g^L(z^L)_i = \frac{e^{z_i^L}}{\sum_{j=1}^d e^{z_{j}^L}}\]where:

-

$z_i^L$ basically is the pre-activatoin on the last layer $l$

-

this is a generalizatoin of logistic regression because $\sum_i^d g(z)_i = 1$, i.e. elements sum up to 1.

-

also pretty easy to implemnet:

lambda z: np.exp(z) / np.sum(np.exp(z))

yet since they add up to 1, they can also be seen as a generalization of logistic regressoin.

Loss Functions

Now we have several choice of $f$, our final objective of minimize is:

\[\frac{1}{n} \sum_{i=1}^n \mathcal{L}(y^i, \hat{y}^i)\]for $\hat{y}^i$ is basically the $F^3 (F^2 (F^1(\vec{x}^i)))$, for example. Therefore, a couple of different choices for $\mathcal{L}$ function:

-

Mean Squared Error

\[\mathcal{L}(y^i, \hat{y}^i) = (y^i - \hat{y}^i)^2\] -

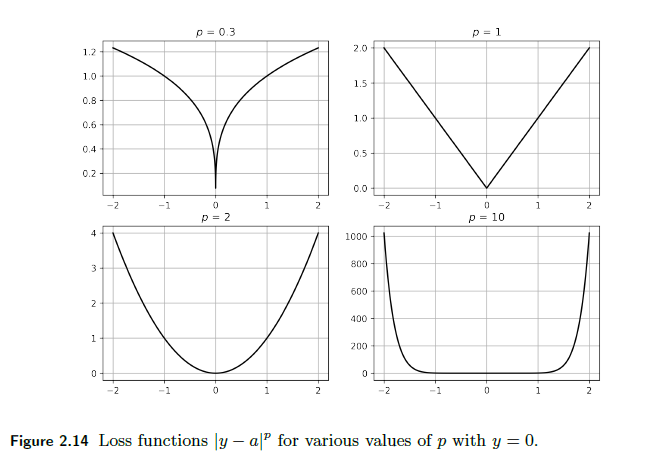

Other power $p$ error:

\[\mathcal{L}(y^i, \hat{y}^i) = |y^i - \hat{y}^i|^p\]Graphically:

-

Logistic Regression Loss (Cross-Entropy Loss)

\[\mathcal{L}(y^i, \hat{y}^i) = -y^i \log(\hat{y}^i) - (1-y^i) \log (1-\hat{y}^i)\]If this loss is used, the objective $J$ is convex in $W$.

-

There is also a Softmax version/multiclass version of the logistic loss. Checkout the book for more info.

Note

In general, the loss function is not convex with respect to $W$, therefore solving:

\[\min_W \frac{1}{n} \sum_{i=1}^n \mathcal{L}(y^i, \hat{y}^i)=\min_W \frac{1}{n} \sum_{i=1}^n \mathcal{L}(y^i, F(x^i, W))\]does not guarantee a global minimum. We therefore use gradient descent to find a local minimum.

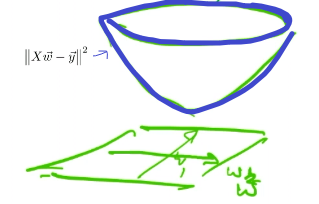

Regularization

What happens if we add regularization, such that we consider:

\[\min_W \frac{1}{n} \sum_{i=1}^n \mathcal{L}(y^i, F(x^i, W)) + R(W)\]In general, this will more or less force values inside $W$ to be small.

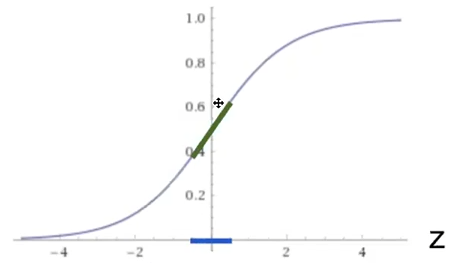

Now, recall that $W$ basically does the linear part/composes the pre-activation before putting into $f$:

So we if have $f$ being a function such as sigmoid, it means that pre-activation would be most at the blue part:

where notice that:

- adding regularization will likely shrink the $W$, which means that $Z^l$ would be small. Hence, $A^l=f^l(Z^l)$ would likely be linear (the green part)!

- This means our activation being less complex, i.e. $f$ becomes almost a linear operation. Intuitively, the more we regularize, the less complicated our model

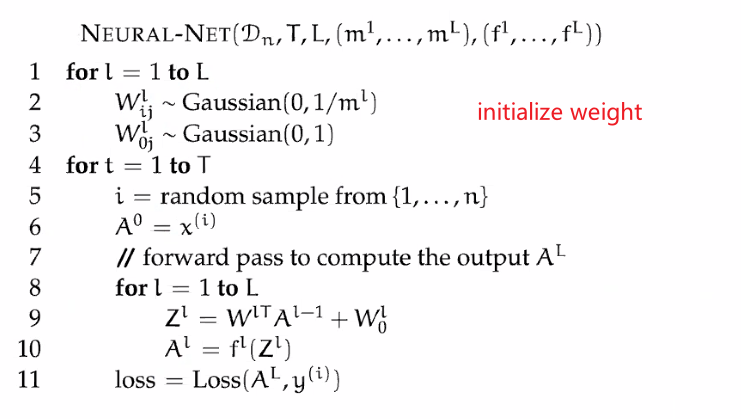

Forward Propagation

where if we use the previous example:

-

e.g. $L=3$ since we have 3 layers

-

$T$ is the number of iterations we want to perform (e.g. number of epochs if we are doing over the entire batch)

-

the initialization must be randomized, so that the updates for new $W$ will not be identical/same (when doing back prop)

-

If we set all the weights to be the same, then all the the neurons in the same layer performs the same calculation, there by making the whole deep net useless. If the weights are zero, complexity of the whole deep net would be the same as that of a single neuron.

E.g.

\[z^1 = (W^1)^T a^{0} = \begin{bmatrix} w_{11} & w_{21} & w_{31}\\ w_{12} & w_{22} & w_{32} \\ \end{bmatrix}\begin{bmatrix} x_{1}\\ x_2\\ x_3 \end{bmatrix} = \begin{bmatrix} z_{1}^1\\ z_2^1\\ \end{bmatrix}\]will have $z_1^1 = z_2^1$ and updates within the same layer will be identical.

-

-

we are picking only one sample from the set because we will be doing stochastic gradient descent with the backprop algorithm. This is commonly used when datasets are large so we don’t want to do the entire dataset per step.

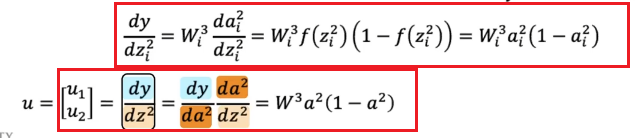

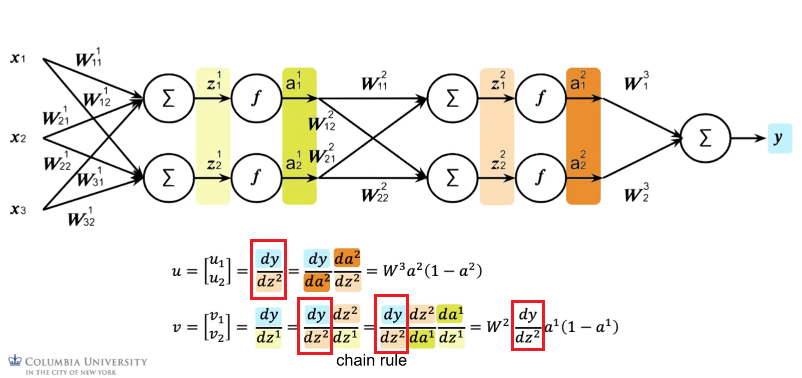

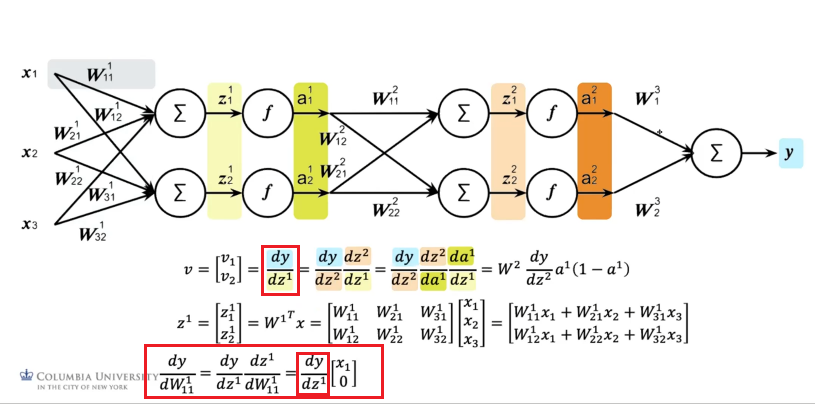

Back Propagation

The aim of this algorithm is, for each $W^l$ at layer $l$ (assumed bias is absorbed):

\[W^l := W^l - \alpha \frac{\partial \mathcal{L}}{\partial \mathcal{W^l}}\]basically doing a gradient descent to minimize our loss:

- $\alpha$ would be the learning step size, which we can tune as a parameter

Now, to compute the derivative, instead of doing it in a forward pass so that we need to do:

- compute $\partial \mathcal{L}/\partial \mathcal{W^1}$

- then compute $\partial \mathcal{L}/\partial \mathcal{W^2}$

- then compute $\partial \mathcal{L}/\partial \mathcal{W^3}$

if we have 3 layers. However, it turns out we can do achieve all the calculations if we do it in a backward pass:

-

Notice that:

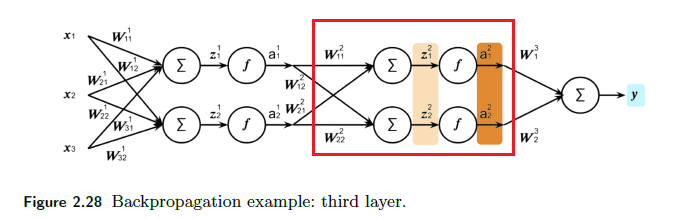

\[\frac{\partial \mathcal{L}}{\partial \mathcal{W^l}}=\frac{\partial \mathcal{L}}{\partial \mathcal{A^l}}\frac{\partial \mathcal{A^l}}{\partial \mathcal{Z^l}}\frac{\partial \mathcal{Z^l}}{\partial \mathcal{W^l}} = \frac{\partial \mathcal{L}}{\partial \mathcal{Z^l}}\frac{\partial \mathcal{Z^l}}{\partial \mathcal{W^l}} = \frac{\partial \mathcal{L}}{\partial \mathcal{Z^l}}(A^{l-1})^T\]since $Z^l = (W^l)^TA^{l-1}$. notice that $A^{l-1}$ is already computed in the forward pass. Graphically, if $l=2$, we are here:

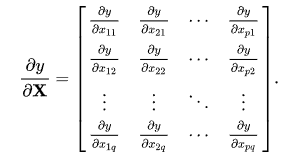

note that we basically are doing derivative of a scalar w.r.t. a vector, so Jacobians would be the brute force way:

yet the point is that we can save much effort using chain rule.

- if you have a $f:\mathbb{R}^n \to \mathbb{R}^m$, then the Jacobian will be dimension $\mathbb{R}^{m \times n}$. You can image each row doing $[df_i/dx_1,…,df_i/dx_n]$.

-

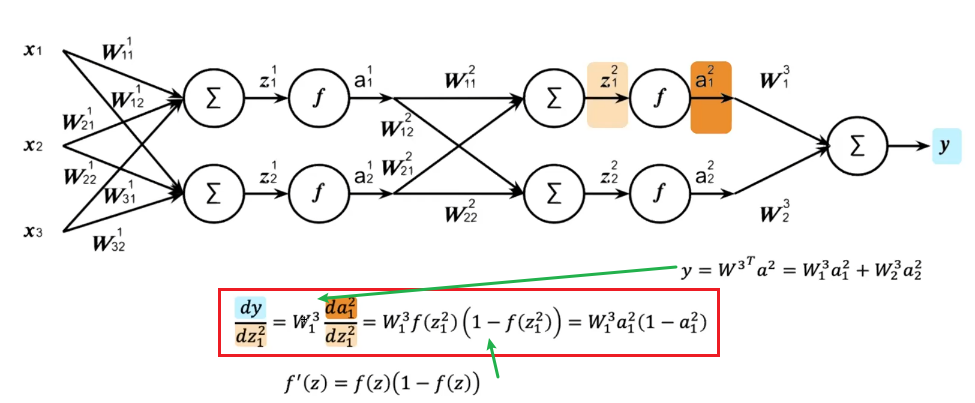

Then, we need:

\[\frac{\partial \mathcal{L}}{\partial \mathcal{Z^l}} = \frac{\partial \mathcal{L}}{\partial \mathcal{A^l}}\frac{\partial \mathcal{A^l}}{\partial \mathcal{Z^l}} =\frac{\partial \mathcal{L}}{\partial \mathcal{A^l}}\frac{\partial \mathcal{f(Z^l)}}{\partial \mathcal{Z^l}}\]since we know $A^l=f(Z^l)$. If we have sigmoid, then we know $\sigma’(x) = \sigma(x)\cdot(1- \sigma(x))$.

-

Hence each element such as

\[\frac{\partial \mathcal{L}}{\partial \mathcal{Z^l_{11}}} = \frac{\partial \mathcal{L}}{\partial \mathcal{A^l}}\frac{\partial \mathcal{A^l}}{\partial \mathcal{Z^l_{11}}} =\frac{\partial \mathcal{L}}{\partial \mathcal{A^l}}\frac{\partial \mathcal{\sigma(Z^l_{11})}}{\partial \mathcal{Z^l_{11}}} =\sigma(Z_{11}^l)\cdot(1-\sigma(Z_{11}^l))\frac{\partial \mathcal{L}}{\partial \mathcal{A^l}} =A_{11}^l\cdot(1-A_{11}^l)\frac{\partial \mathcal{L}}{\partial \mathcal{A^l}}\]so then computing for the derivative for the entire matrix, means just doing the above for each element

-

so the one thing we actually have to compute would be:

\[\frac{\partial \mathcal{L}}{\partial \mathcal{A^l}}\]though we know $\partial Z^l / \partial A^{l-1}=W^l$, we don’t know the loss function. However, it turns out we only needed to compute this for one (the last) layer (see next step)

-

-

Lastly, the real shortcut in back propagation is that:

\[\frac{\partial \mathcal{L}}{\partial \mathcal{A^{l-1}}} =\frac{\partial \mathcal{L}}{\partial \mathcal{Z^{l}}}\frac{\partial \mathcal{Z^l}}{\partial \mathcal{A^{l-1}}} =W^l\frac{\partial \mathcal{L}}{\partial \mathcal{Z^{l}}}\]hence, the other thing we have to compute is:

\[\frac{\partial \mathcal{L}}{\partial \mathcal{Z^{l}}}\]and that from this formula, knowing $\partial L / \partial Z^{l}$ means we can compute $\partial L / \partial A^{l-1}$ of the previous layer!

In summary

To compute

\[\frac{\partial \mathcal{L}}{\partial \mathcal{W^l}}= \frac{\partial \mathcal{L}}{\partial \mathcal{Z^l}}(A^{l-1})^T\]we needed to know

\[\frac{\partial \mathcal{L}}{\partial \mathcal{Z^l}} =\frac{\partial \mathcal{L}}{\partial \mathcal{A^l}}\frac{\partial \mathcal{f(Z^l)}}{\partial \mathcal{Z^l}}\]

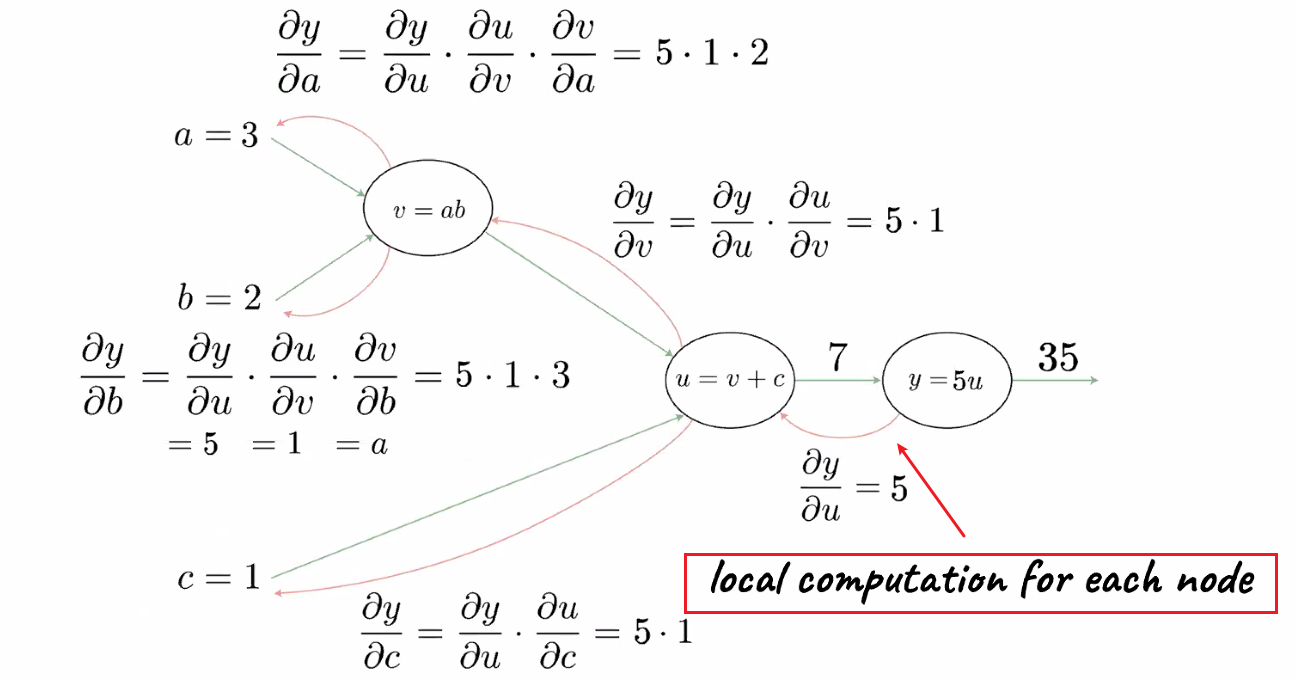

- notice that this computation is local: it only involves stuff from layer $l$

which requires

\[\frac{\partial \mathcal{L}}{\partial \mathcal{A^l}}\]which can be done in an efficient way once we are done with a layer:

\[\frac{\partial \mathcal{L}}{\partial \mathcal{A^{l-1}}} =\frac{\partial \mathcal{L}}{\partial \mathcal{Z^{l}}}\frac{\partial \mathcal{Z^l}}{\partial \mathcal{A^{l-1}}} =W^l\frac{\partial \mathcal{L}}{\partial \mathcal{Z^{l}}}\]

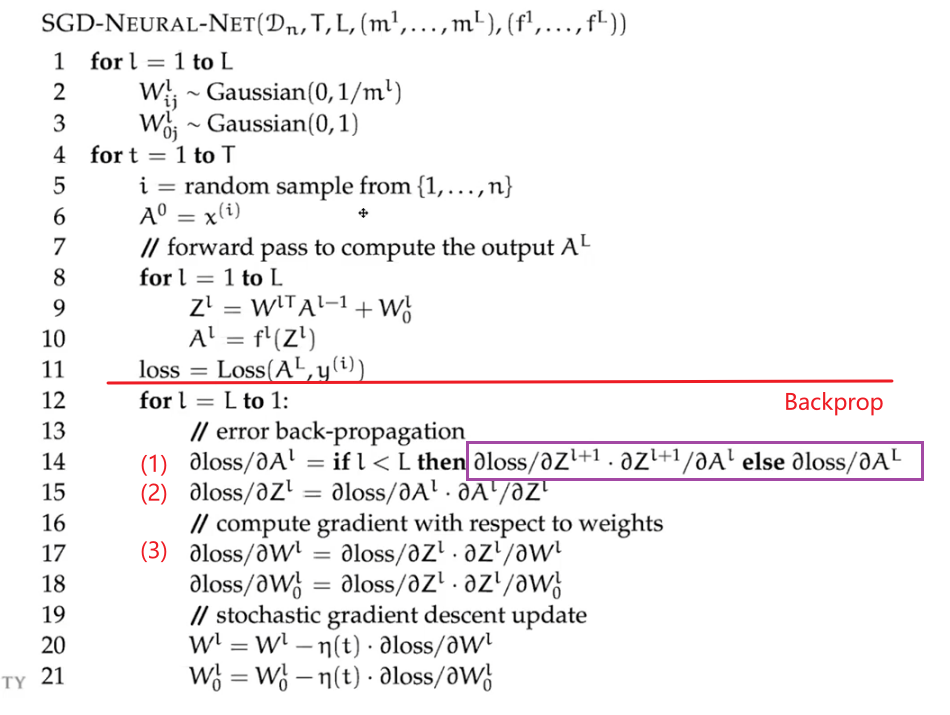

Therefore, the back propagation algorithm looks like:

where notice that:

-

we have an “extra” step here because this assumes that the biases are not absorbed

- the three main steps are outlined in the summary mentioned above. Due to dependency, they are done in reverse order

- the purple line shows the efficiency, that $\partial L / \partial A^{l}$ can be computed from previous result with layer $l+1$. The only one what requires computation is the first iteration/last layer.

- this is basically the entire model! i.e. back propagation does the training/update of the parameters

For Example

In the first iteration, if we don’t care about losses yet, we need to figure out the second step on $\partial L / \partial Z^{l}$:

which we are doing element-wise for clarity. This can be easily generalized and placed into a vector:

-

then we can compute easily:

\[\frac{\partial \mathcal{L}}{\partial \mathcal{W^l}}= \frac{\partial \mathcal{L}}{\partial \mathcal{Z^l}}(A^{l-1})^T\]

In the second iteration, we notice that we can reuse the results above:

- which then can compute the $$

Lastly:

which again reused the result from its higher up layer.

Note

If we take derivatives in the forward pass, we get only derivative of one variable. If we do it in the backward pass, we do it once and get all the derivatives with little effort

A big advantage of back propagation is also utilizing the fact that we are doing local computation (and passing on the result)

which means backpropagation is a special case of differential programming, which can be optimized.

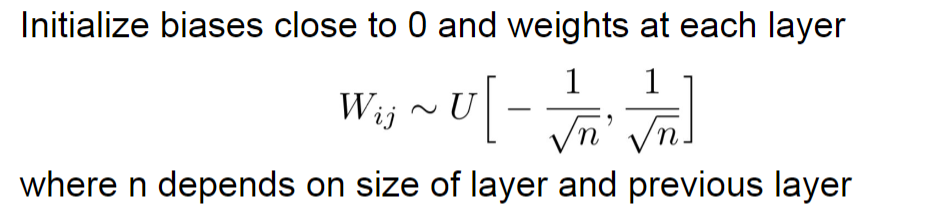

Weight Initialization

Basically a uniform distribution for weights and zeros for bias

Program

for l in range(1, self.num_layers):

# glorot init

epa = np.sqrt(2.0 / layer_dimensions[l] + layer_dimensions[l-1])

self.parameters["W" + str(l)] = np.random.rand(layer_dimensions[l], layer_dimensions[l-1]) * eps

self.parameters["b" + str(l)] = np.zeros((layer_dimensions[l], 1)) + 0.01

Problems with NN

Recall that our objective is to minimize:

This implies the following problem:

- What if $n$ is large? Since our loss sums over all $n$ data points, it would take a long time to compute

- Solution: Stochastic Gradient Descent

- Computing derivatives/doing gradient descent of large network takes time

- Solution: Backpropagation

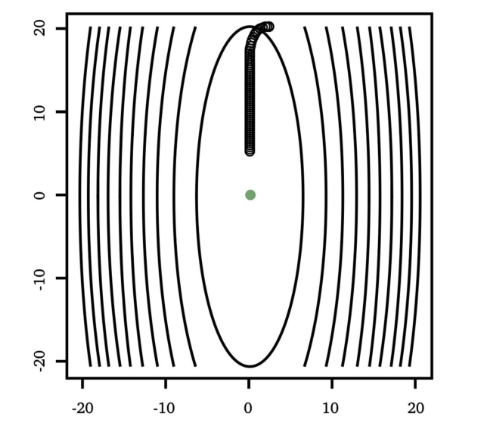

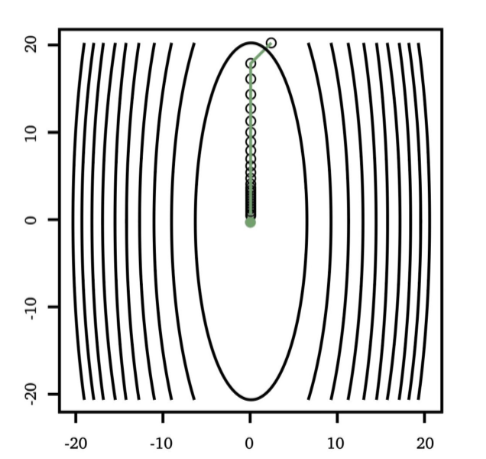

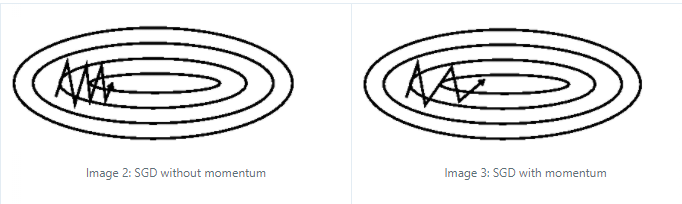

- For each time step/update, the gradient would be perpendicular to the previous one, forming a slow zig-zag pattern (slow to converge).

- Solution: Adaptive gradient Descent

Example Implementation

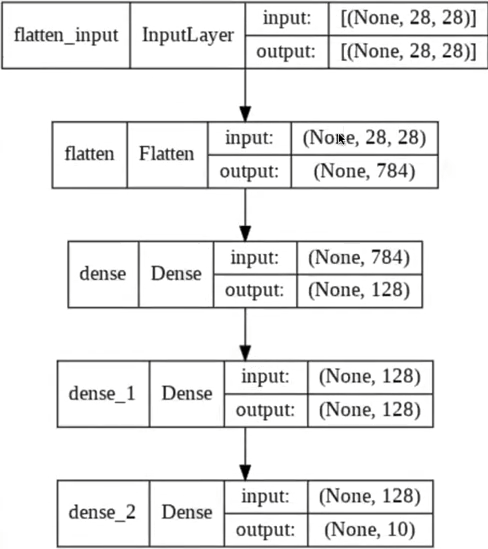

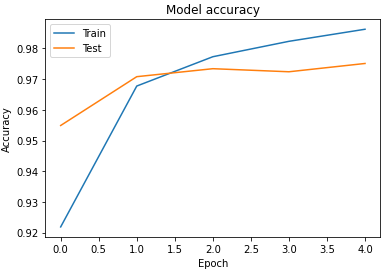

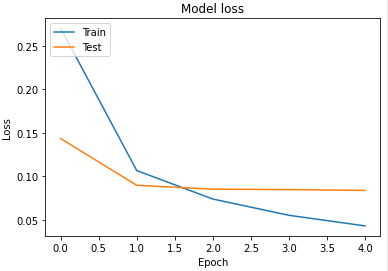

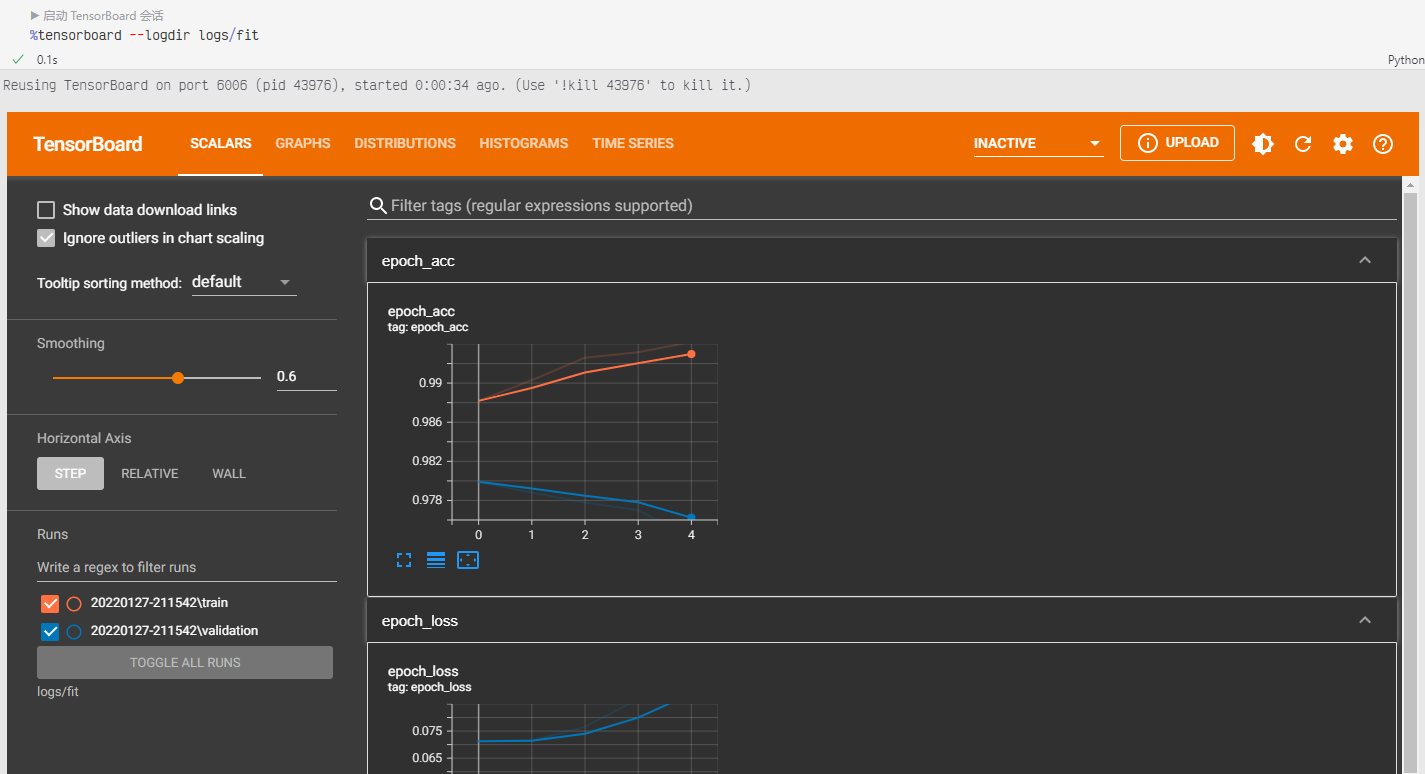

Consider the implementing the following architecture

Then we would have:

#Implement the forward pass

def forward_propagation(X, weights):

Z1 = np.dot(X, weights['W1'].T) + weights['b1']

H = sigmoid(Z1)

Z2 = np.dot(H, weights['W2'].T + weights['b2'])

Y = sigmoid(Z2)

# Z1 -> output of the hidden layer before applying activation

# H -> output of the hidden layer after applying activation

# Z2 -> output of the final layer before applying activation

# Y -> output of the final layer after applying activation

return Y, Z2, H, Z1

And backward:

# Implement the backward pass

# Y_T are the ground truth labels

def back_propagation(X, Y_T, weights):

N_points = X.shape[0]

# forward propagation

Y, Z2, H, Z1 = forward_propagation(X, weights)

L = (1/(2*N_points)) * np.square(np.sum(Y - Y_T))

# back propagation

dLdY = 1/N_points * (Y - Y_T)

# dLdZ2 = dLdA2 * dA2dZ2 = dLdA2 * sig(Z2)*[1-sig(1-Z2)] # broadcast multiply

dLdZ2 = np.multiply(dLdY, (sigmoid(Z2)*(1-sigmoid(Z2))))

# dLW2 = dLdA2 * dA2dZ2 * dZ2dW2 = dLdZ2 * dZ2dW2 = dLdZ2 * A1 # matrix multiply

dLdW2 = np.dot(H.T, dLdZ2)

# dLb2 = dLdA2 * dA2dZ2 * dZ2db2 = dLdZ2 * dZ2db2 = dLdZ2 * 1

dLdb2 = np.dot(dLdZ2.T, np.ones(N_points))

# dLdA1 = dLdA2 * dA2dZ2 * dZ2dA1 = dLdZ2 * dZ2dA1 = dLdZ2 * W2

dLdA1 = np.dot(dLdZ2, weights['W2'])

# dLdZ1 = dLdA1 * dA1dZ1 = dLdA1 * sig(A1) * [1-sig(A1)] # broadcast multiply

dLdZ1 = np.multiply(dLdA1, sigmoid(H)*(1-sigmoid(H)))

# dLW1 = dLdA1 * dA2dZ1 * dZ2dW1 = dLdZ1 * dZ2dW1 = dLdZ1 * A0 # matrix multiply

dLdW1 = np.dot(X.T, dLdZ1)

dLdb1 = np.dot(dLdZ1.T, np.ones(N_points))

gradients = {

'W1': dLdW1,

'b1': dLdb1,

'W2': dLdW2,

'b2': dLdb2,

}

return gradients, L

Optimization

In practice, it is important to have the some understanding of the optimizer we will use (e.g. how to gradient descent), because they perform differently in different scenarios.

The ones we use in practice the different algorithms to find minima can be separated into the following three classes:

- First order methods.

- Gradient Descent (Stochastic, Mini-Batch, Adaptive)

- Momentum Related (Adagrad, Adam, Hypergradient Descent)

- Second order methods

- Newton’s Method (generally faster than gradient descent because it uses Hessian/is higher order)

- Quasi Newton’s Method (SR1 update, DFP, BFGS)

- Evolution Strategies

- Cross-Entropy Method (uses cluster of initial points as initial conditions, then descent as a group)

- Distributed Evolution Strategies, Neural Evolution Strategies

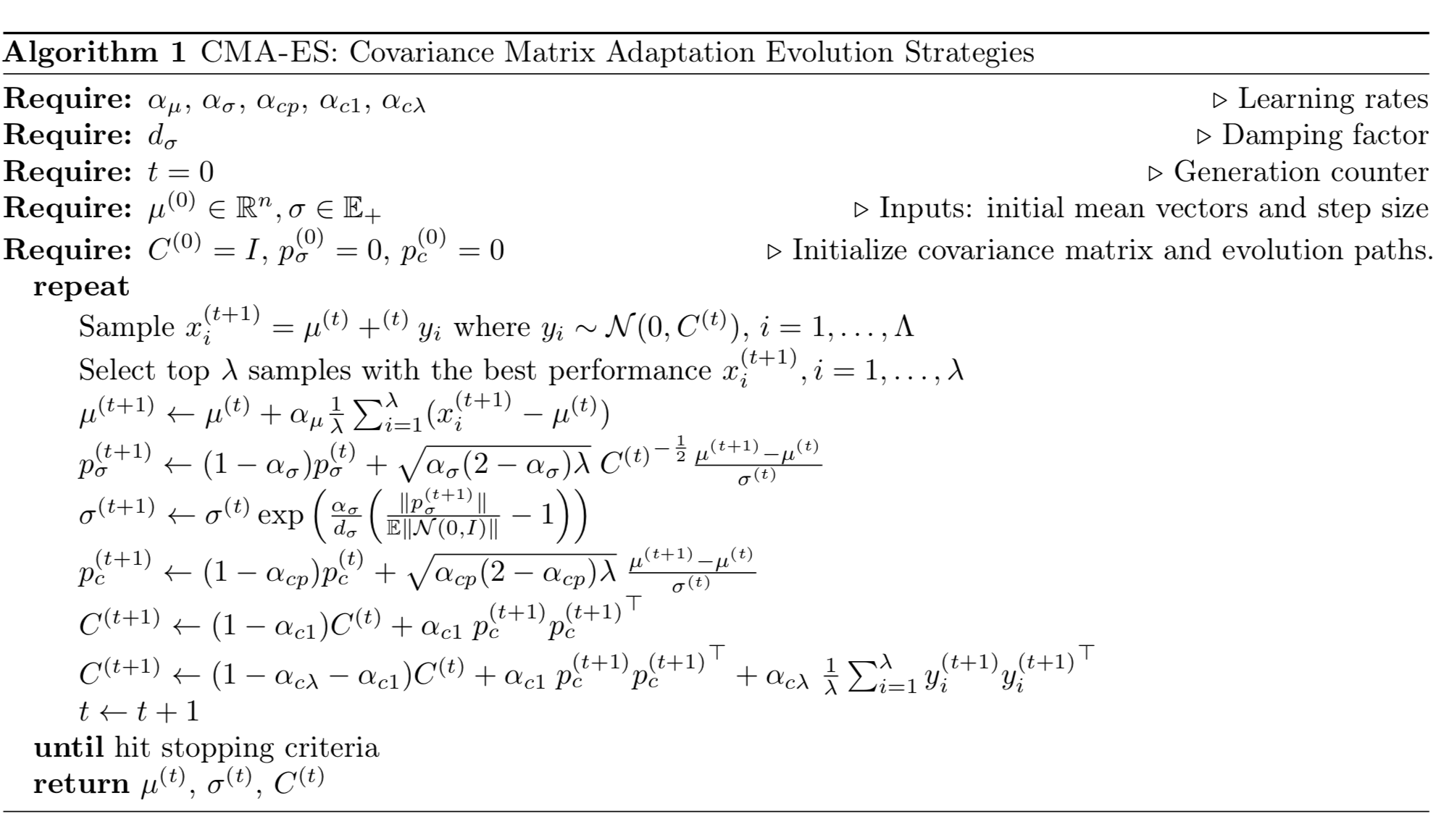

- Covariance Matrix Adaptation

Overview

Again, our goal of learning this is to understand, given an optimization problem of finding best $\theta^*$:

\[\theta^* = \arg\min_\theta J(\theta)\]where $J$ would be the total loss we are dealing with.

-

an example for $J$ would be

\[J(\theta) = \left( \frac{1}{n} \sum_{i=1}^n \mathcal{L}(y^{(i)}, h(x^{(i)}; \theta)) \right) + \lambda R(\theta)\]where we included a regularization term $R(\theta)$ here as well.

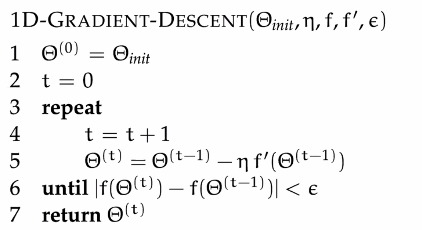

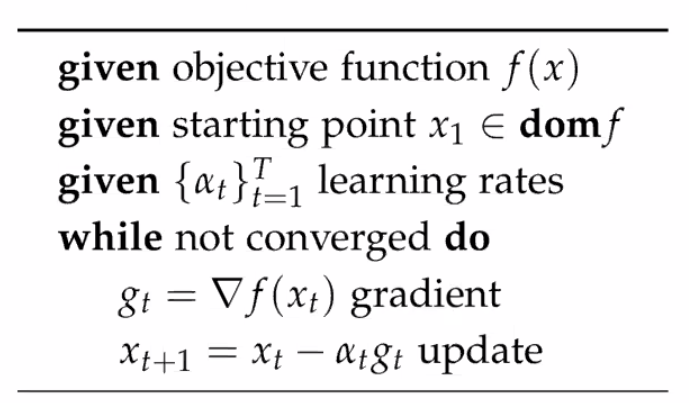

First, let us recall that the basic gradient descent algorithm generally looks like

where $\eta$ would be tunable:

- its aim is to obvious find the $\theta^*$, but it might get stuck at local minima

- in that case, we need to add some noise, or use stochastic gradient descent

- for large NN, finding $dJ/dW$ takes effort.

- this is optimized with back-propagation algorithm

- but obviously this is not the only way to do it, as you shall see soon

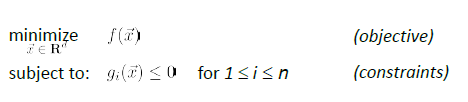

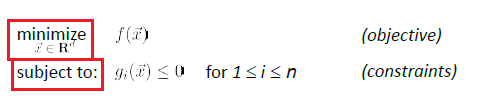

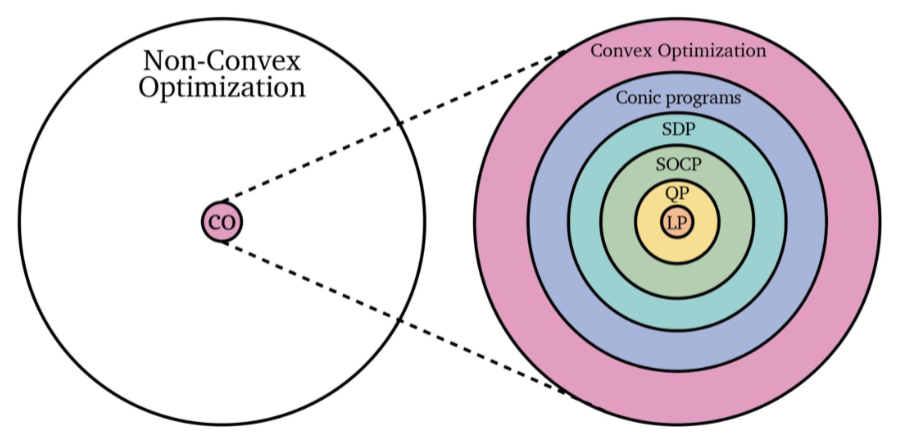

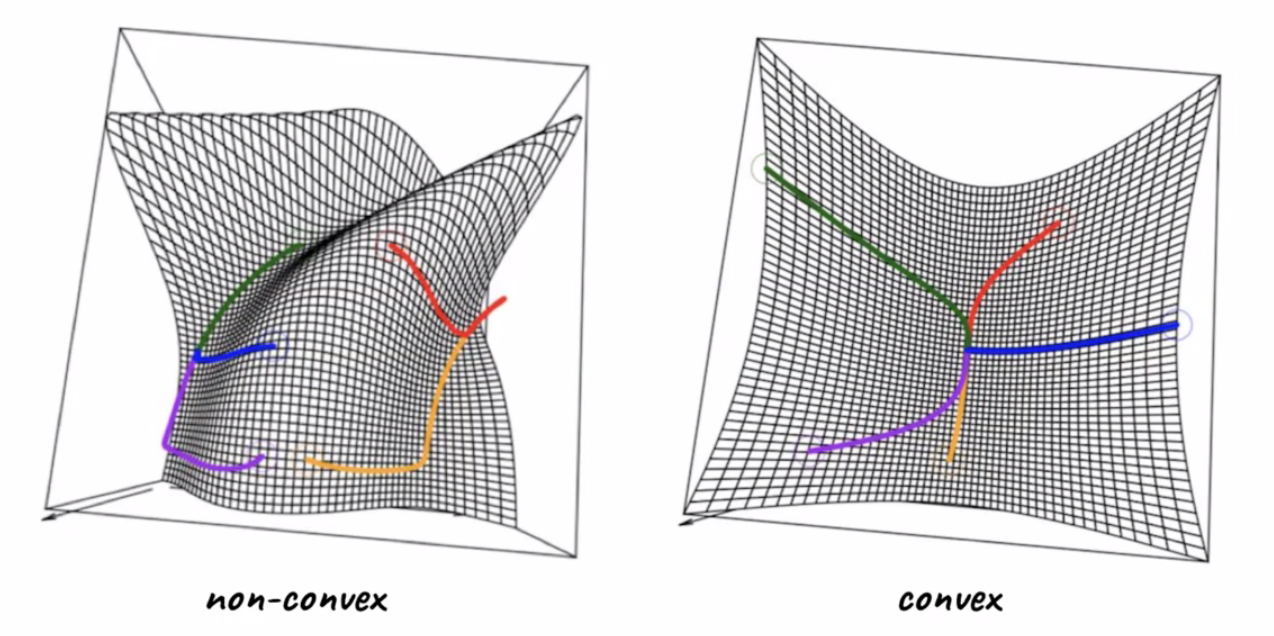

Convex Optimization

It would be so little pain if $J$ is convex w.r.t. $W$, so that any local minimum is also a global minimum.

- but often in NN, $J$ is not a convex function of $W$, so we do have the problem of stopping at local minimas.

For a problem to be a convex optimization (with constraints):

this problem is a convex optimization IFF both holds:

- the feasible region of output (due to the constraint) is a convex set

- the objective function $f(\vec{x})$ is a convex function

But in reality, this is what are we are facing:

where recall that:

- Linear programs: objective function is linear (affine), and constraints are also linear (affine)

- so that the feasible region is a convex set (because the feasible region is always a polygon = convex set)

- Quadratic program: objective function is quadratic, and constraints are linear (affine)

- if constraints are quadratic, then the feasible region might not be a convex set.

- Conic Program: where constraints are a conic shaped region

- Other common solvers include:

CVX,SeDuMi,C-SALSA,

For Example:

where:

- LHS shows starting with different initial points yields different result, i.e. some ended up at local minimas

- RHS shows starting from different initial points yields the same result, i.e. global minimum.

Derivative and Gradient

Most of the cases we will be dealing with $f(\vec{x})$ where $\vec{x}$ is multi-dimensional. For instance $L(W)$ with loss being dependent on weights. Then, an obvious usage of this would be in gradient descent:

\[w_{t+1} = w_t - \alpha_t \nabla f(w_t)\]for us using $\alpha_t$ because it can be changing (e.g. in adaptive methods)

Recall

\[\nabla f(\vec{x}) = (\frac{\partial f}{\partial x_1}, \frac{\partial f}{\partial x_2} , ..., \frac{\partial f}{\partial x_n} )\]being a $n$ dimensional vector:

- imagine graphing $f(\vec{x})$ in a $\mathbb{R}^{n+1}$ since $\vec{x}\in \mathbb{R}^n$

- then $\nabla f$ points at direction of steepest ascent

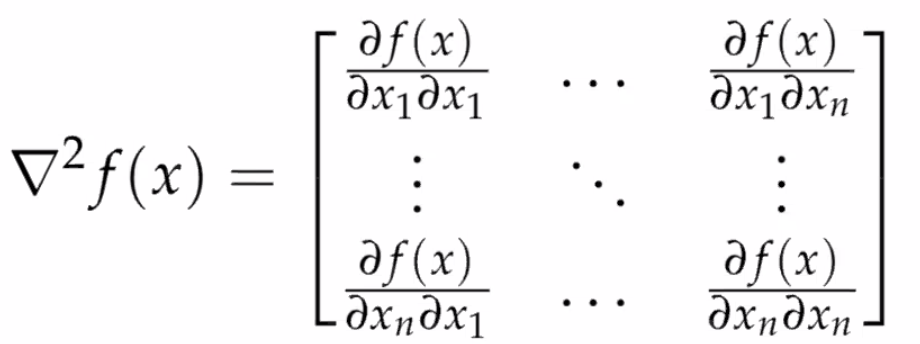

Another useful quantity would be the second derivative:

Hessian

Again, for a scalar function with vector input:

which is useful because:

- $\vec{x}^*$ is a local minimum if $H\equiv \nabla^2f$ is positive semi-definite

- in the case of $x\in \mathbb{R}$, we know that $f’‘(x) \ge 0$ means minima. In the case of vector input space, you have $n$-directions to look at. If each direction satisfies $Hx = ax$ for $a \ge 0$, then obviously it is “concave up”, and that $Hx = ax$ for all $x$ means $H$ contains only non-negative eigenvalues -> positive semidefinite

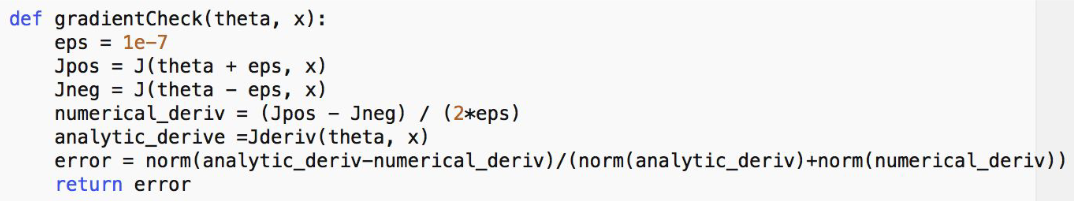

Sometimes, we may want to use numerical calculations of derivatives to make sure our formula put in practice is correct:

\[f'(x) \approx \frac{f(x+\epsilon) - f(x - \epsilon)}{2 \epsilon}\]for small $\epsilon$.

-

notice we are all using $x$ as the input variable. It might be useful in context if we think of $x \to \vec{w}$ being the weights that we need to optimize on.

-

an example program would be

Note

To compute gradient using:

- analytic equation: exact, fast

- e.g. for NN, we derive the derivatives and used the formula

- numerical equation: slow, contains error

- useful for debugging, .e.g if backprop is implemented correctly

First Order Methods

Now, we talk about first order methods: using only first order derivative $g_t \equiv \nabla f(x_t)$ for weight (remember we generalized weights $w \to x$ any input) at iteration $t$.

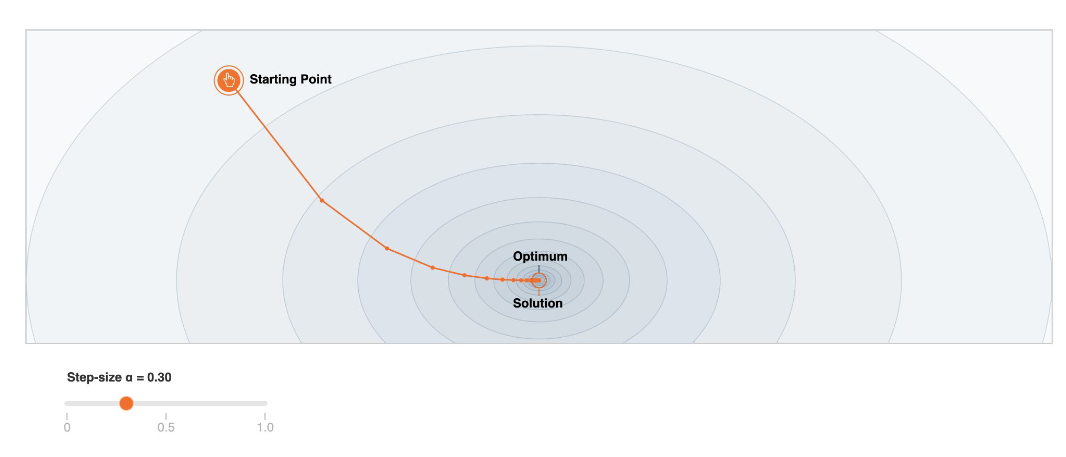

Gradient Descent

The easiest and direct use of $\nabla f(x_t)$:

where note that:

-

you could add an early-stopping criteria at the end

-

the tunable learning rate $\alpha_t$ is critical:

Too Big Too Small Just Right

but as you might have guessed, step size $\alpha_t$ could be updated automatically in some other methods

-

However, at saddle points, it may cause the update be too small. Hence often a noise term will be added

\[x_{t+1} = x_t - \alpha_tg_t + \epsilon_t\]for $\epsilon_t \sim N(0, \sigma)$ hoping that it goes out of local minima/saddle points. (related: Vanishing/Exploding Gradient)

For Example

Consider logistic loss:

\[J(x;\theta) = -\frac{1}{m}\sum_{i=1}^m \left[ y^{(i)}\log\left(h_\theta \left(x^{(i)}\right)\right) + (1 -y^{(i)})\log\left(1-h_\theta \left(x^{(i)}\right)\right)\right]\]Then, compute the gradient:

\[\frac{\partial J(\theta)}{\partial \theta_j} = \frac{\partial}{\partial \theta_j} \,\frac{-1}{m}\sum_{i=1}^m \left[ y^{(i)}\log\left(h_\theta \left(x^{(i)}\right)\right) + (1 -y^{(i)})\log\left(1-h_\theta \left(x^{(i)}\right)\right)\right]\]Carefully computing the derivative yields:

\[\frac{\partial J(\theta)}{\partial \theta_j} = \frac{1}{m}\sum_{i=1}^m\left[h_\theta\left(x^{(i)}\right)-y^{(i)}\right]\,x_j^{(i)}\]Then you can update $\theta_j$ using this.

Problems with Gradient Descent

-

The above takes an entire training set for computing the loss. Takes time.

- use mini-batch or stochastic

-

Computing derivative w.r.t weights $\theta$ takes effort if $\theta$ is high dimensional

- use backpropagation

-

What step-size should we use? We may overshoot if too large of a stepsize.

- adaptive learning rate

-

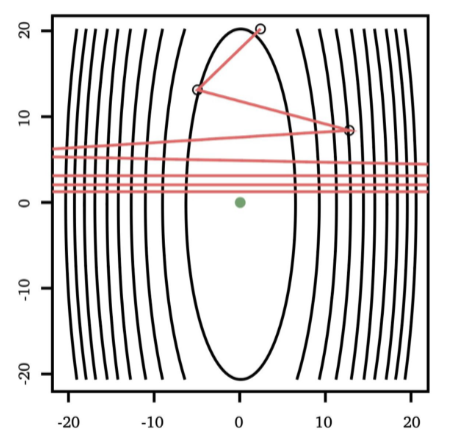

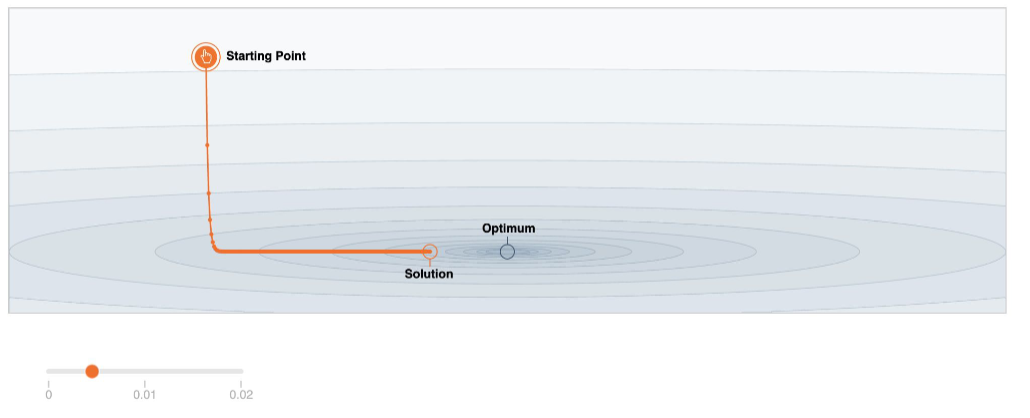

Gradient descent typically spend too much time in regions that is relatively flat as gradient is small

-

e.g.

Normal Region Flat Region

-

Adaptive Step Size

There are certain options we can choose from:

-

decrease learning rate as training progresses (learn less in the future -> prevent overfitting)

-

this can be done using either a decay factor that gets smaller over time:

\[\alpha_t = \alpha_0 \frac{1}{1+t\beta}\]for $\beta$ being small

-

simply exponential decay:

\[\alpha_t = \alpha_0 \gamma^t\]for some $\gamma < 1$ but close to $1$, or

\[\alpha_t = \alpha_0 \exp(-\beta t)\]

-

-

line searches: given some $\min_x f(x)$, and suppose we are currently at $x_t$ being our current best guess. We know the current gradient is $g_t = \nabla f(x)\vert _{x_t} = \nabla f(x_t)$. We consider some step size $\alpha_t$ we might take:

\[\phi(\alpha_t) \equiv f(x_t + \alpha_t g_t)\]and we want to approximately minimize $\phi(\alpha_t)$ to output a $\alpha_t$ to use. Basically we are sliding along the tangent line of the current point and see how far we should slide

-

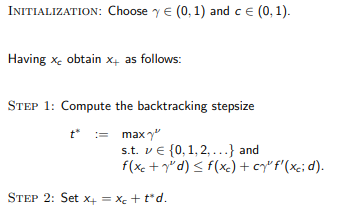

backtrack line search

where $t^*$ is our desired $\alpha_t$

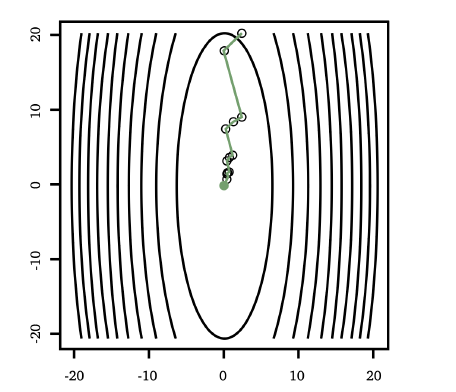

Graphically:

-

exact line search

Solve the following exactly:

\[\min_{\alpha_t} f(x_t + \alpha_t \hat{g}_t)\]where $\hat{g}_t = g_t /\vert g_t\vert$. So this means we want:

\[\nabla f(x_t + \alpha_t \hat{g}_t) \cdot \hat{g}_t = 0\]and we need to solve this for $\alpha_t$

-

one property of this result is that, since we know:

\[\quad \hat{g}_{t+1} = \nabla f(x_t + \alpha_t \hat{g}_t)\]So we see that

\[\hat{g}_{t+1} \cdot \hat{g}_t = 0\]meaning consecutive runs gives perpendicular gradient direction. This makes sense since we are taking the optimal step size, i.e. we have walked the farthest along that direction.

-

Finding $\alpha_t$ is computationally expensive as we need to solve for it, so it is rarely used

-

-

adaptive line search: skipped

-

Mini-Batch Gradient Descent

A common technique within gradient descent is to split your dataset into $n$ sets of size $k$, and train each set as one step for updating the gradient.

- so that we don’t spend time computing the loss function on the entire data

- if you want, you can also parallelize this computation

This is useful because, for a sample of size $k$, the sample mean follows the central limit theorem:

\[\hat{\mu} \sim N(\mu, \sigma^2/n)\]which can be easily seen because:

-

$\text{Var}[X_i] = \sigma^2$, and using linearity:

\[\text{Var}\left[\frac{1}{n}\sum X_i\right] = \frac{1}{n^2} \text{Var}\left[\sum X_i\right] = \frac{1}{n^2} \sum\text{Var}\left[ X_i\right] = \sigma^2 / n\] -

This is good because **standard deviation of $\hat{\mu} \propto 1/\sqrt{n}$ **

So if using 100 samples vs 10000 samples means:

- faster computation for factor of 100

- but only more error of factor of 10

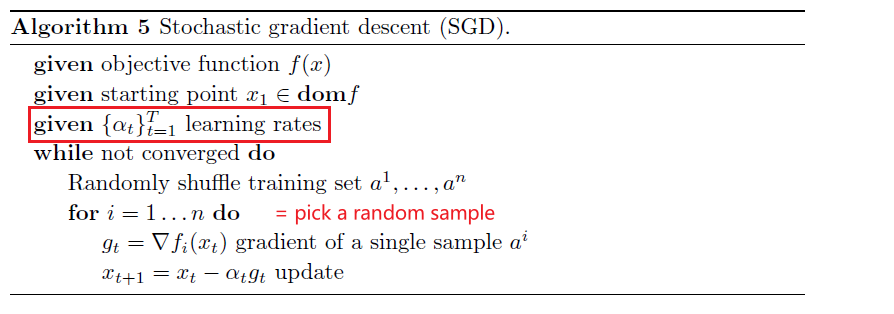

Stochastic Gradient Descent

Basically equivalent of Mini-batch of size $1$

- i.e. each update involves taking 1 random sample

Some common nomenclatures:

- Epoch: a pass through the entire data

Some properties:

- unbiased estimators of the true gradient

-

early steps often converge fast towards the minimum

- very noisy -> but increases the chance of getting a global minima

- e.g. at saddle points, it may cause the step be too small. But this is already noisy, so no problem.

Adaptive Gradient Descent

Either we use normal gradient descent, or gradient descent with optimized steps, we faced the problem of taking too long to converge in flat regions.

An overview would be that it uses gradients from previous steps to compute current gradient.

- want to achieve faster convergence by move faster in dimension with low curvature, and slower in dimension with oscillations

-

the more official documentation: AdaGrad for short, is an extension of the gradient descent optimization algorithm that allows the step size in each dimension used by the optimization algorithm to be automatically adapted based on the gradients seen for the variable

- however, some critics of this would say that it yields different result with gradient descent

Examples with adaptive gradients include:

- Momentum

- AdaGrad

- Adam

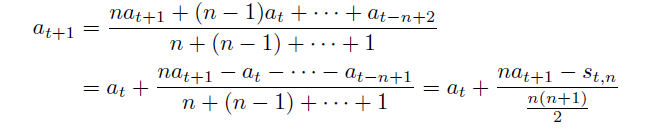

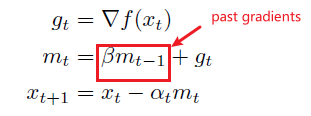

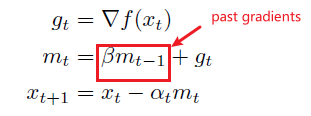

Momentum

The basic idea is that the momentum vector accumulates gradients from previous iterations for computing the current gradient.

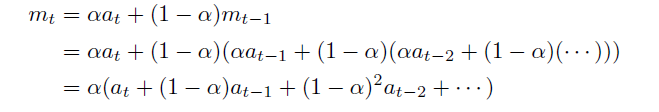

Arithmetically weighted moving average

\[a_t = \frac{na_t + (n-1)a_{t-1}) + ... + a_{t-n+1}}{n+(n-1)+ ... + 1}\]for basically imagining $a_t \to g_t$ is the gradient

- $n$ is the weight which we can specify

- basically this is in a weighted moving average the weights decrease arithmetically, normalized by the sum of weights

In the end, we see accumulation of gradients because:

where notice that:

- $s_t = \sum_{i=t-n+1}^ta_i$ is the accumulation of past gradients, since $a_t \to g_t$

Alternatively, there is also an expoentially weighted version

Exponentially Weighted Moving Average

where here:

-

again basically $a_t \to g_t$

-

the parameter is actually $(1-\alpha) = \beta$ for convenience, and we want $\beta \in [0,1)$

-

in an algorithm:

where basically $x$ would be our weights.

Note

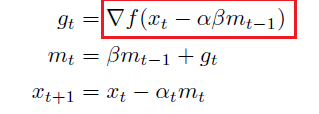

A first problem with momentum is that the step sizes may not decrease once we have reached close to the minimum that may cause oscillations, which can be remedied by using Nesterov momentum (Dozat 2016) that replaces the gradient with the gradient after computing momentum (Dozat 2016):

Graphically

AdaGrad

This deals with the case that we didn’t talk about what to do with $\alpha_t$:

So one variation, AdaGrad, adapts the learning rate to the parameters, i.e. $\alpha_t$ is different for each $\theta_i$/parameter:

- performing smaller updates (i.e. low learning rates) for parameters associated with frequently occurring features

- larger updates (i.e. high learning rates) for parameters associated with infrequent features

For this reason, it is well-suited for dealing with sparse data, and suitable for SGD.

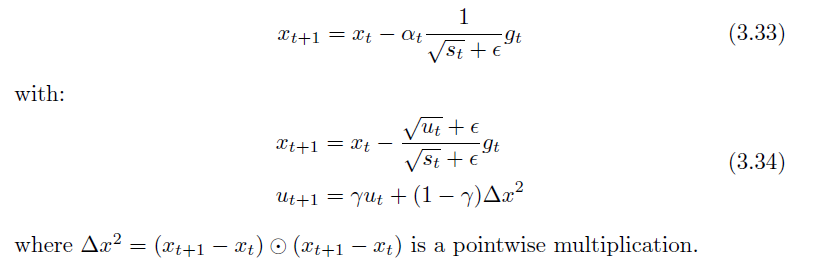

Instead of using $\alpha_t$ for all parameters at current time, use

\[\theta_{t+1,i} = \theta_{t,i} - \frac{\alpha_t}{\sqrt{s_{t,i} + \epsilon}} g_{t,i}\]and that $s_{t,i}$ is a weighted sum of gradients of $\theta_i$ up to time $t$:

\[s_{t,i} = \beta s_{t-1,i} + g_{t,i}^2\]for $g_{t,i}$ is the gradient for the $\theta_i$.

Problem

This in turn causes the learning rate to shrink and eventually become infinitesimally small, at which point the algorithm is no longer able to acquire additional knowledge:

\[\lim_{s_{t,i} \to \infty} \frac{1}{\sqrt{s_{t,i} + \epsilon}} = 0\]This is then solved by:

-

Adadelta

An exponential decaying average of square updates without a learning rate, replacing

-

RMSProp

Adagrad using a weighted moving average, replacing:

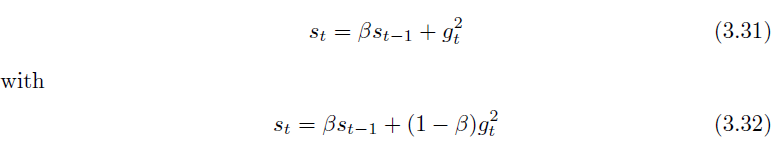

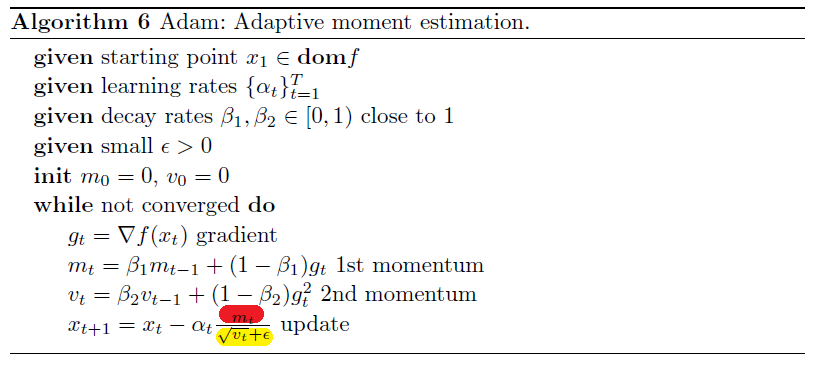

Adam

Adaptive moment estimation, or Adam (Kingma & Ba 2014), combines the best of both momentum updates and Adagrad-based methods as shown in Algorithm 6.

where basically:

- uses momentum in the red part

- uses AdaGrad like adaptive learning rate on the yellow part

- since it combined two models, we have two parameters to specify. Typically $\beta_1 = 0.9, \beta_2 = 0.99$

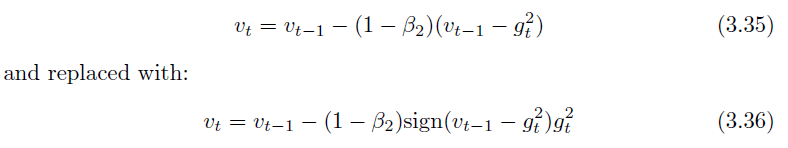

Several improvements upon Adam include:

-

NAdam (Dozat 2016) is Adam with Nesterov momentum

-

Yogi (Zaheer, Reddi, Sachan, Kale & Kumar 2018) is Adam with an improvement to the second momentum term which is re-written as:

-

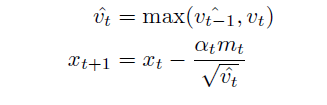

AMSGrad (Reddi, Kale & Kumar 2018) is Adam with the following improvement

Hyper-gradient Descent

Hypergradient descent (Baydin, Cornish, Rubio, Schmidt & Wood 2018) performs gradient descent on the learning rate within gradient descent.

- may be applied to any adaptive stochastic gradient descent method

The basic idea is to consider $\partial f(x_t)/ \partial \alpha$, for $x \to w$

\[\frac{\partial f(w_t)}{\partial \alpha} = \frac{\partial f(w_t)}{\partial w_t} \frac{\partial w_t}{\partial \alpha}\]we know that $w_t = w_{t-1} - \alpha g_{t-1}$:

\[\frac{\partial f(w_t)}{\partial \alpha} = g_t \cdot \frac{\partial }{\partial \alpha} ( w_{t-1} - \alpha g_{t-1})\]where:

- The schedule may lower the learning rate when the network gets stuck in a local minimum, and increase the learning rate when the network is progressing well.

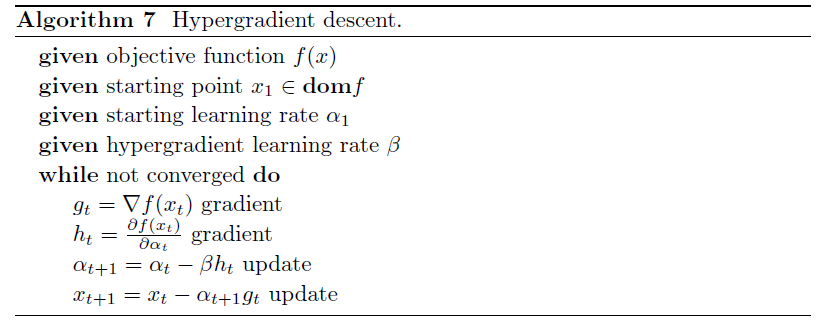

Algorithm:

Vanishing/Exploding Gradient

These problem is encountered when training artificial neural networks with gradient-based learning methods and backpropagation. In such methods:

-

vanishing gradient: during each iteration of training each of the neural network’s weights receives an update proportional to the partial derivative of the error function with respect to the current weight.

\[W^l := W^l - \alpha \frac{\partial L}{\partial W^l}\]The problem is that in some cases, the gradient will be vanishingly small, effectively preventing the weight from changing its value. In the worst case, this may completely stop the neural network from further training.

-

When activation functions are used whose derivatives can take on larger values, one risks encountering the related exploding gradient problem

One example of the problem cause for vanishing gradient

- traditional activation functions such as the hyperbolic tangent function have gradients in the range $(0,1]$, is very small

- Since backpropagation computes gradients by the chain rule. This has the effect of multiplying $n$ of these small numbers to compute gradients of the early layers in an n-layer network, meaning that the gradient (error signal) decreases exponentially

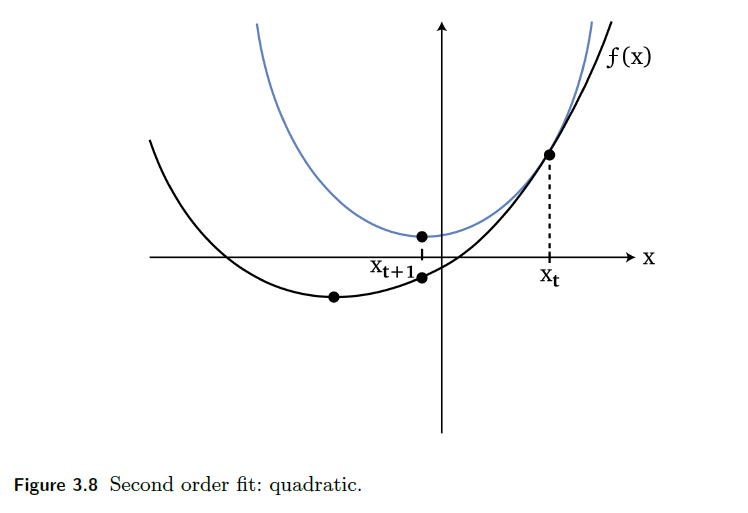

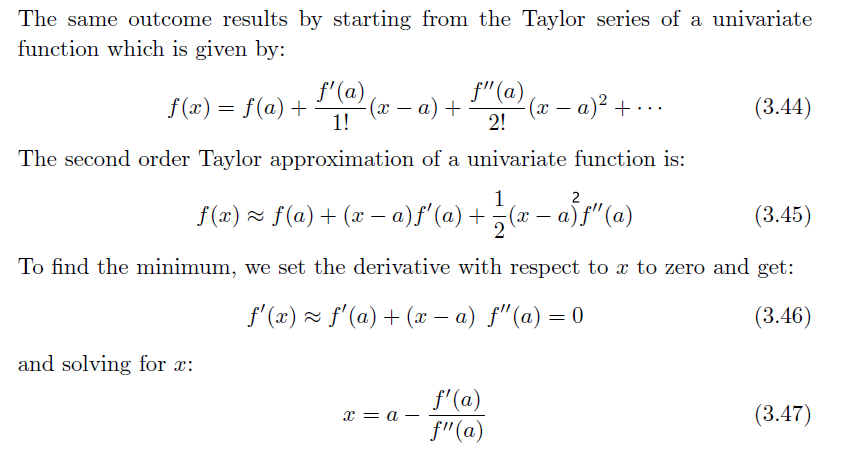

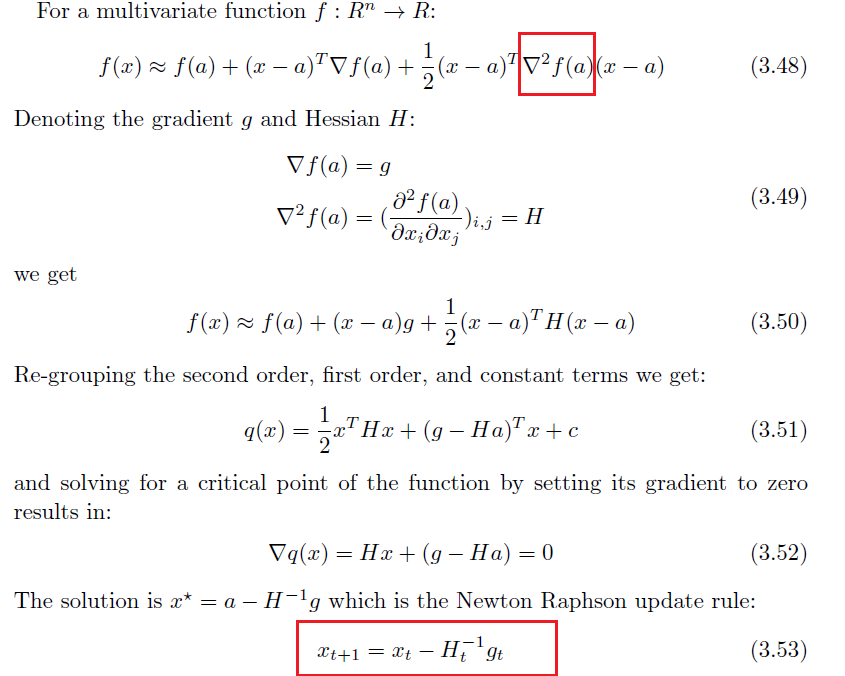

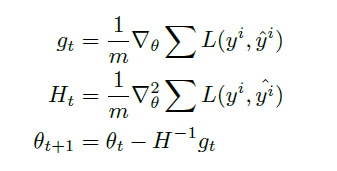

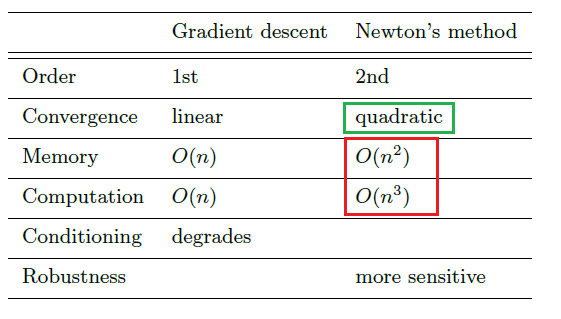

Second Order Methods

First order methods are easier to implement and understand, but they are less efficient than second order methods.

- Second order methods use the first and second derivatives of a univariate function or the gradient and Hessian of a multivariate function to compute the step direction

- Second order methods approximate the objective function using a quadratic which results in faster convergence

- imagine basically 2nd order methods -> parabola -> go down a bowl with a bowl (2nd order); as compared to with a ruler (1st order method)

- but a problem that they need to overcome is how to deal with computing/storing Hessian matrix, which could be large

Newton’s Method

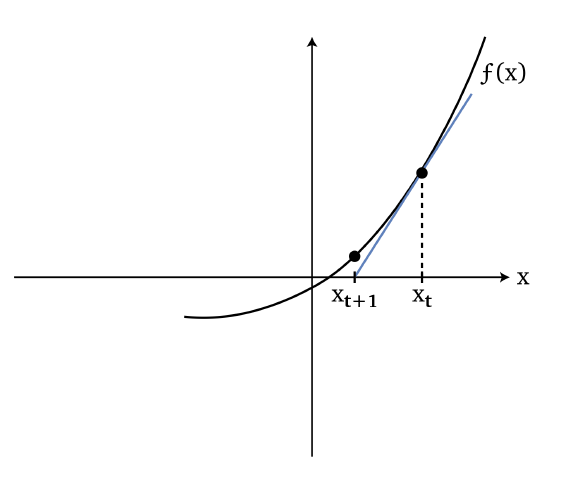

Basically we know that we can find the root of an equation using newton’s method:

so we basically guessed $x_{t+1}$ to be the root by fitting a line:

\[x_{t+1} = x_t - \frac{f(x_t)}{f'(x_t)}\]which basically does:

- the number of steps to move being $\Delta x = f(x_t)/{f’(x_t)}$

Then, since our goal is to solve (in 1-D case):

\[\min f(x) \to f'(x) = 0\]So basically we consider finding root for $f’(x)$:

\[x_{t+1} = x_t - \frac{f'(x_t)}{f''(x_t)}\]This results in

where the blue line is the “imagined function” using Newton’s Method

- notice it is a quadratic

- therefore, it goes down the “bowl” faster than first order methods as mentioned before

The same formula can be derived using Taylor’s methods as well

However, this is useful because it guides on how to deal with vector input functions $f(\vec{x})$ which we need to deal with:

where the last step is basically our new update rule.

-

note that this $H^{-1}$ basically takes place of the $\alpha_t$ we had in first order methods

-

therefore the algorithm is:

However, some problem resides:

notice that computing and inverting Hessian takes lots of computation, and storing Hessian takes space!

Quasi-Newton Methods

Quasi-Newton methods, which provide an iterative approximation to the inverse Hessian $H^{-1}$, so that it may:

- avoid computing the second derivatives

- avoid inverting the Hessian

- may also avoid storing the Hessian matrix.

The idea is to start thinking exactly what we need to approximate. Our goal is anyway the iterative update:

\[x^+ = x - B^{-1}\nabla f(x)\]so our goal is to approximate $B^{-1} \approx \nabla^2 f$. The task is therefore find some conditions to calculate $B$

By definition of second derivative, we know that:

\[\nabla f(x^k+s^k) - \nabla f(x^k) \approx B^k s^k\]where:

- $s^k$ is the step size at iteration $k$

- $B^k$ is our approximation of Hessian/second derivative at step $k$

Now, since it will be an approximation, we want to impose some constraints to make the approximation good:

-

Second equation for next $B$ should hold eaxctly:

\[\nabla f(x^{k+1}) - \nabla f(x^k) = B^{k+1}s^k\]where $x^{k+1} = x^k+s^k$. This will be then represented as:

\[B^{k+1}s^k = y^k\]for $\nabla f(x^{k+1}) - \nabla f(x^k) \equiv y^k$, or even more simply:

\[B^{+}s = y\] -

We also want the following desirable properties

- $B^+$ is symmetric, as Hessians are symmetric

- $B^+$ should be close to $B$, which is the previous approximation

- $B,B^+$ being positive definite

Now, we explore some approximations for $B^+$ that attempts to satisfy the above constraint.

SR1 Update

This is the simpliest update procedure, such that $B^+$ can be close to $B$, and it will be symmetric:

\[B^+ = B + a u u^T\]for some $a,u$ we will solve soon. Notice that if we let this be our update rule for $B$ (first iteration just initialize $B=I$), and we have the enforcement that secant equation should hold:

\[y=B^+s = Bs + a u u^Ts=Bs + (au^Ts)u\]for $s, u, y$ all being vectors. Notice that this means:

\[y - Bs = (au^Ts)u\]where:

- both sides of the equation are vectors! This means that $u$ is a scalar multiple of $y-Bs$.

So we can solve for $u,a$, and obtain the solution and plug back into our update rule for $B^+$:

\[B^+ = B + \frac{(y-Bs)(y-Bs)^T}{(y-Bs)^Ts}\]where at iteration $k$, we already know $B\equiv B^k, s \equiv s^k$ and $y$, so we can compute $B^+$ at iteration $k$.

Just to be clear, using the above formula our descent algorithm would be:

At iteration $k$

- compute $(B^{k})^{-1} \nabla f(x^k)$

- do the descent $x^{k+1} = x^k - \alpha_k (B^{k})^{-1} \nabla f(x^k)$ for some tunable parameter $\alpha_k$

- prepare $B^{k+1}$ using the above formula.

Now, while this technically computes the approximation, we can make the algorithm even better by directly computing $H = B^{-1}$ and its updates using the above formula for $B^+$.

Using the following theorem:

We can show that $(B^+)^{-1}$ can be computed directly

\[(B^+)^{-1} = H^+ = H + \frac{(s-Hy)(s-Hy)^T}{(s-Hy)^Ty}\]then we just use $H$ and $H^+$ all the time instead of $B,B^+$ in the above algorithm.

Other Approximations

The David-Fletcher-Powell (DFP) correction is defined by

\[H^+ = H+ \frac{ss^T}{y^Ts} - \frac{(Hy)(Hy)^T}{y^T(Hy)}\]The Broyden-Fletcher-Goldfarb-Shannon (BFGS) is defined by:

\[H^+ = H+ \frac{2(Hy)s^T}{y^T(Hy)} - \left( 1 + \frac{y^T s^T}{y^T(Hy)} \right)\frac{(Hy)(Hy)^T}{y^T(Hy)}\]In summary, they all attempt to approximate the real Hessian, which is expensive in computation.

Note

These methods are similar to each other in that they all begin by initializing the inverse Hessian to the identity matrix and then iteratively update the inverse Hessian. These three update rules differ from each other in that their convergence properties improve upon one another.

Evolution Strategies

In contrast to gradient descent methods which advance a single point towards a local minimum, evolution strategies update a probability distribution, from which multiple points are sampled, lending itself to a highly efficient distributed computation

Useful resource

- https://lilianweng.github.io/lil-log/2019/09/05/evolution-strategies.html#simple-gaussian-evolution-strategies

Intuition: Instead of updating a single initial point and go downhill, use a distribution of points to go downhill

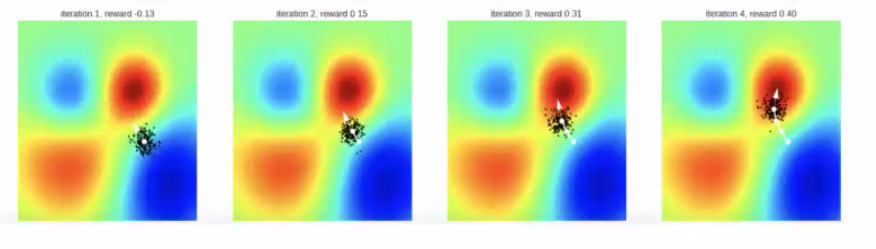

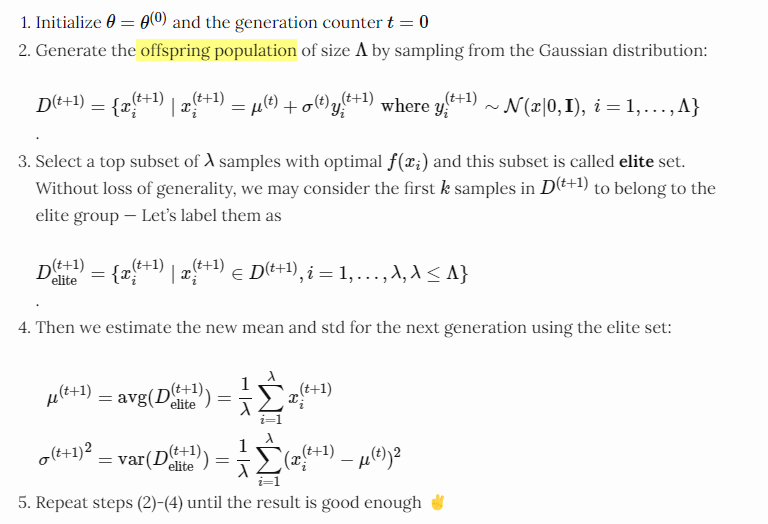

Simple Gaussian Evolution Strategies

at each iteration, we sample from distribution and update that distribution

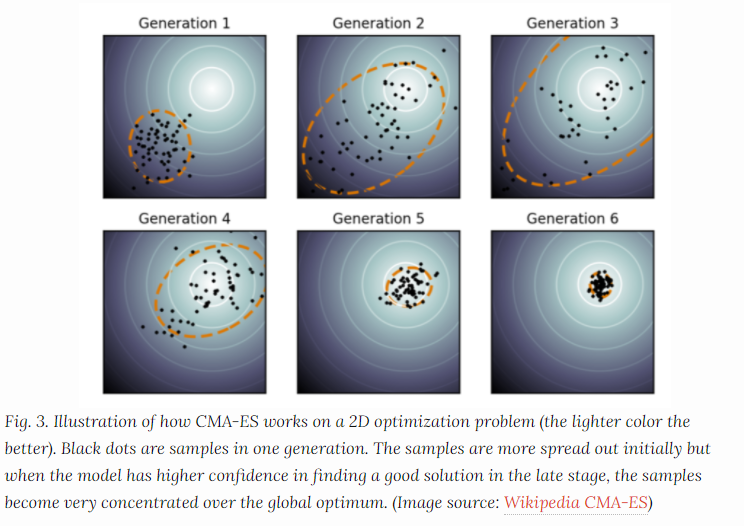

Covariance Matrix Adaptation

Example:

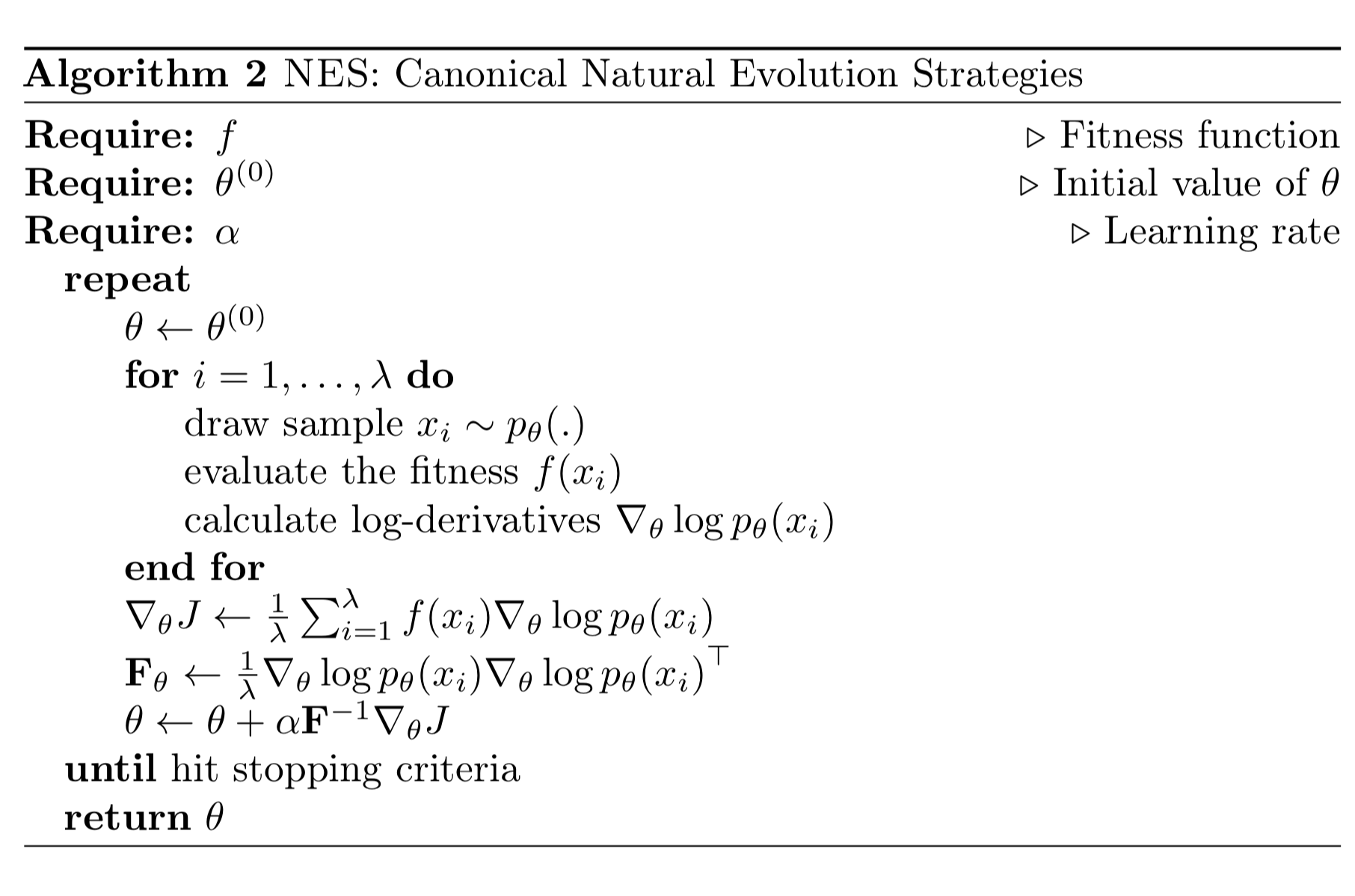

Natural Evolution Strategies

Regularization

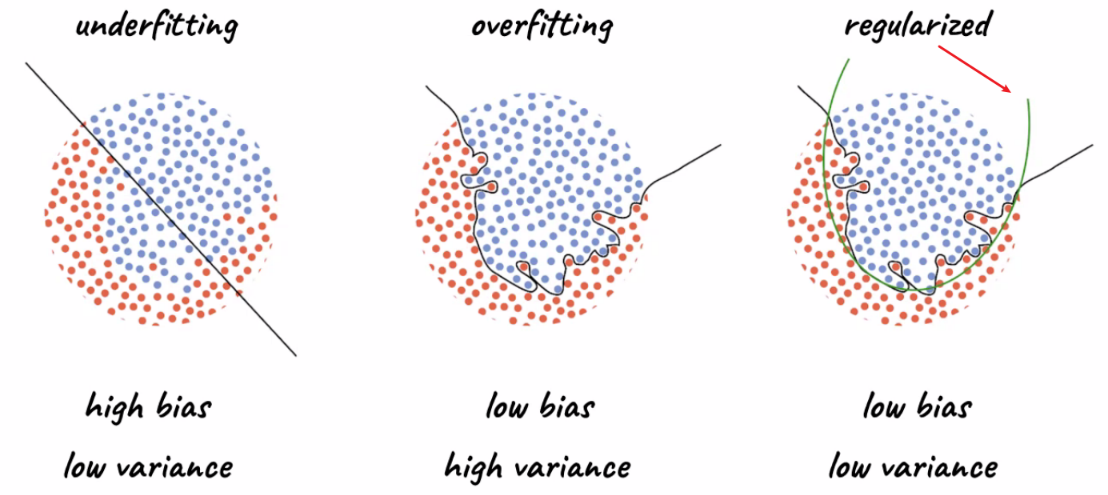

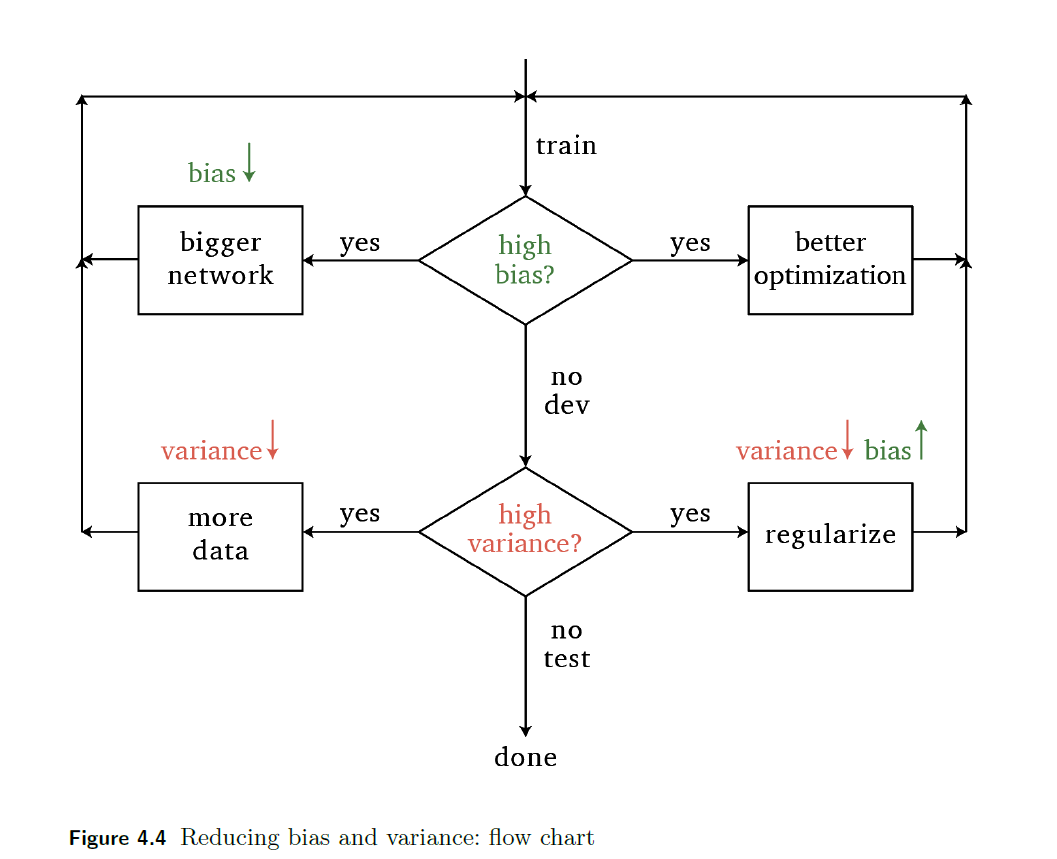

Regularization is a technique that helps prevent over-fitting by penalizing the complexity of the network. Often, we want our model to achieve low bias and low variance for test set:

where

- our final aim is to have the model generalize to unseen data.

Why overfitting happens? In general it is because your training dataset is not representative of all the trends in the population, so that you could fit too much to the training data and miss the real “trends” in the population.

- hence, it cannot generalize to test sets well

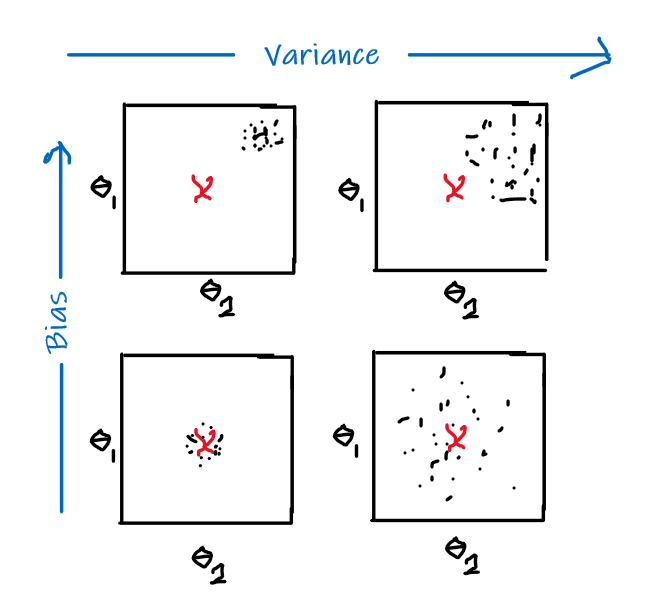

Recall that

Bias and variance are basically:

and intuitively:

- bias: error introduced by approximating a complicated true model by a simpler model

- variance: amount by which our approximation/model would change for different training sets

e.g. an unbiased estimator would have:

\[\mathbb{E}_{\vec{x}\sim \mathcal{D}}[\hat{\theta}(\vec{x})] = \lang \hat{\theta}(\vec{x}) \rang = \theta\]which is different from consistency:

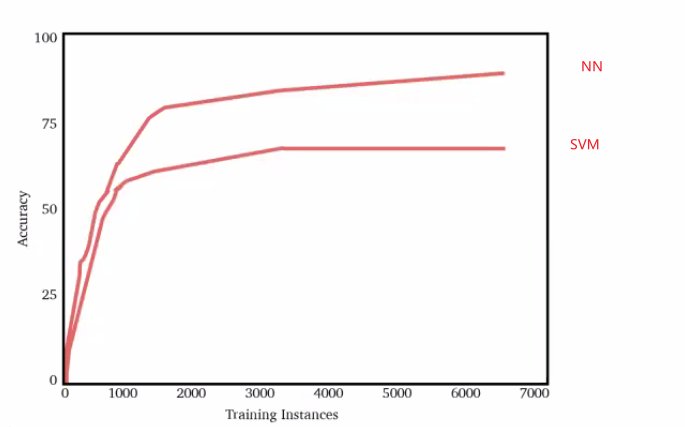

\[\lim_{n \to \infty} \hat{\theta}_n(\vec{x}) = \theta\]In reality, NN does better than traditional ML models such as SVM by being more complicated:

Main regularization techniques here include:

- add a penalty term of the weights $W$ directly to the loss function

- use dropouts, which randomly “disables” some neuron during training

- augment the data

Generalization

Training data is a sample from a population and we would like our neural network model to generalize well to unseen test data drawn from the same population.

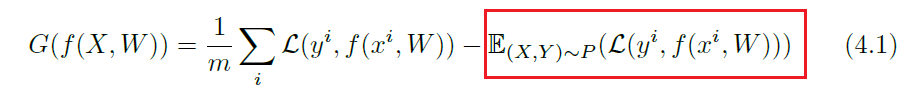

Specifically, the definition of generalization error would be the difference between the empirical loss and expected loss

where in Machine Learning, you would have seen something like this:

\[\mathrm{err}(f) := \mathbb{P}_{(x,y)\sim \mathcal{D}}[f(x) \neq y]\]here:

-

$f$ is a given model to test, and $\text{err}(f)$ is the generalization error.

-

so it is technically equivalent to the red highlighted box only. Yet the whole expression resembles the PAC learning criterion:

\[\mathrm{err}(f_m^A) - \mathrm{err}(f^*) \le \epsilon\]where $f^$ is the optimal predictor in the class $\mathcal{F}$, such that $f^ =\arg\min_{f \in \mathcal{F}}\mathrm{err}(f)$.

- however, notice that it is not, because here we are computing $\text{err}$ which is “generalization error” for both, instead of computing sample error.

The difference between the two is important:

- suppose $G(f(X,W)) = 0$ for our model $f$. Then it means our empirical loss is as good as the expected loss. This only implies that our model has done the “best it could”.

- but suppose $\mathrm{err}(f)=0$, this means that our model is performing perfectly on the population, which I think is a stronger statement than the above.

The key idea is that, if generalization error is low, then our model is not overfitting (doing the same performance for both train and test dataset)

- though this metric is not computable since we don’t have the population, there are various ways to “estimate” it, like doing Cross Validation with many folds. (Cross Validation)

However, it is important to remember that generalization error depends on both variance and bias (and noise) of the model/dataset:

\[\mathbb{E}[y-\hat{f}(x)]^2 = \text{Var}[\hat{f}] + ( \text{Bias}(\hat{f}))^2 + \text{Var}[\epsilon]\]where $\text{Var}[\epsilon]$ is the variance of the noise of the data, i.e. $y = W^Tx + \epsilon$ if you think about regression.

- therefore, this is why we want to reduce bias and variance!

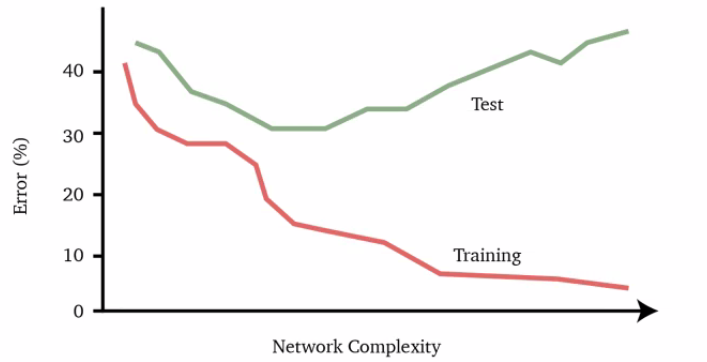

In practice, we see things like this:

where usually:

- Adding more training data $(X, Y)$ increases the generalization accuracy until a limit, i.e. the best our model can do anyway $\neq$ the optimal bayes

- Unless we have sufficient data, a very complex neural network may fit the training data very well at the expense of a poor fit to the test data, resulting in a large gap between the training error and test error, which is over-fitting, as shown above

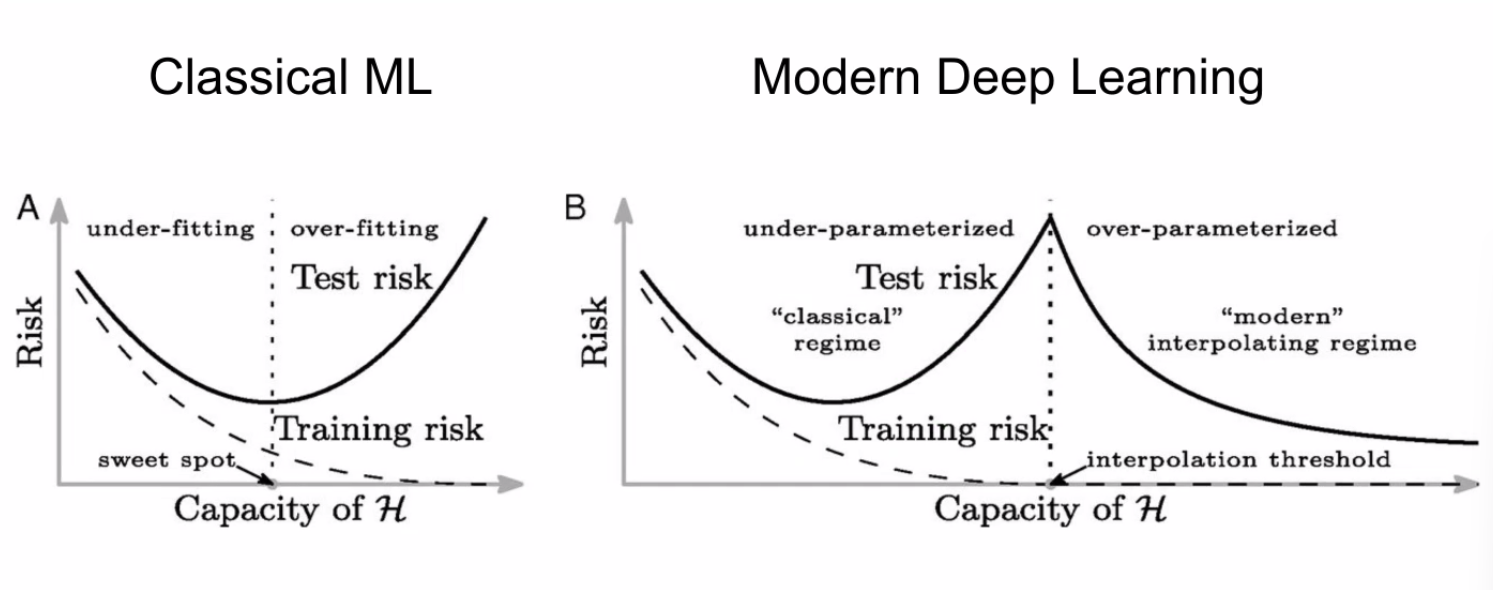

However, in Deep Learning, recent results have shown:

where:

- for DL it seems that we are double descending, so we may want to “overfit”

Cross Validation

Cross validation allows us to estimate the mean and variance of the generalization error using our limited data.

- mean generalization error is the average of the generalization error over all $k$ models and is a good indicator for how well a model performs on unseen data

In short, the idea is simple. We randomly split the data into $k$ folds

- take the $i$-th fold to be testing, and the rest, $k-1$ folds being training data

- learn a model $f_i$

- compute generalization error of $f_i$

- repeat 1-3 for $k$ times, but with a new $i$

This is useful because now we can compute the mean and variance of generalization error using the $k$ different models we trained.

- i.e. think of the evaluation metric being applied on your model choice/architecture, hence the need of mean/variance so it doesn’t depend much on “which data is chosen to be training”

Note

We can also use cross validation to select features for building a model. We can build many models with different subsets of features and then compute their mean and variance of the generalization error to determine which subset performs best.

Regularization Methods

Our general goal is to reduce both bias and variance

where:

- “better optimization” means using a better optimizer, as covered in the previous chapter

- here, our focus is on the bottom right part: regularize

Some main methods we will discuss

- add a penalty term of the weights $W$ directly to the loss function, usually using the norm

- use dropouts, which randomly “disables” some neuron during training

- augment the data

Different methods have different specific effects technically, though the overall effect is that they reduce overfitting.

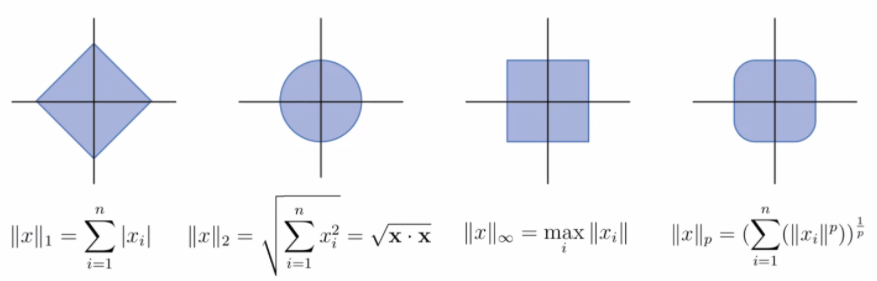

Vector Norms

We define vector norms before discussing regularization using different norms.

For all vectors $x$, $y$ and scalars $\alpha$ all vector norms must satisfy

- $\vert \vert x\vert \vert \ge 0$ and $\vert \vert x\vert \vert =0$ iff $x = 0$

- $\vert \vert x+y\vert \vert \le \vert \vert x\vert \vert + \vert \vert y\vert \vert$

- $\vert \vert \alpha x\vert \vert = \vert \alpha\vert \,\vert \vert x\vert \vert$

Note

Under this definition:

- $L_p$ for $p < 1$ will have non-convex shapes

- $L_0$ does not count as a norm

The general equation is simply:

\[||x||_p = \left( \sum_{i=1}^n |x_i|^p \right)^{1/p}\]Some most common norms include:

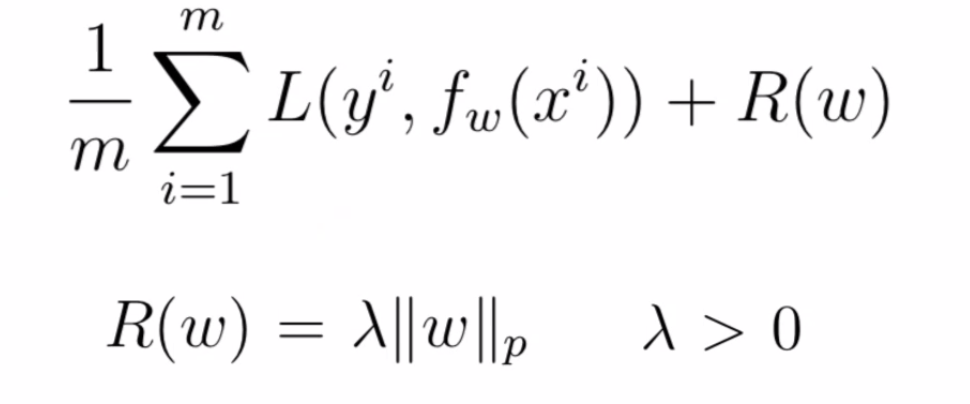

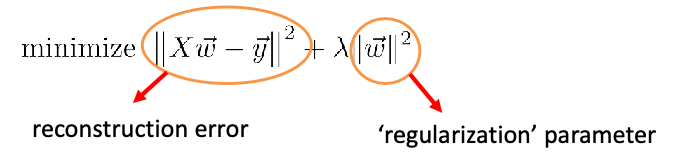

Regularized Loss Functions

One way to regularize it to use regularized loss function:

where:

- $\lambda$ would be tunable.

- If $\lambda$ = 0, then no regularization occurs.

- If $\lambda$ = 1, then all weights are penalized equally.

- A value between $0$ and $1$ gives us a tradeoff between fitting complex models and fitting simple models

- Notice that here $R(w)$ refers to regularizing the entire weight of the network $W$.

- if you want to have different regularization for different layers, you need to do $R_1(W^1)+R_2(W^2)$ in the final loss term.

- Common types of regularization are $L_1$ and $L_2$

- $L_1$ regularization is a penalty on the sum of absolute weights which promote sparsity

- $L_2$ regularization is a penalty on the sum of the squares of the weights which prefer feature contribution being distributed evenly

For Example

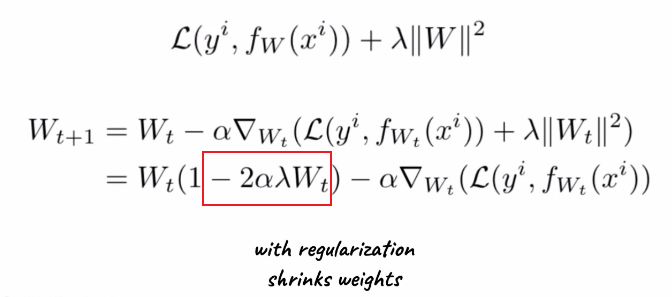

If we are using SGD, and we added a $L_2$ regularization would cause our gradient update rules to change:

where:

- the extra term is due to regularization. so if $\lambda \to 0$, we get back to regular SGD

- also confirms that $W_t$ in general are smaller if we have regularization

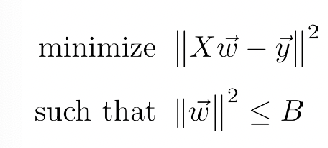

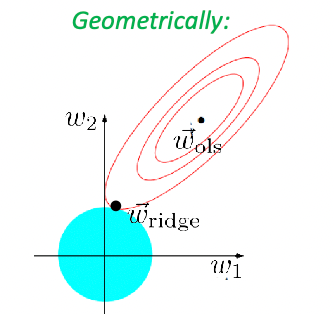

Ridge and Lasso Regression

Recall that in machine learning, the following is Ridge Regression

The solution for the ridge regression can be solved exactly:

\[\vec{w}_{ridge}=(X^TX + \lambda I)^{-1}X^T \vec{y}\]which basically comes from taking the derivative of the objective and setting it to zero, and note that:

- this matrix $X^TX + \lambda I$ is exactly invertible since it is now positive definite (because we added some positive number to diagonal)

- since $X^TX + \lambda I$ is invertible, this always result in a unique solution.

Note

analytic solution doesn’t exist for DL, since our prediction is no longer:

\[\hat{y} = XI\beta\]which is for simple linear regression.

But in DL, we have a NN with many nonlinear functions nested like:

\[\hat{y} = f_3(W^3 \, f_{2}(W^2\,f_{1}(W^1X)))\]where each layer $f$ are the activation functions for each layer. The solution of this is no longer analytic.

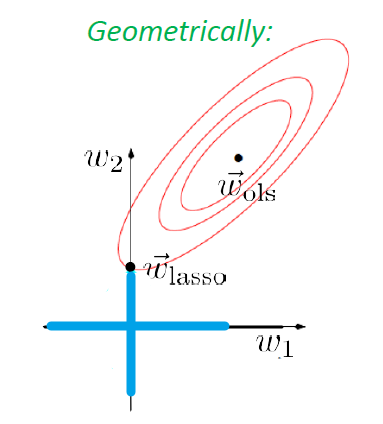

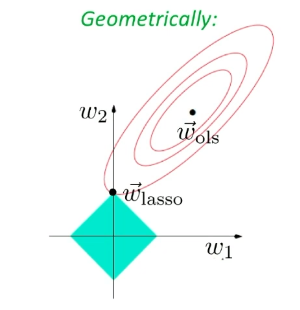

Yet, since this problem can be converted to the constraint optimization problem

using Lagrange Method, then, the problem basically looks like:

| Objective Function | Contour Projection into $w$ Space |

|---|---|

|

|

Recall: Lagrange Penalty Method

Consider the problem of:

This problem will be the same as minimizing the augmented function

\[L(\vec{x}, \vec{\lambda}) := f(\vec{x}) + \sum_{i=1}^n \lambda_i g_i(\vec{x})\]and recall that :

- our aim was to minimize $f(\vec{x})$ such that $g_i(\vec{x}) \le 0$ is satisfied

- $\vec{x}$ is the original variable, called primal variable as well

- $\lambda_i$ will be some new variable, called Lagrange/Dual Variables.

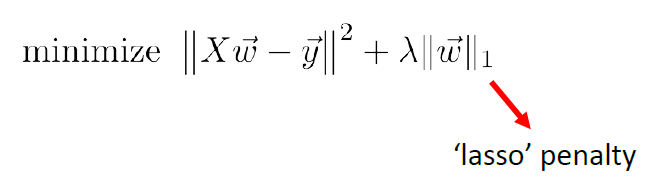

Similarly, if we use $L_1$ norm, then we have Lasso’s Regression

Geographically, we are looking at:

| Actual Aim (Sparsity) | Lasso’s Approximation |

|---|---|

|

|

Sadly, there is no closed form solution even for simple regression in this case.

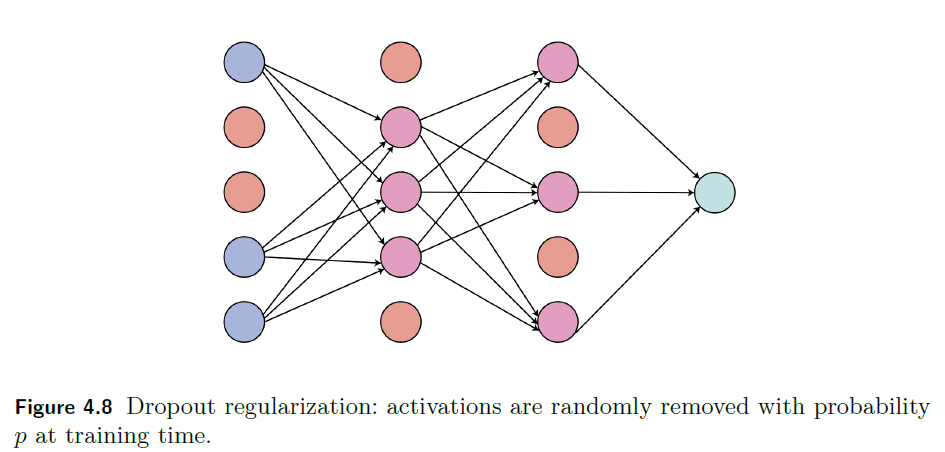

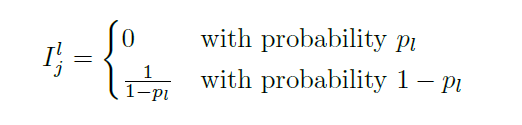

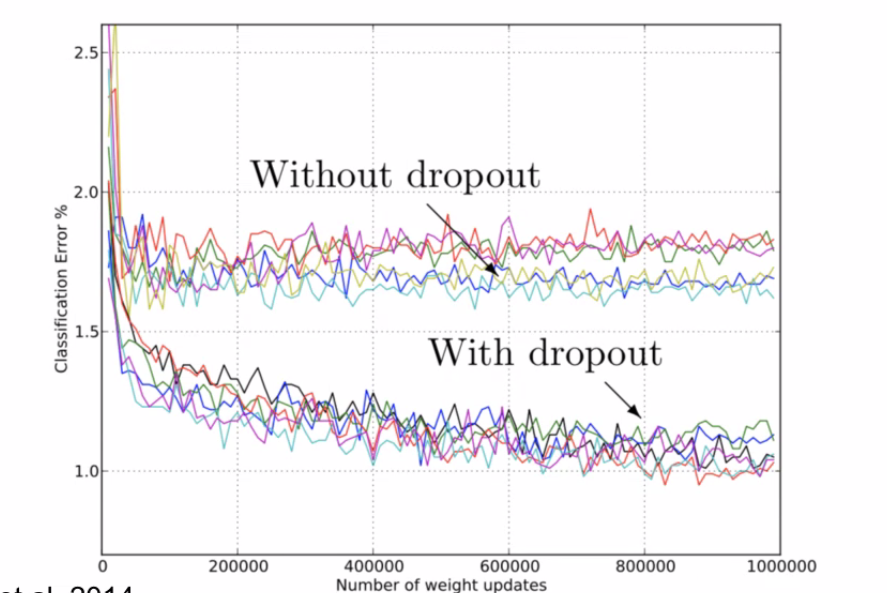

Dropout Regularization

The idea is that we randomly dropout neurons by setting their activations to be $0$.

-

this will reduce cause the training to be less accurate, but makes it more robust in overfitting as those neurons “won’t fit all the time” to the data

-

this is done in training only. When testing, we don’t drop them out

Graphically:

| Fully connected neural network | Dropout regularization |

|---|---|

|

|

Since activations are randomly set to zero during training, we basically implement it by adding a layer before after activation

\[a_j^l := a_j^l I_{j}^l\]where $I_j^l$ is like a mask, deciding whether if it will be dropped

Intuitively:

- over iterations, some neurons will be dropped -> less overfitting on those neurons. In some other cases, those neurons will need to stand in for others.

-

overall, we want to keep the same magnitudes of “neurons” for that layer even if we dropped out, hence $1/(1-p_l)$ scale up, so that they stand in for the dropped out neurons

- $p_l$ is a hyper-parameter. In some framework we can set it, in some other like

kerasit is automatically tuned

To implement it in code, we use a mask:

def dropout(self, A, prob):

# a mask

M = np.random.rand(A.shape[0], A.shape[1])

M = (M > prob) * 1.0

M /= (1 - prob)

A *= M # applying the mask

return A, M

note that:

- forward propagation: apply and store the mask

- backward propagation: load the mask and apply derivatives

- since $a_j^l := a_j^l I_{j}^l$, then backpropagation equation needs to be updated as well

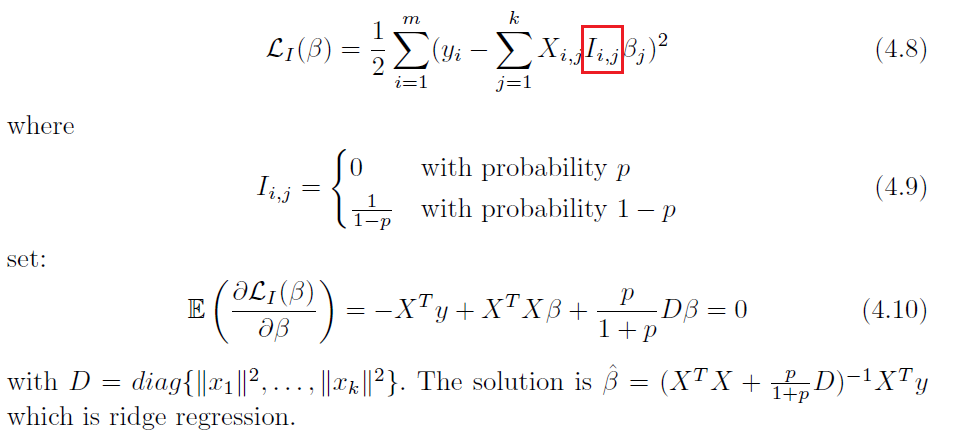

For Examples

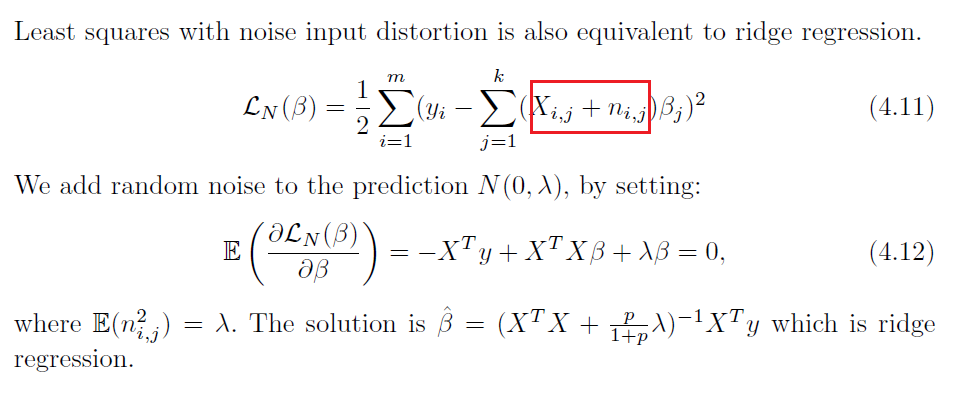

Least Square Dropout

Dropout is actually not completely new

where notice that:

- the solution is exact

Note

- again, analytic solution doesn’t exist for DL, since our prediction is not a simple linear regression but concatenating a bunch of nonlinear operations as well

Least Squares with Noise Input

Data Augmentation

Data augmentation is the process of generating new data points by transforming existing ones.

- For example, if a dataset has a lot of images of cars, data augmentation might generate new images by rotating them or changing their color. Then, it is used to train a neural network

Data augmentation may be used to reduce overfitting. Overfitting occurs when a model is too closely tailored to the training data and does not generalize well to new data. Data augmentation can be used to generate new training data points that are similar to the existing training data points, but are not identical copies.

- This helps the model avoid overfitting and generalize better to new data.

The general idea here is that we augment the training data by replacing each example pair with a set of pairs

\[(x_i, y_i) \to \{(x_i^{*^b} , y_i)\}_{b=1}^B\]by, transformations including

- e.g. rotation, reflection, translation, shearing, crop, color transformation, and added noise.

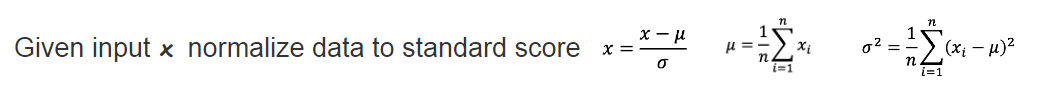

Input Normalization

This is simply to normalize the input in the beginning

Then perform:

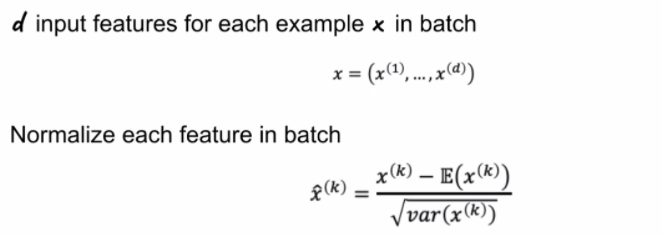

\[\hat{x} = \frac{x- \mu}{\sigma}\]Batch Normalization

Batch normalization basically standardizes the inputs to a layer for each mini-batch. You can think of this as doing normalization for each layer, for each batch

- advantage: avoid exploding/vanishing gradients if the inputs are small!

- usually not only normalizing the input, but also for each layer over and over again.

So basically, for input of next layer:

\[Z = g(\text{BN}(WA))\]where $\text{BN}$ is doing batch normalization. In essence:

note that:

- this means you backpropagation equation/derivatives needs also to include that term.

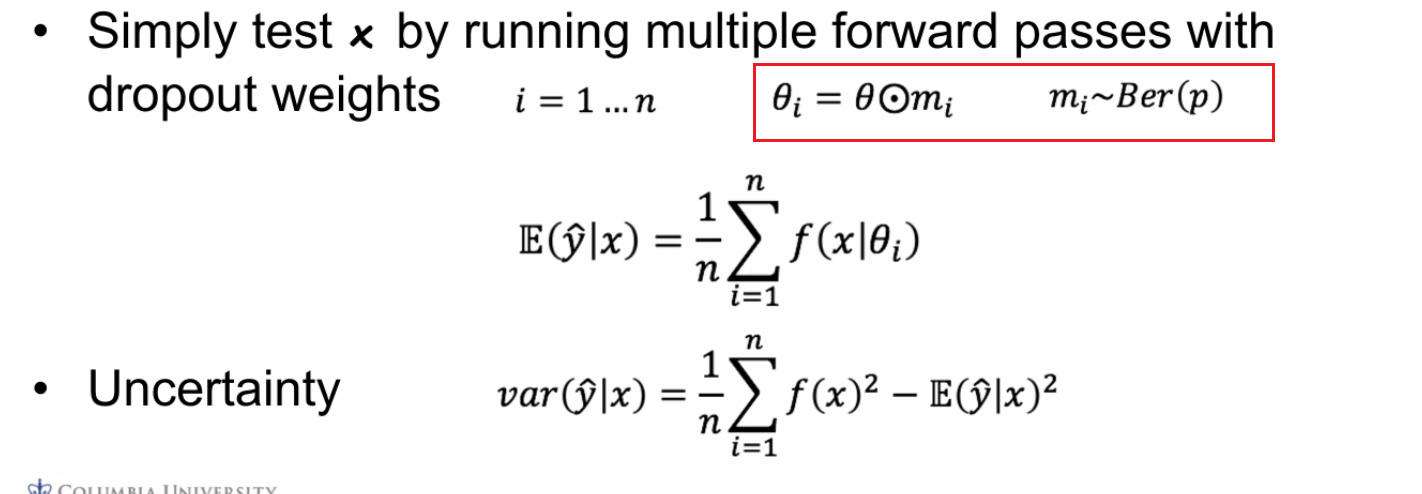

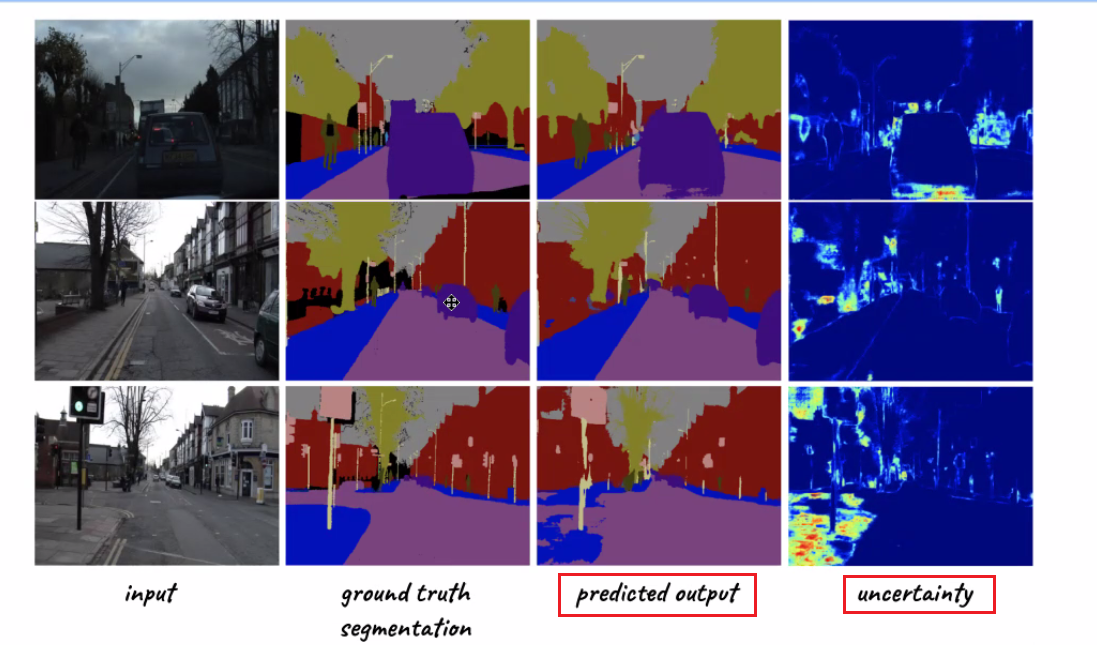

Uncertainty in DNNs

Sometimes we also want to measure the confidence about the outputs of a neural network.

- for instance, confidence in our learnt paramotor $\theta$

In theory, we want ask the question: What is the distribution over weights $\theta$ given the data? (from which we know the confidence of our current learnt parameter)

\[p(\theta |x,y) = \frac{p(y|x,\theta) p(\theta)}{p(y|x)}\]where $p(\theta)$ would be the prior, and $p(\theta\vert x,y)$ would be the posterior since we see the data.

- this is not possible to compute since we don’t know them.

In practice, we can roughly compute confidence of current prediction by “dropout masks”

For instance:

so we are not only outputting predictions, but also outputting confidence of our predictions

Convolutional Neural Networks

The major aim of this section is to discuss models that solves ML problems with images

A brief overview of what we will discuss:

- CNN is basically a “preprocessing neural network” that replaces Linear part from $W^lA^{l-1}$ to convolution with kernel (which is also linear)

- problems with deeper CNN layers causes vanishing gradients, hence models such as Residual NN and DenseNet are introduced

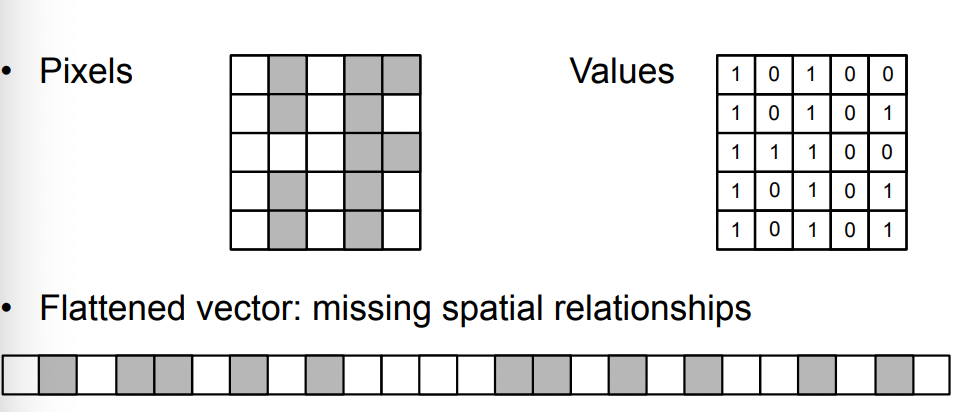

On a high level, we should know that treating images means our input vector would be large in size, with $n$ dimension (after flattening) means that, if we use vanilla model, we need $O(n n_{1})$ matrix for the first layer with $n$ neurons!

Then, CNN aims to

- deal with this storage/computation problem by using sparse matrix (kernel), which are essentially matrices with repeated elements.

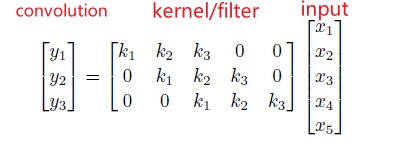

since the output is size $3$, it means we basically have 3 neurons essentially having the same weight ($k_1, k_2, k_3$). Therefore, we also call this sharing weights across space.

notice that in this case, our number of parameters to learn is $O(3)=O(1)$ is constant!

-

another problem of CNN is to encode spatial and local information, which would be otherwise lost if we directly flatten it and pass it onto a normal NN.

where you will see the aim of kernels would be that they captures features such as edges/texture/objects, which obviously has spatial relationships in the image.

Note

The fact that weights learnt in CNN preprocessing part of the architecture are constant can be thought of as the result of the constraints we placed on those weights: we need them to be kernels, hence we need symmetry, sparsity, and the particular output shape as shown above.

After using the kernel, CNN architecture then would add a bias and an activation, all of which would assemble the actions taken in one layer.

- essentially the linear $W^l A^{l-1}$ is replaced by convolution

- each filter in a layer is of effectively $O(1)$ in size, but we can learn multiple such filters. Finally it may look like this

where notice that:

- the output after several such layers will be piped to a normal NN, for instance, for the final specific classification tasks.

- subsampling are basically techniques such as pooling, which will be covered later. The aim is to reduce the dimension (width $\times$ height).

- To reduce the number of channels, you can use $f$ number of $1\times 1\times c$ filters, which can reduce to $f$ channels.

- make sense since doing $1\times 1\times c$ convolution is telling how to sum the pixel on each channel into $1$ single pixel.

Convolution

Basically it is nothing than doing:

- elementwise multiplication between a patch of a matrix and a filter/kernel

- summing them up

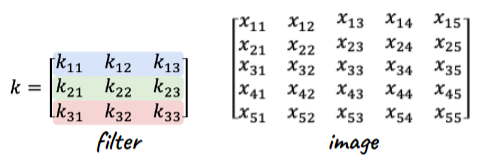

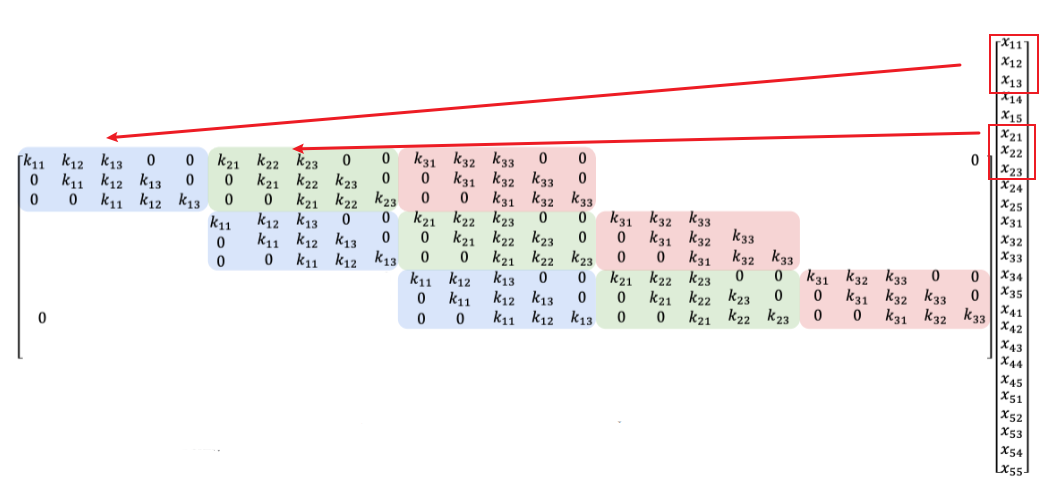

Therefore, convolutions in any dimension can be represented as a matrix vector multiplication:

\[k * x = Kx_{\text{flatten}}\]where:

- $K$ is the kernel, and $x_{\text{flatten}}$ is the flattened version of the image $x$.

- the exact shape of $K$ would be interesting. Think about how you would realize the above equation.

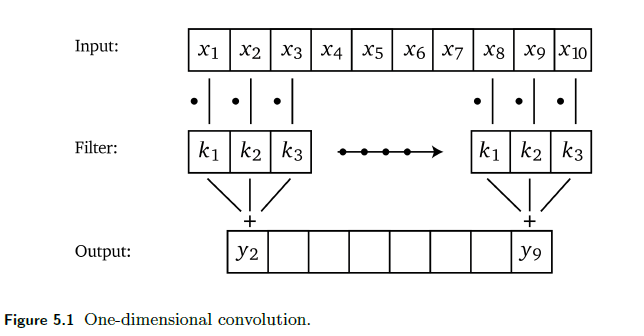

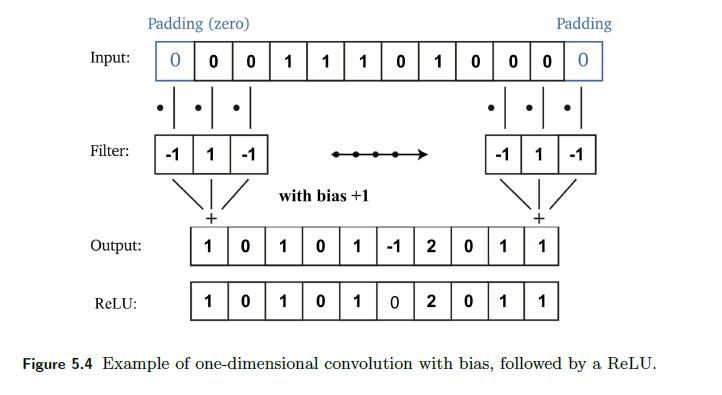

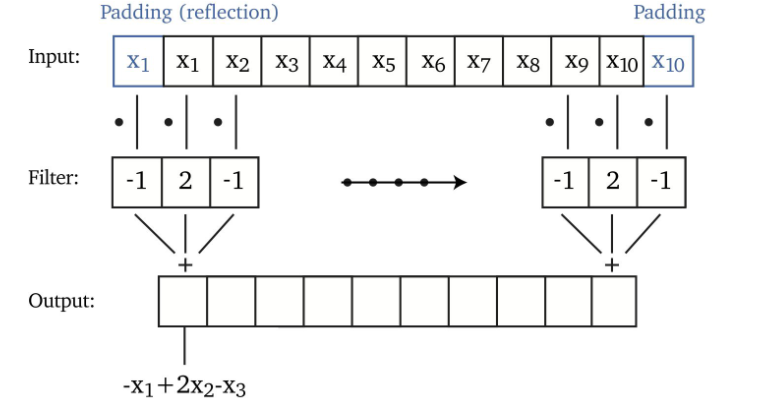

One-Dimensional Convolution

The idea is simple, if we are given a 1D vector and a 1D kernel:

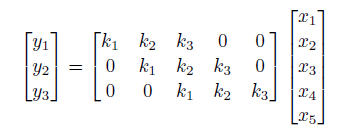

so essentially it is a locally weighted sum. To think about how we represent this in linear algrebra, consider that we have a $1 \times 5$ input with size $3$ kernel:

verify that the above works.

- notice that the output is of dimension $3$. This must be the case because there are only $3$ unique positions to place the size $3$ filter inside the size $5$ input vector.

- this matrix is also called Koeplitz matrix

Therefore, we can reason this as:

\[k * x = Kx = \sum_{i=1}^3 k_iS_i x\]where $S_i$ are the matrices where only “diagonal” entries are ones, otherwise zeros.

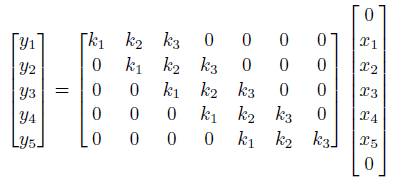

Now, one problem is that we noticed the output size is smaller, which can be bad in some cases. We can fix this by adding padding to the edges:

however:

- one problem with zero padding is that it introduces discontinuities at boundaries

- another technique is to pad with reflection, i.e. replacing the top $0 \to x_1$, and bottom $0 \to x_5$.

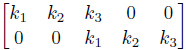

For Example: Stride with size 2

The above all assumed a stride with size 1. We can perform the task with stride 2 by doing

this could be useful as the output size is decreased by a factor of $2$.

For Example: A Simple Single Conv Layer

A typical layer looks like:

where notice that:

- pass the 1D image vector to filter (replacing the linear part to $Kx$)

- optionally you would then also add the bias to the output $Kx + b$

- notice that this linear operation has a very sparse matrix, $K$

- shortened version of the vector then goes through activation

This particular setup in the end can detect any block of lonely $1$ in the input.

Note

The fact that we are applying a kernel everywhere the same is so that it preserves the property that images are translational invariant.

Other Filters

Sharpening:

more filters are omitted.

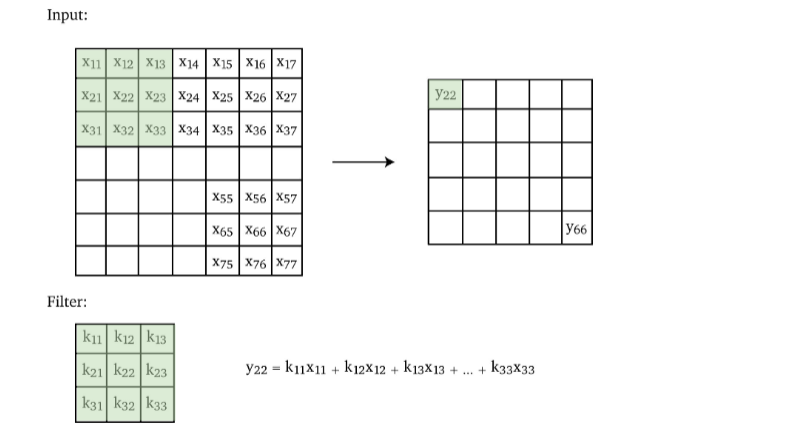

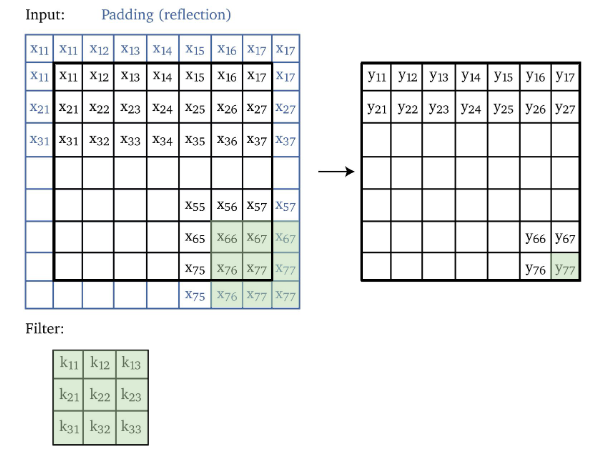

Multi-Dimensional Convolution

Since our images are usually 2D if grey scale, so we extend the convolution to a 2D kernel.

- the pattern you will see is easily generalizable to 3D inputs as well.

Then, if we want to add paddings

But more importantly, we can put this in a matrix vector multiplication as well:

- flattening 2D matrix to $[x_{11}, x_{12}, …, x_{nm}]^T$.

- the shape of kernel would be repeatedly assembling 1D filters

Consider the following operatoin:

Can be done by:

which is basically:

- the lowest diagonal is the 1D Toeplitz matrix for the first row of $k$

- the second lowest diagonal is the 1D Toeplitz matrix for the second row of $k$

- etc.

- finally, the output is a vector, which can be interpreted as a flattened 2D image

Therefore, convolution with 3D images using 3D kernels, basically is equivalent of matrix-vector multiplication with:

- flattened image to 1D

- repeatedly assembling 2D Toeplitz matrix for the “$i$-th place” to form a 2D matrix.

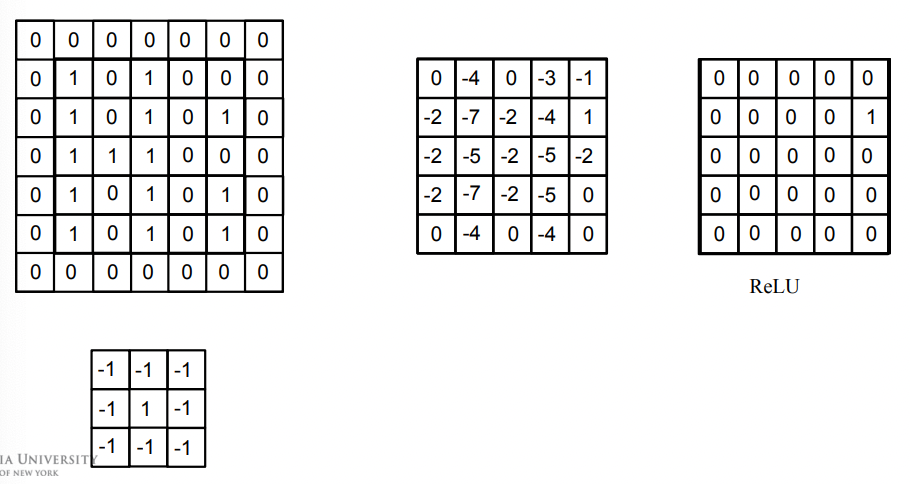

For Example

To find the lonely one:

Three Dimensional Convolution

The technique of expanding 2D Toeplitz matrix for 3D convolution basically does the following for convolution:

which basically outputs a single 2D matrix.

- makes sense that the number of channels in both kernel and input lines up, as in the end we just do a element-wise multiplication and sum up.

However, another way would be to do two dimensional convolution on each channel

notice that:

- this means we would have 3 filters (may be the same), each is a 2D matrix/kernel/filter

- the number of channels for both kernel and their respective input is $1$.

- outputs 3 channels instead of $1$, as compared to the previous case

Properties of Convolution

There are several nice properties of convolution that are handy for optimizing computation complexity.

First, the most obvious ones are due to convolution are essentially matrix/vector multiplication as they are from linear algebra

- Commutative: $f*g = g * f$

- Associative: $f(gh) = (f*g) * h$

- Distributive: $f*(g + h) = f * g + f * h$

- Differentiation: $\frac{d}{dx} (f * g) = \frac{df}{dx}* g = f * \frac{dg}{dx}$

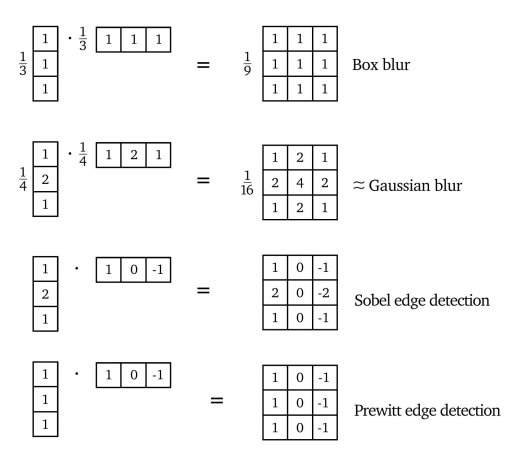

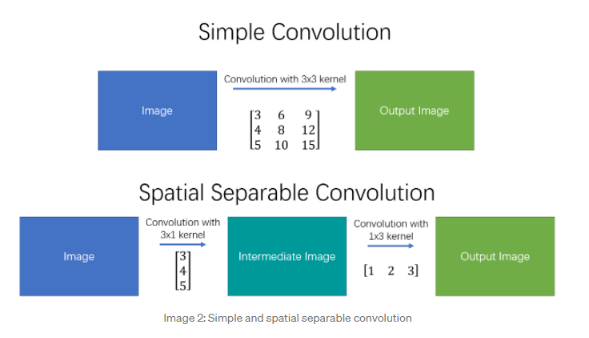

Separable Kernels

Some kernels would be separable like:

Then, we can use the property that:

where this is very useful because, if the image is size $n \times n$, and separable kernel $k \times k$

- directly convovling needs $O(n^2 k^2)$, since each of the $\approx n^2$ output pixel needs $k^2$ computation.

- if we do it with two simpler convolutions, then $O(2n^2k)=O(n^2k)$ which is better

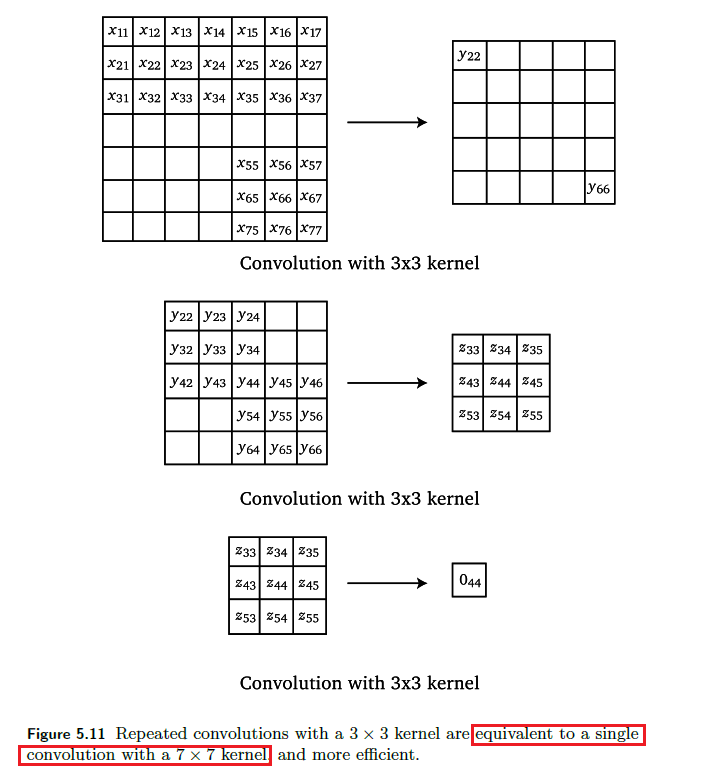

Composition

Since we know convolutions is basically matrix-vector multiplication: Repeated convolutions with a small kernel are equivalent to a single convolution with a large kernel

where this is useful because it is more efficient.

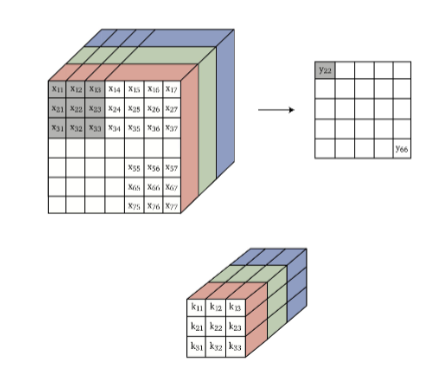

Convolutional Layers

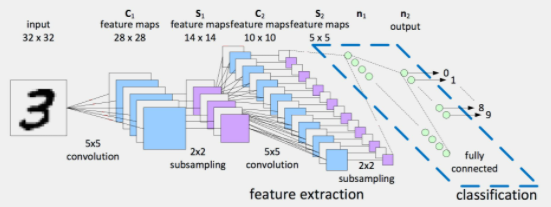

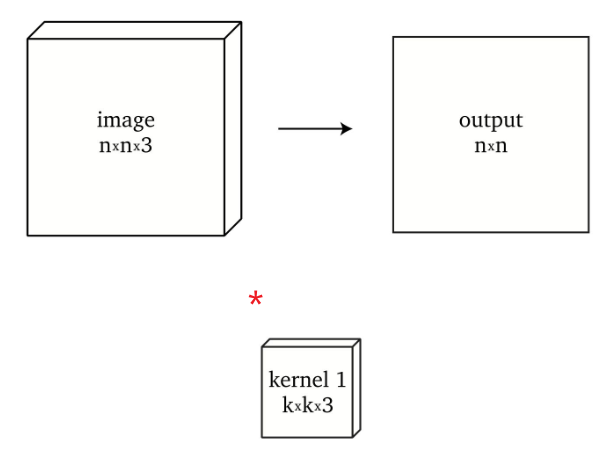

Now, we discuss what happens in convolutional layers in a NN such as the following

Notice that we know:

-

convolution of $n \times n \times 3$ with a single kernel $k \times k \times 3$ produces $n \times n$ (if we have padding)

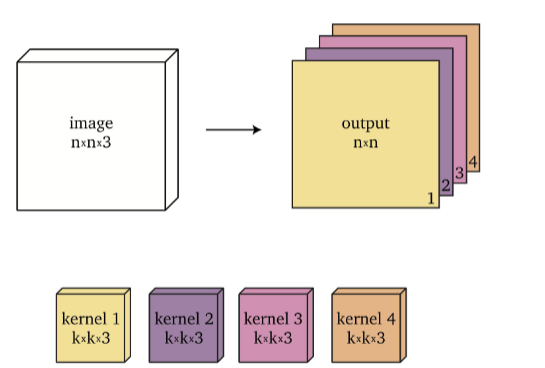

-

if prepare $4$ different $k \times k \times 3$ kernel and we do this separately for $4$ times, we get $n \times n \times 4$ output

Therefore, since in general a single layer would have many different filters, if we have $f$ filters, we would produce $n \times n \times f$ as our output size.

- however, before we put this through activation, notice that having $n \times n \times f$ is pretty large. (in practice the performance gain is not too huge, so it doesn’t matter if we put it before or after activation)

So, before activation, we would use pooling techniques to reduce the dimension.

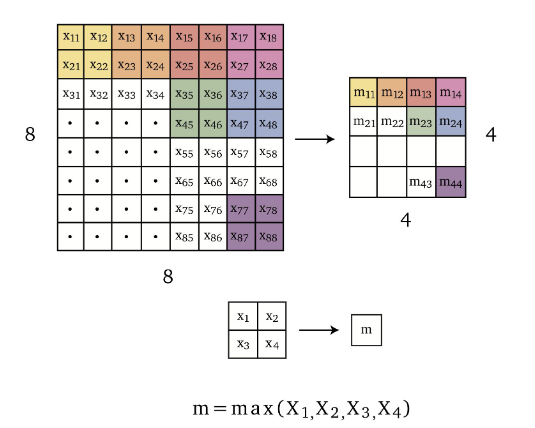

Pooling

Pooling is an operation that reduces the dimensionality of the input. Some simple and common ones are

Max pooling takes the maximum over image patches.

for example over $2 \times 2$ grids of neighboring pixels $m =\max{x_1, x_2, x_3, x_4}$, hence reducing dimensionality in half in each spatial dimension as shown in Figure 5.18.

notice that this does not reduce the number of channels!

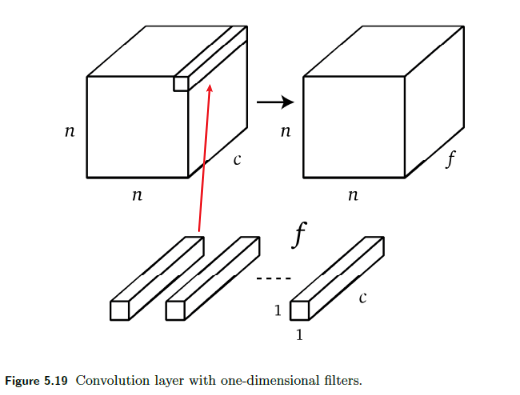

To reduce the number of channels, it is essentially saying that how do we want to sum pixels in different channel? Therefore, it makes sense that we can use a one-dimensional convolution to solve this

One dimensional convolution with $f$ filters also allows reducing the number of channels to $f$ as shown in Figure 5.19.

- which makes sense as if $f=1$, essentially you summed over all channels, collapsing all channels to $1$ channel.

Simple Convolutional Layer

Putting everything above together, a typical convolutional layer involves three operations:

- convolution with kernel (linear)

- pooling

- activation (nonlinear)

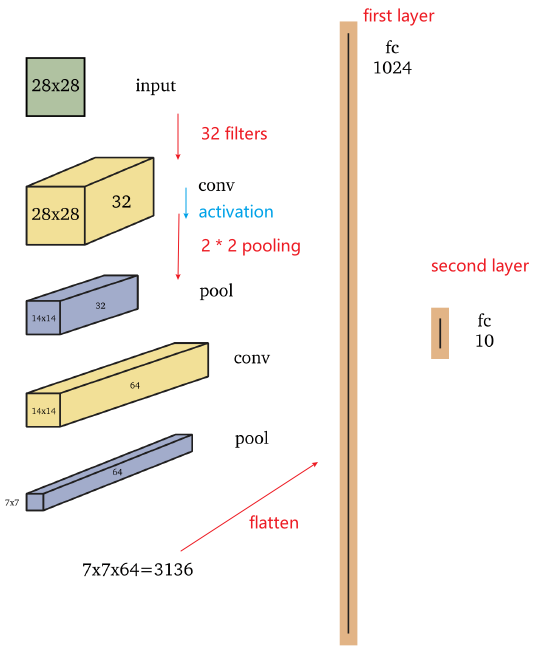

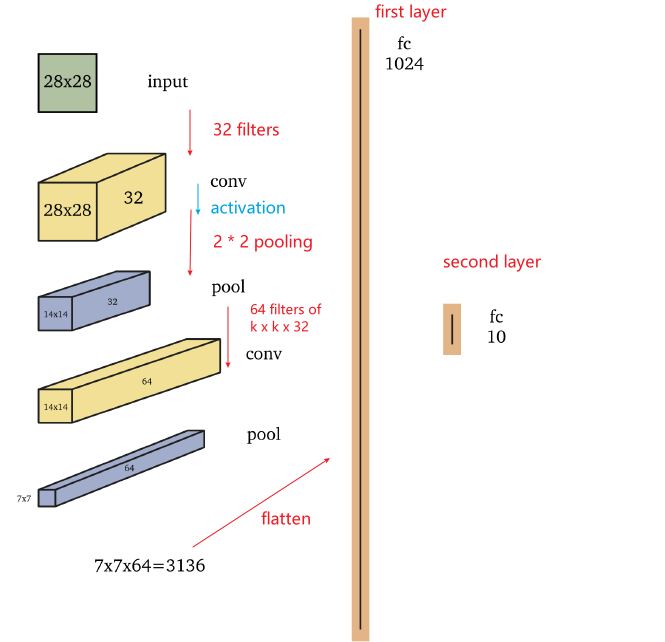

An example would be:

where, In this example, the input is a $28 \times 28$ grayscale image and the output is one of ten classes, such as the digits $0-9$

- The first convolutional layer consists of $32$ filters, such as $5 \times 5$ filters, which are applied to the image with padding which yields a $28 \times 28 \times 32$ volume.

- Next, a non-linear function, such as the ReLU, is applied pointwise to each element in the volume

- The first convolution layer of the network shown above is followed by a $2 \times 2$ max pooling operation which reduces dimensionality in half in each spatial dimension, to $14 \times 14 \times 32$.

- then, go from $14 \times 14 \times 32$ to $14 \times 14 \times 64$, you would have 64 filters of size $5 \times 5 \times 32$, for example

Architectures

Now we talked about some of the modern architectures that builds up on the basic CNN we discussed before.

- in fact, now as Vision Transformers are out, processing with images have now been mostly done using that as Transformers itself is quite a generic model

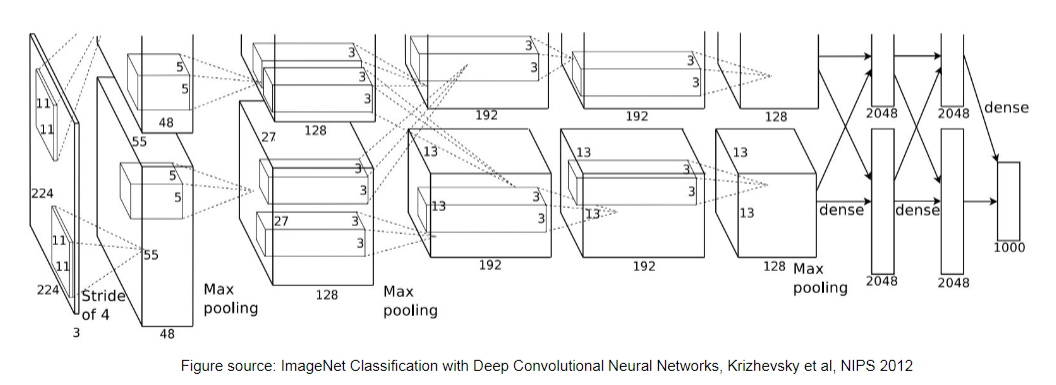

CNN

A basic example of CNN is shown in this example

- A deeper network of eight layers may resemble the cortical visual pathways in the brain (Cichy, Khosla, Pantazis, Torralba & Oliva 2016).

- note that we are doing activation then pooling here

- max-pooling and monotonely increasing non-linearities commute. This means that $\text{MaxPool(Relu(x)) = Relu(MaxPool(x))}$ for any input.

- So the result is the same in that case. Technically it is better to first subsample through max-pooling and then apply the non-linearity (if it is costly, such as the sigmoid). In practice it is often done the other way round - it doesn’t seem to change much in performance.

- the second Convolution Layer comes from applying 64 filters of size $k \times k \times 32$. Usually this can be abbreviated to say applying $k \times k$ dimension filters.

- Many early implementations of CNN architectures were handcrafted for specific image classification tasks. These include

- LeNet (LeCun, Kavukcuoglu & Farabet 2010),

- AlexNet (Krizhevsky, Sutskever & Hinton 2012)

- VGGNet (Simonyan & Zisserman 2014),

- GoogLeNet (Szegedy, Liu, Jia, Sermanet, Reed, Anguelov, Erhan, Vanhoucke, Rabinovich et al. 2015)

- Inception (Szegedy, Vanhoucke, Io↵e, Shlens & Wojna 2016).

(but now, vision transformers are of big focus due to its generality)

ResNet

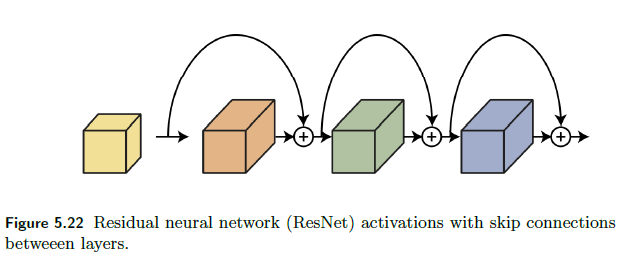

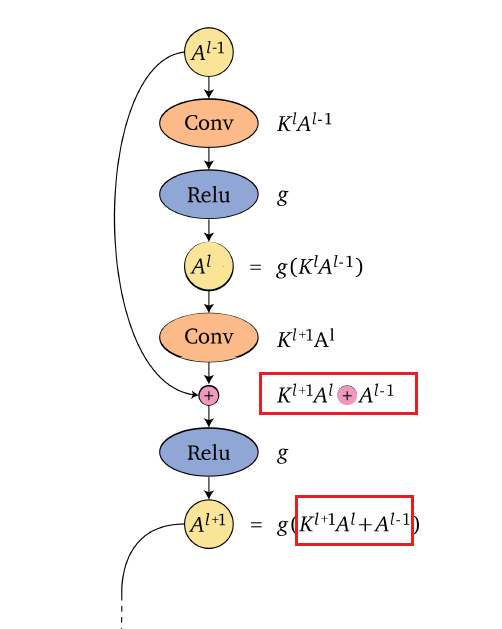

The deep residual neural network (ResNet) architecture (He, Zhang, Ren & Sun 2016a), (He, Zhang, Ren & Sun 2016b), introduced skip connections between consecutive layers as shown below

| Architecture | Details |

|---|---|

|

|

The idea is simple, the pre-activation of a layer $z^l$ now has a residual term from previous layer

\[z^{l+1} = f(W^l,a^l)+a^l;\quad a^{l+1} = g(z^{l+1})\]instead of $z^{l+1} = f(W^l,a^l)$.

- adding those skip connections/residual terms allow training deeper neural networks by avoiding vanishing gradients. The ResNet architecture enables training very deep neural networks with hundreds of layers.

- Adding a new layer to a neural network with a skip connection does not reduce its representation power. Adding a residual layer results in the network being able to represent all the functions that the network was able to represent before adding the layer plus additional functions, thus increasing the space of functions.

For instance, a three layer network composition originally would look like

\[F(x)=f(f(f(x)))\]Now it becomes, if each layer has a residual:

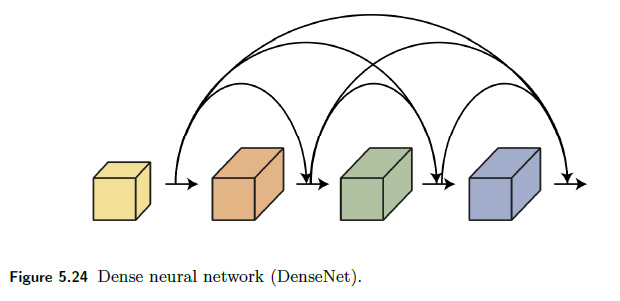

DenseNet

A DenseNet (Huang, Liu, van der Maaten & Weinberger 2017) layer concatenates the input $x$ and output $f(x)$ of each layer to form the next layer $[f(x), x]$.

- because it is concatenating it, the input in the first layer also directly appears in input to any further layers

- in the ResNet, input in the first layer indirectly appears as they are absorbed in, such as $f(f(x)+x)$

Therefore, graphically:

And the formula composition for three layers look like

\[F(x) = f(f([f(x),x]),[f(x),x]), f([f(x),x]),[f(x),x]\]Understanding CNNs

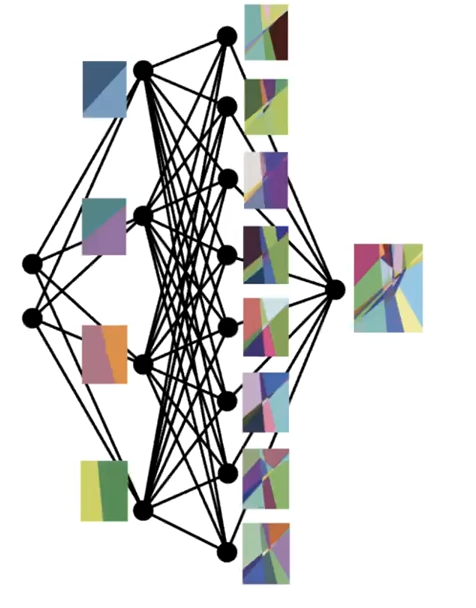

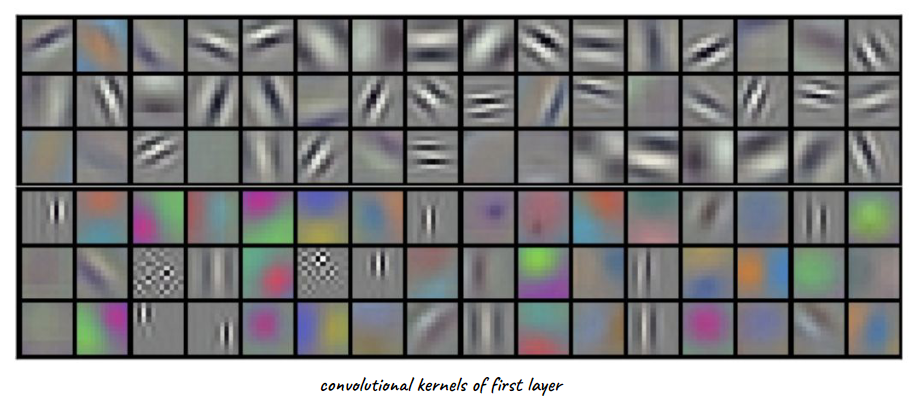

Consider the model ImageNet, which has the following architecture

When trained on a large dataset, since we also gradient descent to learn kernel/filters in CNN, we can look at the learnt kernels for different layers.

-

In the end the kernel is just a matrix with some constraints

-

therefore, we can impose those constraints only and let back propagation to learn those weights

which, interestingly, coincides with many of the handcrafted ones we had before

Note

- now we learn filters, but we need to specify the architecture

- this is now superseded with vision transformer, which learns both the architecture and the kernel

However, we are interested in knowing what patterns do each layer learn. How do we do that?

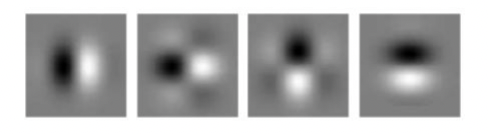

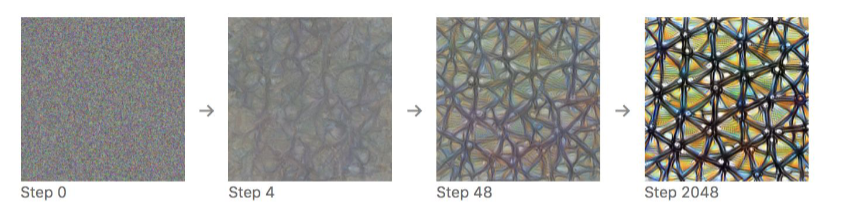

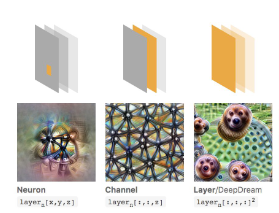

Input Maximizing Activation

Consider transferring the above to an optimization problem: given trained network with weights $W$, find input $x$ which maximizes activation.

\[\arg\max_x a^l_{i}(W, x)\]which we can find by gradient ascent.

-

e.g. given some kernel, doing gradient ascent gives:

where the first steps are basically initializing with random noise

Applying this technique to multiple layers, and we find that

so basically:

- first layers learn the edges

- then textures

- then objects

Alternatively, you can also use this technique to find out what patch of images this kernel is bad at:

Transfer Learning

The fact that those CNN learn fundamental concepts such as edges and textures means we can do transfer learning

Task 1: learn to recognize animals given many (10M) examples which are not horses

Task 2: learn to recognize horses given a few (100) examples

- Keep layers from task 1, re-train on last layer

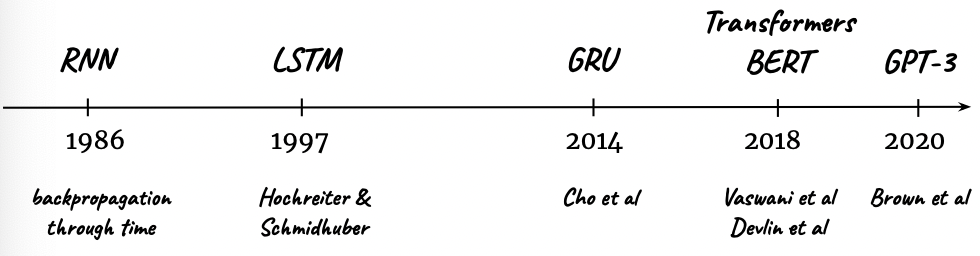

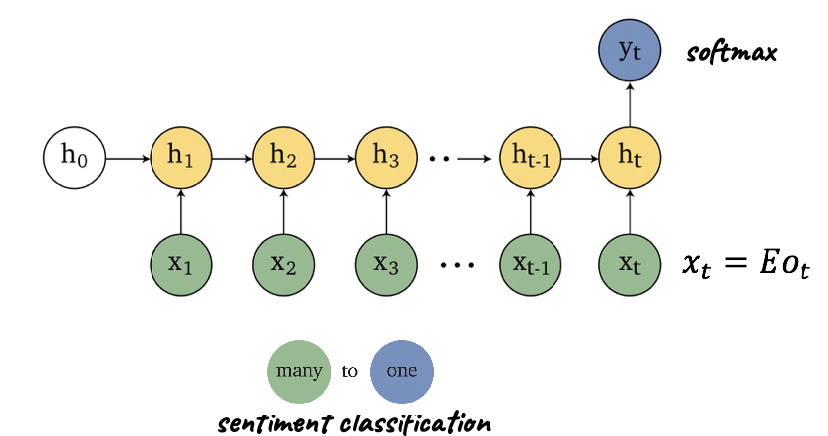

Sequence Models

Applications using sequence models include machine translation, protein structure prediction, DNA sequence analysis, and etc. All of which needs some representation that remembers previous data/state.

An overview of what will be discussed

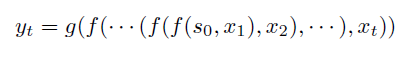

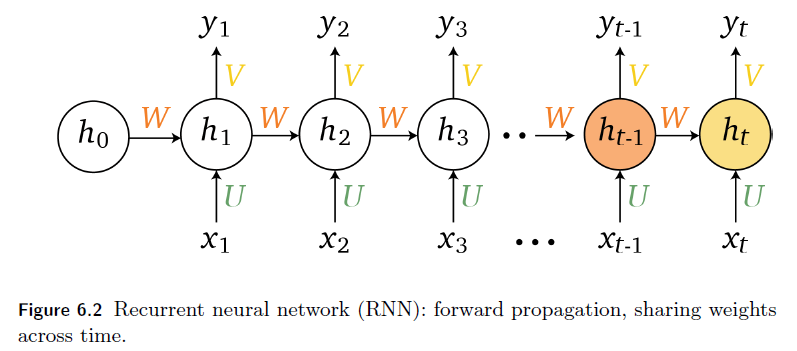

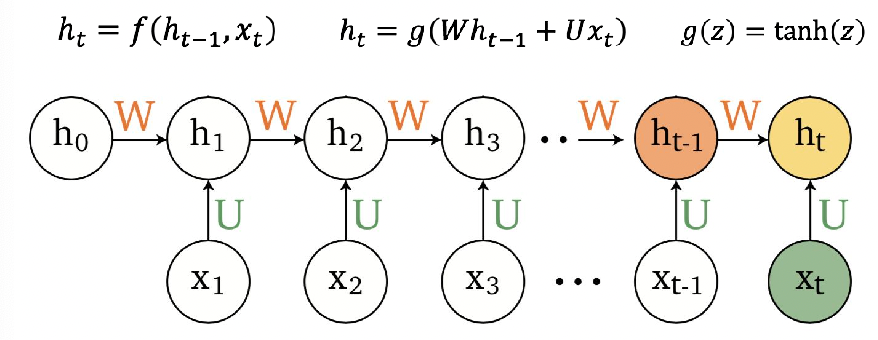

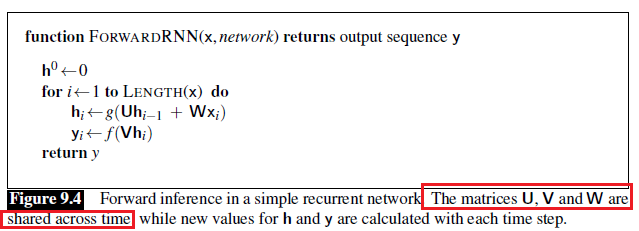

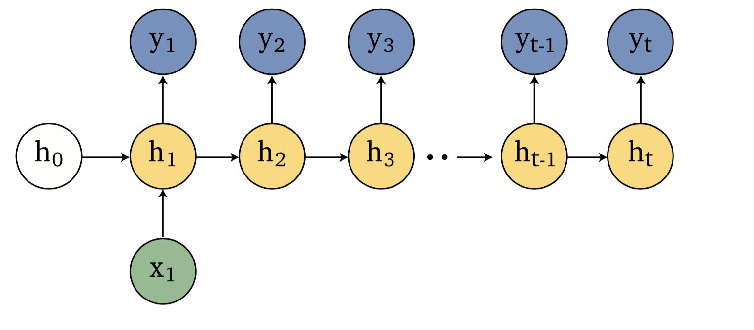

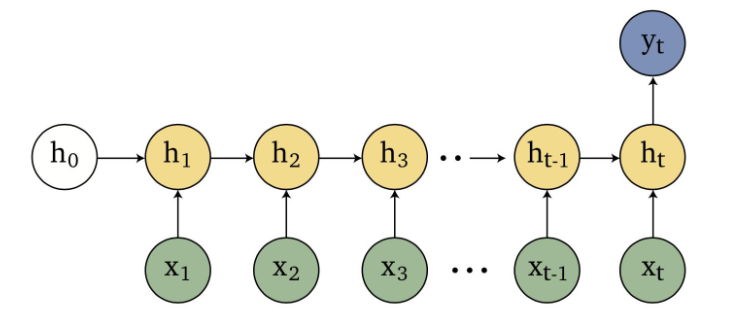

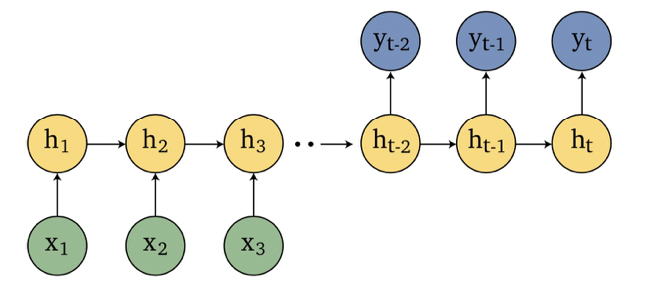

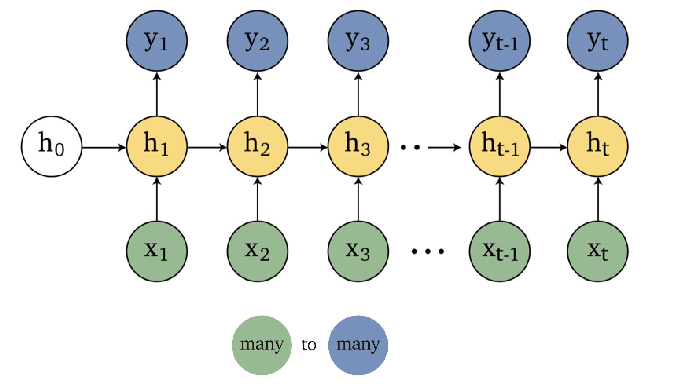

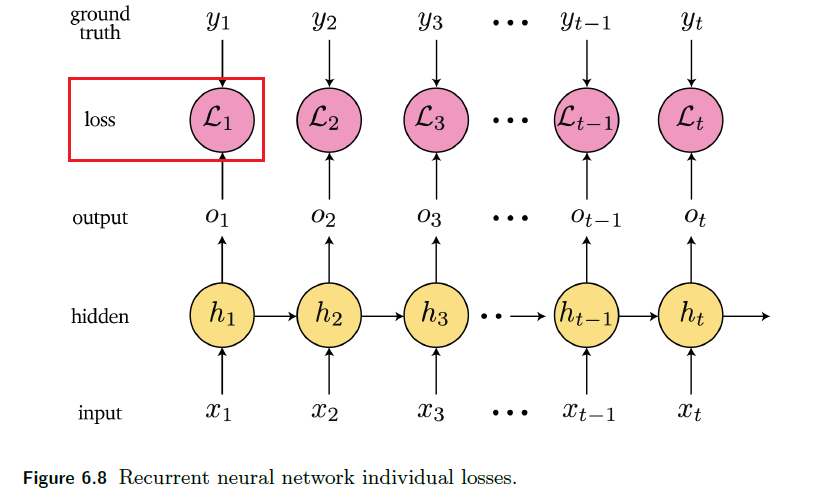

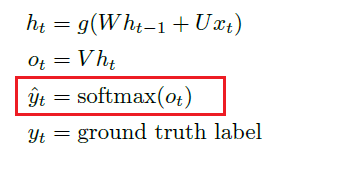

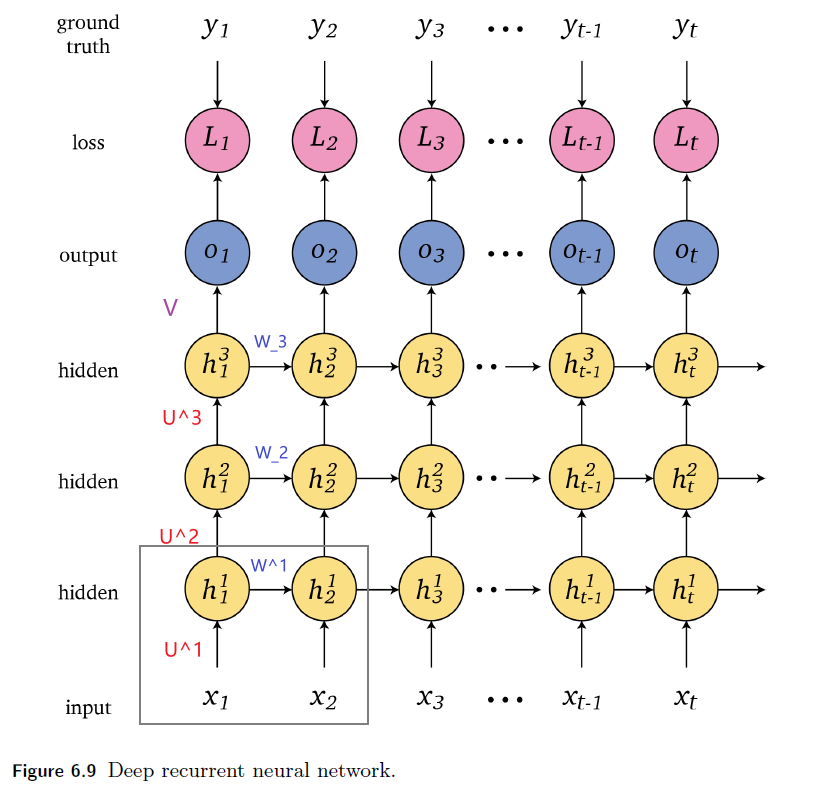

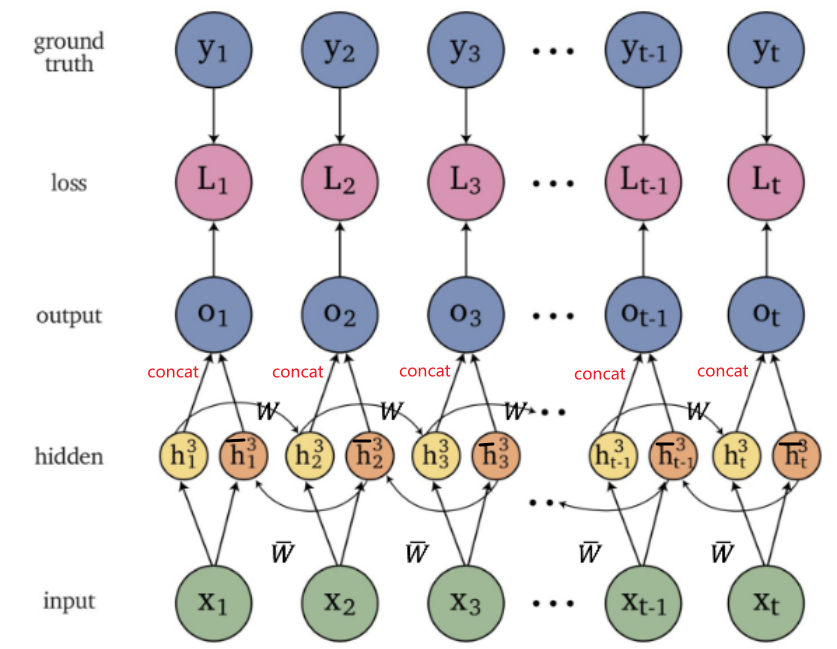

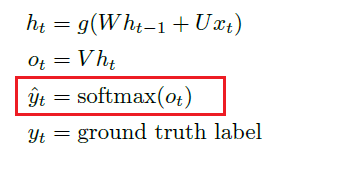

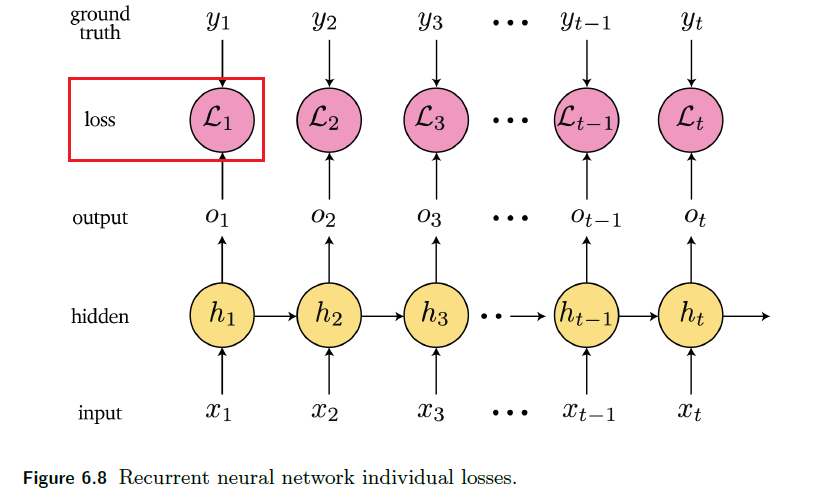

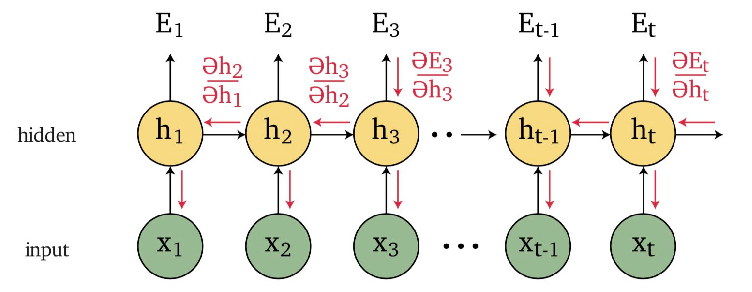

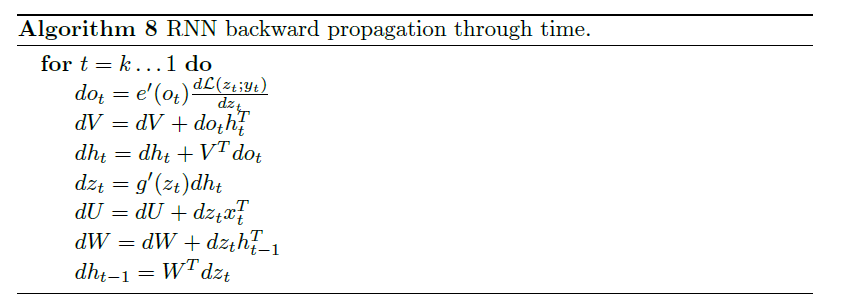

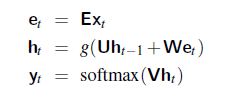

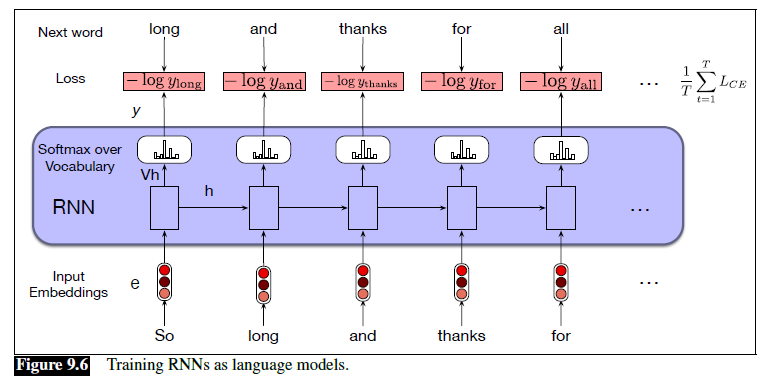

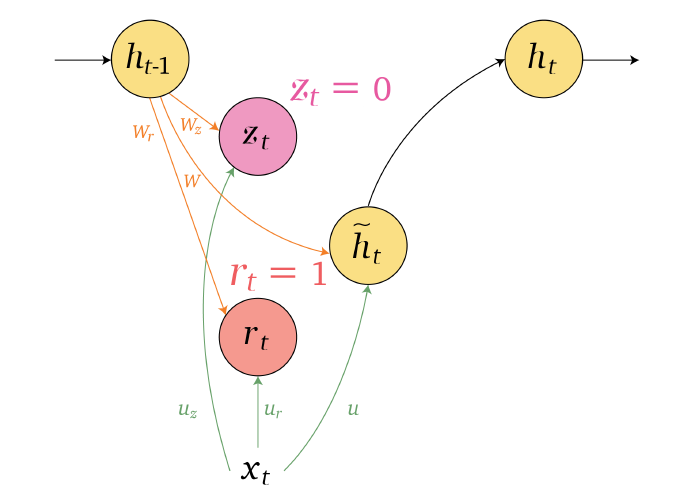

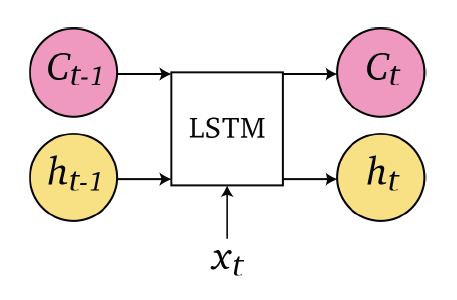

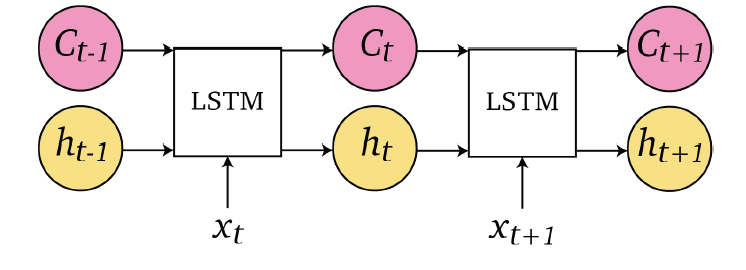

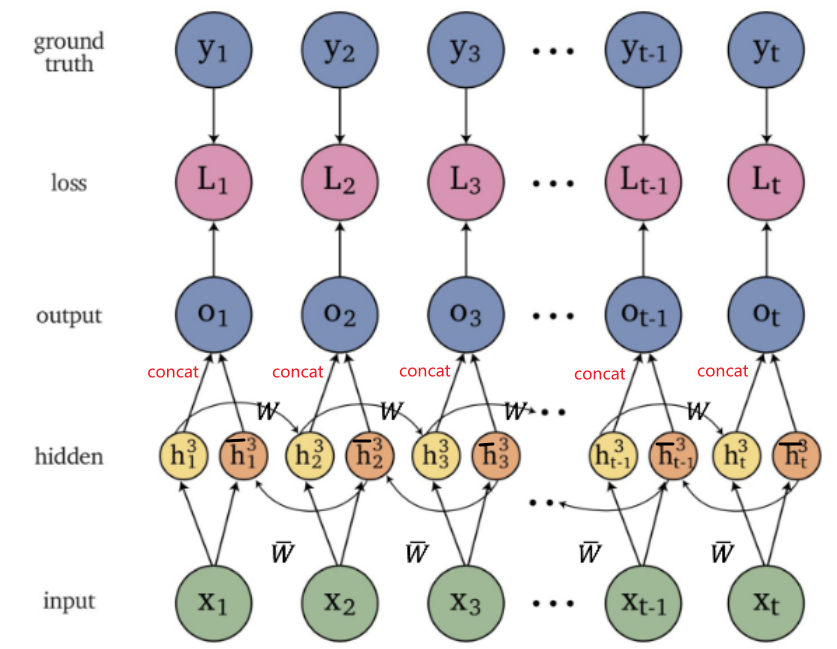

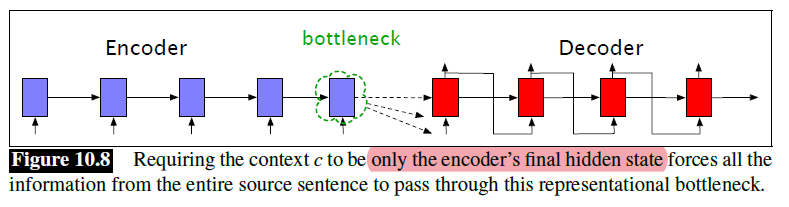

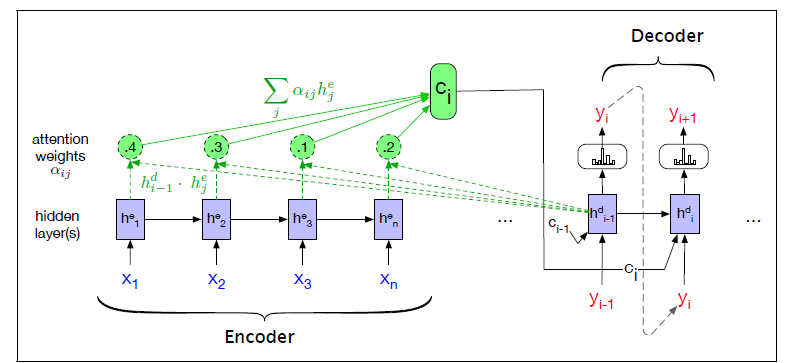

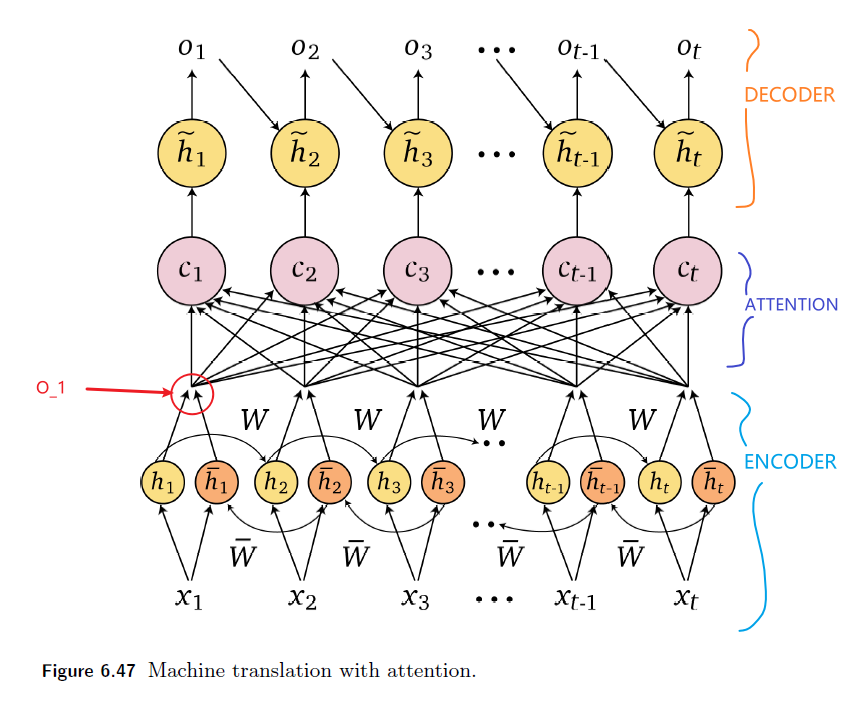

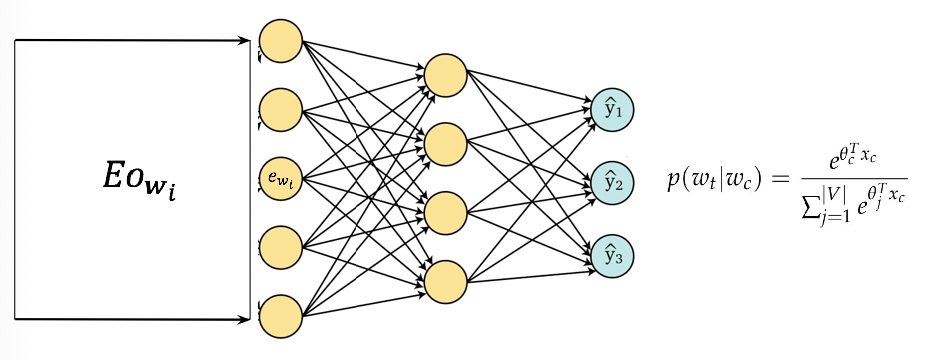

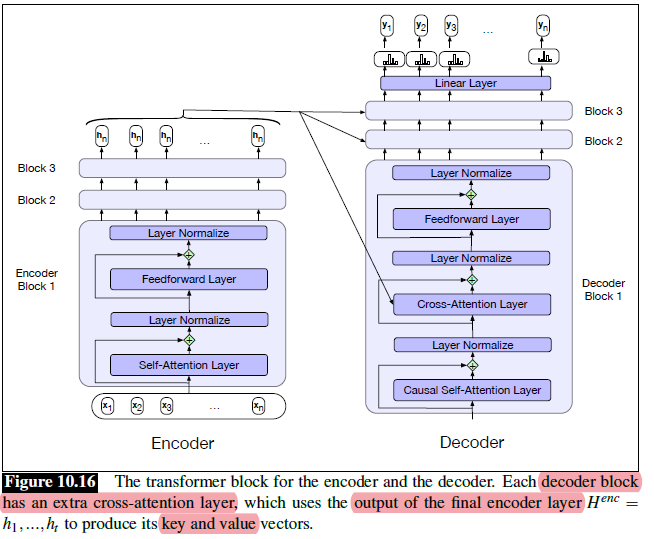

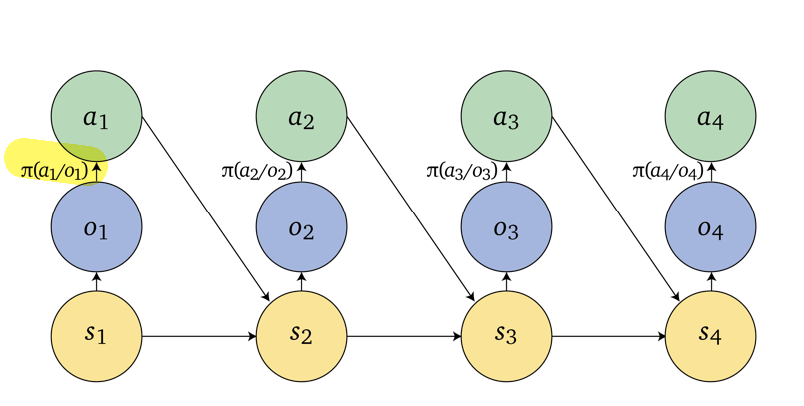

- Sequence models