COMS4118 Operating Systems

- Week 1 - Introduction

- Week 2

- Week 3 - 4

- Week 5

- Midterm Solution

- Week 6 - Process Scheduling

- Week 7 - Memory Management

- Week 8 - 10 - File System

- Virtual File System

- Disk and Disk I/O

- Data Organization on HDD

- Data Organization on SSD

- HDD and RAID

- File System Review

- Actual Linux Implementation

- Linux File System

- struct inode (in memory)

- struct file (in memory)

- struct superblock (in memory)

- struct dentry (in memory)

- struct task_struct (in memory)

- c libfs.c (generic)

- c init.c

- func do_mount()

- func legacy_get_tree()

- c fs/open.c

- func dcache_dir_open

- func dcache_readdir

- func d_alloc_name()

- snippet sub-dir iteration

- snippet iget_locked

- snippet create file/folder

- Linux Disk I/O

- Linux File System

- Week 11 - I/O System

- Useful Kernel Data Structures

- Useful Things to Know

Week 1 - Introduction

OS 1 Logistics

- Course Website

- includes TA OH sessions

- https://www.cs.columbia.edu/~nieh/teaching/w4118/

- Issues with the course

- Use the w4118 staff mailing list,

w4118@lists.cs.columbia.edu- e.g. regrade request

- Or email to Professor Jason personally

- Use the w4118 staff mailing list,

- Homework Assignments 50%

- Lowest will be dropped

- Submitted via

git, and by default latest submission counts (or, you can specify which submission)- instructions available on https://w4118.github.io/

- Codes can be written anywhere, but testing must be done in the VM machine of the course

- Using materials/code outside of your work needs a citation (in top-level

references.txt)- this includes discussions from fri.,ends

- except for materials in the textbooks

- see http://www.cs.columbia.edu/~nieh/teaching/w4118/homeworks/references.txt

- Details see http://www.cs.columbia.edu/~nieh/teaching/w4118/homeworks/

- Synchronous Exams 50%

- Midterm 20%

- Final 30%

Cores of the Course:

- How the underlying C APIs work

- Concurrency

- threading

- synchronization

- Programming in the Kernel

- build, compile, run your own OS

What is an OS

Heuristics: Are applications part of an OS? Is browser part of an OS?

Microsoft and Netscape. Microscope basically bundled the browser Netscape with their OS!

- the government accused Microsoft for taking a potential monopoly in software as well

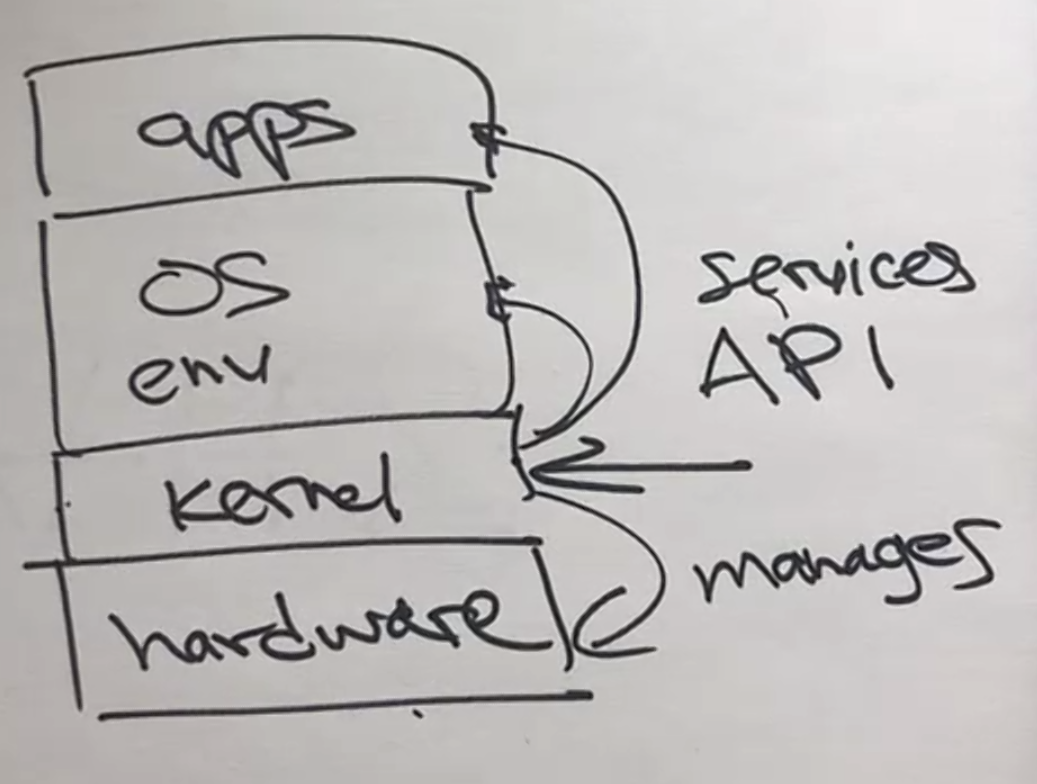

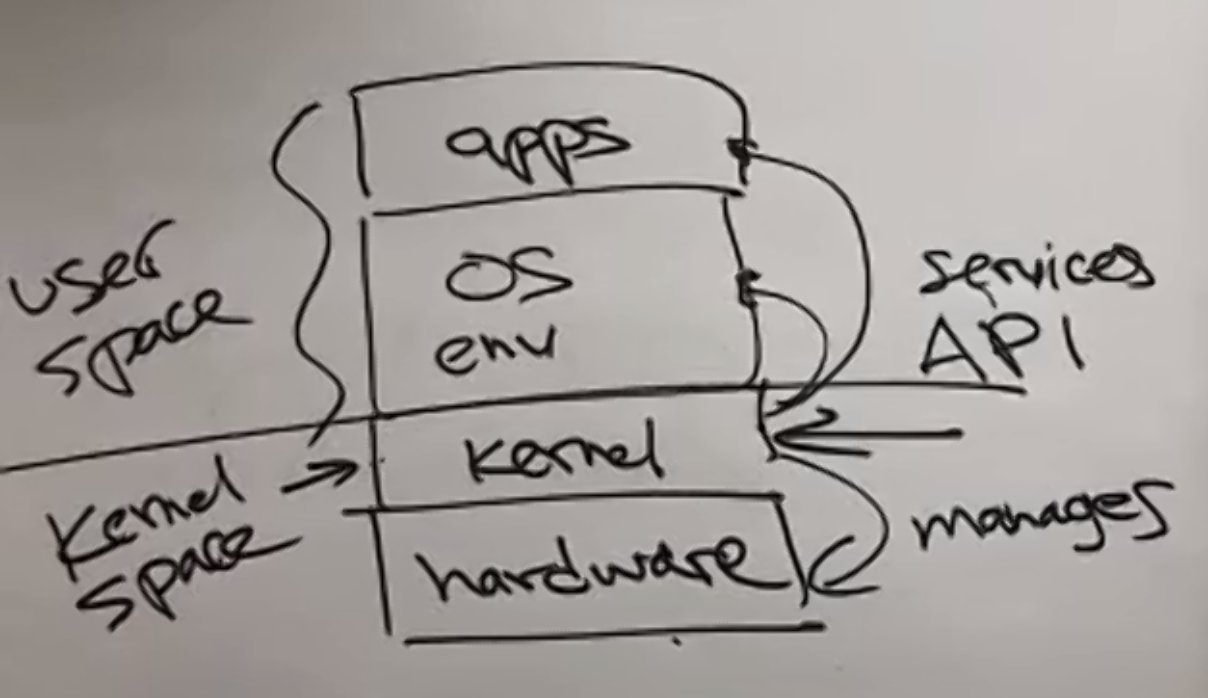

OS Kernel + OS environment

Kernel = Core part of an OS

where:

- A kernel provides APIs to its upper levels

Things we need an OS to do:

- manage the computer’s hardware

- provides a basis for application programs and acts as an intermediary between the computer user (and applications) and the computer hardware

- to do this, OS supply some API/System Calls so that users/applications can use them

- run a program (process)

Reminder:

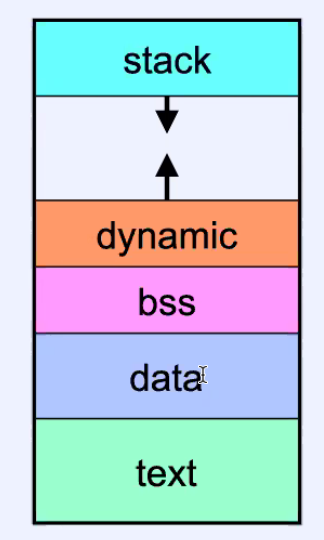

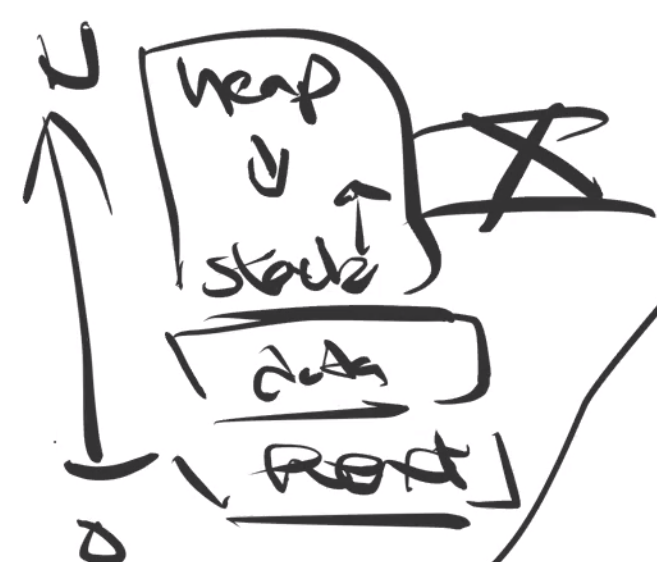

To run that process, the OS needs to manage the Unix Address Space

where:

- remember that your code stays in

text, static/global data indataandbss, and etc.

- the difference between

dataandbssis that one of them stores initialized data, one of them initialized data

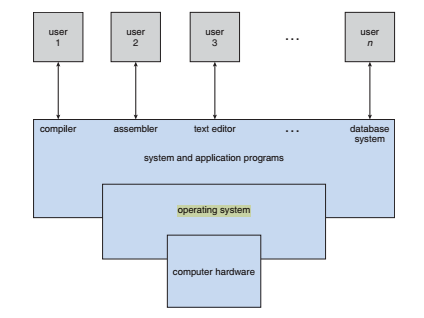

What OS Do

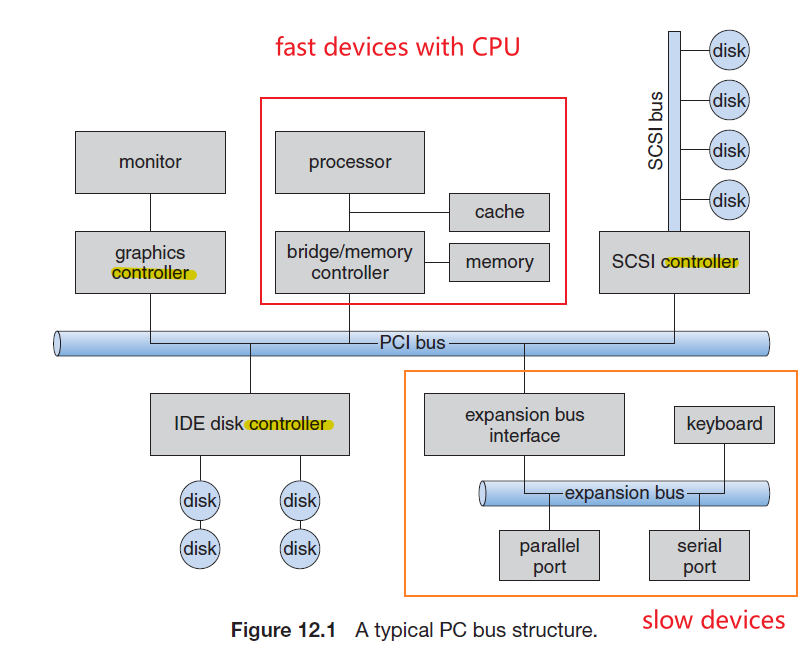

First, a computer system contains:

- A hardware

- CPU, memory, I/O devices

- An OS

- provides an environment within which other programs can do useful work

- depends on the need, OS can “act” completely differently (different implementation)

- for example, when you have a shared system, the OS will tend to maximize resource utilization— to assure that all available CPU time, memory, and I/O are used efficiently and that no individual user takes more than her fair share. (As compared to a personal laptop, which does not care about resource sharing)

- from the computer’s point of view, the operating system is the program most intimately involved with the hardware.

- In this context, we can view an operating system as a resource allocator.

- An application program

- word processors, spreadsheets

A small history of OS

- The fundamental goal of computer systems is to execute user programs and to make solving user problems easier. Computer hardware is constructed toward this goal.

- Since bare hardware alone is not particularly easy to use, application programs are developed. These programs require certain common operations, such as those controlling the I/O devices. The common functions of controlling and allocating resources are then brought together into one piece of software: the operating system.

However, we have no universally accepted definition of what is part of the operating system.

Yet a popular definition is: operating system is the one program running at all times on the computer—usually called the kernel.

- Along with the kernel, there are two other types of programs:

- system programs, which are associated with the operating system but are not necessarily part of the kernel, e.g.

- System Software maintain the system resources and give the path for application software to run. An important thing is that without system software, system can not run.

- application programs, which include all programs not associated with the operation of the system, e.g. a web browser

- system programs, which are associated with the operating system but are not necessarily part of the kernel, e.g.

where:

- usually when you execute commands in CLI such as

ls, they basically executes a system program (a file), which then in turn will execute some underlying system calls to perform the task

Kernel vs OS

- Sometimes, people refer to the OS as the entire environment of the computing system, including the kernel, system programs, and GUIs (possibly some native applications as well).

- Below shows the general structure of a UNIX system, in particular, what a kernel does and the structure of the entire layered environment

System Calls

Definition:

- A system call (commonly abbreviated to

syscall) is the programmatic way in which a computer program requests a service from the kernel of the operating system- Therefore, it is the sole interface between a user and a kernel

- it is also made to return integers

Here, you need to know that:

-

the difference between user space and kernel space is:

Definition: Errors in System Calls

- Since using the APIs depend on the programmer, OS puts error code in

errnovariable

- errors are typically indicated by returns of

-1

For Example: A System Call

write() is actually a system call of UNIX:

if(write(df, buffer, bufsize) == -1){

// error!

printf("error %d\n", errno); // for errno, see below

// perror

}

where:

- Many system calls and system-level functions use the

errnofacility. To deal with these calls, you will need to includeerrno.h, which will provide something that looks like a global integer variable callederrno.- There are a standard set of predefined constants corresponding to common error conditions (see

errno.hfor details).

- There are a standard set of predefined constants corresponding to common error conditions (see

Reminder:

- C does not have any standard exception signaling/handling mechanism, so errors are handled by normal function return values (or by side affecting some data).

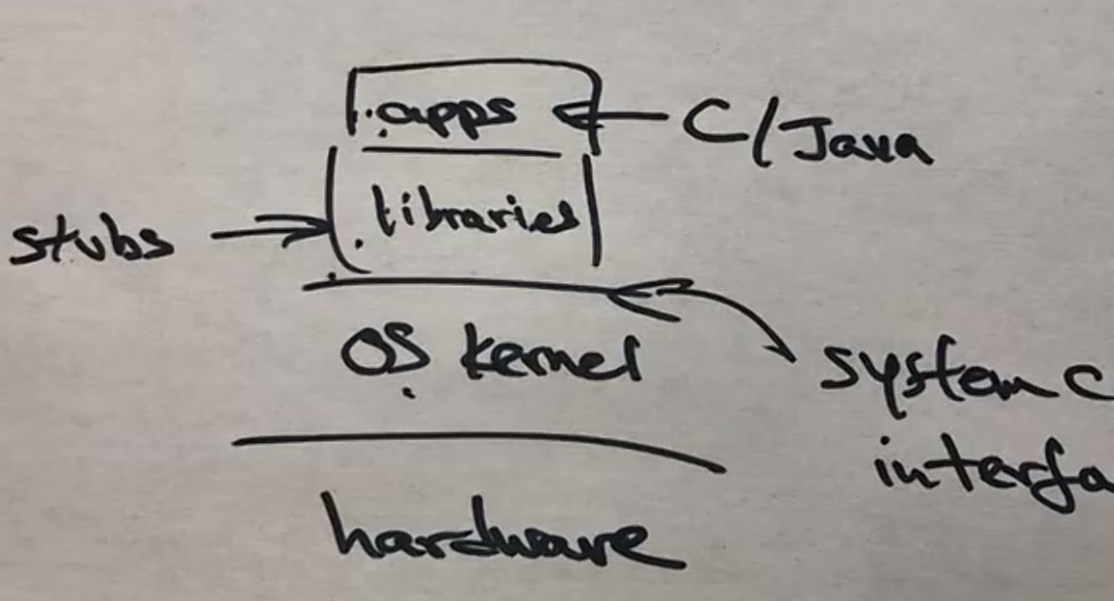

However, for most of the other cases, this is what happens:

where:

- the library does a little abstraction for making system calls easier

- for example, the

libclibrary - for example,

fread()andfwrite()are library functions. (They also do not return integers.)

- for example, the

Creating a Process

Heuristics:

- How does an OS create and run a process?

- First, we need to remember that a process has:

- an Unix Address Space (stack, heap, etc.) to keep track of what the program is doing

- a stack pointer to tell you where the stack is

- a program counter to tell you which line of code/instruction to run

- registers for storing data

- etc.

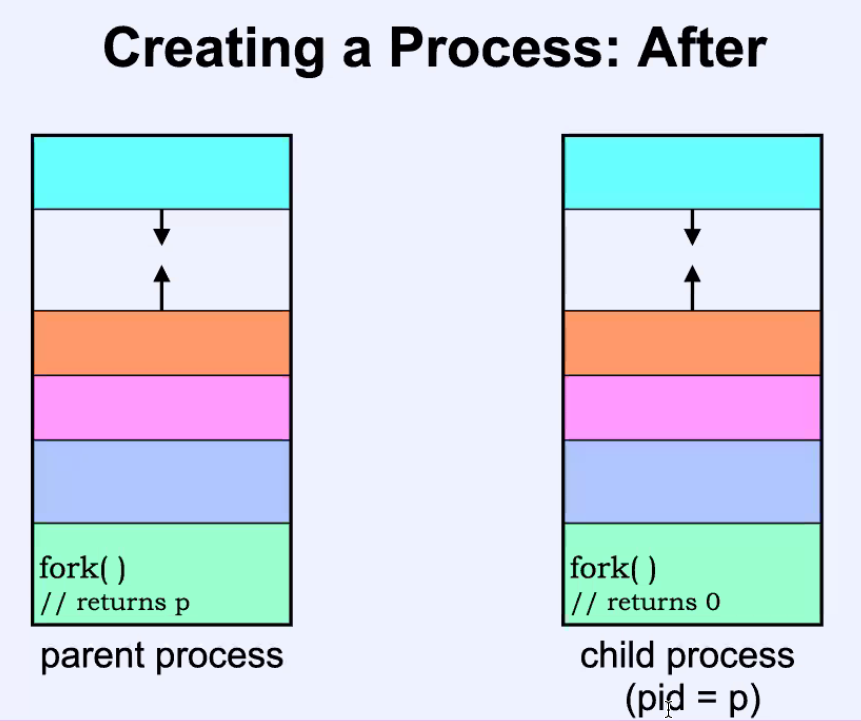

In fact, we know one C function (abstracted away by the OS) to create a process: fork()

Reminder:

- After calling

fork()during one of your process, it will create another process:

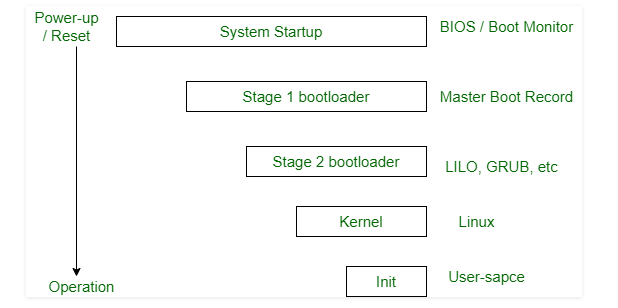

Booting an OS

In summary, the following happens:

- When a CPU receives a reset event—for instance, when it is powered up or rebooted—the instruction register is loaded with a predefined memory location, and execution starts there

- At that location is the initial bootstrap program. This program is in the form of read-only memory (ROM). (This means it is fixed code)

- The PC will be loaded with something called a BIOS (Basic Input/Output System.) On modern PCs, the CPU loads UEFI (Unified Extensible Firmware Interface) firmware instead.

- Then, that bootstrap program (e.g. BIOS) hand off to a boot device

- typically, it is on your hard disk/SSD. Traditionally, a BIOS looked at the MBR (master boot record), a special boot sector at the beginning of a disk. The MBR contains code that loads the rest of the operating system, known as a “bootloader.”

- basically, it will read a single block at a fixed location (say block zero) from disk (so the bootloader) into memory and execute the code from that boot block

- For large operating systems (including most general-purpose operating systems like Windows, Mac OS X, and UNIX) or for systems that change frequently, the operating system is on disk.

- boot device is configurable from within the UEFI or BIOS setup screen.

- so you can switch that to read from a USB stick

- typically, it is on your hard disk/SSD. Traditionally, a BIOS looked at the MBR (master boot record), a special boot sector at the beginning of a disk. The MBR contains code that loads the rest of the operating system, known as a “bootloader.”

- Then, depending on the choice, that boot block/bootloader (e.g. GRUB) can:

- contain the entire sophisticated code that load the entire OS into memory and begin execution

- contain a simple code that only knows where to find the more sophisticated bootstrap program on disk and also the length of that program.

- On Windows, the Windows Boot Manager (bootloader) finds and starts the Windows OS Loader. The OS loader loads essential hardware drivers that are required to run the kernel—the core part of the Windows operating system—and then launches the kernel.

- On Linux, the GRUB boot loader loads the Linux kernel.

- Now that the full bootstrap program has been loaded, and you can now work with it.

- On Linux, at this point, the kernel also starts the

initsystem—that’ssystemdon most modern Linux distributions

- On Linux, at this point, the kernel also starts the

The next step of

initis to start up various daemons that support networking and other services.

- X server daemon is one of the most important daemon. It manages display, keyboard, and mouse. When X server daemon is started you see a Graphical Interface and a login screen is displayed.

Questions

-

Question

“The boot block can contain a simple code that only knows where to find the more sophisticated bootstrap program and the length…”. Why do the more work of locating the other bootstrap program? Why can’t it just load the sophisticated boot strap program directly?

Is it there to allow users to potentially install and then choose from different OS?

Week 2

Using your Computer System

After booted, how do we use it?

- Explanation of what happens at boot up time is written in section Booting an OS

Some History:

Before OS comes up:

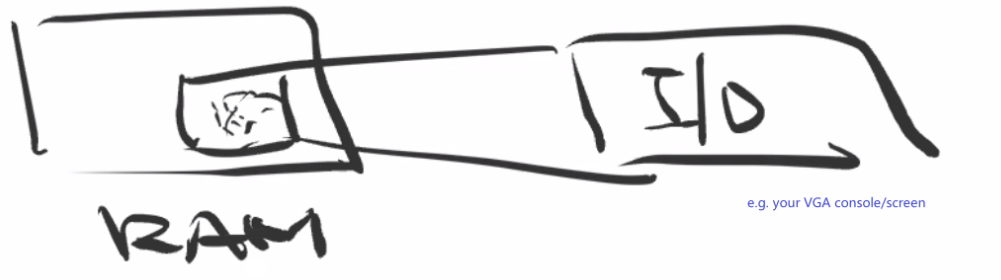

people uses assembly code to manipulate registers/RAM

commonly used ones include x86 with AT&T syntax:

mov $0xDEAD, %ax # to move the value 0xDEAD into register axthis means that to do I/O, you will need to use a Memory Mapped I/O, which basically maps addresses of an I/O device to your RAM, so that you have a way to directly read/write data into the I/O

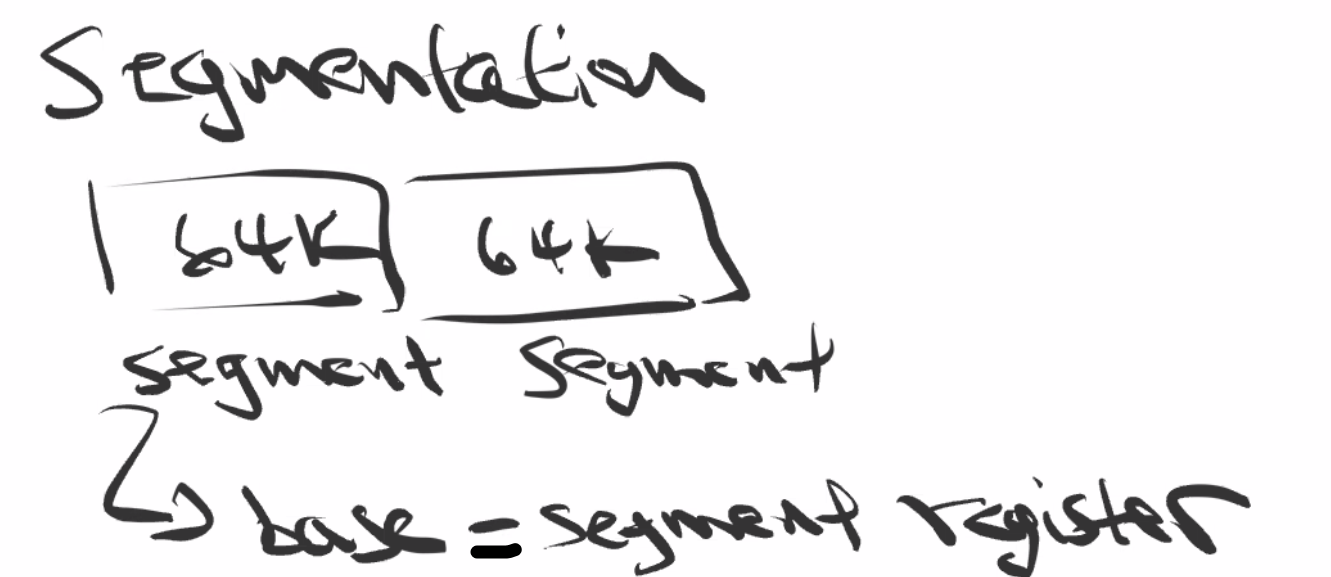

However, when the OS booted up, it is in 16-bit mode, so that you can only address up to

0xFFFFTherefore, to access addresses outside of that, we need to use segmentation:

So addresses are calculated with:

address = base * 0x10 + offsetthen to access address

0xf0ff0:mov $0xf000, %ax mov %ax, %ds # you cannot directly copy literals into ds register movb $1, (0xff0) # accesses 0xf0ff0

Now, when you have an OS

- All we needed to do is to use

OS System Calls- without all the difficulty with assembly code and stuff

- Can use the C library

- Can have multiple programs and run multiple processes

But in essence, it just becomes that the OS have the control all the hardware.

- instead of yourself being able to manipulate via the assembly code

- so the OS has to deal with:

- program interrupt timers

Interrupts

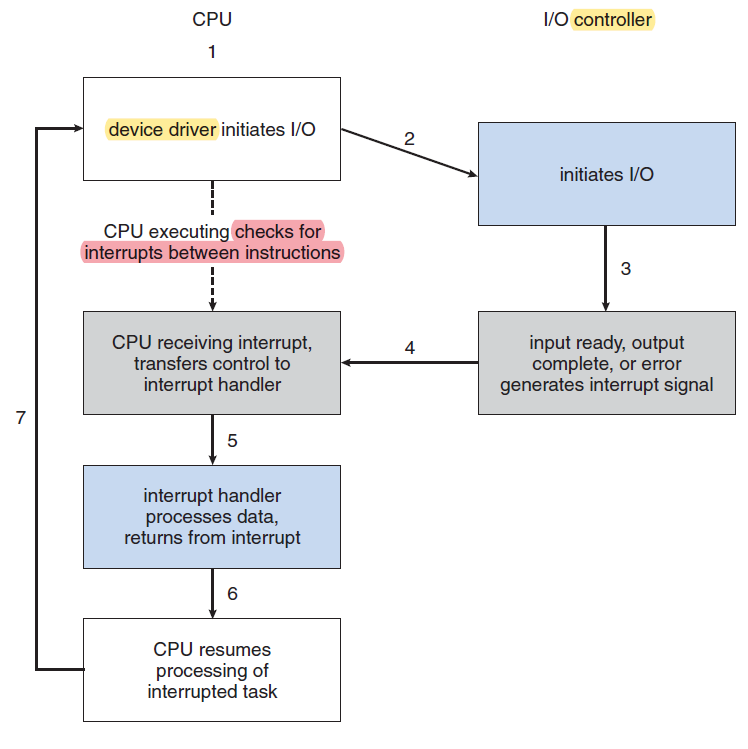

How does interrupt works?

In between your instructions, the CPU checks from the interrupt line, whether there has been an interrupt, and will handle that before proceeding.

where:

- attached to the CPU, you have an INTR(upt) line

- from that PIC, you can attach things such as:

- timer

- disk

- networks

- all of which that can generate interrupts

Interrupt vs Trap

- A trap is a software-generated interrupt.

- e.g. to call system calls

- Interrupts are hardware interrupts.

Typically, there is also an interrupt descriptor table (IDT) to that contains pointers to handlers to each type of interrupt

- so it has a bunch of functions, that can be used to handle different types of interrupts

- for example, when a time interrupt happened, the CPU checks the table, and looks at and goes to the address of the time interrupt handle (where some code will be there to handle time interrupts)

- those handlers will be OS code (managed by the OS)

- this IDT table is stored in the

idtregister- this will be setup by the OS at boot/load time

Note

- When your CPU has to deal with an interrupt, you need your CPU to push every register holding current data into the stack

- Then, when the CPU is done with the interrupts, it pops off from the stack, so that it can deal with last processed code

Exception

Exceptions happen within the instruction, as compared to interrupts between instructions

- for example, when you attempt to divide by 0, the CPU itself will throw an interrupt

CPUs tend to throw an exception interrupt (in between exceptions), on things like division by zero, or dereferencing a NULL pointer.

- These interrupts are trapped, like when hardware interrupts, halting execution of current program and return control to the OS, which then handles the event.

CPU Modes

This could be very useful to deal with constrain accesses/control security .

- user mode

- privileged mode (kernel)

When you are running user programs, your OS switches the CPU into user mode

When you are running necessary system calls, your OS switches the CPU into kernel mode

Some privileged instructions involve:

- writing the

idtregister - disable interrupts

- if disabled, it means interrupts still comes, but the CPU will not check them between instructions anymore

- switch into kernel/privileged mode

- etc, so that the OS can maintain control

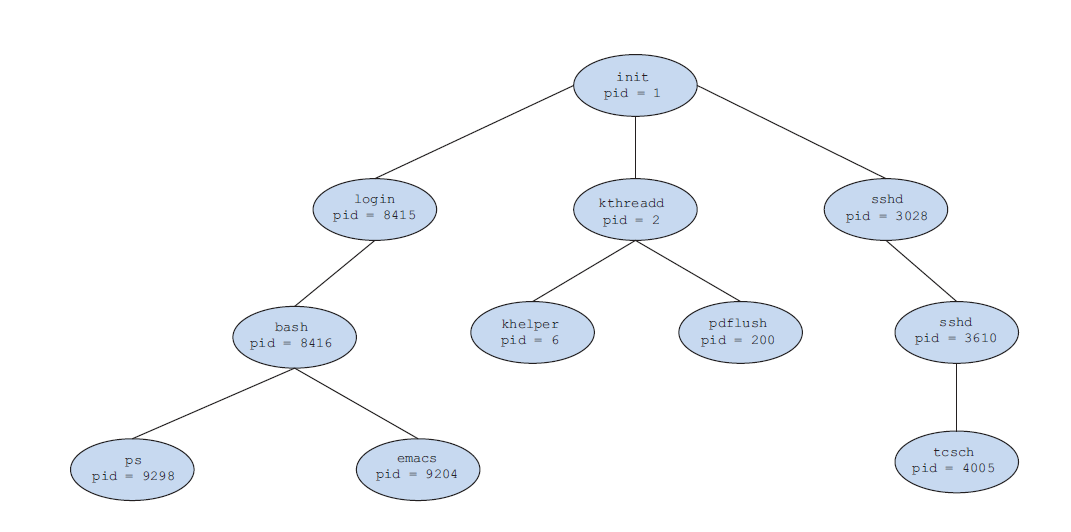

Init Process

- The

initprocess (referred in UNIX often) sets up everything, and is the first process - All the following processes are the children of this

initprocess

Week 3 - 4

Processes

Each process is represented in the operating system by a process control block (PCB)—also called a task control block:

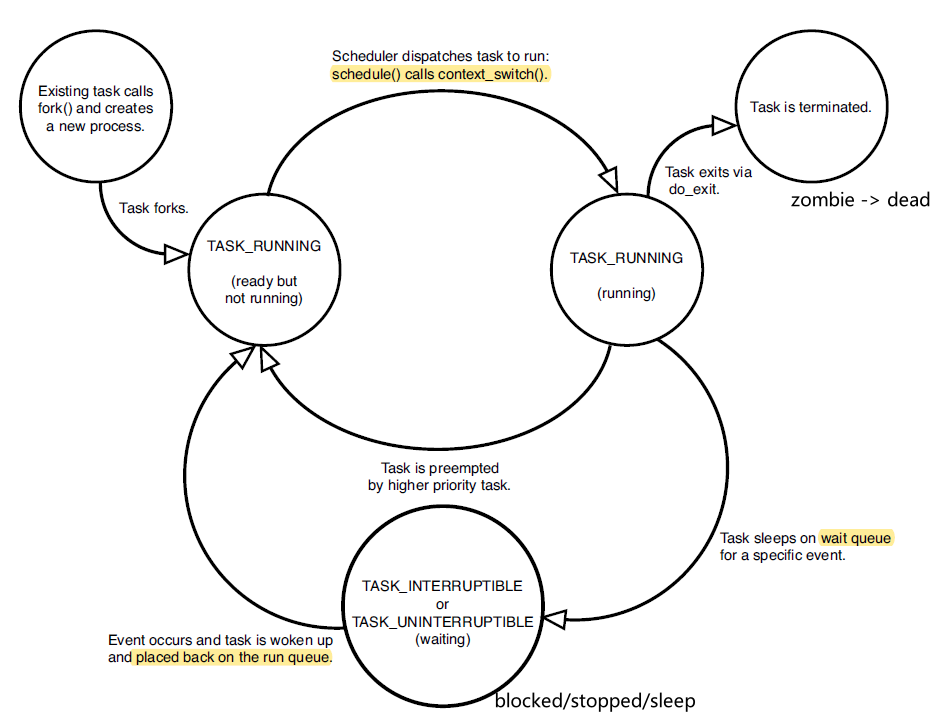

Life Cycle of a Process

-

it is created

- e.g.

fork()

- e.g.

-

it is queued in a ready queue

- process scheduling

- in the state diagram:

- runnable

-

the scheduler calls the process and now it is running

- in the state diagram

- running

- blocked means your program is waiting on something (e.g. User input, I/O), and not proceeding in execution

- wakes up means your process has got what it is waiting, and goes back in the queue

- in the state diagram

-

program terminates

- the program will not exit unless it has entered the running state

- this explains why, sometimes sending

Ctrl-Cwill not work, because the process has not yet went out from the blocked state

- this explains why, sometimes sending

- in the state diagram

- terminate

- for a process, first it becomes a zombie (parent has not called

wait()yet), then it is dead

- for a process, first it becomes a zombie (parent has not called

- terminate

- the program will not exit unless it has entered the running state

In Linux, this is how the state is recorded

#define TASK_RUNNING 0x0000 /* the obvious one */ #define TASK_INTERRUPTIBLE 0x0001 /* e.g. the Ctrl-C comes, OS interrupts/switches it to runnable */ #define TASK_UNINTERRUPTIBLE 0x0002 /* e.g. the Ctrl-C comes, it is still blocked */ #define __TASK_STOPPED 0x0004 #define __TASK_TRACED 0x0008 /* Used in tsk->exit_state: */ #define EXIT_DEAD 0x0010 /* Process exited/dead AND it has been wait() on by its parent */ #define EXIT_ZOMBIE 0x0020 /* Process exited/dead, but the task struct is still in the OS */ #define EXIT_TRACE (EXIT_ZOMBIE | EXIT_DEAD) /* Used in tsk->state again: */ #define TASK_PARKED 0x0040 #define TASK_DEAD 0x0080 /* First state when Processes is killed FROM running. */ /* Afterwards, it goes to EXIT_ZOMBIE */ #define TASK_WAKEKILL 0x0100 #define TASK_WAKING 0x0200 #define TASK_NOLOAD 0x0400 #define TASK_NEW 0x0800 #define TASK_STATE_MAX 0x1000

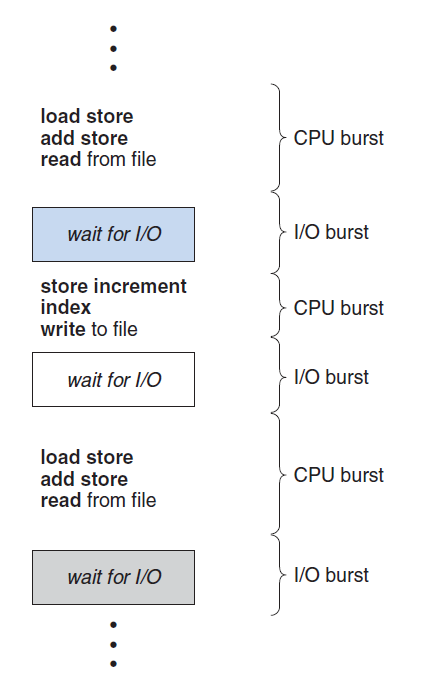

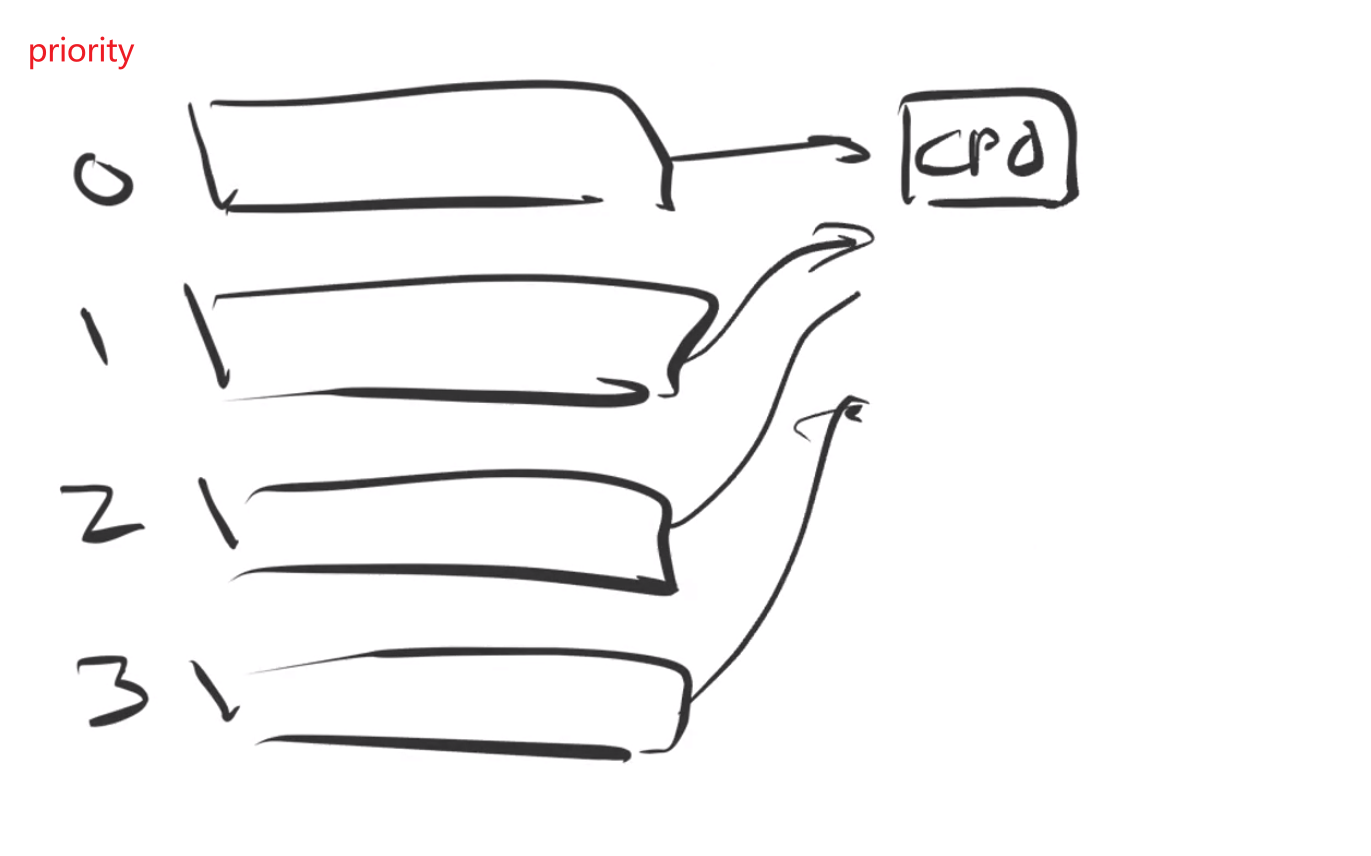

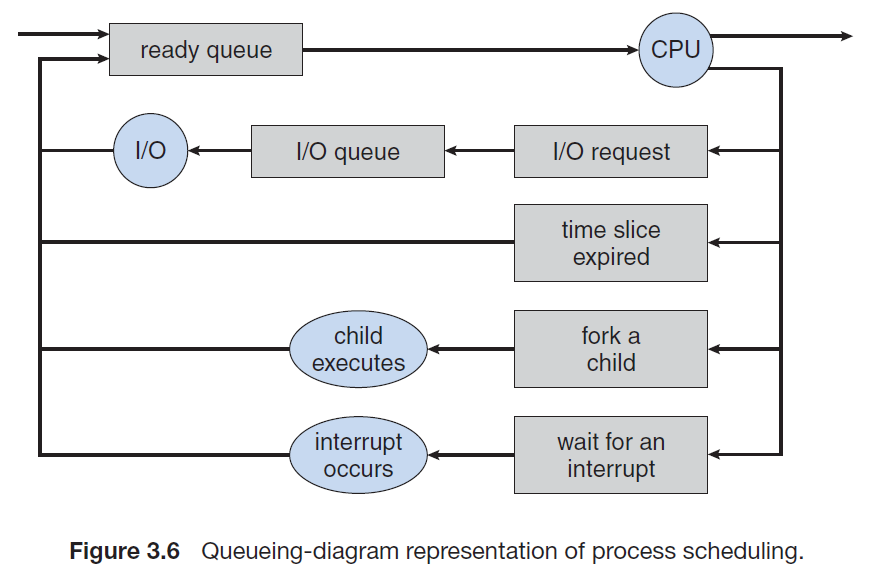

Process Scheduling

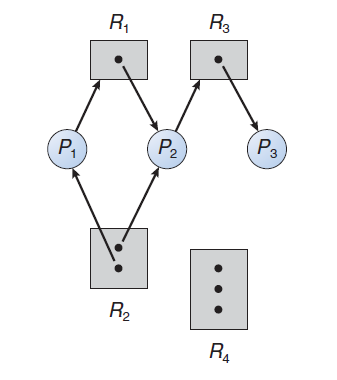

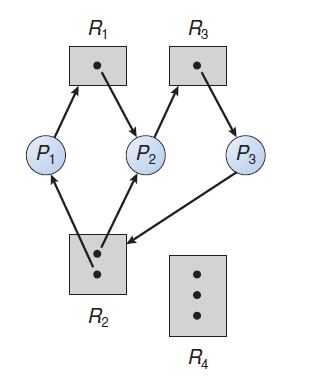

where:

- Each rectangular box represents a queue.

- Two types of queues are present: the ready queue and a set of device queues.

- The circles represent the resources that serve the queues, and the arrows indicate the flow of processes in the system.

A new process is initially put in the ready queue. It waits there until it is selected for execution, or dispatched. Once the process is allocated the CPU and is executing, one of several events could occur:

- The process could issue an I/O request and then be placed in an I/O queue.

- The process could create a new child process and wait for the child’s termination.

- The process could be removed forcibly from the CPU, as a result of an interrupt, and be put back in the ready queue.

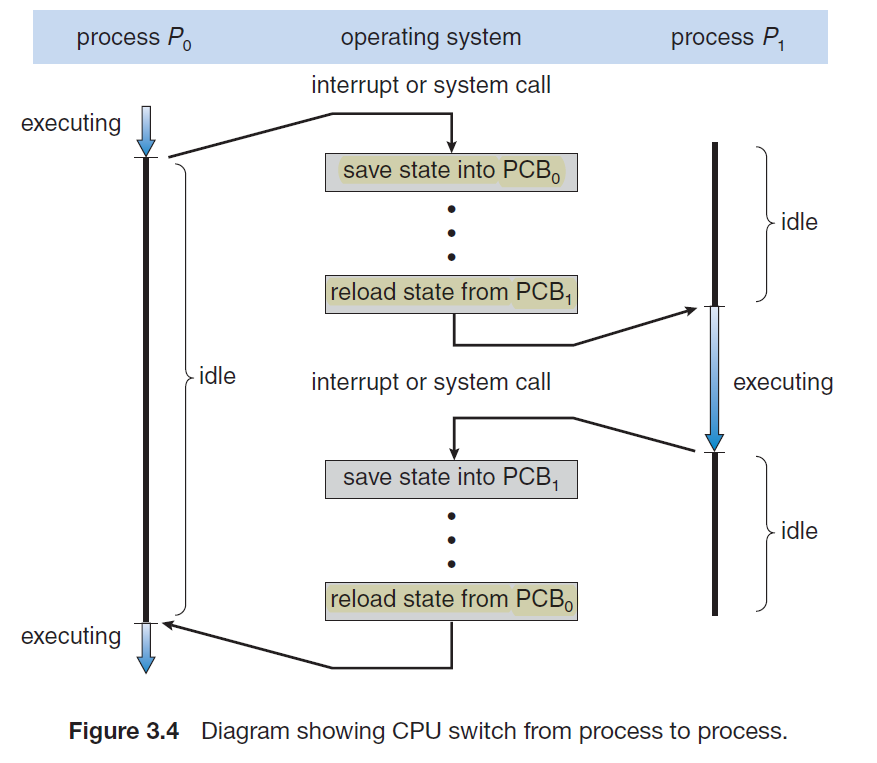

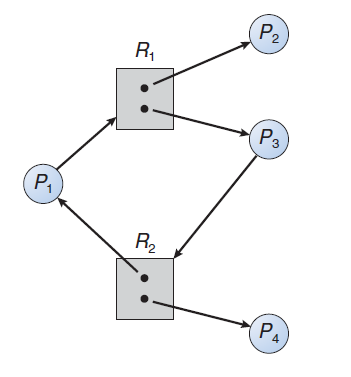

Context Switch

This basically refers to the phenomenon that the OS scheduler decides to switch the running current process to running another one (e.g. due to time being used up).

For Example:

- A user process

Phas used up the time it has for execution - Time Interrupt sent to kernel

- Kernel

scheduleanother processQto run. In fact, this is what happens:- process

Pchanges its state to, for example,Running -> Runnable - process

Pthen callsscheduleto schedule another process to run

- process

- So at this point, a context switch happened

- you need to store the state of process

P

- you need to store the state of process

Process Creation

The init process (which always has a pid of 1) serves as the root parent process for all user processes.

Once the system has booted, the init process can also create various user processes, such as a web or print server, an ssh server, and the like

When a process creates a new process, two possibilities for execution exist:

- The parent continues to execute concurrently with its children.

- The parent waits until some or all of its children have terminated.

There are also two address-space possibilities for the new process:

- The child process is a duplicate of the parent process (it has the same program and data as the parent).

- The child process has a new program loaded into it.

Process Termination

A process terminates when it finishes executing its final statement and asks the operating system to delete it by using the exit() system call.

- At that point, the process may return a status value (typically an integer) to its parent process (via the

wait()system call). All the resources of the process—including physical and virtual memory, open files, and I/O buffers—are deallocated by the operating system.

Zombie Process

When a process terminates, its resources are deallocated by the operating system. However, its entry in the process table must remain there until the parent calls

wait(), because the process table contains the process’s exit status.So, a process that has terminated, but whose parent has not yet called

wait(), is known as a zombie process.

- All processes transition to this state when they terminate, but generally they exist as zombies only briefly. Once the parent calls

wait(), the process identifier of the zombie process and its entry in the process table are released.

Orphaned Process

Now consider what would happen if a parent did not invoke

wait()and instead terminated, thereby leaving its child processes as orphans.Linux and UNIX address this scenario by assigning the

initprocess as the new parent

- The

initprocess periodically invokeswait(), thereby allowing the exit status of any orphaned process to be collected and releasing the orphan’s process identifier and process-table entry.

Process Communication

Processes have its own address space. What if we need to share something between two processes?

Inter-process communication (IPC), can be done in two ways:

- message passing

- e.g. signals

- shared memory

Message Passing

Simple Message Passing

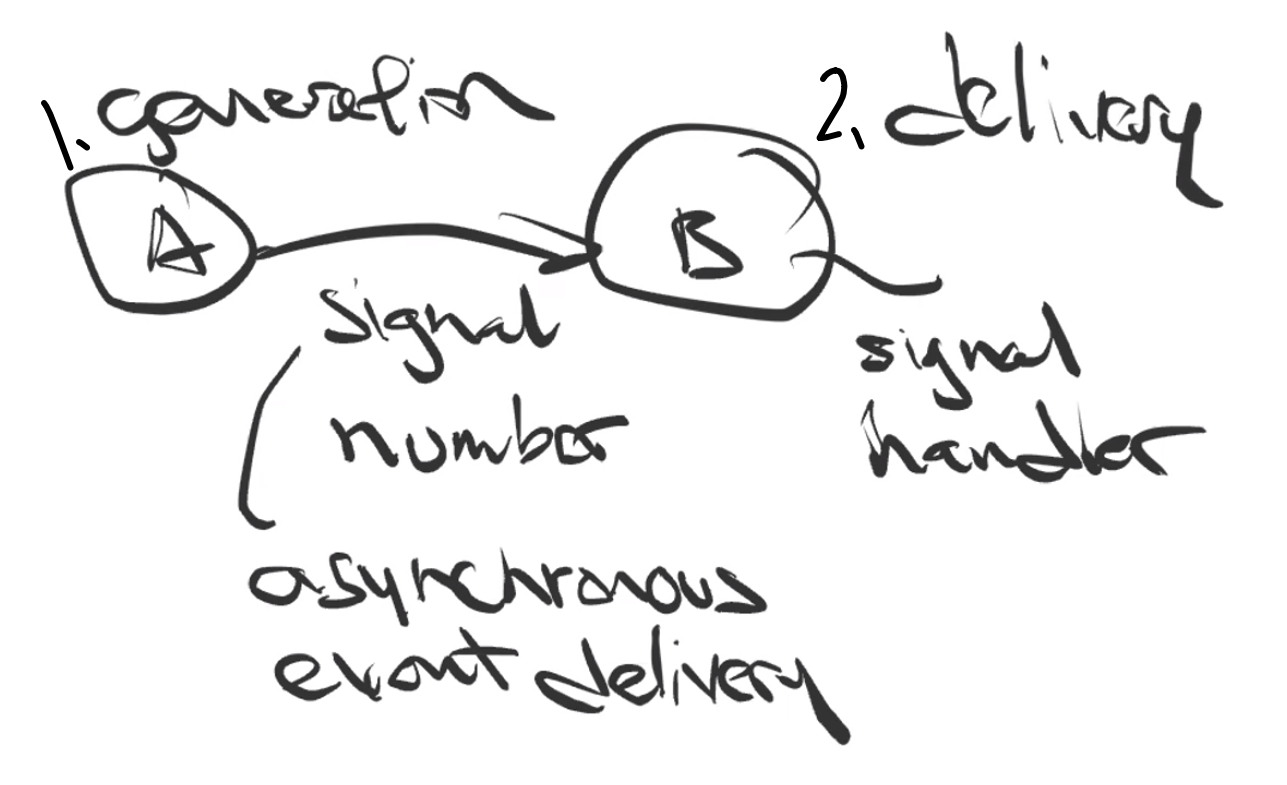

This is

where:

signal number, a number indicating some status, such asAhas done its work, and it needsBto proceed

- has nothing to do with Interrupts

singal handler, so that the other process knows what to do with the signal

- obviously,

Bcannot handle the signal untilBruns- e.g.

SIGINT, aims to kill a process, but you could change its implementation in thesignal handler. This is generated byCtrl-C

SIGKILL(forcibly kills the process, does not allow implementation insignal handler)

Under the hood, this is how it is implemented. Consider the SIGKILL signal is received by a process:

-

inside

kernel/signal.c-

it has

static int kill_something_info -

in the end, the OS does:

static int send_signal(int sig, struct kernel_siginfo *info, struct task_struct *t, enum pid_type type) { /* Should SIGKILL or SIGSTOP be received by a pid namespace init? */ bool force = false; /* bunch of stuff omitted */ return __send_signal(sig, info, t, type, force); }

-

-

In the end, there is a

sigaddset(&pending->signal, sig);, which adds a signal to the receiving process- the

pendingis basically a field of the task struct, which is a bit map indicating the current signals- in the end,

pendingis asigset_twhich looks likeunsigned long sig[_NSIG_WORDS]

- in the end,

- the

-

After setting the signal, the function in

static int kill_something_infocallscomplete_signal()- this essentially calls

signal_wake_up(), which will wake up the receiving process

- this essentially calls

-

Lastly, the receiving process eventually runs, which means that the OS wants to return back to User mode and run the code of that program.

-

now, during that process, the OS makes the process to be

RUNNING, and the OS does something like (might not be entirely correct):-

prepare_exit_to_usermode(), which calls -

exit_to_usermode_loop, which contains the following:static void exit_to_usermode_loop(struct pt_regs *regs, u32 cached_flags) { while (true) { /* Some code omitted here */ /* deal with pending signal delivery!! */ if (cached_flags & _TIF_SIGPENDING) do_signal(regs); /* other code omitted here */ } } - inside

do_signal, first we callbool get_signal(struct ksignal *ksig) - if you have

SIGKILL, then it will go intodo_group_exit() - then it does

void __noreturn do_exit(long code). This is also what theexit()system call does in the end- in addition to clean ups and exiting, it also sets the process

tsk->exit_state = EXIT_ZOMBIE; - then there is the ` do_task_dead(void)

, which calls__schedule(false);` to tell the OS to schedule another task to do some work, since this task is dead

- in addition to clean ups and exiting, it also sets the process

-

-

Note:

- In step 4 of the above, we see that if the process is still blocked and cannot get back to

RUNNABLE->RUNNING, then the process cannot process its signal, hence pressingCtrl-Cwill not exit it.

Lastly, how does the OS implement the wait() functionality for zombies?

- under the cover, it uses the

wait4()function inkernel/exit.c- this calls

kernel_wait_4, which eventually callsdo_wait() - which calls

do_wait_thread() - which calls

wait_consider_task() - if the task has exit state being a

Zombie, then it callswait_task_zombie()- this changes the state to

EXIT_DEAD - then it calls

release_task(). At this state, everything is cleaned up for the task and it is gone

- this changes the state to

- this calls

Shared Memory

In short, there APIs for a program to share memory, but it is difficult to use

- as a result, a solution is to use threads, which has the default behavior of shared heap/data

- therefore, it is much more easier to use threads for shared memory

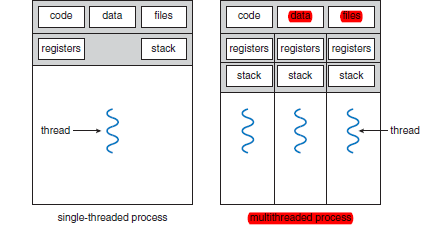

Threads and Cores

Cores

- each core appears as a separate processor to the OS

- so with 4-cores, you can execute 4-threads in parallel

- with 1-core, you can execute 4-threads concurrently (by interleaving)

Threads

-

a thread is a part of a process, and is like a virtual CPU for the programmer

-

a thread provides better code/data/file sharing between other threads, which is much more efficient than the message passing/memory sharing technique between processes

A Thread is:

- basically an independent thread of execution, so that you can execute stuff

A Thread contains:

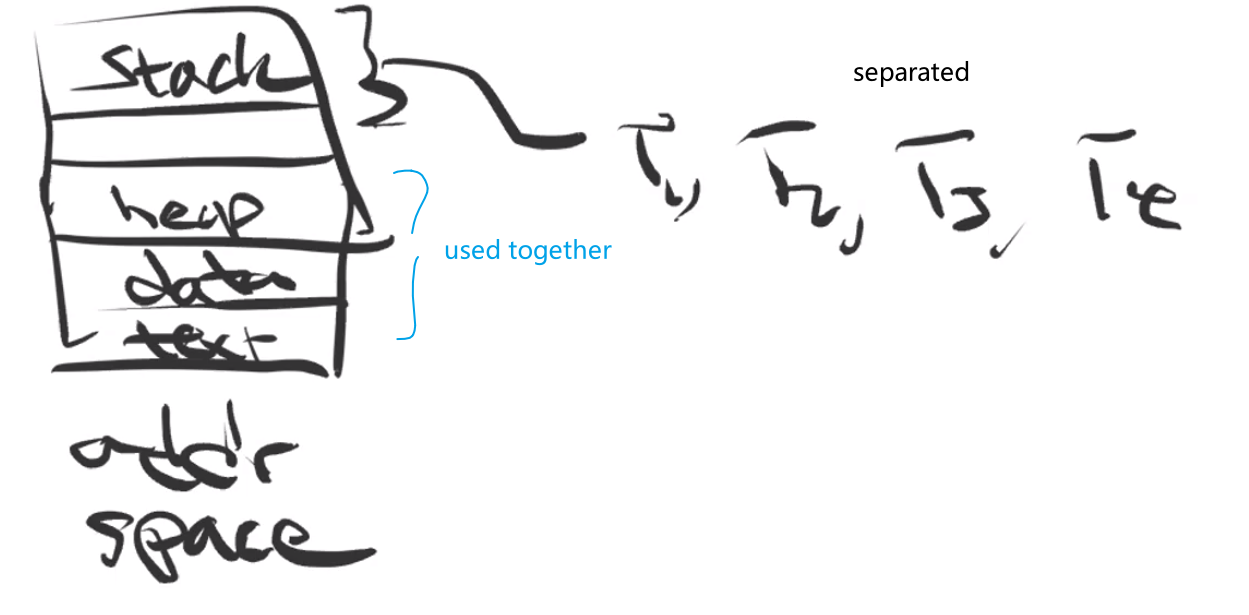

CPU Registers such as

PC,SP,GPR, etc (separated)Stack (separated)

Other Memories (e.g. heap) is shared

- so you basically have the same address space

where:

- in the end there is just one address space for those threads

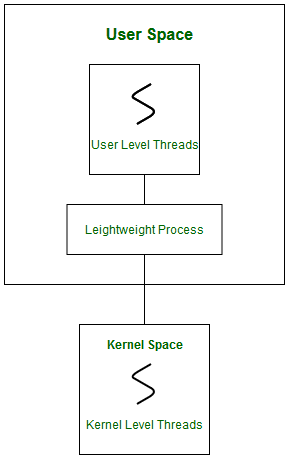

Multithreading Models

In real life, the threading happens as follows/is implemented as follows:

- user thread - the user controllable/programmable thread.

- The kernel does NOT know about them. This means they are by default belonging to a single process.

- this means for multithreading to actually occur, you need to create/manage copies of the processes

- kernel thread - managed by the OS, hence manages user threads

- your OS knows about them, so if one thread gets a

SEGFAULTfor example, then only that thread will die, but not other kernel threads - if your OS has kernel threads, then this could lead a user thread maps to a kernel thread for actual execution.

- your OS knows about them, so if one thread gets a

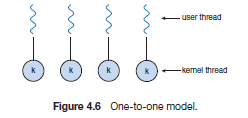

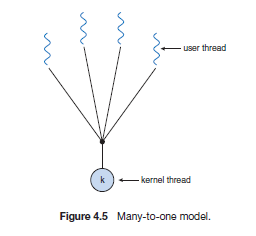

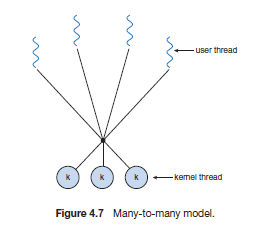

For mapping user thread to kernel thread, there are the following possibilities:

-

one to one model

currently used by many OS.

- Disadvantage: creating a user thread means also needing to create a kernel thread, which is some overhead

-

many to one model

- Disadvantage: no parallelism, only concurrency. Also, if one thread invoked a blocking system call, all the rest of the threads are blocked as well.

-

many to many model

Linux Implementation of Threads

In Linux, a thread is represented as the same as a process:

-

it is

struct task_struct -

the only difference would be the

memory spaceof atask_struct, which would be shared for threads -

a common function to use would be

clone()-

using

CLONE_VMflag, then threads will share their memory/address space -

using

CLONE_THREADflag, then threads are grouped together, hence associated to a single process -

for example:

#define CSIGNAL 0x000000ff /* signal mask to be sent at exit */ #define CLONE_VM 0x00000100 /* set if VM shared between processes */ #define CLONE_FS 0x00000200 /* set if fs info shared between processes */ #define CLONE_FILES 0x00000400 /* set if open files shared between processes */ #define CLONE_SIGHAND 0x00000800 /* set if signal handlers and blocked signals shared */ /* etc. */

-

struct task_struct {

/* stuff omitted */

struct mm_struct *mm; /* address apce */

struct mm_struct *active_mm;

/* stuff omitted */

}

Forking Implementation

Forking

- The behavior for a thread of a process to

fork()is defined as follows:

- it will copy the thread itself, and allocating a separate memory address

What happens exactly is:

-

A thread calls

__do_fork(), which callscopy_process()- remember that a thread and a process to Linux are the same, i.e.

struct task_struct

- remember that a thread and a process to Linux are the same, i.e.

-

inside the

copy_process(), it- creates/allocates a new

task_struct - initializes some fields

- copies information from the previous process, such as

copy_mm()

- creates/allocates a new

-

for example, inside

copy_mm(), if we have setCLONE_VM:-

static int copy_mm(unsigned long clone_flags, struct task_struct *tsk){ /* bunch of codes omitted */ if (clone_flags & CLONE_VM) { mmget(oldmm); mm = oldmm; /* shared address space */ goto good_mm; } /* otherwise, a separate address space */ mm = dup_mm(tsk, current->mm); } -

additionally. with

CLONE_THREAD:if (clone_flags & CLONE_THREAD) { p->exit_signal = -1; /* adds the group leader */ p->group_leader = current->group_leader; p->tgid = current->tgid; } else { if (clone_flags & CLONE_PARENT) p->exit_signal = current->group_leader->exit_signal; else p->exit_signal = args->exit_signal; p->group_leader = p; p->tgid = p->pid; }

-

Group Leader

- By default, each thread/process is its own group leader

- in the end, added to a new

thread_groupof the group leader being itself- e.g. if you

fork(), you are creating a new process/thread, so the new guy will be its own group leader- If you

clone()and configuredCLONE_THREAD, then the new guy will have the calling thread’s group leader as the group leader

- in the end, added to the existing

thread_group- this also populates

siblingandreal_parentand etc.

Threading APIs

Some threading APIs are implemented on the kernel level (results directly in system calls), whereas some are implemented on the user level.

For example, consider the task of calculating:

\[\sum_{i=1}^n i\]- POSIX Pthread

#include <pthread.h>

#include <stdio.h>

int sum; /* this data is shared by the thread(s) */

void *runner(void *param); /* threads call this function */

int main(int argc, char *argv[])

{

pthread t tid; /* the thread identifier */

pthread attr t attr; /* set of thread attributes */

if (argc != 2) {

fprintf(stderr,"usage: a.out <integer value>\n");

return -1;

}

if (atoi(argv[1]) < 0) {

fprintf(stderr,"%d must be >= 0\n",atoi(argv[1]));

return -1;

}

/* get the default attributes */

pthread attr init(&attr);

/* create the thread */

pthread create(&tid,&attr,runner,argv[1]);

/* wait for the thread to exit */

pthread join(tid,NULL);

printf("sum = %d\n",sum);

}

/* The thread will begin control in this function */

void *runner(void *param)

{

int i, upper = atoi(param);

sum = 0;

for (i = 1; i <= upper; i++)

sum += i;

pthread exit(0);

}

Note:

to join multiple threads, use a

forloop:#define NUM THREADS 10 /* an array of threads to be joined upon */ pthread t workers[NUM THREADS]; for (int i = 0; i < NUM THREADS; i++) pthread join(workers[i], NULL);

- Windows Threads

#include <windows.h>

#include <stdio.h>

DWORD Sum; /* data is shared by the thread(s) */

/* the thread runs in this separate function */

DWORD WINAPI Summation(LPVOID Param)

{

DWORD Upper = *(DWORD*)Param;

for (DWORD i = 0; i <= Upper; i++)

Sum += i;

return 0;

}

int main(int argc, char *argv[])

{

DWORD ThreadId;

HANDLE ThreadHandle;

int Param;

if (argc != 2) {

fprintf(stderr,"An integer parameter is required\n");

return -1;

}

Param = atoi(argv[1]);

if (Param < 0) {

fprintf(stderr,"An integer >= 0 is required\n");

return -1;

}

/* create the thread */

ThreadHandle = CreateThread(

NULL, /* default security attributes */

0, /* default stack size */

Summation, /* thread function */

&Param, /* parameter to thread function */

0, /* default creation flags */

&ThreadId); /* returns the thread identifier */

if (ThreadHandle != NULL) {

/* now wait for the thread to finish */

WaitForSingleObject(ThreadHandle,INFINITE);

/* close the thread handle */

CloseHandle(ThreadHandle);

printf("sum = %d\n",Sum);

}

}

-

Java Threading

Since Java does not have a global variable, it uses objects in Heap to share data (you need to pass the object into the thread)

class Sum

{

private int sum;

public int getSum() {

return sum;

}

public void setSum(int sum) {

this.sum = sum;

}

}

class Summation implements Runnable

{

private int upper;

private Sum sumValue;

public Summation(int upper, Sum sumValue) {

this.upper = upper;

this.sumValue = sumValue;

}

public void run() {

int sum = 0;

for (int i = 0; i <= upper; i++)

sum += i;

sumValue.setSum(sum);

}

}

public class Driver

{

public static void main(String[] args) {

if (args.length > 0) {

if (Integer.parseInt(args[0]) < 0)

System.err.println(args[0] + " must be >= 0.");

else {

Sum sumObject = new Sum();

int upper = Integer.parseInt(args[0]);

Thread thrd = new Thread(new Summation(upper, sumObject));

thrd.start();

try {

thrd.join();

System.out.println

("The sum of "+upper+" is "+sumObject.getSum());

} catch (InterruptedException ie) { }

}

}

else

System.err.println("Usage: Summation <integer value>"); }

}

Note

There are two techniques for creating threads in a Java program.

One approach is to create a new class that is derived from the

Threadclass and to override itsrun()method.An alternative —and more commonly used— technique is to define a class that implements the

Runnableinterface. TheRunnableinterface is defined as follows:public interface Runnable { public abstract void run(); }

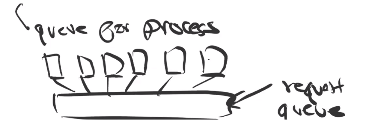

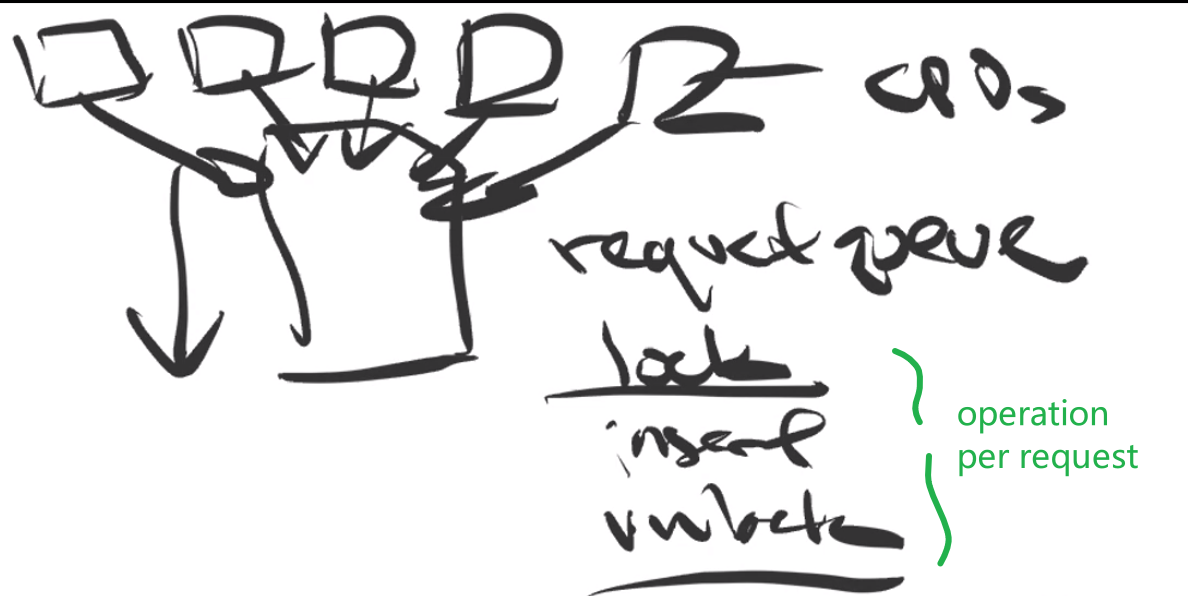

Thread Pools

The problem with the above essentially free threading is:

-

Creation of each thread takes some time

-

Unlimited threads could exhaust system resources, such as CPU time or memory.

One solution to this problem is to use a thread pool.

- The general idea behind a thread pool is to create a number of threads at process startup and place them into a pool, where they sit and wait for work.

- When a server receives a request, it awakens a thread from this pool—if one is available—and passes it the request for service.

- Once the thread completes its service, it returns to the pool and awaits more work.

- If the pool contains no available thread, the server waits until one becomes free.

OS Control

In the following situations, the OS takes control (i.e. OS code runs, including interrupt handler codes)

- On boot time

- When Interrupt occurs

- also Exceptions

- Dealing with Main Memory

- e.g. setting your program address space to use

addrwithin $base \le addr \le limit$

- e.g. setting your program address space to use

- System Calls (API for outside users, a way to enter the kernel)

- e.g. by using a software interrupt, so that OS can take control in between your program

- jumps to interrupt handler to handle it (OS code)

- e.g. implements using a special

syscallinstruction

- e.g. by using a software interrupt, so that OS can take control in between your program

- CPU modes (e.g. kernel mode)

- dealing with security

Kernel

Relationship between OS and Kernel

Operating System Kernel Operating System is a system software. Kernel is system software which is part of operating system. Operating System provides interface b/w user and hardware. kernel provides interface b/w application and hardware. It also provides protection and security. It’s main purpose is memory management, disk management, process management and task management. All system needs operating system to run. All operating system needs kernel to run. Type of operating system includes single and multiuser OS, multiprocessor OS, real-time OS, Distributed OS. Type of kernel includes Monolithic and Micro kernel. It is the first program to load when computer boots up. It is the first program to load when operating system loads.

Reminder:

The Linux Kernel is the first thing that your OS loads:

System calls provide userland processes a way to request services from the kernel. Those services are managed by operating system like storage, memory, network, process management etc.

- For example if a user process wants to read a file, it will have to make

openandreasystem calls. Generally system calls are not called by processes directly. C library provides an interface to all system calls.

Kernel has a hardware dependent part and a hardware-independent part. Codes on this site: https://elixir.bootlin.com/linux/v5.10.10/source contains:

arch/x86/boot- first codes to be executed- initializes keyboard, heap, etc, then starts the

initprogram

- initializes keyboard, heap, etc, then starts the

arch/x86/entrycontains system call codes- remember, user calling system call = stopping current process and switching/entering to OS control

Kernel System Calls

What Happens when a process execute system call?

Basically, the following graph:

in words, the following happened:

- Application program makes a system call by invoking wrapper function in C library

- This wrapper functions makes sure that all the system call arguments are available to trap-handling routine

- the wrapper function also takes care of copying these arguments to specific registers

- The wrapper function again copies the system call number (of itself) into specific CPU registers

- Now the wrapper function executes trap instruction (int 0x80). This instruction causes the processor to switch from ‘User Mode’ to ‘Kernel Mode’

- The code pointed out by location 0x80 is executed (Most modern machines use

sysenterrather than 0x80 trap instruction)- In response to trap to location 0x80, kernel invokes

system_call()routine which is located in assembler filearch/i386/entry.S- the rest is covered below.

How does the kernel implement/deal with system calls? (reference: http://articles.manugarg.com/systemcallinlinux2_6.html)

- You need to first get into Kernel

- Then locate that system call

- Run that system call

In the past:

- Linux used to implement system calls on all x86 platforms using software interrupts.

- To execute a system call, user process will copy desired system call number to

%eaxand will execute ‘int 0x80’. This will generate interrupt0x80and an interrupt service routine will be called.

- For interrupt 0x80, this routine is an “all system calls handling” routine. This routine will execute in ring 0 (privileged mode). This routine, as defined in the file

/usr/src/linux/arch/i386/kernel/entry.S, will save the current state and call appropriate system call handler based on the value in%eax.

For Modern x86, there is a specific SYSCALL instruction

-

Inside

arch/x86/entry/entry.S-

SYSYCALL begins/enters via the line

ENTRY(entry_SYSCALL_64),where:

entry_SYSCALL_64contains/is initialized with an address to the actual function ofentry_SYSCALL_64- it can take up to 6 arguments/6 registers

and after entering, it needs

- a register containing address of the implemented SYSCALL code

-

-

Inside the

ENTRY(entry_SYSCALL_64)-

after a bunch of stack savings, it does

do_syscall_64where:

- this is when the actual code is executed

do_syscall_64again points to an address of the C functiondo_syscall_64(nr, *regs), withnrwould represent theidfor each system call*regswould contain the arguments for the system call- similar to interrupt handler table, there is a “table” for SYSCALL

asm/syscalls_64.hwhich is generated byarch/x86/entry/syscalls/sysycall_64.tbldynamically depending on the hardware spec- for example, one line inside the

tblhas0 common read __x64_sys_read

- for example, one line inside the

some code there does:

- check

nrbeing a legal system call number in the tablesys_call_table - runs that system call with

sys_call_table[nr](regs), wheresys_call_table[nr]would basically be a function

-

-

Inside

include/linux/syscalls.h- you can find the actual definition of SYSCALL in C, which can be used to find the actual implementation

- for example,

SYSCALL_DEFINE0wrapper for functions that takes0arguments,SYSCALL_DEFINE1, …, up toSYSCALL_DEFINE6 - for example,

sys_read()

- for example,

- you can find the actual definition of SYSCALL in C, which can be used to find the actual implementation

-

Inside

kernel/sys.c- you can find the actual implementations of SYSCALL in C

- for example,

SYSCALL_DEFINE0(getpid), which will get translated to__x64_sys_getpid

- for example,

- you can find the actual implementations of SYSCALL in C

Note

sys_read()would be converted dynamically to__x64_sys_readby using#defineswhich basically adds a wrapper__x86to the functions

- this is configured by the

Kconfigfile, and the wrapper is defined atarch/x86/include/asm/syscall_wrapper.h

In the View of a Programmer

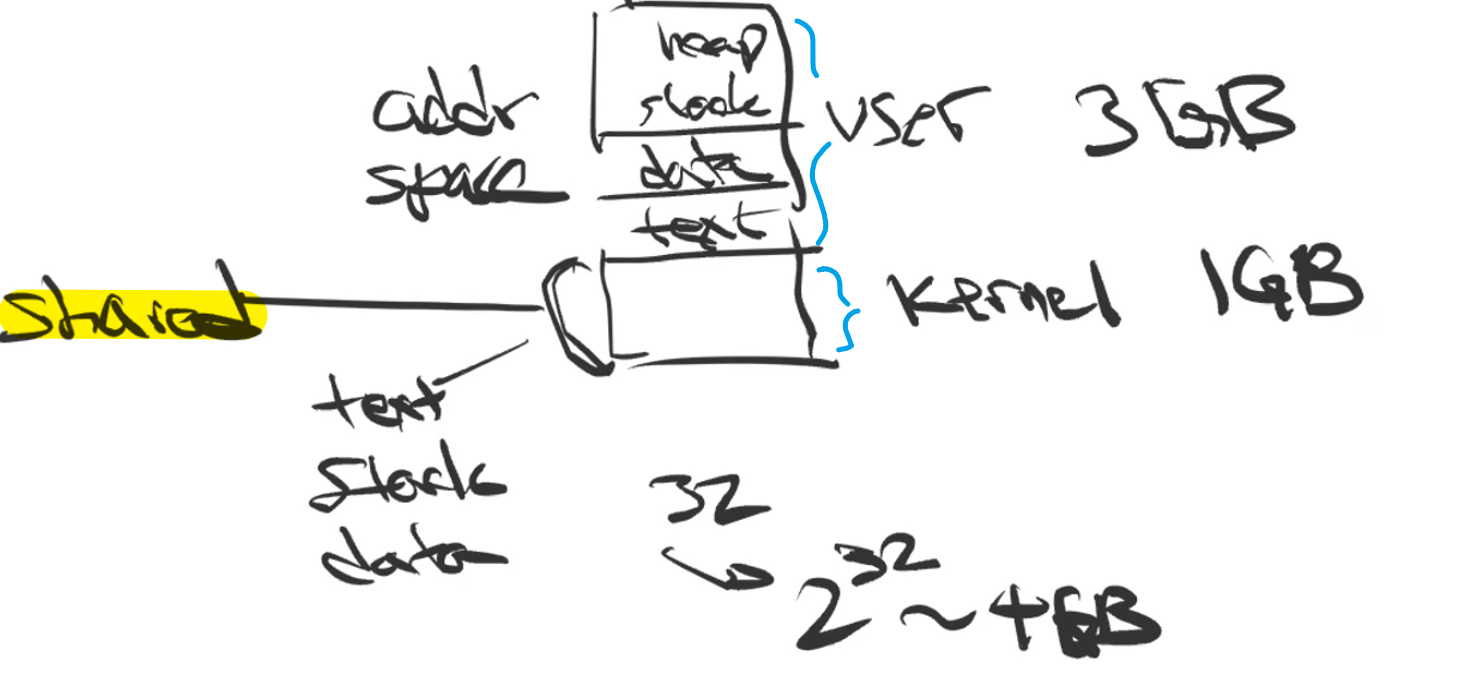

this is what happens in the virtual memory space:

where:

- every process has a separate/independent user space, but a shared kernel space for system calls (the kernel image is loaded there as well).

- this also means the stack for system call is very limited. So you should not write recursion functions for system calls.

System Call Example

Consider the function gettimeofday

SYSCALL_DEFINE2(gettimeofday, struct timeval __user *, tv,

struct timezone __user *, tz)

{

if (likely(tv != NULL)) {

struct timespec64 ts;

ktime_get_real_ts64(&ts);

if (put_user(ts.tv_sec, &tv->tv_sec) ||

put_user(ts.tv_nsec / 1000, &tv->tv_usec))

return -EFAULT;

}

if (unlikely(tz != NULL)) {

if (copy_to_user(tz, &sys_tz, sizeof(sys_tz)))

return -EFAULT;

}

return 0;

}

where:

- user arguments comes in like

struct timeval __user *, tv__userdenotes that the pointer comes from user spacetvis the variable name

-

since the variables comes from user space, we need to be careful of its validity:

-

struct timespec64 ts; ktime_get_real_ts64(&ts); if (put_user(ts.tv_sec, &tv->tv_sec) || put_user(ts.tv_nsec / 1000, &tv->tv_usec)) return -EFAULT;where we basically create our own structure and copied fields to user’s variable with

put_user()

-

- the error that system call returns is not directly

-1return -EFAULT;- and then a wrapper will populate the error information and then

return -1

Note

- In general, there is no explicit check done by the OS on the number of arguments you passed in for a system call. This means that all you should check in your function are the validity of the arguments you need.

More details on copy_to_user and the other ones:

Copy Data to/from Kernel

Function Description copy_from_user(to, from, n)_copy_from_userCopies a string of n bytes from from (userspace) to to (kernel space). get_user(type *to, type* ptr)_get_userReads a simple variable (char, long, … ) from ptr to to; depending on pointer type, the kernel decides automatically to transfer 1, 2, 4, or 8 bytes. put_user(type *from, type *to)_put_userCopies a simple value from from (kernel space) to to (userspace); the relevant value is determined automatically from the pointer type passed. copy_to_user(to, from, n)_copy_to_userCopies n bytes from from (kernel space) to to (userspace).

Week 5

Process Synchronization

Reminder:

- Cooperating processes can either directly share a logical address space (that is, both code and data) or be allowed to share data only through files or messages.

- The former case is achieved through the use of threads (see Threads and Cores

This chapter addresses how concurrent or parallel execution can contribute to issues involving the integrity of data shared by several processes.

Race Condition and Lock

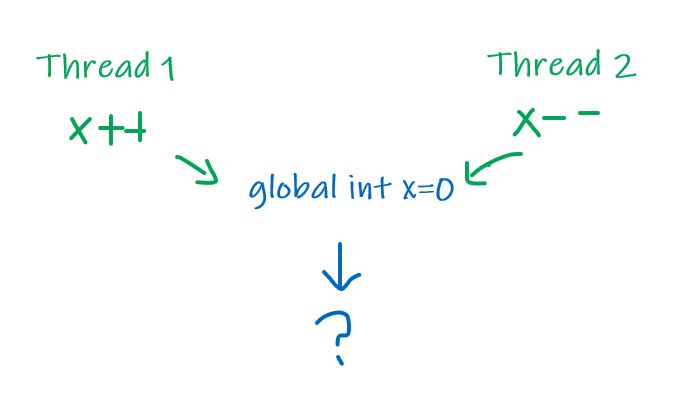

Consider the case (thread race):

the result has the following possibility:

1, ifT1/T2loadsx=0into register, andT1writes later thanT2back to the memory0, ifT1loadsx=0into register, and writes back to the memory, and thenT2loads and writes back-1, ifT1/T2loadsx=0into register, andT2writes later thanT1back to the memory

This is the problem of none-Atomic operations

- so that the actual operation in CPU has multiple steps

- as a result, you have ambiguity of result when multiple these operations are acting on the same data

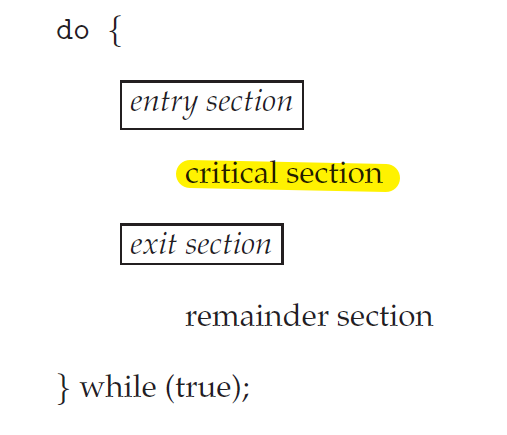

Critical Section:

- the critical section is a segment of code that manipulates a data object, which two or more concurrent threads are trying to modify

To guard against the race condition above, we need to ensure that only one process at a time can be manipulating the variable. To make such a guarantee, we require that the processes be synchronized in some way.

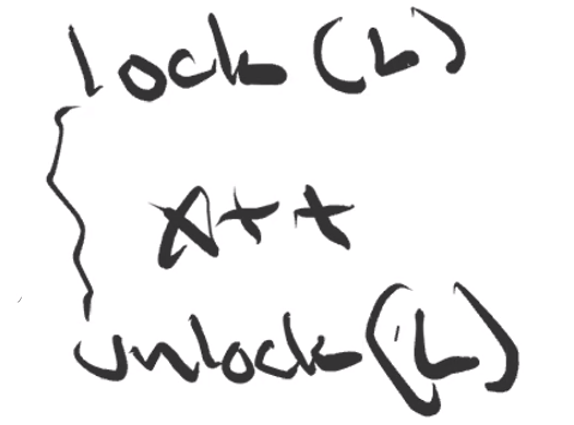

- The solution is to get a lock for the critical section:

Lock

so that all operations within a lock will be atomic/mutually exclusive

a lock would have the following property:

- mutual exclusion - only one thread can access the critical section at a time

- forward progress - if no one has the lock for the critical section, I should be able to get it

- bounded waiting - there can’t be an indefinite wait for getting a lock for the critical section

for example, to make

x++atomic with locks:

In general, this is what we need to do:

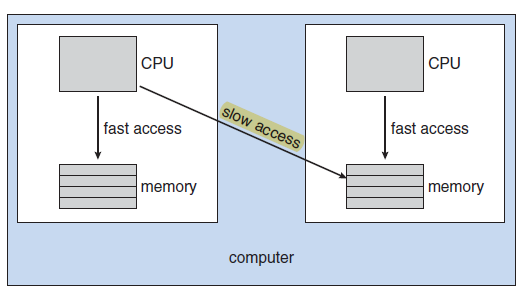

This is actually very important if you have multiprocessor CPUs:

- since multiple processes can be in the kernel in this case (e.g. calling system calls), they could be manipulating some same kernel data.

- therefore, you need to find a way to lock stuff.

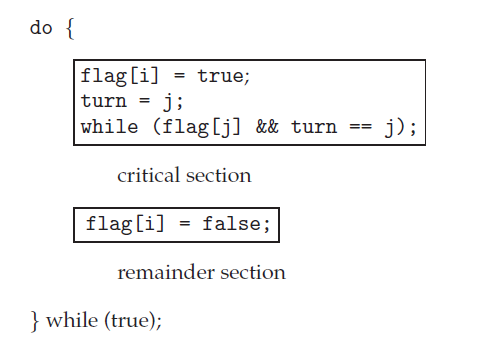

Peterson’s Solution

This is a heuristic solution that:

- demonstrates the idea of a lock

- only works for two processes

- not guaranteed to work on modern computer architectures.

where:

irepresentsProcess i=Process 0jrepresentsProcess j=Process 1

and that:

- Mutual exclusion is preserved.

- $P_0$ and and $P_1$ could not have successfully executed their statements at about the same time 2. The progress requirement is satisfied.

- The bounded-waiting requirement is met.

- There is a bounded waiting, since if one process $P_0=P_i$ is stuck in the loop, the process can get out either by $P_1=P_j$ setting the

flag[j] = falseafter execution or settingturn = i. Both of which guaranteed that $P_0=P_i$ will have at most one wait for one loop in $P_j$

- There is a bounded waiting, since if one process $P_0=P_i$ is stuck in the loop, the process can get out either by $P_1=P_j$ setting the

The actual solution for 2 processes is:

Hardware Implementation

Therefore, one hardware solution to solve this would be:

- disable interrupts, so that once a process is in the critical section, no other process can do work.

- disabling interrupt is atomic itself

However, this is problematic because:

- need privileged instruction (i.e. normal user outside kernel can’t use it directly)

- can only have one lock in the system, since interrupts are disabled for the entire CPU.

- if you have multiple processors, this does not work at all

- need all processors to disable interrupt

- if process $P_0$ is running on $CPU_0$ and process $P_1$ is $CPU_1$. Disabling interrupt on both does not change/interrupt what is already running. Therefore, both $P_0,P_1$ will be running.

The solution for MP (multi-processor) is using:

-

test-and-set (ensured by hardware to be atomic)

boolean test_and_set(boolean *target) { boolean rv = *target; *target = true; return rv; } -

compare-and-swap (ensured by hardware to be atomic)

/* this is made atomic by hardware */ int compare_and_swap(int *value, int expected, int new value) { int temp = *value; if (*value == expected) *value = new value; return temp; }

and implementing:

- spin locks

- blocking blocks

Note:

- under the hood, the code themselves involves more than one instruction. However, this is supported natively by hardware, so that this will be atomic (e.g. locking the communication channel to the main memory when you are loading).

- both lock implementation are:

- busy waiting

- since we have the

whileloop for testing the lock- spin locks

- since we have the

whileloop for waiting- Therefore, both are problematic because spinning locks will be eating up the CPU cycles if there are lots of contentions over a lock

Other Atomic Instructions

For x86 architecture, there are actually a bunch of useful atomic instructions you can use.

For Example:

-

atomic_read(const atomic_t *v) atomic_add(int i, atomic_t *v)- …

- more inside

include/asm-generic/atomic-instrumented.h

Therefore for simple code, you can just use those atomic instructions instead of explicitly grabbing the lock.

Spin Locks

Conventions for Using Spin Locks

the same task that grabs the spin lock should also releases the spin lock

the task that grabs the spin lock cannot sleep/block before releasing it

- otherwise, you might cause deadlock situations

the usual usage of spin locks in code/by programs looks like:

initialize *x; spin_lock(x); /* some work, not sleeping/blocked */ spin_unlock(x);

Advantages of Spin Lock

- No context switch is required when a process must wait on a lock (as compare to a blocking lock), and context switch may take considerable time.

Disadvantages of Spin Lock

- Wastes much of the CPU power if a lot are running for a long time.

Test and Set Spin Lock

Consider the case when you have a global lock of boolean lock=false.

/* this is made atomic by hardware */

boolean test_and_set(boolean *target) {

boolean rv = *target;

*target = true;

return rv;

}

then the spin lock implementation/usage would be:

do {

while (test_and_set(&lock))

; /* do nothing */

/* critical section */

lock = false;

/* remainder section */

} while (true)

where:

test_and_set(&lock)basically locks it by atomically:- letting itself through if

lock=0and assigning it tolock=1to lock it.

- letting itself through if

However, bounded-waiting is not satisfied:

- consider a process that is super lucky and gets to set

lock=1every time before other processes. Then all other processes will have to wait indefinitely.

Compare and Swap Spin Lock

Consider the case when you have a global lock of int lock=0.

/* this is made atomic by hardware */

int compare_and_swap(int *value, int expected, int new value) {

int temp = *value;

if (*value == expected)

*value = new value;

return temp;

}

then the spin lock implementation/usage:

do {

while (compare_and_swap(&lock, 0, 1) != 0)

; /* do nothing */

/* critical section */

lock = 0;

/* remainder section */

} while (true);

where:

- the idea is that the one process will set

lock=1before it enters the critical block, so that other processes cannot enter.

However, bounded-waiting is not satisfied:

- consider a process that is super lucky and gets to set

lock=1every time before other processes. Then all other processes will have to wait indefinitely.

Try Lock

This is basically a lock that, instead of spinning, either:

- obtains the lock

- tries once and stop

Therefore, it is not-spinning, but technically it is not waiting either.

static __always_inline int spin_trylock(spinlock_t *lock)

{

return raw_spin_trylock(&lock->rlock);

}

Kernel Code Examples

First, a couple of code to know:

-

the declaration for

spin_lock()insideinclude/linux/spinlock.h:static __always_inline void spin_lock(spinlock_t *lock) { raw_spin_lock(&lock->rlock); } /* other declarations */ static __always_inline void spin_unlock(spinlock_t *lock) { raw_spin_unlock(&lock->rlock); }which if you dig deep into what it did:

-

spin_lock()eventually calls ` __raw_spin_lock(raw_spinlock_t *lock)` and does 3 thingspreempt_disable();, so that no other process can preempt/context switch this process when it holds the spin lock.- same principle as not sleeping during holding a spin lock

- this has a similar effect (but not as strong) as disabling interrupts

-

` spin_acquire(&lock->dep_map, 0, 0, RET_IP);` grabs the lock

-

LOCK_CONTENDED(lock, do_raw_spin_trylock, do_raw_spin_lock);configures this function into play:static __always_inline void queued_spin_lock(struct qspinlock *lock) { u32 val = 0; /* which in the end is the ATOMIC compare_and_swap in ASSEMBLY CODE */ /* this would happen in __raw_cmpxchg(ptr, old, new, size, lock) */ if (likely(atomic_try_cmpxchg_acquire(&lock->val, &val, _Q_LOCKED_VAL))) return; queued_spin_lock_slowpath(lock, val); }

-

spin_unlock()does the opposite three things:spin_release(&lock->dep_map, 1, _RET_IP_);do_raw_spin_unlock(lock);preempt_enable();

-

-

the declaration for defining your own spin lock is inside

include/linux/spinlock_types.h:#define DEFINE_SPINLOCK(x) spinlock_t x = __SPIN_LOCK_UNLOCKED(x)which if you dig deep into what it did:

- essentially sets a

raw_lock’scountervalue to0

- essentially sets a

-

the declaration for

spin_lock_irq()insideinclude/linux/spinlock.h:- consider the problem that: if a process hold a spin lock

Lis interrupted, and the interrupted handler needs that lockLas well- this causes a deadlock situation, because an interrupted process cannot proceed

- therefore,

spin_lock_irq()in addition disables interrupt

- consider the problem that: if a process hold a spin lock

-

the declaration for

spin_lock_irq_save()insideinclude/linux/spinlock.h:- this solves the problem that if, before calling

spin_lock_irq, the interrupt has already been disabled for other processes. Therefore, here you need to:- save the previous “disabled interrupt’s configuration”

- this solves the problem that if, before calling

Note

- One trick thing that you have to find out yourself is which lock in Kernel to use

- for example,

task_listhas its own lock.- To choose between

spin_lock()andspin_lock_irq(), simply think about:

- will this lock be used by Interrupt Handlers? If not, use

spin_lock()suffices.

For Example:

-

Spin lock used in

/arch/parisc/mm/init.cunsigned long alloc_sid(void) { unsigned long index; spin_lock(&sid_lock); /* some code omitted */ index = find_next_zero_bit(space_id, NR_SPACE_IDS, space_id_index); space_id[index >> SHIFT_PER_LONG] |= (1L << (index & (BITS_PER_LONG - 1))); space_id_index = index; spin_unlock(&sid_lock); return index << SPACEID_SHIFT; } -

Spin lock defined and used

defined inside

arch/alpha/kernel/time.cDEFINE_SPINLOCK(rtc_lock);then, some other code using the lock:

ssize_t atari_nvram_read(char *buf, size_t count, loff_t *ppos) { char *p = buf; loff_t i; spin_lock_irq(&rtc_lock); if (!__nvram_check_checksum()) { spin_unlock_irq(&rtc_lock); return -EIO; } for (i = *ppos; count > 0 && i < NVRAM_BYTES; --count, ++i, ++p) *p = __nvram_read_byte(i); spin_unlock_irq(&rtc_lock); *ppos = i; return p - buf; }

Blocking Locks

Instead of spin lock, we

- send other processes to sleep/blocked when one process is doing critical work.

- wake the waiting process up when the process has finished the work

This implementation in Linux is called mutex, or semaphore.

- mutex may be (often is) implemented using semaphore.

Blocking Semaphore

The blocking semaphore has:

- lock =

P(semaphore)=wait() - unlock =

V(semaphore)=signal()

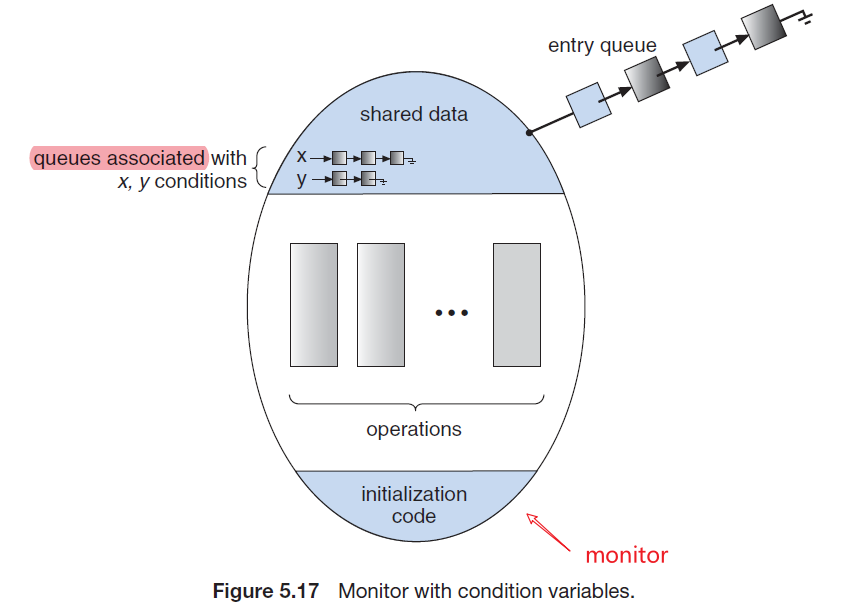

Idea Behind a Semaphore

Rather than engaging in busy waiting, the process can block itself.

Overall flow of a semaphore:

- places a process into a waiting queue associated with the semaphore

- the state of process s switched to the waiting state.

- Then control is transferred to the CPU scheduler, which selects another process to execute.

- A process that is blocked, waiting on a semaphore S, should be restarted by a

wakeup()operation when some other process executes asignal()operation.- The process is restarted, which changes the process from the waiting state to the ready state. The process is then placed in the ready queue.

so the simple structure of a

semaphorelooks like:typedef struct { int value; struct task_struct *list; } semaphore;

Consider int s=1:

wait(s){

lock();

s--;

while(s < 0){

add current process to queue;

unlock();

block itself;

// next time it wakes up, check again atomically

lock();

}

unlock();

}

where:

- the

lock()andunlock()are just to make the code of the semaphore to be atomic, so they could just betest_and_set()

and then:

signal(s) {

lock();

S->value++;

if (S->value <= 0) {

remove a process P from S->list;

wakeup(P);

}

unlock();

}

Note

- the above implementation allows a negative value semaphore. If a semaphore value is negative, its magnitude is the number of processes waiting on that semaphore

- One way to add and remove processes from the list so as to ensure bounded waiting is to use a FIFO queue

In kernel, the actual implementation are in kernel/locking/semaphore.c

In general:

- to grab a semaphore, use

void down(struct semaphore *sem) - to release a semaphore, use

void up(struct semaphore *sem)

In specific:

-

void down(struct semaphore *sem)looks like:void down(struct semaphore *sem) { unsigned long flags; /* using spin lock to make sure the following code is atomic */ raw_spin_lock_irqsave(&sem->lock, flags); if (likely(sem->count > 0)) sem->count--; else __down(sem); raw_spin_unlock_irqrestore(&sem->lock, flags); }where:

-

if the original

count=1, then this means only one process can obtain the semaphore. This is also called a binary semaphorecountdefines the number of processes allowed to obtain the semaphore simultaneously

-

otherwise, the

__down(sem)basically blocks/sleeps the process and does:static inline int __sched __down_common(struct semaphore *sem, long state, long timeout) { struct semaphore_waiter waiter; /* adds the task to a LIST OF TASK WAITING */ list_add_tail(&waiter.list, &sem->wait_list); waiter.task = current; waiter.up = false; for (;;) { if (signal_pending_state(state, current)) goto interrupted; if (unlikely(timeout <= 0)) goto timed_out; /* sets the state of the task, E>G> TASK_UNINTERRUPTABLE */ __set_current_state(state); /* befores goes to sleep, releases the spin lock from down() */ raw_spin_unlock_irq(&sem->lock); /* sends the process to sleep, also adds a timeout to the sleep. * Then, schedules something else to run */ timeout = schedule_timeout(timeout); /* here, the process woke up */ raw_spin_lock_irq(&sem->lock); /* if you woke up not by the __up() function, then waiter.up = false */ /* then, you will need to loop again and go back to sleep */ if (waiter.up) return 0; } /* some code omitted */ }

-

-

on the other hand,

void up(struct semaphore *sem)does:void up(struct semaphore *sem) { unsigned long flags; raw_spin_lock_irqsave(&sem->lock, flags); /* if wait_list is empty, increase the semaphore */ /* otherwise, wakes up the first process in the wait_list */ if (likely(list_empty(&sem->wait_list))) sem->count++; else __up(sem); raw_spin_unlock_irqrestore(&sem->lock, flags); }where:

-

the

__up(sem)does:static noinline void __sched __up(struct semaphore *sem) { struct semaphore_waiter *waiter = list_first_entry(&sem->wait_list, struct semaphore_waiter, list); list_del(&waiter->list); /* sets the up flag for the __down_common() function */ waiter->up = true; /* wakes up the process */ wake_up_process(waiter->task); }

-

Disadvantage

Because semaphores are stateful (it is not purely

1or0), if you messed up by doing:up(s); /* messed up the state */ /* some code */ down(s); /* some other code */ up(s);then the entire state of semaphore is messed up (i.e. it is broken from that point onward)

However, if you use a

spinlockwhich only has0or1, then messing it up once will still get it to work afterwards.

Mutex Locks

Mutex = Mutual Exclusion

- Basically either the spin lock idea, or the blocking idea (often it refers to the blocking locks).

So that the simple idea is just:

acquire() {

while (!available)

; /* busy wait */

available = false;

}

then

release() {

available = true;

}

For Example

do {

acquire lock

critical section

release lock

remainder section

} while (true);

Priority Inversion

As an example, assume we have three processes— $L$, $M$, and $H$—whose priorities follow the order $L < M < H$. Assume that process $H$ requires resource $R$, which is currently being accessed by process $L$.

- Ordinarily, process $H$ would wait for $L$ to finish using resource $R$.

- However, now suppose that process $M$ becomes runnable, thereby preempting process $L$.

- Indirectly, a process with a lower priority—process $M$—has affected how long process $H$ must wait for $L$ to relinquish resource $R$.

Priority Inversion

- The above problem is solved by implementing a priority-inheritance protocol.

- According to this protocol, all processes that are accessing resources needed by a higher-priority process inherit the higher priority until they are finished with the resources in question. When they are finished, their priorities revert to their original values.

Classical Synchronization Problems

All below mutex locks refer to blocking semaphores.

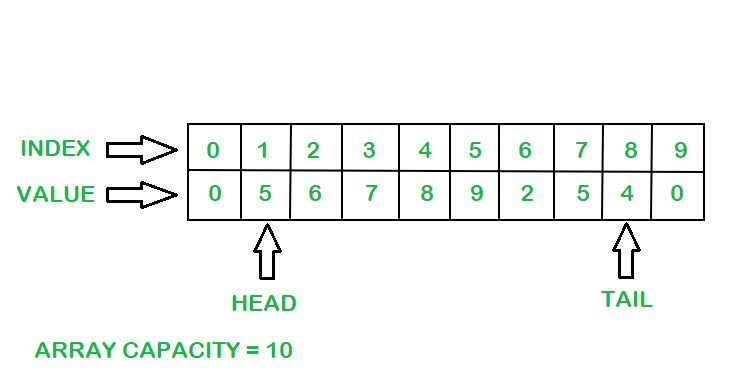

Bounded Buffer

Basically, consider the case where you have a pool of n buffers, and you want only one process (producer/consumer) to access the pool at the time.

Using a simple semaphore:

-

lock setups:

int n; semaphore mutex = 1; semaphore empty = n; semaphore full = 0;

Producer code:

do {

. . .

/* produce an item in next produced */

. . .

wait(empty);

wait(mutex);

. . .

/* add next produced to the buffer */

. . .

signal(mutex);

signal(full);

} while (true);

Consumer code:

do {

wait(full);

wait(mutex);

. . .

/* remove an item from buffer to next consumed */

. . .

signal(mutex);

signal(empty);

. . .

/* consume the item in next consumed */

. . .

} while (true);

Notice that:

mutexlock ensures only one process into the poolemptysemaphore ensures processes only producing toemptynumber of buffersfullsemaphore ensures processes only consuming tofullnumber of buffers- all three locks combined gives the correct behavior

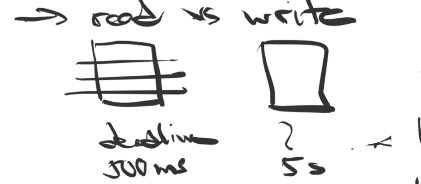

Reader-Writer Problem

Reader-Writer Problem

- First of all, when we have readers and writers for the same resource, we want to:

- readers to be concurrent

- writers to be mutually exclusive

- The first readers–writers problem, requires that no reader be kept waiting unless a writer has already obtained permission to use the shared object.

- notice that writers may starve here (waiting forever if readers come in a lot)

- The second readers–writers problem requires that, once a writer is ready, that writer perform its write as soon as possible

- notice that readers may starve here (waiting forever if writers come in a lot)

Therefore, in reality, there is often a mixed solution.

The first problem is solved by:

-

semaphore rw_mutex = 1; /* Used by writer (technically used by both) */ semaphore mutex = 1; /* Used by Readers */ int read count = 0;

Writer Process

do {

wait(rw_mutex); /* just simply lock */

. . .

/* writing is performed */

. . .

signal(rw_mutex); /* just simply unlock */

} while (true);

Reader Process

do {

wait(mutex);

read_count++;

if (read_count == 1)

wait(rw_mutex); /* first reader disables write */

signal(mutex);

. . .

/* reading is performed */

. . .

wait(mutex);

read_count--;

if (read_count == 0)

signal(rw_mutex); /* last reader enables write */

signal(mutex);

} while (true);

notice that:

- if a writer appears first, it obtains

rw_mutex, disallows all readers - if a reader appears first:

- the first reader locks the

rw_mutex, disallowing write - the last reader unlucks the

rw_mutex, allowing write

- the first reader locks the

My Idea for the Second Reader-Writer Problem:

variables:

semaphore rw_mutex = 1; /* Used by writer (technically used by both) */ semaphore read_lock = 1; /* Used to stop readers comming */ semaphore mutex = 1; /* Used by Readers */ semaphore r_mutex = 1; /* Used by Writers */ int read count = 0;Writer

do { wait(r_mutex); write_count++; if (write_count == 1) wait(read_lock); /* first writer disables more reader comming */ wait(rw_mutex); signal(r_mutex); . . . /* writing is performed */ . . . wait(r_mutex); if(write_count == 0) signal(read_lock); signal(rw_mutex); signal(r_mutex); } while (true);Reader:

do { wait(read_lock); /* used by writers to disable readers */ wait(mutex); read_count++; if (read_count == 1) wait(rw_mutex); /* first reader disables write */ signal(mutex); signal(read_lock); . . . /* reading is performed */ . . . wait(mutex); read_count--; if (read_count == 0) signal(rw_mutex); /* last reader enables write */ signal(mutex); } while (true);

RCU - Read Copy Update

This is an mechanism in kernel that allows concurrent readers without grabbing locks (saves performance issues)

- this only works if we can do atomic updates (for example, if you are using a linked-list)

RCU

- readers will not have a lock

- in code, there will be a fake lock, which is only used to indicate where the critical section is

- writers will have a spin lock (or any lock)

For Example: Linked-List RCU

Header file:

typedef struct ElementS{

int key;

int value;

struct ElementS *next;

} Element;

class RCUList {

private:

RCULock rcuLock;

Element *head;

public:

bool search(int key, int *value); /* read */

void insert(Element *item, value);

bool remove(int key);

};

implementation:

bool

RCUList::search(int key, int *valuep) {

bool result = FALSE;

Element *current;

rcuLock.readLock(); /* this is FAKE lock */

current = head;

for (current = head; current != NULL;

current = current->next) {

if (current->key == key) {

*valuep = current->value;

result = TRUE;

break;

}

}

rcuLock.readUnlock(); /* this is FAKE lock */

return result;

}

void

RCUList::insert(int key,

int value) {

Element *item;

// One write at a time.

rcuLock.writeLock(); /* this is an actual lock, we are modifying the list */

// Initialize item.

item = (Element*)

malloc(sizeof(Element));

item->key = key;

item->value = value;

item->next = head;

// Atomically update list.

rcuLock.publish(&head, item);

// Allow other writes

// to proceed.

rcuLock.writeUnlock(); /* this is an actual lock */

// Wait until no reader

// has old version.

rcuLock.synchronize();

}

bool

RCUList::remove(int key) {

bool found = FALSE;

Element *prev, *current;

// One write at a time.

rcuLock.WriteLock(); /* this is an actual lock, we are modifying the list */

for (prev = NULL, current = head;

current != NULL; prev = current,

current = current->next) {

if (current->key == key) {

found = TRUE;

// Publish update to readers

if (prev == NULL) {

rcuLock.publish(&head,

current->next);

} else {

rcuLock.publish(&(prev->next),

current->next);

}

break;

}

}

// Allow other writes to proceed.

rcuLock.writeUnlock();

// Wait until no reader has old version.

if (found) {

/* before synchronization, there MAY be an OLD reader looking at the old-TO-BE-REMOVED data, which is current */

rcuLock.synchronize();

/* afterwards, you are SURE no reader will be looking at the old entry. Now you can free */

free(current);

}

return found;

}

where:

- all the actual waiting happens at

synchronize()

the locks implementation:

class RCULock{

private:

// Global state

Spinlock globalSpin;

long globalCounter;

// One per processor

DEFINE_PER_PROCESSOR(

static long, quiescentCount);

// Per-lock state

Spinlock writerSpin;

// Public API omitted

}

void RCULock::ReadLock() {

disableInterrupts(); /* I can't be preempted, but other running processes can run */

}

void RCULock::ReadUnlock() {

enableInterrupts();

}

void RCULock::writeLock() {

writerSpin.acquire(); /* an actual spin lock */

}

void RCULock::writeUnlock() {

writerSpin.release(); /* an actual spin lock */

}

void RCULock::publish (void **pp1,

void *p2){

memory_barrier(); /* make sure memory layout does not change */

*pp1 = p2;

memory_barrier();

}

// Called by scheduler

void RCULock::QuiescentState(){

memory_barrier();

PER_PROC_VAR(quiescentCount) =

globalCounter; /* sets the quiescentCount=globalCounter */

memory_barrier();

}

void

RCULock::synchronize() {

int p, c;

globalSpin.acquire();

c = ++globalCounter;

globalSpin.release();

/* WAIT for EVERY single CPU to schedule something else */

FOREACH_PROCESSOR(p) {

/* true if the scheduler scheduled a NEW process AND called QuiescentState() */

while((PER_PROC_VAR(

quiescentCount, p) - c) < 0) {

// release CPU for 10ms

sleep(10);

}

}

}

so we see that synchronize basically does the waiting such that all data across CPUs would be “correct”.

Kernel Code Examples

Note that

if you want to use a RCU lock for a resource, then obviously you need all of the related operations:

- read

- write/update

to also use RCU

publish/synchronizationmechanism

static int

do_sched_setscheduler(pid_t pid, int policy, struct sched_param __user *param)

{

struct sched_param lparam;

struct task_struct *p;

int retval;

/* some code omitted */

rcu_read_lock(); /* rcu read_lock, not a real lock */

retval = -ESRCH;

p = find_process_by_pid(pid);

if (likely(p))

get_task_struct(p);

rcu_read_unlock();

if (likely(p)) {

retval = sched_setscheduler(p, policy, &lparam);

put_task_struct(p);

}

return retval;

}

Wait Queues

Basically, with this you can:

-

defines a wait queue

- add sleeping

taskto a wait queue - wake up sleeping

tasks from a wait queue (putting back toRUN QUEUE) - …

- more refer to

include/linux/wait.h.

Reminder

- When the task marks itself as sleeping, you wan to:

- puts itself on a wait queue

- removes itself from the red-black tree of runnable (

RUN QUEUE)- calls

schedule()to select a new process to execute.- Waking back up is the inverse:

- The task is set as runnable

- Removed from the wait queue

- Added back to the red-black tree of

RUN QUEUENote that waking up does not call

schedule()

In specific:

-

creating a wait queue:

DECLARE_WAITQUEUE(name, tsk); /* statically creating a waitqueue*/ DECLARE_WAIT_QUEUE_HEAD(wait_queue_name); /* statically creating a waitqueue*/ init_waitqueue_head(wq_head); /* dynamically creating one */where:

-

/* DECLARE_WAITQUEUE(name, tsk) does this */ struct wait_queue_entry name = __WAITQUEUE_INITIALIZER(name, tsk)

-

-

creating a wait_entry statically so that you can

#define DEFINE_WAIT(name) DEFINE_WAIT_FUNC(name, autoremove_wake_function)where:

-

this thing automatically assigns

currentin the private fieldstruct wait_queue_entry name = { .private = current, .func = function, .entry = LIST_HEAD_INIT((name).entry), }

-

-

adding things to a wait queue

extern void add_wait_queue(struct wait_queue_head *wq_head, struct wait_queue_entry *wq_entry); /* or lower level */ static inline void __add_wait_queue(struct wait_queue_head *wq_head, struct wait_queue_entry *wq_entry) { list_add(&wq_entry->entry, &wq_head->head); }notice that all it does is a list_add. This means you might be able to customize itself.

-

adding things to a wait queue to tail

static inline void __add_wait_queue_entry_tail(struct wait_queue_head *wq_head, struct wait_queue_entry *wq_entry) { list_add_tail(&wq_entry->entry, &wq_head->head); } -

actually sending a process to sleep

prepare_to_wait(&pstrace_wait, &wait, TASK_INTERRUPTIBLE); schedule(); -

waking up all things from a wait queue by putting it back to

RUN_QUEUE#define wake_up(x) __wake_up(x, TASK_NORMAL, 1, NULL) -

remove task from

wait_queueafter you are sure that this thing should be running:/** * finish_wait - clean up after waiting in a queue * @wq_head: waitqueue waited on * @wq_entry: wait descriptor * * Sets current thread back to running state and removes * the wait descriptor from the given waitqueue if still * queued. */ void finish_wait(struct wait_queue_head *wq_head, struct wait_queue_entry *wq_entry)

Note

other useful

wake_uporsleeprelated functions can be found inkernel/sched/core.c

wake up a task:

int wake_up_process(struct task_struct *p) { return try_to_wake_up(p, TASK_NORMAL, 0); }

For Example:

DEFINE_WAIT(wait);

struct cx25840_state *state = to_state(i2c_get_clientdata(client));

init_waitqueue_head(&state->fw_wait);

q = create_singlethread_workqueue("cx25840_fw");

if (q) {

/* only changes state */

prepare_to_wait(&state->fw_wait, &wait, TASK_UNINTERRUPTIBLE);

/* kernel run something else, then the task is actually blocked */

schedule();

/* after the task GETS RUNNING AGAIN, this is resumed/called */

finish_wait(&state->fw_wait, &wait);

}

then to have something that wakes the task up, use

static void cx25840_work_handler(struct work_struct *work)

{

struct cx25840_state *state = container_of(work, struct cx25840_state, fw_work);

cx25840_loadfw(state->c);

/* wakes up the task */

wake_up(&state->fw_wait);

}

Note

waking_upthe task does not automatically puts it to running. It only puts it back to theRUN QUEUE. Then, only when some processes calledschedule(), the process might run (exactly which process to run depends on the scheduling algorithm).

For Example:

static ssize_t inotify_read(struct file *file, char __user *buf,

size_t count, loff_t *pos)

{

struct fsnotify_group *group;

DEFINE_WAIT_FUNC(wait, woken_wake_function);

/* initialization is done somewhere else */

add_wait_queue(&group->notification_waitq, &wait);

while (1) {

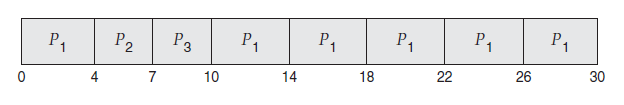

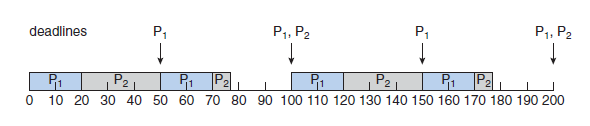

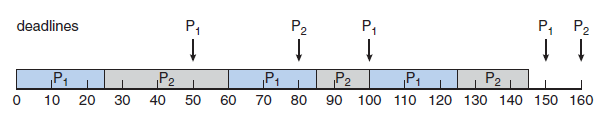

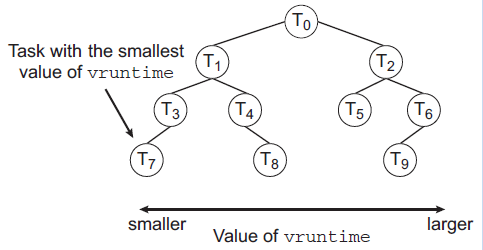

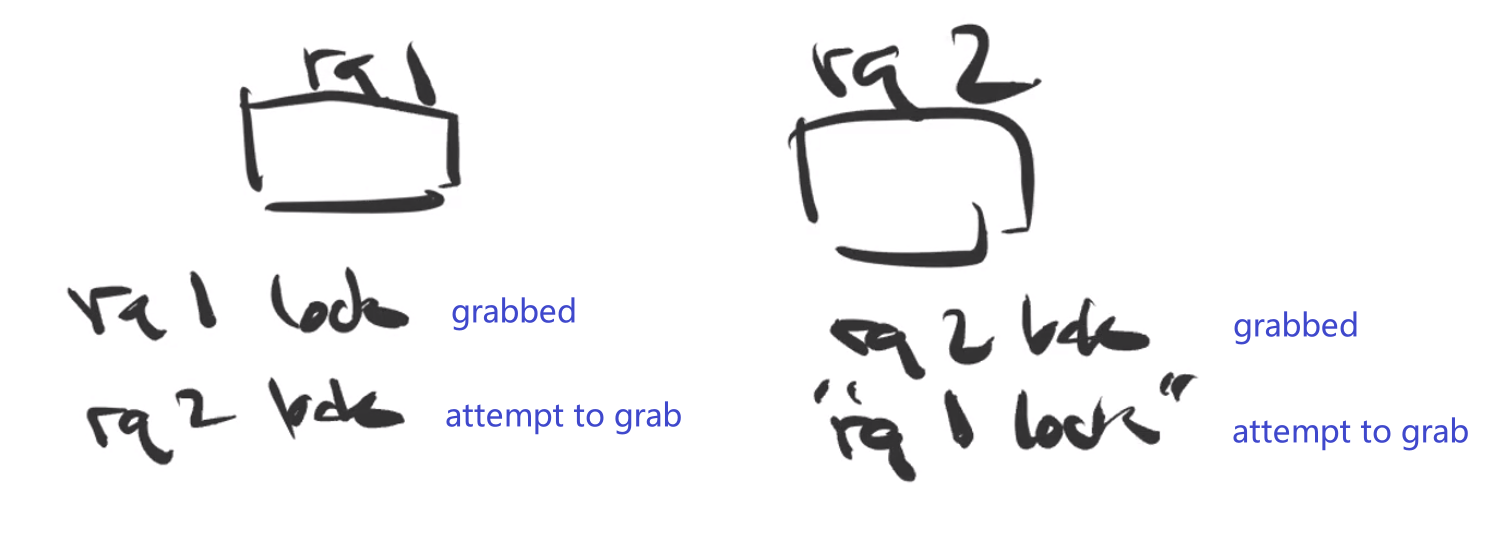

spin_lock(&group->notification_lock);