COMS4705 NLP part1

- Logistics and Introduction

- Basic Text Processing

- Minimum Edit Distance

- Language Models

- Spelling Correction

- Neural Networks in NLP

- RNN and LSTM

- Transformers

- Vector Semantics and Embeddings

- Sequence Labeling for POS and Named Entities

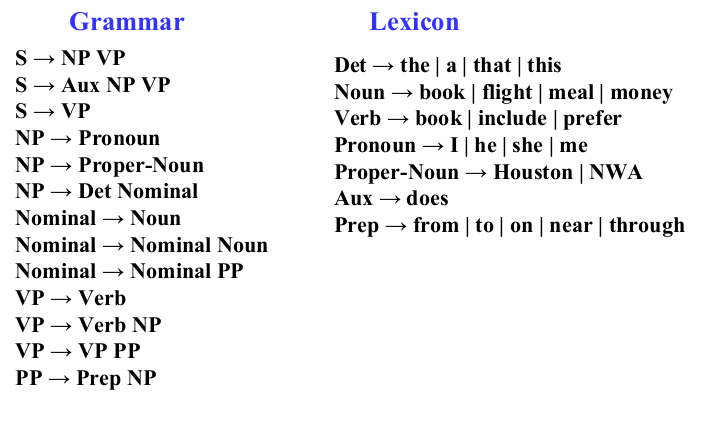

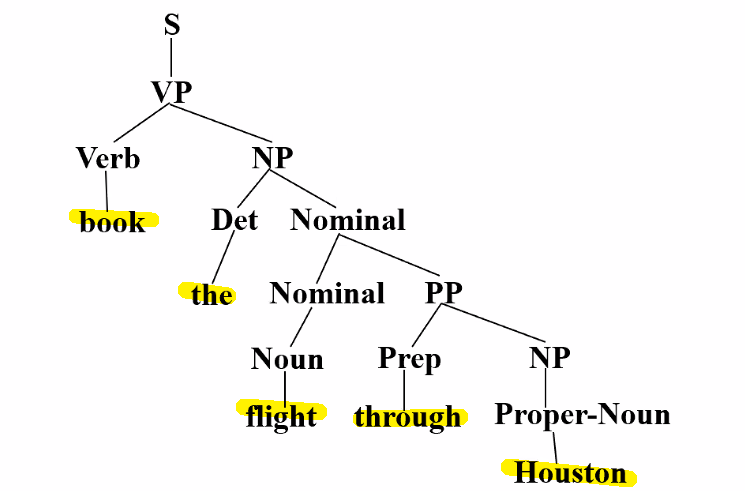

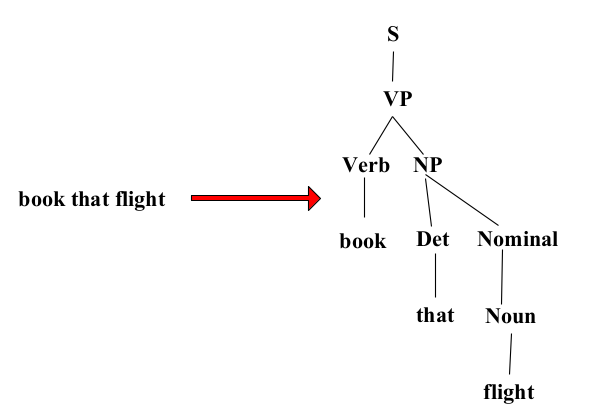

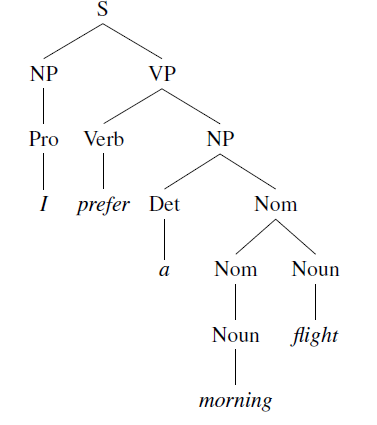

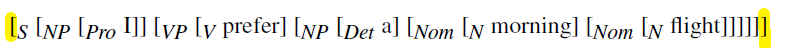

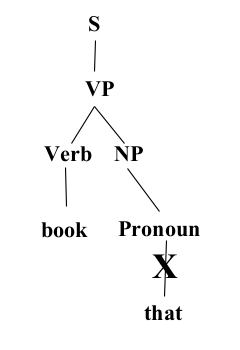

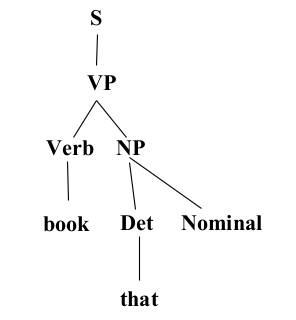

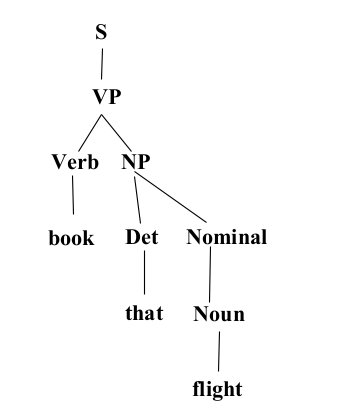

- Constituency Grammar and Parsing

- Midterm

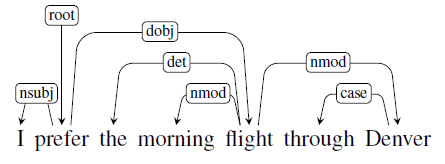

- Dependency Parsing

- Neural Shift-Reduce Parser

Logistics and Introduction

Discussion/Q&A:

- Piazza. Every questions should be sent there.

TA:

-

Professor: 1 hour before class

- Yanda Chen: Tue 6-8pm

- Kun Qian: Tue Thur 4:30-5:30pm

- Qingyang Wu: Wed 10am-12pm

Grades:

| Type | Percentage |

|---|---|

| Homework *3 (All python) | 51% |

| Midterm *1 | 15% |

| Final *1 | 20% |

| Tendance | 14% |

| Piazza (extra credit) | 3% |

| Total | 103% |

Slides:

- On the Syllabus

- Readings: https://web.stanford.edu/~jurafsky/slp3/

Applications of NLP

Question Answering:

- Won the game “Jeopardy” on Feb 16, 2011

Information Extraction

- e.g. extraction information from an email, and add the appointment to my calendar

Sentiment/Opinion Analysis

- whether if it is a positive review or a negative review

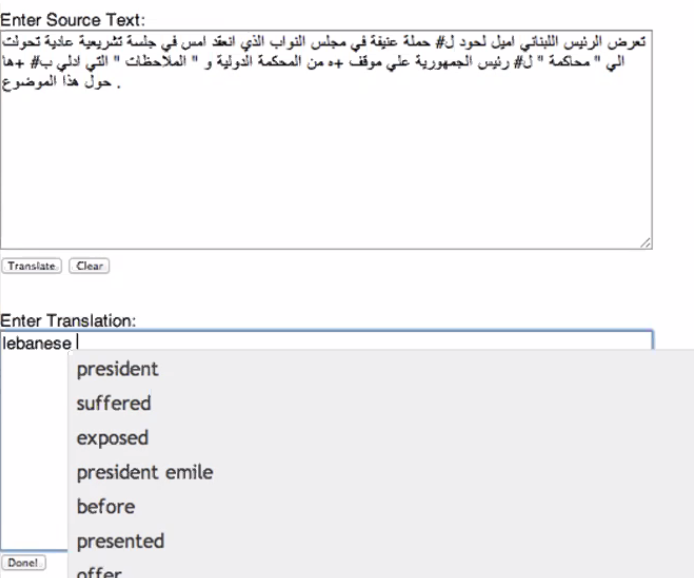

Machine Translation

-

fully automatic translation between different languages

-

help human translators to translate text

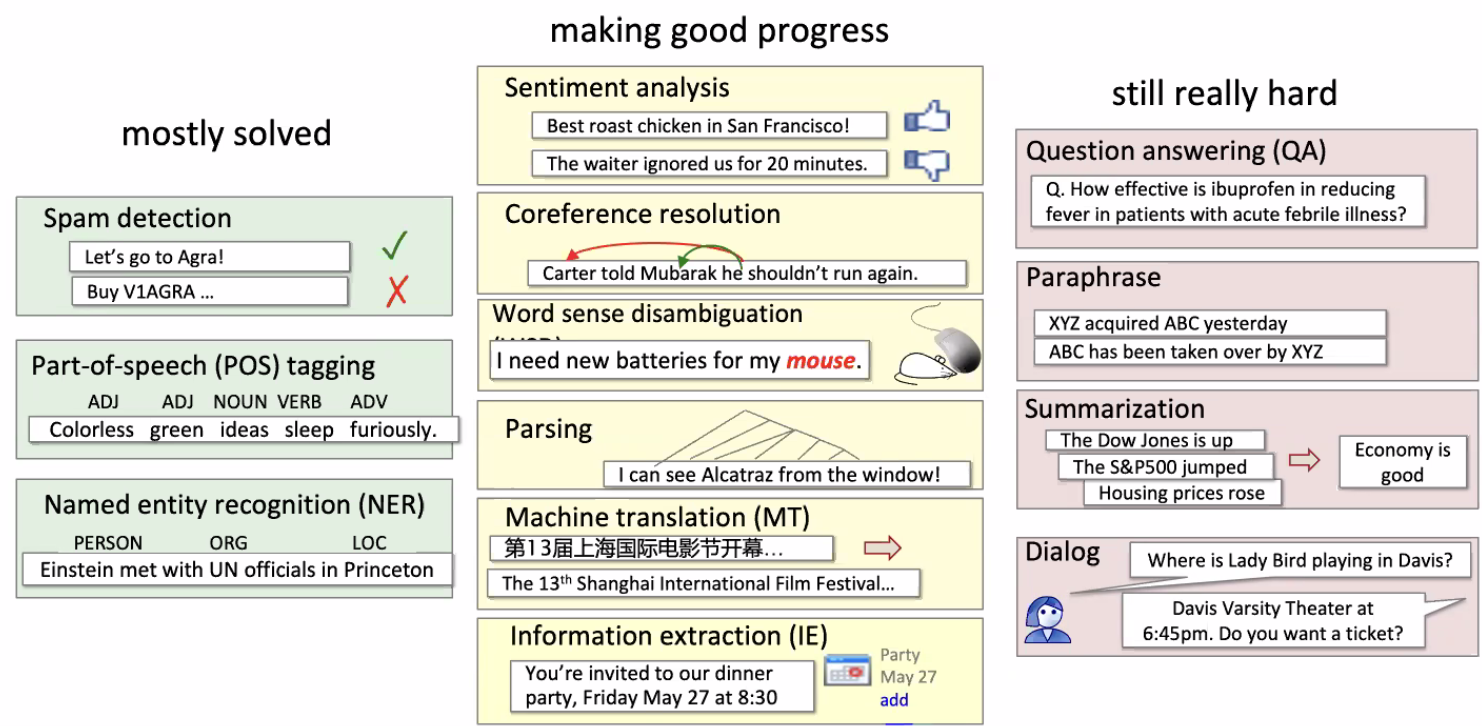

Current Progress in NLP

where:

- Spam detection would be like a ML binary classification problem

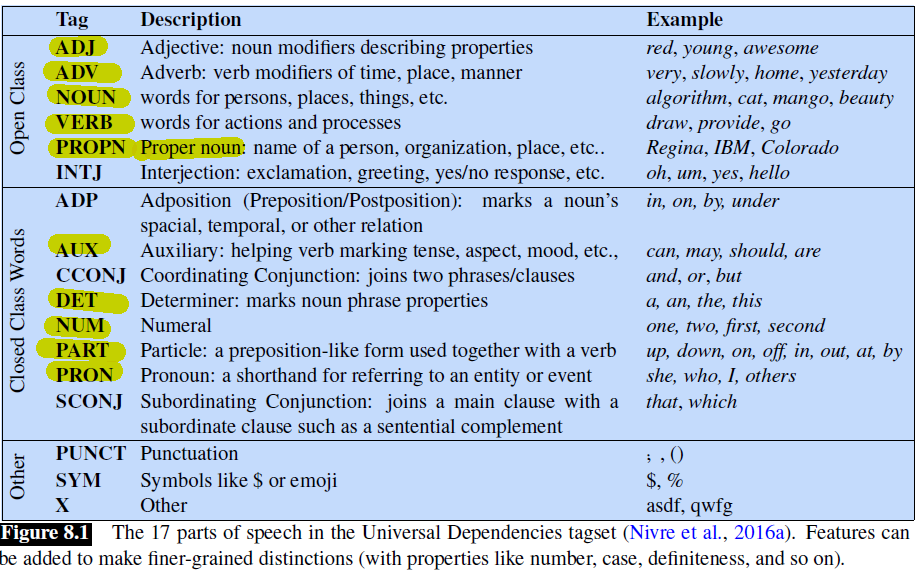

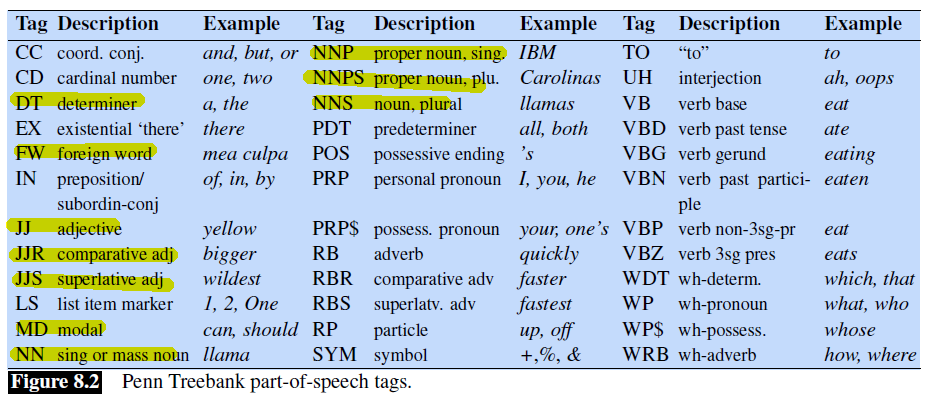

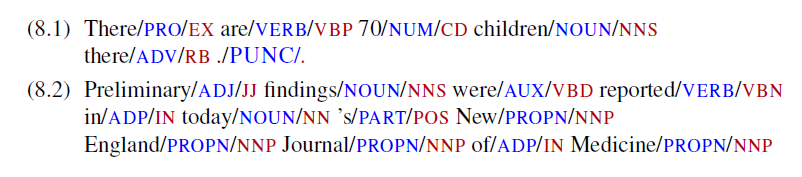

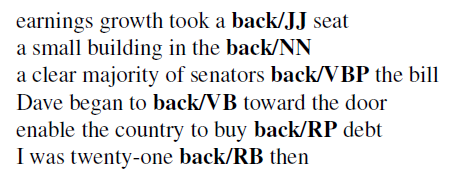

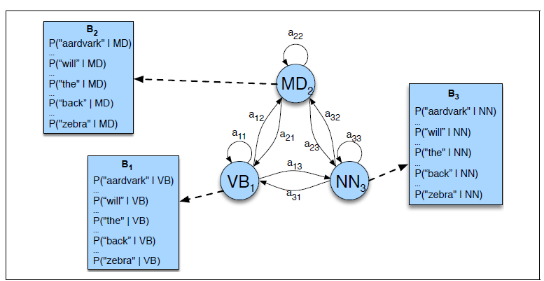

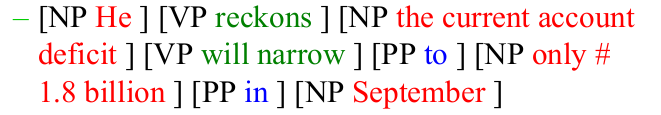

- Part-of-Speech (POS): tag every token with their syntactic meaning

- Sentiment Analysis: though it sounds like a simply classification problem, we may want to be “context aware” due to sarcasm

- Coreference: which person does “he” refers to? (helpful for extracting information)

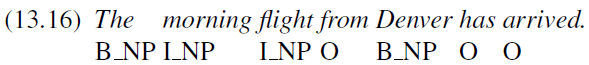

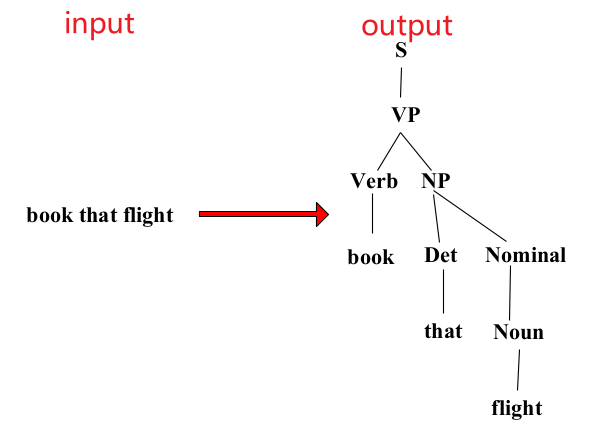

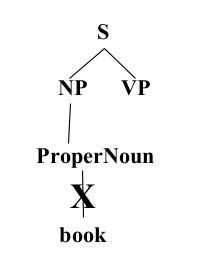

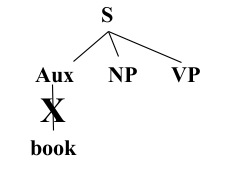

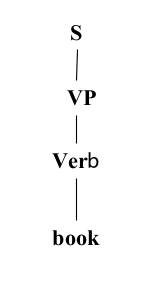

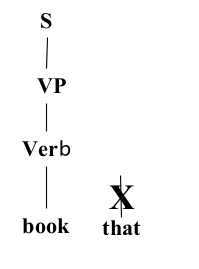

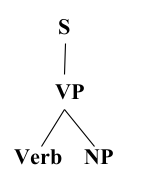

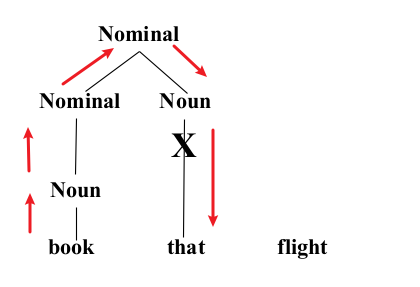

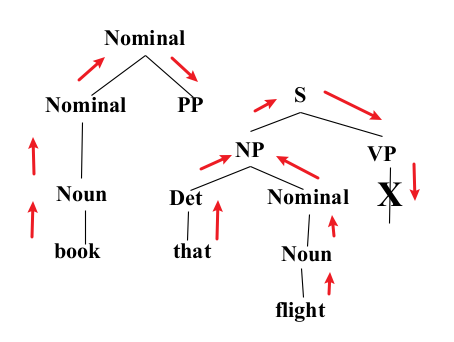

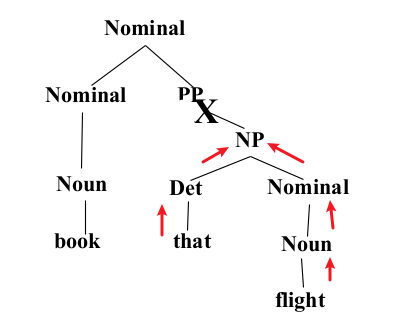

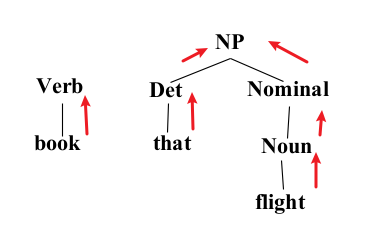

- Parsing: parse the sentence into a tree, e.g. which part is the central piece, which part is purely for description, e.g. understand the functions of different parts of a text. (helpful for summarization)

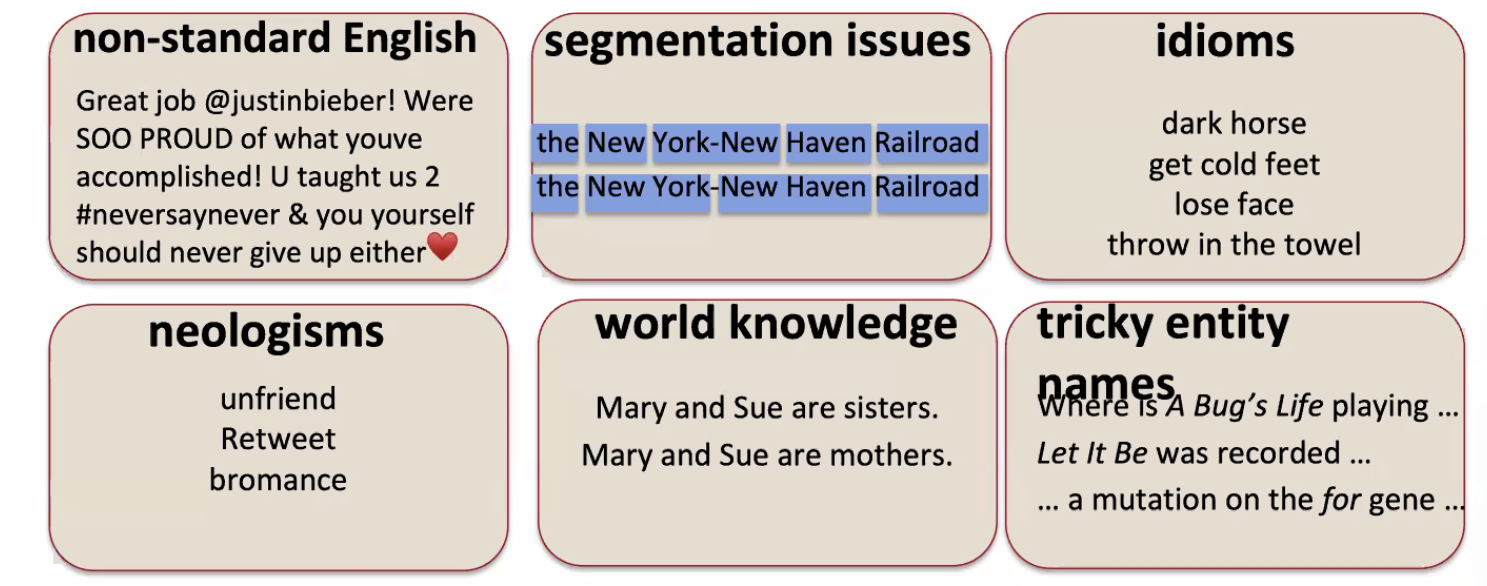

Difficulties in NLP

where:

- use of Emoji, internet slangs

- segmentation of a sentence to break them into parts

- idioms: they have deeper/cultural meaning that they superficially mean

- tricky entity names: “Let it Be” is the name of a song. “A bug’s life” is name of a show

Overall Syllabus

- We will start with theories and statistical NLP

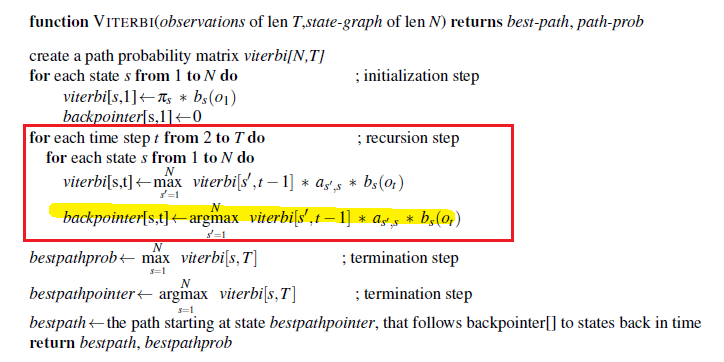

- Viterbi

- N-gram language modeling

- statistical parsing

- Inverted index, tf-id

- Programming and Implementations

- using Python

Basic Text Processing

Before almost any natural language processing of a text, the text has to be normalized. At least three tasks are commonly applied as part of any normalization process:

- Tokenizing (segmenting) words

- Normalizing word formats

- Segmenting sentences

Most preprocessing task in NLP involve a set of tasks collectively called text normalization

- Normalizing text means converting it to a more convenient, standard form. For example, most of what we are going to do with language relies on first separating out or tokenizing words, lemmatization (e.g. sang, sung, and sings are all form sing), stemming, sentence segmentation, and etc.

Some other nomenclatures:

- corpus (plural corpora), a computer-readable corpora collection of text or speech

- e.g. the Brown corpus is a million-word collection of samples from 500 written English texts from different genres

- utterance is the spoken correlate of a sentence: “I do uh main- mainly business data processing”

- This utterance has two kinds of disfluencies. The broken-off word main- is fragment called a fragment. Words like uh and um are called fillers or filled pauses.

- Should filled pause we consider these to be words? Again, it depends on the application.

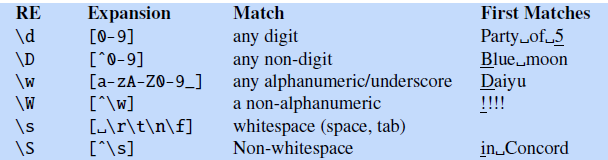

Regular Expressions

Some common usages building regular expressions during preprocessing/extracting some words:

Basics:

| Pattern | Matches | Note |

|---|---|---|

[wW]oodchuck |

Woodchuck, woodchuck | Disjunction [wW] |

[1-9] |

any single digit | Ranges: [1-9] |

[^A-Z] |

Not an upper case letter | ^ only means negation when used with [] |

[^Ss], [^e^] |

Not S or s, e or ^ |

e.g. a^b means actually matching a^b |

a|b|c |

same as [abc] |

More disjunction |

Regular expressions with ?/*/+/.

| Pattern | Matches | Note |

|---|---|---|

colou?r |

color, colour | previous character would be optional |

oo*h |

previous charterer would have 0 or more occurrence | |

o+h! |

1 or more occurrence | |

baa+ |

||

beg.n |

begin, began, begun |

Anchors ^ and $

| Pattern | Matches | Note |

|---|---|---|

^[A-Z] |

Palo | the first character must be [A-Z] |

^[^A-Z] |

1Hello | the first character must NOT be [A-Z] |

\.$ |

Hello. | the last character must be . |

note that we used escape character as otherwise . means any symbol.

More operators

For Example:

Finding all instance of the word “the” in a given text, assuming the texts are “clean”:

Solution:

Using:

the: misses capitalizedTheoccurrence[tT]he: also matchedother[^a-zA-Z][tT]he[^a-zA-Z]: works, but would miss theTheat the beginning of document(^|[^a-zA-Z])[tT]he([^a-zA-Z]|$): final version

Note

The process we just went through was based on fixing two kinds of errors

- Matching strings that we should not have matched (there, then, other)

- False positives (Type I)

- accuracy or precision (minimizing false positives)

- Not matching things that we should have matched (The)

- False negatives (Type II)

- Increasing coverage or recall (minimizing false negatives)

Simple Models

We can use Regular Expression as a simple model:

- whether if certain words exist in a paragraph -> classify

1(e.g. if a positive word exist, positive sentiment) - Sophisticated sequences of regular expressions are often the first model for any text processing text

More complicated tasks:

- Use regular expression for processing -> use machine learning models

Word Tokenization

Every NLP task needs to do text tokenization:

Tokenization is essentially splitting a phrase, sentence, paragraph, or an entire text document into smaller units, such as individual words or terms. Each of these smaller units are called tokens.

- e.g. “New York” and “rock’ n’ roll” are sometimes treated as large words despite the fact that they contain spaces

- punctuation will be included/treated as a separate token from words

Some problems that makes this task invovled:

- dealing with URLs

- dealing with numbers such as $555,500.0$, where punctuations are involved

- converting words such as “what’re” to “what are”

- depending on application, tokenize “New York” to a single word

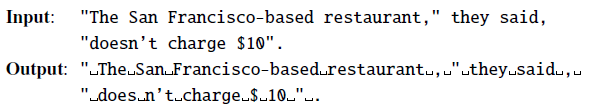

In practice, tokenization runs before any other processing, and needs to be fast. For example, converting:

therefore:

-

often implemented using deterministic algorithms based on regular expressions compiled into very efficient finite state automata.

-

programming example:

>>> text = 'That U.S.A. poster-print costs $12.40...' >>> pattern = r'''(?x) # set flag to allow verbose regexps ... ([A-Z]\.)+ # abbreviations, e.g. U.S.A. ... | \w+(-\w+)* # words with optional internal hyphens ... | \$?\d+(\.\d+)?%? # currency and percentages, e.g.$12.40, 82% ... | \.\.\. # ellipsis ... | [][.,;"'?():-_`] # these are separate tokens; includes ], [ ... ''' >>> nltk.regexp_tokenize(text, pattern) ['That', 'U.S.A.', 'poster-print', 'costs', '$12.40', '...']

Another common task is to compute the length of a sentence. While this sounds as just counting the tokenization, depending on application, you might need to think of whether if you should count on lemma or wordform:

\[\text{Seuss's \textbf{cat} in the hat is different from other \textbf{cats}!}\]so here notice that:

- A lemma is a set of lexical forms having the same stem, the same major part-of-speech, and the same word sense

- both $\text{cat}, \text{cats}$ would be count as only 1

- A word form is the full inflected or derived form of the word

- $\text{cat}, \text{cats}$ would count as 2 word forms

- i.e. how many “lemma” vs “wordform”

Types and Tokens

\[\text{They picnicked by the pool, then lay back on the grass and looked at the stars.}\]for instance:

- Types are the number of distinct words in a corpus; if the set of words in the vocabulary is $V$, the number of types is the word token vocabulary size $\vert V\vert$.

- When we speak about the number of words in the language, we are generally referring to word types.

- Tokens are the total number $N$ of running words.

- If we ignore punctuation, the above Brown sentence has 16 tokens and 14 types:

- each type may be exemplified by multiple tokens

Issues

Other than some common issues/choices you need to make in tokenization, such as:

- whether or not you should expand

what'retowhat are - should we remove

-fromHewlett-Packard? - what should we do with

PhD.? Should we treat it as the full acronym?

Other difficulties arises as well:

-

Issues in Languages.

For instance in German, the word:

\[\text{Lebensversicherungsgesellschaftsangesteller} \to \text{life insurance company employee}\]is basically four words in one:

- this single word contains four things, so we need to use compound splitter to split into four

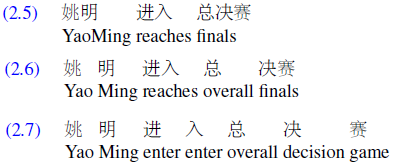

In Chinese, the sentence $\text{姚明进入总决赛}$ can be split into:

because there is no space! How do we split them into tokens?

- implemented using Greedy search (Maximum Marching), until they cannot be split further

- this works if you have a dictionary already

- therefore, word tokenization is also called word segmentation since there are no spaces in Chinese

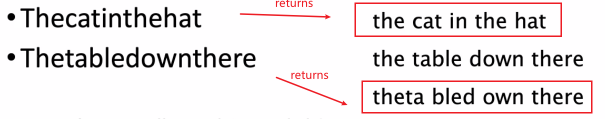

For Example: Maximum Marching

it turns out that it worked pretty well in most of the cases. For illustration purposes, we demonstrate them in English:

note that in languages such as Chinese they actually work pretty well

- but in general, modern probabilistic segmentation algorithms perform even better (baseline: Maximum Matching)

Byte-Pair Encoding for Tokenization

Another popular way of tokenization is to use the data/corpus to automatically tell us what tokens should be. NLP algorithms often learn some facts about language from one corpus (a training corpus) and then use these facts to make decisions about a separate test corpus and its language.

- however, a problem is, say we had words such as $\text{low}, \text{new}, \text{newer}$, but see $\text{lower}$ in the test corpus. What should we do?

To deal with this unknown word problem, modern tokenizers often automatically induce sets of tokens that include tokens smaller than words, called subwords.

- Subwords can be arbitrary substrings, or they can be meaning-bearing units like the morphemes, e.g. $\text{-est, -er}$

- A morpheme is the smallest meaning-bearing unit of a language; for example the word unlikeliest has the morphemes un-, likely, and -est.

- e.g. induce $\text{lower}$ from $\text{low}, \text{-er}$

In general, this is done in two steps:

- a token learner: takes a raw training corpus (sometimes roughly pre-separated into words, for example by whitespace) and induces a vocabulary of tokens that will be used

- a token segmenter, takes a raw test sentence and segments it into the tokens using the vocabulary

Three algorithms are widely used:

- byte-pair encoding (Sennrich et al., 2016),

- unigram language modeling (Kudo, 2018),

- WordPiece (Schuster and Nakajima, 2012);

there is also a SentencePiece library that includes implementations of the first two of the three

Normalization, Lemmatization, and Stemming

Word normalization is the task of putting words/tokens in a standard format, choosing a single normal form for words with multiple forms, i.e. implicitly define equivalence classes of terms

e.g. ‘USA’ and ‘US’ or ‘uh-huh’ and ‘uhhuh’, so that searching for “USA” should match result with “US” as well

therefore, useful for Information Retrieval: indexed text & query terms must have same form.

more e.g.

- Enter: window Search: window, windows

- Enter: windows Search: Windows, windows, window

Case Folding

One easy kind of normalization would be case folding: Mapping everything to lower case means that $\text{Woodchuck}$ and $\text{woodchuck}$ are represented identically/same form.

\[\text{SAIL} \iff \text{sail}\] \[\text{US} \iff \text{us}\]for different applications, we need to think about whether if this is appropriate

- helpful for generalization in many tasks, such as information retrieval and speech recognition (uses tend to use lower case more often)

- For sentiment analysis and other text classification tasks, information extraction, and machine translation, by contrast, case can be quite helpful and case folding is generally not done.

Lemmatization

Lemmatization: task of determining that two words have the same root, despite their surface differences

- e.g. the words sang, sung, and sings are forms of the verb sing.

- a lemmatizer maps from all of these to sing.

For morphologically complex languages like Arabic, we often need to deal with lemmatization

An example when this is useful would be: in web search, someone may type the string “woodchucks” but a useful system might want to also return pages that mention “woodchuck” with no “s”.

- i.e. words with same lemma should be considered as the “same”/a match

More examples include:

\[\{\text{car, cars, car's, cars'}\} \to \text{car}\] \[\text{He is reading detective stories} \to \text{He be read detective story}\]where:

-

another helpful case for this would be doing Machine Translation (MT)

-

how is this done? The most sophisticated methods for lemmatization involve complete morphological parsing of the word.

Morphology is the study of morpheme the way words are built up from smaller meaning-bearing units called morphemes.

Two broad classes of morphemes can be distinguished:

- stems — the central morpheme of the word, supplying the main meaning

- affix — adding “additional” meanings of various kinds.

For example, the word $\text{fox}$ consists of one morpheme (the morpheme fox) and the word $\text{cats}$ consists of two: the morpheme $\text{cat}$ and the morpheme $\text{-s}$.

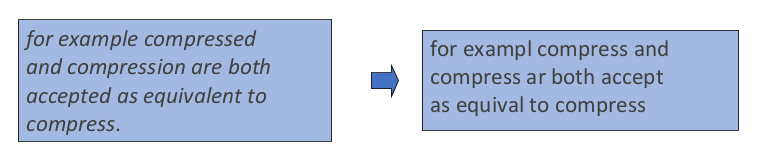

Stemming

Lemmatization algorithms can be complex. For this reason we sometimes make use of a simpler but cruder method, which mainly consists of chopping off word-final stemming affixes. This naive version of morphological analysis is called stemming.

Stemming refers to a simpler version of lemmatization in which we mainly just strip suffixes from the end of the word

For instance:

\[\text{automate, automatic, automation} \iff \text{automat}\]And for paragraphs:

sometimes Stemming is not very useful

- often used in information retrieval to condense/compress information

- one popular algorithm would be the Porter’s Algorithm

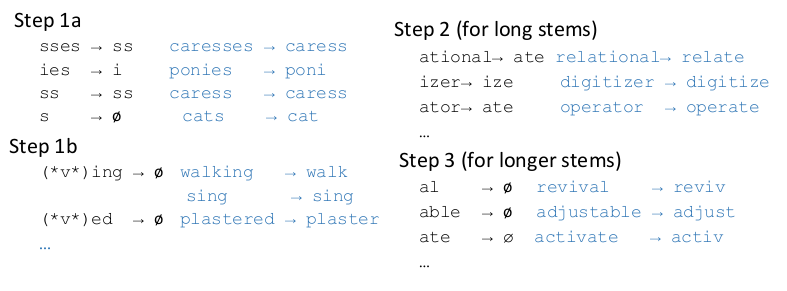

Porter’s Algorithm

In short, The algorithm is based on series of rewrite rules run in series, as a cascade, in which the output of each pass is fed as input to the next pass:

where:

- step 1b: removes

ing/ed - again, works pretty well for simple texts

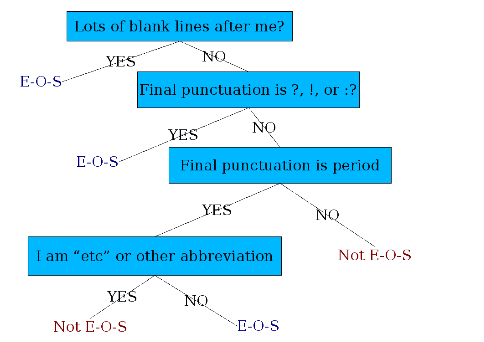

Sentence Segmentation

Another important step in preprocessing (related to tokenization) would be separating into sentences. The most useful cues for segmenting a text into sentences are punctuation, like periods, question marks, and exclamation points. However:

- Question marks and exclamation points are relatively unambiguous markers of sentence boundaries

- Periods, on the other hand, are more ambiguous.

- e.g. abbreviations such as Mr. or Inc., numbers such as $4.3$

In general, it can be solved using machine learning models or hand-written rules:

- Rule-based: Stanford CoreNLP toolkit (Manning et al., 2014),

- for example sentence splitting is rule-based, a deterministic consequence of tokenization

- hand-written rules basically hard-coded rules (

if/elif/...) or related to regular expressions

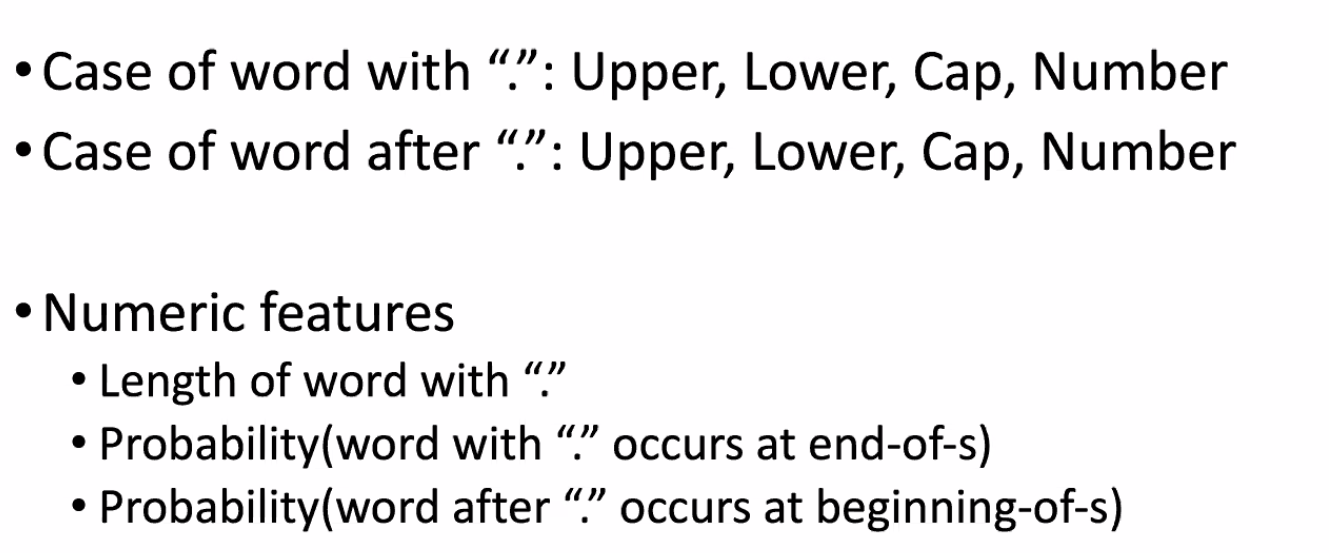

- Machine Learning: decision tree models

- Looks at a “.”

- Decides EndOfSentence/NotEndOfSentence

- Classifiers: hand-written rules, regular expressions, or machine-learning

For Example: Machine Learning model for Sentence Segmentation

Often we just use a decision tree for this

-

Construct features:

how to choose good features is the key part.

-

Run the Decision Tree. Output could look like:

Or, we can use other classifiers such as Logistic Regression, SVM, NN, etc.

Minimum Edit Distance

Much of natural language processing is concerned with measuring how similar two strings are.

- e.g. popping up autocompletion

Edit distance gives us a way to quantify both of these intuitions about string similarity.

- e.g. $\text{graffe}$ with $\text{giraffe}$

Minimum edit distance between two strings is defined as the minimum number of (weighted) editing operations (operations like insertion, deletion, substitution) needed to transform one string into another.

- e.g. $\text{graffe}$ to $\text{giraffe}$ would require one

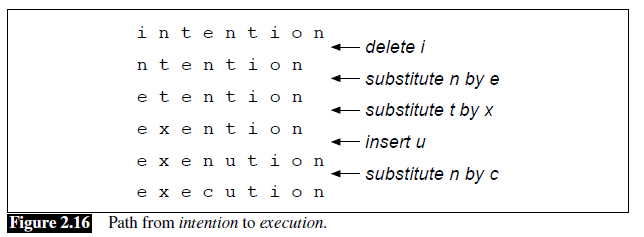

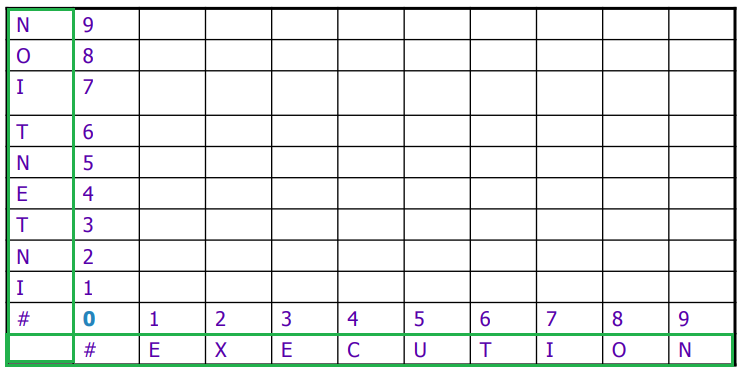

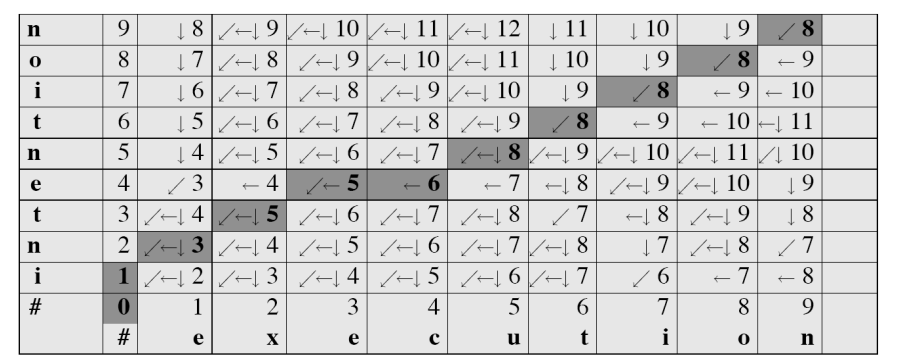

- e.g. $\text{intention}$ and $\text{execution}$, for example, is $5$ (without weighting)

- (delete an $i$, substitute $e$ for $n$, substitute $x$ for $t$, insert $c$, substitute $u$ for $n$)

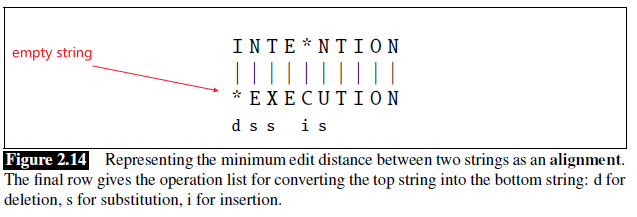

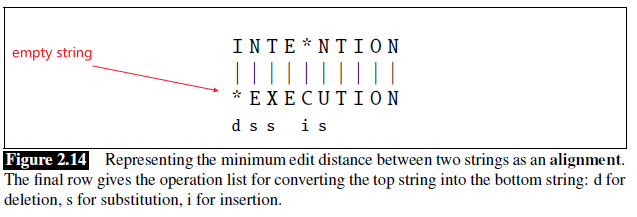

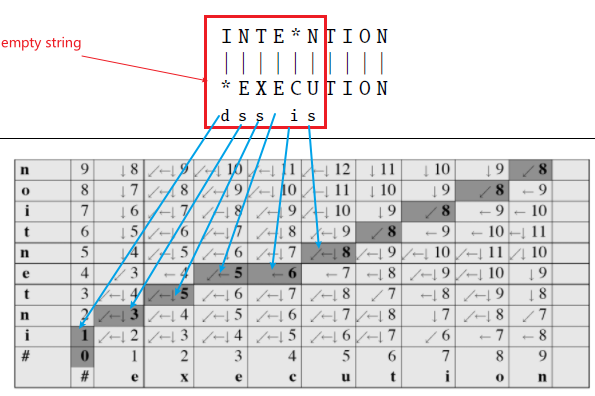

But the problem is, how do we algorithmically do it. First, we may want to visualize the result:

here we are:

- given an alignment: a correspondence between substrings of the two sequences.

- $I$ aligns with the empty string, $N$ with $E$, and so on

- below the aligned string is operation list for converting the top string into the bottom string: $d$ for deletion, $s$ for substitution, $i$ for insertion

- therefore, the minimum edit distance is $5$ because this is already the best alignment

Additionally:

- We can also assign a particular cost or weight to each of these operations. The Levenshtein distance between two sequences is the simplest weighting factor in which each of the three operations has a cost of $1$

- An alternative version of his metric in which each insertion or deletion has a cost of $1$ and substitutions are not allowed.

- This is equivalent to allowing substitution, but giving each substitution a cost of $2$ since any substitution can be represented by one insertion and one deletion

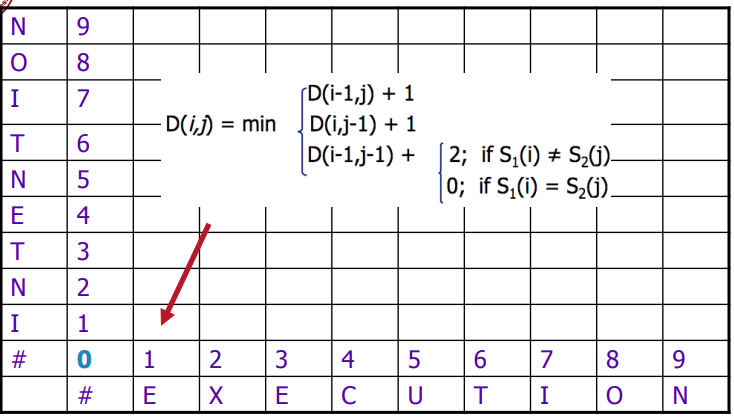

Minimum Edit Distance Algorithm

Intuition

Our final aim is to produce something like this

Imagine some string (perhaps it is $\text{exention}$) that is in this optimal path (whatever it is).

- if $\text{exention}$ is in the optimal operation list, then the optimal sequence must also include the optimal path from $\text{intention }$ to $\text{exention}$.

- all the other path we can forget

Then, iteratively compute it:

- from $\text{intention}$ to $\text{*}$ (empty string, base case)

- from $\text{intention}$ to $\text{e}$

- from $\text{intention}$ to $\text{ex}$

- from $\text{intention}$ to $\text{exe}$

- etc

- from $\text{intention}$ to $\text{execution}$

In reality since you can also start from $\text{execution}$, it would be a 2D matrix

Basically this is solved by dynamic programming, which are solutions that apply a table-driven method to solve problems by combining solutions to sub-problems

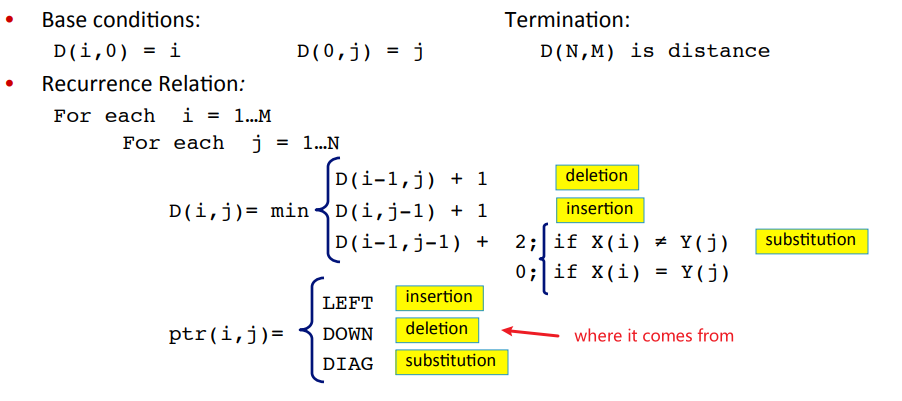

Before showing the main algorithm, we use the notation that:

- $*$ is an empty string

- $X,Y$ are inputs of length $n,m$

- $X[1..i],Y[1..j]$ are the first $i$ characters of $X$, and first $j$ characters of $Y$

- we will be filling up a matrix, call it $D$. Our result is basically $D[n,m]$

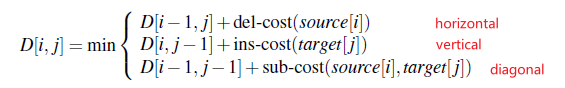

Then, the update rule is simply

i.e. choosing the best path given the previous bests, which are $D[i-1,j], D[i,j-1], D[i-1,j-1]$.

- we used $\text{sub-cost}$ for substitution cost so that user can specify the weights.

- here, we will use $\text{sub-cost}=2$, $\text{del-cost}=\text{ins-cost}=1$

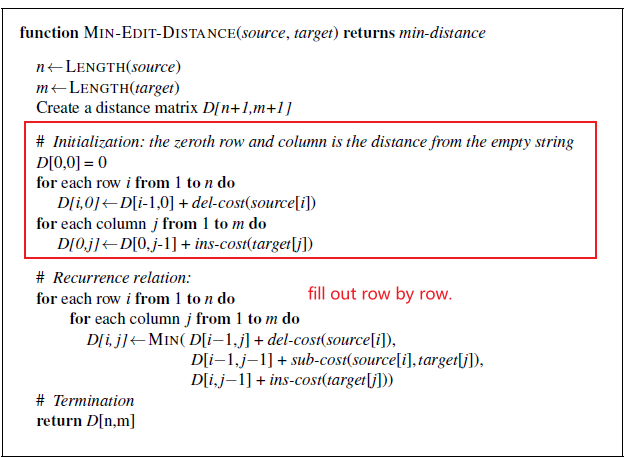

Therefore, the algorithm is:

Therefore, it can be easily seen that:

- Space Complexity: $O(nm)$

- Time complexity: $O(nm)$

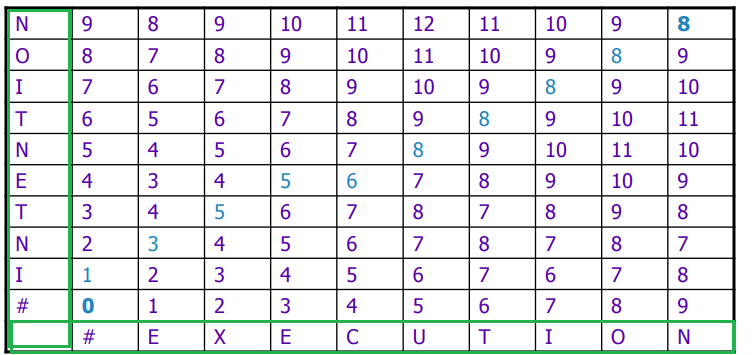

For Example:

-

First we initialize

-

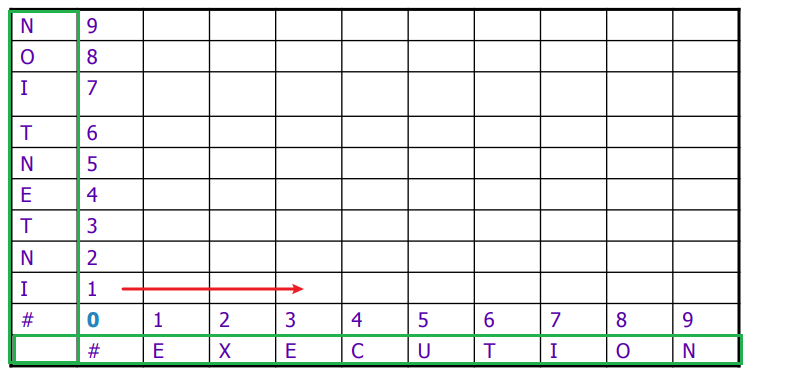

Then, we fill it up row by row

which works since for each computation, the 3 inputs $D[i-1,j], D[i,j-1], D[i-1,j-1]$ would have already been computed.

- we could also go column by column, from bottom up

-

fill up and return the top right

Note that, the optimal path at each stage is outlined here (done by backtracking)

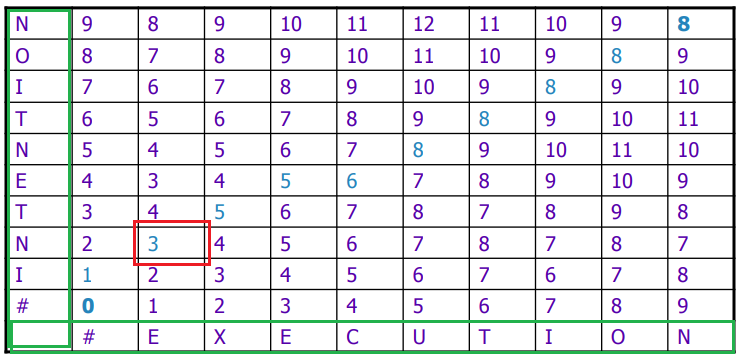

The value $3$ here would mean the minimal cost to go from $\text{IN}$ to $\text{E}$. We can follow the path:

- cost $0$ from start

- cost $1$ to go from $I \to *$

- delete $I$

- cost $3$ to go from $\text{IN} \to \text{E}$

- replace $N \to E$

Alignment

Recall that an alignment basically is the optimal path for edit distance:

Therefore, since it is just the optimal path, we only need to store, for each $D[i,j]$. where did the best previous path come from:

since technically a cell $D[i,j]$ could be optimally reached from several path, the result is NOT unique

- for programming, we usually just pick the first in the list

- when done, to perform the backtrace, i.e. draw the optimal path from the table, we need $O(n+m)$

The graphical result looks like:

For Example:

Consider how this part comes about from our optimal path

Language Models

The task we are considering is predicting the next few words someone is going to say? What word, for example, is likely to follow

\[\text{Please turn your homework ...}\]In the following sections we will formalize this intuition by introducing models that assign a probability to each possible next word. The same models will also serve to assign a probability to an entire sentence. So that:

\[P(\text{all of a sudden I notice three guys}) > P(\text{guys all I of notice three a sudden})\]This would be useful in:

-

identify words in noisy, ambiguous input, like speech recognition.

- For writing tools like spelling correction or grammatical error correction

- $\text{Their are two midterms}$, in which $\text{There}$ was mistyped as $\text{Their}$

- Assigning probabilities to sequences of words (sentences) is also essential in machine translation.

Models that assign probabilities to sequences of words are called language model, or LMs.

In this chapter we introduce the simplest model that assigns probabilties to sentences and sequences of words, the n-gram.

An n-gram is a sequence of $n$ words:

- a $2$-gram (which we’ll call bigram) is a two-word sequence of words like $\text{please turn}$, or $\text{turn your}$

- a 3-gram (a trigram) is a three-word sequence of words like $\text{please turn your}$, or $\text{turn your homework}$.

We will see how n-gram models to estimate the probability of the last word of an n-gram given the previous words, and also to assign probabilities to entire sequences.

N-Grams

Essentially, we would be interested in computing:

\[P(W_1, W_2, ..., W_n)\]for assigning probability to a given sequence of words.

- note that this is a joint probability, which means order matters. e,g. $P(X_1 = 1,X_2 =0) \neq P(X_1=0,X_2=1)$

For predicting next word:

\[P(W_5| W_1, W_2, ..., W_4)\]Language model, again, needs to be able to compute those probabilities.

The question is how? If we naively want to estimate:

\[P(X_1, ...,X_n) \approx \frac{\text{Count}(X_1,...X_n)}{\text{All Sentences}}\]The problem is that combination of $X_1,…,X_n$ might not even be there for $n$ being large.

- doesn’t appear in training set (usually just a large corpus) doesn’t mean chance of occurring is $0$

- need Laplace Smoothing, as you shall see soon

We know that a joint:

\[\begin{align*} P(X_1,...,X_n) &= P(X_1)P(X_2|X_1)P(X_3|X_1,X_2)...P(X_n|X_1,...X_{n-1})\\ &= P(X_1)P(X_2|X_{1})P(X_3|X_{1:2})...P(X_n|X_{1:n-1})\\ &= \prod_{i=1}^nP(X_i|X_{1:i-1}) \end{align*}\]But still, we are left we computing for $i = n$, or any large $i$:

\[P(X_i|X_1, ..., X_{k-1})= \frac{P(X_1,...,X_{k-1},X_k)}{P(X_1,...X_{k-1})}\]using law of total probability. Therefore:

\[P(X_i|X_1, ..., X_{k-1}) \approx \frac{\text{Count}(X_1,...,X_{k-1},X_k)}{\text{Count}(X_1,...X_{k-1})}\]So the same problem persist.

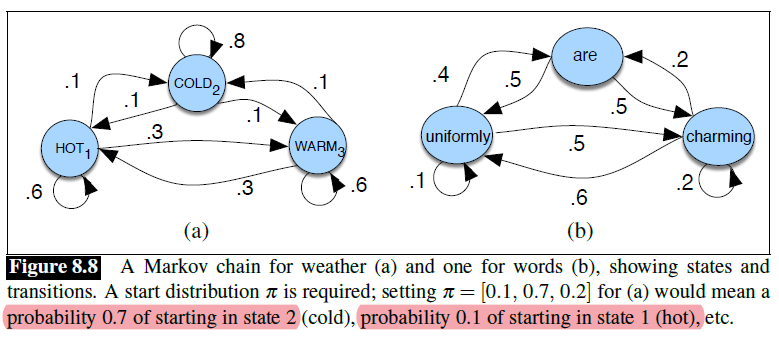

Yet we can approximate this using Markov’s Assumption

Markov models are the class of probabilistic models that assume we can predict the probability of some future unit without looking too far into the past.

thus the n-gram (which looks $n-1$ words into the past only)

e.g. $n=2$ means we only consider up to $1$ word in the past, i.e. only $P(X_i\vert X_{i-1})$

so that:

\[P(\text{the}|\text{its water is so transparent that})\to P(\text{the}|\text{that})\]

Therefore, the n-gram model basically says:

\[\begin{align*} P(X_1,...,X_m) &= \prod_{i=1}^m P(X_i|X_{i-n+1}, ..., X_{i-1}) \end{align*}\]Then to formally find out estimates for each $P(X_i\vert X_{i-n}, …, X_{i-1})$, we consider them as parameters and perform a MLE.

-

in a bigram case, where $n=2$, each parameter $P(X_i\vert X_{i-1})$ can be estimated using MLE to yield:

\[P_{MLE}(X_i | X_{i-1}) = \frac{\text{Count}(X_{i-1},X_{i})}{\text{Count}(X_i)}\]notice that the demotivator is a unigram probability

-

In general:

\[P_{MLE}(X_i | X_{i-n+1:i-1}) = \frac{\text{Count}(X_{i-n+1:i-1},X_{i})}{\text{Count}(X_{i-n+1:i-1})}\]

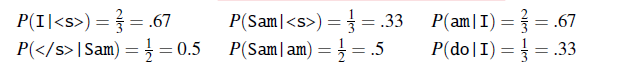

For Example

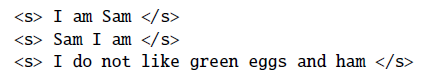

Consider our corpus being given as three sentences, the start is measured by <s> and end </s>

Suppose we are using bigram. Then we can compute some of the bigrams being

For Example:

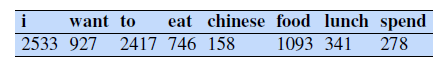

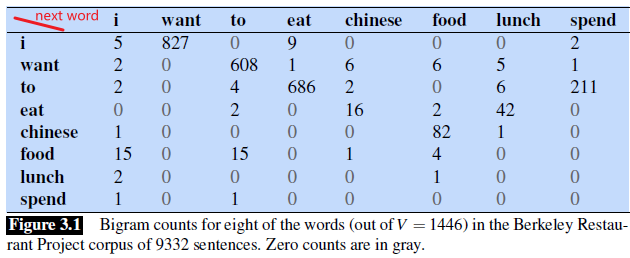

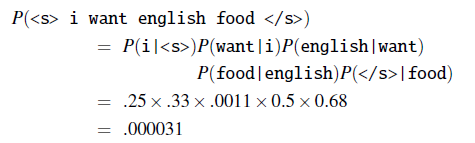

Consider using bigram, and recall that:

\[P_{MLE}(w_i | w_{i-1}) = \frac{\text{Count}(w_{i-1},w_{i})}{\text{Count}(w_i)}= \frac{\text{Count}(w_{i-1},w_{i})}{\sum \text{Count}(w_i,w_{i-1})}\]Let the unigram probabilities be given:

And the bigram counts have been filled

Then we can compute the probability of a sentence

Some practical issues

-

in practice to generate sentences that makes sense, it is more often to use trigram or 4-gram, depending on how much data you have for training

-

We always represent and compute language model probabilities in log probability, e.g. instead of $0.000031$, we use $\log (0.000031)$.

-

This is because probabilities are (by definition) less than or equal to $1$, the more probabilities we multiply together, the smaller the product becomes. Multiplying enough n-grams together would result in numerical underflow

-

$\log$ is good since it is monotonously increasing anyway

-

this could also speed up computation, because we can convert our probability output

\[\log (p_1 \times p_2 \times ... \times p_n) = \log(p_1) + \log(p_2) + ... + \log(p_n)\]becomes addition

-

Evaluating Language Models

In general there are two ways to evaluate:

- an intrinsic evaluation of a language model we need a test set

- so measures the quality of a model independent of any application

- an extrinsic evaluation: evaluate the performance of a language model by to embedding it in an application and measure how much the application improves

- the “real metric”, but far too expensive

- e.g. put into auto-corrector, and compare how many misspelled words corrected properly

Perplexity

In practice we don’t use raw probability as our metric for evaluating language model, but a variant called perplexity

Perplexity

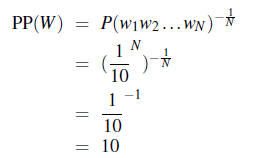

Consider a word sequence/sentence of $W_1, …, W_n$

\[PP(W_1, W_2, ..., W_n) \equiv PP(W)\]Then:

\[\begin{align*} PP(W) &= P(W_1,W_2, ..., W_n)^{-1/n}\\ &= \sqrt[n]{\frac{1}{P(W_1,W_2, ..., W_n)}}\\ &= \sqrt[n]{\frac{1}{\prod_{i=1}^nP(W_i|W_{1:i-1})}} \end{align*}\]is the exact definition.

The intuition of what perplexity measures is as follows:

-

in fact:

\[\text{perplexity} = 2^{\text{entropy}}\]where:

-

$\text{entropy}$ is the average number of bits that we need to encode the information

-

so $\exp$ of the entropy is the total amount of all possible information

-

- hence, instead of just computing the probability, consider a measure of the “average of choices per word”. The higher it is, the more “choices” you have, then the more random is your language model.

- therefore, minimizing perplexity is equivalent to maximizing the test set probability according to the language model

Note

In practice, since this sequence $W$ will cross many sentence boundaries, we need to include the begin- and end-sentence markers

<s>and</s>in the probability computation.We also need to include the end-of-sentence marker

</s>(but not the beginning-of-sentence marker<s>) in the total count of word tokens $N$.

- if you think about perplexity as the “average of choices per word”, then it makes sense that we do not consider

<s>since it must have always started with that

For Example

If we use a Bigram model:

\[PP(W)= \sqrt[n]{\frac{1}{\prod_{i=1}^nP(W_i|W_{i-1})}}\]For Example: Computing Perplexity

Consider two models that have trained on digits, i.e. $0\sim 9$ and know that:

- $P(W_i)=1/10$ is constant

- $P(W_i)=1/90$ but $P(W=0)=9/10$

Then if we see a text with $N$ words that look like:

\[\text{0 0 0 3 0 0 3 0 ....}\]then:

-

the perplexity of the first model:

where we are ignoring the start

<s>and end</s>for now -

the perplexity of the second model is lower since it modelled that on average, the choice of next of next word being $0$ is high.

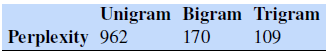

We trained unigram, bigram, and trigram grammars on 38 million words (including start-of-sentence tokens) from the Wall Street Journal, using a 19,979 word vocabulary. We then computed the perplexity of each of these models on a test set of 1.5 million words.

The table below shows the perplexity of a 1.5 million word WSJ test set according to each of these grammars

so basically Trigram is doing the best here.

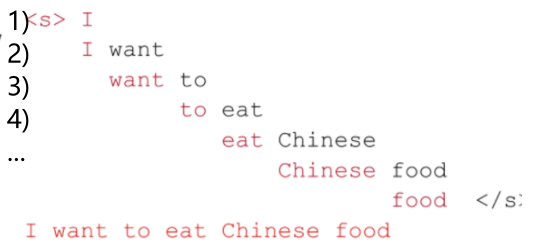

Sampling Sentence from LM

One important way to visualize what kind of knowledge a language model embodies is to sample from it.

- Sampling from a distribution means to choose random points according to their likelihood

- since our distribution basically are $P(w_i\vert w_{i-k+1:i-1})$, we treat this as a distribution and randomly sample from it

For example, suppose we have a Bigram Model. Using Shannon Visualization Method:

where:

- consider $P(w\vert \text{

})$ as a distribution for all token $w$. **Randomly sample from it**- in this case, we got $\text{I}$

- consider $P(w\vert \text{ I})$ as a distribututoin for all token $w$. Randomly sample from it

- in this case, we got $\text{want}$

- continues until we sampled $\text{</s>}$

Notice that we did not simply pick the most probable ones. Because in the end we will be more likely to generate sentences that the model thinks have a high probability and less likely to generate sentences that the model thinks have a low probability.

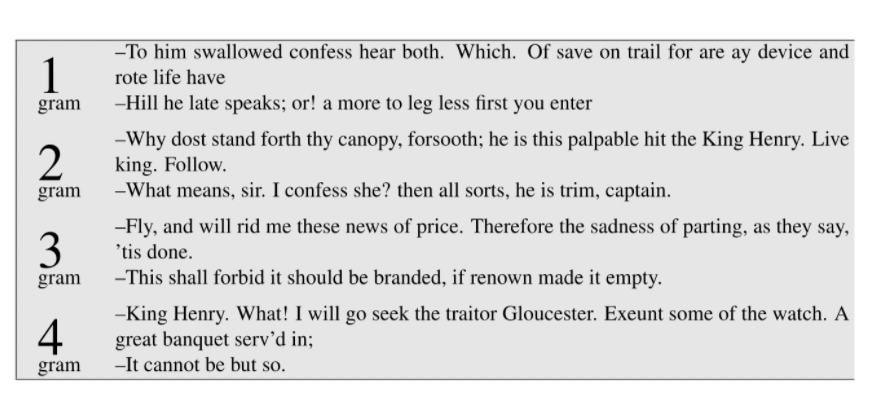

Other Examples

Then, if we you this idea to generate texts from Shakespeare (by training on Shakespeare corpus)

where the output is almost garbage. This is because:

- we are using a vocab of $V=29066$ unique tokens, with a corpus of total size $884647$ tokens.

- Even Shakespeare only produced around $300,000$ bigrams from the all possible $V^2 = 844 * 10^6$ bigrams. This means that $99.96\%$ of the possible bigrams were never seen in the training corpus (have zero entries in the table)

- so if it happens that one of the bigrams appeared in test corpus, we obvious won’t be able to get that.

So, one big problem for simple language models is their ability to generalize to unseen texts.

On the other hand, if the test and training corpus are somewhat similar:

then it seems to work.

- this is based on wall street journal corpus

Generalization and Zeros

The n-gram model, like many statistical models, has performance dependent on the training corpus.

This brings the following problems of LM:

- One implication of this is that the probabilities often encode specific facts about a given training corpus.

- e.g. if the corpus is about food, then most of the vocabs/structures will be steered towards that

- Another implication is that $n$-grams do a better and better job of modeling the training corpus as we increase the value of $N$.

- i.e. higher order $N$-gram models tends to perform better

- however, this then requires a larger training corpus or a larger storage for the probability matrix, which could go $O(V^N)$ for a corpus with $V$ vocab and $N$-gram is used.

- though this can be optimized as we are having a sparse matrix

- Models are often subject to the problem of sparsity. Because any corpus is limited, some perfectly acceptable English word sequences are bound to be missing from it.

- i.e. phrases in test dataset is never seen in train dataset. Then obviously this won’t work

- in real life, this happens

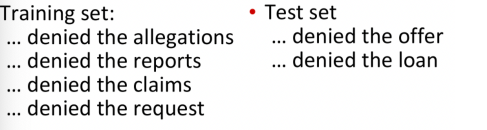

For example: Sparsity

Then:

\[P( \text{offer} | \text{ denied the}) = 0\]since it was never seen in.

- this happens very often in real life applications

- notice that this problem we assumed that we have seen all words, but haven’t seen the specific phrase (i.e. this specific combination). Another different problem is that you might not have seen the word!

Note

These zeros have two very serious consequences:

- First, their presence means we are underestimating the probability of all sorts of words that might occur, which will hurt the performance of any application we want to run on this data.

- Second, if the probability of any word in the test set is 0, the entire probability of the test set is 0.

- By definition, perplexity is based on the inverse probability of the test set. Thus if some words have zero probability, we can’t compute perplexity at all, since we can’t divide by 0!

Unknown Words

Before, we showed a problem we assumed that we have seen all words, but haven’t seen the specific phrase (i.e. this specific combination). Another different problem is that you might not have seen the word!

In a closed vocabulary system the test set can vocabulary only contain words from this lexicon, and there will be no unknown words

An open vocabulary system vocabulary is one in which we model these potential unknown words in the test set by adding a pseudo-word called

<UNK>.

- The percentage of OOV (out of vocabulary) open words that appear in the test set is called the OOV rate.

There are two common ways to train the probabilities of the unknown word model <UNK>.

- The first one is to turn the problem back into a closed vocabulary one by choosing a fixed vocabulary in advance:

- Choose a vocabulary (word list) that is fixed in advance (without looking at the training set)

- Convert in the training set any word that is not in this set (any OOV word) to the unknown word token

<UNK>in a text normalization step. - Estimate the probabilities for `

` from its counts</mark> just like any other regular word in the training set.

- Second alternative, in situations where we don’t have a prior vocabulary in advance, is to create such a vocabulary implicitly

- replace words in the training data by

<UNK>based on their frequency (i.e. threshold it).- e.g. replace by

<UNK>all words that occur fewer than $n$ times in the training set

- e.g. replace by

- proceed to train the language model as before, treating

<UNK>like a regular word.

- replace words in the training data by

Then, when testing our model, we use the probability with <UNK> when seeing an unknown word.

- i.e. use that when computing probability of seeing a text/perplexity

However, The exact choice of <UNK> model does have an effect on metrics like perplexity.

- A language model can achieve low perplexity by choosing a small vocabulary and assigning the unknown word a high probability.

- For this reason, perplexities should only be compared across language models with the same vocabularies

Smoothing

Now, we go back to the problem that vocabs are seen, but the specific phrase/combination are not seen.

Intuition

To keep a language model from assigning zero probability to these unseen events, we’ll have to shift a bit of probability mass from some more frequent events and give it to the events we’ve never seen.

- This modification is called smoothing or discounting

So for instance:

In general, there are many ways to do smoothing:

- Laplace (add-one) smoothing,

- add-k smoothing

- interpolation

- stupid backoff

- Kneser-Ney smoothing

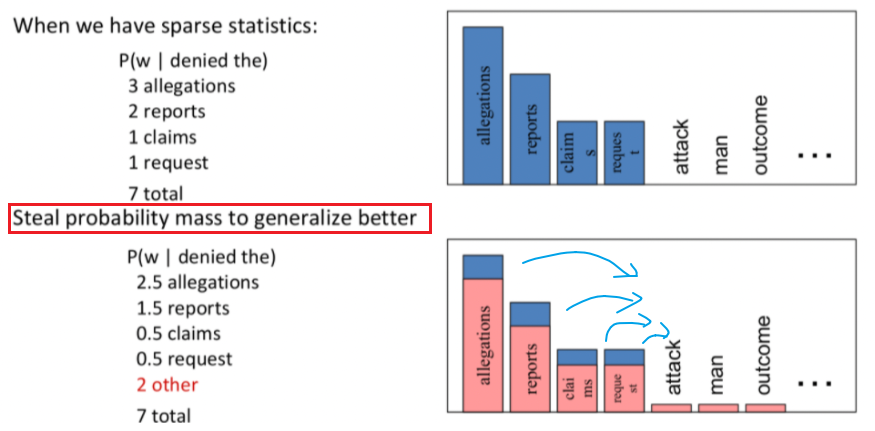

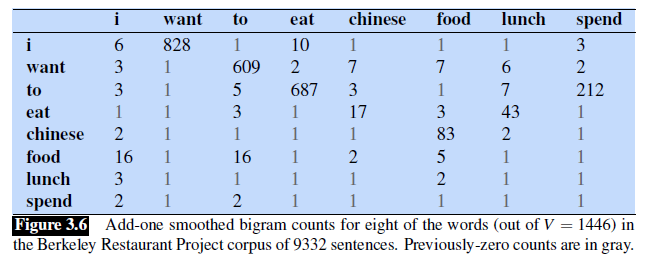

Laplace Smoothing

In reality, one simple way is to use Laplace Smoothing

Laplace Smoothing: add one to all the n-gram counts, before we normalize them into probabilities..

- does not perform well enough to be used smoothing in modern $n$-gram models

- but it usefully introduces many of the concepts that we see in other smoothing algorithms, gives a useful baseline

Let us first recall that our MLE estimate without smoothing was like (for Bigram)

\[P_{MLE}(w_i | w_{i-1}) = \frac{\text{Count}(w_{i-1},w_{i})}{\text{Count}(w_i)}= \frac{\text{Count}(w_{i-1},w_{i})}{\sum \text{Count}(w_i,w_{i-1})}\]we needed $\sum \text{Count}(w_i,w_{i-1})$ because it is $\text{Count}(w_i,w_{i-1})$, i.e. the element in our bigram frequency matrix that gets added 1.

- also notice that we don’t need gradient descent type algorithm, as this is a probabilistic model

Therefore, the Add-1 estimate is simply:

\[P_{Laplace}(w_i | w_{i-1}) = \frac{\text{Count}(w_{i-1},w_{i}) + 1}{\sum( \text{Count}(w_{i-1},w_{i}) + 1)}=\frac{\text{Count}(w_{i-1},w_{i}) + 1}{\text{Count}(w_{i-1}) + V}\]where notice that:

- $V$ is the size of vocabulary, which makes sense since $P$ needs to add up to 1

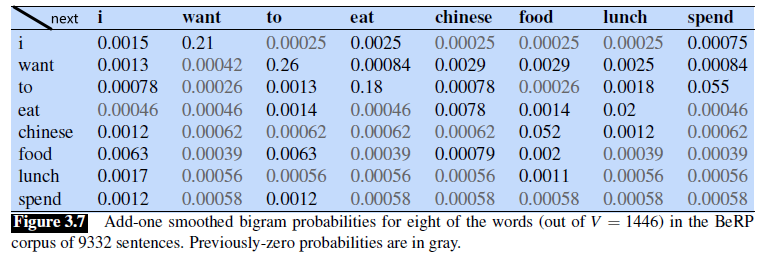

For Example:

When added smoothing the bigram frequency matrix looks like

where:

- many of the $1$ entries were $0$ before hand.

- Basically all entries are added $1$ to it

Now we can compute the conditional probability

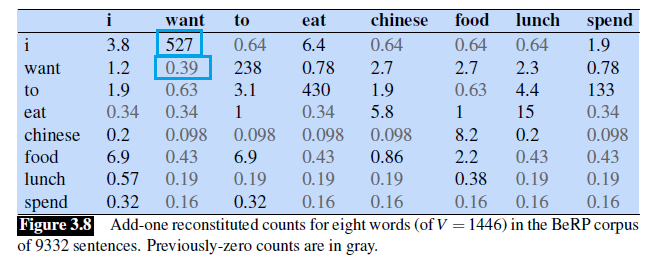

To see the smoothing effect more easily, we can reconstruct the count/frequency matrix by treating:

\[P_{ \text{Laplace}}(w_n | w_{n-1}) \equiv \frac{ \text{Count}^*(w_{n-1}w_n)}{\text{Count}(w_{n-1})} = \frac{\text{Count}(w_{i-1},w_{i}) + 1}{\text{Count}(w_{i-1}) + V}\]Then we can easily get:

\[\text{Count}^*(w_{n-1}w_n) =\frac{(\text{Count}(w_{i-1},w_{i}) + 1 ) * \text{Count}(w_{n-1})}{\text{Count}(w_{i-1}) + V}\]This means that the frequency becomes:

Note

In reality:

- add-1 isn’t used for N-grams. We’ll see better methods

- But add-1 is used to smooth other NLP models

- e.g. for text classification, or other domains where the number of zeros isn’t so huge.

Add-k Smoothing

Why add-1? Sometimes you may want to shift a bit less of the probability to unseen events, so that you might pick $k=0.5$, or $k=0.05$ for instance to:

\[P_{Laplace}(w_i | w_{i-1}) = \frac{\text{Count}(w_{i-1},w_{i}) + k}{\sum( \text{Count}(w_{i-1},w_{i}) + k)}=\frac{\text{Count}(w_{i-1},w_{i}) + k}{\text{Count}(w_{i-1}) + kV}\]where:

- now we may want to know what $k$ is the most optimal. This can be done, for example, by optimizing on a devset.

Backoff and Interpolation

Again, recall that the problem is no examples of a particular trigram (for example), $w_{n-2}w_{n-1}w_{n}$ is seen such that:

\[P(w_n|w_{n-2}w_{n-1}) = 0\]However, we can maybe just rely on/estimate its probability by using the bigram probability $P(w_n \vert w_{n-1})$.

- or basically any lower order N-gram model

- in other words, sometimes using less context is a good thing

Therefore, the idea is then:

In backoff, we use the trigram (e.g.) if the evidence is sufficient, otherwise we use the bigram, otherwise the unigram.

- “back off” to a lower-order n-gram if we have zero evidence

In interpolation, we always mix the probability estimates from all the n-gram estimators

- e.g. produced a weighting and combining the trigram, bigram, and unigram counts

- this usually works better than backoff

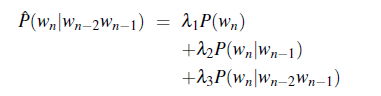

For Example: Simple Linear Interpolation

where:

- $\sum \lambda_i = 1$, otherwise we can choose the weighting

- but we can do better as choosing which one to weight more by context

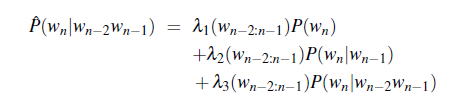

For Example: More sophisticated Linear Interpolation

If we have particularly accurate counts for a particular bigram, we assume that the counts of the trigrams based on this bigram will be more trustworthy, so we can make the ls for those trigrams higher.

- i.e. $\lambda$s differ based on which bigram $w_{n-2}w_{n-1}$ we are conditioning on

Then, the parameter $\lambda$ will be huge:

- in general, both the simple interpolation and conditional are learned/optimized from a held-out corpus.

- A held-out corpus is an additional training corpus, so-called because we hold it out from the training data, that we use to set hyperparameters like these l values.

- There are various ways to find this optimal set of $\lambda$ s. One way is to use the EM algorithm, an iterative learning algorithm that converges on locally optimal $\lambda$s

Example: Katz backoff

In the end, we want to produce a probability distribution

\[P(w_n | w_{n-N+1:n-1})\]if we naively just do:

\[P(w_n | w_{n-N+1:n-1}) = \begin{cases} P(w_n | w_{n-N+1:n-1}), & \text{if Count}(w_{n-N+1:n}) > 0\\ P(w_n | w_{n-N+2:n-1}), & \text{otherwise} \end{cases}\]we notice that this will exceed $1$ since just summing up over $P(w_n \vert w_{n-N+1:n-1})$ already reaches $1$.

Therefore, we need to:

- discount some probability mass from $P(w_n \vert w_{n-N+1:n-1})$

- for lower-order $n$-gram, we need a factor $\alpha$ to distribute it such that everything adds up to 1

where:

- we recursively back off to the Katz probability for the shorter-history ($N-1$)-gram if count is zero.

- $P^*$ is basically discounted $P$ but also sometimes with smoothing. Commonly used smoothing method here is called Good-Turing.

- The combined Good-Turing backoff algorithm involves quite detailed computation for estimating the Good-Turing smoothing and the $P^*$ and $\alpha$ values

Kneser-Ney Smoothing

One of the most commonly used and best performing n-gram smoothing methods is the interpolated Kneser-Ney algorithm, which has its root from absolute discounting

Absolute discounting is basically, say that given a $n$-gram model and count of each $n$-gram, how much should we discount it so that it is closest to the test dataset?

- Recall that discounting of the counts for frequent n-grams is necessary to save some probability mass for the smoothing algorithm

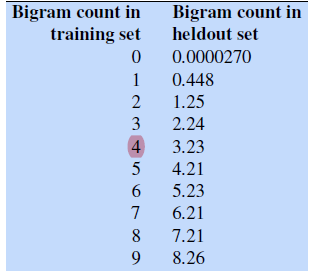

For instance, for a Bigram model, it has been found that the following are the training and heldout dataset

notice that:

- if we subtract off $0.75$ for Bigrams with count $2 \sim 9$, it seems to be a pretty close estimate

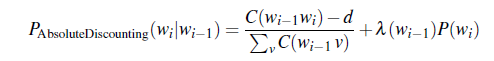

Therefore, absolute discounting for Bigram models looks like:

where:

- $d$ is the mount you discounted, e.g. $0.75$

- the second term is the unigram with an interpolation a conditional weight $\lambda$.

Kneser-Ney discounting (Kneser and Ney, 1995) augments absolute discounting with a more sophisticated way to handle the lower-order unigram distribution.

Essentially instead of blindly picking the most probable $P(w_i)$ if you don’t know what to do next, pick the $w_i$ that is likely to appear in a new/novel context

For instance, consider that you are predicting the next word of:

\[\text{I can’t see without my reading \_\_.}\]to predict the next word:

- if we basically used $P(w_i)$ for interpolation, then words like $\text{Kong}$ might appear more often as a unigram than $\text{glasses}$

- basically, if we are only to guess the next word, use the one that appears more context/has a wider distribution

- i.e. $\text{Kong}$ could have only appeared after $\text{Hong}$, but $\text{glasses}$ would have appeared after many different types of words

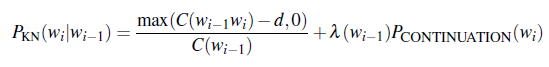

Therefore, we would be doing, for Interpolated Kneser-Ney smoothing for bigrams

where:

- the $\max$ is there since we might go negative in count if $d$ is large

- $P_\text{CONTINUATION}$ would be our approximate of how “versatile” a word/phrase would be.

In the case of Bigram, $P_{ \text{CONTINUATION}}$ is formalized as

\[P_{ \text{CONTINUATION}}(w) \propto | \{ v: C(vw) > 0 \}|\]which is basically the number of word types seen to precede $w$. Then, we need to normalize it, hence

\[P_{ \text{CONTINUATION}}(w) = \frac{ | \{ v: C(vw) > 0 \}|}{\sum_{w'} | \{ v: C(vw') > 0 \}|}\]so that:

- words such as $\text{Kong}$ would have a low continuation probability since it only appears after $\text{Hong}$.

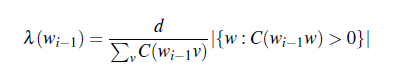

Lastly, a formula for $\lambda$ is also provided

where the first term is basically a normalizing constant

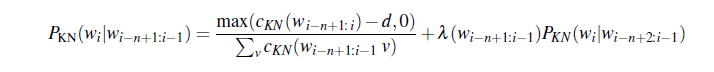

General recursive formulation:

where the definition of the count $c_{KN}$ depends on whether we are

- counting the highest-order n-gram being interpolated (for example trigram if we are interpolating trigram, bigram, and unigram)

- or one of the lower-order n-grams (bigram or unigram if we are interpolating trigram, bigram, and unigram)

where remember that continuation count is the number of unique single word contexts for $\cdot$.

Huge Web-Scale N-grams

Essentially those huge web-scale data comes from the internet, so we often refer to them as huge web-scale models as well.

Now, efficiency considerations are important when building language models that use such large sets of n-grams

- store each string as a 64-bit hash number

- Probabilities are generally quantized using only 4-8 bits

- n-grams are stored in reverse tries

Other techiniques are used to reduce the size of our model:

Pruning, for example only storing n-grams with counts greater than some threshold

- count threshold of 40 used for the Google n-gram release

- use entropy to prune less-important n-grams

Bloom filters: approximate language models

All of those are used to improve efficiency of our model when exposed to large corpus

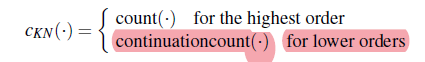

Stupid Backoff

Although with these above toolkits it is possible to build web-scale language models using full Kneser-Ney smoothing, Brants et al. (2007) show that with very large language models a much simpler algorithm may be sufficient.

- this algorithm for computing probability is called stupid backoff

Notice that all modifications of the basic N-gram language model is modifying this term:

\[P(w_i | w_{i-n+1:i-1})\]Stupid backoff basically gives up the idea of trying to make the above quantity a true probability distribution (i.e. sums up to 1).

- no discounting of the higher-order probabilities.

- simply backoff to a lower order n-gram, weighed by a fixed (context-independent) weight

where, since this is no longer a probability distribution, $S$ instead of $P$ is used:

- the upper term is essentially $P(w_i \vert w_{i-n+1:i-1})$

- the lower term essentially is a decaying weight the lower n-gram you are using

- using $\lambda = 0.4$ seems to work well

Summary

Here we mainly introduced the following techniques/modifications to the basic N-gram model:

- smoothing: takes care of the case when word/phrase is unseen

- Add-1/Laplacian Smoothing is easy

- Kneser-Ney works well

- backoff and interpolation

- use other probabilities of lower-order n-gram to fill up the gap when count is 0

- require discounting to create a probability distribution

- Kneser-Ney Smoothing: discounting + continuation probability

- dealing with large corpus

- efficiency tricks

- stupid backoff: no longer a probability distribution

Spelling Correction

A big task in real life:

- Estimates for the frequency of spelling errors in human-typed text vary from 1-2% for carefully retyping already printed text to 10-15% for web queries

The task idea is two fold:

- how do you detect spelling errors?

- how do you correct spelling errors?

- for correction, some common application uses either autocorrect, suggest a single correction, or suggest a list

- together, we call them spelling correction

The task of spelling correction has two broad categories to work with:

- non-word spelling correction

- real-word spelling correction

Non-word spelling correction is the detection and correction of spelling errors that result in non-words (like graffe for giraffe).

Then, the task involves:

- Non-word errors are detected by looking for any word not found in a dictionary. (modern systems often use enormous dictionaries derived from the web.)

- corrected by first generate candidates: real words that have a similar letter sequence to the error

Real word spelling correction is the task of detecting and correcting spelling errors even if they accidentally result in an actual real word of English.

- due to typographical errors, from insertion/deletion/transposition (e.g., there for three)

- due to cognitive errors, substituted the wrong spelling of a homophone or near-homophone (e.g., dessert for desert, or piece for peace).

Then, the task involves:

- detection is a much more difficult task, since any word in the input text could be an error

- correction will use the noisy channel to find candidates for each word $w$ typed by the user, and rank the correction that is most likely to have been the user’s original intention

- the trick is to include the word typed by the user itself in the candidate set

When correcting, both problems will involve the following component:

- rank the candidates using a distance metric (e.g. edit distance)

- but we also prefer corrections that are more frequent words, or more likely to occur in the context of the error.

- this can then be done by Noisy Channel Model

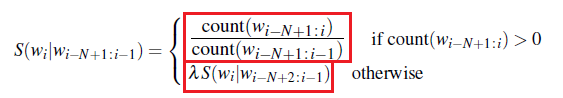

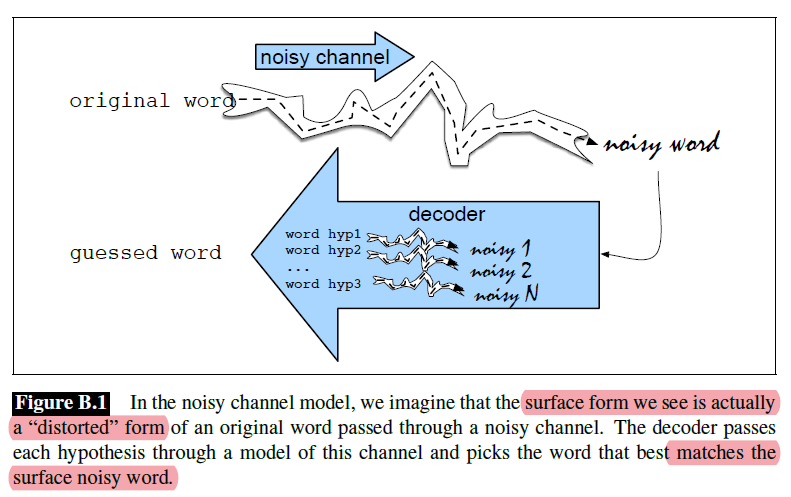

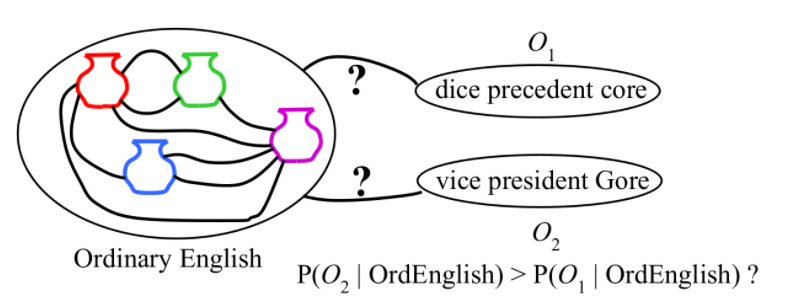

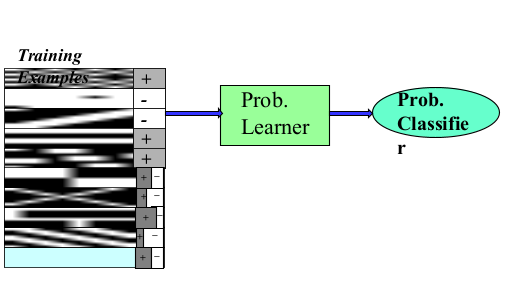

Noisy Channel

In this section we introduce the noisy channel model and show how to apply it to the task of detecting and correcting spelling errors. An image of what we need to do is the following:

basically the idea is similar to the probabilistic interpretation of logistic regression:

-

This channel introduces “noise” in the form of substitutions or other changes to the letters, making it hard to recognize the “true” word. i.e.

\[w' = w + \epsilon\]where $w$ is the word we observe, $w$ is the true word, $\epsilon$ is some random noise we want to model

-

we need to find the word $w$ that generated this misspelled word $w’$.

Therefore, the idea is clear:

- model this noisy channel $\epsilon$

- pass every word of the language through our model of the noisy channel

- pick the $w$ that comes closest to observed word $w’$

Then, we essentially consider the user input $x$ which is misspelled, but $w$ is the real intention so that:

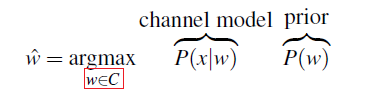

\[x \equiv w' = w + \epsilon\]We want to find out $\hat{w}$ that is most probably generated $x$:

\[\begin{align*} \hat{w} &= \arg\max_{w \in V} P(w|x)\\ &= \arg\max_{w \in V} \frac{P(x | w)P(w)}{P(x)}\\ &= \arg\max_{w \in V} P(x | w)P(w) \end{align*}\]then

- $P(x\vert w)$ represents the probability a word $w$ generated misspelled $x$ from this noisy channel

- this would be approximated from the training corpus (which contains both a misspelled version and the correct version)

- prior probability of a hidden word is modeled by $P(w)$. This represents the probability of seeing the word $w$ at that location/in place of $x$. This hints at us to use Language models such as Bigram, Trigram, etc.

Now, sometimes instead of going over the full dictionary $V$, we can

- generating a list of candidate words from $x$, the user input (e.g. misspelled)

- use them instead of $V$

Hence the noisy channel model is:

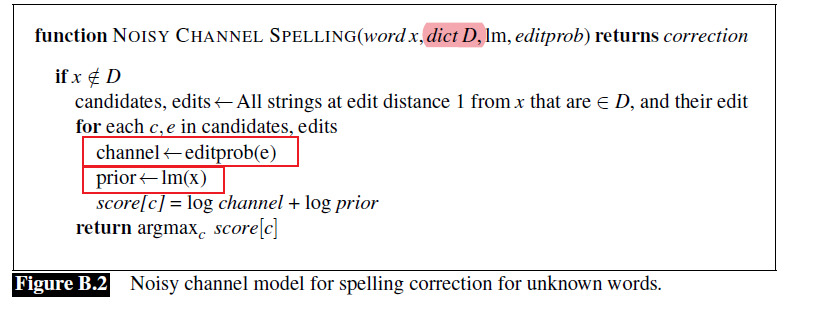

Then the overall algorithm is this:

where:

- the most important yet haven’t discussed part is how to estimate $P(x\vert w)$, nor $P(w)$ which potentially could depend on many factors such as word context, keyboard position, etc

- prior in general is computed using language model, such as unigram to trigram or 4-gram.

- Analysis of spelling error data has shown that the majority of spelling errors consist of a single-letter change and so we often make the simplifying assumption that these candidates have an edit distance of $1$ from the error word

- we use extended edit distance so that in addition to insertions, deletions, and substitutions, we’ll add a fourth type of edit, transpositions. This version is also called Damerau-Levenshtein edit distance

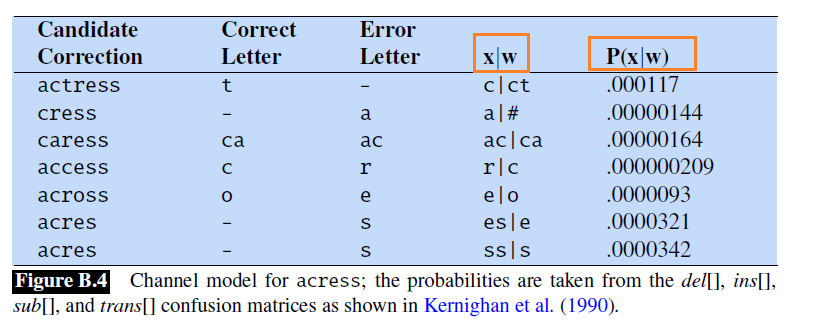

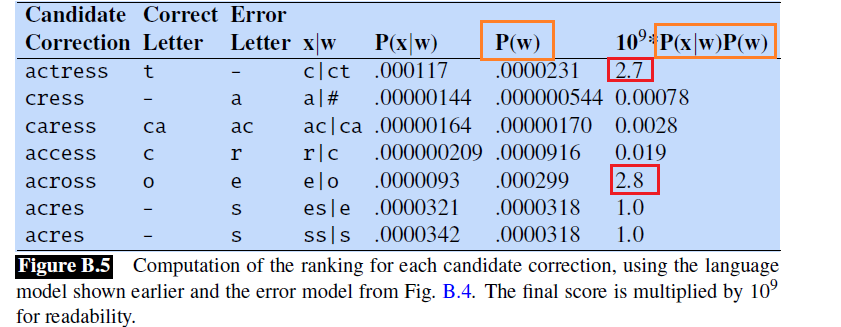

Now, we discuss how to estimate the two key probability. We will take the example of correcting the misspelled word “$\text{acress}$”

Estimating $P(x\vert w)$: Simple Model

A perfect model of the probability that a word will be mistyped would condition on all sorts of factors: who the typist was, whether the typist was lefthanded or right-handed, and so on. However, it turns out that we can do pretty well by just looking at the local context.

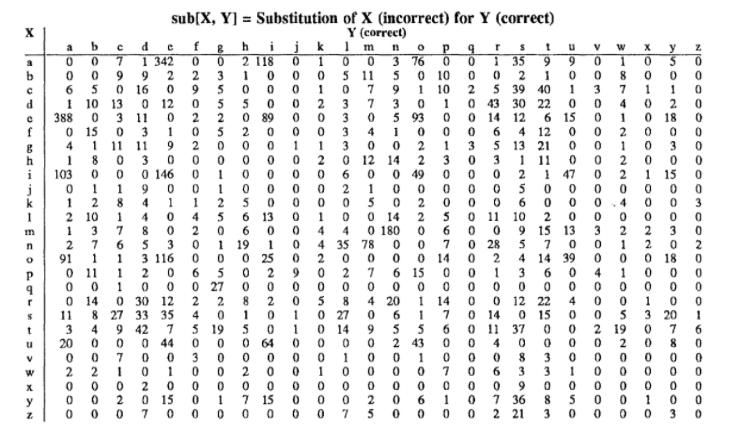

A simple model might estimate, for example, $P( \text{acress}\vert \text{across})$ just using the number of times that the letter $e$ was substituted for the letter $o$ in some large corpus of errors.

- to compute the probability for each edit we’ll need a confusion matrix that contains counts of errors.

- In general, a confusion matrix lists the matrix number of times one thing was confused with another.

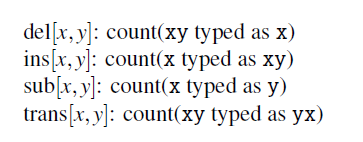

Then, since we have four operations, we get four confusion matrix, each for:

An example for substitution confusion matrix is:

(technically the above is $\text{sub}(y,x)$ by our above definition, but the order is something you can decide by yourself)

-

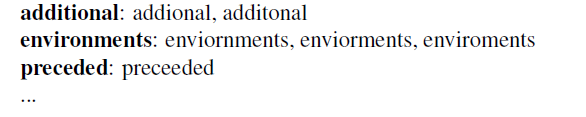

this type of data would be drawn from a list of misspellings like the following

-

There are lists available on Wikipedia and from Roger Mitton (http://www.dcs.bbk.ac.uk/~ROGER/corpora.html) and Peter Norvig (http://norvig.com/ngrams/).

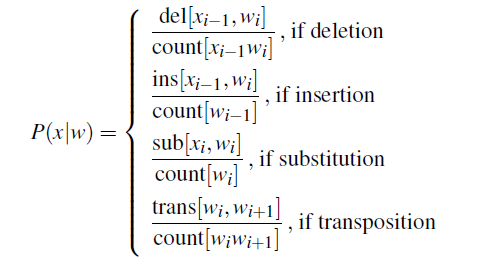

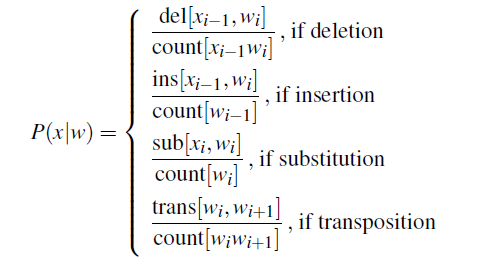

Finally, with those counts, we can estimate $P(x\vert w)$ as follows:

where:

- $w_i$ is the $i$th character of the correct word $w$, $x_i$ is the $i$th character of the misspelled word

- notice that we are assuming again a single character/operation error, as our candidates are only 1 edit-distance away

- notice that we are not caring about the context in this part!

Example computations look like

where make sure $x\vert w$ and $P(x\vert w)$ makes sense.

Estimating $P(x\vert w)$: Iterative model

An alternative approach used by Kernighan et al. (1990) is to compute the confusion matrices by iteratively using this very spelling error correction algorithm itself.

- The iterative algorithm first initializes the matrices with equal values

- e.g. any character is equally likely to be deleted, etc.

- spelling error correction algorithm is run on a set of spelling errors. Given the typos and corrections, update the confusion matrix

- repeat step 1

Basically an EM algorithm. Then, once we have the confusion matrix, use the same equation

and the rest is the same.

Estimating $P(w)$: Unigram

Now, recall that our model is:

\[\begin{align*} \hat{w} &= \arg\max_{w \in V} P(w|x)\\ &= \arg\max_{w \in V} \frac{P(x | w)P(w)}{P(x)}\\ &= \arg\max_{w \in V} P(x | w)P(w) \end{align*}\]so we still need $P(w)$.

If we choose to compute $P(w)$ as a unigram, then:

It turns out $\text{across}$ has a high value!

Unfortunately, this is the wrong choice as the writer’s intention becomes clear from the context:

\[\text{ . . was called a “stellar and versatile \textbf{acress} whose combination of sass and glamour has defined her. . . }\]The surrounding words make it clear that $\text{actress }$ was the intended word. We are missing context data from unigram + simple model for $P(x\vert w)$ based on purely alphabets.

Estimating $P(w)$: Bigram

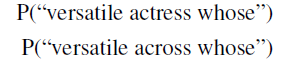

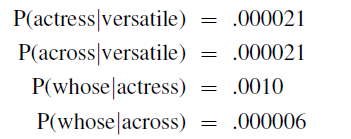

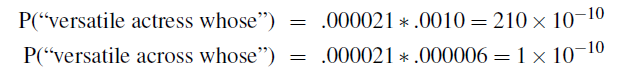

Now, with bigram, we can compute $P(\text{actress})$ and $P(\text{across})$ as:

which then can be estimated using bigram because we know the following:

Finally:

which combining the the $P(x\vert w)$ prediction, we gave out the correct result that $\text{actress}$ is more likely.

Real-Word Spelling Errors

Recall that another class of problem is that we might have mistyped but those words exist.

- The noisy channel approach can also be applied to detect and correct those errors as well! But we need a small tweak.

- A number of studies suggest that between 25% and 40% of spelling errors are valid English words

For instance:

\[\text{This used to belong to \textbf{thew} queen. They are leaving in about fifteen \textbf{minuets} to go to her house.}\]How do we use noisy channel that seems to be based on detecting error from misspelling?

Let’s begin with a version of the noisy channel model first proposed by Mays et al. (1991) to deal with these real-word spelling errors.

Idea:

takes the input sentence $X = {x1 , x_2 , …, x_n }$

Generates a large set of candidate correction sentences $C(X)$

start by generating a set of candidate words $C(x_i)$ for each input word $x_i$, e.g. within edit distance 1

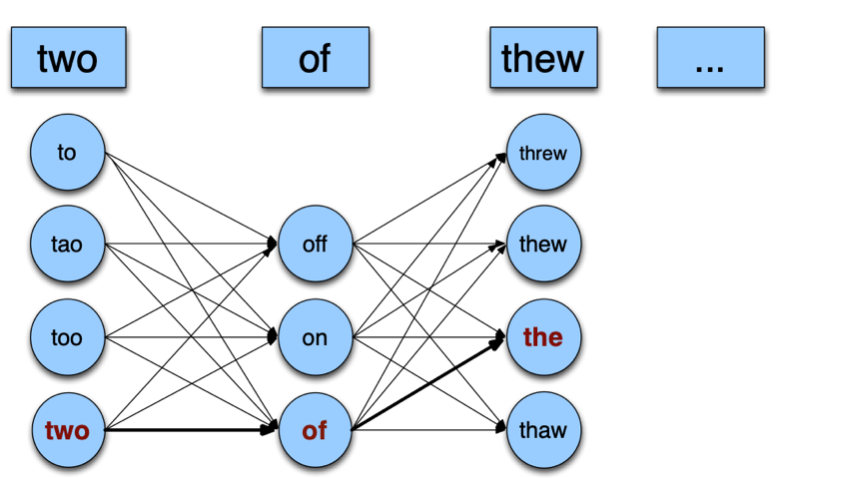

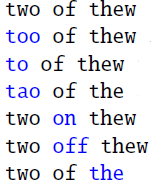

generate possible sentences from those words. For instance, if the input is $\text{two of thew}$:

where we already see a lot of possibility. To simplify, we assume that every sentence has only one error. Then we have:

picks the sentence with the highest language model probability

\[\hat{W} = \arg\max_{W \in C(X)} P(W|X)= \arg \max_{W \in C(X)} P(X|W)P(W)\]where $W$ we know are generated by us.

Now, the problem is we need to estimate:

- $P(X\vert W)$

- $P(W)$ - this can be done using a language model, essentially like we are generating sentences from it. (e.g. use a Trigram)

Now, the problem is $P(X\vert W)$. Since we assume only one word error, then we can assume:

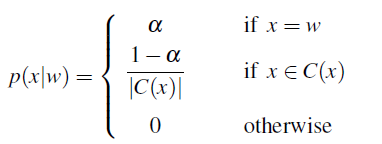

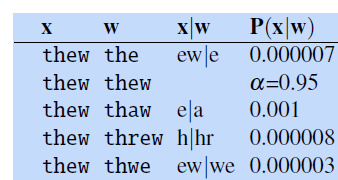

\[P(X|W) = P(x_1, ..., x_n | w_1, ..., w_n) = \prod_{i=1}^n P(x_i|w_i)\]where we assumed each $x_i\vert w_i$ pair are uncorrelated to each other. Then, if correct, i.e. $x_i = w_i$, we assumed $\alpha$ (as shown below). Hence:

\[P(X|W) = \prod_{i=1}^n P(x_i|w_i) = \alpha^{n-1}P(x_k|w_k)\]where:

- we assumed there is only one error, and the error is on $x_k$.

since context information/positional information is encoded into $P(W)$. Then, we can use the simple model that

where notice that:

- essentially $P(w\vert w)$, i.e. typed correctly, is probability $\alpha$. Often this is set to $\alpha = 0.95$

- alternatively we can make the distribution proportional to the edit probability from the more sophisticated channel model. i.e. we use $P(x\vert w)$ estimation by look at the confusion matrices.

Lastly, since constants can be dropped during $\arg\max_W$:

\[\hat{W} =\arg \max_{W \in C(X)} P(X|W)P(W) = \text{arg\,max}_{W \in C(X), k\in[1,n]} P(x_k|w_k)P(W)\]for the single wrong word being at position $k$.

For example, if we used the simple estimation for $P(x\vert w)$, then $P(X\vert W)$ essentially is:

where here we only looked at the case when we want to correct $\text{thew}$ in the sentence, which again is a real word.

- finally, don’t forget to multiply by $P(W)$ to get your final estimate for $P(W\vert X)$!

Noisy Channel Model: The State of the Art

State of the art implementations of noisy channel spelling correction make a number of extensions to the simple models we presented above. Here we go over a few

-

rather than make the assumption that the input sentence has only a single error, modern systems go through the input one word at a time, using the noisy channel to make a decision for that word.

-

we could suffer from overcorrecting, replacing correct but rare words with more frequent words

- Google solves this by using a blacklist, forbidding certain tokens (like numbers, punctuation, and single letter words) from being changed

- Instead of just choosing a candidate correction if it has a higher probability $P(w\vert x)$ than the word itself, these more careful systems choose to suggest a correction $w$ over keeping the non-correction $x$ only if the difference in probabilities is sufficiently great.

-

improve the performance of the noisy channel model by changing how the prior and the likelihood are combined

-

we might trust one of the two models more, therefore we use a weighted combination

\[\hat{w} = \arg\max_{w \in V} P(x|w)P(w)^\lambda\]for some constant $\lambda$

-

- For specific confusion sets, such as peace/piece, affect/effect, weather/whether, we can train supervised classifiers to draw on many features of the context and make a choice between the two candidates

- achieve very high accuracy for these specific set

- but it requires some handcrafted feature extraction/work

- Since many documents are typed, error by nearby keys include more weights

- for instance, used in weighing edit distance

Neural Networks in NLP

Basically the same content as ML: review Perceptron. This include:

- how the does the update rule in a single perceptron work?

- perceptron as a linear classifier

- (maybe checkout SVM)

In DL, review the chapter of Neural Network and Backpropagation.

- feed-forward network

- activation functions

- backpropagation

- this will be tested on Midterm

Architecture for Neural Language Model

Now, let’s talk about the architecture for training a neural language model

Comments on Model/Training

Comments on Training

- Many epochs (thousands) may be required, hours or days of training for large networks.

- To avoid local-minima problems, run several trials starting with different random weights (random restarts).

- Take results of trial with lowest training set error.

- Build a committee of results from multiple trials (possibly weighting votes by training set accuracy).

- Keep a hold-out validation set and test accuracy on it after every epoch.

- Stop training when overfitting. Do callbacks/epoch saves

Comments on Model/Architecture choice:

- Trained hidden units can be seen as newly constructed features that make the target concept linearly separable in

the transformed space. (e.g. for CNNs, edges, textures, etc.)

- However, the hidden layer can also become a distributed representation of the input in which each individual unit is not easily interpretable as a meaningful feature.

- Too few hidden units prevents the network from adequately fitting the data, but too many hidden units can result in over-fitting.

- Use internal cross-validation to empirically determine an optimal number of hidden units.

RNN and LSTM

For RNN:

- review DL notes, on the section of Sequence Models.

Recall

The basic problem of RNN (e.g. Deep RNN or stacked RNN) is that backpropagation involves matrices multiple times. This will cause the problem of vanishing/exploding gradient

- difficult to train a RNN

Some ideas to solve this problem:

- LTSM

- GRU

Both of which will also be able to model long term dependencies

Transformers

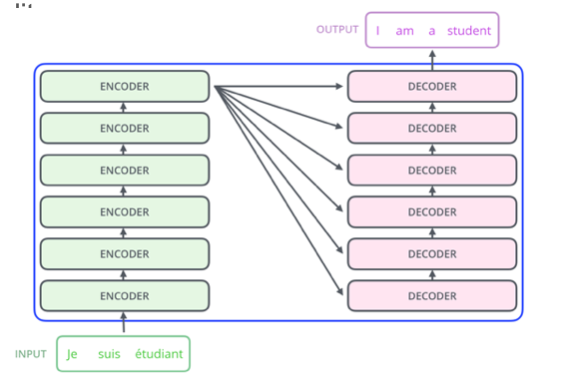

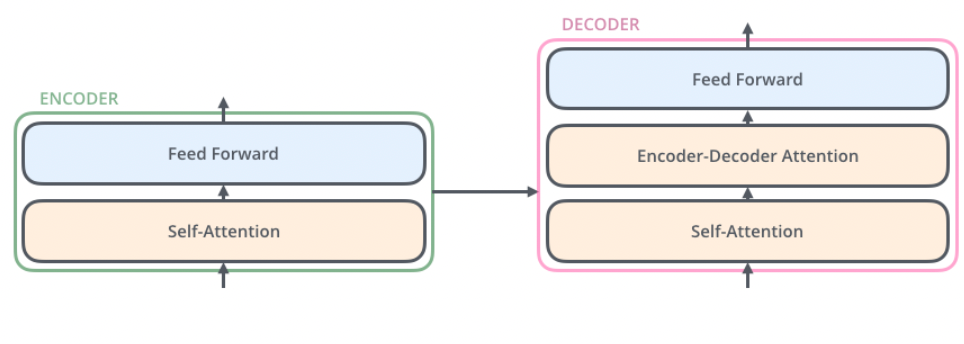

Essentially you have:

where:

- many stacked layers of encoder and stacked layers of decoder

- an encoder essentially is a transformer block

- input to the decoder only comes form the last layer

Within each encoder, essentially you will have:

where:

- feed forward layer basically is a NN that feeds forward (layers of linear + nonlinear activation)

Checkout the Transformer section in the DL notes.

Transformer Models

Essentially different models use different number of stacks for encoder/decoder/attention

- Models are larger and larger

-

More computation resources are required

- Models example: GPT, BERT, Roberta, T-5

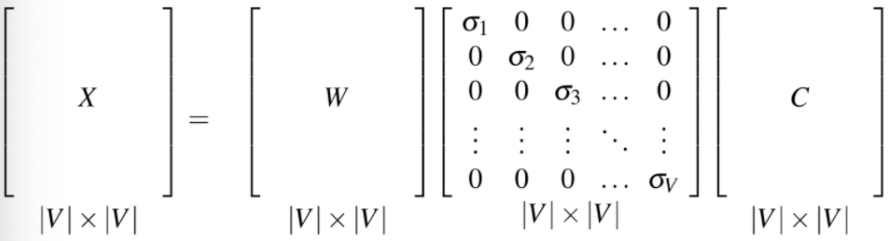

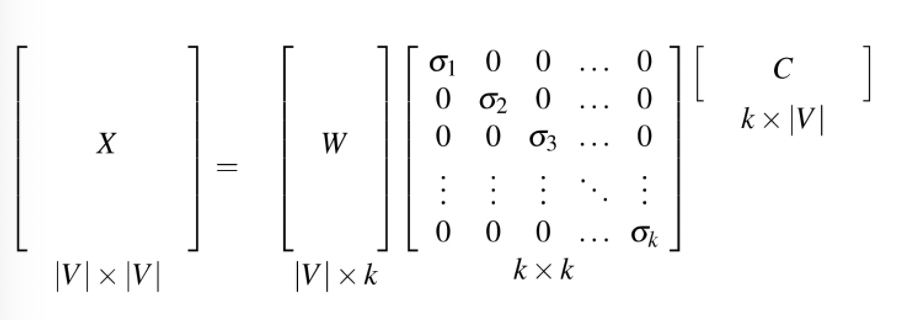

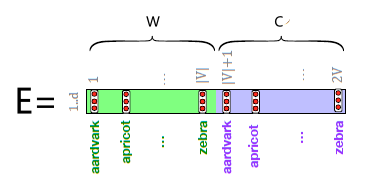

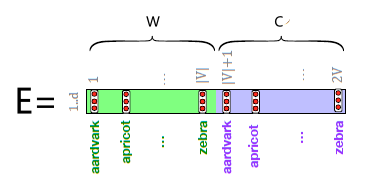

Vector Semantics and Embeddings

This chapter essentially deals with how we represent texts as vectors.

In more detail, we will use the distributional hypothesis that “words that occur in similar contexts tend to have similar meanings.” to instantiate this linguistic hypothesis, we will see this done by learning representations of the meaning of words, called embeddings. We will cover:

- static embeddings (e.g. SGNS Embedding from Word2Vec)

- contextualized embeddings (e.g. BERT)

All of those are done by self-supervised ways to learn representations of the input.

Lexical Semantics

The idea is that we want to compute similarity between words, i.e. a vector representation that achieves this.

Lexical Semantics is the study of word meanings. It includes the study of how words structure their meaning, how they act in grammar and compositionality, and the relationships between the distinct senses

First of all, recall some terms commonly used in NLP include:

- lemma, basically the “infinitive” form of words, such as “sing”

- wordforms, the specific form of words, such as “sings, sang”

- word sense: aspect of meaning of a word (e.g. a word could have many meanings)

Some of the important aspects we need to answer for representing words include:

-

Word Similarity: being able to measure similarity between two words is an important component of tasks like question answering, paraphrasing, and summarization

- e.g. synonyms would have high similarity

- but also words such as “cat/dog” would have high similarity

-

Word Relatedness: the meaning of two words can be related in the sense of association

- e.g. the word coffee and the word cup are very related yet have different meanings

- One common kind of relatedness between words is if they belong to the same semantic field

- For example, words might be related by being in the semantic field of hospitals (surgeon, scalpel, nurse, anesthetic, hospital)

-

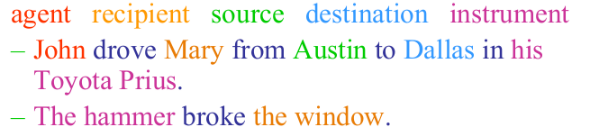

Semantic Frames and Roles: a set of words that denote perspectives or participants in a particular type of event

- e.g. understanding a sentence like Sam bought the book from Ling could be paraphrased as Ling sold the book to Sam, and that Sam has the role of the buyer in the frame and Ling the seller

-

Connotation: aspects of a word’s meaning that are related to a writer or reader’s emotions, sentiment, opinions, or evaluations. Hence very related to sentiment analysis of texts!

Early work on affective meaning (Osgood et al., 1957) found that words varied along three important dimensions of affective meaning:

- valence: the pleasantness of the stimulus

- arousal: the intensity of emotion provoked by the stimulus

- dominance: the degree of control exerted by the stimulus

Then, we can represent a word by a 3D vector, each component a score for each of the above. With this, we introduce the revolutionary idea of word embedding - represent words as vectors!

Vector Semantics

Vectors semantics is the standard way to represent word meaning in NLP, essentially helping us to build models that takes in texts.

In short, we will consider the merging the idea of:

- representing a word as a vector (e.g. in 3D space)

- define the meaning of a word by its distribution in language use, meaning its neighboring words or grammatical environments

Therefore, our task is to represent a word as a point in a multidimensional semantic space that is derived (in ways we’ll see) from the distributions of embeddings word neighbors.

Vectors for representing words are called embeddings (although the term is sometimes more strictly applied only to dense vectors like word2vec (Section 6.8), rather than sparse TF-IDF or PPMI vectors

In this chapter we will mainly discuss two commonly used models

- TF-IDF, which is usually used as the baseline, consider the meaning of a word is defined by a simple function of the counts of nearby word

- hence, resulting in sparse vectors

- Word2Vec, which is technically a family of algorithms that construct short, dense vectors that have useful semantic properties

(We’ll also introduce the cosine, the standard way to use embeddings to compute semantic similarity, between two words, two sentences, or two documents, an important tool in practical applications like question answering, summarization, or automatic essay grading)

Words and Vectors

Vector or distributional models of meaning (mentioned above) are generally based on a co-occurrence matrix, a way of representing how often words co-occur. Two popular matrices related to this is:

- term-document matrix

- term-term matrix

Vectors and Documents

In Term-document matrix, each row represents a word in the vocabulary and each column represents a document from some collection of documents

- e.g. a document could be a book

Then, the value would be number of times a particular word (defined by the row) occurs in a particular document (defined by the column).

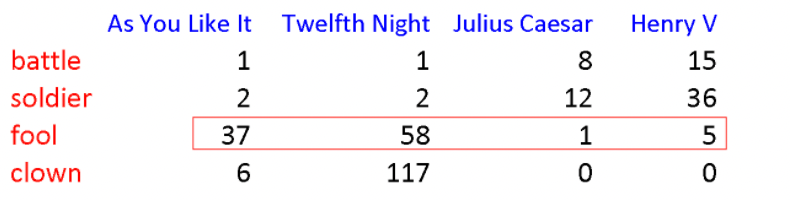

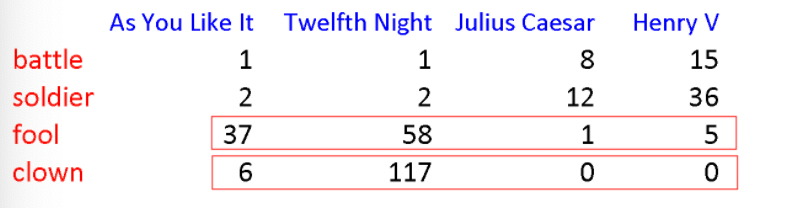

For example, term frequency would look like:

where:

- notice that each row could be treated as a vector for the word!

- similarly (but maybe not that useful for now), a document can then be represented as a column vector

- interestingly, this idea was introduced as as means of finding similar documents for the task of document information retrieval, i.e. having similar column vectors

This can be useful to determine similarity between word if their vector representation is similar

for instance, “fool” and “clown” would be more similar than others.

- a problem with this is that we need lots of documents to represent more accurate information

- vocabulary sizes are generally in the tens of thousands, and the number of documents can be enormous (think about all the pages on the web). Hence the size of the matrix will also be enormous!

Term-Context Matrix

An alternative to using the term-document matrix to represent words as vectors of document counts, is to use the term-term matrix, also called the word-word matrix or term-context matrix

In term-context matrix: columns are labeled by words rather matrix than documents.

- This matrix is thus of dimensionality $\vert V\vert \times \vert V\vert$

- each cell records the number of times the row (target) word and the column (context) word co-occur in some context in some training corpus

- e.g. the context could be the document, in which case the cell represents the number of times the two words appear in the same document.

- It is most common, however, to use smaller contexts, generally a window around the word, for example of 4 words to the left and 4 words to the right

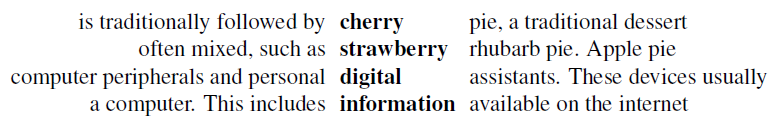

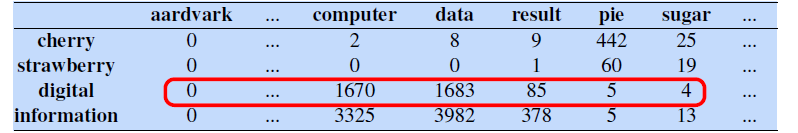

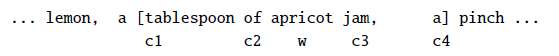

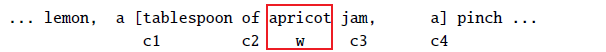

For instance, consider the following training corpus:

we want to compute the term-context matrix for the target word highlighted in bold.

- where we are taking a window size of $\pm 7$ for context word.

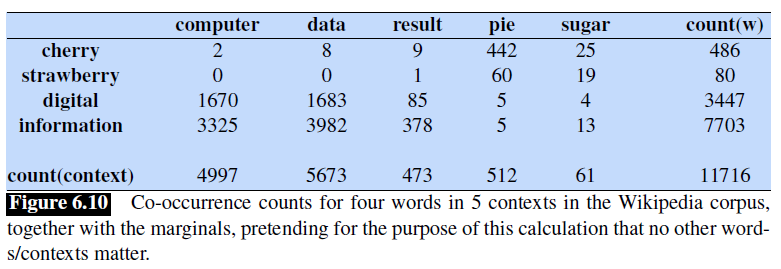

Then, if we take every occurrence of each word (say strawberry) and count the context words around it, we get a word-word co-occurrence matrix:

note that:

- here, the words cherry and strawberry are more similar to each other, and the same goes for digital and information

- therefore, if the vector for a word is close to each other, we would expect the row vector to look similar (the column vector would be a context vector, which we can choose to ignore in this case)

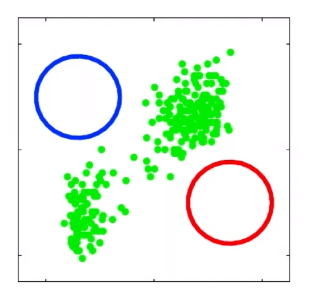

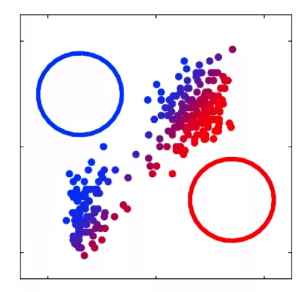

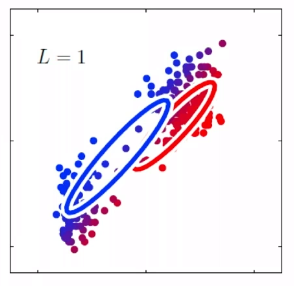

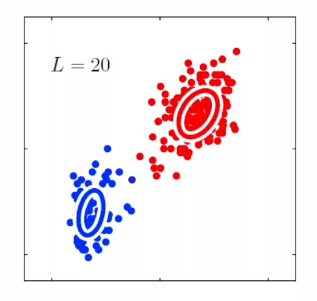

- in general, the shorter the window size captures more syntactic representation, and the longer the window, the more semantic representation (e.g. $L=4\sim 10$)

However, this is problematic in reality because if we have vocabulary size of $50,000$, then this means that the matrix will have dimension $\mathbb{R}^{50,000 \times 50,000}$, and it will be sparse!

TF-IDF

The co-occurrence matrices above represent each cell by frequencies, either of words with documents (Fig. 6.5), or words with other word. But raw frequency does not work directly:

- want to know what kinds of contexts are shared by cherry and strawberry but not by digital and information. But there are words that occur frequently with all sorts of words and aren’t informative about any particular word (e.g

the,good, etc) - essentially, words that occur too frequent are sometimes unimportant, e.g. good

Therefore, the solution is to somehow add weighting (essentially the idea of TF-IDF and PPMI as well)

TF-IDF essentially consist of two components:

- term frequency in log, essentially captures the frequency of words occurring in a document (i.e. the word-document matrix)

- inverse document frequency, essentially giving a higher weight to words that occur only in a few documents (i.e. they would carry important discriminative meanings)

The term frequency in a document $d$ is computed by:

\[\text{tf}(t,d) = \log_{10}(\text{count}(t,d) + 1)\]where:

- we added $1$ so that we won’t do $\log 0$, which is negative infinity

The document frequency of a term $t$ is the number of documents it occurs in.

- note that this is different from collection frequency of a term, which is the total number of times the word appears in any document of the whole collection

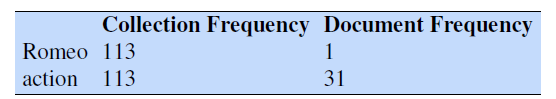

For example: consider in the collection of Shakespeare’s 37 plays the two words Romeo and action

Therefore, we want to emphasize discriminative words like Romeo via the inverse document frequency or IDF term weight:

\[\text{idf}(t) = \log_{10}\left( \frac{N}{\text{df}(t) } \right)\]where:

- apparently $\text{df(t)}$ is the number of documents in which term t occurs and $N$ is the total number of documents in the collection

- so essentially, the fewer documents in which a term occurs, the higher this weight.

-

notice that we don’t need $+1$ here because the minimum document frequency of a word in your corpus would be $1$

- again, because the number could be large, we use a $\log$.

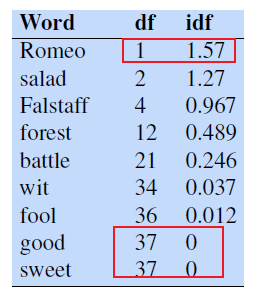

Here are some IDF values for some words in the Shakespeare corpus

so we have:

- extremely informative words which occur in only one play like Romeo

- those that occur in a few like salad or Falstaff

- those which are very common like fool

- so common as to be completely non-discriminative since they occur in all 37 plays like good or sweet

Finally, the TF-IDF basically then does:

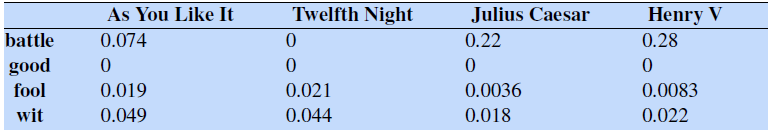

\[\text{TF-IDF}(t,d) = \text{tf}(t,d) \times \text{idf}(t)\]An example would be:

where notice that:

- essentially it is still a term-document matrix, but it is weighted by IDF.

- notice that because $\text{idf(good)}=0$, the row vector for good becomes all zero: this word appears in every document, the tf-idf weighting leads it to be ignored (as it is not very informative anymore)

Note

- this is by far the most common weighting metric used when we are considering relationships of words to documents

- however, not generally used for word-word similarity, which PPMI would be better at

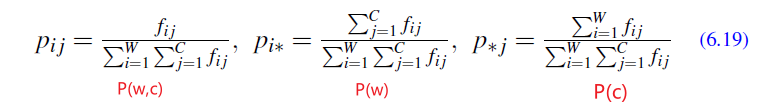

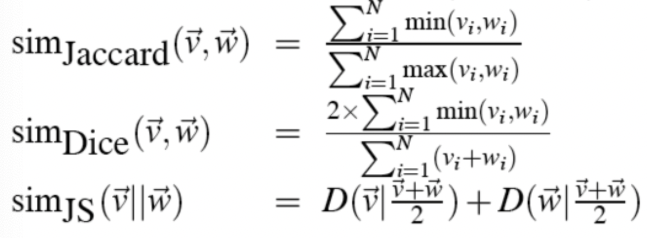

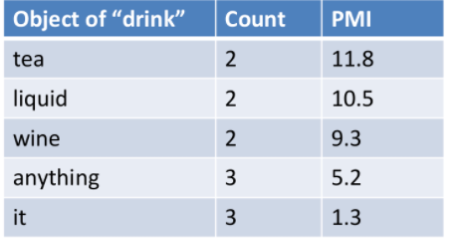

PPMI

An alternative for TF-IDF would be PPMI (positive pointwise mutual information), which is essentially weightings for term-term-matrices.

Intuition:

PPMI considers the best way to weigh the association between two words is to ask how much more often the two words co-occur in our corpus than we would have a priori expected them to appear by chance

- i.e. like a ratio of them appearing by chance v.s. actually appearing together in our corpus

Therefore, this is a measure of how often two events $x$ and $y$ occur, compared with what we would expect if they were independent:

\[I(x,y) = \log_2\left(\frac{P(x,y)}{P(x)P(y)}\right)\]where essentially:

- probability of them occurring jointly together over them occurring independently

Hence, the pointwise mutual information between a target word $w$ and a context word $c$ (Church and Hanks 1989, Church and Hanks 1990) is then defined as:

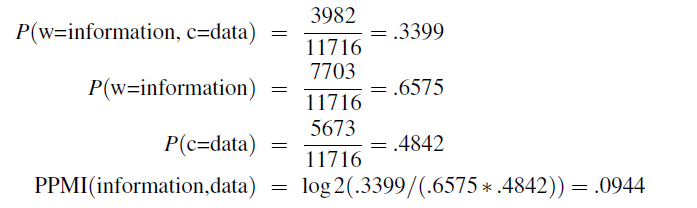

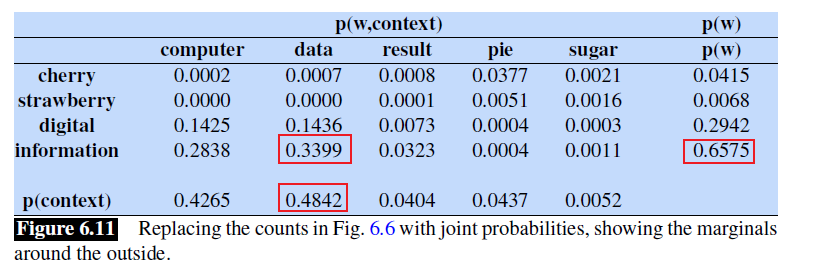

\[\text{PMI}(w,c) = \log_2\left(\frac{P(w,c)}{P(w)P(c)}\right)\]where notice that:

-

since we are back with word-context matrix, we consider target words $w$ and context $c$

- each probability would then be estimated by MLE, which basically are counts

- the denominator computes the probability that the two words to co-occur assuming they each occurred independently, the numerator of will be them actually co-occurring

- therefore, it is a useful tool whenever we need to find words that are strongly associated

- PMI values ranges from negative to positive infinity

Now, in reality, the problem with PMI is that negative PMI values (which imply things are co-occurring less often than we would expect by chance) tend to be unreliable unless our corpora are enormous.

- To distinguish whether two words whose individual probability is each $10^{-6}$ occur together less often than chance, we would need to be certain that the probability of the two occurring together is significantly different than $10^{-12}$, and this kind of granularity would require an enormous corpus.

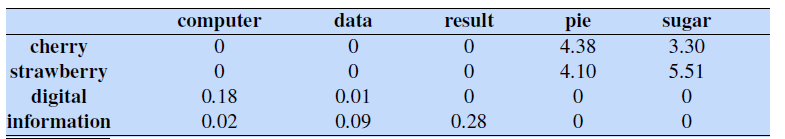

Therefore, we often turn to the Positive PMI (PPMI), which replaces all negative PMI values with zero:

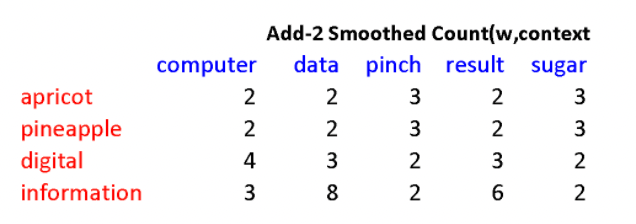

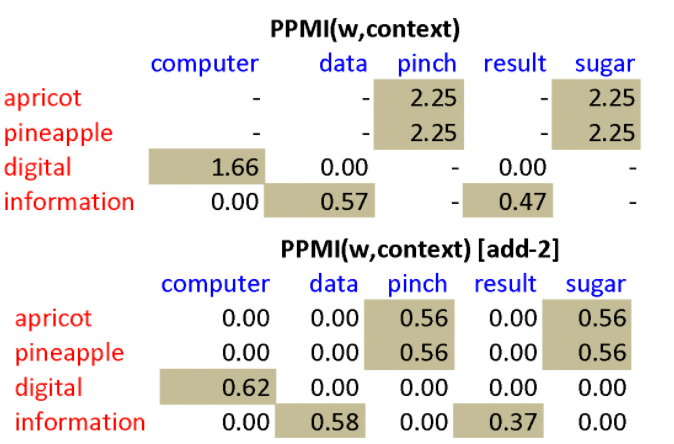

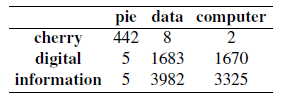

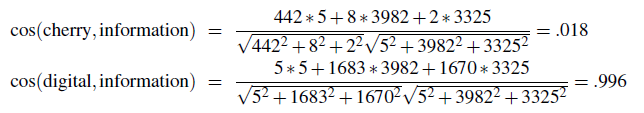

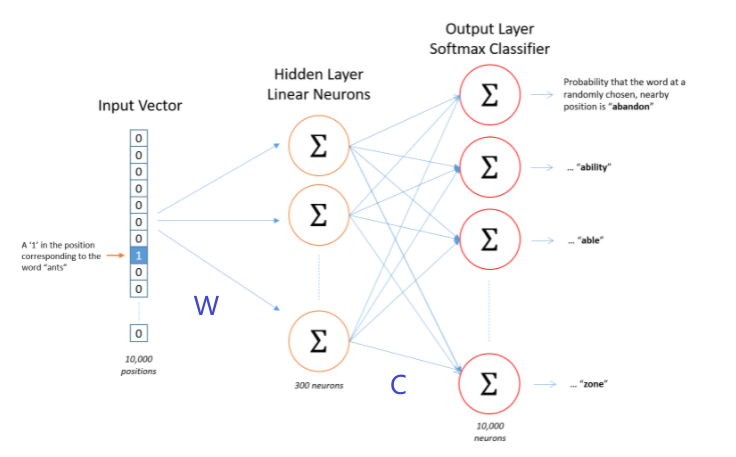

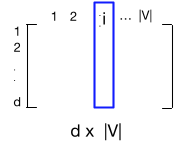

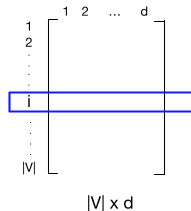

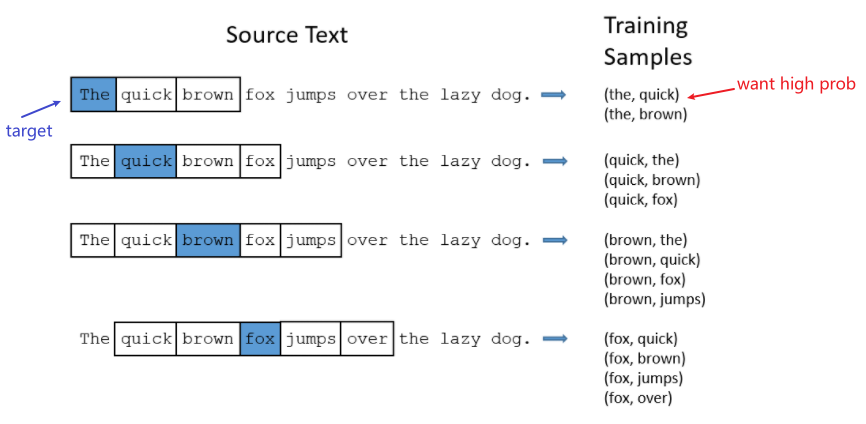

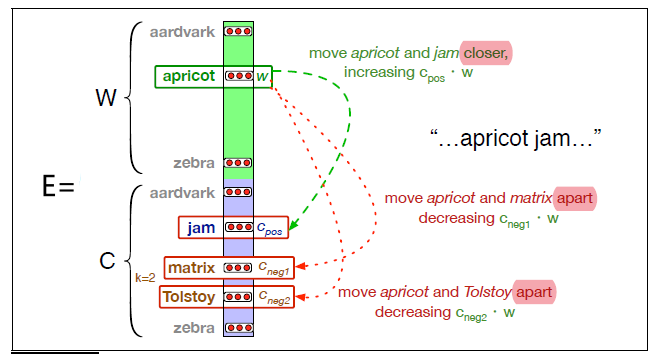

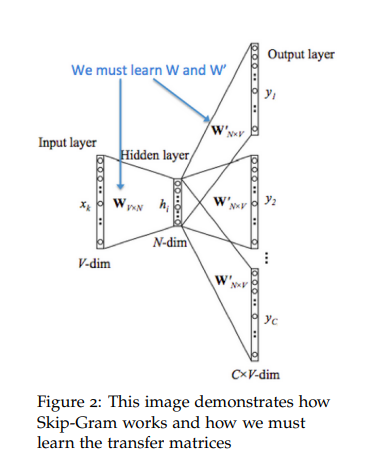

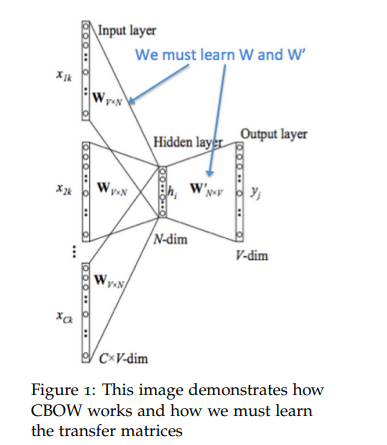

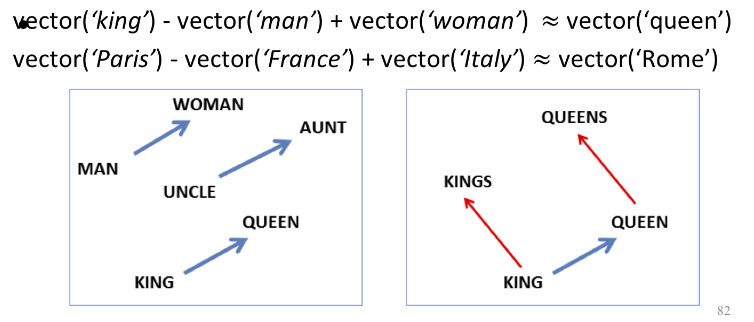

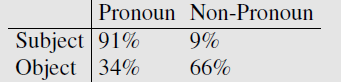

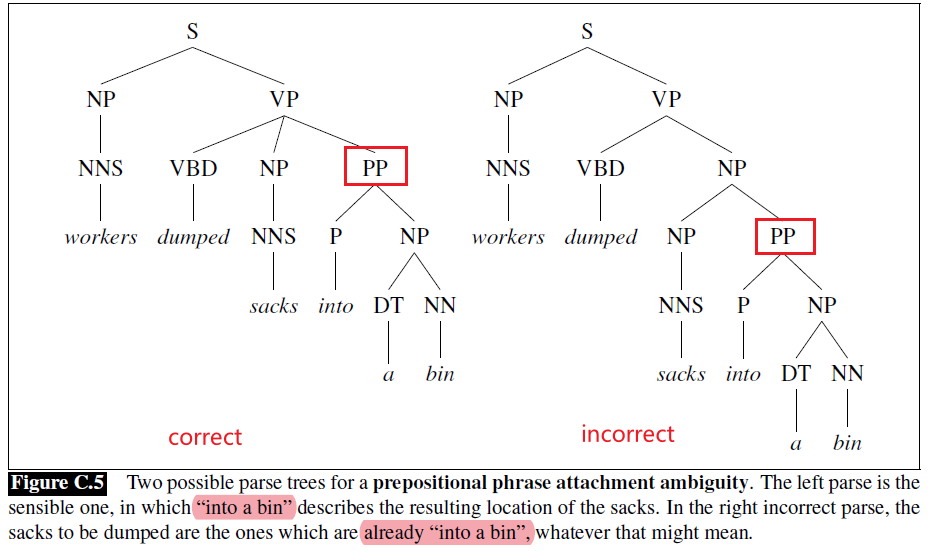

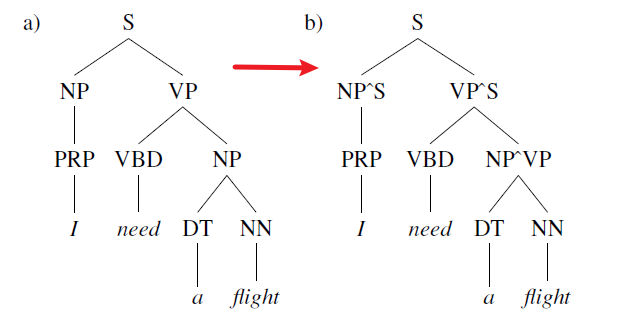

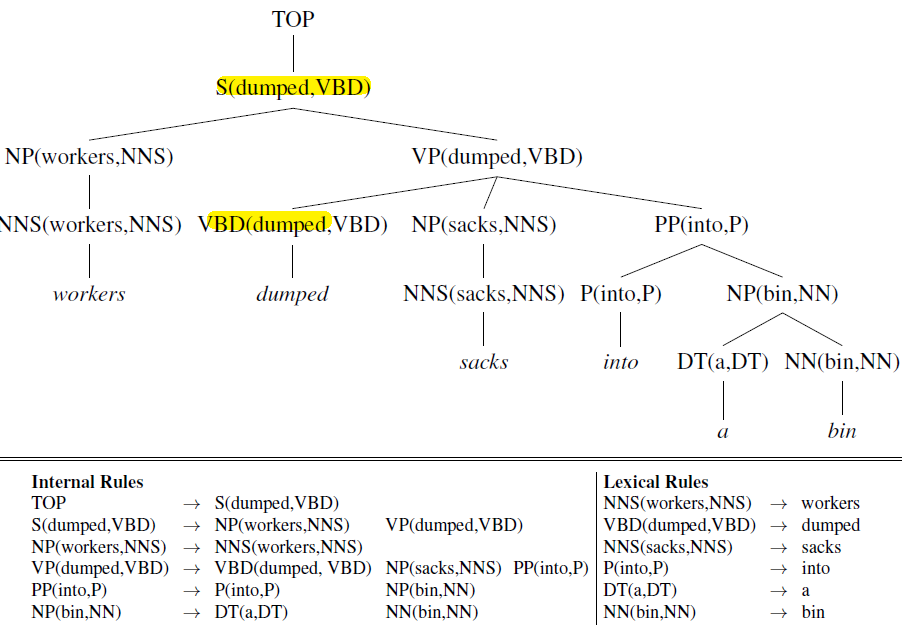

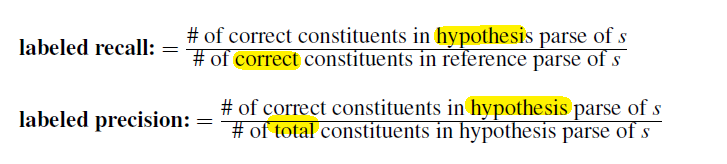

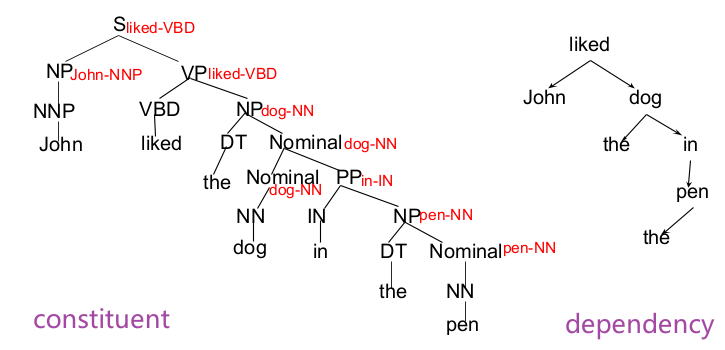

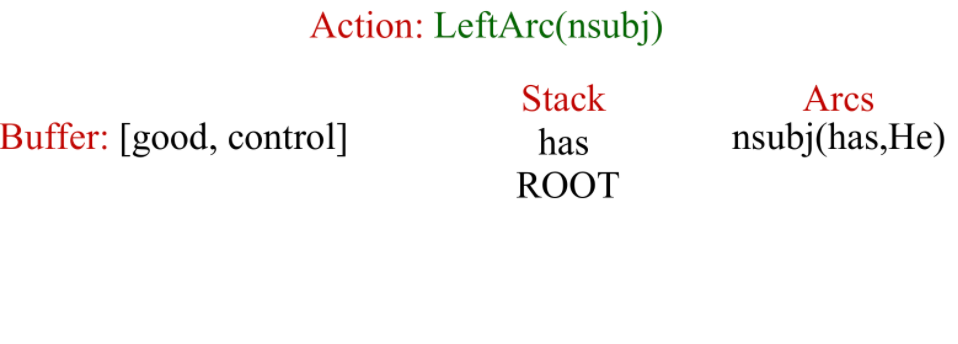

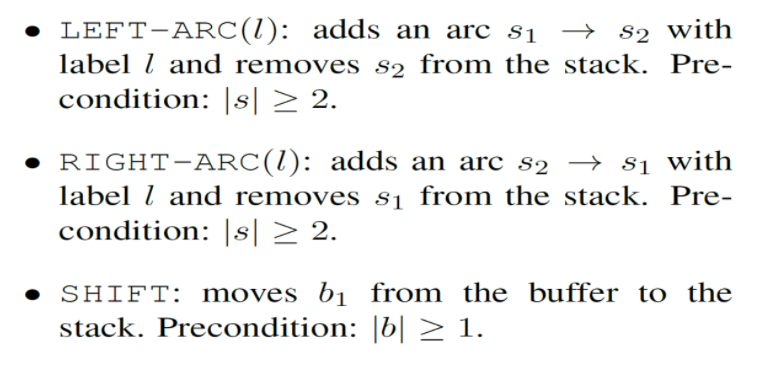

\[\text{PPMI}(w,c) = \max\left( \log_2 \frac{P(w,c)}{P(w)P(c)},0 \right)\]More formally, for our word-context matrix $F$, if we have $W$ rows (words) and $C$ columns (contexts), and each element is represented by $f_{ij}$, we can estimate the probability by: