COMS4705 NLP part2

- Semantic Role Labeling

- Advanced Semantics

- Machine Translation

- Sentiment Analysis and Classification

- Statistical Significance Testing

- Information Extraction and NER

- Question Answering

- Language Generation

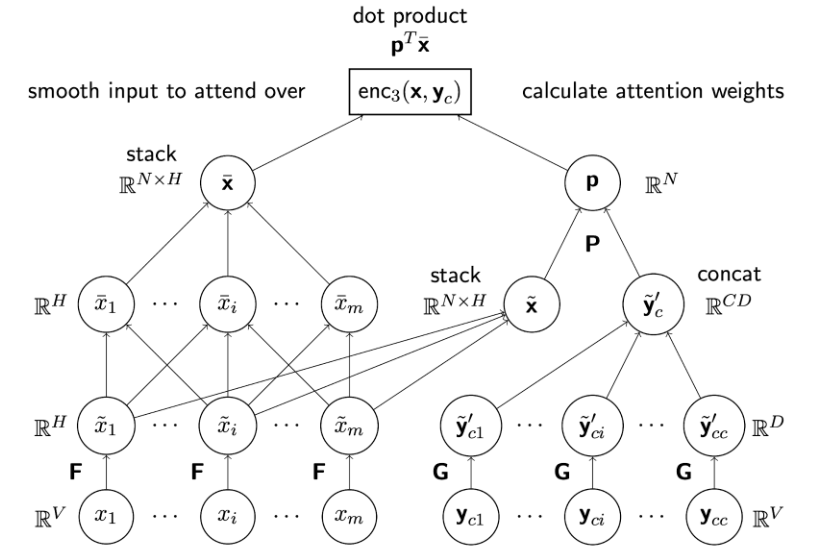

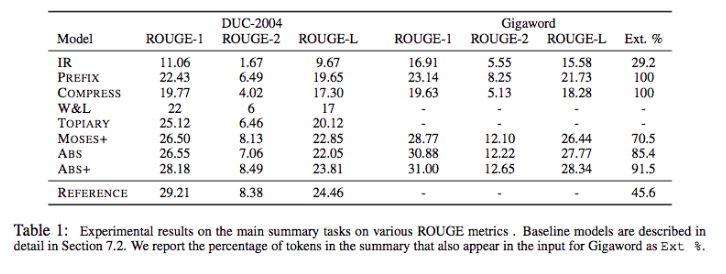

- Text Summarization

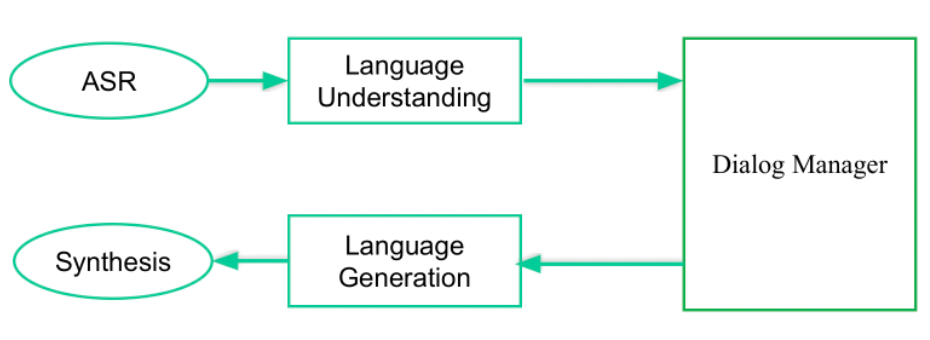

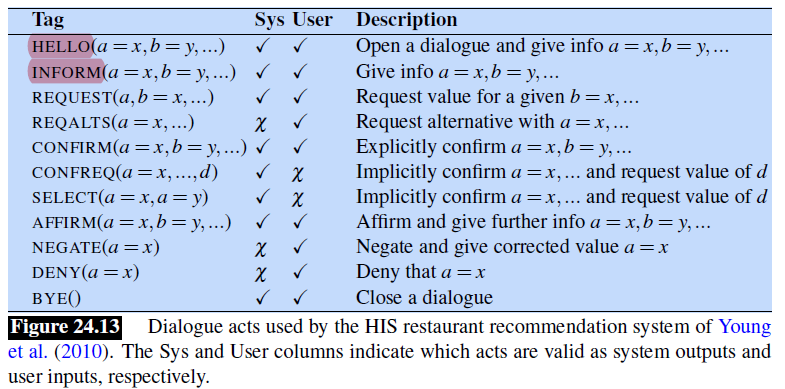

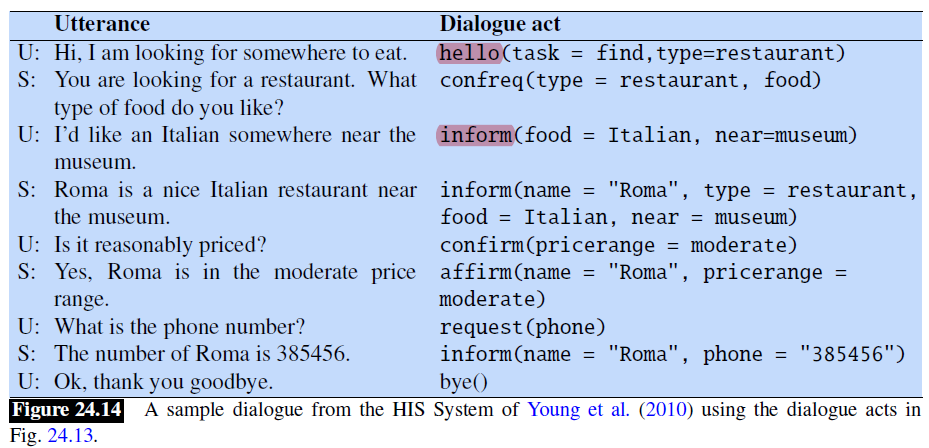

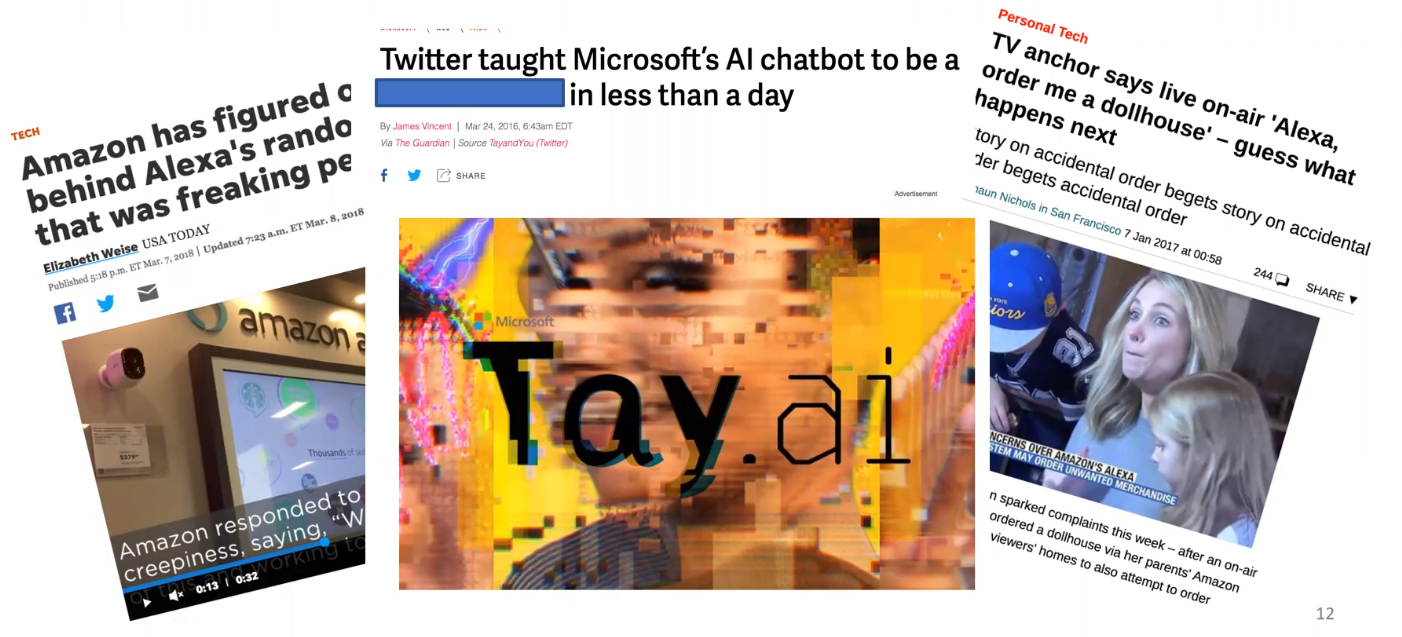

- Chatbots & Dialogue Systems

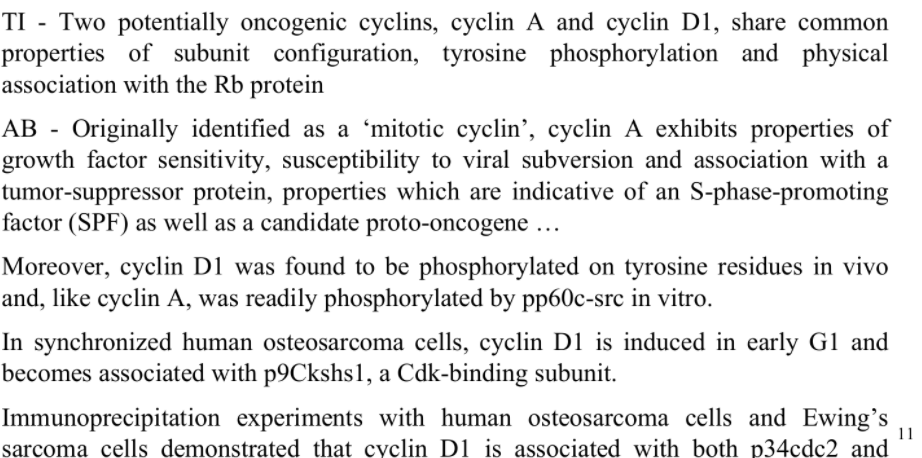

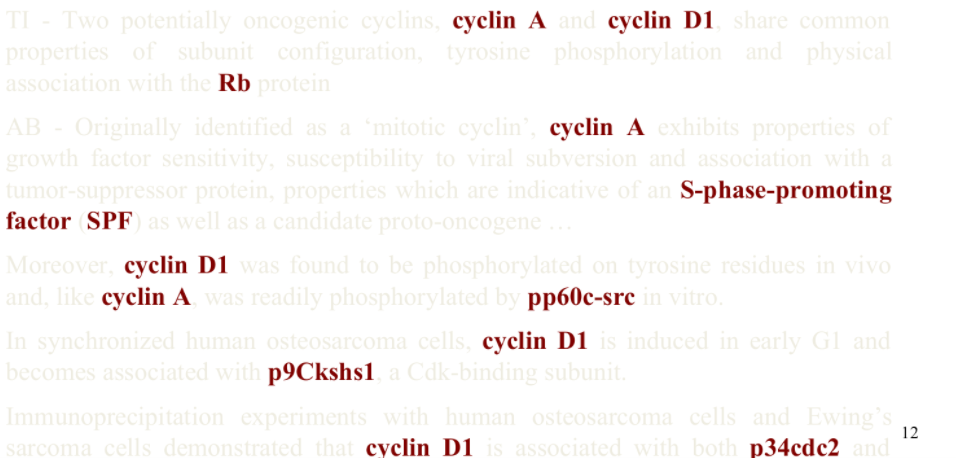

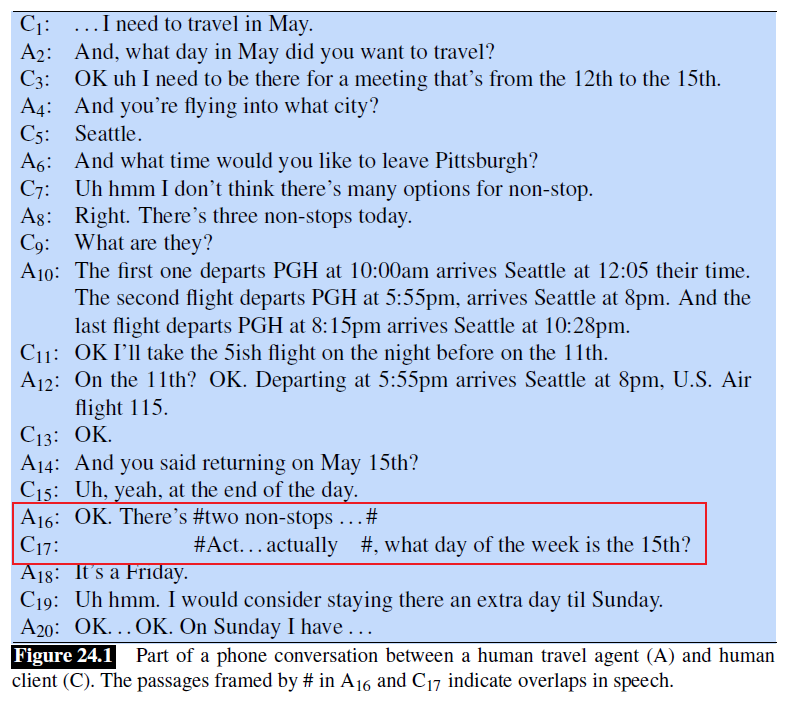

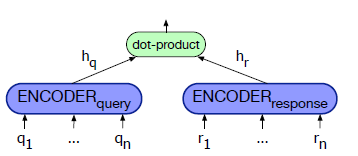

- Properties of Human Conversation

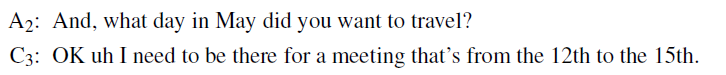

- Chatbots

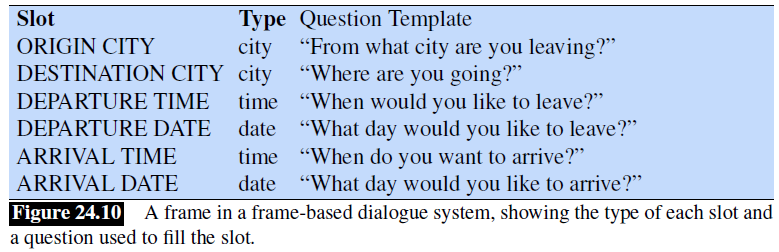

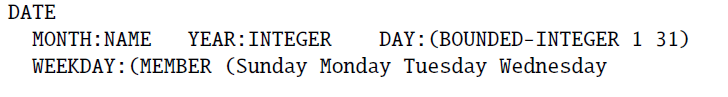

- GUS: Simple Frame-based Dialogue Systems

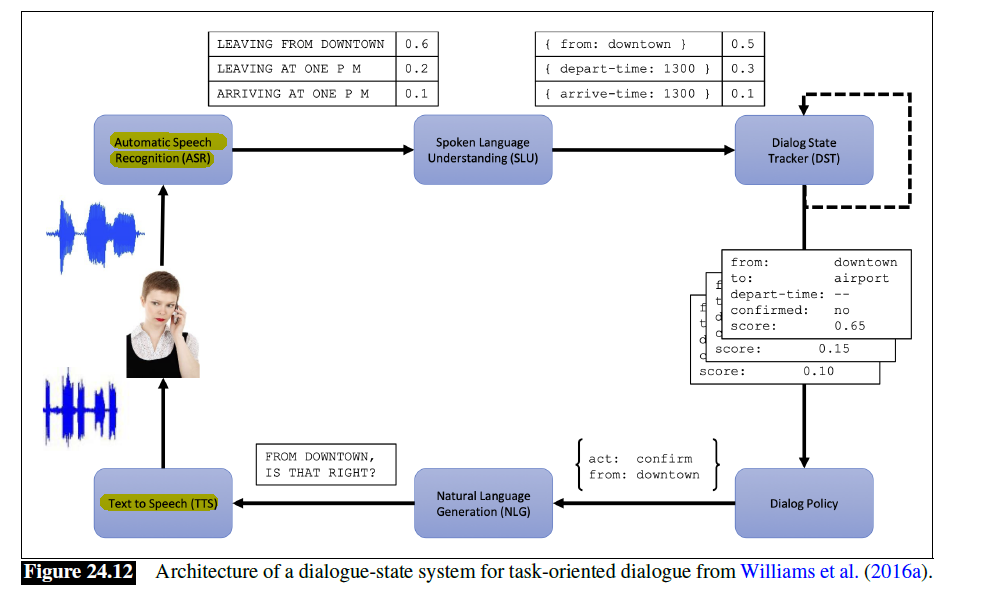

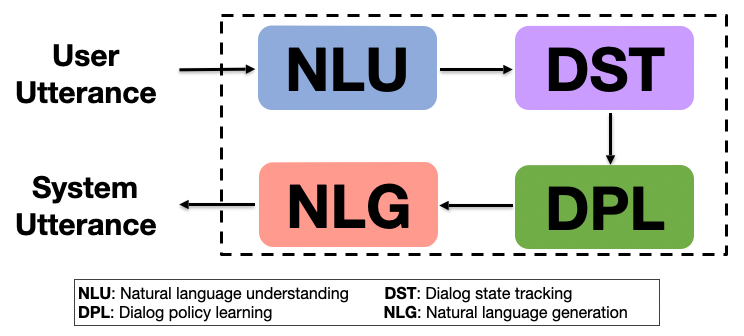

- The Dialogue-State Architecture

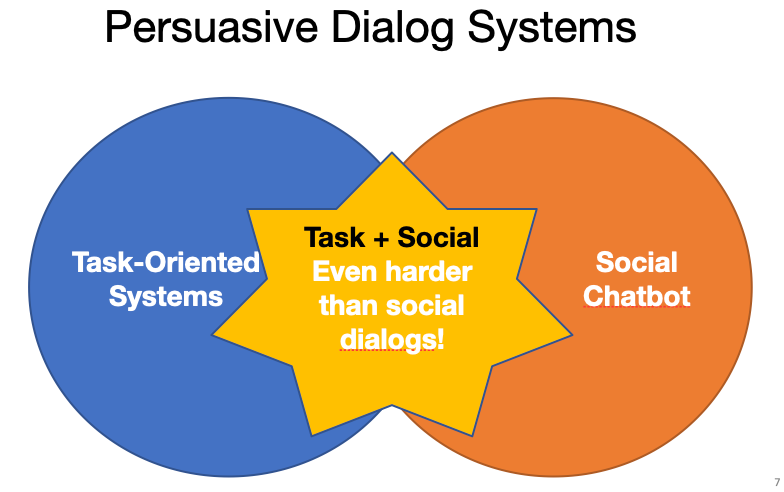

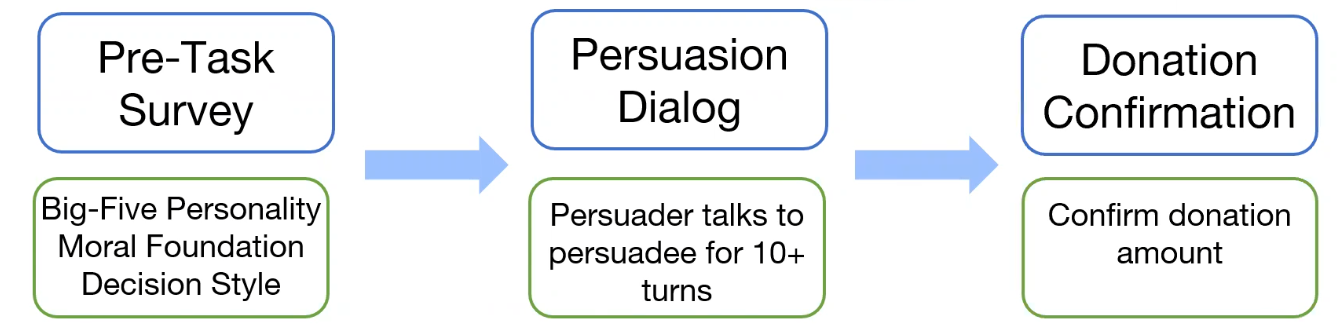

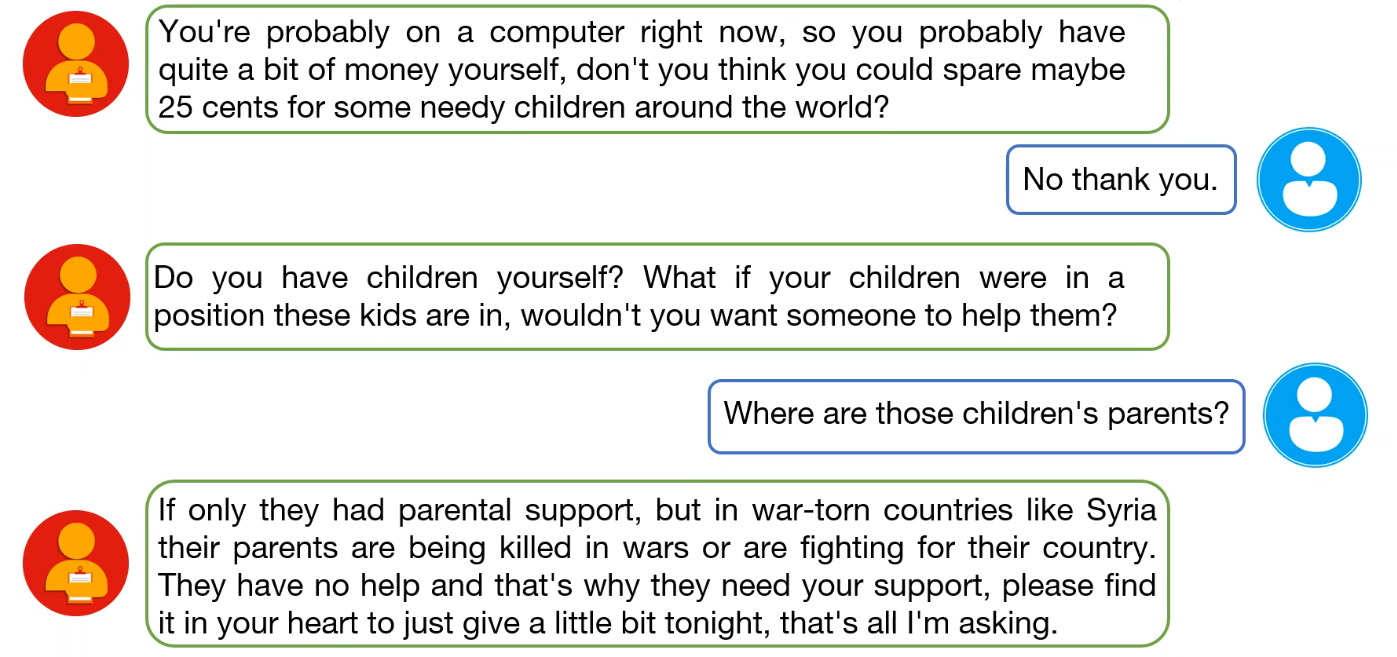

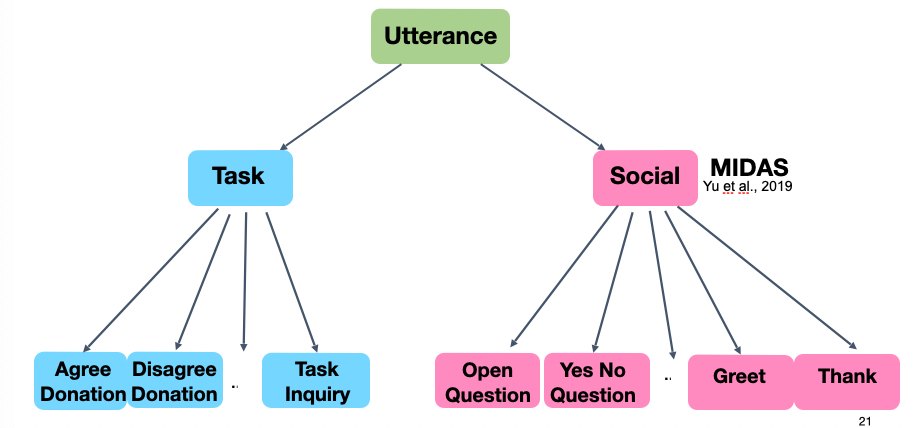

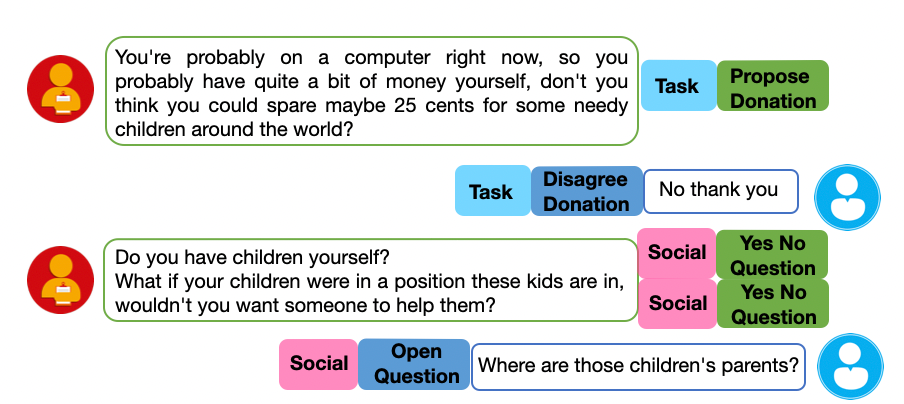

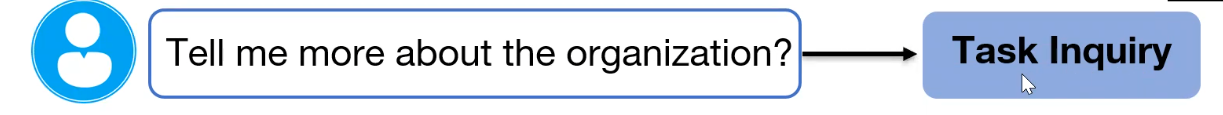

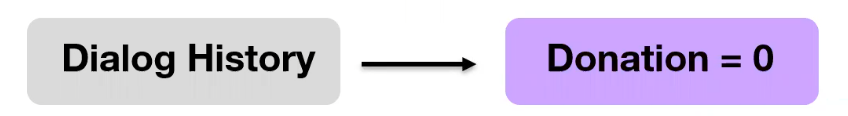

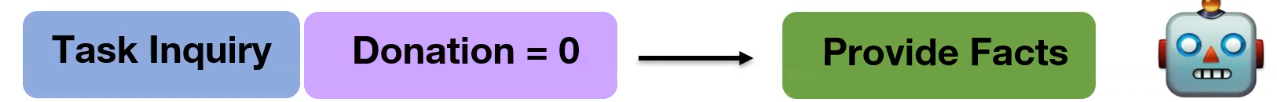

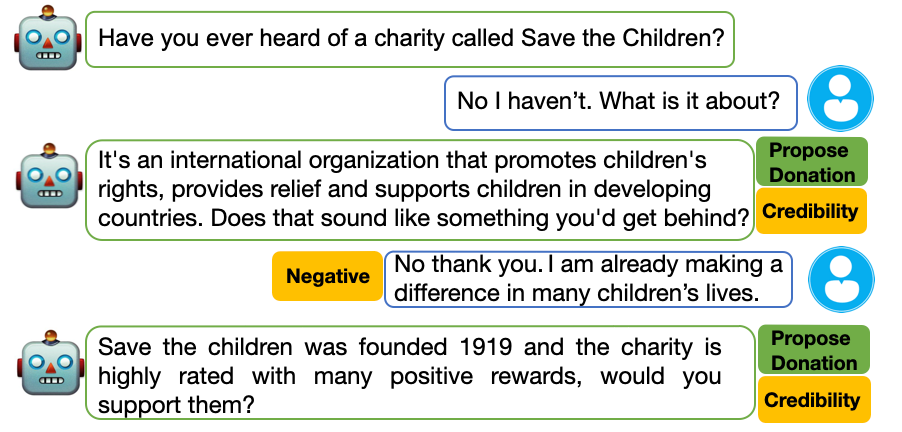

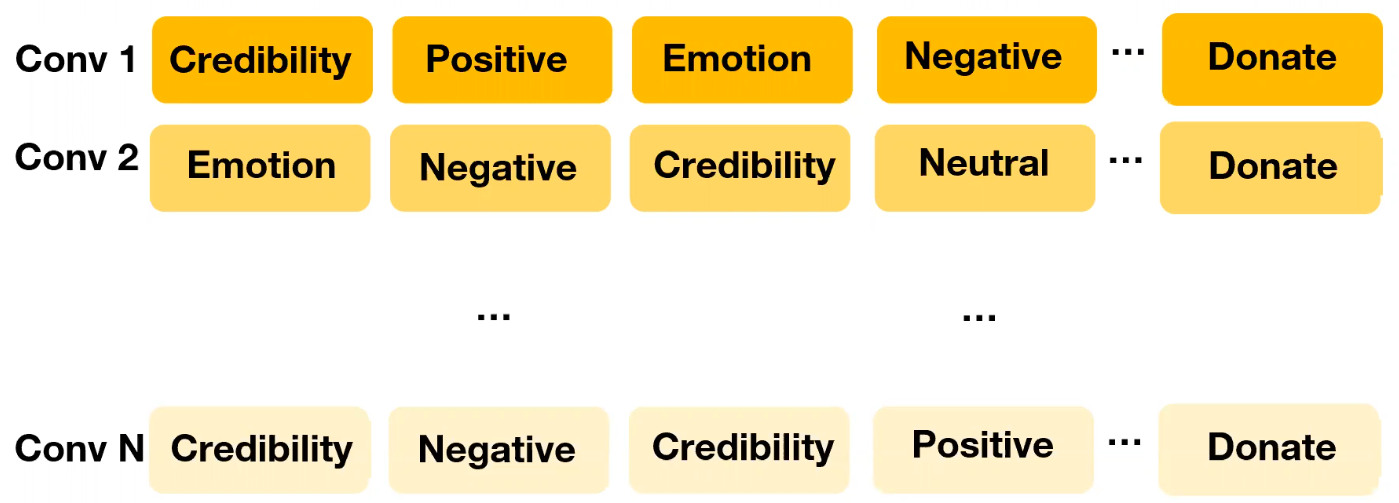

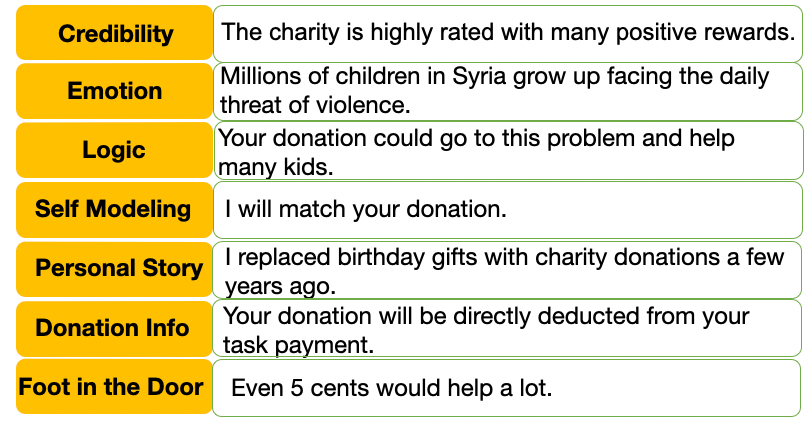

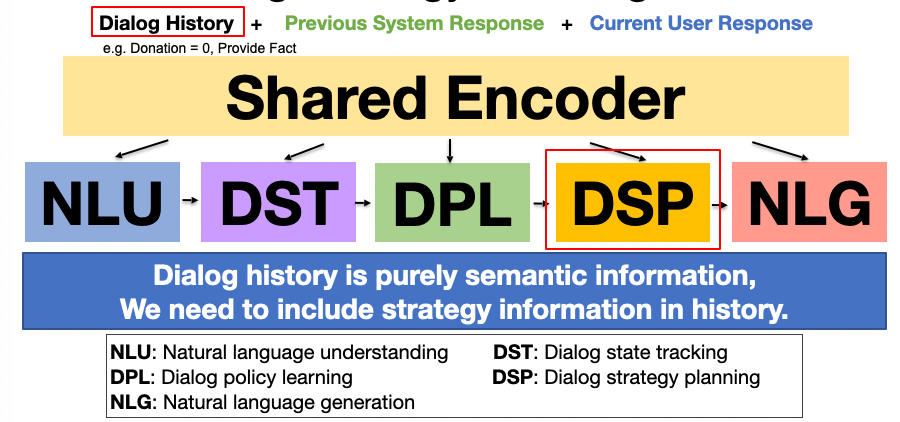

- Persuasive System

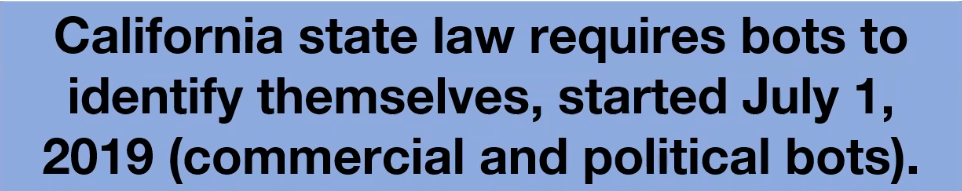

- Ethnical Consideration of Chatbots

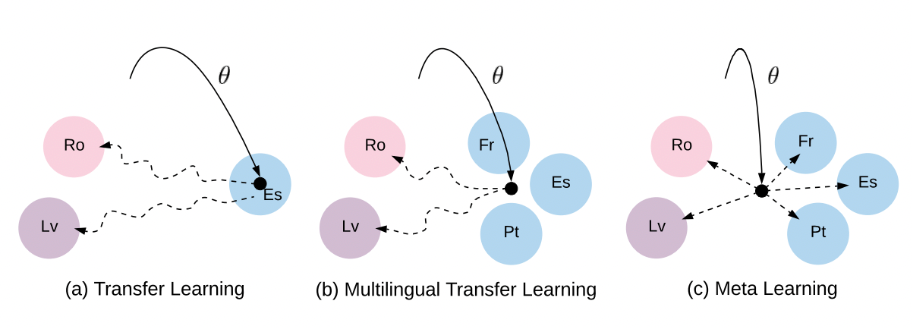

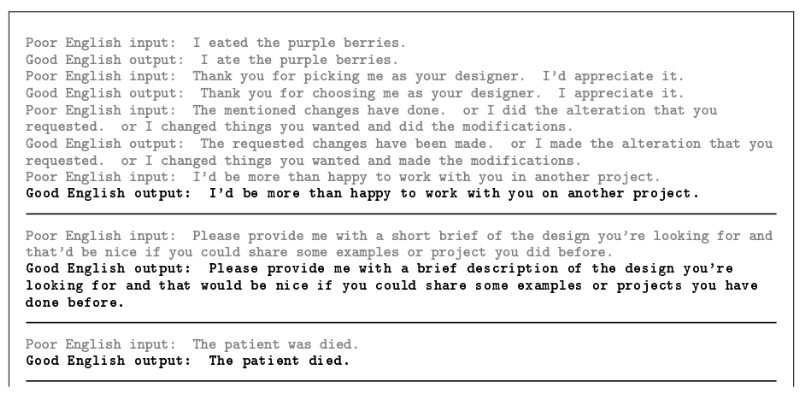

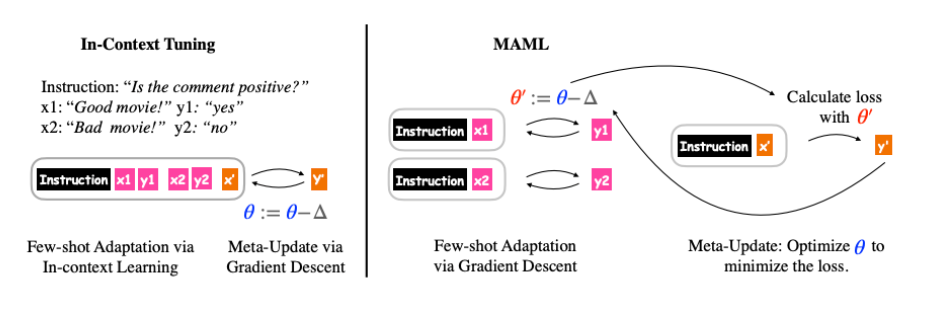

- Meta Learning in NLP

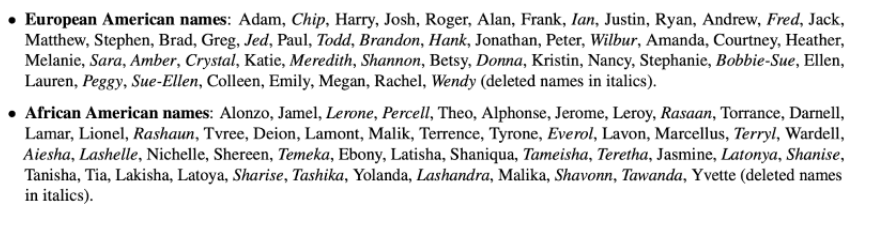

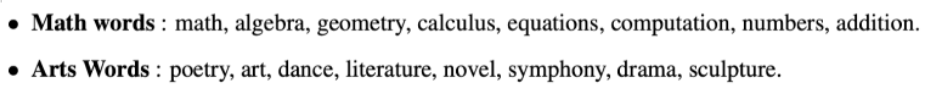

- Bias in NLP

Continuation from: NLP_part_1

Semantic Role Labeling

Here we consider solving the problem of, for each clause, determine the semantic role played by each noun phrase that is an argument to the verb.

We’ll introduce

- semantic role labeling, the task of assigning roles to spans in sentences, and

- selectional restrictions, the preferences that predicates express about their arguments, such as the fact that the theme of eat is generally something edible.

Examples would be:

Then, this would be useful as it can act as a shallow meaning representation that can let us make simple inferences that aren’t possible from the pure surface string of words:

- Question Answering, e.g. “Who” questions usually use Agents

- Machine Translation Generation

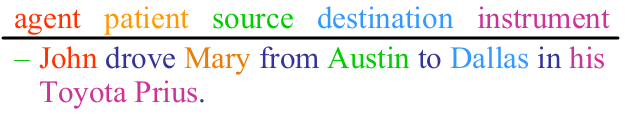

Semantic Roles

A variety of semantic role labels have been proposed, common ones are:

- Agent: Actor of an action

- Patient: Entity affected by the action

- Instrument: Tool used in performing action.

- Beneficiary: Entity for whom action is performed

- Source: Origin of the affected entity

- Destination: Destination of the affected entity

Although there is no universally agreed-upon set of roles, the above list some thematic roles that have been used in various computational papers

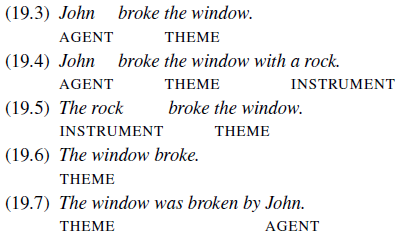

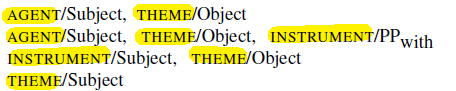

However, there are many problems with using those as-as. For instance, consider these possible realizations of the thematic arguments of the verb break:

These examples suggest that break has (at least) the possible arguments AGENT, THEME, and INSTRUMENT.

The set of thematic role arguments taken by a verb is often called the thematic grid, q-grid, or case frame

An example would be for break:

Additionally, researchers attempting to define role sets often find they need to fragment a role like AGENT or THEME into many specific roles. And it has proved difficult to formally define the thematic roles. This essentially leads to two class of models for solution

-

define generalized semantic roles that abstract over the specific thematic roles.

For example,

PROTO-AGENTandPROTO-PATIENTare generalized roles that express roughly agent-like and roughly patient-like meanings, and those roles are not defined by some “necessary tabular conditions”, but rather by a set of heuristic features that accompany more agent-like or more patient-like meanings.Then the more an argument displays agent-like properties (e.g. being volitionally involved in the event), the more likely it is to be classified as

PROTO-AGENT. -

define semantic roles that are specific to a particular verb or a particular group of semantically related verbs or nouns

The first of them leads to the PropBank dataset, which uses both proto-roles and verb-specific semantic roles.

The second leads to FrameNet dataset, which uses semantic roles that are specific to a general semantic idea called a frame.

PropBank is a verb-oriented resource, while FrameNet is centered on the more abstract notion of frames, which generalizes descriptions across similar verbs.

Of course, both of which have sentences annotated with semantic roles.

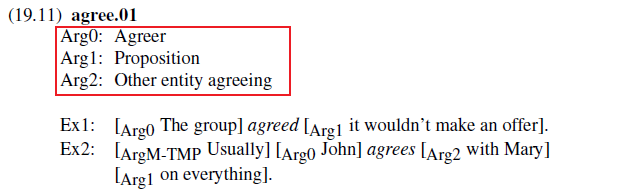

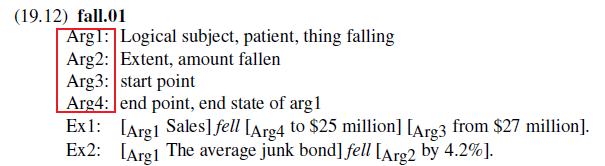

The Proposition Bank

Because of the difficulty of defining a universal set of thematic roles, the semantic roles in PropBank are defined with respect to an individual verb sense. Basically we have verb sense-specific labels (i.e. depending on the verb-sense, we will have different labels for arguments.)

In general, we will use labels names: Arg0, Arg1, Arg2, and so on:

Arg0represents thePROTO-AGENTArg1, thePROTO-PATIENTArg2is often the benefactive, instrument, attribute, or end stateArg3the start point, benefactive, instrument, or attribute,Arg4the end point

Examples include:

|  |

|  |

| ———————————————————– | ———————————— |

|

| ———————————————————– | ———————————— |

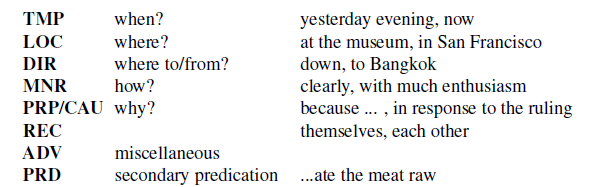

Additionally, PropBank also has a number of non-numbered arguments called ArgMs, (ArgMTMP, ArgM-LOC, etc.) which represent modification or adjunct meanings. As those are pretty much the same across verb senses, they are not listed with each frame file. However, they could be useful for training systems. Some examples of ArgM’s include:

FrameNet

Whereas roles in the PropBank project are specific to an individual verb, roles in the FrameNet project are specific to a frame.

A frame is basically the holistic background knowledge that unites these words, such as:

\[\text{reservation, flight, travel, buy, price, cost, fare}\]all defined with respect to a coherent chunk of common-sense background information concerning air travel.

Therefore, FrameNet defines a set of frame-specific semantic roles, called frame elements, and includes a set of predicates that use these roles. (i.e. those roles of a word will be different across different frames)

- additionally, FrameNet also codes relationships between frames, allowing frames to inherit from each other, or representing relations between frames like causation

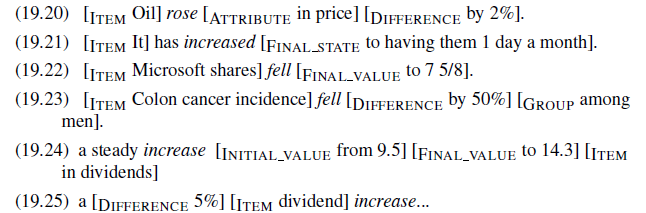

For example, the change_position_on_a_scale frame is defined as follows:

- This frame consists of words that indicate the change of an Item’s position on a scale (the

Attribute) from a starting point (Initial value) to an end point (Final value).

And example sentences with their labels include:

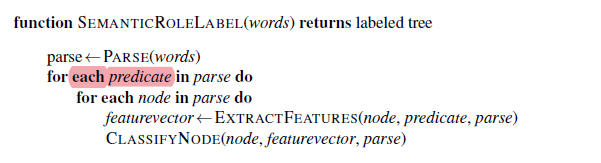

so if a word/verb (predicate) is in this frame, the above would be the labels. Verbs/predicates used in this frame looks like:

Semantic Role Labeling Models

Now we get to the task of Semantic role labeling (sometimes shortened as SRL): automatically finding the semantic roles of each argument of each predicate in a sentence. This is often done by:

- supervised machine learning

- using FrameNet and PropBank to specify predicates, define roles, and provide training and test sets.

Feature-based Algorithm for SRL

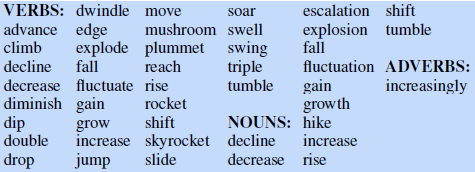

A simplified feature-based semantic role labeling algorithm basically do:

- assign a parse (e.g. constituency parsing) of an input string

- traverse the tree to find all predicates

- for each node that is a predicate:

- do a classification on that node, any standard classification algorithms can be used.

- this can be done either by finding some feature representation of it, or using idea such as GNN

This results in a 1-of-$N$ classifier, i.e. where basically choosing one out of $N$ potential semantic roles (plus 1 for an extra None role for non-role constituents). The algorithm hence looks like:

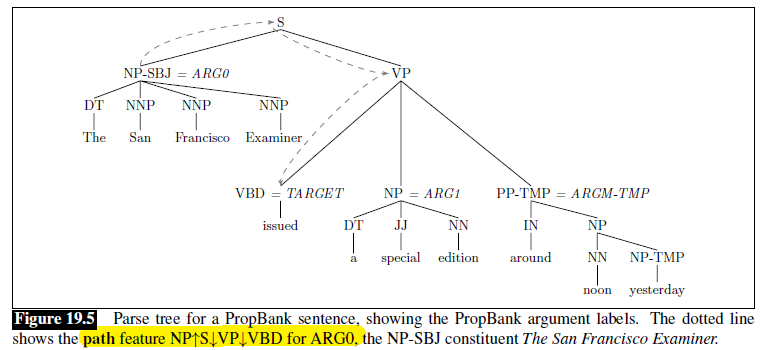

And the parse we did in step one could look like:

The general idea of those algorithms can be summarized into the following steps:

- Pruning: Since only a small number of the constituents in a sentence are arguments of any given predicate, many systems use simple heuristics to prune unlikely constituents.

- Identification: a binary classification of each node as an argument to be labeled or a

NONE. - Classification: a 1-of-N classification of all the constituents that were labeled as arguments by the previous stage

However, since this labels each argument of a predicate independently, it is ingoing interactions between arguments, as we know the semantic roles are not independent.

Therefore, thus often add a fourth step to deal with global consistency across the labels in a sentence. This can be done by:

- local classifiers can return a list of possible labels associated with probabilities for each constituent,

- a second-pass Viterbi decoding or re-ranking approach can be used to choose the best consensus label.

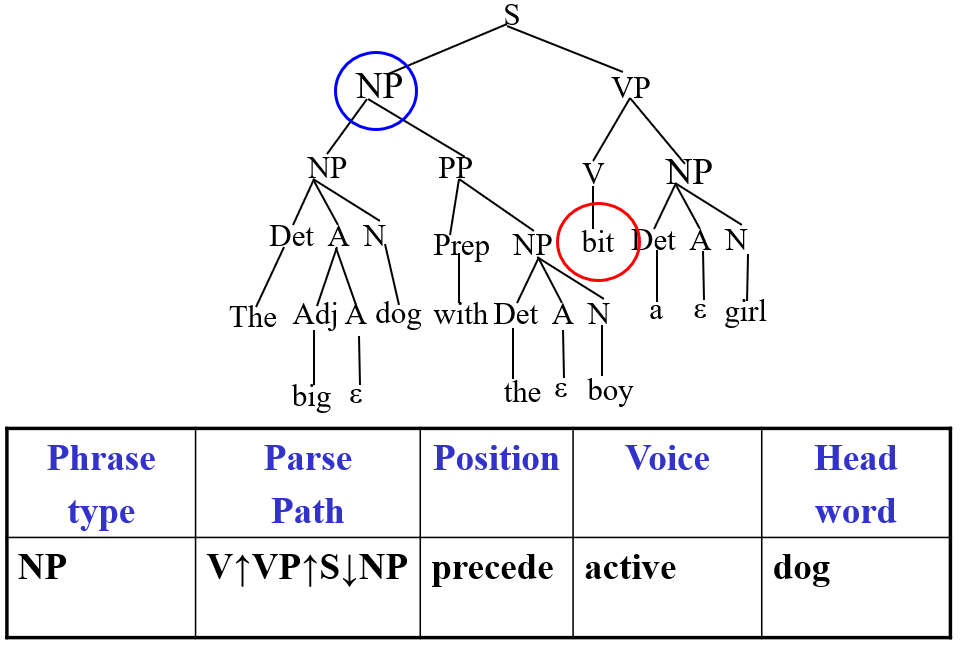

Features for Semantic Labelling Nodes

Common basic features templates mentioned above include

- Phrase type: The syntactic label of the candidate role, the filler (e.g.

NP). - Parse tree path: The path in the parse tree between the predicate and the candidate role filler.

- Position: Does candidate role filler precede or follow the predicate in the sentence?

- Voice: Is the predicate an active or passive verb?

- Head Word: What is the head word of the candidate role filler?

An example would be for the predicate bit:

Problems that this method will suffer:

- Due to errors in syntactic parsing, the parse tree is likely to be incorrect.

- Can have many other useful features.

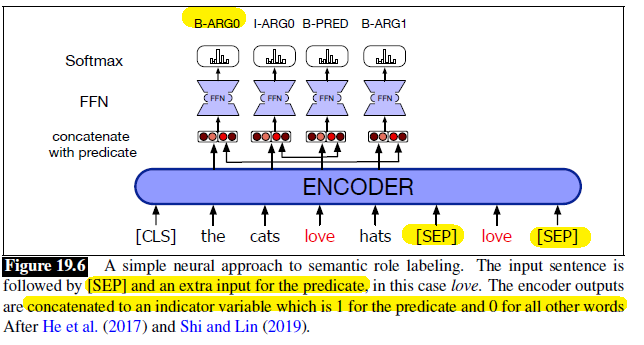

Neural Algorithm for SRL

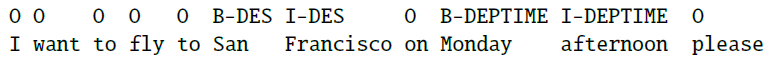

A simple neural approach to SRL is to treat it as a sequence labeling task like named-entity recognition, using the BIO approach.

Assume that we are given the predicate and the task is just detecting and labeling spans. This means that we will have:

- Input: sentence + the predicate, which will be separated by the

SEPtag as shown in the example below - Output: semantic labels + BIO tags, as each label is a constituent (can span over more than one word)

- recall that BIO tags are: beginning, inside, outside.

An example architecture would be feeding it into a transformer:

where of course those input word is mapped to pretrained embeddings.

Some problems that this method would encounter would be:

- Results may violate constraints like “an action has at most one agent”?

Evaluation Metric for SRL

Since essentially we have Identification (should label or not) and Classification (which label), their performance can be evaluated separately:

- each argument label must be assigned to the exact same parse constituent as in the ground truth

- then, accuracy and recall can be used, for combined to look at F-score.

Selectional Restrictions

We turn in this section to another way to represent facts about the relationship between predicates and arguments.

Frequently semantic role is indicated by a particular syntactic position . Examples include:

- Agent: subject

- Patient: direct object

- Instrument: object of “with”

PP- Beneficiary: object of “for”

PP- Source: object of “from”

PP- Destination: object of “to”

PP

However, obviously this is not always correct.

\[\text{The hammer hit the window.}\]then by the above logic hammer would be the AGENT, but it is actually an INSTRUMENT since it is not active.

Therefore, this means we also need to add Selectional Restrictions.

A selectional restriction is a semantic type constraint that a verb imposes on the kind of concepts that are allowed to fill its argument roles.

For instance, consider the following two sentences:

\[\text{I want to eat someplace nearby.}\\ \text{I want to eat Malaysian food.}\]where both involve the predicate eat:

- in the first case, we would want someplace nearby is an adjunct that gives the location of the eating event, instead of direct object

- in the second case, we would want Malaysian food to be direct object, instead of adjunct

Therefore, we see that:

- selectional restrictions are associated with senses, not entire lexemes

- yet another way is to instead specify preference, i.e. which one is preferred instead of which one is deferred, which we will see soon.

This can be very useful in that it can rule out many ambiguities include:

- Syntactic Ambiguity: “John ate the spaghetti with chopsticks.” would be wrong as

PATIENTSof eat must be edible - Word Sense Disambiguation: “John fired the secretary.” vs “John fired the rifle.”

But of course, it is difficult to acquire all of the selectional restrictions and taxonomic knowledge and applying them is also a problem.

In reality, taxanomic abstraction hierarchies or ontologies (e.g. hypernym links in WordNet) can be used to determine if such constraints are met.

- e.g. “John” is a “Human” which is a “Mammal” which is a “Vertebrate” which is an “Animate”

Selectional Preferences

Early word sense disambiguation systems used this idea to rule out senses that violated the selectional restrictions of their governing predicates. However, soon it became clear that these selectional restrictions were better represented as preferences rather than strict constraints.

Basically there will be measurements being a probabilistic measure of the strength of association between a predicate and a class dominating the argument to the predicate. More details see the book.

Advanced Semantics

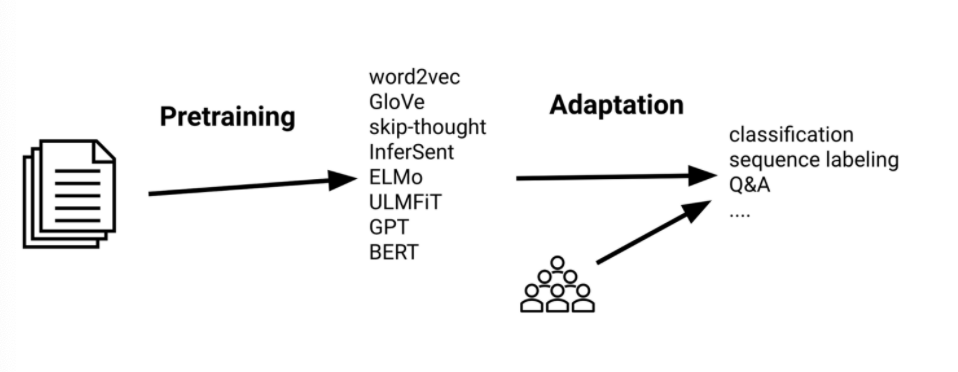

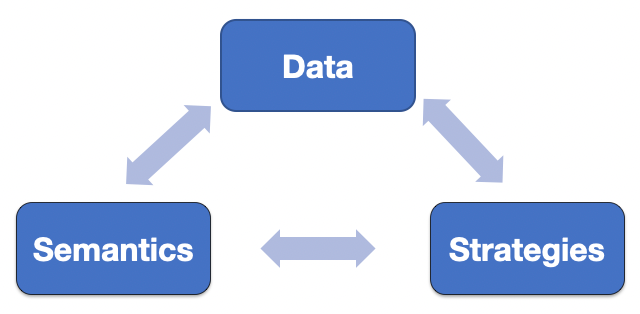

This section aims to give a quick recap of why we are using Pre-trained Language Models today for so many tasks.

A quick recap. We used pre-trained Embeddings to do:

- e.g. skipgram word embeddings, compact dense representation for words

- captures static semantic information of a word

Yet in reality, tasks we need to consider usually includes doing things after you got some embeddings. This means having NN such as RNN/transformer for downstream tasks such as sentiment analysis. However, there we also face problems if we want to train a model from scratch:

- need a collection of data + manual labeling

- often end up doing transfer learning: the training dataset is for move reviews, but for test we are working on Amazon product review

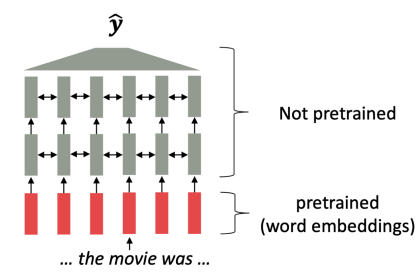

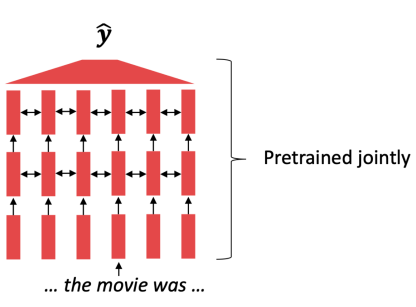

Simple Transfer Learning

The idea with word embedding to have a model learning some task agnostic data, and then fine tune it to task specific data.

This has been commonly used for fine-tuning word embeddings, but you will see that we can also have a model learn task agonistic objective (e.g. a language model), and then fine tune it for downstream task:

Then the advantage of pre-trained model in this setting would be:

-

no need of large corpus, only a few for fine-tuning

-

Can plug learned embeddings into the first layer

Additionally, for learning the task agnostic model, often:

- No labelled data required – just a large text corpus and do self-learning such as masked word prediction or next word prediction

Problems with Pre-trained Word Embeddings

Why can we not just use the pretrained embeddings? (but use pretrained language models)

One assumption/property we had so far for a word embedding include:

- they are static, hence does not care about the context, meaning the following would have the same

- “She broke the windshield with a bat.”

- “He was driving like a bat out of hell.”

- word embedding before learnt from co-occurrence, which does not care about order!

However, for an entire network trained only tasks such as language model related ones:

| Pretrained Word Embeddings | Pretrained Language Model |

|---|---|

|

|

then you basically remove the $\hat{y}$ layer and add a linear layer at the end for transfer learning.

- e.g. include recurrent or Transformer layers to incorporate context

- Better parameter initializations, for make it fine-tunable

Now, we discuss language model as a effective pretrain task (e.g. next word prediction, masked word prediction, etc)

- It’s self-supervised, so there’s no need for labelled data. e.g. Large text collections are readily available on the web

- it’s actually a difficult task, even for humans

- A model would need to learn about syntax, semantics, and even some world knowledge in order to do well at this task

- Luckily with a large number of parameters, large corpora, and lots of GPUs (or TPUs), we can do a pretty reasonable job

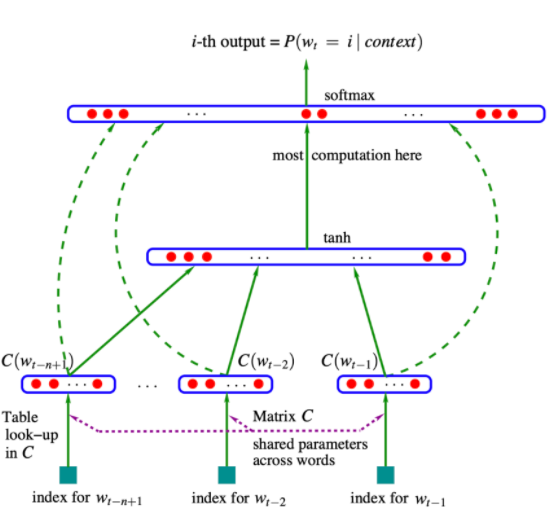

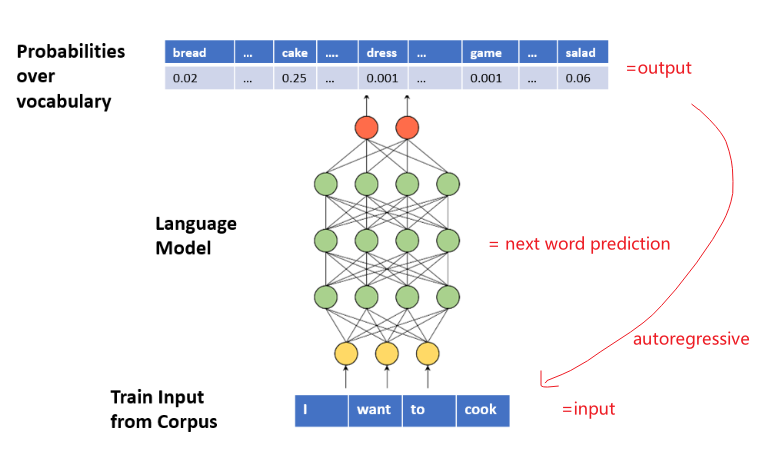

Neural Language Model

Recall: N-gram Language Model

The N-gram language model (e.g. for next word prediction) has limitations:

- Sparsity (e.g. bigram matrix)

- Need to store ngrams (and counts)

- Increases with n and corpus size

- Don’t take into account word similarity

The solution to use Neural Language Model: predict the next word given the current context window

where the architecture is simply:

- input -> embedding layer to start with

- one or more intermediate layers, e.g.

Linear,RNN, etc - output $\vec{h}$ and attach a SoftMax layer

- for fine-tuning the entire model, consider removing the softmax and attach another linear layer on top of $\vec{h}$ for your downstream task

This model solved several issues from N-gram models such as:

- we have no sparsity problem

- no need to store n-grams

- can capture word-similarity via embeddings

However, several unsolved problems include:

- Context window is limited (cannot just put in the entire document)

- Still can’t capture long range dependencies

- Increasing context window == increasing parameters

LMs and Transfer Learning

Then the idea is that the LM task could be useful for any downstream task.

- intuition: predict the next word positive fromthe sentence: the movie is great; positive”. Then we can use it as sentiment analysis

Therefore, you basically take the entire pre-trained model before the softmax layer as initialization, or as fine-tuning, or your downstream task. For instance:

- use an pretrained LSTM LM on a large corpus

- Use weights of embeddings and LSTM layers as initialization for the target task

Nowadays, besides LSTM LM, we have many pretrained architectures to choose from.

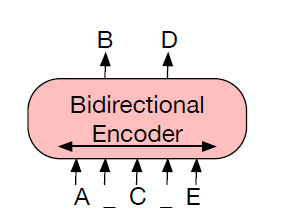

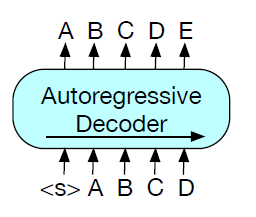

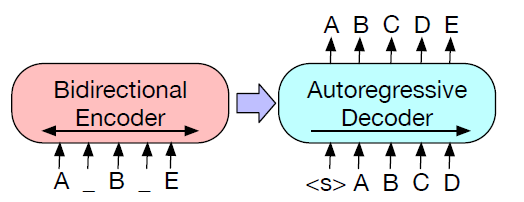

Architecture Choices

In NLP we have a lot of pretained language models. For each, be careful to consider the following aspects:

- Model Architecture

- Pre-training Objective

- Pre-training Data

- Adaptation to downstream tasks

Architecture Examples

In reality, since the pretained model has a lot of parameters, it is common to freeze those weights for the following models and add a linear/NN layer on top for your downstream task.

GPT

- Transformer decoder with 12 layers

- 768 hidden units, 12 heads, 3072-dim feed forward layer (117M params)

- Pre-training objective: next word prediction

- Data:

- BooksCorpus

- 7K unpublished books covering a variety of genres (800M words)

- Allows model to condition on long-range dependencies

- For GPT-2 and GPT-3

- Larger models + more data = stronger LMs

then, the paper tested this model and applied to a variety of different downstream tasks.

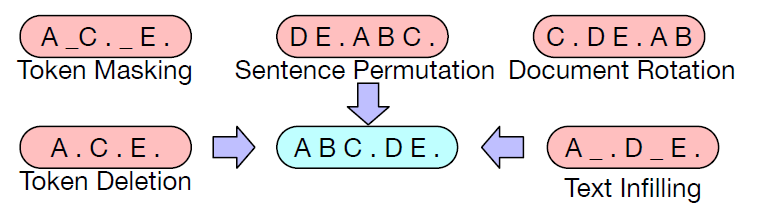

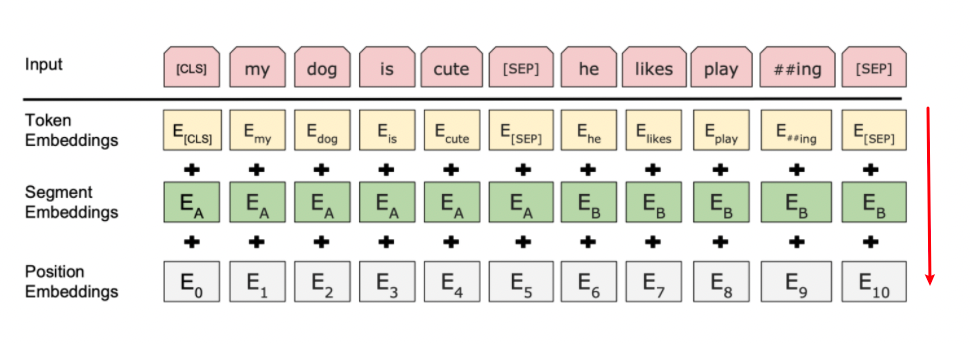

BERT

- Pre-training objective: masked word prediction + next sentence prediction

- LMs are unidirectional, but language understanding is bidirectional.

- next sentence prediction: whether if the next sentence follows from the previous sentence

- 12 layer transformer encoder, 768 hidden units, 12 attention heads = 110M parameters

- Data

- BooksCorpus (800M words)

- English Wikipedia (2,500M words)

- Text passages only

- Training time

- 4 days on 4x4 or 8x8 TPU v2 slices

- ~$7K to train BERT Large

Input architecture looks like

where:

- segment embedding is to denote where we are putting the separator.

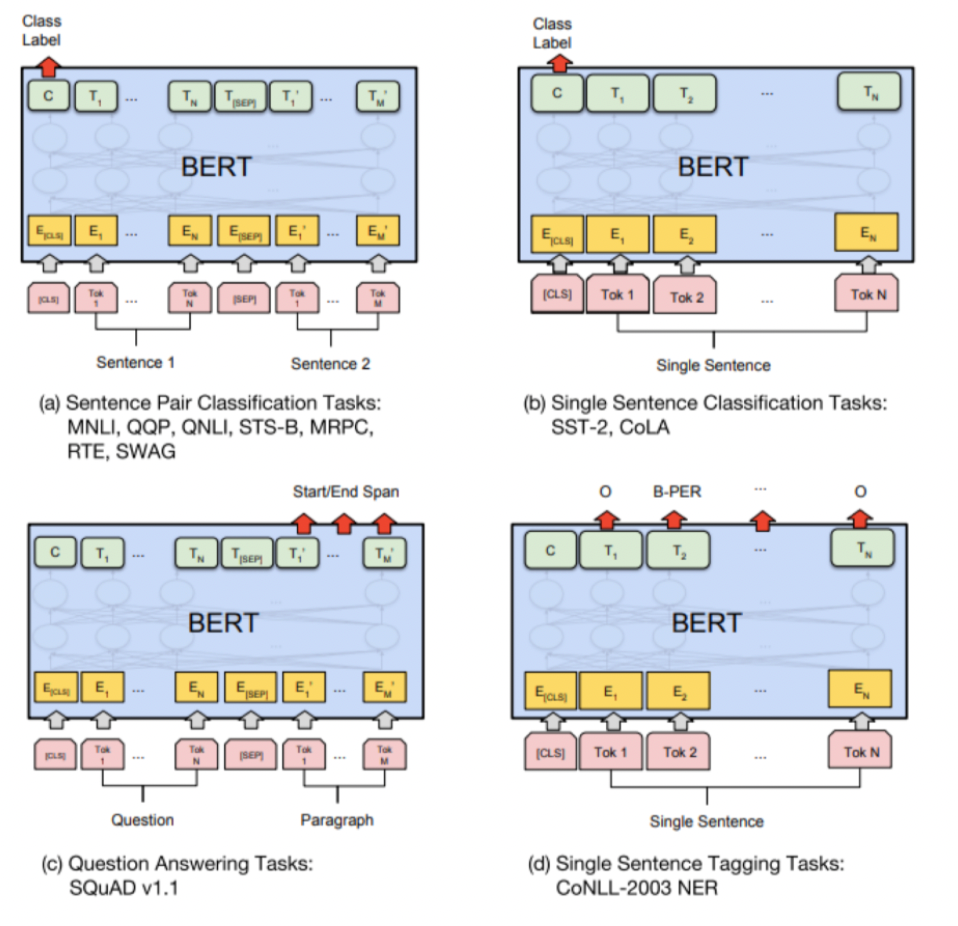

Then, using this model for other downstream tasks include

Some importnat variants of BERT:

- RoBERTa

- Next sentence prediction not necessary as objective

- BERT + data + training steps

- SpanBERT

- Mask out spans instead

- e.g. which span of the reading/resource relates to the question that was asked

- Significant improvements on span selection tasks (such as QA)

- Mask out spans instead

It turns out that this also can be tuned for other languages: https://github.com/google-research/bert/blob/master/multilingual.md

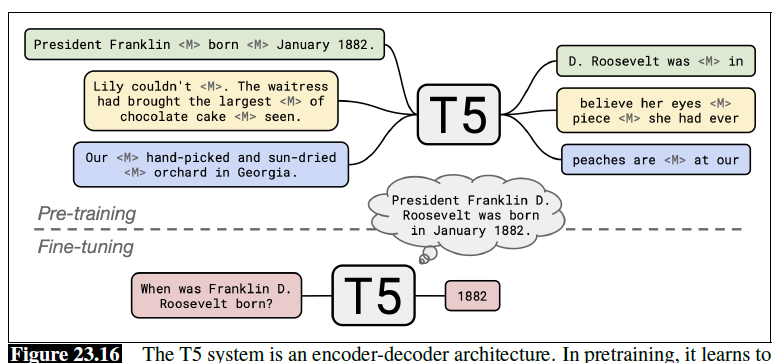

Future of Pretrained Models

Currently the trend is

- to have an increasing size of model and data:

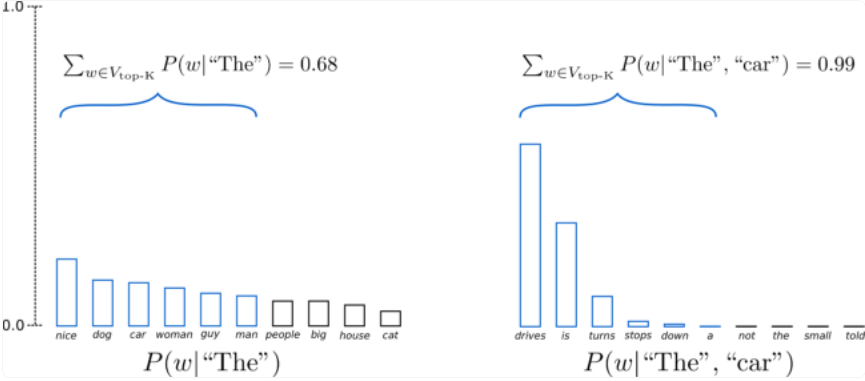

- consider zero/one/few-shot learning instead of transfer learning

Model size

- BERT/GPT (2018) -> ~100M params

- GPT-2 (2019) -> 1.5B params

- T5 (2020) -> 11B param

- GPT-3 (2020) -> 175B params

Dataset sizes

- GPT (2018) -> 800M words

- BERT (2018) -> 3B words

- GPT-2 (2019) -> 40B words

- GPT-3 (2020) -> 500B words

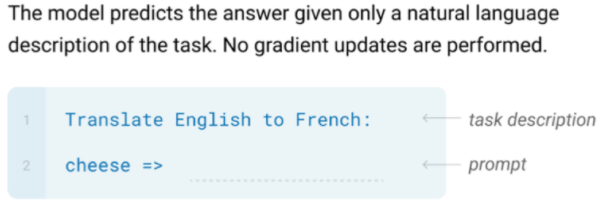

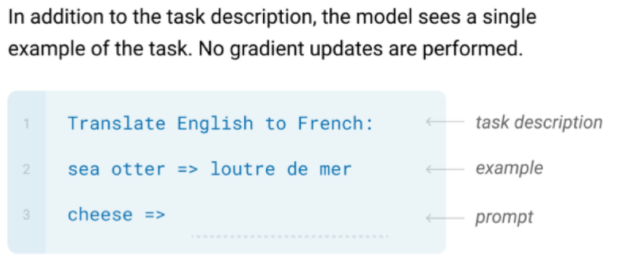

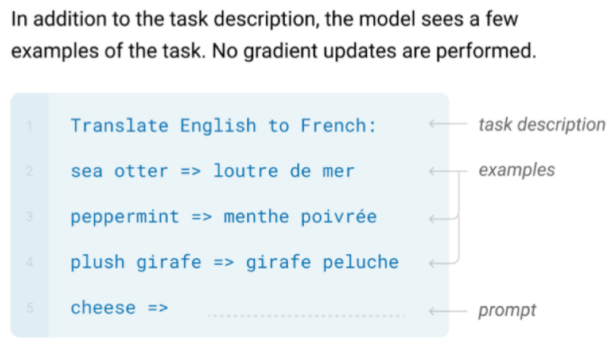

Additionally, different from the transfer learning paradigm, recently we have been looking at zero/one/few-shot learning

| Zero-shot | One-shot | Few-hot |

|---|---|---|

|

|

|

one key difference here is that we do NOT update weights, we are only providing exmaples = providing a context.

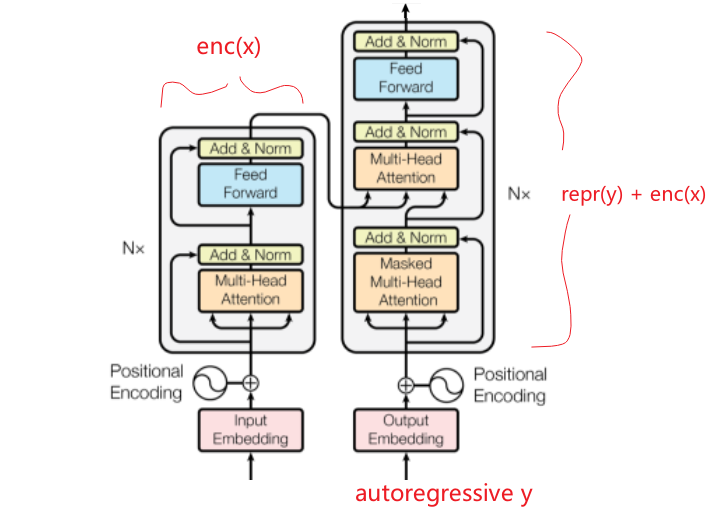

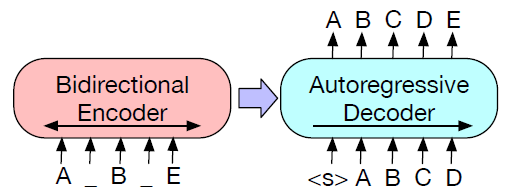

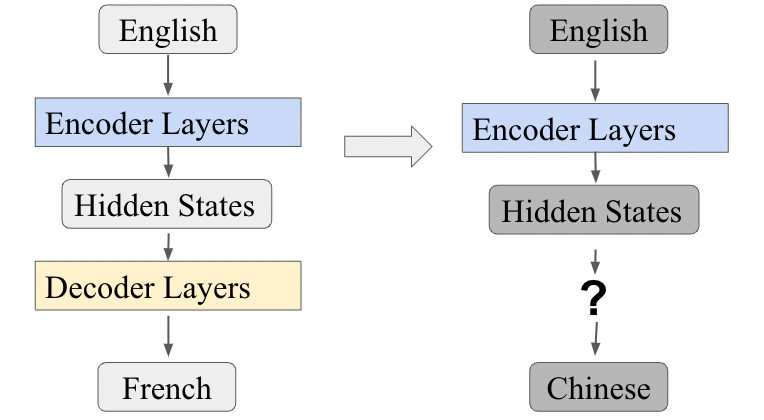

Machine Translation

The task here is to translate one natural language into another. Some common usages include:

- pure translation: Google translate

- computer-aided translation: used to produce a draft translation that is fixed up in a post-editing phase by a human translator. This is commonly used as part of localization: the task of adapting content or a product to a particular language community

- incremental translation: translating speech on-the-fly before the entire sentence is complete

- Image-centric translation: use OCR of the text on a phone camera image as input to an MT system to translate menus or street signs

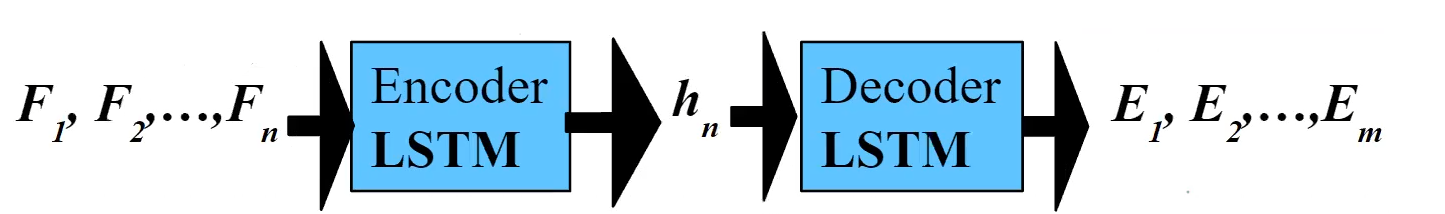

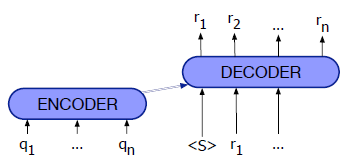

The standard algorithm for MT:

-

statistical methods (not used a lot today)

-

the encoder-decoder network, also called the decoder sequence to sequence network, an architecture that can be implemented with RNNs or with Transformers. Why can we not just use an encoder/just an decoder?

Machine Translation needs a map from a sequence of input words or tokens to a sequence of tags that are not merely direct mappings from individual words, which is exactly what such an architecture is doing.

An example of MT task would be:

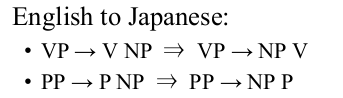

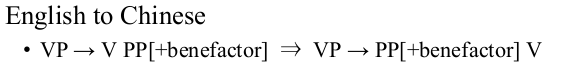

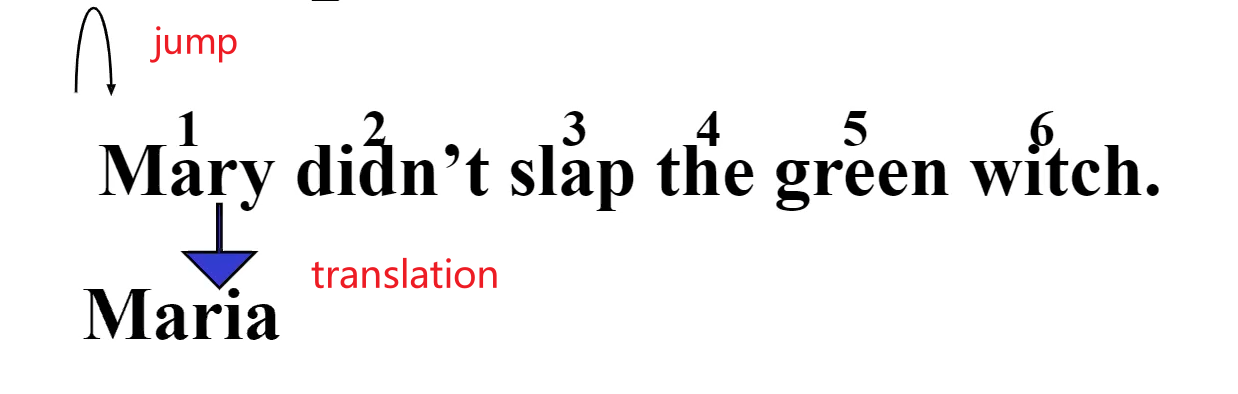

\[\text{English}: \quad \text{He wrote a letter to a friend}\\ \text{Japanese}: \quad \underbrace{\text{tomodachi}}_{\text{friend}}\,\,\underbrace{\text{ni tegami-o}}_{\text{to letter}}\,\,\underbrace{\text{kaita}}_{\text{wrote}}\]which evinces some key challenges that makes the task difficult:

- syntactical difference amongst languages: in English,

verbis in the middle while in Japanese,verbis at the end.- e.g. word ordering difference:

SVO(e.g. English),SOV(e.g. Hindi), andVSO(e.g. Arabic) languages. - e.g. In some SVO languages (like English and Mandarin)

adjectivestend to appear beforeverbs, while in others languages like Spanish and Modern Hebrew,adjectivesappear after thenoun

- e.g. word ordering difference:

- Pro-drop languages: regularly omit subjects that must be inferred.

- e.g. Chinese sometimes drop subjects

- Morphological difference

- Lexical gaps: there might not exist a one to one mapping for a word in foreign languages

Encoder-decoder networks are very successful at handling these sorts of complicated cases of sequence mappings.

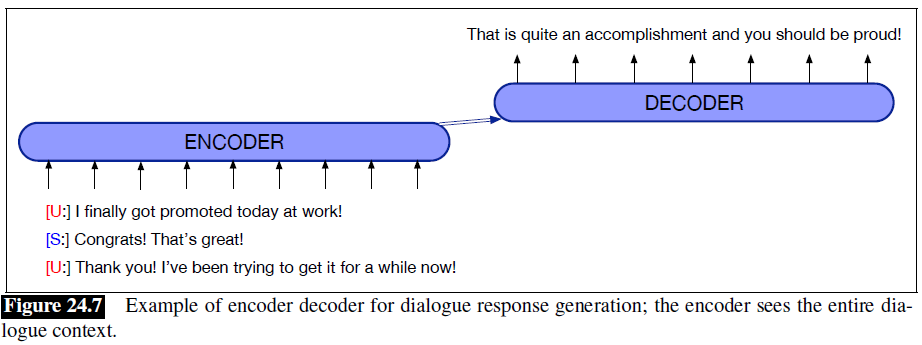

Indeed, the encoder-decoder algorithm is not just for MT; it’s the state of the art for many other tasks where complex mappings between two sequences are involved:

- summarization (where we map from a long text to its summary, like a title or an abstract)

- dialogue (where we map from what the user said to what our dialogue system should respond)

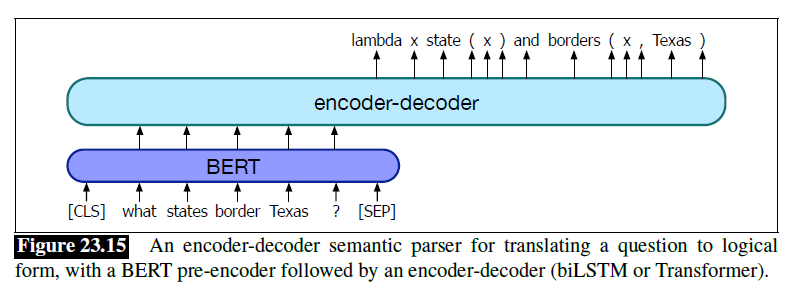

- semantic parsing (where we map from a string of words to a semantic representation like logic or SQL)

- and many others.

However, the current translation quality is not perfect:

- Existing MT systems can generate rough translations that at least convey the gist of a document

- High quality translations possible when specialized to narrow domains, e.g. weather forecasts.

Language Divergence

This section discusses a bit more on the differences between languages that makes the task of MT difficult

Languages differ in many ways, and an understanding of what causes translation such divergences will help us build better MT models. The study of these systematic cross-linguistic similarities and differences is called linguistic typology

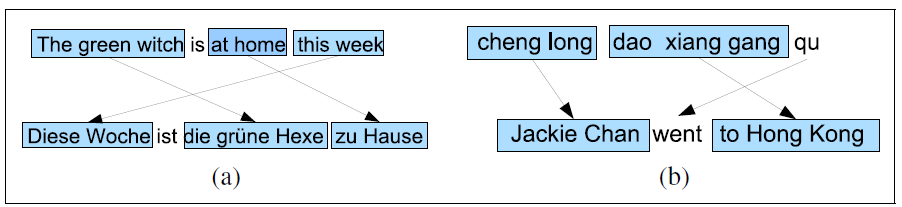

Word Order Typology

As we hinted it in our example above comparing English and Japanese, languages differ in the basic word order:

- Subject-Verb-Object order

- In some SVO languages (like English and Mandarin)

adjectivestend to appear beforeverbs, while in others languages like Spanish and Modern Hebrew,adjectivesappear after thenoun

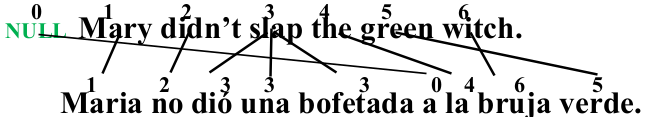

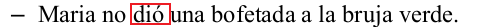

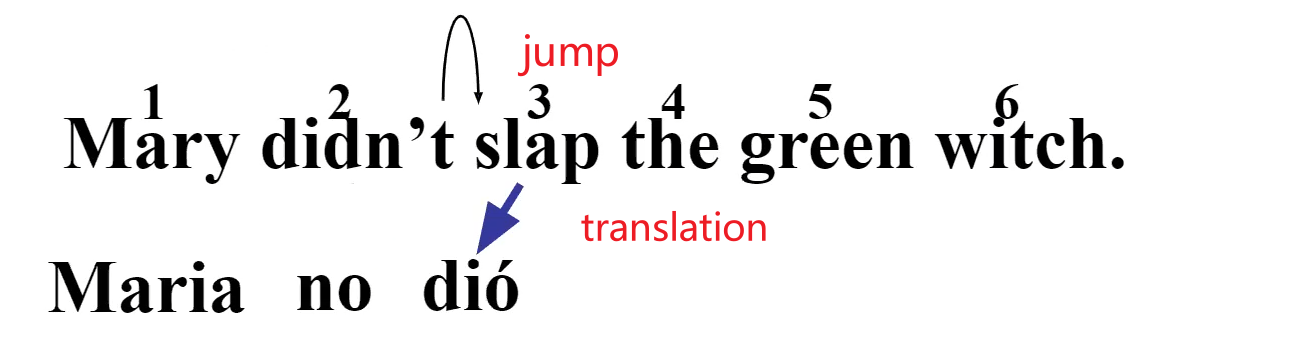

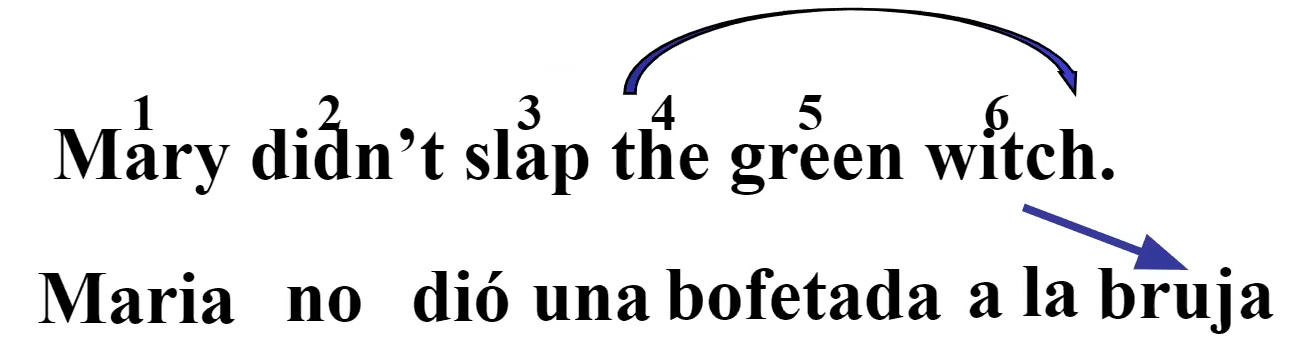

Visual example of word order difference hence complex mapping:

Lexical Divergences

Here we need to deal with problems such as:

- appropriate word/translation can vary depending on the context.

- e.g. German uses two distinct words for what in English would be called a

wall:Wandfor walls inside a building, andMauerfor walls outside a building.

- e.g. German uses two distinct words for what in English would be called a

- Sometimes one language places more grammatical constraints on word choice than another.

- lexical gap: no word or phrase, short of an explanatory footnote, can express the exact meaning of a word in the other language.

Morphological Typology

Recall that a morpheme is the smallest unit of meaning that a word can be divided into.

Then, in many languages we have:

- difference in the number of morphemes per word

- isolating languages like Vietnamese and Cantonese, one morpheme per word

- polysynthetic languages like Siberian Yupik (“

Eskimo”), in which a single word may have very many morphemes, corresponding to a whole sentence in English

- difference in the degree a morpheme is separable

- agglutinative languages like Turkish, in which morphemes have relatively clean boundaries

- fusion languages like Russian, in which a single affix may conflate multiple morphemes

This means that translating between languages with rich morphology requires dealing with structure below the word level, .e.g. use subword models such as BPE.

Rule-Based MT Model

Rules-based machine translation (RBMT) is a machine translation approach based on hardcoded linguistic rules. The rules used here would include:

- lexical transfer

- lexical reordering

- etc.

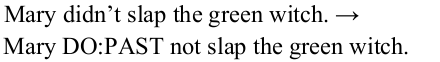

Direct Transfer

The task is to use rules to translate between, e.g. English to Spanish:

-

Use morphological analysis

-

Use lexical transfer rules to find syntactic one to many mapping of the translation of each word:

notice that here we did two things: do the translation per word (e.g. using a dictionary) + translated into basic grammar structure in Spanish

-

Lexical Reordering: fixing some more detailed word orders

-

Morphological generation: generate the morphology back from the first step

But of course even this rule-based approach has shortcomings in quality:

-

lexical reordering does not adequately handle more dramatic reordering such as that required to translate from an SVO to an SOV language. This means we need syntactic transfer rules that map parse tree for one language into one for another.

For example:

-

some transfer requires semantic information. For example, in Chinese

PPexpressing a goal semantically should occur beforeverb, but in English, it occurs after theverb.Hence we need rules such as

Statistical MT

Of course rule-based approach have big problems

-

difficult to come up with good rules between two languages

-

it does not scale as it requires hand-written rules.

Instead of rule based direct transfer, consider a statistical model which at least scales

SMT acquires knowledge needed for translation from a parallel corpus or bitext that contains the same set of documents in two languages.

Noisy Channel Model

The idea is to consider, for example translating French to English:

Source sentence (e.g. French) was generated from some noisy transformation of the target sentence (e.g. English), as we have done in Spelling Correction.

Therefore, we consider finding the translation $\hat{E}$:

\[\hat{E} = \arg\max P(E |F)\]for $F=f_1,f_2…,f_m$ being a sentence in French composing $m$ words, and $E = e_1,e_2,…,e_n$ being a sentence in English composed of $n$ words. Then using Bayesian rules:

\[\hat{E} = \arg\max_E P(E|F) = \arg\max_E \underbrace{P(F|E)}_{\text{likelihood of $E$}}\quad\underbrace{P(E)}_{\text{prior of $E$ occuring}}\]where $P(F\vert E)$ would then be computed by a translation model and $P(E)$ by a language model.

-

e.g. $P(E)$ could come form a n-gram model. or a PCFG which captures syntactic structure as well, etc.

-

to compute $P(F\vert E)$, we would ideally want to do:

- find phrase alignments from a given $E$ to $F$ and translate each phrase

- but this is hard to do, so in reality we consider word alignment $A=a_1,…,a_k$ then translation

more details on how this works is covered in the next section.

Word Alignment for MT

Recall that our task is to compute $P(F\vert E)$, for $F$ being a random variable and $E$ of length $n$.

To simplify the problem, typically assume the following:

where notice that:

given a $E$, each word in $E$ aligns to one or more words in $F$

each word in $F$ aligns to 1 word in $E$ (so it is a vector, as shown below)

Then, an alignment in basically becomes a size $9$ vector which looks like:

\[[1,2,3,3,3,0,4,6,5]^T\]which is for each word in $F$, the index of the word in $E$ which generated it. (then you can apply word-word level translation)

In general, such an alignment can be learnt from

-

supervised word alignments, but human-aligned bitexts are rare and expensive to construct.

-

so typically obtained using an unsupervised EM-based approach to compute a word alignment from unannotated parallel corpus.

IBM Model 1

Now, assume that $P(F\vert E,A)$ is computable. The IBM model for SMT can generated a single $F$ from $E=e_1,…,e_n$ of length $n$ by:

- choose length $k$, so that we would have $F=f_1,…,f_k$

- choose an alignment $A=a_1,…,a_k$ which represents which English word it should comes from

- For each position of word in $F$, generated a word $f_j$ from the aligned English version $e_{a_j}$

Next, we can define how to compute $P(F\vert E)$ of $E$ having length $n$ by:

-

given some length distribution $P(K=k\vert E)$

-

assuming all alignments are qually likely, then there are $(n+1)^k$ possible alignments. Hence:

\[P(A=a_1,...,a_k|E=e_1,...,e_n) = \frac{P(K=k|E)}{(n+1)^k},\quad \forall a_i\]i.e. same probability if given the same length. e.g. $P(A=0,1,2\vert E)=P(A=1,0,2\vert E)$

-

Given some translation probability per word from $e_y \to f_x$, let it be $t(f_x\vert e_y)$ we then have:

\[P(F|E,A) = \prod_{j=1}^kt(f_j|e_{a_j})\]where the alignment would be given by previous step

-

Finally, we sum over all possible alignments:

\[P(F|E) = \sum_A P(F|E,A)P(A|E) = \sum_A \frac{P(k|E)}{(n+1)^k} \prod_{j=1}^kt(f_j|e_{a_j})\]where the alignments $A$ would vary both in length $k$ and in the elements/indices within an alignment of same length.

Typically use an unsupervised EM-based approach to compute a word alignment from unannotated parallel corpus, e.g. you could have $A=0,1,2$, $A=1,0,2$, $A=1,0,0,2$, etc.

- notice that this is only a forward algorithm, so if we need to decode, this would be not very computational efficient.

Lastly, the decoding produce for finding the best alignment can be done by:

\[\hat{A} = \arg\max_A \frac{P(k|E)}{(n+1)^k} \prod_{j=1}^kt(f_j|e_{a_j}) = \arg\max_A \prod_{j=1}^kt(f_j|e_{a_j})\]then, how do we maximize a product of terms? Since each term is independent, we can maximize it by maximizing each term independently. Hence:

\[\hat{a}_j = \arg\max_{i} t(f_j,e_i),\quad \forall j\]which tells you which English word $f_j$ should align to.

- of course you can compute this once you know all the probabilities $P(k\vert E), t(f_y\vert e_x)$.

HMM-Based Word Alignment

Obviously, one problem with IBM Model 1 is that it assumes all alignments are equally likely and does not take into account locality, e.g. next to each other words are likely to be next to each other in another language as well.

To solve this issue, HMM models can be used which models the jump width as hidden state, i.e. :

- translate current word

- decide which next word to jump to for translation

- repeat from step 1

First, an example would be

| Iteration 1 | Iteration 3 | Iteration 4 |

|---|---|---|

|

|

|

notice that:

-

the jump could jump to the current word itself, as there could be one-to-many mapping

-

the jump could jump both forward and backward discontinuously. E.g.

Therefore, now we can define what this model really is

- Hidden states are current English word $e_i$ being translated

- State transition would be modelling the jumps for the next word, which is $a_{ij}=P(s_i \to s_j)$

- Observations are the translated French word $f_j$

- Emission probability is therefore $b_j(f_i)=P(f_i\vert e_j)$, which is basically probability of translation from $e_j \to f_i$

Then this means that:

- Observation likelihood $P(F\vert E)$ can be computed by the forward algorithm with HMM

- Decoding $\hat{E}=\arg\max_E P(E\vert F)$ can be computed by Viterbi algorithm with HMM

Training Word Alignment Models

Both the IBM model 1 and HMM model require the following parameters

- $P(f_i\vert e_j)$ probability of individual word translation (shown in the example below)

- $P(K=k\vert E)$, length of target translation sentence

which can be obtained/trained on a parallel corpus to set the required parameters, including the specific ones such as:

- for HMM Model, we also need $P(e_i)$ and $P(e_i \to e_j)$ being transition probabilities

In general

- if we have a labelled/supervised (hand-aligned) training data, parameters can be estimated directly using frequency counts; e.g. sentence alignment. Which sentence in a corpus corresponds to which sentence is another corpus.

- most often we have an unsupervised piece of parallel corpus. Then we need to estimate the probabilities using EM type algorithm.

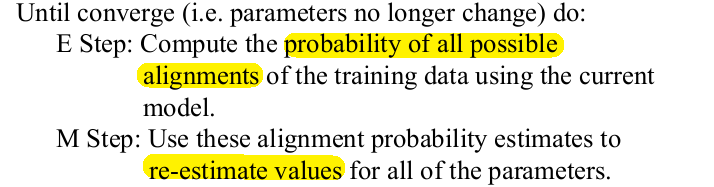

A sketch of the algorithm looks like

For example, given two data in the training corpus:

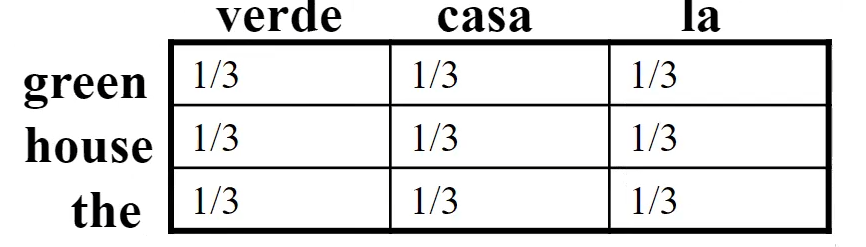

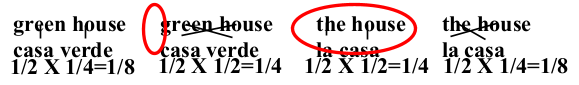

\[\text{green house} \iff \text{casa verde}\\ \text{the house} \iff \text{la casa}\]Our aim is to be able to compute $P(s_i\vert e_j)$ for $s_i$ being a Spanish word, and perhaps vice versa.

- notice that you should expect $\text{house} \iff \text{casa}$ to have a higher probability as this pair occurs more often

- this is exactly what the EM algorithm tries to do

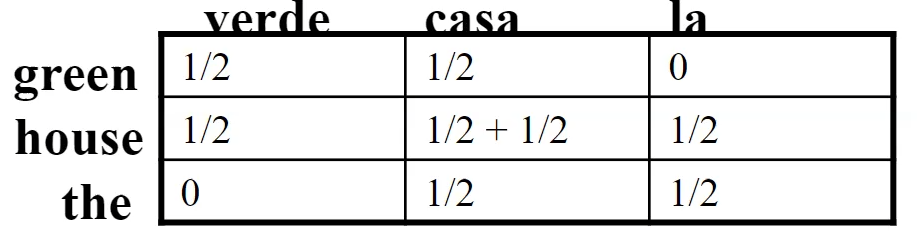

-

Step one: initialization. Here we can assume a uniform distribution such that each row/column sums to one

notice that each cell would represent $P(s_i\vert e_j)$ and vice versa.

-

Expectation Step: we impute the missing data, which is to consider all possible alignments/individual translations. Here we have four possible cases:

\[\text{green} \iff \text{casa}\quad \text{AND} \quad \text{house} \iff \text{verde};\quad p=\frac{1/9}{2/9}=\frac{1}{2}\\ \text{green} \iff \text{verde}\quad \text{AND} \quad \text{house} \iff \text{casa};\quad p=\frac{1/9}{2/9}=\frac{1}{2}\]and that

\[\text{the} \iff \text{la}\quad \text{AND} \quad \text{house} \iff \text{casa};\quad p=\frac{1/9}{2/9}=\frac{1}{2}\\ \text{the} \iff \text{casa}\quad \text{AND} \quad \text{house} \iff \text{la};\quad p=\frac{1/9}{2/9}=\frac{1}{2}\] -

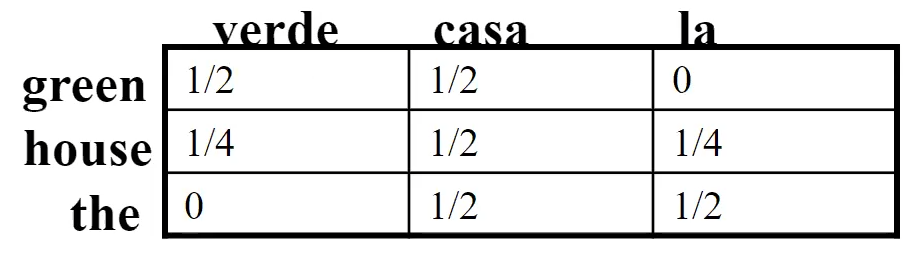

Maximization Step: then we fill in the table using the previous probabilities

And normalizing to sum to one:

-

Repeat step 2-3 until convergence. Here just to be clear we show one more iteration of step 2. Possible alignments and their probability:

Hence

\[\text{green} \iff \text{casa}\quad \text{AND} \quad \text{house} \iff \text{verde};\quad p=\frac{1/8}{3/8}=\frac{1}{3}\\ \text{green} \iff \text{verde}\quad \text{AND} \quad \text{house} \iff \text{casa};\quad p=\frac{1/4}{3/8}=\frac{2}{3}\]and

\[\text{the} \iff \text{la}\quad \text{AND} \quad \text{house} \iff \text{casa};\quad p=\frac{1/4}{3/8}=\frac{2}{3}\\ \text{the} \iff \text{casa}\quad \text{AND} \quad \text{house} \iff \text{la};\quad p=\frac{1/8}{3/8}=\frac{1}{3}\]

Note that the above works by the assumption that many words will be repeated.

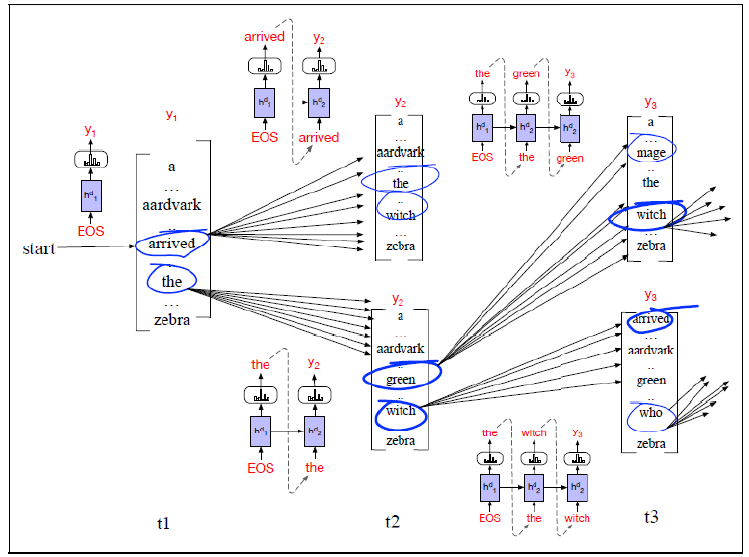

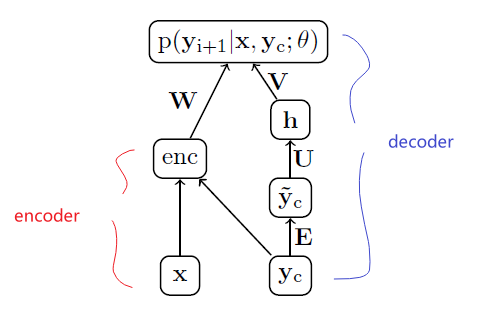

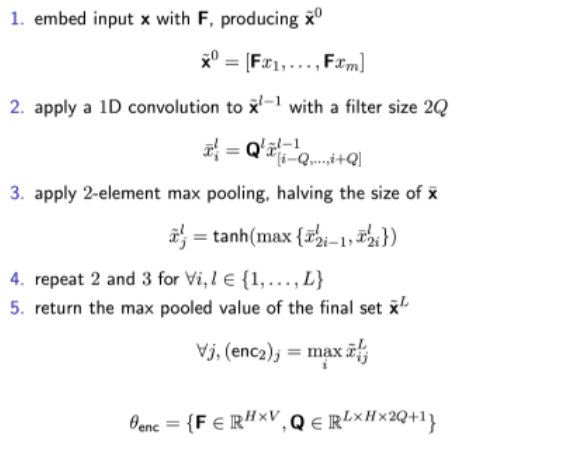

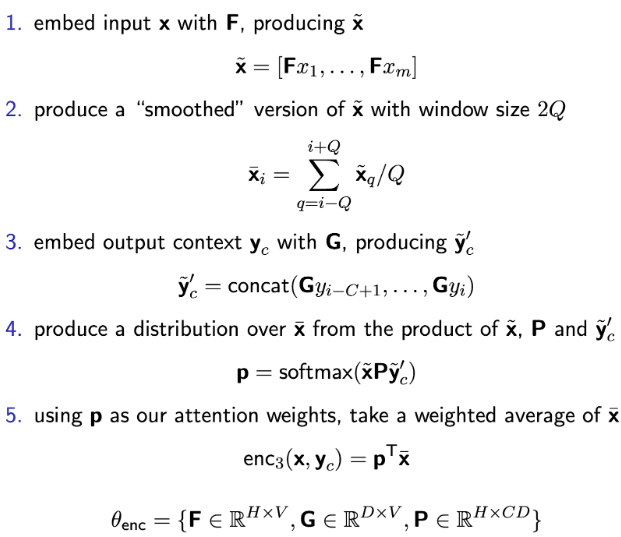

Neural Machine Translation

As mentioned before, translation often involves a complex map between two sequences, hence usually we do

-

encoder-decoder model (e.g. LSTM blocks)

e.g. transformer based encoder-decoder

-

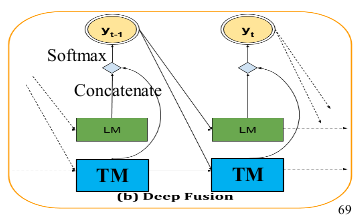

integrate an LSTM with language model using “deep fusion.”

which basically is for decoder to predict the next word from a concatenation of the hidden states of both the translation and language LSTM models.

Evaluating MT

Translations are evaluated along two dimensions:

- adequacy: how well the translation captures the exact meaning of the source sentence. Sometimes called faithfulness or fidelity.

- fluency: how fluent the translation is in the target language (is it grammatical, clear, readable, natural).

The most accurate metric is to have human to score the translations based on the above criterion, but that is inefficient and expensive. This in reality is done often in the following manner:

- Collect one or more human reference translations of the source, i.e. gold standard

- Compare MT output to these reference translations.

- Score result based on similarity to the reference translations.

Some automatic scoring system implemented today include BLEU, NIST, TER, etc.

BLEU

BLEU (Bilingual Evaluation Understudy): scores based on the following criteria:

- What percentage of machine n-grams can be found in the reference translation?

- Brevity Penalty: if translated sentence is too short, e.g.

the., it matches/precision $1.0$ but it is cheating!

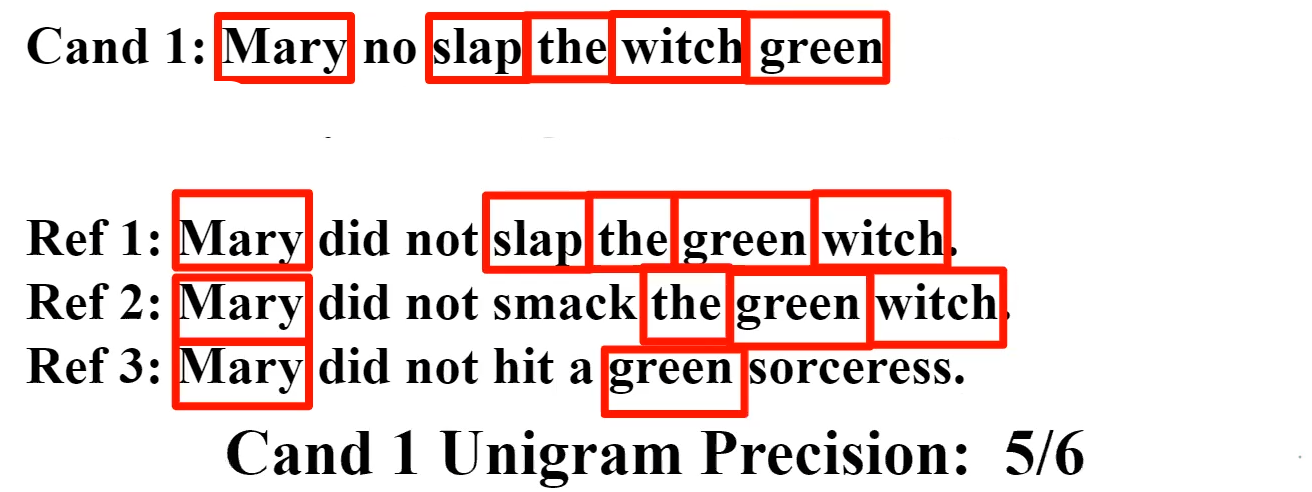

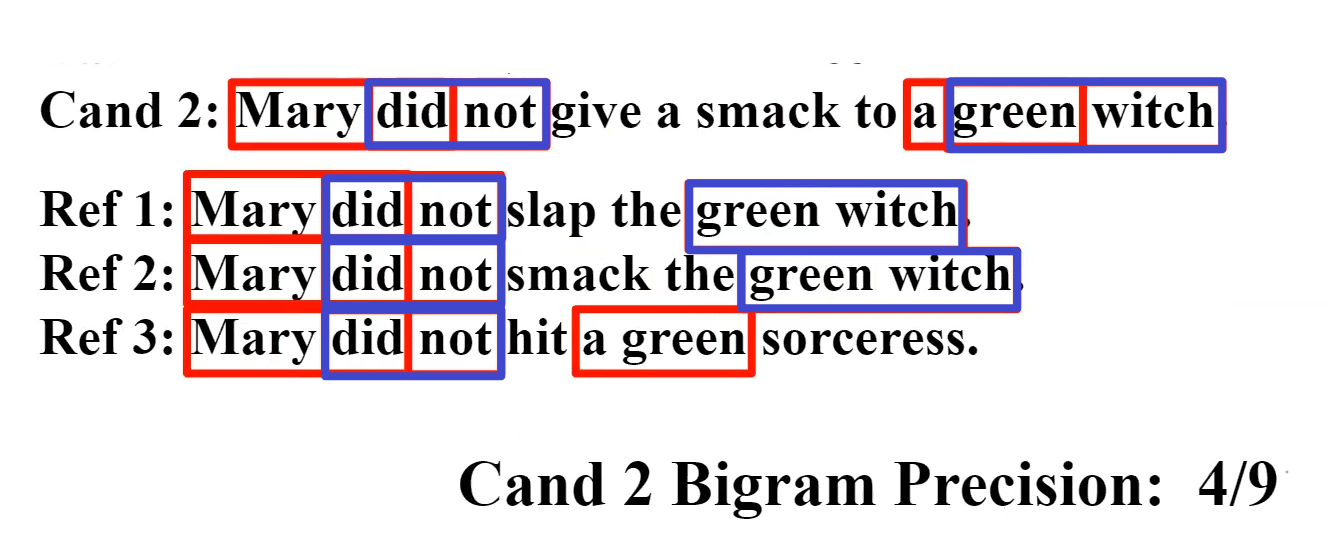

To answer the first question, an example would be:

-

finding shared unigrams:

where basically we count a match if the unigram appears in at least one of the reference translation

-

finding shared bigrams

-

until some fixed size $N$, typically $4$.

Finally, scoring the first criteria involves finding a geometric mean:

\[\text{Precision}_{gm} = \sqrt[N]{\prod_{n=1}^NPr_n}\]However, this alone will not work, because you can have a shorter sentence which would give a higher score. Therefore, we also need a brevity penalty/the second criteria:

-

ideally, we might compute $\text{Recall}$. which could solve the problem. But this is problematic since there are multiple alternative gold-standard references.

-

therefore, we cook up with the following metric:

Define effective reference length, $r$, as the length of the reference sentence with the largest number of n-gram matches. Then, if $c$ is the candidate sentence length:

\[BP = \begin{cases} 1,& \text{if }c > r\\ e^{1-(r/c)},& \text{if }c \le r \end{cases}\]

So that the final score is:

\[BLUE = \text{Precision}_{gm} \times BP\]Challenges and Futures in MT

Certain challenges in MT include:

- OOV word in test set. We need smoothing or other techniques, such as subword.

- domain mismatch: e.g. corpus in movie but test in Amazon product review

- translating long context is hard, even with attention it is not completely solved

- low-resource language pairs

- NMT can pick up biases (e.g. gender bias)

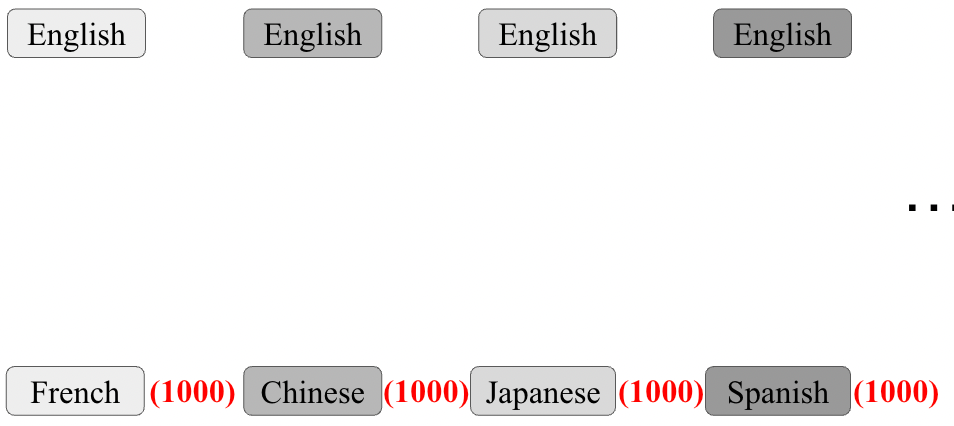

Some future directions include:

- unsupervised MT attempts to learn language laignment form monolinguial data

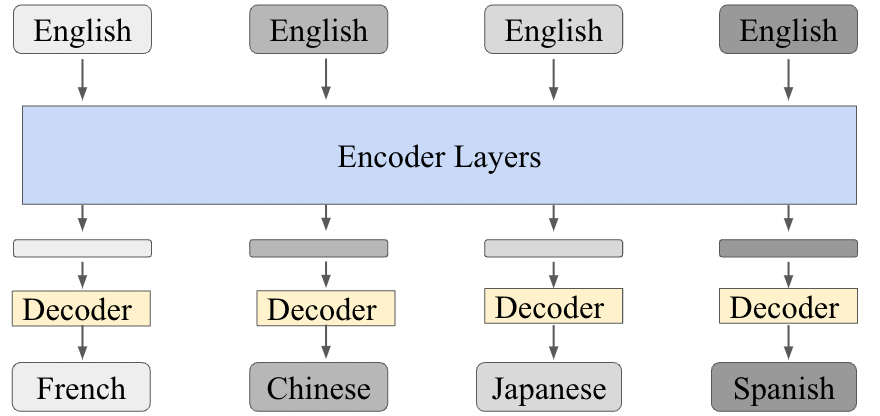

- multilingual NMT, learn shared representation across all languages (which can solve low resource problem)

- Neural LSTM methods are currently the state-of-the-art.

Sentiment Analysis and Classification

In this chapter we introduce the algorithms such as Naïve text Bayes algorithm and apply it to text categorization, the task of assigning a label or category to an entire text or document. In particular, we focus on categorizing text based on its sentiments, i.e. sentiment analysis.

- e.g. positive or negative orientation that a writer expresses toward some object.

- simplest version of sentiment analysis is a binary classification task

Other commonly used names for sentiment analysis include: Opinion extraction; Opinion mining; Sentiment mining; Subjectivity analysis.

So now are are dealing with classification task, which is to be set in contrast to Seq2Seq task. Here we only need to output a single label/or a small fixed set for the entire document, e.g. a single written product review.

Other text categorization other than sentiment include:

- Spam detection: another important commercial application, the binary classification task of assigning an email to one of the two classes spam or not-spam

- Authorship attribution: whether if a given text is written by the person

- Subject category classification: which library category does a piece of text belong to? Science? Humanities? etc.

The goal of classification is to take a single observation, i.e. a test sample, extract some useful features, and thereby classify the observation into one of a set of discrete classes.

And again recall that there are two broad class of classification algorithms:

- Generative classifiers like Naïve Bayes

- Discriminative classifiers like logistic regression

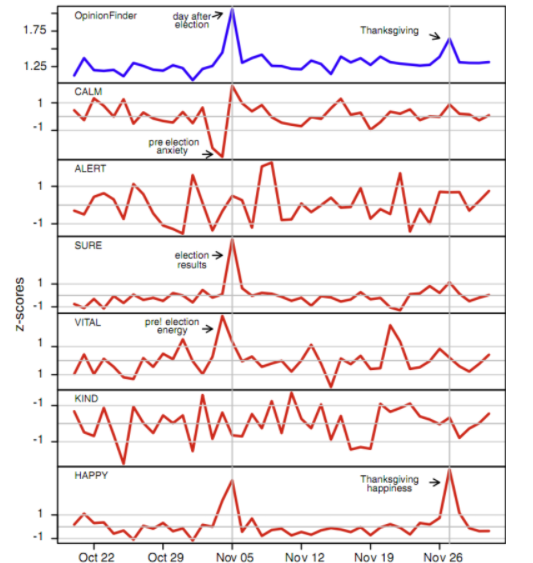

Real Life Example: Twitter posts sentiment analysis

For posts from Twitter:

where we see “Happiness” surges around Election day and Thanksgiving. Other commonly used cases include:

-

Movie: is this review positive or negative?

- Products: what do people think about the new iPhone?

- Politics: what do people think about this candidate or issue?

- Used as an input/feature to other task, such as predicting market trends from sentiment

- etc.

Scherer Typology of Affective States

Some labels you can have for affective states human have:

- Emotion: brief organically synchronized … evaluation of a major event

- angry, sad, joyful, fearful, ashamed, proud, elated

- Mood: diffuse non-caused low-intensity long-duration change in subjective feeling

- cheerful, gloomy, irritable, listless, depressed, buoyant

- more for longer term predictions

- Interpersonal stances: affective stance toward another person in a specific interaction

- friendly, flirtatious, distant, cold, warm, supportive, contemptuous

- used often for Social Media analysis, how users interact online between each other

- Attitudes: enduring, affectively colored beliefs, dispositions towards objects or persons

- liking, loving, hating, valuing, desiring

- used a lot for product reviews and sentiment analysis

- Personality Traits

- nervous, anxious, reckless, morose, hostile, jealous

- not very commonly used, but related to those personality test you take

Of course, which ones to use depends on the particular application.

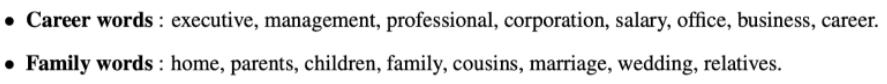

Sentiment Analysis and Attitudes

Often sentiment analysis is framed as the detection of attitudes. In particular, we want to find out

-

Holder (source) of attitude

-

Target (aspect) of attitude

- Type of attitude

- From a set of types: Like, love, hate, value, desire, etc.

- Or (more commonly) simple weighted polarity: positive, negative, neutral, together with strength

- Text containing this attitude: which sentence/document

In reality:

- Simplest task: Is the attitude of this text positive or negative? This will be our focus in this chapter as a classification task.

- More complex: Rank the attitude of this text from 1 to 5, i.e. include strength

- Advanced: all the 4 subtasks.

Sentiment Classification

Here we take on the simplest task: Is an IMDB movie review positive or negative?

For instance, we could have

| Positive | Negative |

|---|---|

| When Han solo goes light speed , the stars change to bright lines, going towards the viewer in lines that converge at an invisible point . Cool. |

“snake eyes” is the most aggravating kind of movie : the kind that shows so much potential then becomes unbelievably disappointing |

In general, the steps we need to go through before and at classification time include:

-

Tokenization:

- Deal with HTML and XML markup

- Deal with Emoticons

- Deal with Twitter mark-up (names, hash tags), e.g.

@xxx

-

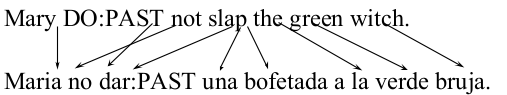

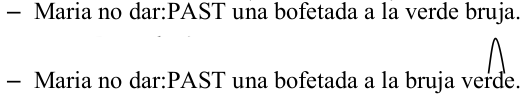

Feature Extraction

-

Some how handle negation, which could flip the meaning of a sentence:

\[\text{I didn’t like this movie.}\quad v.s.\quad \text{I did like this movie.}\]one idea is to convert the former to:

\[\text{I didn’t NOT\_like NOT\_this NOT\_movie}.\]basically adding NOT to every word between negative and the following punctuation.

-

Then perhaps we don’t need all words? Only need the adjectives? (It turns out using All words work better)

-

-

Classification using different classifiers:

\[\text{class} = \arg\max_{c_j \in C} P(C|W=w_1,...,w_n)\]- Naive Bayes

- MaxEntropy

- SVM

We will first introduce some baseline algorithms that works at least.

Baseline: Multinomial Naïve Bayes

Multinomial Naïve Bayes is essentially a Naive Bayes classifier that is able to output a class among many classes (more than two). This is essentially done by:

\[P(w_1,...,w_n|c_j) = \prod_i P(w_i|c_j)\]

- (assumption: Naive Bayes) probabilities $P(w_i\vert c)$ are independent given the class $c$. Therefore it allows

hence

\[c = \arg\max_{c_j \in C} P(c_j) P(w_1,...,w_n |c_j) =\arg\max_{c_j \in C} P(c_j)\prod_i P(w_i |c_j)\]

So for Naive Bayes, we consider:

\[c = \arg\max_{c_j \in C} P(W|c_j)P(c_j) =\arg\max_{c_j \in C} P(c_j)\prod_i P(w_i |c_j)\]meaning that we assume each word to be independently contributing to $c_j$. Note that

-

this is a generative model because this equation can be understood as:

- generate a class $P(c_j)$

- generate the words from the class $P(w_i\vert c_j)$

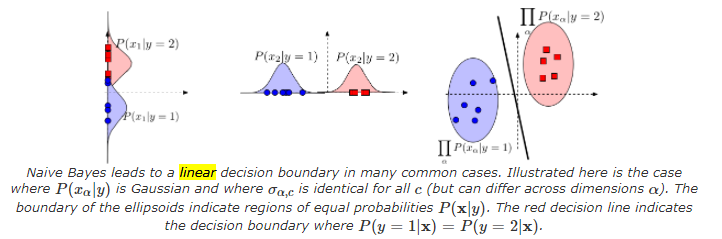

-

in many cases Naive Bayes is a linear classifier

where you see the decision boundary for $P(c_1\vert x)=P(c_2\vert x)$ is a line.

To avoid numeric underflow, we would convert the estimate to log space:

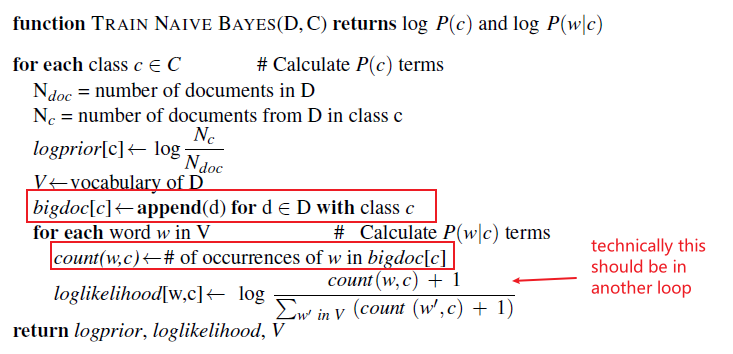

\[c_{NB} = \arg\max_{c_j \in C} \,\,\log P(c_j) + \sum_i \log P(w_i|c_j)\]But how do we learn $P(c_j)$ and $P(w_i\vert c_j)$?

-

learning the prior $P(c_j)$ is easy. Given $N_{doc}$ documents (e.g. posts), let $N_{c_j}$ be the number of document with sentiment class $c_j$. Then:

\[\hat{P}(c_j) = \frac{N_{c_j}}{N_{doc}}\] -

learning the likelihood means counting how often is $w_i$ associated with class $c_j$. Hence we consider:

\[\hat{P}(w_i|c_j) = \frac{\text{Count}(w_i, c_j)}{\sum_{w\in V}\text{Count}(w_i, c_j)}\]where:

- $\text{Count}(w_i, c_j)$ represent the number of times the word $w_i$ appears among all words in all documents of class $c_j$.

- In other words, we first concatenate all documents with class $c_j$ together, then count the number of occurrence of $w_i$.

- since eventually we need this for all words, $V$ presents the entire vocabulary instead of vocabulary in class $c_j$.

Since we are using count, consider smoothing as well for unmet $w_i,c_j$ pair:

\[\hat{P}(w_i|c_j) = \frac{\text{Count}(w_i, c_j)+1}{\sum_{w\in V}\text{Count}(w_i, c_j)+1}=\frac{\text{Count}(w_i, c_j)+1}{\text{Count(total words in $c_j$)}+|V|}\]which is critically needed as the likelihood term is a multiplication.

- for OOV words, we can use the technique introduced before by inducing

OOVvocab in the training set. However, for Naive Bayes, it is more common to ignore those words completely (as if you didn’t see it) - other processing step include removing stop words such as

aandthefor both train and test sets. Though the performance gain from this is not significant.

Therefore, the algorithm for learning is

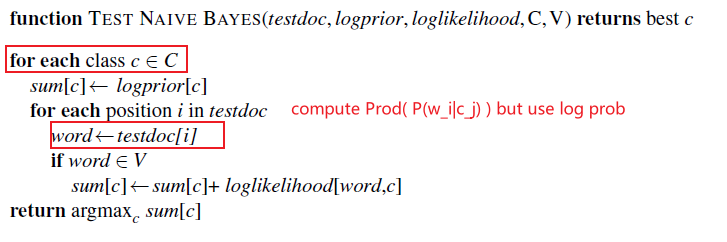

Then for testing, we simply perform:

\[\hat{c} = \arg\max_{c_j \in C} P(c_j)\prod_i P(w_i |c_j)\]Hence the algorithm for test is

Example

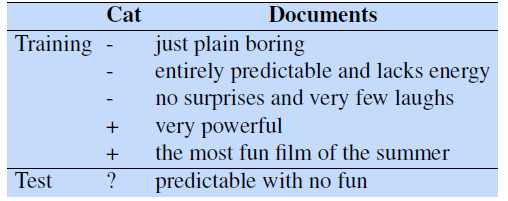

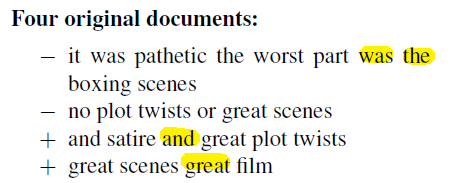

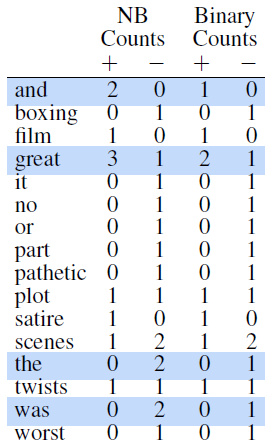

We’ll use a sentiment analysis domain with the two classes positive (+) and negative (-). The train and test set is provided below

What are the parameters $P(c_j)$ and $P(w_i\vert c_j)$ in this case?

-

the prior $P(c_j)$ is simply counts:

\[P(-) = \frac{3}{5},\quad P(+) = \frac{2}{5}\] -

then the smoothed likelihood essentially is

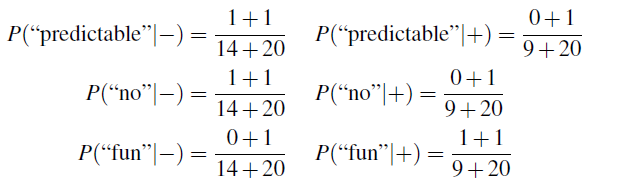

\[\hat{P}(w_i|c_j) = \frac{\text{Count}(w_i, c_j)+1}{\sum_{w\in V}\text{Count}(w_i, c_j)+1}=\frac{\text{Count}(w_i, c_j)+1}{\text{Count(total words in $c_j$)}+|V|}\]where $\vert V\vert =20$ and we see that $\text{Count(total words in$+$)}=14$ and $\text{Count(total words in$-$)}=9$. Hence some examples:

and the rest is trivial.

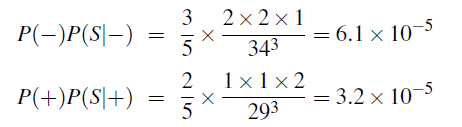

For estimation, first we realize that the word with is OOV. Hence we ignored it as we are doing Naive Bayes. Then we are basically predicting $\text{“Prediction no fun”}$:

hence the result is $-$ as it has a higher probability.

Improvements From Baseline

While standard naive Bayes text classification can work well for sentiment analysis, some small changes are generally employed that improve performance.

-

does the $\text{Count}(w_i,c_j)$ really matters? Maybe all it matters is that fact that it occurred in $c_j$ document at least once!

-

dealing with negation improves Naive Bayes accuracy as well:

\[\text{I didn’t like this movie.}\quad v.s.\quad \text{I did like this movie.}\]notice that the negation of didn’t completely changed $P(\text{like}\vert c_j)$, for instance. The baseline that deals with this is mentioned before by converting them to

\[\text{I didn’t NOT\_like NOT\_this NOT\_movie}.\]where newly formed words such as $\text{NOT_like}$ will be treated as a word.

-

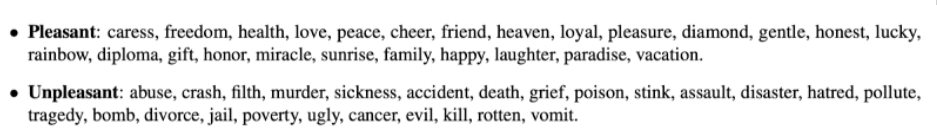

insufficient labeled training data to train accurate naive Bayes classifiers using all words in the training set. In those cases we will have to derive word features (e.g. labelled emotion carried in a word) using sentiment lexicons. Some popular ones online include:

-

General Inquirer, LIWC, The Opinion Lexicon, MPQA

-

example of annotated words from MPQA include:

\[+ : admirable, \,\,beautiful, \,\,confident, \,\,dazzling, \,\,ecstatic,\,\, favor,\,\, glee,\,\, great\\ - : awful,\,\, bad,\,\, bias,\,\, catastrophe, \,\,cheat, \,\,deny,\,\, envious,\,\, foul,\,\, harsh, \,\,hate\]A common way to use lexicons in a naive Bayes classifier is to add a feature that is counted whenever a word from that lexicon occurs.

-

Here we will go over details on how to implement the Binary NB variant.

If we believe that word occurrence may matter more than word frequency: then we can do “Binary Naive Bayes”, which clips all the word counts in each document at $1$. (i.e. it’s like a Boolean switch for each word)

this results in binary multimodal naive Bayes, or binary NB

in practice this seems to be true, that performance is better.

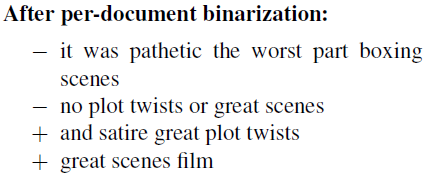

In algorithm, basically you can first reduce all duplicate words in each document to one occurrence, and then perform the same algorithm.

For example:

| Raw Naive Bayes | Boolean Naive Bayes |

|---|---|

|

|

where the highlighted words are duplicates within the document. Hence the counts become:

Then for testing, the we do not remove words as this is just for making the probability work better.

Problems in Sentiment Classification

Some cases where Naive Bayes’ assumption essentially fails:

-

Subtlety in words: each word itself is neutral, but overall there is a sentiment:

“If you are reading this because it is your darling fragrance, please wear it at home exclusively, and tape the windows shut.”

-

Thwarted Expectations and Ordering Effects: where words such as However would suddenly change the sentiment

“This film should be brilliant. It sounds like a great plot, the actors are first grade, and the supporting cast is good as well, and Stallone is attempting to deliver a good performance. However, it can’t hold up.”

In those cases just modelling $P(w_i\vert c_j)$ won’t work. Neural Models that remember/see contexts would work better.

Polarity in Sentiment Analysis

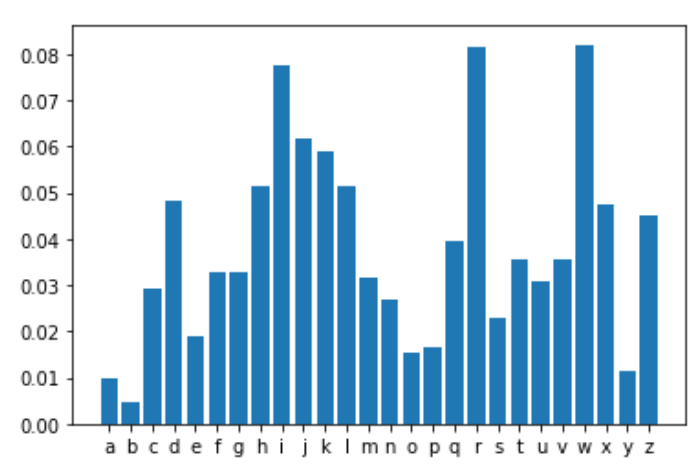

Here, the idea is that each word/phrase themselves could have polarity, meaning that they themselves could be indicative of the sentiment of the sentence/document.

Polarity is float which lies in the range of $[-1,1]$ where $1$ means positive statement and $-1$ means a negative statement.

Some simple measurement of such is to consider:

- how likely each word is to appear in each sentiment class (discussed here)

- use some form of embedding, which could be learnt in a Word2Vec approach

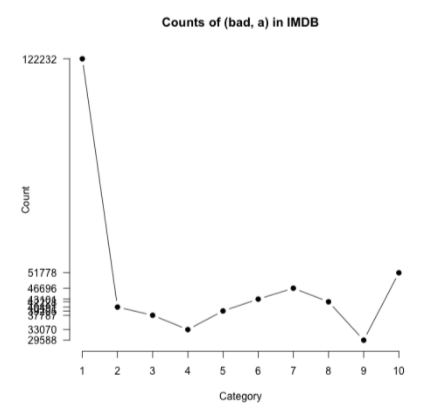

For instance, consider the polarity of the word “bad”:

where

- each category $c$ would represent the word “bad” appearing in a 1-star, 2-star, 3-star review, etc

- notice that even in 10-star comments, we still see the word “bad” quite often. This could happen due to negations.

Then, since we have the counts, we can compute the likelihood

\[P(w|c) = \frac{\text{Count}(w,c)}{\sum_{w}\text{Count}(w,c)}\]where $c$ would represent 1-star, 2-star, … Then we could scale it so it is comparable between words

\[\frac{P(w|c)}{P(w)}\]for $P(w)$ would be a the probability of observing the word at all.

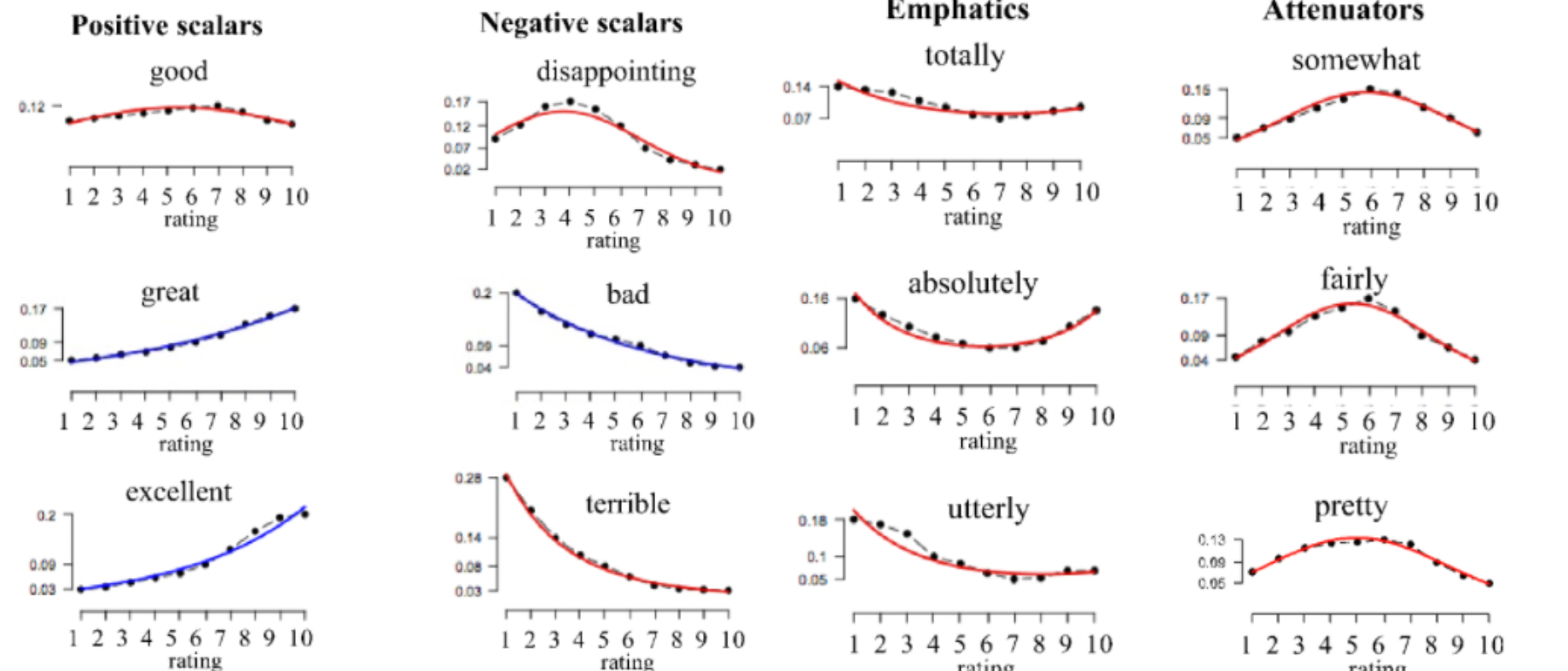

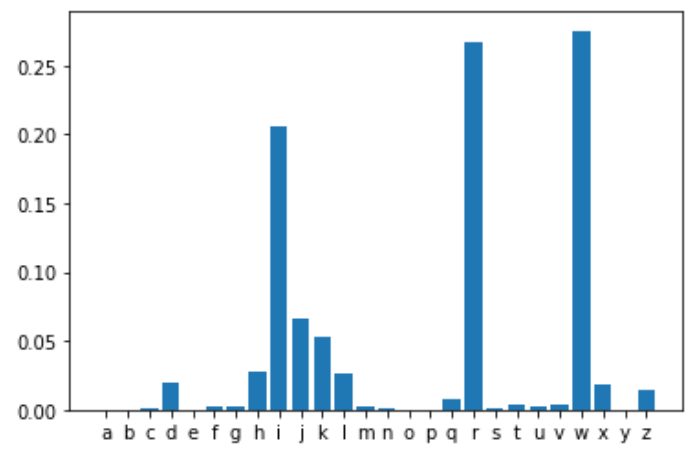

Exmaple: Polarity of each word in IMDB

Plotting the scaled likelihood for some common words in the 1-10 star IMDB reviews look like:

where:

- notice that “good” looks relatively flat across ratings (perhaps due to negations). This means “good” is not a good indicator for positive sentiment/rather neutral polarity.

- words such as “great” and “excellent” would be a better “metric” for the positive rating of the movie/positive polarity.

- words such as “somewhat” and “fairly” are attenuators as they most happen in th middle of the rating; they dampen extremely positive/negative reviews

- the upshot is that even seemingly positive/negative words could happen in all contexts

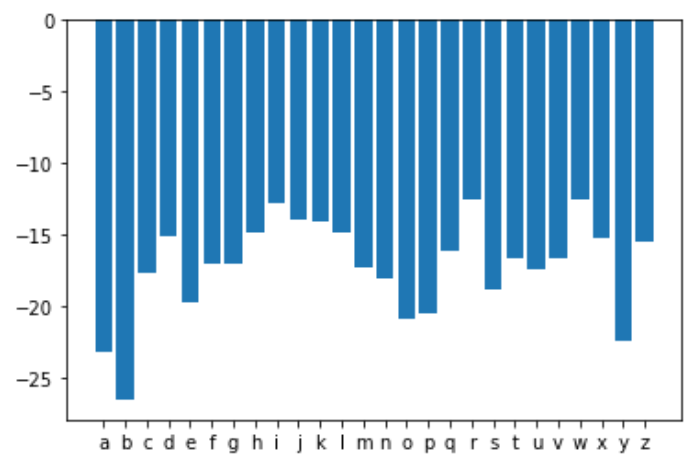

Logical Negation and Polarity

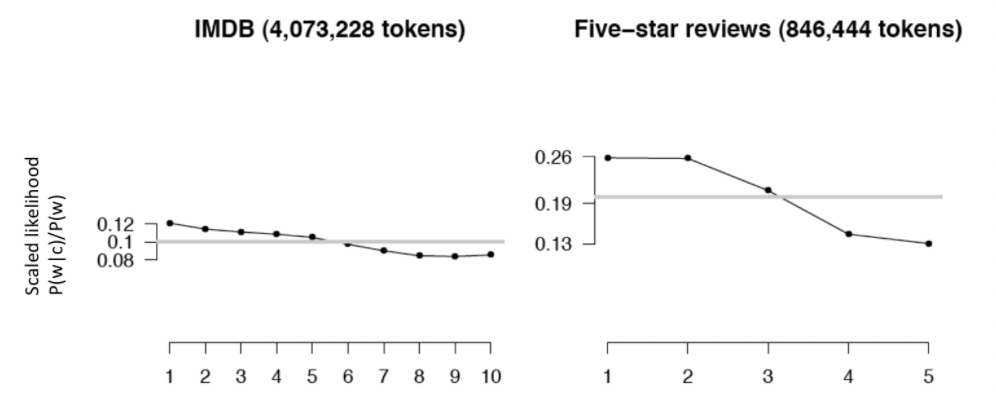

Is logical negation (no, not) associated with negative sentiment? (we know there are problematic cases such as “I don’t hate this movie” is a positive review with double negative.)

In general, there are slightly more negation in negative sentiments. For word such as “no/not”, the plot looks like:

where notice that:

- the scaled likelihood is slightly higher for low ratings for negation word such as “no/not”

- so even if we have double negatives such as “I don’t hate this movie”, this is still a useful feature

Learning Sentiment Lexicons

Here we consider the case that you want to have some sort of word polarity for your domain task, but there is no dataset with lexicons and ratings so that you cannot really know which words are more positive/negative.

- e.g. maybe dealing with a academic domain, where polarity of words would be different from well-established datasets such as movie reviews

Can we use a few hand-built patterns/lexicons to bootstrap and build a larger lexicon list?

- more lexicons learnt means your model could be more robust to test set

- useful for domain transfer

The general idea/a simple rule:

- adjectives conjointed by “and” tend to have same/similar polarity. e.g. “fair and legitimate”

- adjectives conjointed by “but” tend to have different polarity. e.g. fair but brutal

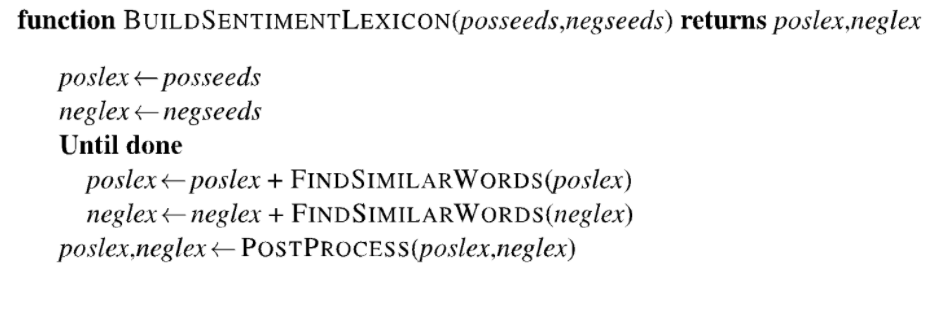

Then, we can consider some kind of program that does:

where seeds are the hand-built small lexicon list you made. Now, the question become:

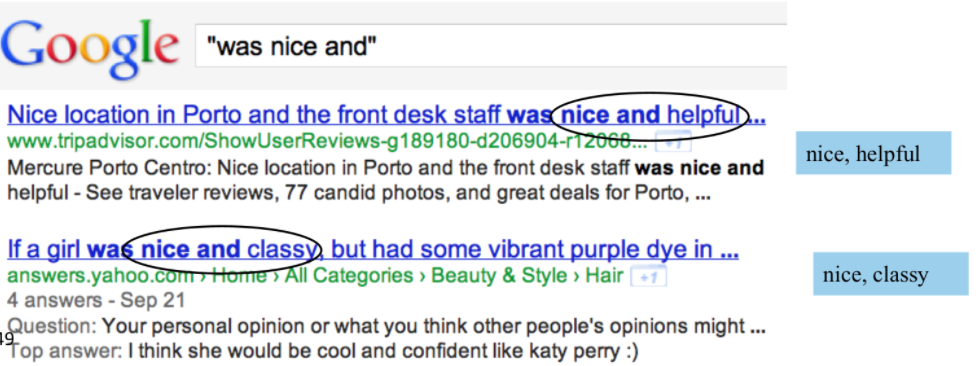

Where do you find similar/different words? Use a database or search engine!

Example:

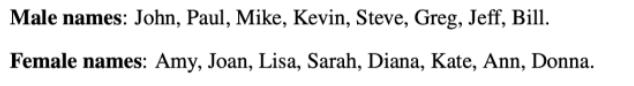

-

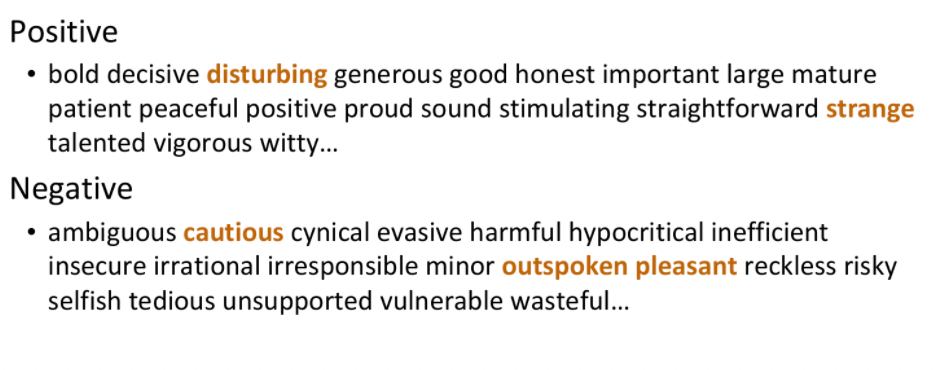

Start with a labelled seed set of words. For instance a labelled seed set of 1336 adjectives such that:

- there are 675 positive ones: adequate, central, clever, etc.

- there are 679 negative ones: contagious, drunken, ignorant, etc

-

Then we can find similar words from a database/a search engine by conjoining with “and”:

-

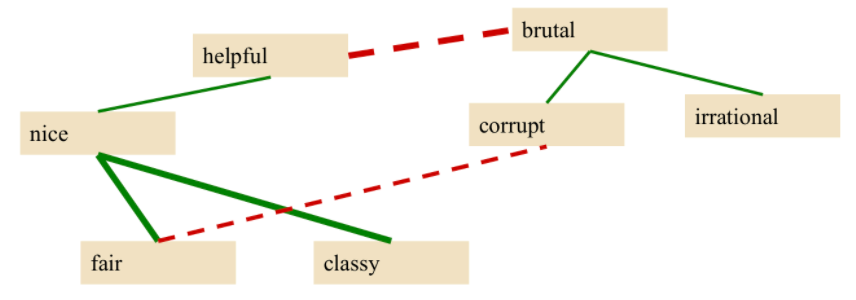

However, words might appear in many pairs. For instance, we could have a “fair and nice”, and but possibly “fair and corrupt” as well.

Therefore, from the seed set, we can train a supervised classifier to assign a polarity similarity score to a given pair of word.

-

Then we can determine the cluster by:

- for any two pair, if unknown polarity similarity, use the trained classifier

- closer polarity words are grouped/clustered together

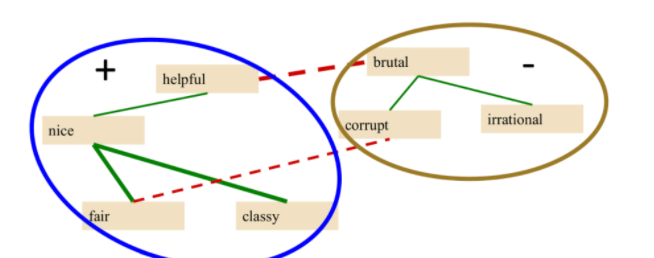

Hence we would arrive at:

-

Output the clusters and words inside it

note that of course we can get errors.

Turney Algorithm

Notice the above is a semi-supervised approach to learn a lexicon list with polarity. Then with those polarity, we could potentially use to classify sentiment of documents containing those words.

However, is there are way to do it in a unsupervised approach?

Unsupervised classificaiton of reviews! Done by:

- Extract a phrasal lexicon from reviews (following some pre-defined rules)

- Learn polarity of each phrase (by co-occurance with words such as “excellent” and “poor” )

- Rate a review by the average polarity of its phrases

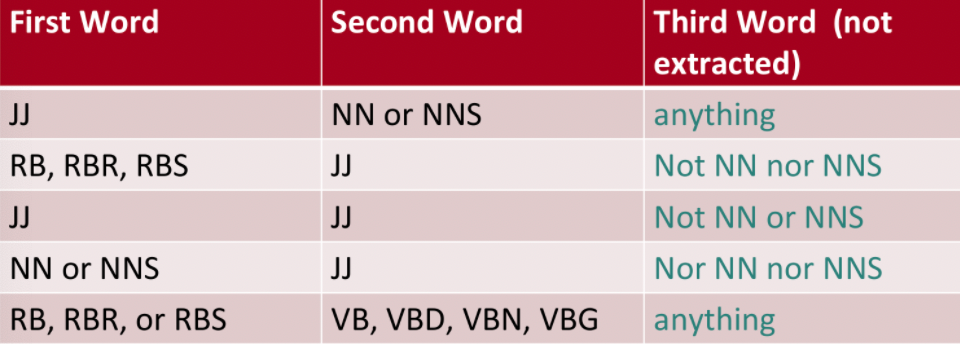

For computation and simplicity, we only extract two-word phrases with adjectives. Then, the algorithm does

-

First we extract the phrases. We extract two-word phrases that satisfy the following POS tags:

note that RB, RBR, RBS are the comparitive/superlative form of adjectives JJ.

-

Then we need to know the polarity of phrase. We hypothesize that:

- Positive phrases co-occur more with “excellent”

- Negative phrases co-occur more with “poor”

- the choice of the two words should at least come from the graphs in section Polarity in Sentiment Analysis

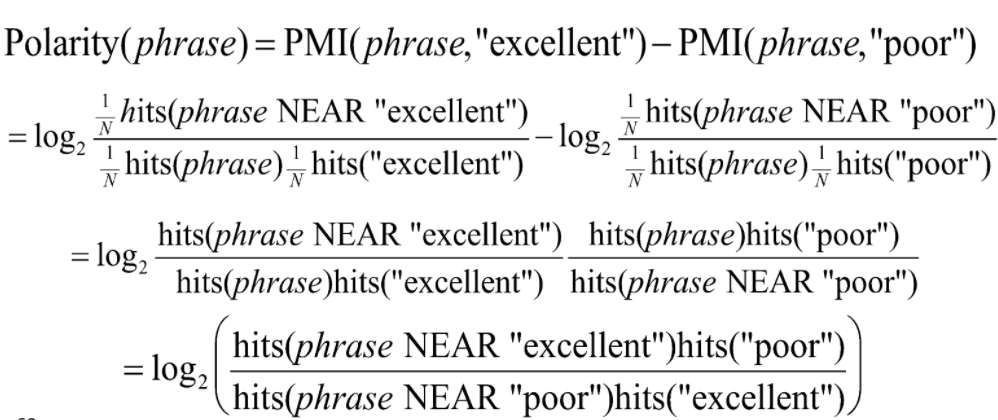

We can measure the co-occurance by PMI, which comes from a co-occurance matrix. Recall that it is a measure of how often two events $x$ and $y$ occur together, compared with what we would expect if they were independent:

\[I(x,y) = \log_2\left(\frac{P(x,y)}{P(x)P(y)}\right)\]Hence, the pointwise mutual information between a target word $w$ and a context word $c$ (for some window size such as 7) is then defined as:

\[\text{PMI}(w,c) = \log_2\left(\frac{P(w,c)}{P(w)P(c)}\right)\]for probabilities can be estimated with word-context matrix, we consider target words $w$ and context $c$. In this case, we don’t really care about context but rather how often $w$ appears with “excellent” and “poor” as:

\[\text{PMI}(w, \text{excellent}) = \log_2\left(\frac{P(w,\text{excellent})}{P(w)P(\text{excellent})}\right)\]and similarly for “poor”. Then:

-

To get those counts and the co-occurance matrix, we will use the search engine:

\[\hat{P}(w) = \frac{\text{hits}(w)}{N}, \quad N=\sum_w \text{hits}(w)\]Then $\hat{P}(w_1, w_2)$ can be esitmated by:

\[\hat{P}(w_1, w_2) = \frac{\text{hits}(w_1\,\, \text{Near}\,\, w_2)}{\sum_{w_1, w_2}\text{hits}(w_1\,\, \text{Near}\,\, w_2)}=\frac{\text{hits}(w_1\,\, \text{Near}\,\, w_2)}{kN}\]for $k$ being the size of the window, i.e. being $k$ words apart. (we often drop $k$ in subsequent calculation as it is a constant)

-

Finally, the PMI is therefore

\[\text{PMI}(w, \text{excellent}) = \log_2\left(\frac{\text{hits}(w_1\,\, \text{Near}\,\, w_2)/N}{\text{hits}(w)\text{hits}(w_2)/N^2}\right)\]

Finally, we define the polarity of a phrase by doing the PMI difference between "*excellent*" or "*poor*":

-

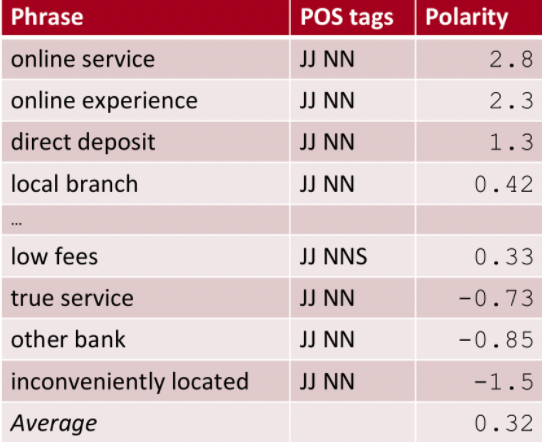

Now for evaluating the polarity of a single review, we simply first compute the polarity for each extracted phrase in the review:

notice that

- “true service” has a low score, i.e. co-occur more often with negative word such as “poor”, hence indicative of bad reviews. The obvious one would be “inconveniently located”

- then the final score for the review will be the average. Here it is $0.32$, which means this review is slighltly positive.

Results from this algorithm on the Epinions dataset:

- 170 (41%) negative

- 240 (59%) positive

- baseline (59%): guessing all positive

- Turney algorithm: 74%

Note that again, this is good given that it is fully unsupervised!

Summary on Learning Lexicon

Both the algorithm covered above share the following pattern:

- start with some seed set of words, e.g. “good”, “excellent”, “poor”

- find other words that have similar polarity from some external dataset/search engine

- using “and” and “but”

- using co-occurance

- add them to lexicon

Other Sentiment Task

Recall that:

Often sentiment analysis is framed as the detection of attitudes. In particular, we want to find out

-

Holder (source) of attitude

-

Target (aspect) of attitude

- Type of attitude

- From a set of types: Like, love, hate, value, desire, etc.

- Or (more commonly) simple weighted polarity: positive, negative, neutral, together with strength

- Text containing this attitude: which sentence/document

In reality:

- Simplest task: Is the attitude of this text positive or negative? This will be our focus in this chapter as a classification task.

- More complex: Rank the attitude of this text from 1 to 5, i.e. include strength

- Advanced: all the 4 subtasks.

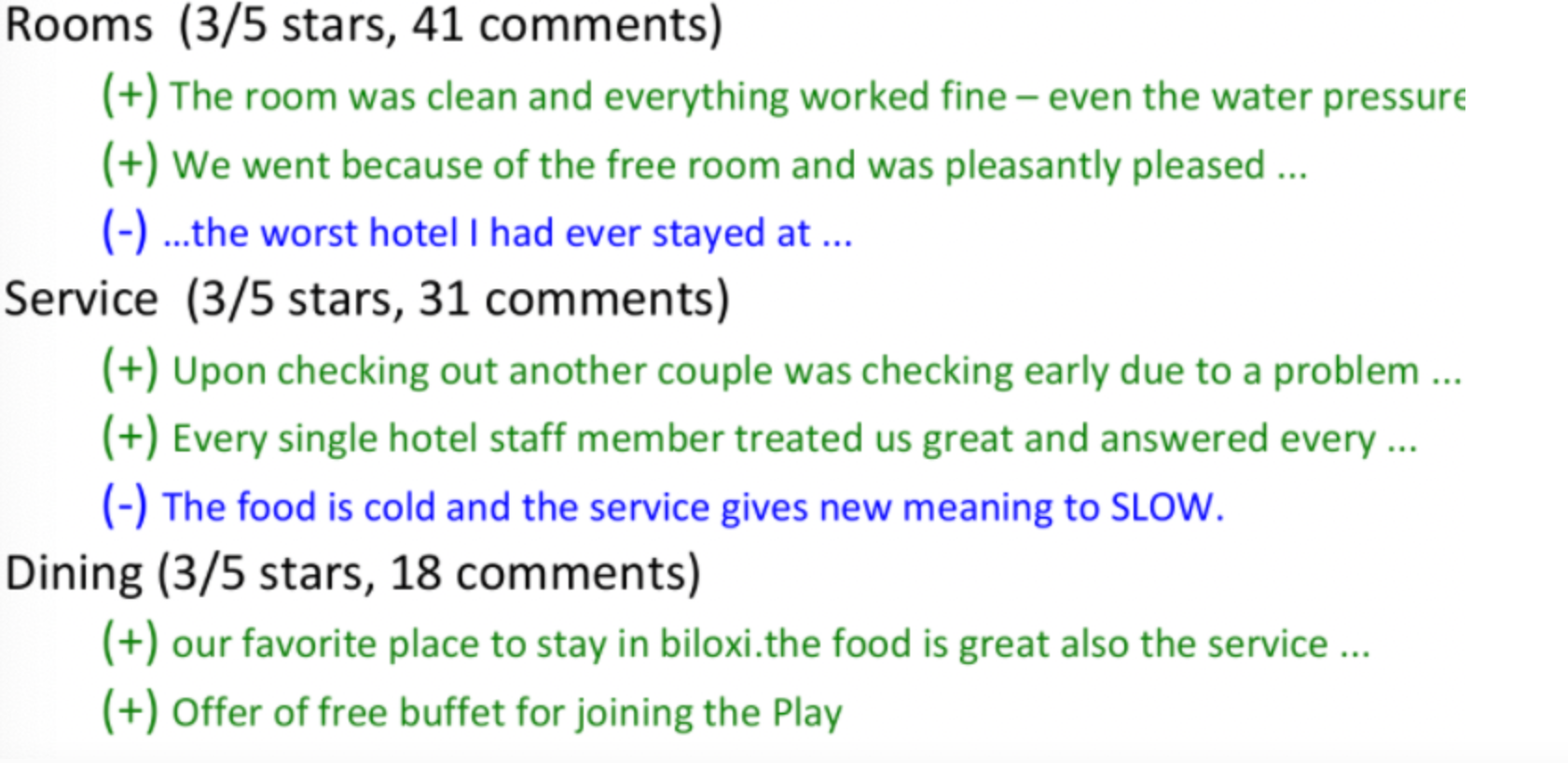

Here we discuss some approaches of identifying the target/aspect of a positive/negative review:

- target: “The food was great but the service was awful”.

- aspect: automatically find out it is talking about “food” and “service”

Finding Target/Aspect of a Sentiment

Some simple approaches for extracting aspects mentioned in some review:

- rule-based: simple

- find all frequenct phrases across reviews, e.g. “fish tacos”

- filter again by some rules such as “an spect should occur after a sentiment word”. e.g. we need “… great fish tacos” to keep “fish tacos” unfiltered.

- ML based: some aspects might not be in the sentence, i.e. its meaning is hidden. e.g. “… the place smells bad” correspond to something like “odor”.

- hand-label a small corpus of reviews sentences with aspect, e.g. whether it is about “food, decor, service”, etc.

- train a classifier on it

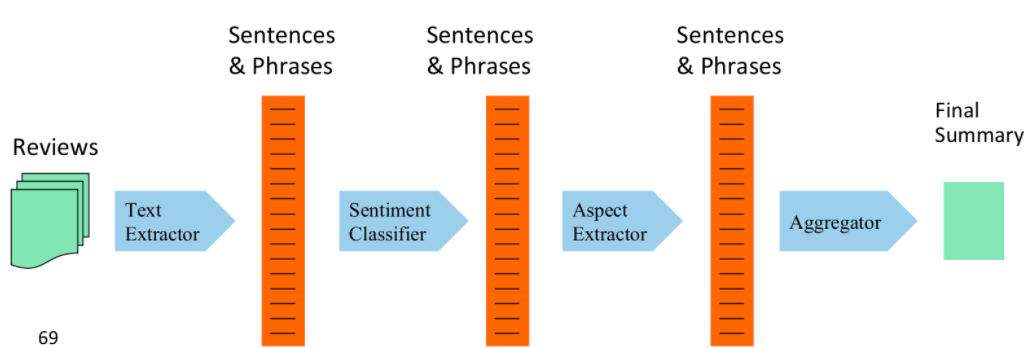

Then, once you have some way to extract aspect from a sentence, consider the following pipeline:

hence the general idea would be:

- first do a sentence level sentiment classification

- from the positive/negative reviews, extract the aspects using the trained classifier

- hence obtain aspects level classifcation, essentially by combing the extracted aspect + whether if they are positive/negative from the sentence level

For Example, results would look like:

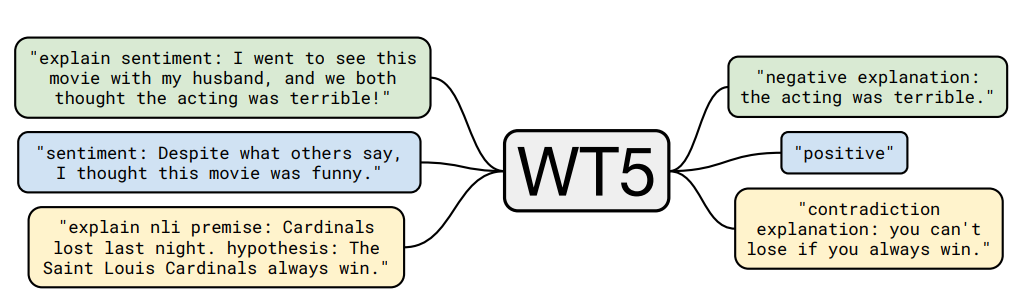

Explaining Sentiment Classification

Why is certain sentence clasified as positive/negative?

Still an active research topic, some current approaches:

-

we just highlight the words associated with positive/negative score

-

WT5, comes form T5. It is a text-to-text model, hene it can directly genrate a text explaining why it is a positive/negative post and classifying it

Summary on Sentiment Classification

Generally modeled as simple classification or regression task

- predict a binary or ordinal label

Some common process/task/problems it involves:

-

how to deal with negation is important

-

Using all words (in naive bayes) works well for some tasks

\[c = \arg\max_{c_j \in C} P(W|c_j)P(c_j) =\arg\max_{c_j \in C} P(c_j)\prod_i P(w_i |c_j)\] -

Finding subsets of words may help in other tasks

-

Hand-built polarity lexicons

-

Use seeds and semi-supervised learning to induce lexicons

-

-

A fully unsupervised approach such as Turney’s Algorithm

Statistical Significance Testing

In building systems we often need to compare the performance of two systems. How can we know if the new system we just built is better than our old one? How certain are we if one model performend better than nother on some test set?

Let us have:

- two models we want to compare, model $A$ being the new one and $B$ being some baseline.

- we are interested in testing on some metric $M$, such as $F_1$ score or accurarcy.

- suppose we have some test set $x$ to evaluate on

Obviously we could measure:

\[\delta(x) = M(A,x) - M(B-x) \equiv \text{Effect Size}\]for $M(A,x)$ is the performance of model $A$ on test set $x$ using metric $M$. Of course the larger the effect size the better, but another question we usually want to ask is:

if $A$’s superiority over $B$ is likely to hold again if we checked another test set $x’$

In the paradigm of statistical hypothesis testing, we test this by formalizing two hypotheses. Suppose you found $\delta(x)=0.2$, i.e. we have a $0.2$ higher e.g. accurarcy:

\[H_0: \text{what you observed is just a random effect}\\ H_1: \text{it is not random. Our model is better}\]We want to show that $H_0$, the null hypothesis, has a low probability of happening, so that what we observed is not random/by chance:

\[\text{p-value}(x) \equiv P(\delta(X) \ge \delta(x) | H_0 \text{ is true})\]i.e. we try on some random test set $X=x$ (with mean $0$) to get $\delta(x)$ again and again. If it is not by chance, then $\text{p-value}(x)$ should be small.

- e.g. consider playing Poker, I claim that I am a better player, so the null hypothesis is that I win by luck.

- then, suppose $\delta(x)$ is I win 10 “random” games in a roll. We know that $P(\delta(X) \ge \delta(x) \vert \text{I win by luck})$ is small. Hence I can reject the null hypothesis and conclude that I am actually a better player, if $\delta(x)$ happened.

Therefore, p-value gives the probability of observing a test statistic as extreme as the one observed $\delta(x)$, if the null hypothesis is true.

Hence, if the p-value is small, the observed test statistic is very unlikely under the null hypothesis

- the expected value of $\delta(X)$ over many test sets, if assuming $A$ is not better than $B$ is $\mathbb{E}_X \delta(X) = 0$ for $X$ comes from a distribution with zero mean

- how small should the p-value be? Often threshold such as $0.05,0.01$ is fine.

How do we compute the probability needed?

- In NLP we generally don’t use parametric tests such as t-tests or ANOVAs as they make assumptions on the distributions of the test statistic (such as normality) that don’t generally hold in our cases So in NLP we usually use non-parametric tests based on sampling.

Either:

- if we had lots of different random test sets $x^\prime$ with mean $0$ we could just measure all the $\delta(x^\prime)$ for all the $x^\prime$. This gives a distribution, from which we can compute probability of at least $\delta(x)$ happening if it is a random effect

- use a bootstrap test by repeatedly drawing large numbers of smaller samples with replacement, under the assumption that the sample is representative of the population.

The Paired Bootstrap Test

The bootstrap test (Efron and Tibshirani, 1993) can apply to any metric; from precision, recall, or F1 to the BLEU metric used in machine translation.

The word bootstrapping refers to repeatedly drawing large numbers of smaller samples with replacement (called bootstrap samples) from an original larger sample.

- in fact, the idea is to virtually create random test sets $X$ by sampling with replacement from a fixed test set $x$.

- since this means we have $X$ with mean of $\delta(x)$, we would have to change the formula a bit

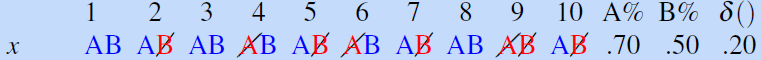

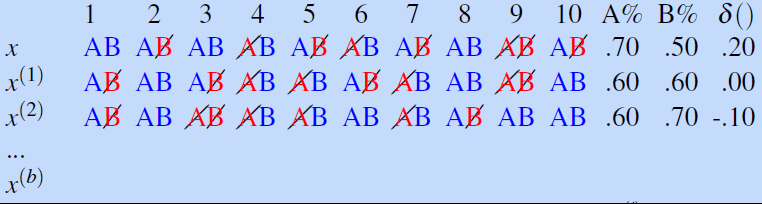

Consider a tiny text classification example with a test set $x$ of $10$ documents. And suppose we have $M$ being accuracy and we have two models $A$, and $B$:

where a slash means the model got it wrong. For this test set $x$, the effect size is $\delta(x)=0.2$.

We need to create a large distribution of test sets $X$. We can do this by:

- pick a large number $b$, e.g. $b=10^5$ being the number of tests $x^{(i)}$ we want to create

- each test $x^{(i)}$ will have $n=10$ same as $x$. Hence we repeatedly sample $n$ times from $x$ with replacement.

- do until we created $x^{(b)}$

Now that we have the $b$ random test sets, providing a sampling distribution, we can compute how likely $A$ made $\delta(x)$ by pure chance. We might naively consider:

\[\text{p-value}(x) = \frac{1}{b} \sum_{i=1}^b \mathbb{1}\left( \delta(x^{(i)}) - \delta(x) \ge 0 \right)\]so that if p-value is low, then $\delta(x)$ is not by pure chance. However, this would be wrong as

\[\text{p-value}(x) \equiv P(\delta(X) \ge \delta(x) | H_0 \text{ is true})\]assumes the $X$ would yield a mean of $\delta(X)=0$. Here we would yield a $\delta(X=x)=0.2$ since it all came from the test set $x$.

Therefore, the correct one would be

\[\begin{align*} \text{p-value}(x) &= \frac{1}{b} \sum_{i=1}^b \mathbb{1}\left( \delta(x^{(i)}) - \delta(x) \ge \delta(x) \right)\\ &= \frac{1}{b} \sum_{i=1}^b \mathbb{1}\left( \delta(x^{(i)}) \ge 2\delta(x) \right) \end{align*}\]So that if we have $10^5$ tests and for $47$ of them it happened that $\delta(x^{(i)}) \ge 2\delta(x)$, then it means p-value is $.0047$, i.e. it is very rare by chance. If we take a threshold of $0.01$ being significant, then we can say that this result $\delta(x)$ is statistically significant.

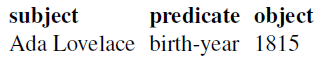

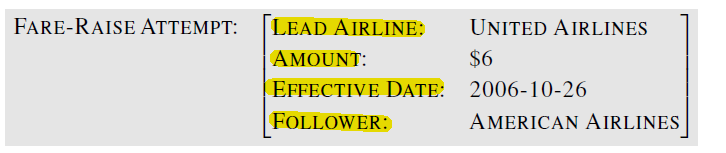

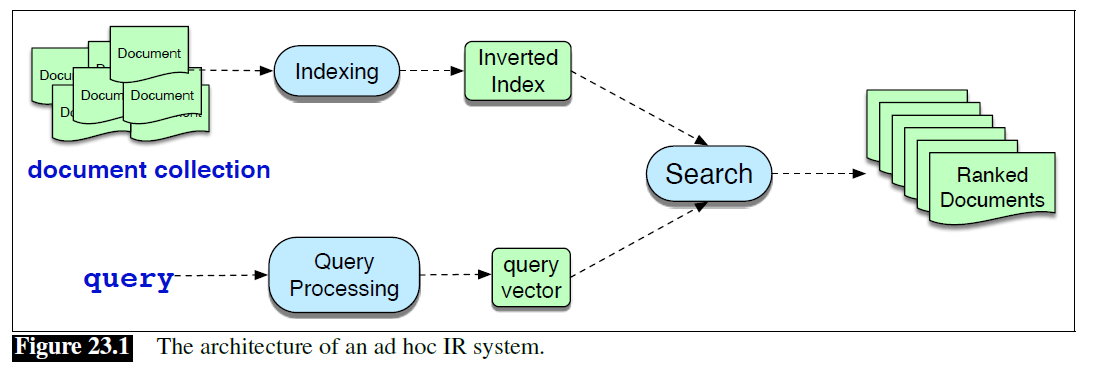

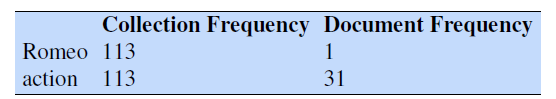

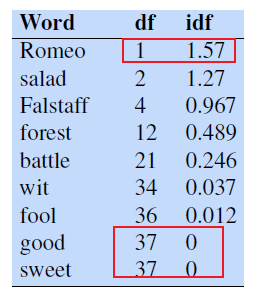

Information Extraction and NER

structed textual document: tables

Transform unstructured information in a corpus of documents or web pages into a structured data, e.g. populating a relational database, to enable further processing.

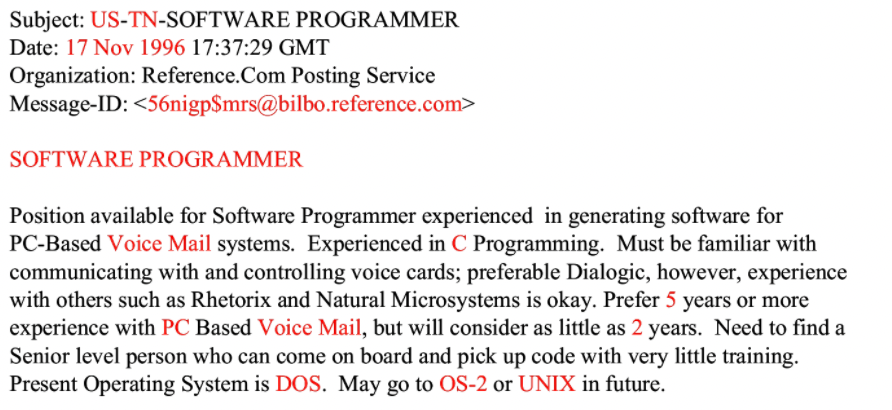

Consider receiving an email from job posting:

| Raw Data/Email | Extracted Data |

|---|---|

|

|

The task would be to extract information from some pre-defined attributes we want to extract.

- i.e. givne the input, fill out the table (on the right) as much as we can

- notice that each extracted info is an entity

The general pipeline you want to consider is:

- named entity recognition

- relation extraction

- template filling

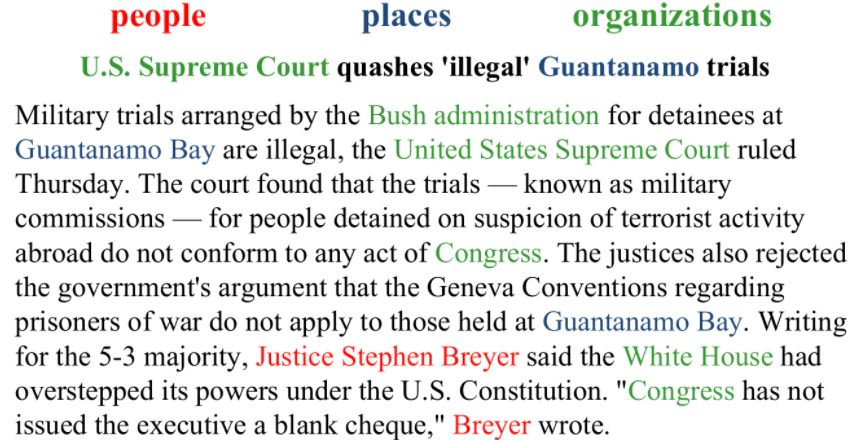

Named Entity Recognition

Specific type of information extraction in which the goal is to extract formal names of particular types of entities such as people, places, organizations, etc.

Usually this is used as a preprocessing step for some future tasks, such as the template filling task we had, or question answering. Notice that this is a task in between sequence level and token level task - it is a span-oriented application.

Formally, given an input $x$ with $T$ tokens, $x_1,…,x_T$, a span is a continuous sequence of tokens with start $i$ and end $j$ such that $1 \le i \le j \le T$. Then in total for all possible span length we could have:

\[\frac{T(T-1)}{2}\]total possible spans. Most application literally iterate through all possible spans. Hence they often have some application-specific length limit $L$ such that $j-i < L$ is required/legal span. We refer tot the set of legal span in $x$ as $S(x)$.

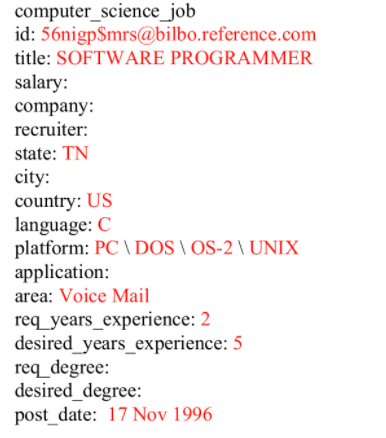

An example would be

| Input | Output Desired |

|---|---|

|

|

where note that we only find NER for the three entities we care here. Hence entities such as “Geneva Conventions” SHOULD NOT be highlighted. Hence this task is generally not easy.

In general, today we have two approaches:

- train a BIO tagger. This will essentially be a Seq-labelling model

- train an end-to-end model using GPT-3. This will be a Span-based Model

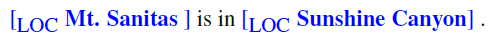

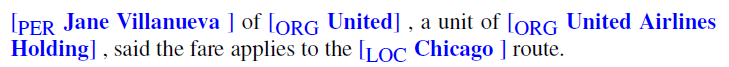

BIO-based NER

Some idea for training BIO-based model would be a Seq-labelling model

| Training Data | BIO version of Training Data |

|---|---|

|

|

Hence this is essentially a Seq-2-Seq decoding. A fuller example would be:

TODO: CRF on page 178-Chapter 8

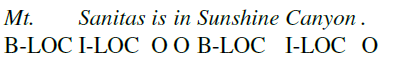

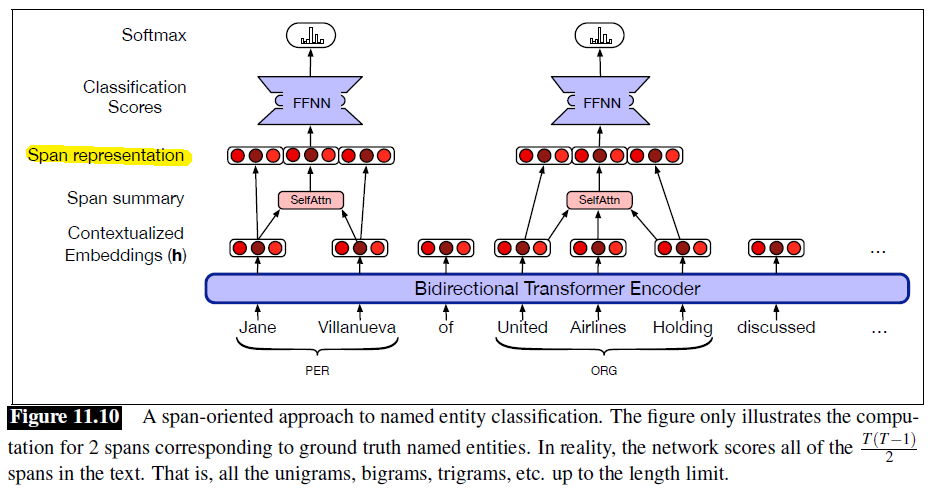

Span-based NER

Some idea for training an end-to-end NN based model would be a span-oriented application.

-

generate representations for all the spans in the input. This often contains

- representations of the span boundaries, $h_i,h_j$ being the embeddings

- representation of the content in the span, $f(h_{i+1:j-1}) \to g_{ij}$

- an example of such as function could be $f(h_{i+1:j-1}) = \bar{h}_{i+1:j-1}$ being the average

- combine the two representation, $\text{SpanRep}{ij} = [h_1;h_j;g{ij}]$

this can be done using contextualized input embeddings from the model

-

then, we have a span representation $g_{ij}$ for each span in $S(x)$. Then this is a classification problem where each span in an input is assigned a class label $y$:

\[y_{ij} = \text{softmax}(\text{FFNN}(\text{SpanRep}_{ij})) \in \mathbb{R^{|Y|}}\]since most spans will not have a label, we add $y = \text{null} \cup y$ to the set of labels, and hence $\vert Y\vert =\vert y\vert +1$.

-

then for decoding, take $\arg\max y_{ij}$ to get the tag.

Note that some post-processing steps will need to be done to prevent overlapping classifications, as this scores all $T(T-1)/2$ spans. However, it does have a benefit as it naturally accommodate embedded named entities:

- e.g. both “United Airlines” and “United Airlines Holding” would be evaluated

- a BIO based tagging approach would have not looked at this.

A detailed example would be:

-

instead of taking average, we consider using the embeddings as

\[g_{ij} = \text{SelfAttn}(h_{i:j})\]so that the representation would be centered around the head of the phrase corresponding to the span. Then combining to get:

\[\text{SpanRep}_{ij} = [h_1;h_j;g_{ij}]\] -

finally doing the same:

\[y_{ij} = \text{softmax}(\text{FFNN}(\text{SpanRep}_{ij}))\]

Hence graphically

where:

- cross-entropy loss would be used

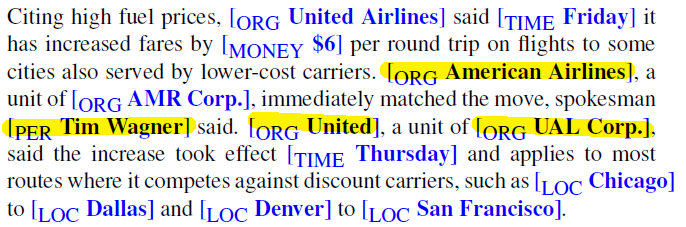

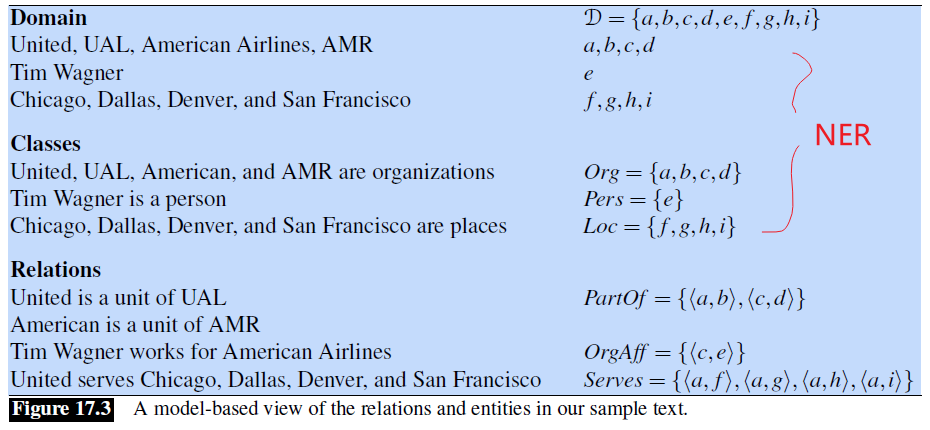

Relation Extraction

Now we have detected named entities, our next step is to discern relationships between those entities. For instance:

where form the highlighted part, we know that:

- “Tim Wagner” is a spokesman for “American Airlines”

- “United” is a unit of “UAL Corp”

- etc.

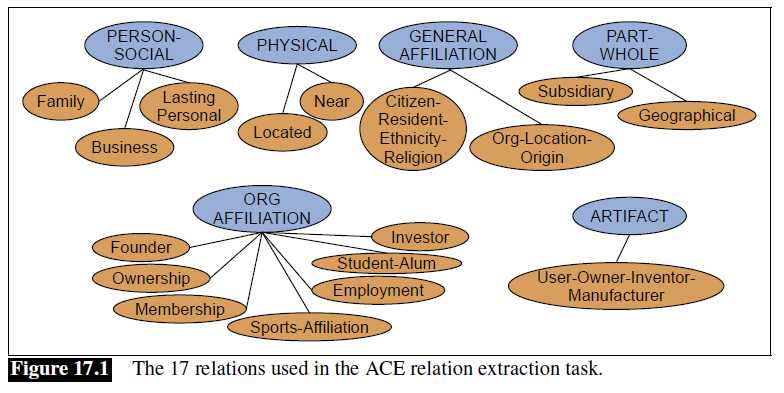

All of those are simple binary relationships which fall under some generic categorization such as:

-

basic 3 relations: employed-by; located-at; part-of

-

a more complicated 17 relations used in ACE relation extraction

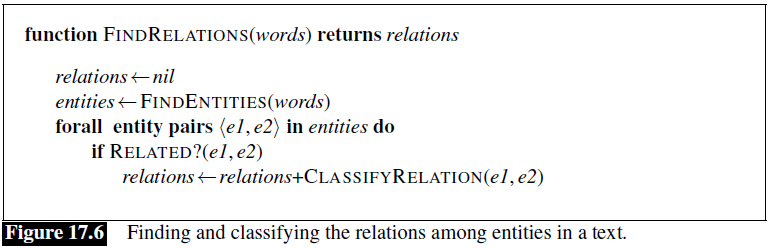

Hence, a graphical representation of what are doing now is:

where:

- the first two steps can be done in a NER

- the relation can be seen as consisting of a set of ordered tuples over elements of a domain (e.g. named entities)

Relation Extraction Algorithms

Now, we answer the question: how do we find those relations? In general, there are four main ways to do it

- handwritten patterns

- supervised machine learning

- semi-supervised (via bootstrapping or distant supervision)

- unsupervised

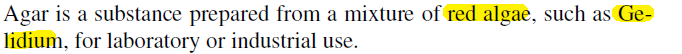

Using Patterns to Extract Relations

Consider the example of:

where we notice that “Gelidium” is a hyponym of “red algae”, which can be identified using the following pattern

\[NP_0 \text{ such as } NP_1 \{,NP_2,...,(\text{and,or})NP_i\}\]implies the relation

\[\forall NP_i,i\ge 1, \text{hyponym}(NP_i, NP_0)\]which inspires pattern such as

| Pattern | Example |

|---|---|

|

|

but of course, with hand-crafted rules we have:

- advantage of high precision as they are tailored to specific domains

- low recall, missing a lot

- not scalable

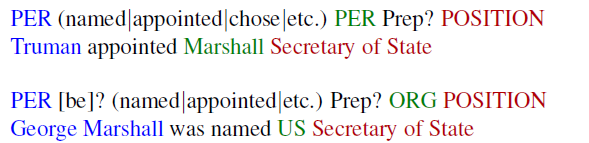

Relation Extraction via Supervised Learning

Consider now having the

- input of: a fixed set of entities

- label of: a fixed set of pre-defined relations

Then approaches to make it a classification problem could be: for each possible pair, apply a multiclass classification for each class being the relation

where the feature/embeddings of the named entity $e_1,e_2$ could be:

- word level embeddings of the named entity $e_i$

- head word embedding of the named entity

- encoding the named entity’s type, e.g. if it is

ORG, orPER - number of entities in this sentence

- encoding some syntactic structure of the sentence

- etc

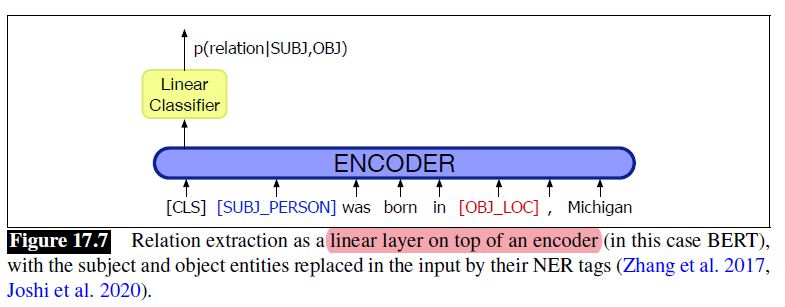

then the architecture of the classifier could be

where notice that

-

essentially using a transformer, hence using context embeddings for the content

-

we also included the entire sentence to give context for the two named entities in this case

of course, this can be optimized as we can skip some pairs for certain relation as it won’t happen at at all

But labeling a large training set is extremely expensive and supervised models are brittle: they don’t generalize well to different text genres.

For this reason, much research in relation extraction has focused on the semi-supervised and unsupervised approaches we turn to next.

- other problem include: what if the relation set is not fixed? Then of course supervised version would not work.

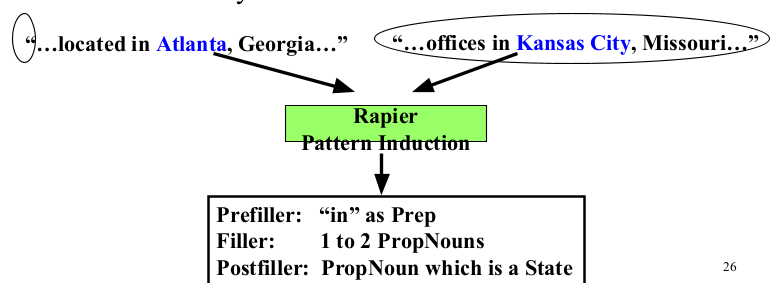

Semi-supervised Relation Extraction

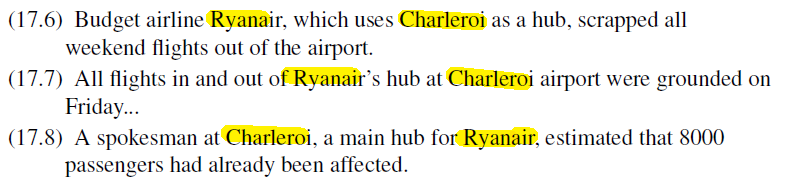

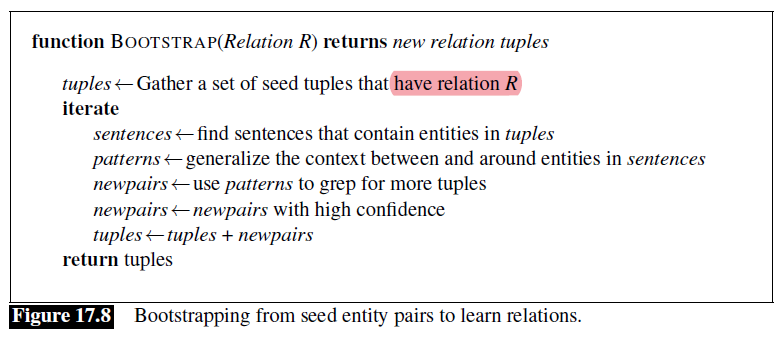

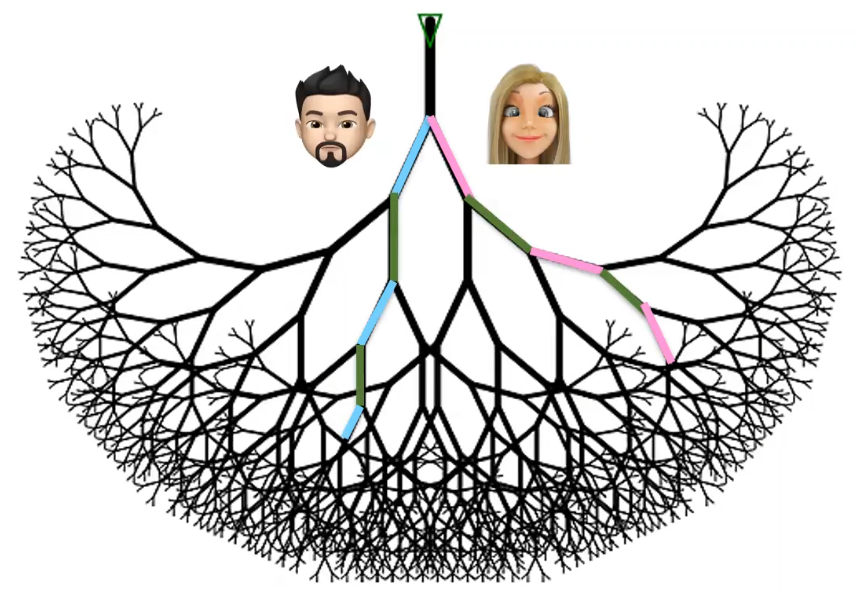

One idea is to bootstrap more relation-labeled entity pairs from some known small sample labelled pair. For instance, suppose you want to get a relation being airline/hub pair, and you already have

being a known pair, you can:

-

finding other mentions of this relation in our corpus

-

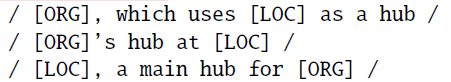

use features such as context to extract general patterns such as the following

which signifies a “rule” for

airline/hubpair -

use these new patterns can then be used to search for additional tuples.

-

assign confidence values to new tuples, and add to the dataset if high confidence

- this is to avoid semantic drift. In semantic drift, an erroneous pattern leads to the introduction of erroneous tuples, which, in turn, lead to the creation of problematic patterns and the meaning of the extracted relations ‘drifts’.

Hence, given some seed samples that have relation $R$, we can populate this set by

Once done, apply supervised classification as mentioned above.

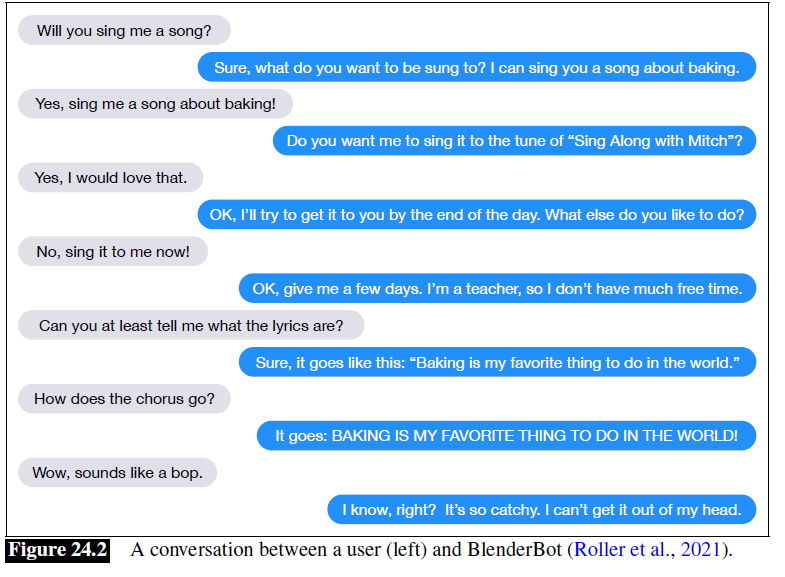

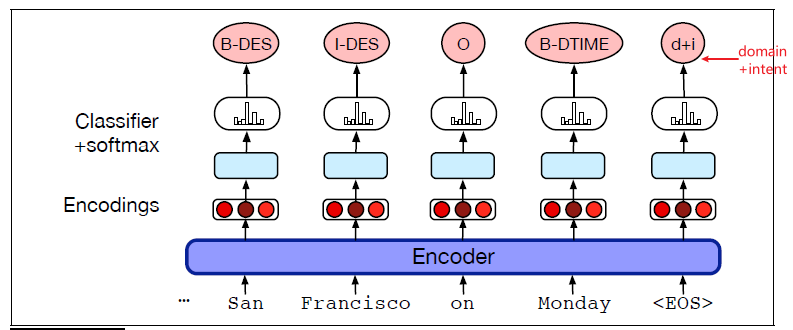

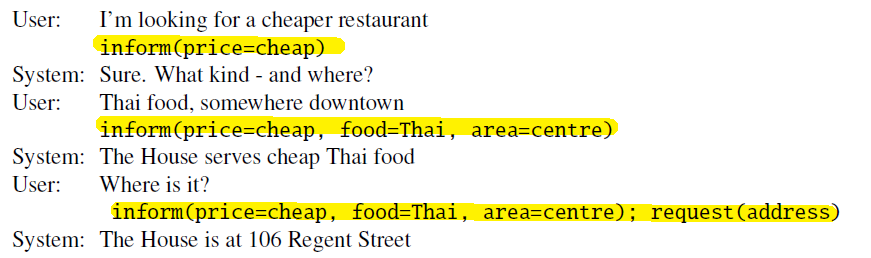

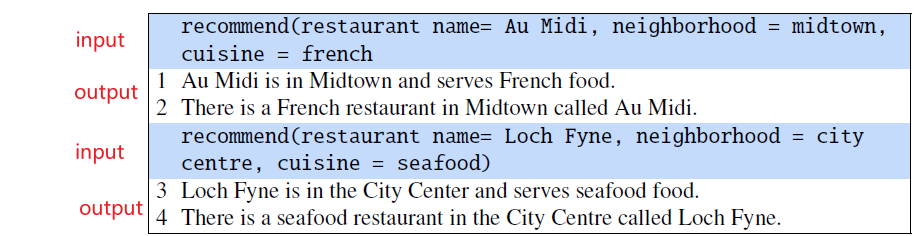

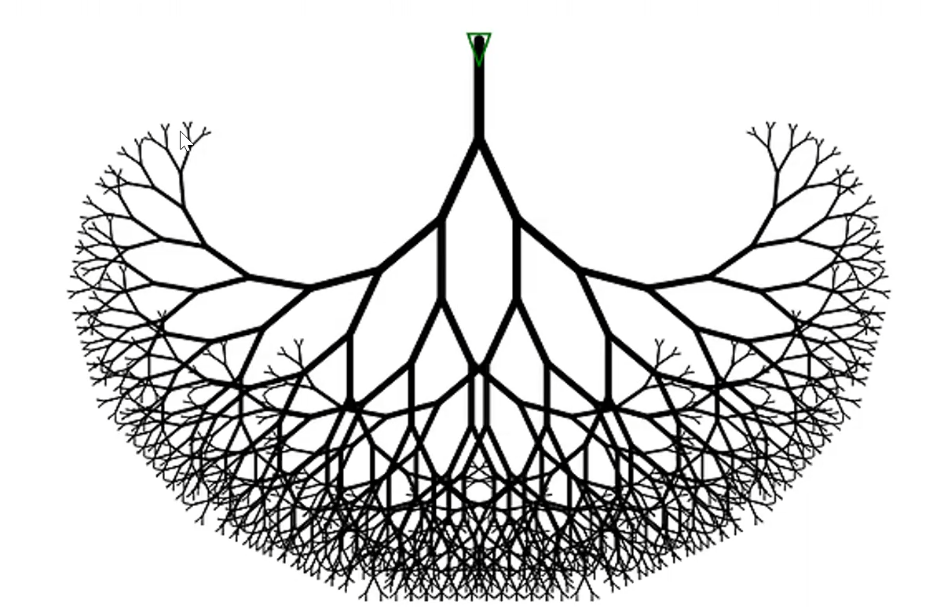

Unsupervised Relation Extraction