COMS4732 Computer Vision II

- Introduction

- Convolution

- Fourier Transform

- Machine Learning

- Object Recognition

- Video Recognition

- Object Tracking

- Interpretability

- Self-Supervised Learning

- Synthesis

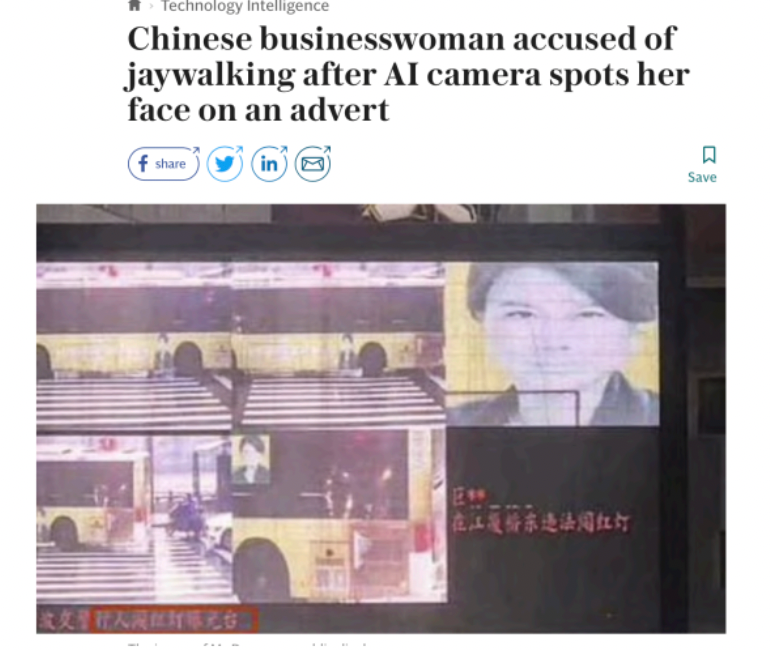

- Ethics and Bias

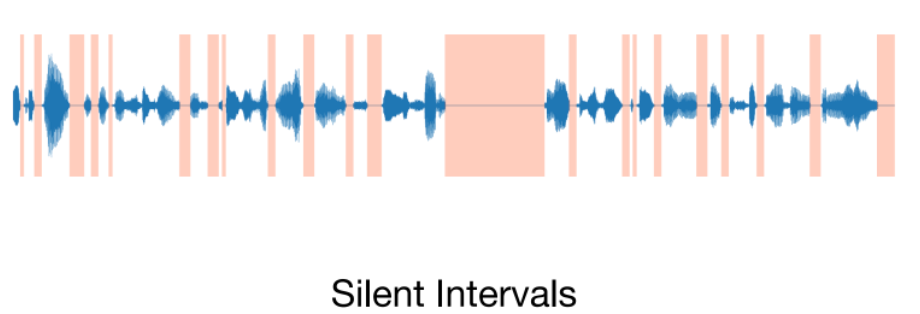

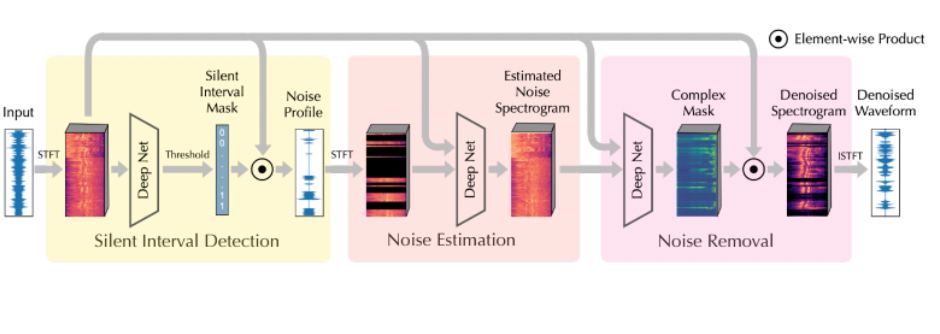

- Vision and Sound

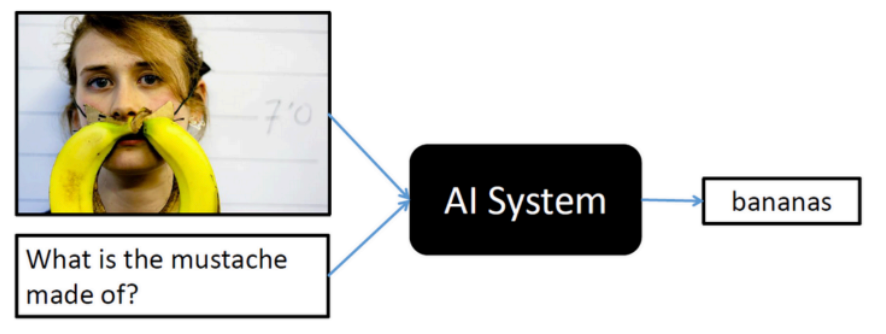

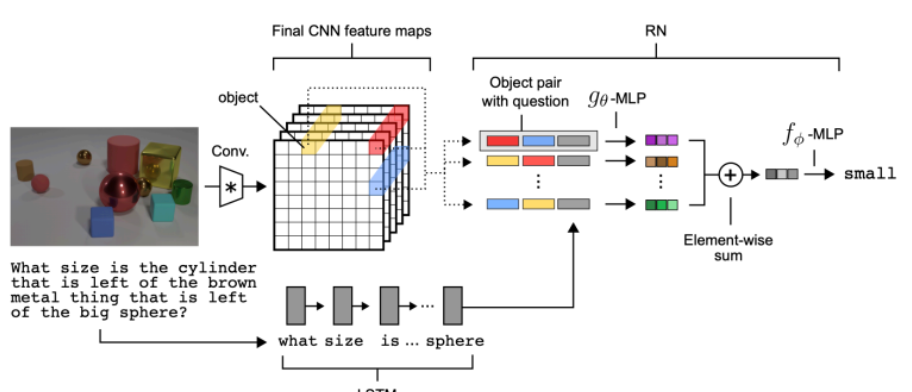

- Vision and Language

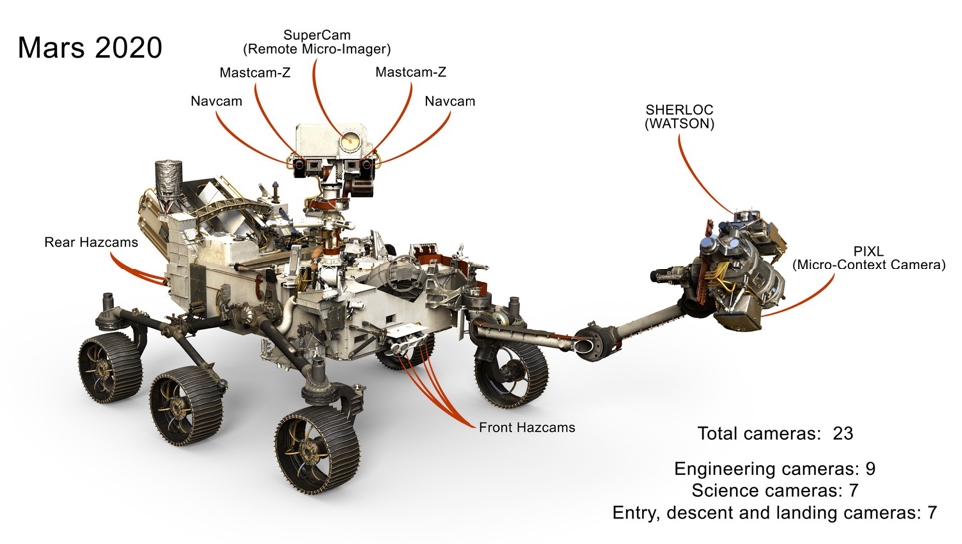

- 3D Vision

- Final Exam

Introduction

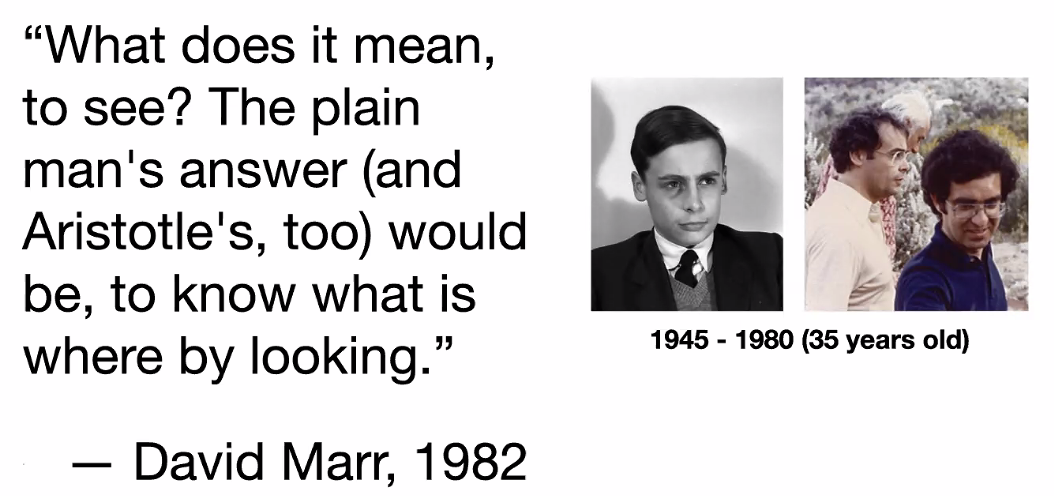

What is vision?

Applications

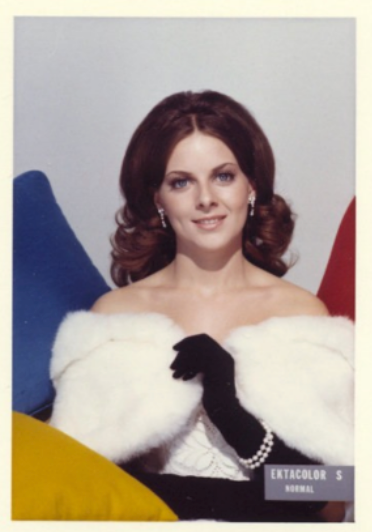

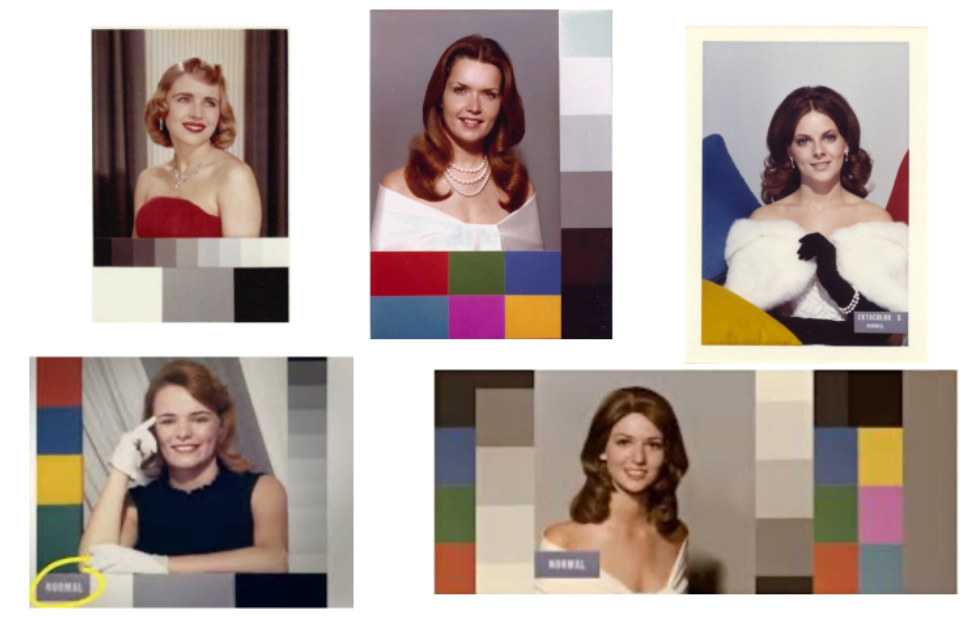

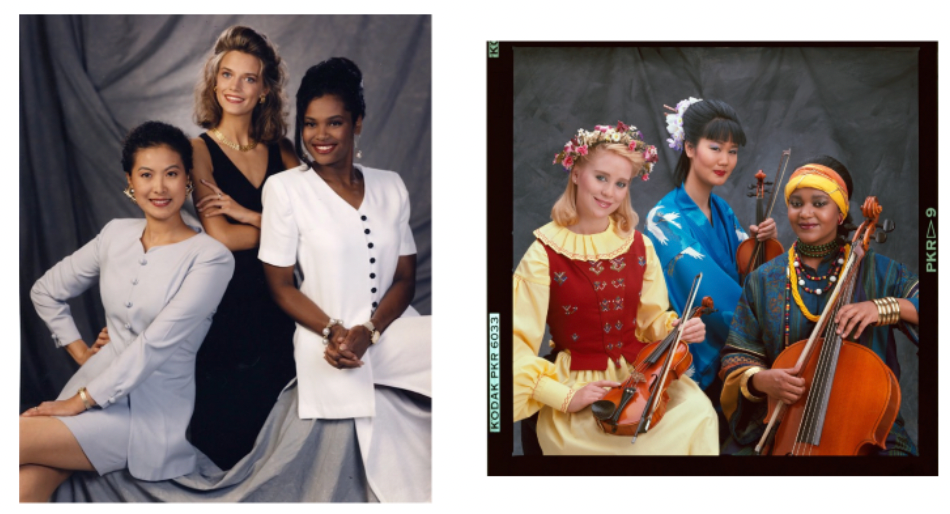

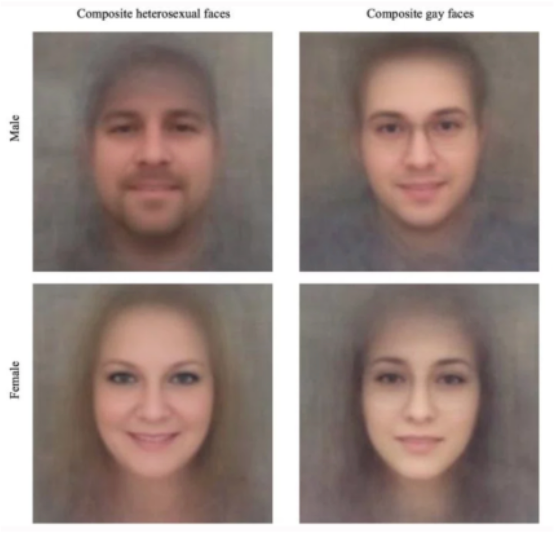

One very important application is Biometrics

- how FaceID works!

Another would be Optical Character Recognition

Gaming with VR: recognize your body poses:

- recognize fine details about your movements

Recently there has been application in shopping

-

as a customer, you can grab whatever you want, and you will be charged by Amazon

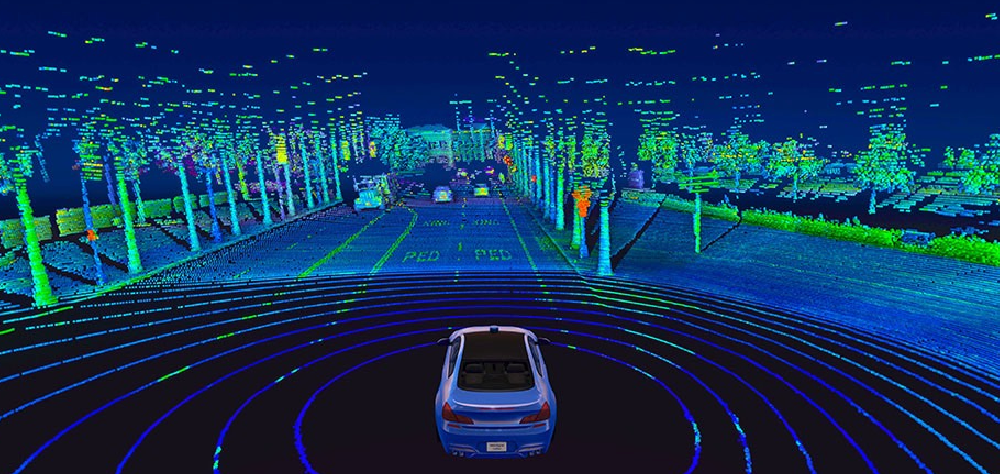

Last but not least, self-driving cars

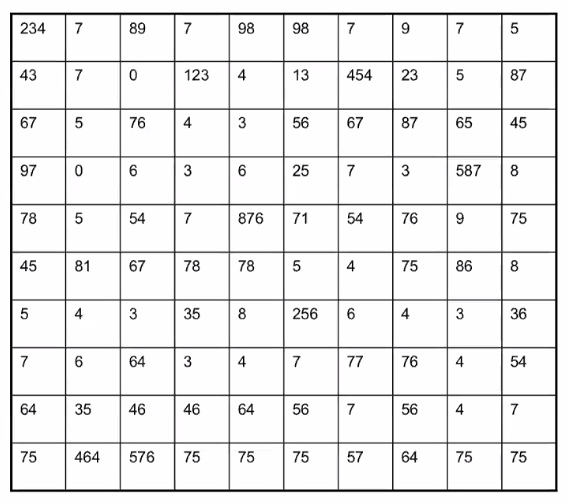

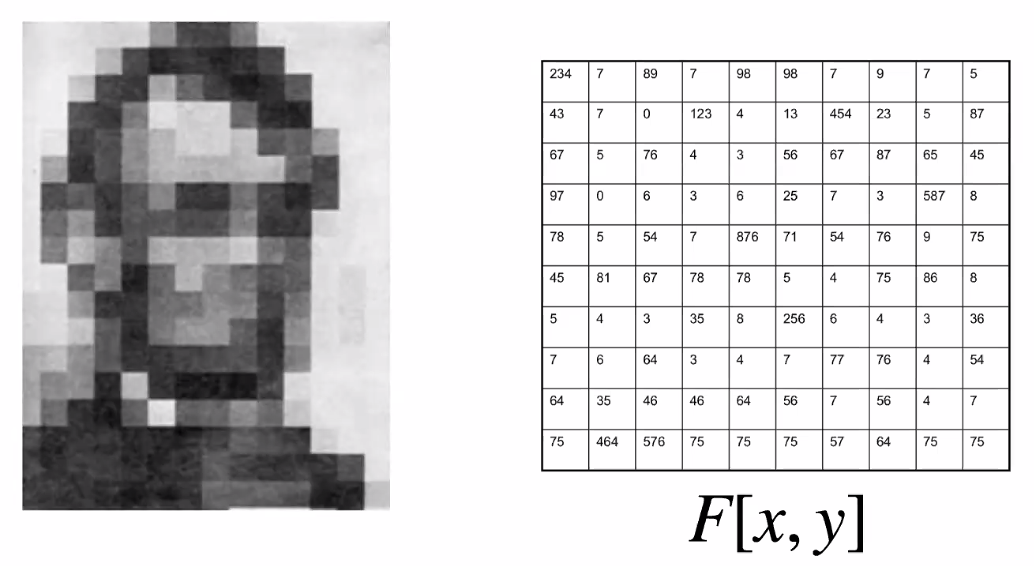

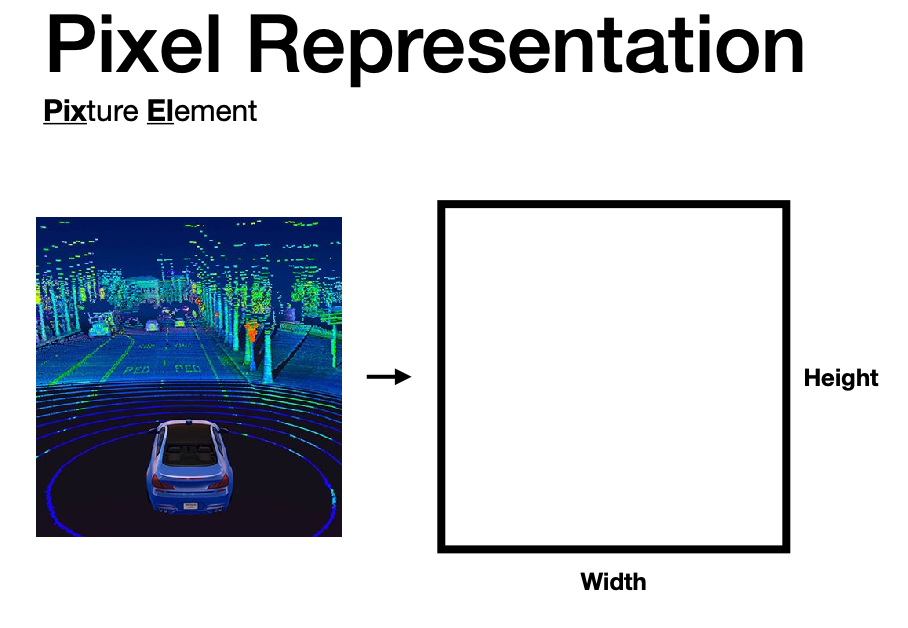

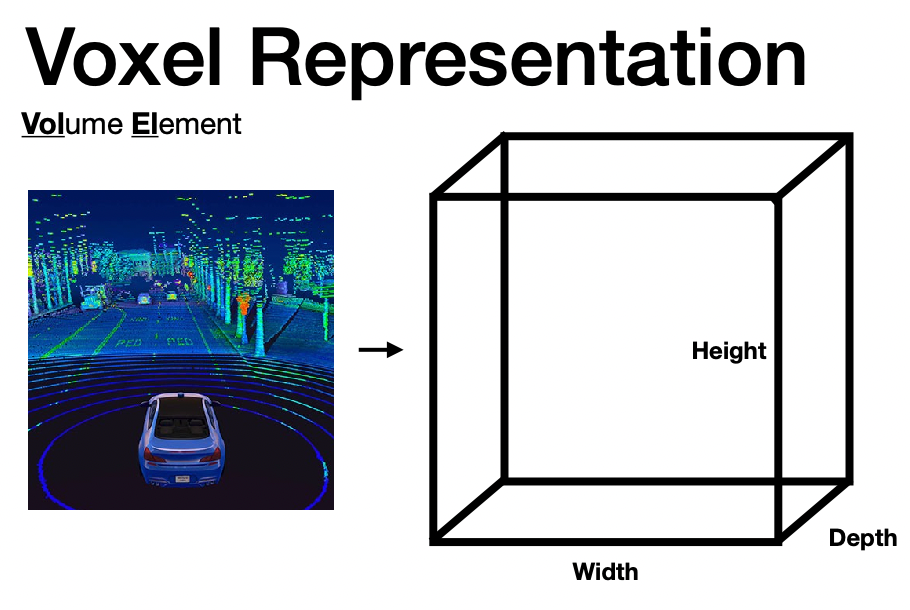

Perceiving Images

Basically the input of an image would be

| What We See | What Computer Sees |

|---|---|

|

|

which hints at the why computer vision is difficult.

-

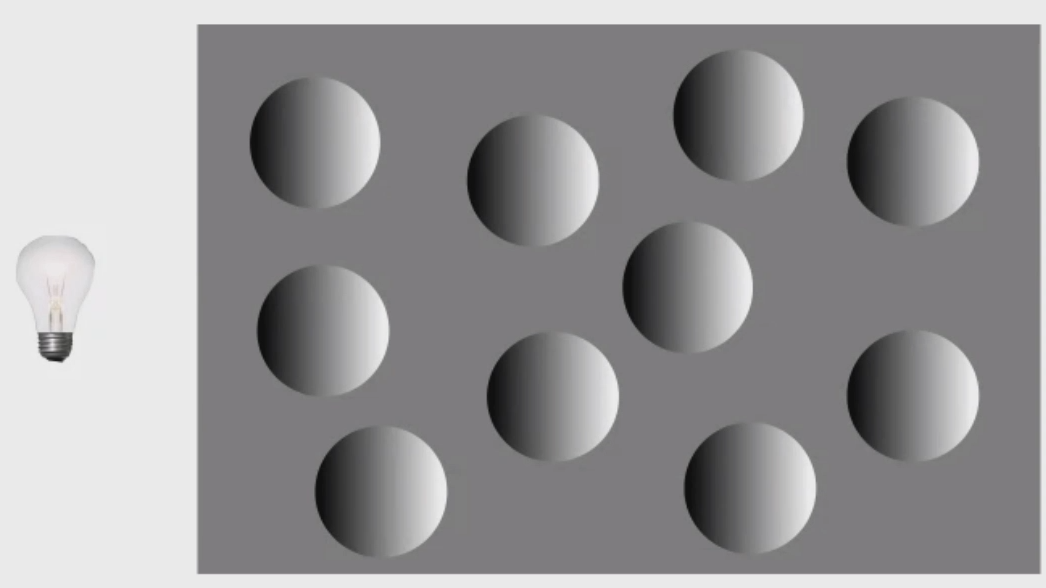

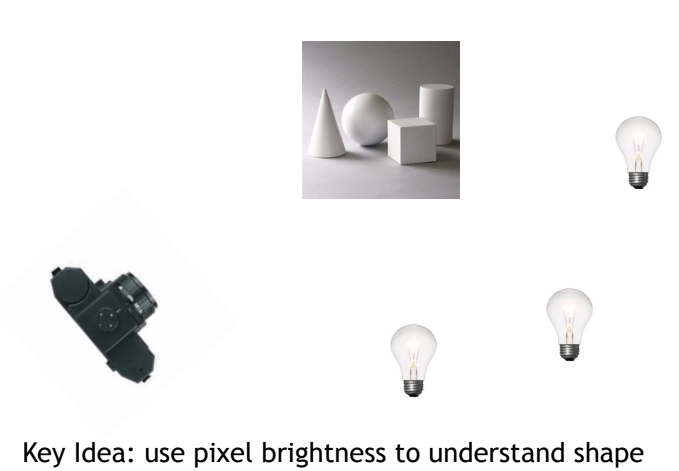

other factors that could make it more complicated is the lighting, which can change the picture

-

object occlusion, an object will be partially blocked

-

class variation: objects can have various shapes. What is a chair?

-

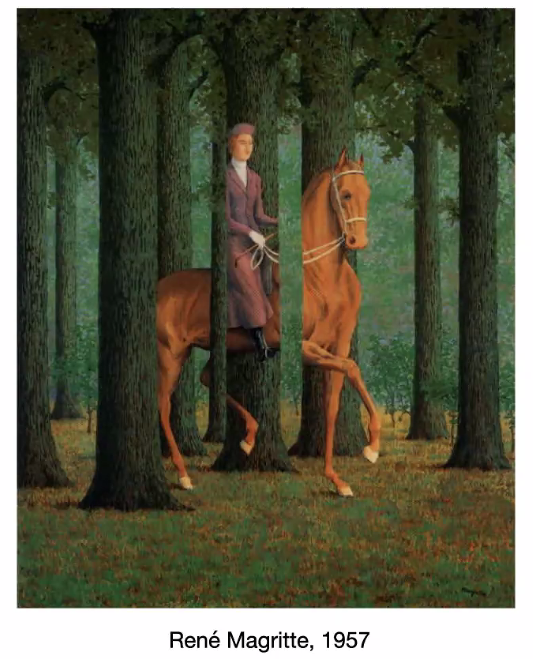

clutter and camouflage: we are able to see through camouflage

so that we can see there is an owl, but computer vision systems would struggle here.

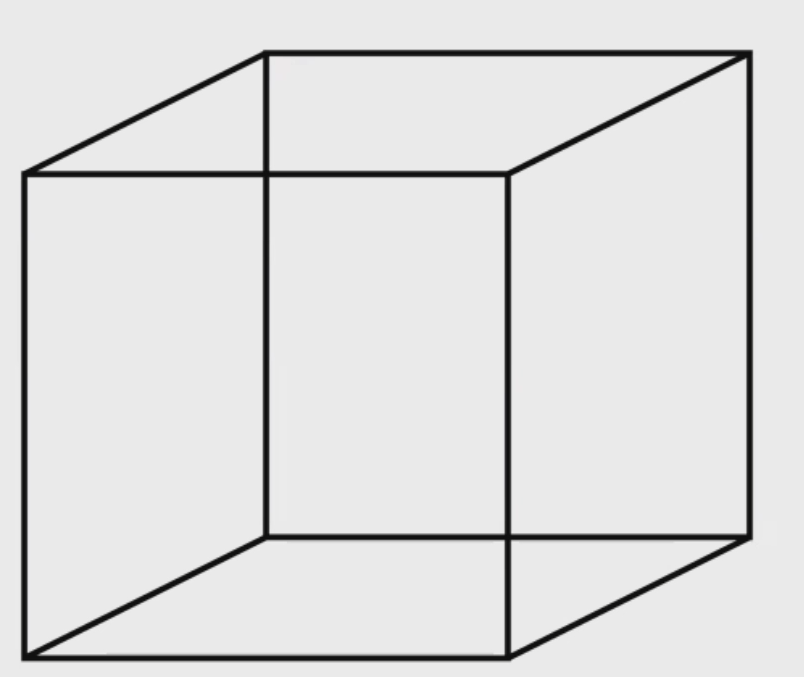

In general, there is often no correct answer for computer vision!

Evolution of Vision

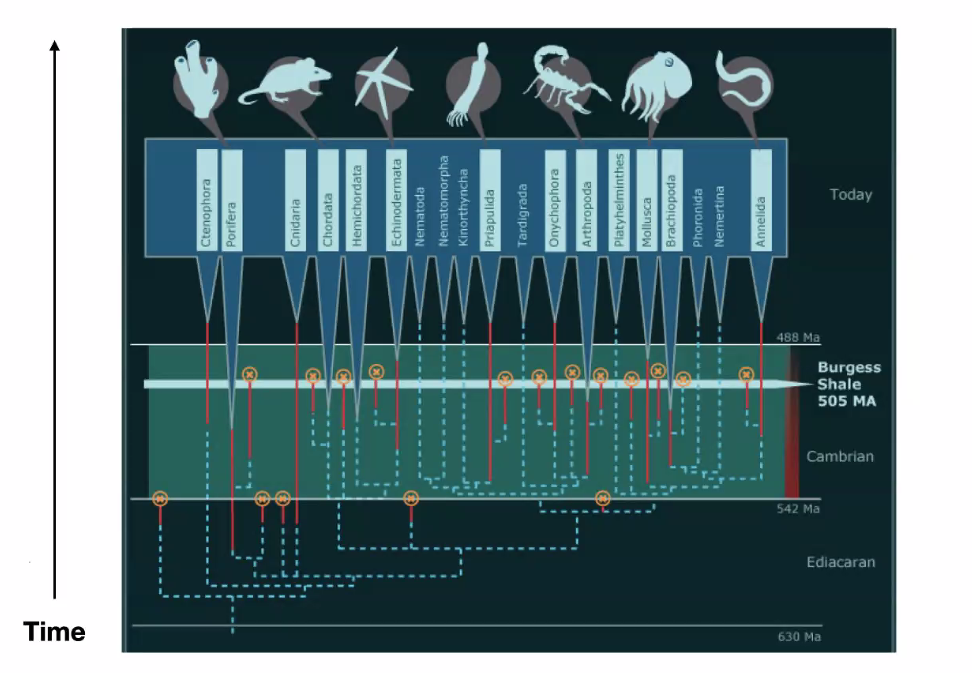

Before the Cambrian explosions, there were only about 4 species (worm-like) on Earth. However, after the explosion:

some theories to

- “In the blink of an eye”: The Cambrian Explosion is trigged by the sudden evolution of vision, which set off an evolutionary arms race where animals either evolved or died.

- our vision has evolved for more than 200 million years. Now let the computer do it.

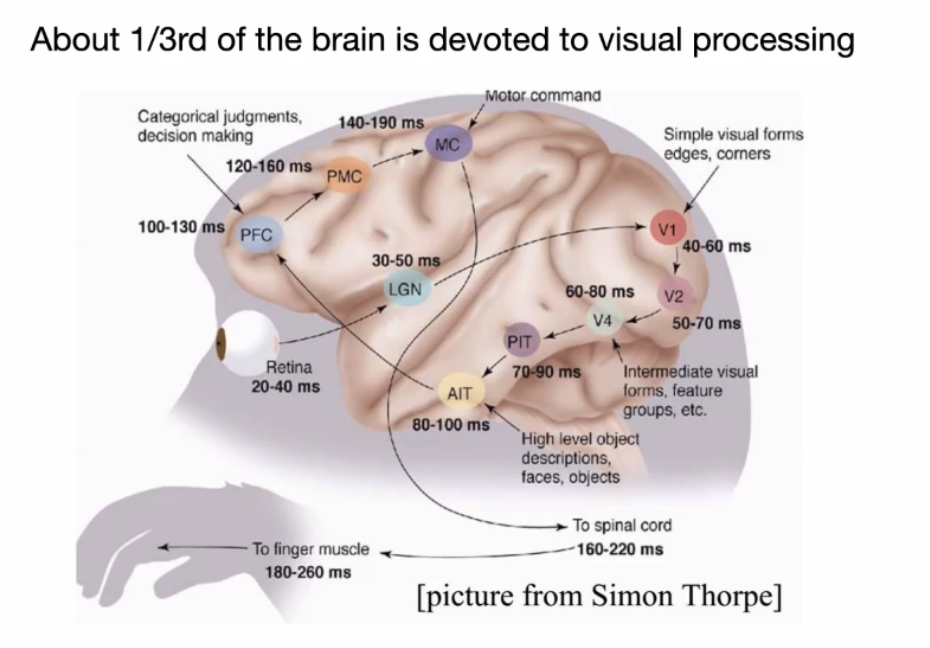

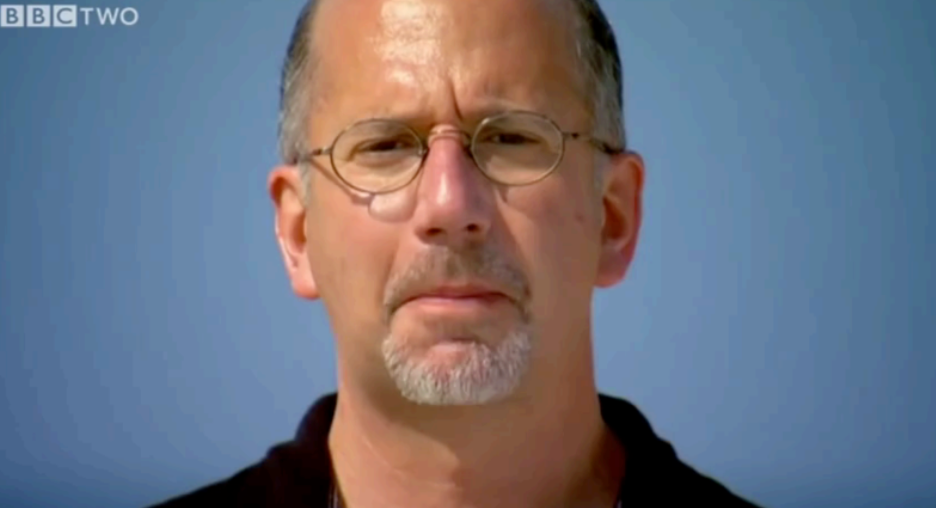

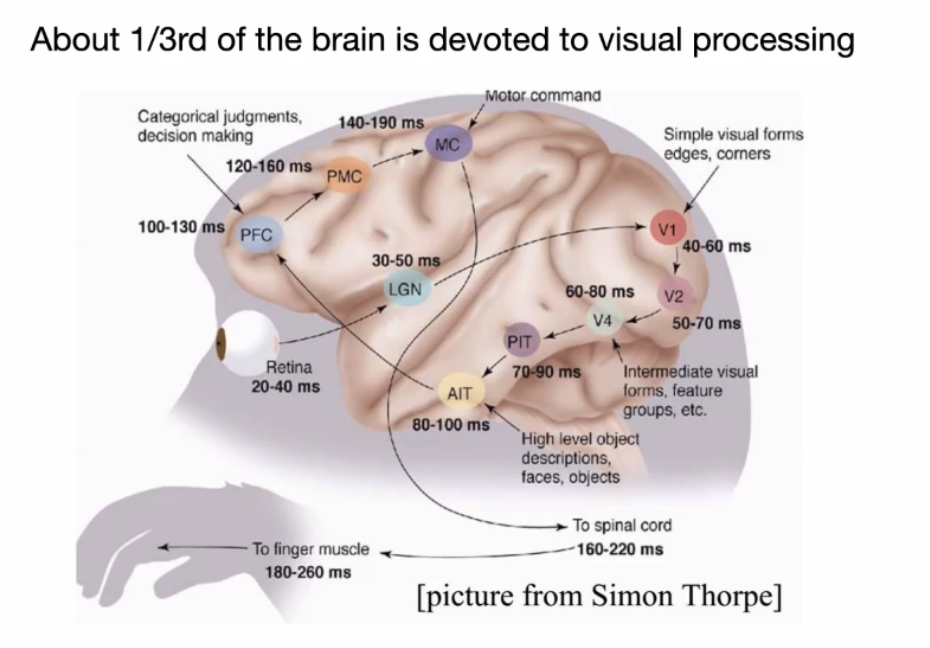

What don’t we just build a brain?

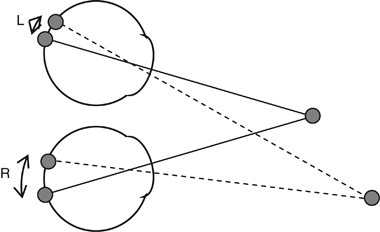

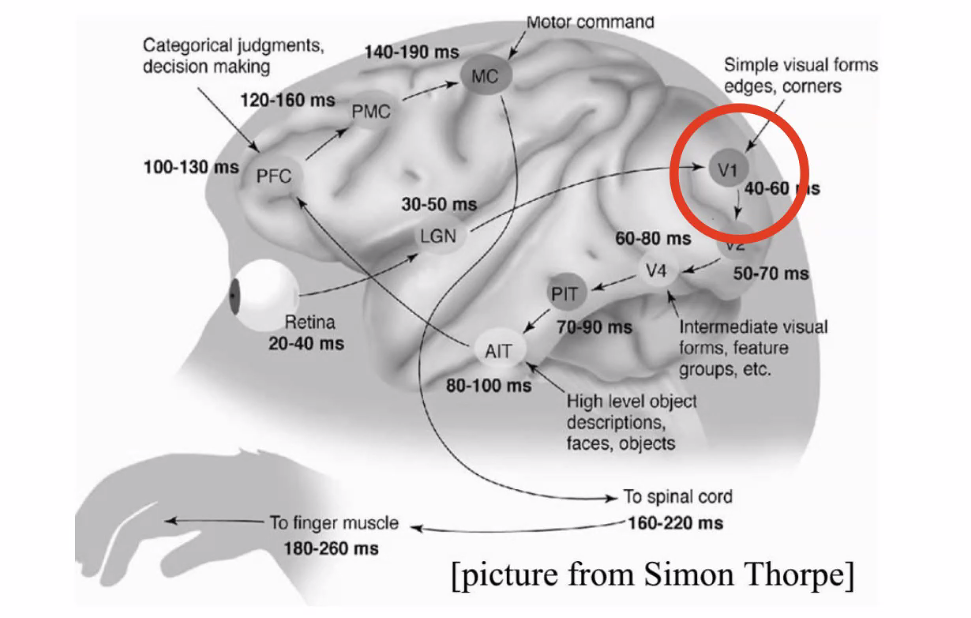

where we start the loop from our retina:

- starting from PFC it is related to other stuff.

- but even until today, we are still not sure how brain works.

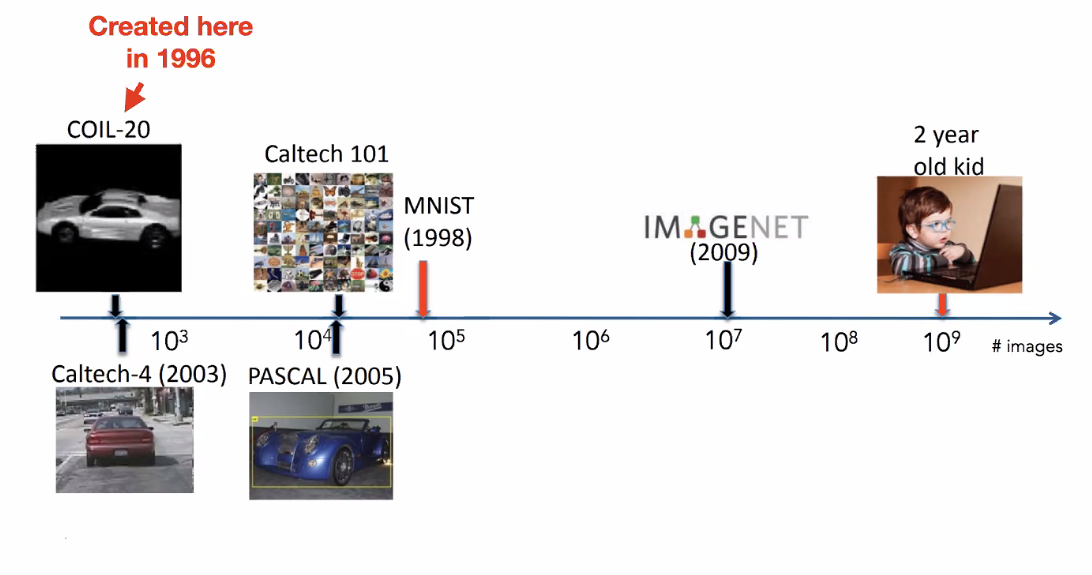

Additionally, there is a difference in datasets

notice that what a 2 year-old child have seen would have been much more than the best dset we have now.

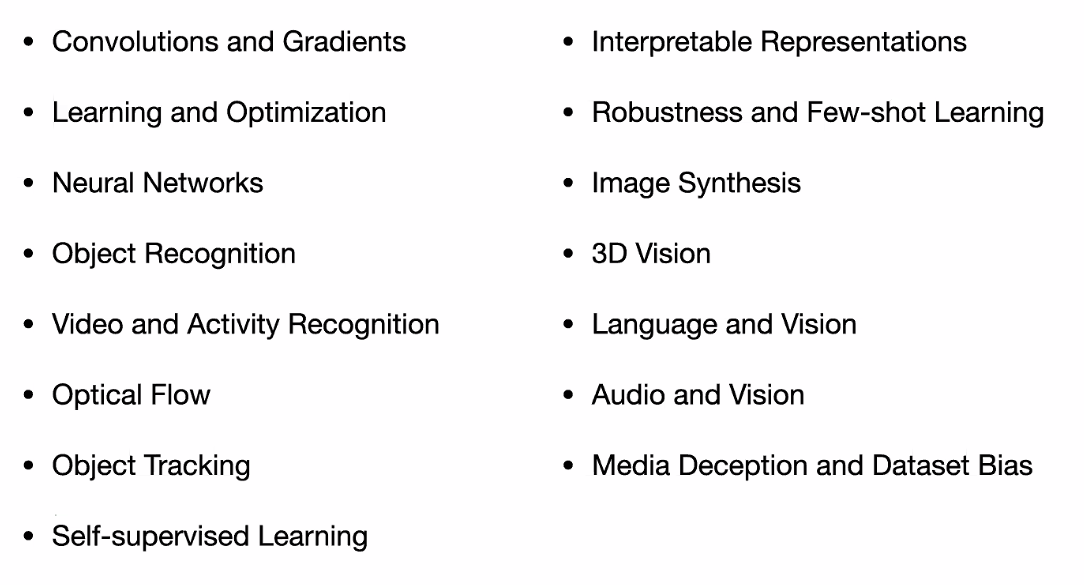

Syllabus

Because the course is large, there will be no exceptions at all

Topics: we do NOT assume prior knowledge in computer vision and machine learning

Format: Hybrid

- so Zoom is allowed

- every lecture will be recorded

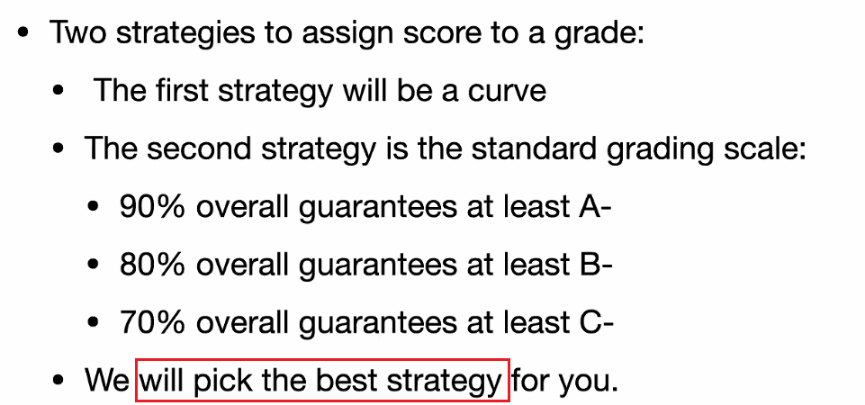

Grading

- Homework 0: 5% (self-assessment, should be easy)

- Homework 1 through 5: 10% each

- Final Quiz: 45% (written)

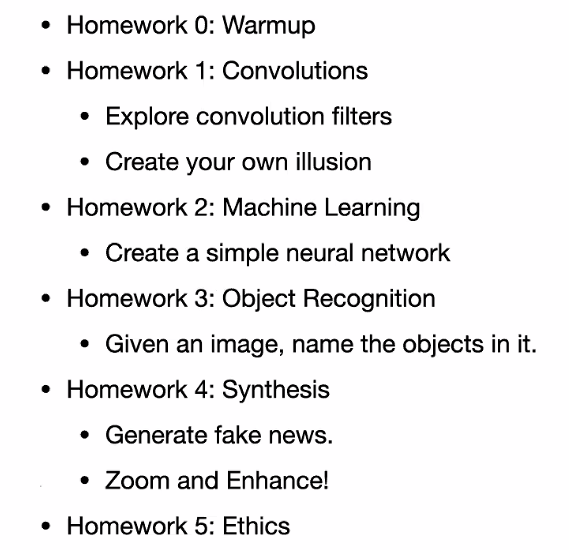

Homework: outlines

- usually it will be 2 weeks for each homework

- probably hand in via Gradescope

- collaborations will be allowed, but need to disclose

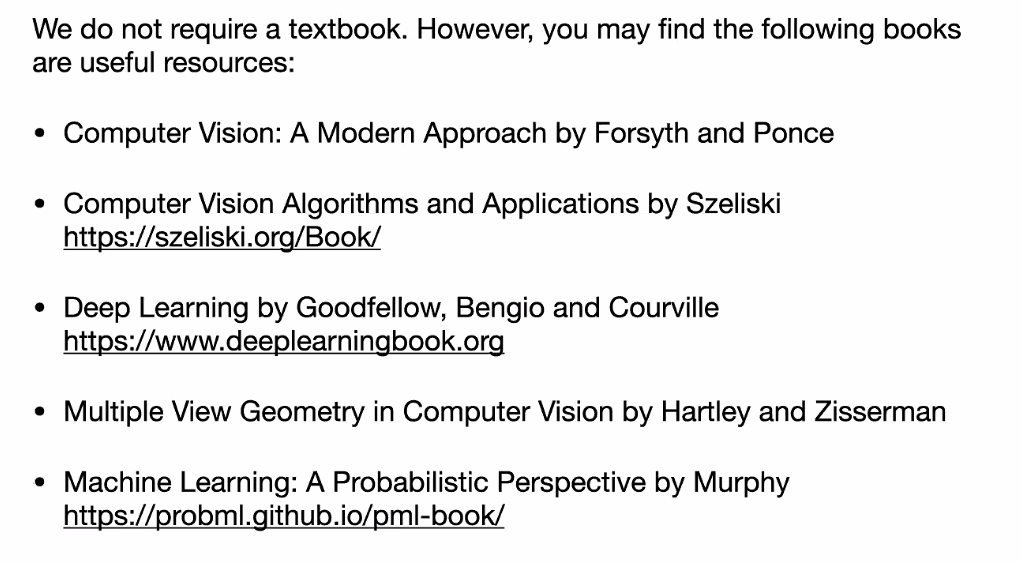

Useful Resources

OH

- will be online

Optical Illusions

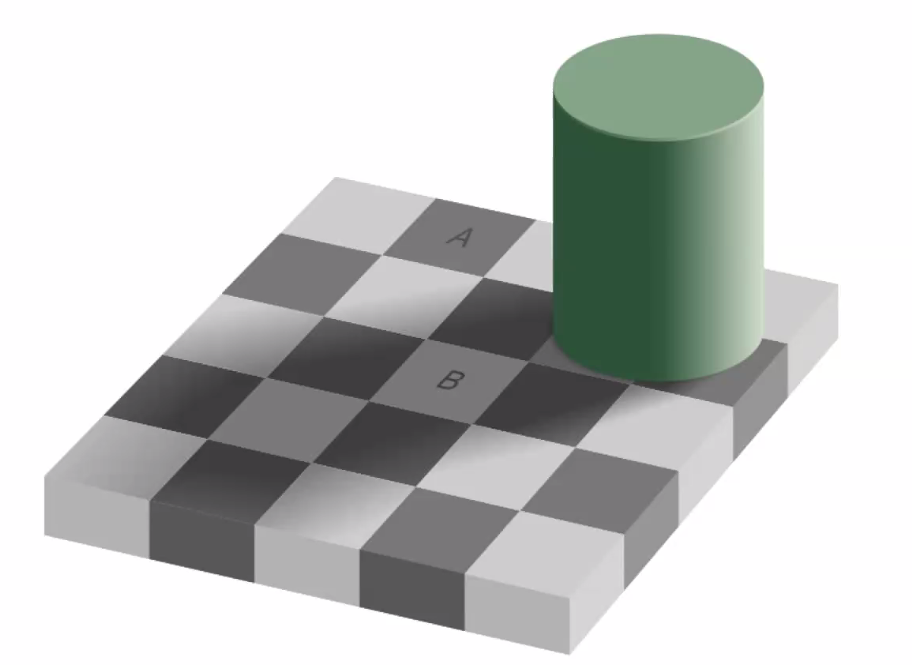

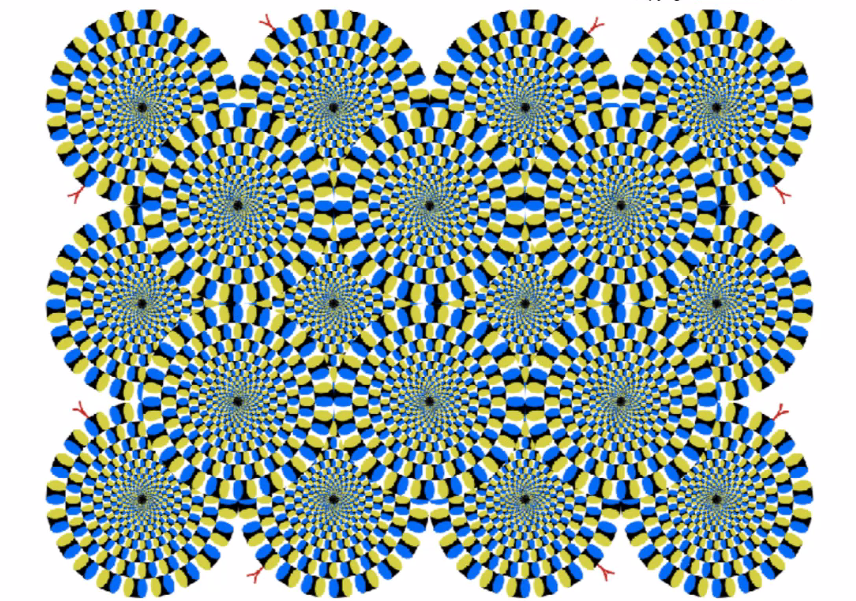

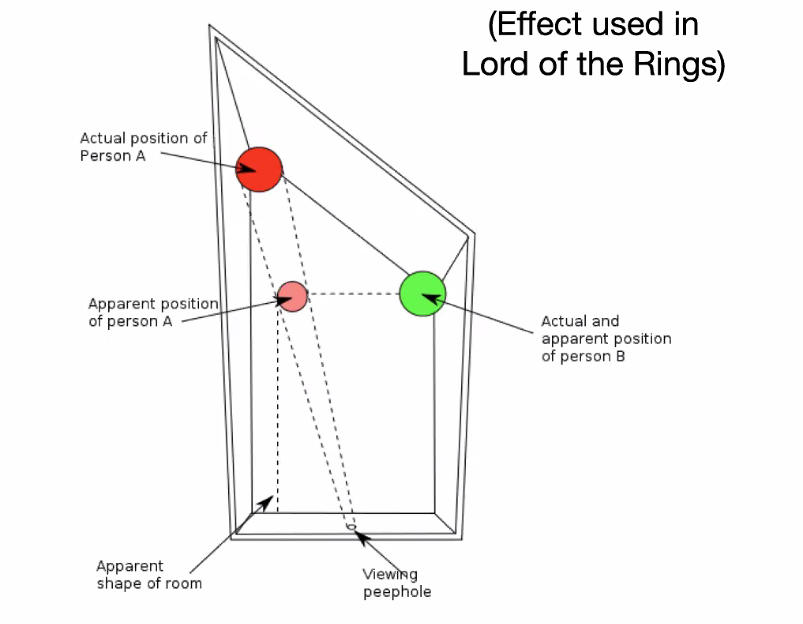

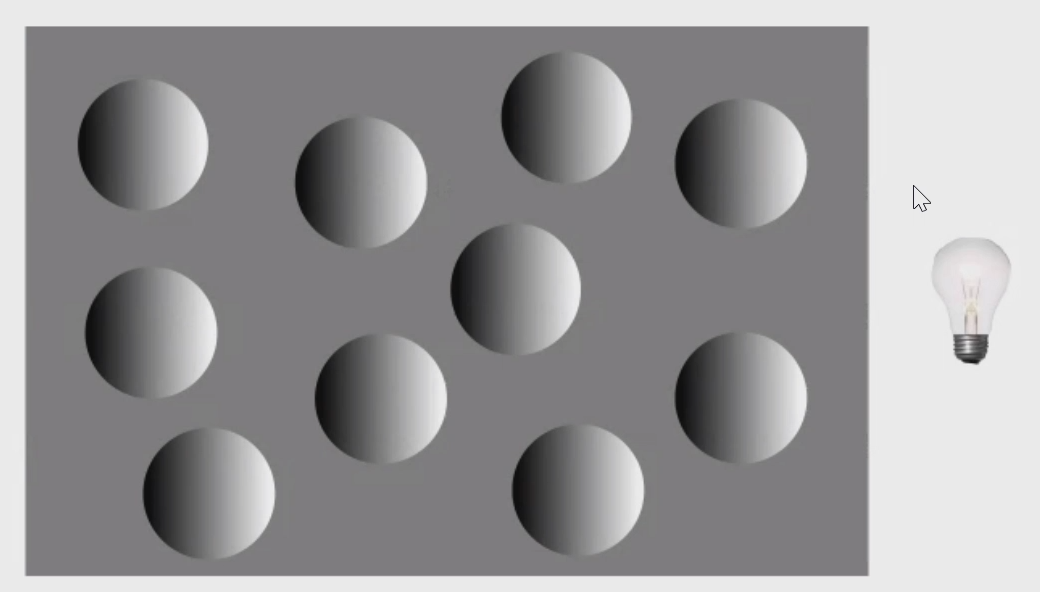

Below are some interesting illusions

| Illusion | Your Brain |

|---|---|

|

You brain “factors” out the fact that there is a shadow, which automatically made a block $B$ seem lighter than $A$. (How can your computer vision do this if they have the same RGB?) |

|

Some explanation of this talks about that you see them “moving” because your neurons overloaded. |

|

Ambiguities our brain resolve pretty fast: a big chair instead of a small person |

|

Makes you think people shrunk in size. But actually this is how it happened |

in short:

- our brain “automatically fill in things” that are not there - hard part of perception

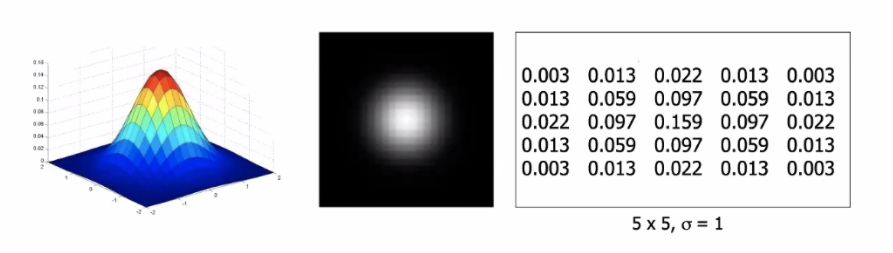

Convolution

The idea is that we want to de preprocessing of the image, such that:

- we can “denoise” an image.

- highlight edges (taking gradient)

- etc

using a linear kernel/filter, which essentially are using weighted sums of pixel values using different patterns of weights to find different image patterns. Despite its simplicity, this process is extremely useful.

For instance, when you take a photo at night, there is little light hence it would capture a lot of noise

Intuition

One way to suppress noise would be to:

- take many photos and take average

- how do we “take an average” even if we only have one photo?

One way to think about this, is that we can first treat each image as a “function”

where:

- as a function, the image maps a coordinate $(x,y)$ to intensity $[0,255]$

- (in some other cases, thinking of this as a matrix would work)

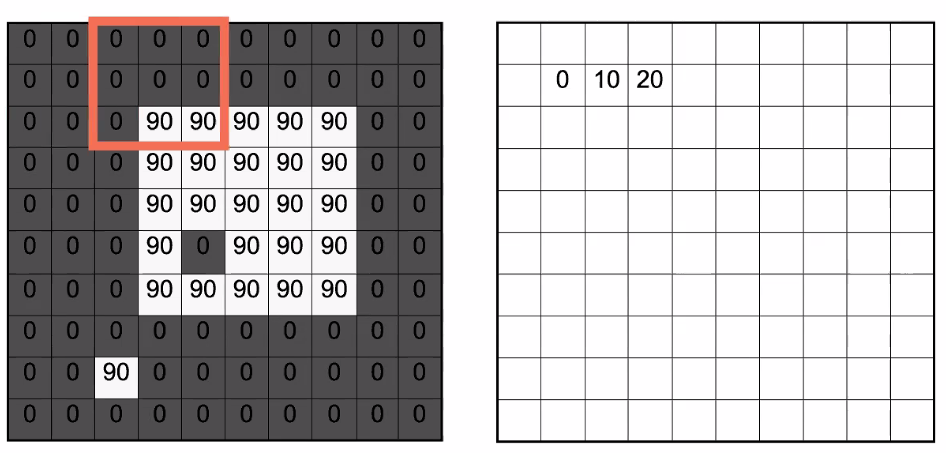

Then, then, you can take a moving average:

| Sliding Through | Output |

|---|---|

|

|

when we finish, notice that:

- the next effect is that it “blurs” or “smooths” the image out

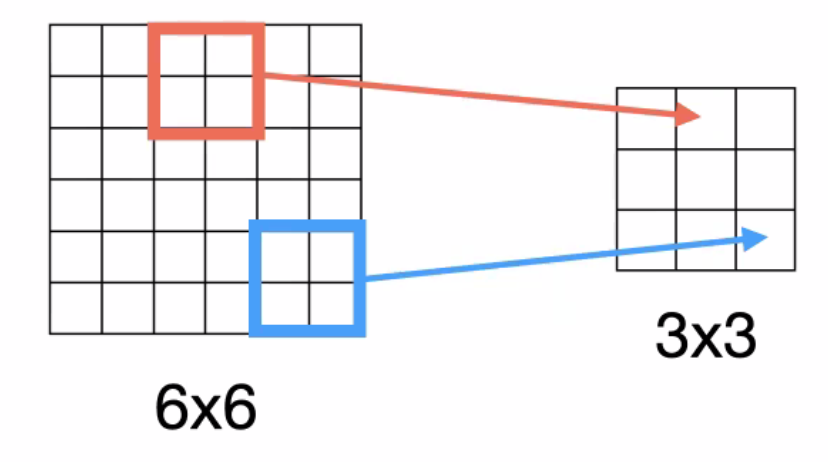

- the output has a smaller size than the input. This is because there are $(n-3+1)^2$ unique positions for putting the $3\times 3$ kernel.

Linear Filter

The above can also be thought of as:

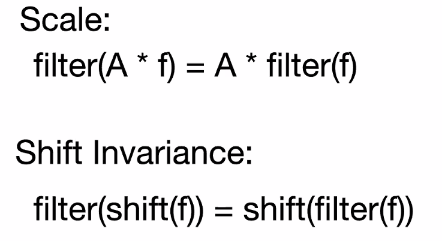

In general, we will be looking at linear filters, which has to satisfy the following

-

$\text{filter}( \text{im},f_1 +f_2) = \text{filter}( \text{im},f_1) + \text{filter}( \text{im},f_2)$

-

$f_1,f_2$ are filters/kernels. The function is the process of applying them to the image.

- output of sum of filters is the same as sum of output of filters $f((a+b)x)=f(ax)+f(bx)$

- since filters can also be seen as “images”: output of the sum of two images is the same as the sum of the outputs obtained for the images separately.

-

-

$C\cdot \text{filter}( \text{im},f_1) = \text{filter}( \text{im},C\dot f_1)$

- multiplied by a constant

And you can think of this as linear algebra

- most of the convolutions operations are linear by construction

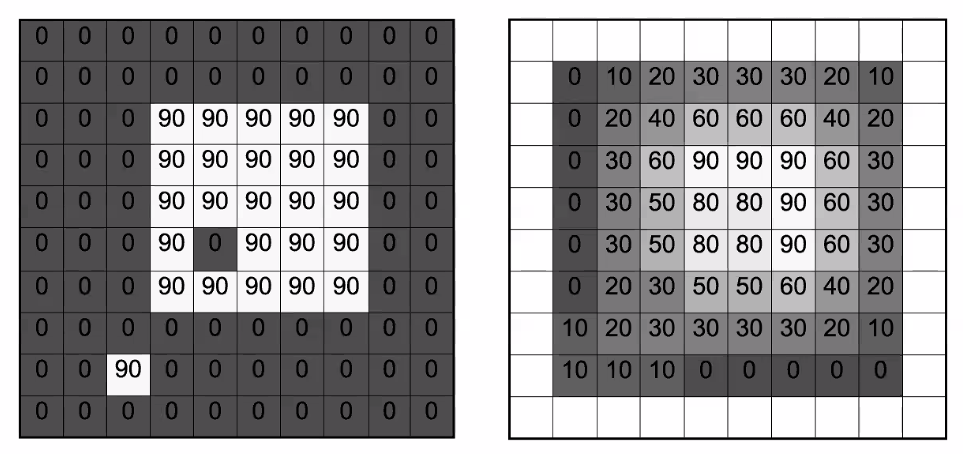

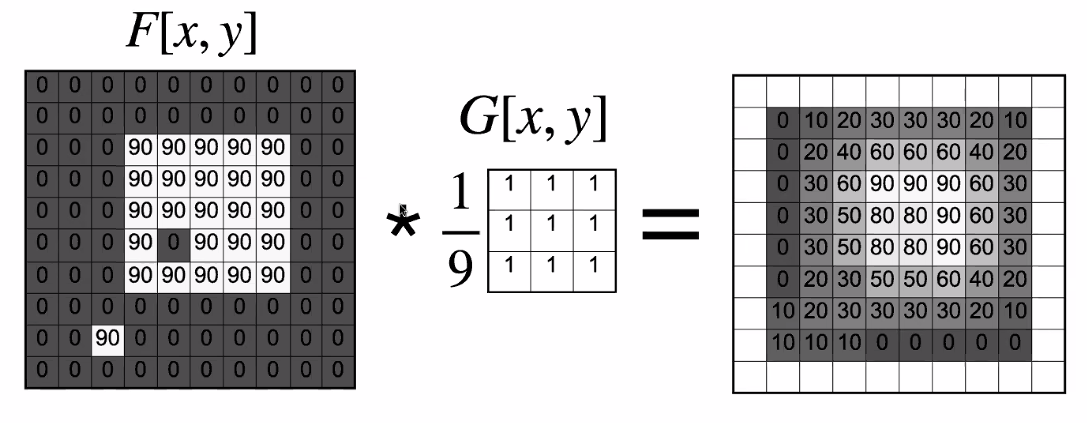

Convolution Filter

Kernel/Filter: The pattern of weights used for a linear filter is usually referred to as the kernel/the filter

The process of applying the filter is usually referred to as convolution.

For instance, we can do a running average by the following convolution:

where:

- $*$ is often a symbol used for convolving

- essentially it is about taking $G$ , then taking sum of element-wise product with a $3\times 3$ region in $F$

- This is the same as moving average we had. But notice that we needed $1/9$ in front:

- In reality, we also want to make sure that the output is still a valid image. Hence we need to be careful that the output intensity value does not exceed $255$, for instance.

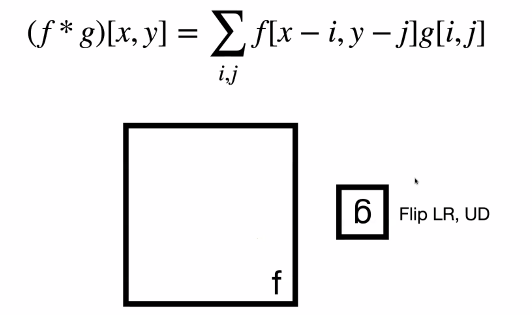

Formally, convolution is defined as:

\[(f * g)[x,y] = \sum_{i,j} f[x-i,y-j]g[i,j]\]where

-

$(f * g)[x,y]$ means $f$ convolves with $g$, which is a function of coordinate $x,y$. Outputs the intensity at $x,y$.

-

For a $3\times 3$ kernel, we would set $i \in [0,2], j \in [0,2]$ and output to the top right instead of center.

-

notice that the minus sign is intended, so that we are flipping the filter:

where:

- the only purpose of flipping is that it makes the math easier later on

- increasing index in $g$ but doing decreasing for $f$.

- therefore, you need to flip the filter upside down, and then right to left

- when you code it, however, often you will just have

+sign.

Note that if the filter is symmetric, then flipping doesn’t matter.

- However, if the filter is not symmetric, (most people) just don’t flip it either way. So it depends.

If you use the $+$ instead, it is called a cross-correlation operation

\[(f * g)[x,y] = \sum_{i,j} f[x+i,y+j]g[i,j]\]which is also denoted as:

\[f \otimes g\]which does not have all the nice properties like convolution just due to that sign.

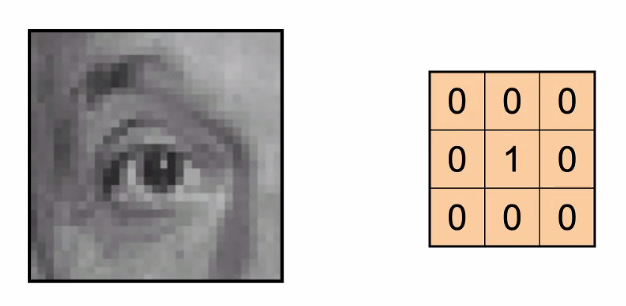

For instance: convolution examples

Identity transformation:

- basically It will output the same image (but contracted by 1)

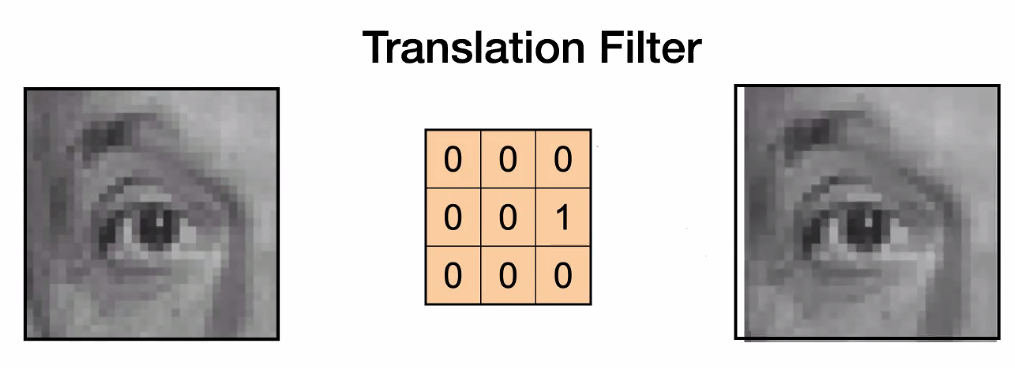

Translation

-

where it shifts to the right because we had the minus sign. In essence, we need to flip the convolutional kernel upside down and right to left, which becomes this:

hence it is in fact shifted to the right

Nonlinear Kernel

where notice that no such convolution kernel exist, because:

- this is not a linear operation!

- for convolution kernel to work, we needed to **treat everything/pixel identically (from its neighbors) **. However, a rotation doesn’t work like this (e.g. consider the treatment of the pixel in the center and the pixel far away from the center on the LHS image)

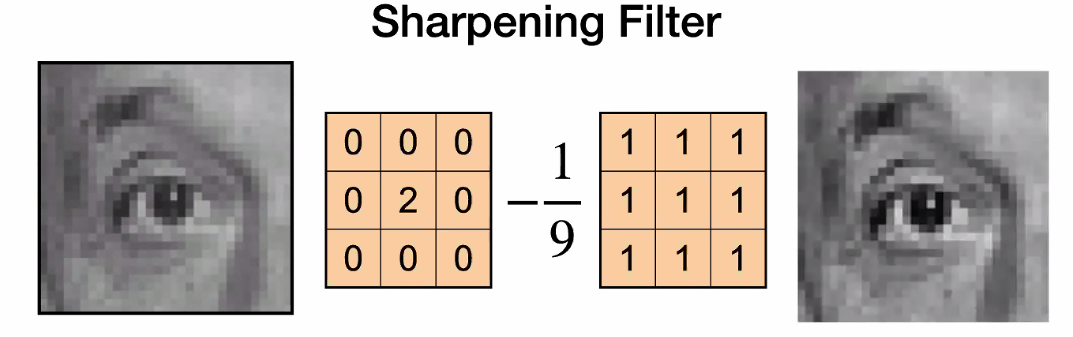

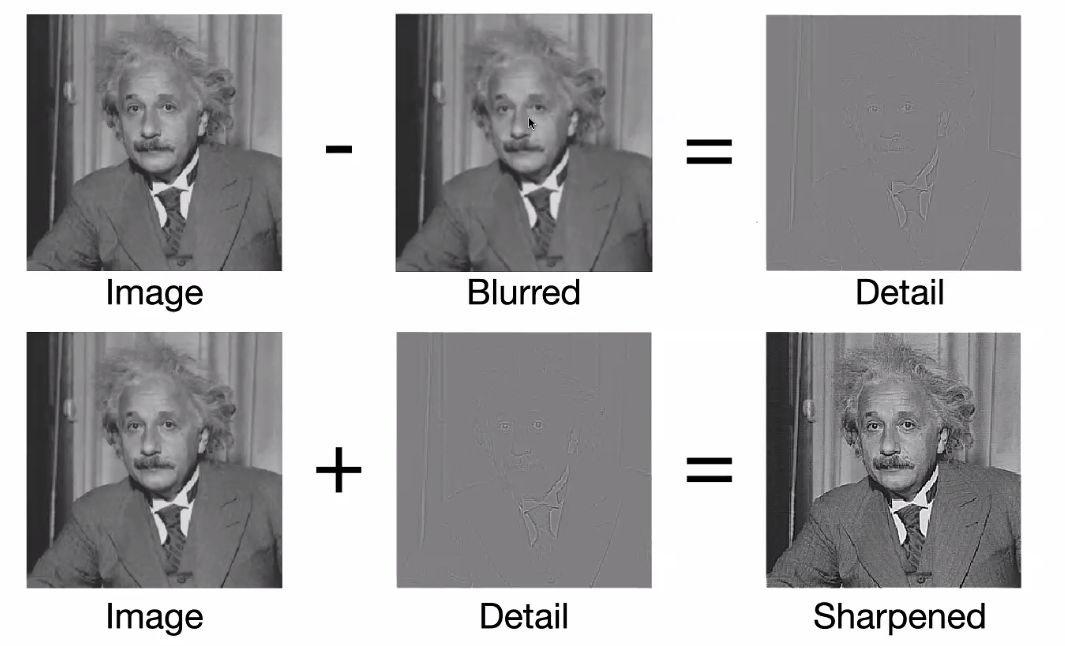

Sharpening

where:

-

sharpening actually increases the noise

- multiply by $2$ is like brightening

- subtracting a blurred image = subtracting removed noise

-

so it turns out that our eyes think “adding noise” makes the photo looks sharper

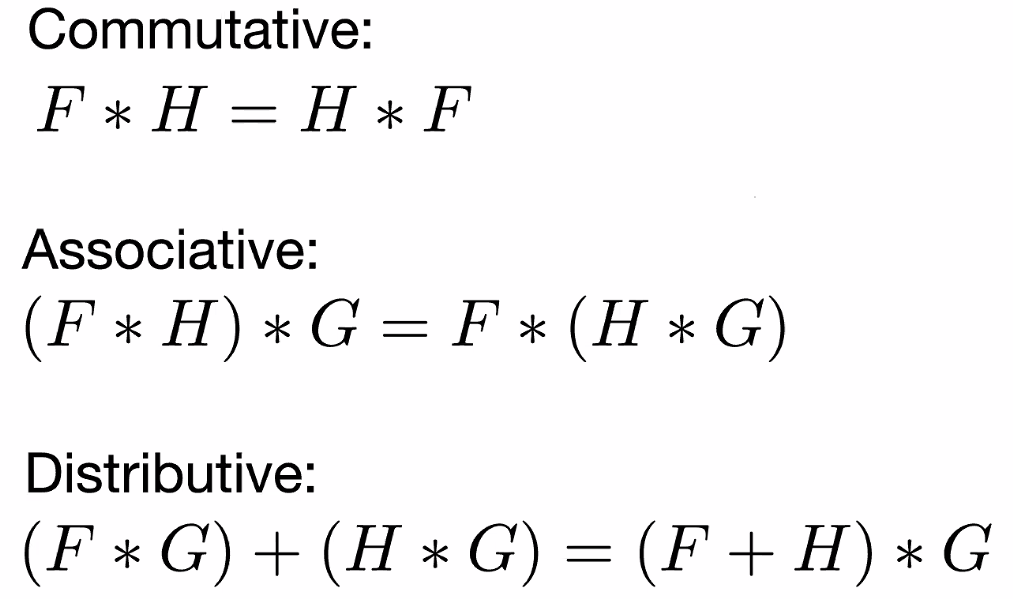

Convolution Properties

The operation $*$ has the following property:

those can be proved with the minus sign in our definition, which switching to plus sign might make things break. $F,G,H$ are all filters/kernels, so remember that $F * G$ means, .e.g having image $F$ convolve with filter $G$

- commutative/associative: order of convolution does not matter. You can apply $F$ then $G$, or $G$ then $F$

- distributive: same as linearity of kernels

Note

- you kind of have to ignore the fact that different sizes of image/filter produces a different border

- those are useful because it makes your code runs faster

Additionally, we also know that

which makes sense since a linear convolution treats each pixel the same/”same weights from neighbors”.

Gaussian Filter

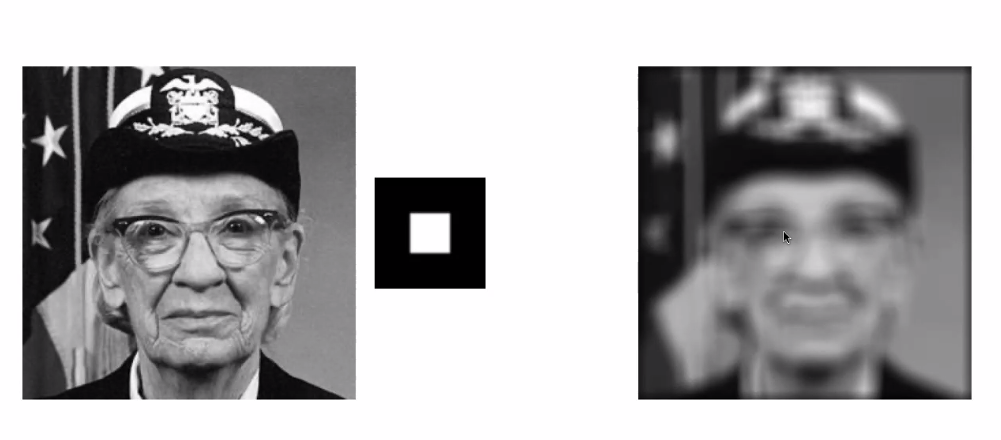

Now, let us reconsider the task of blurring an image: we can blur the image by “creating multiple copies of the image”, dis-align them and add them up:

| Box Filter | Gaussian Filter |

|---|---|

|

|

where in both cases, we have blurred/smoothened the image

- black means 0, white means 1, and this white box is larger than $1 \times 1$ in size.

- smoothing: suppresses noise by enforcing the requirement that pixels should look like their neighbors

- the Gaussian one does indeed is more visually appealing

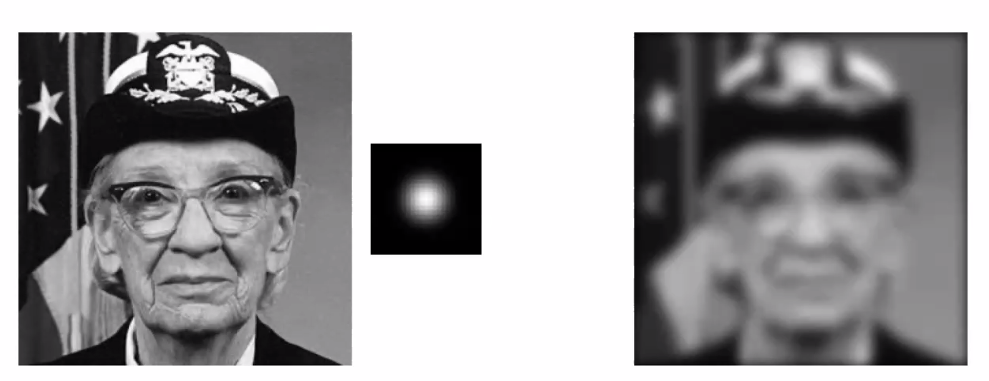

More mathematically, the Gaussian is a multivariate Gaussian but having identity as covariance: i.e. the two variables are independent:

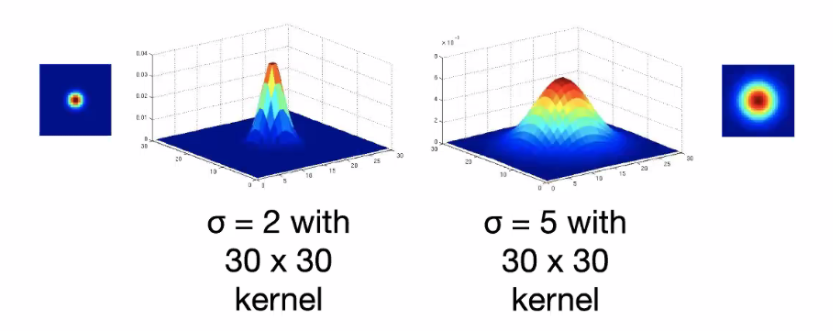

\[G_\sigma = \frac{1}{2\pi \sigma^2} \exp({ - \frac{x^2 + y^2}{2\sigma^2}})\]where $x,y$ are coordinates, and an example output looks like:

recall that Gaussian also has the nice property that they sum up to 1.

- notice that it is symmetric. This is enforced.

- yet since it is a Gaussian, we can also control its parameters $\sigma$, which determines the extent of smoothing

so that:

- more spread out gives more blur

For instance:

| Slow Sigma | High Sigma | |

|---|---|---|

|

|

|

Computation Complexity

For having an image of $n\times n$ doing a convolution of $m \times m$ kernel/filter:

\[O(n^2 m^2)\]where we assumed that there are paddings done, so the output is the same size as input.

- For each single pixel, we need to do $m \times m$ work

- Since we have $n \times n$ pixels, we needed to $n^2 m^2$

- this is very expensive!

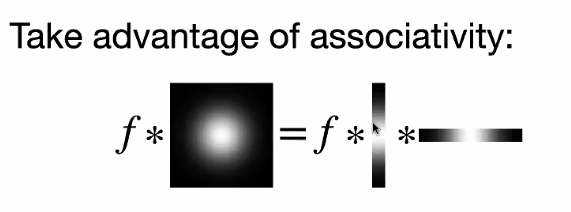

But we can speed this up in some cases. Consider separating the Gaussian filter into 2:

\[G_\sigma = \frac{1}{2\pi \sigma^2} \exp({ - \frac{x^2 + y^2}{2\sigma^2}}) = \left[ \frac{1}{\sqrt{2\pi }\sigma} \exp({ - \frac{x^2 }{2\sigma^2}}) \right]\left[ \frac{1}{\sqrt{2\pi} \sigma} \exp({ - \frac{y^2}{2\sigma^2}}) \right]\]Therefore, since we know that if we have two filters $g,h$, and an image $f$, associativity says:

\[f * (g * h) = (f*g)*h\]Therefore

(technically, we are saying the following)

\[f * g = f * (g_v \times g_h)= (f* g_v) * g_h\]Then, since $G_\sigma$ can be separated into two filters of smaller dimension:

\[O(n^2 m)\]now for each pixel, we only needed to do $m$ work/look at $m$ neighbors.

- technically you do it twice, so $2n^2m$, but $2$ is ignored.

- this only works in special cases.

Human Visual System

In fact, one stage our vision system also does convolution

- $V1$ is doing convolution.

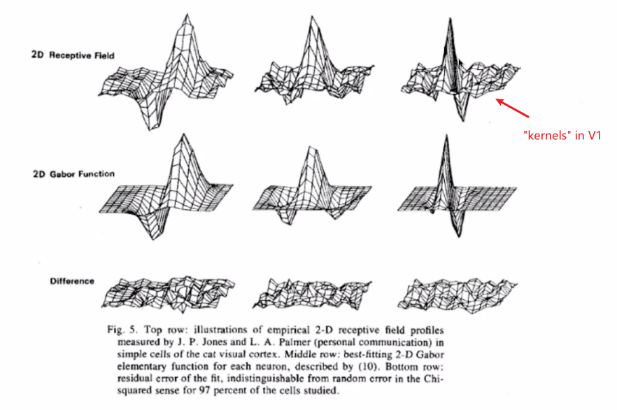

Experiments have been done on cats, and show that the kernel they are using looks like the following

where:

- to simulate the kernels in cat, we have those Gabor’s filter

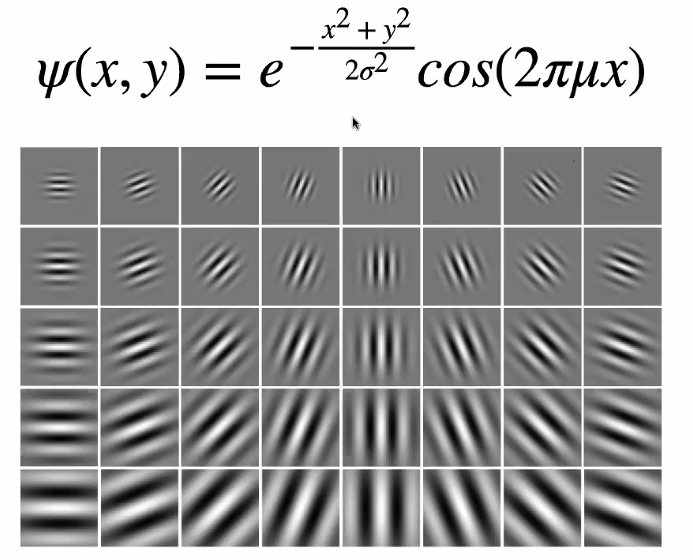

Gabor Filters

Gabor filters are defined by:

interestingly:

- it seems that convolutional NN also returned a similar filter

- it turns out this can do edge detection

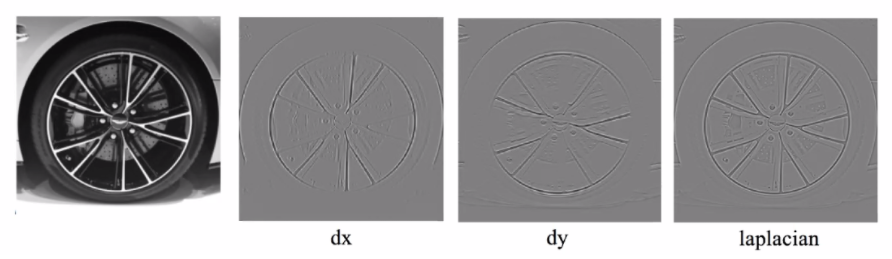

Image Gradients

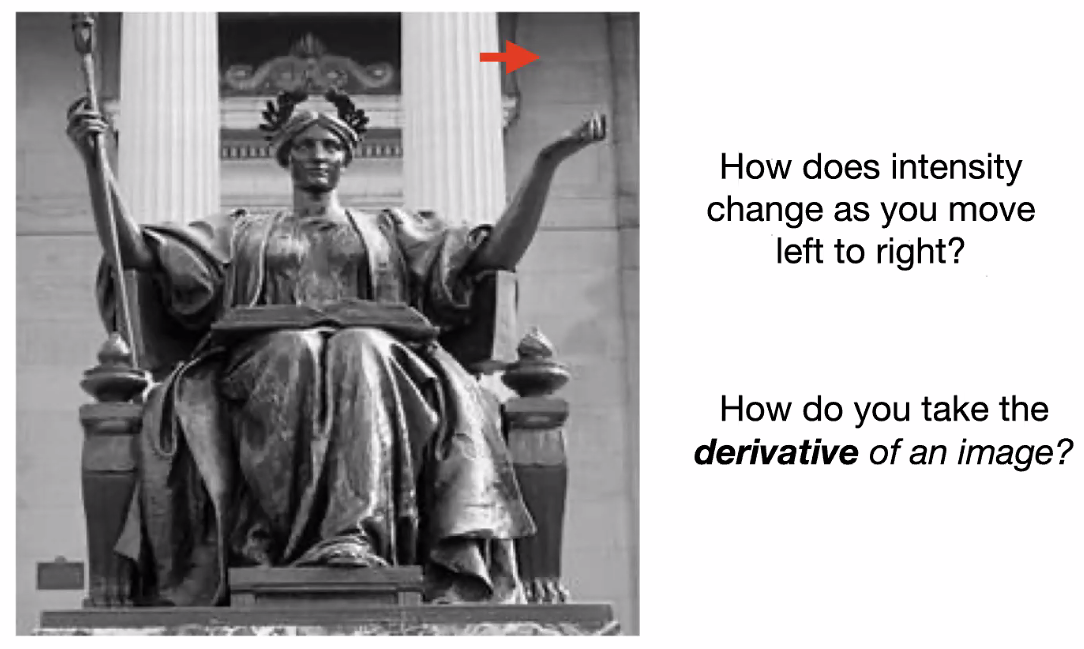

Now, we want to consider the problem of identifying edges in a picture, which is part of an important process in identifying objects.

Consider looking at the red arrow. We are interested in how does the intensity change

-

when we moved across the pillar, it seems that intensity changed dramatically!

-

so we want to compute the “derivatives”

We know that

\[\frac{\partial f}{\partial x} = \lim_{\epsilon \to 0} \frac{f(x + \epsilon ,y)-f(x - \epsilon ,y)}{\epsilon}\]but since the smallest unit is a pixel:

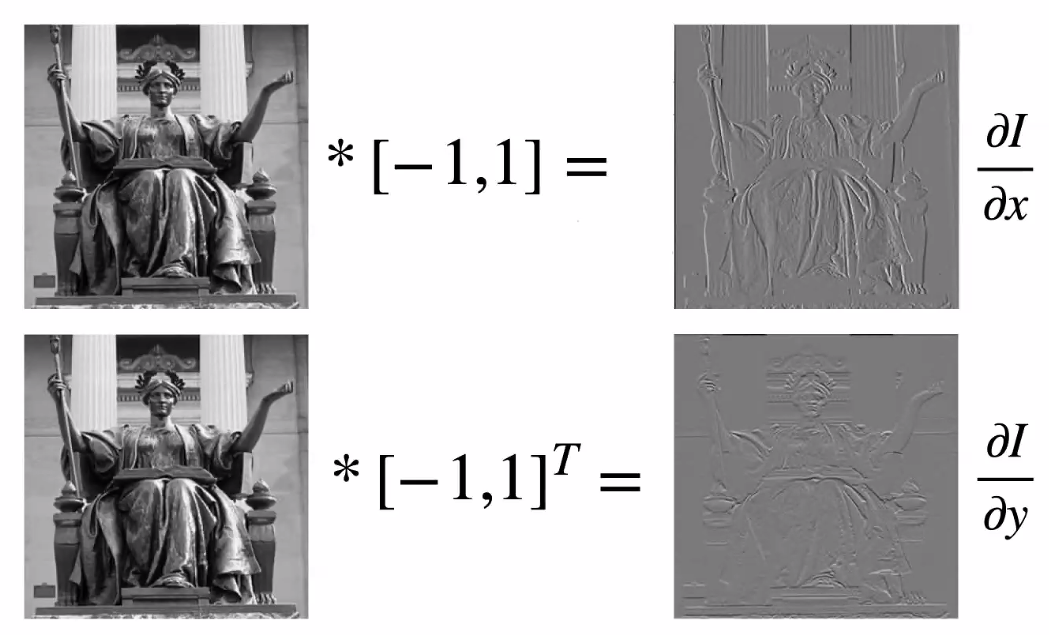

\[\frac{\partial f}{\partial x} \approx f(x+1,j) - f(x-1,j)\]Therefore, we basically have the following:

- $\partial f/ \partial x$: using $[-1,0,1]$ or $[-1,1]$ as kernel

- $\partial f/ \partial y$: using $[-1,0,1]^T$ or $[-1,1]^T$ as kernel

Result:

where we see:

-

the $\partial x$ shows how images change when we move in $x$-direction. Hence we see the texture of the pillars on the RHS. But if we do $\partial y$, they disappear.

- if we want to be more “exact”: $0.5[-1,0,1]$ since the step size is $2$ pixels

- technically the signs are “backwards” because we need to flip our kernel

Similarly, we can also compute second derivative from using the first derivative as input:

Edge Detection: Idea

There is no strict “definition of what is edge”, so it is more like a practical trial and error:

- detect edge such that first derivative has a largest change in some region, i.e. second derivative is $0$!

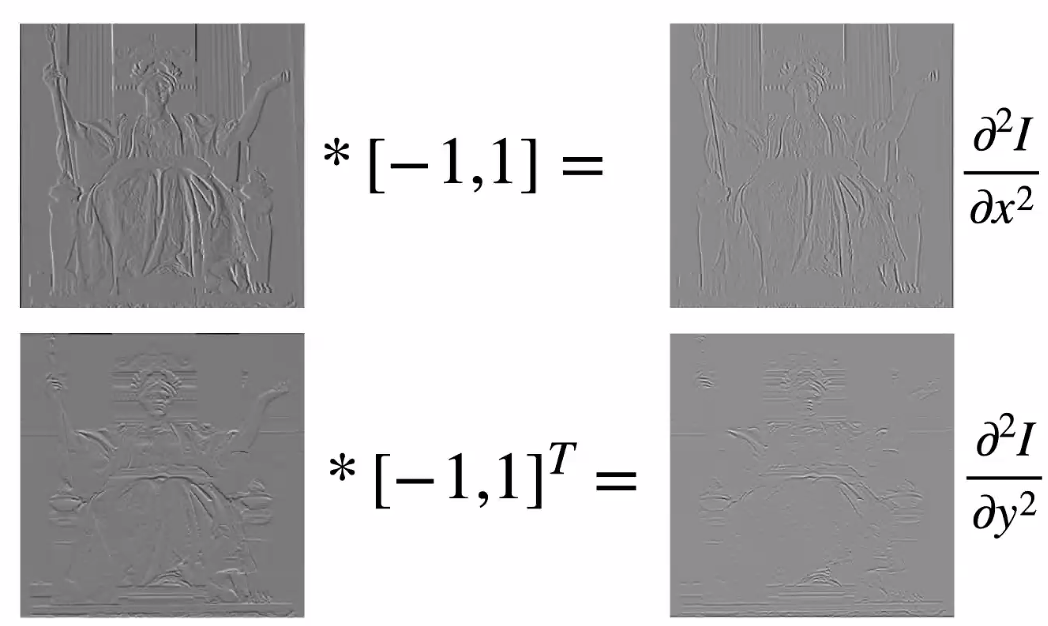

We may care about second derivatives because, usually our image will be noisy:

notice that derivatives is high everywhere

- hence we may need to smoothing it first

- then the edges has the larges derivative among them

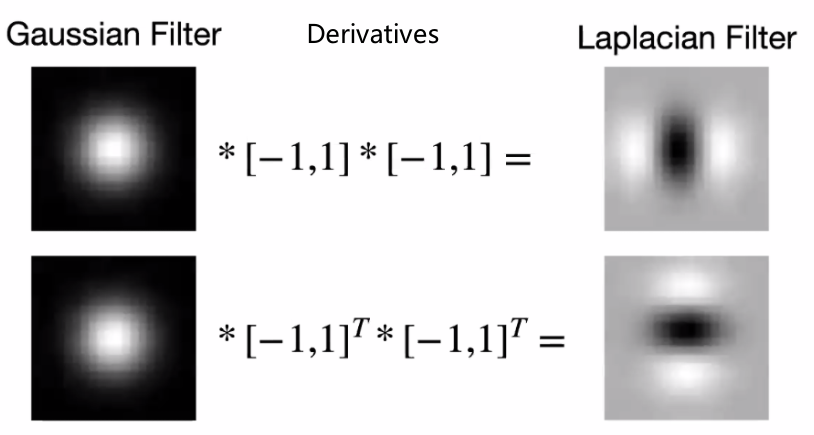

Therefore, we can do:

again, we can combine them because:

- convoving with filter 1, then convolve with filter 2 = covolve with (filter 1 convovle filter 2)

- notice that they are all linear filters!

- the Laplacian filter looks similar to the Gabor filter! Detecting the edge!

Note

If you pad an image with $0$ outside (instead of reflection), then essentially you will be adding an extra edge to the image.

- though in a CNN, those could be learnt

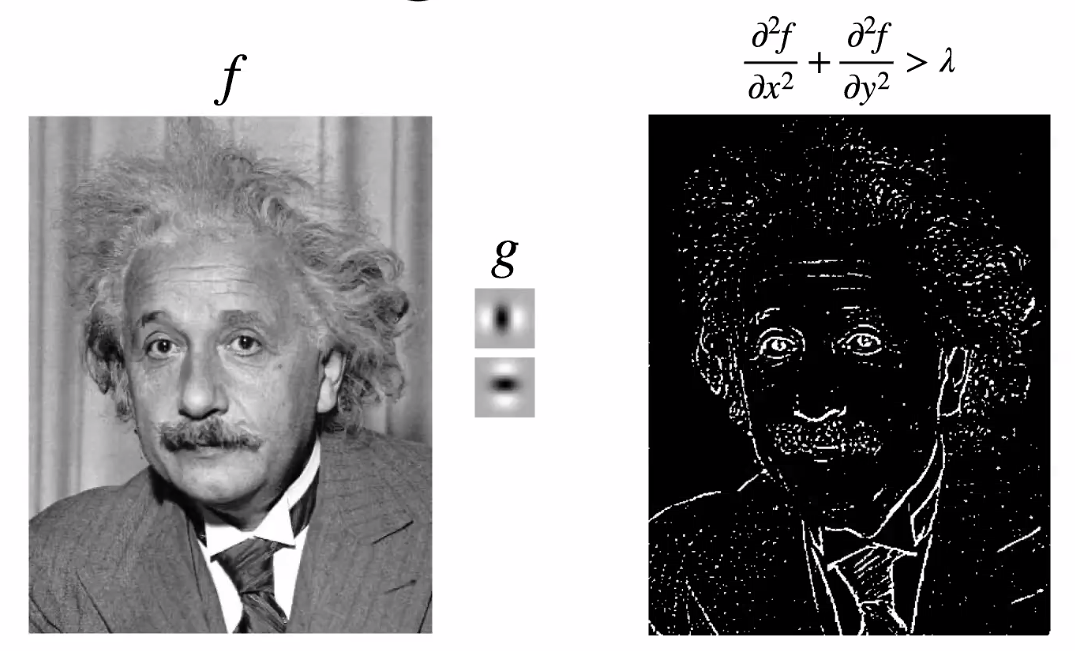

Laplacian Filter

The more exact definition of Laplacian filter is:

\[\nabla^2 I = \frac{\partial^2 f}{\partial x^2} + \frac{\partial^2 f}{\partial y^2}\]For instance

where basically:

- edges will get high intensity

Another example, but now we threshold the second derivative:

- smaller than $\lambda$ so that changes in gradients are large

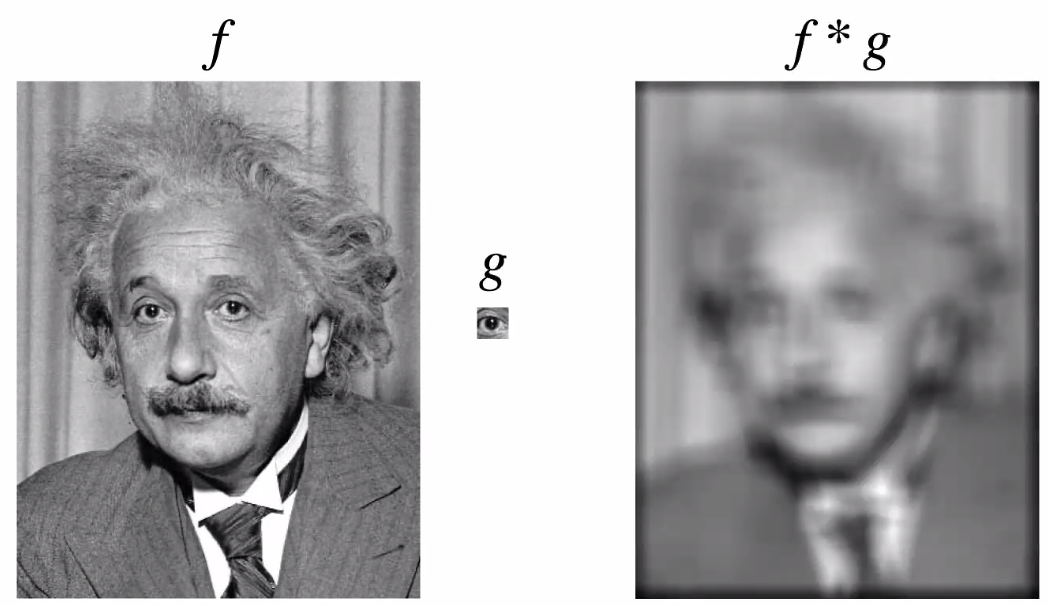

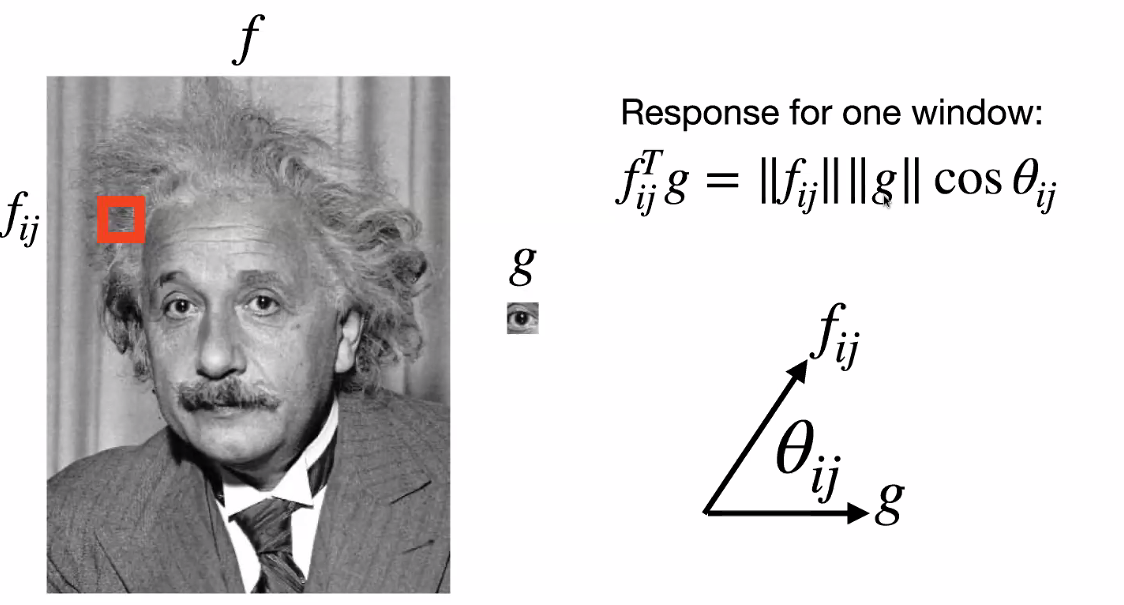

Object Detection: Idea

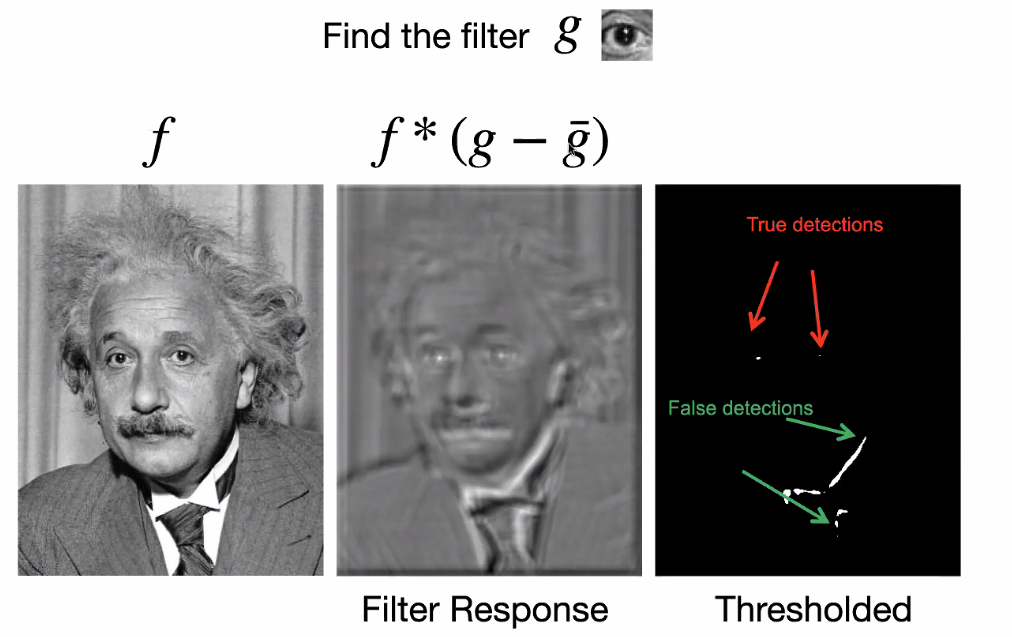

What if we “convolve Einstein with his own eye”: (with the aim of finding the eye)

where we see that the results are not that good.

-

in the end, this is where machine learning kicks in, let it figure out what

-

note that the above does not work because, if you think of $f_{ij} * g$ as computing a cosine similarity between vectors as we are doing inner products anyway:

then obviously it does not work.

However, it turns out that we can do the following:

-

so the problem is more like how do we find the right filter.

-

Finally, this task will be one reason why we will be using CNN to learn the filters

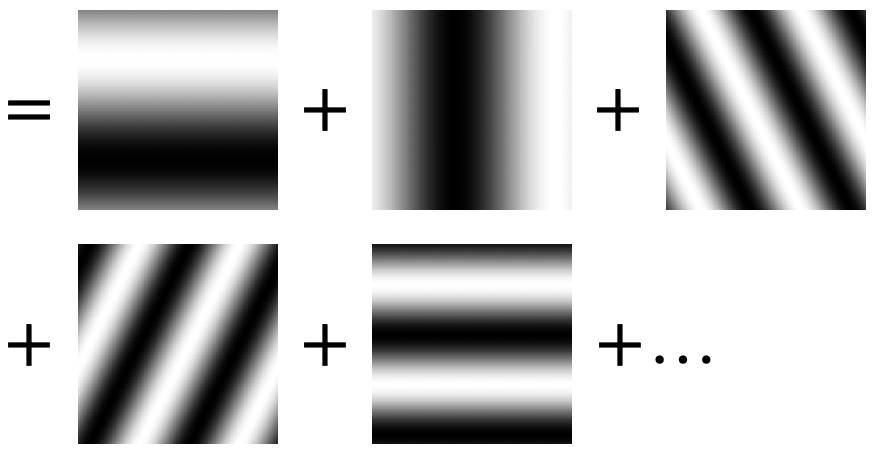

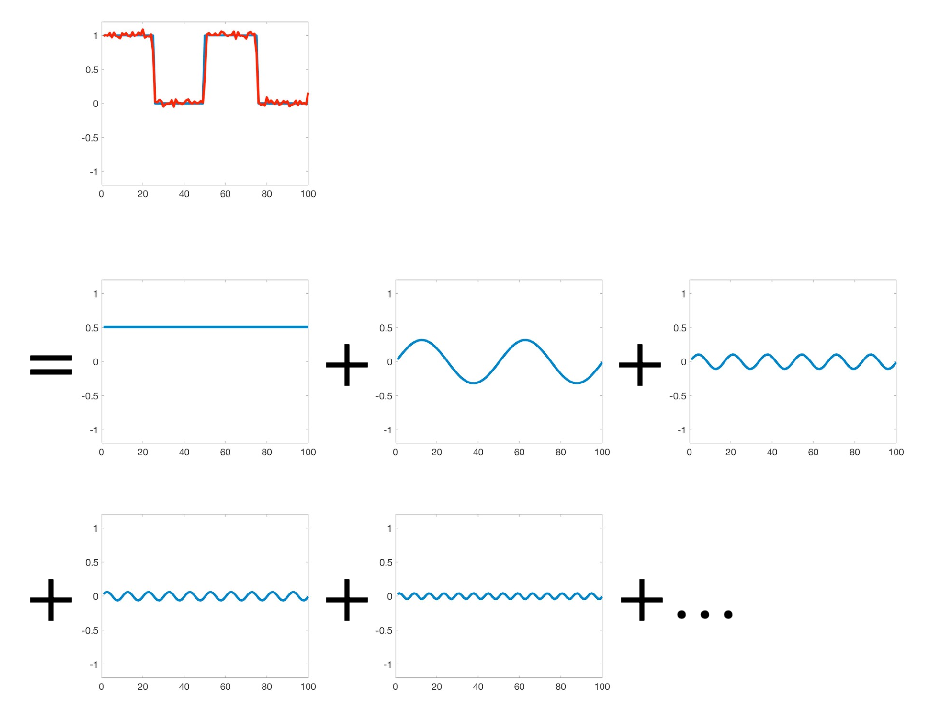

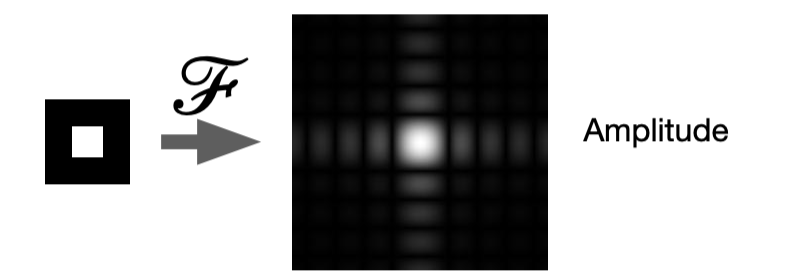

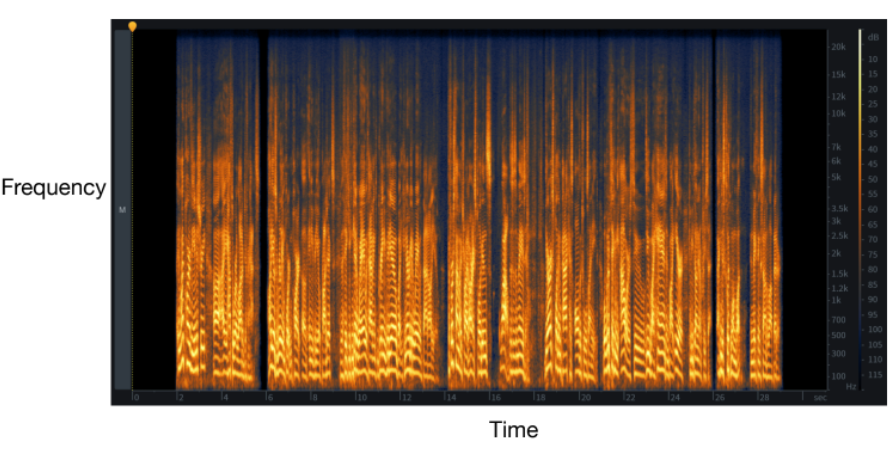

Fourier Transform

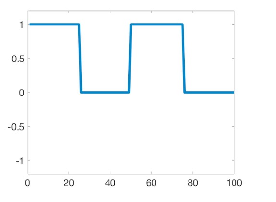

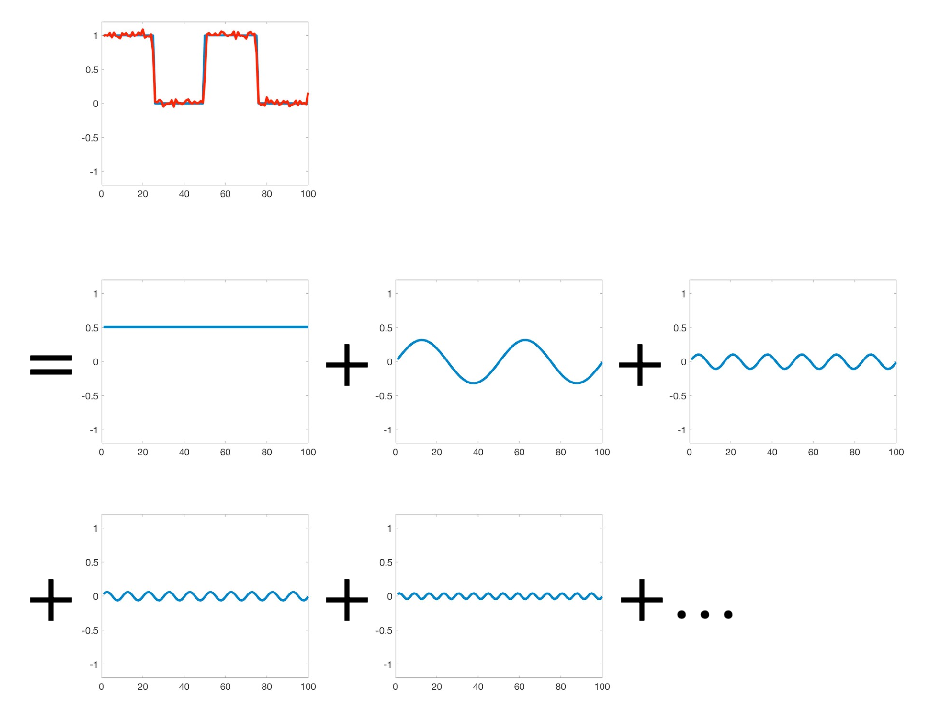

The basic idea of Fourier Transform is that any univariate function an be rewritten as a weighted sum of sines and cosines of different frequencies. (recall in PDE)

\[f(x) = a_0 + \sum_{n} a_n \sin (n \pi \frac{x}{L}) + \sum_{n} b_n \cos(n\pi \frac{x}{L})\]An example would be that we can:

| Original | Fourier Series |

|---|---|

|

|

If this is true, we can also fourier transform the 2D images as sums:

| Original | Fourier Series |

|---|---|

|

|

where

- we can use this for, e.g. compression, by removing some higher order terms to reduce data but still making the image look reasonbly good.

- now, since the source function is in 2D, fourier transform basically converts it to a sum of 2D waves

- notice that the frequency of the “image” increases. This is basically what happens in higher order frequency terms in FT!

Note

The key idea in this chapter is that images, which can be treated as function $g(x,y)$, can be thought of as a linear combination/sum of waves with different frequencies $u,v$. Such that, in the end it is found that:

- low frequency information usually encapsulates details of the image

- high frequency usually encapsulates noise

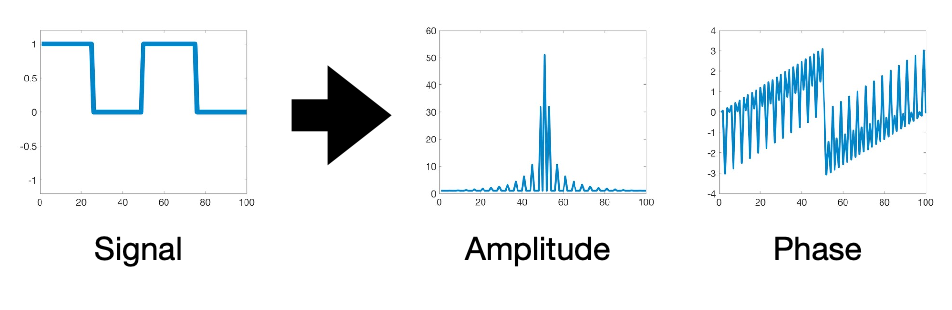

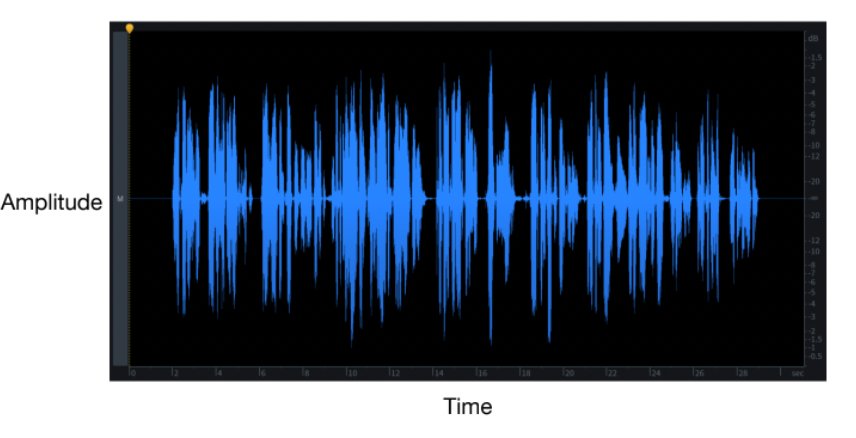

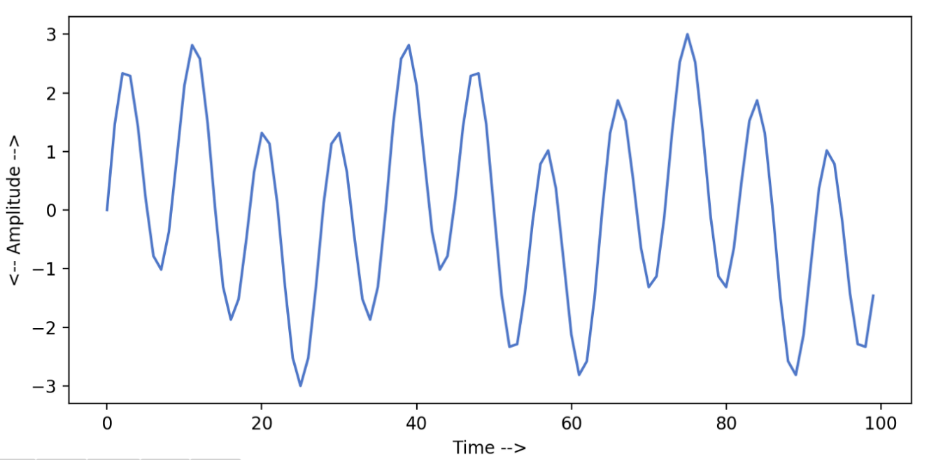

Backgrounds

Recall that for a sinusoid, we have three key parameters to specify a wave

\[g(t) = A \sin(2 \pi ft + \phi) = A \sin (\omega t + \phi)\]where:

-

$A, \phi , f$ are amplitude, phase, freqency respectively.

-

essentially, Fourier transform gets any function to a sum of those waves by telling us what would be the $A_i, \phi_i, f_i$ for each component (technically, Fourier transform is a function when given frequency $f_i$, what will be the amplitude and phase $A_i, \phi_i$)

where frequency is encoded in the $x$-axis

- for instance, according to the graph, the decomposition to $f=0$ has $A\approx 55$ and $\phi = 0$

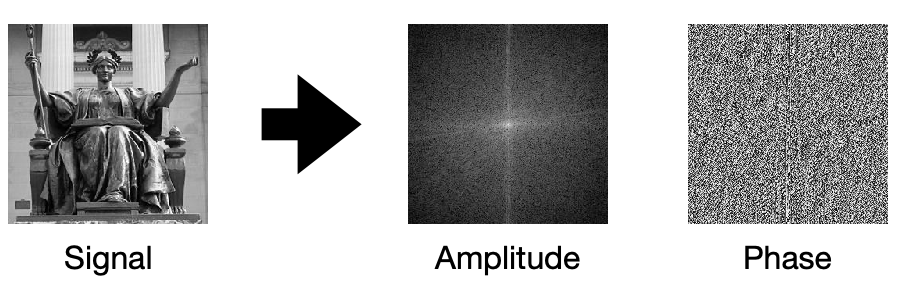

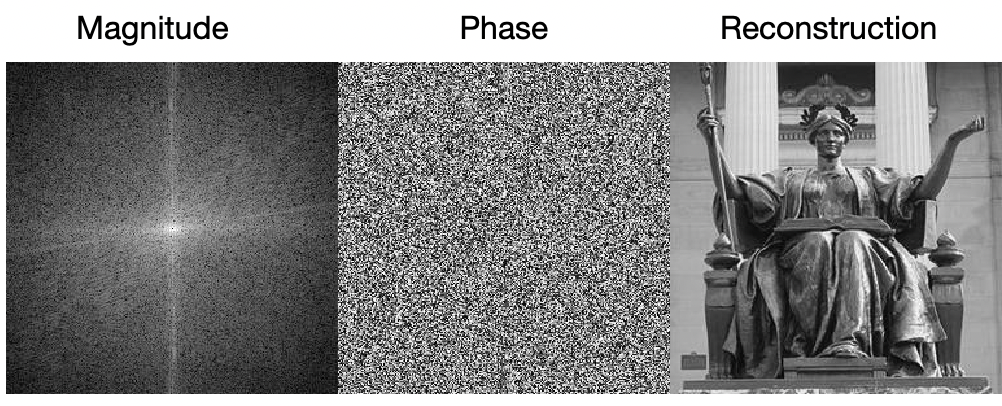

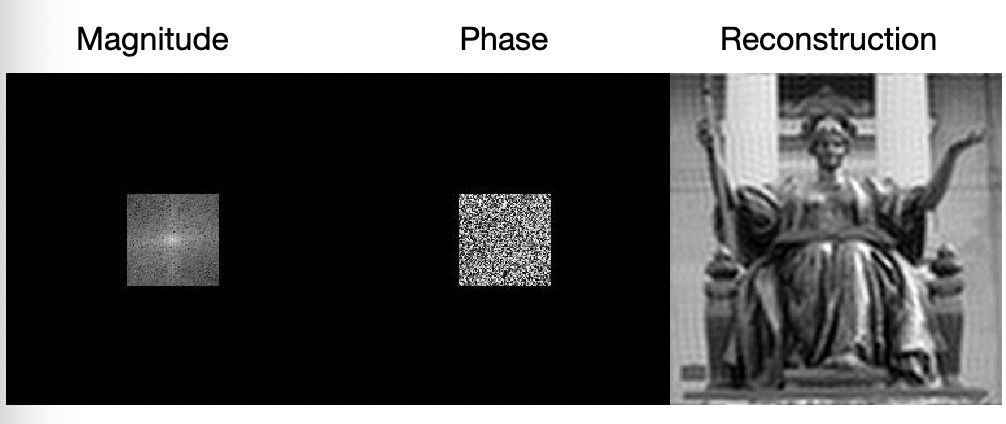

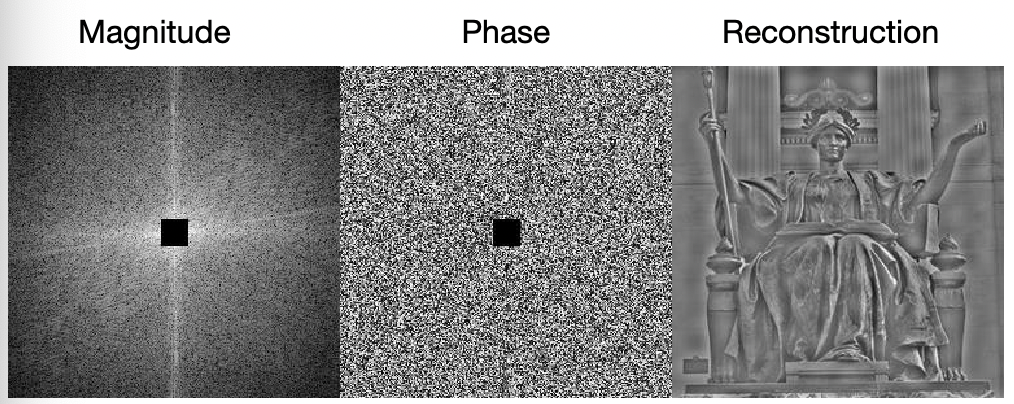

Now, in 2D,

where since our image is in 2D, we will have two axis/two waves: horizontal frequency and vertical frequency.

- typically the coordinate $(0,0)$ will be in the center of the image

- for amplitude graph: black means $0$, white is large

- for phase graph: grey means 0, black means negative, and white means large

- note that fourier series by default generates an infinite amount of waves, yet here we do cut off at certain frequencies

- all those waves are fully specified by $A_i , \phi_i, f_i$, which are all available on the two plots!

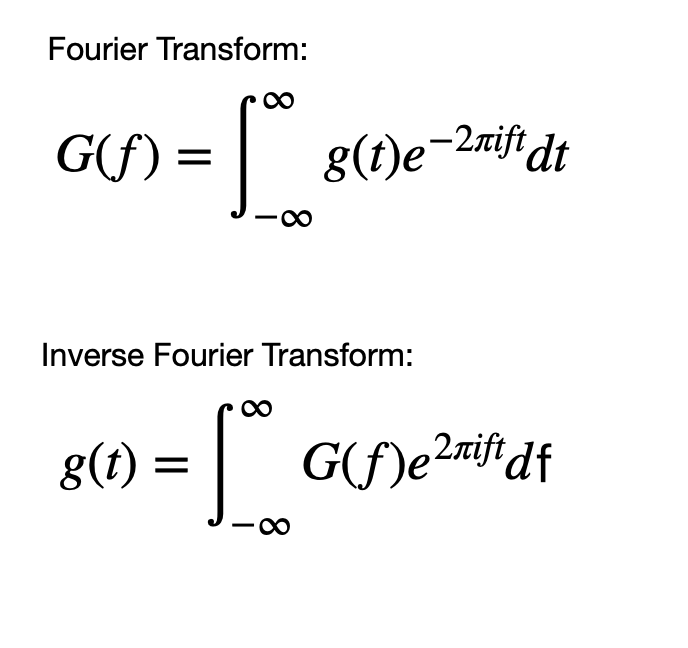

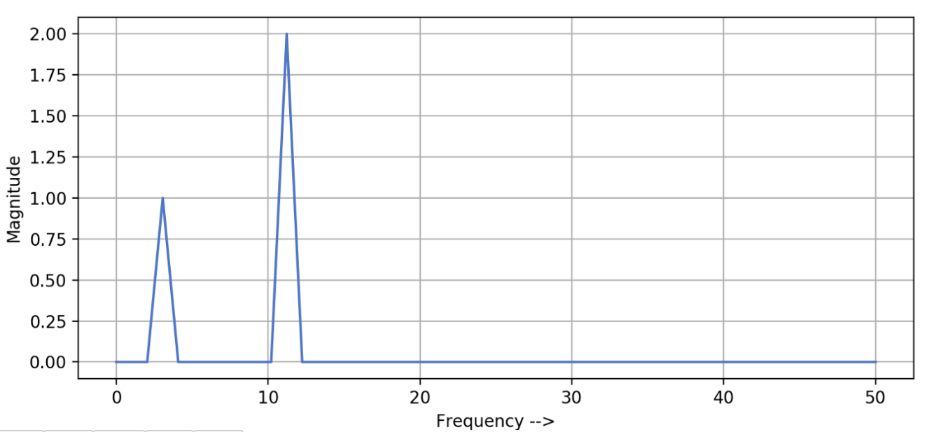

Fourier Transform

Aim: the goal of this is to find a procedure, that

- given some signal wave $g(t)$, or $g(x,y)$ if you think of images, and a frequency $f$ of interest

- return $A_f, \phi_f$ being the amplitude and phrase corresponding to that $f$

so essentially tells you the $f$-th term in the fourier series.

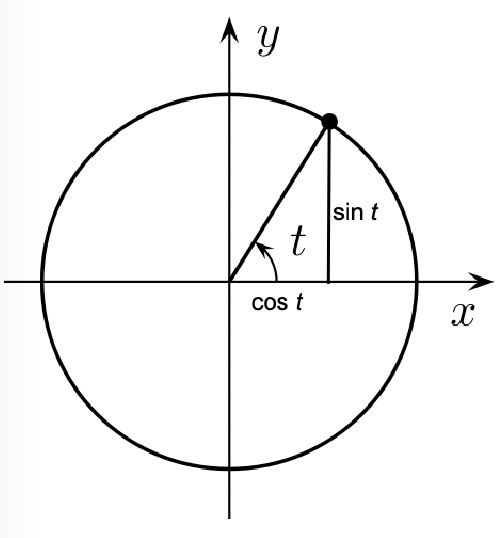

Recall that we can we know

\[e^{ift} = \cos (ft) + i \sin (ft)\]Then, if we increase $t$, we will basically find a unit circle

where the vertical component will be $i$. So this could represent a wave!

- e.g. increasing amplitude means a larger circle

Then, we can consider $Ae^{ift}$ with different $A$ and frequency $f$:

where:

- essentially we can imagine the sinusoidal as unit circles but with different amplitude and different frequency (time taken to complete an entire revolution)

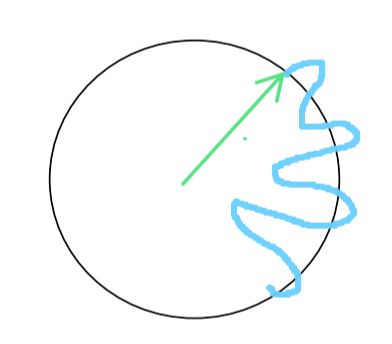

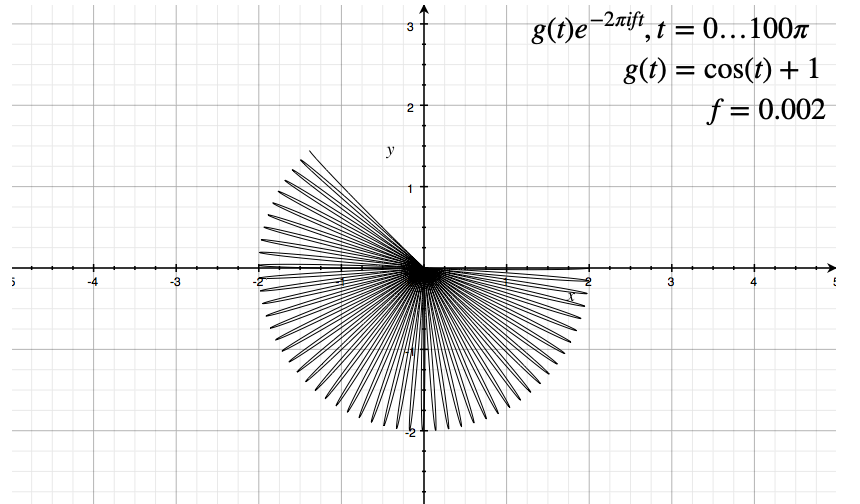

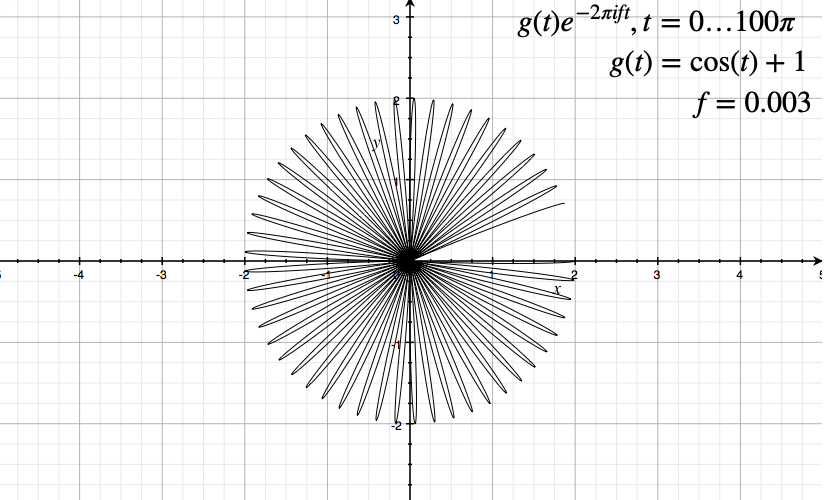

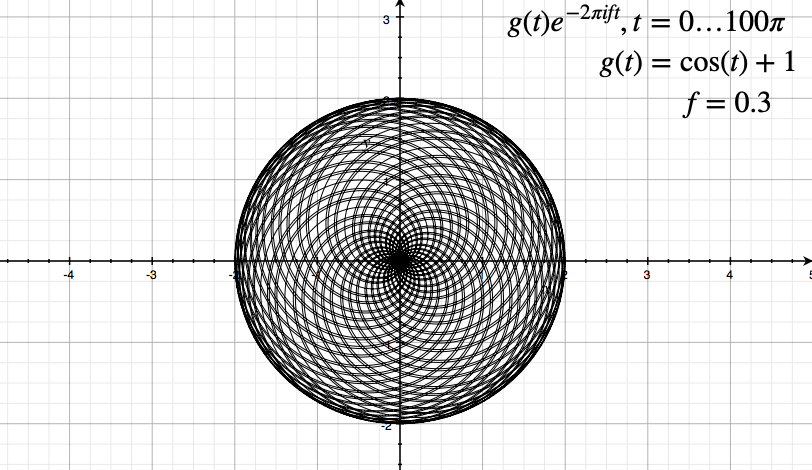

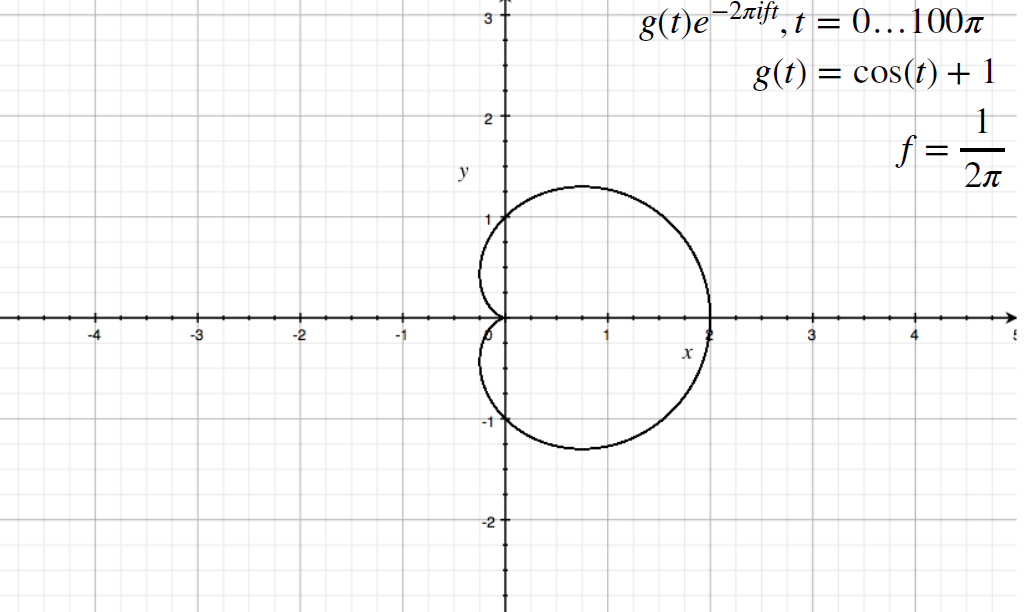

Now, consider that we are modulating the amplitude by the signal

\[g(t) e^{-2\pi ift}\]then essentially:

- while you are revolving the circle, you are “wrapping the original wave/signal $g(t)$” around it

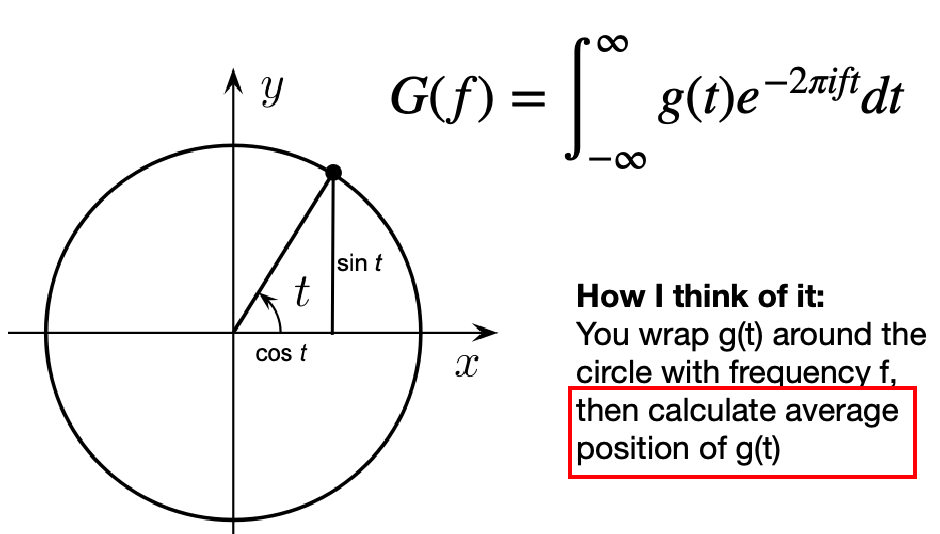

Then, fourier transform does:

\[G(f) = \int_{\infty}^{\infty}g(t)e^{-2\pi i ft}dt\]which is basically can be thought as calculating the average position of $g(t)$, when given some frequency $f$

notice that:

- the function output is in frequency domain, where as the original signal is in $t$ domain

- with different frequency, the final shape/average position might be different (see below)

For Example

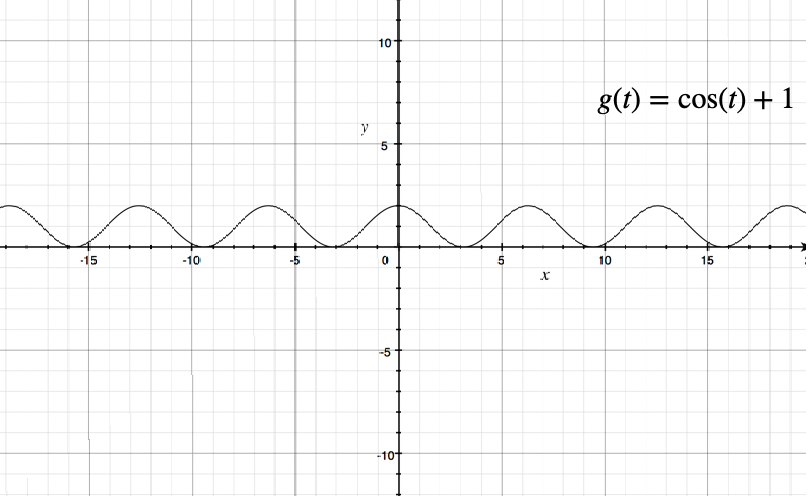

Consider the following original signal:

Then:

| Low Frequency | Slightly Higher Frequenct | |

|---|---|---|

|

|

|

where we notice that we only plotted for a finite amount of time, instead of $t \in [-\infty, \infty]$

-

since $g(t)=\cos(t)+1$, there are time when amplitude $g(t) \to 0$. Hence they go back to the origin on the graph.

-

for a different frequency, we have a finite amount revolved as time is finite here

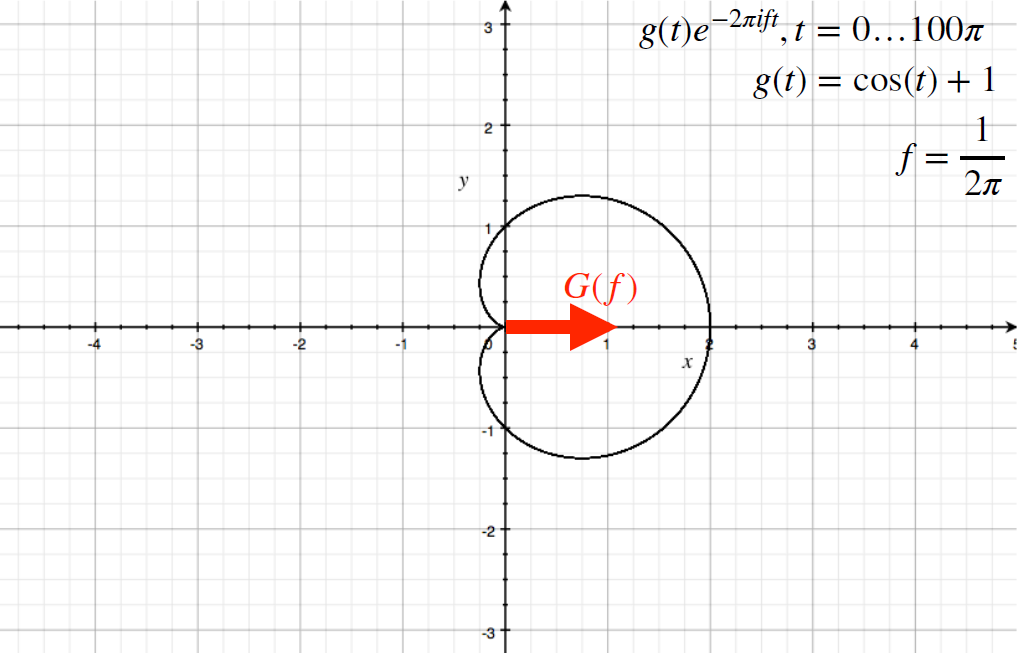

Then, if we consider the average, i.e. the center of mass, the following images

| Original | Computing $G(f)$ |

|---|---|

|

|

which then means $G[f=1 /(2\pi)]$ spits out approximately $1 + 0i$.

-

notice the output is always a Complex number.

- then, since we can do this for many different frequencies, we have a function of frequency $G(f)$

- it can be shown that the “angle” of the complex vector will always be $0$ if there is no phase.

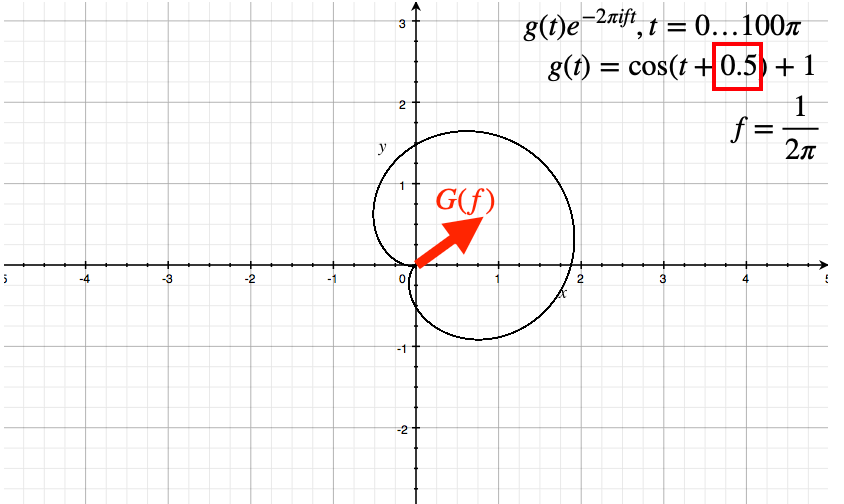

This means that If I do a phase shift, then essentially I start the wave at another position. Hence this results in the following:

where we have rotated the circle

- so the angle of the vector has information about the phase

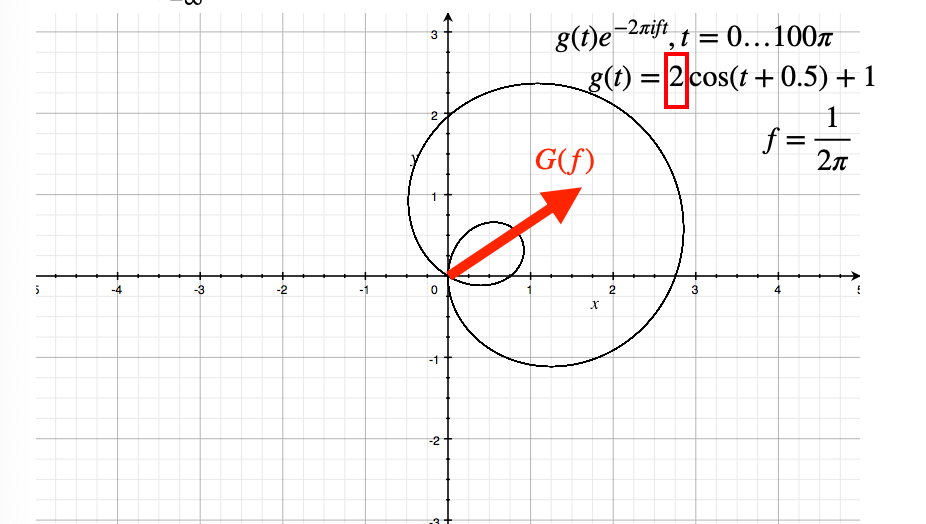

where the circle is a bit bigger.

-

so the magnitude of the vector has information about the amplitude

-

so if an amplitude of zero, this means that that frequency wave is not contributing to the $G(f)$

Then, the general formula would be:

\[G(f) = \int_{\infty}^{\infty}g(t)e^{-2\pi i ft}dt =\mathbb{R}[G(f)] + i\cdot \mathbb{I}[G(f)]\]has a real and an imaginary part, hence:

\[\begin{cases} \sqrt{\mathbb{R}[G(f)]^2 + \mathbb{I}[G(f)]^2}, & \text{amplitude}\\ \tan^{-1}(\mathbb{I}[G(f)] / \mathbb{R}[G(f)]), & \text{phase} \end{cases}\]so a single complex number output of $G(f)$ has all the information about amplitude and phase!

Note

In reality, you will have $g(t)$ taking a discrete domain (as you will see, essentially $g(x)\to g(x)$ if we think about position in the image). The number of frequencies you need to describe it will be the same as the number “positions” you have in your discrete $g(t)$, i.e. size of the domain.

Finally, for the 1D case:

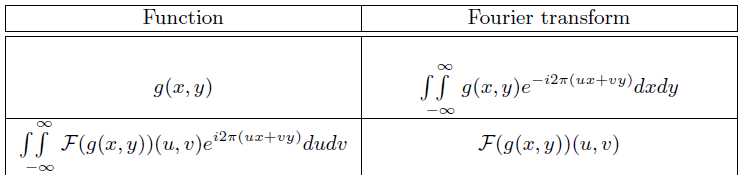

Then for a higher dimension, you will just be having multiple integrals over $dt_x dt_y$ for instance:

where:

- $(x,y)$ would be the position in your image, and $u,v$ would be horizontal and vertical frequencies

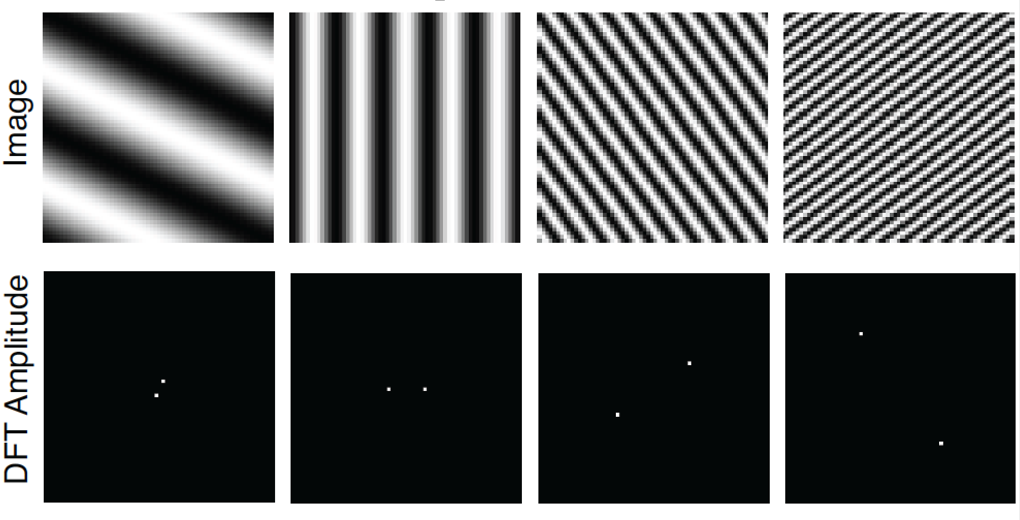

For Example

where this means:

- for the first column: the only waves that are “contributing” are the low frequency waves (because only those have non-zero amplitude/white dots). There is a tilt because the original wave in the image $g(x,y)$ has a phase.

- the higher the frequency in the image, we therefore have a larger magnitude of the vector of $G(f)$, hence farther away the activated points in the frequency domain

Note

For any signals that takes only takes real component, the amplitude will be symmetrical.

- an easy way to think about is that you will need to “cancel out” the imaginary component, as images are real

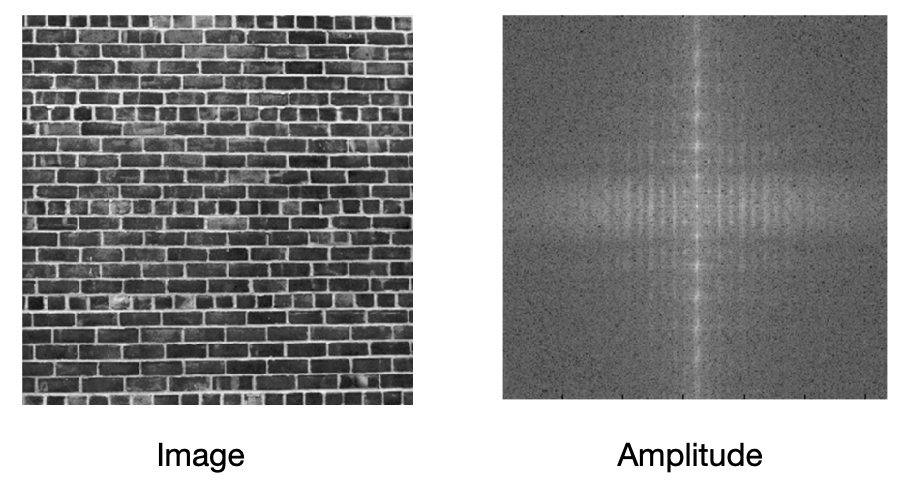

Another real life example would be:

where:

- recall that horizontal and vertical component of the amplitude graph are frequencies

- in the image, horizontal sinusoids will have a low frequency component being more dominant, because the horizontal part of the image have rather slow “changes”. Hence, we have mostly low horizonal frequency activated in the $G(f)$

- in the image, vertical sinusoids will need high frequency component, since the change/sinusoids in the original image vertically is fast. Therefore, we see high vertical frequency activated in the $G(f)$

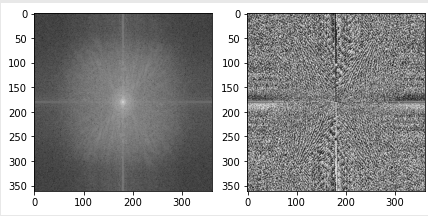

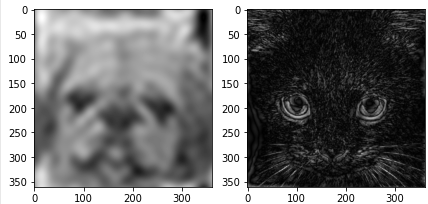

In code, this is how it is done:

cat_fft = np.fft.fftshift(np.fft.fft2(cat))

dog_fft = np.fft.fftshift(np.fft.fft2(dog))

# Visualize the magnitude and phase of cat_fft. This is a complex number, so we visualize

# the magnitude and angle of the complex number.

# Curious fact: most of the information for natural images is stored in the phase (angle).

f, axarr = plt.subplots(1,2)

axarr[0].imshow(np.log(np.abs(cat_fft)), cmap='gray')

axarr[1].imshow(np.angle(cat_fft), cmap='gray')

For Example: Blurring and Edge detection

Originally, we would have the image as:

Then if we remove the high frequency

notice that:

- this is the same effect as blurring the photo (we see why convolving with Gaussian filter is the same as this soon)

Then, if we remove low frequency

note that:

- this is the same as edge detection

In code, this is how it is done:

# we can create a low mask utlizing outer product

filter = np.zeros_like(cat_fft)

w, h = filter.shape

box_width = 10

filter[w//2-box_width:w//2+box_width+1, h//2-box_width:h//2+box_width+1] = 1

# high and low mask filter

high_mask = 1 - filter

low_mask = filter

Then applying the filter to FFT version of the image

# filtering fft, elementwise dot

cat_fft_filtered = high_mask * cat_fft # cat_fft = np.fft.fftshift(np.fft.fft2(cat))

dog_fft_filtered = low_mask * dog_fft

cat_filtered = np.abs(np.fft.ifft2(np.fft.ifftshift(cat_fft_filtered))) # shift back and then transform

dog_filtered = np.abs(np.fft.ifft2(np.fft.ifftshift(dog_fft_filtered)))

f, axarr = plt.subplots(1,2)

axarr[0].imshow(dog_filtered, cmap='gray')

axarr[1].imshow(cat_filtered, cmap='gray')

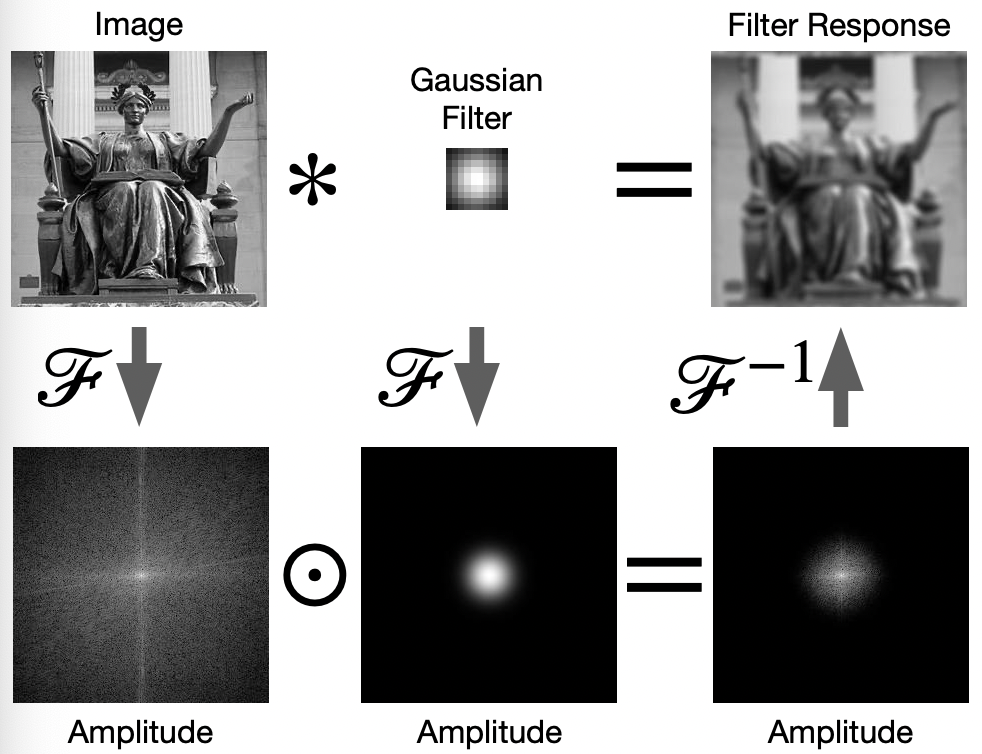

Convolution with FT

Now it turns out that:

Theorem

Convolution in $x,y$ space is element-wise multiplication in frequency space

\[g(x) * h(x) = \mathcal{F}^{-1}[\mathcal{F}[g(x)] \cdot \mathcal{F}[h(x)]]\]and convolution in frequency space is the same as element-wise multiplication in $x,y$ space:

\[\mathcal{F}[g(x)] * \mathcal{F}[h(x)] = \mathcal{F}[g(x) \cdot h(x)]\]where the 2D version of this is analogous.

This means you could speed up convolution operation since element-wise multiplication can be done fast (technically this also depends on the speed you Fourier transforms)

- if your filter is huge, then doing Fourier Transformation and element-wise dot product is fast

- e.g. if your image is size $n \times m$, and filter size $n \times m$, with padding, you will get $O(n^2m^2)$ if doing convolution

- if your filter is small, then convolution in space would be faster

- as Fourier transform takes time

- This is also why we mentioned to treat essentially an image/filter as a function! (i.e. $g(x), h(x)$ shown in the text)

For instance:

notice that:

-

in reality, applying Fourier Transform returns your a matrix of complex numbers (i.e. the vector of $G(f)$). So technically you are doing element-wise multiplication for those complex numbers

-

but for visualization, let us only consider the amplitude of the returned complex vectors in $G(f)$. (so if that is zero, than means the particular frequency wave is not useful) Then, element-wise multiplication with a Gaussian filter is basically removing high frequency details.

- note that FT of Gaussian is still a Gaussian

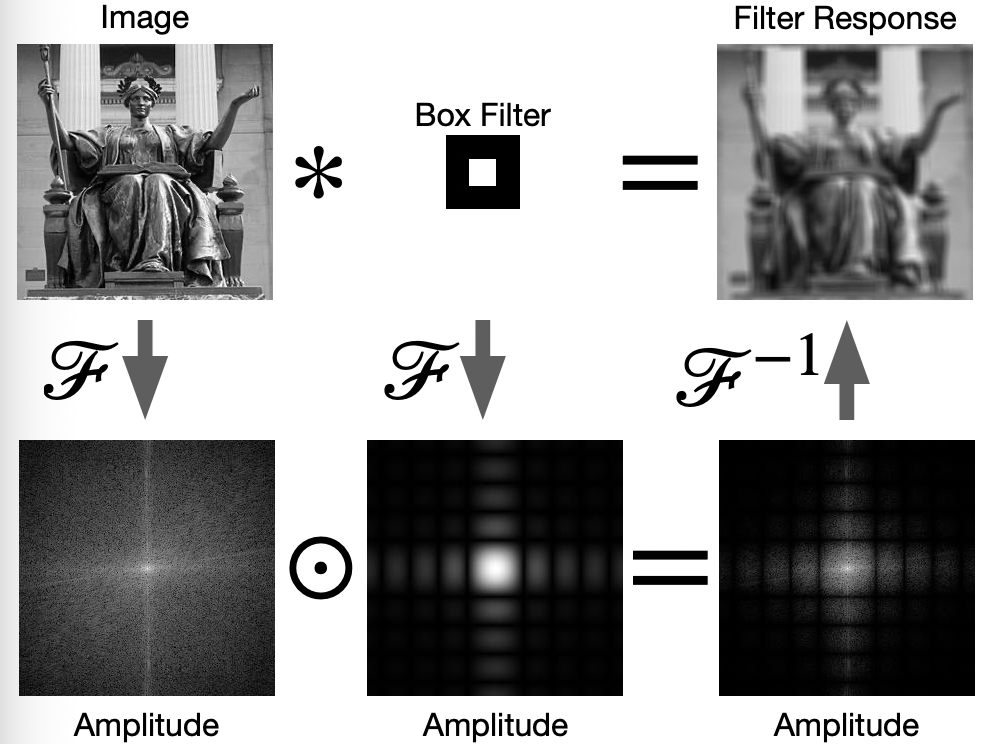

For Example

Now, it makes sense that why box filters have the following effect

which is suboptimal as compared to Gaussian filter. This is because when we do Fourier transform for box wave:

we had high frequency terms involved!

Therefore, the FT of box filter looks like:

which included some high frequency noises.

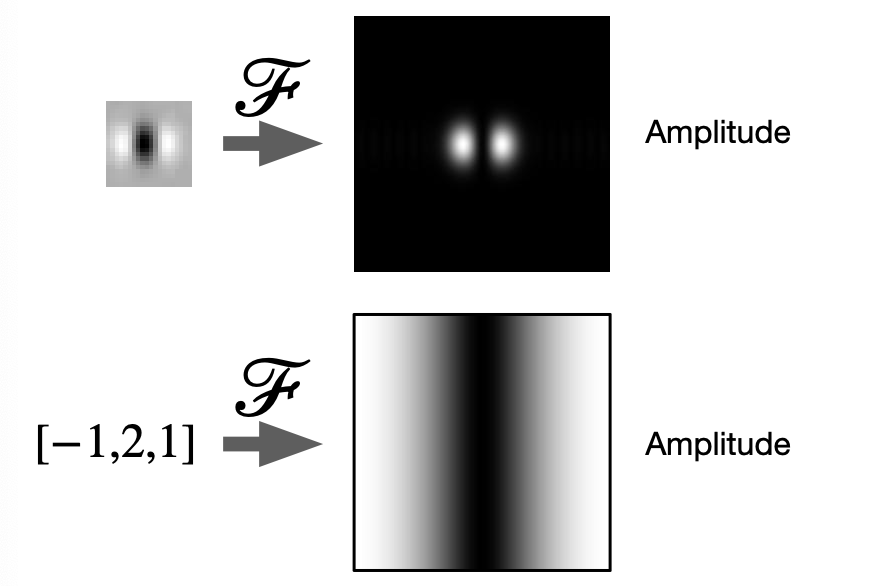

For Example: Laplacian Filter

In reality, we often use the following instead of $[-1,2,1]^T$ as Laplacian filter:

This is because, if we consider the Fourier transform

where we notice that

-

just using $[-1,2,1]^T$ would have included lots of high frequency noise, as shown on the bottom

-

but we want to remove both details and those noise to leave edges. Hence:

- involve a Gaussian blurring = removing high frequency

- perform $[-1,2,1]^T$ filter to remove low frequency details

The end product is what we see on the top, which is the commonly used Laplacian filter

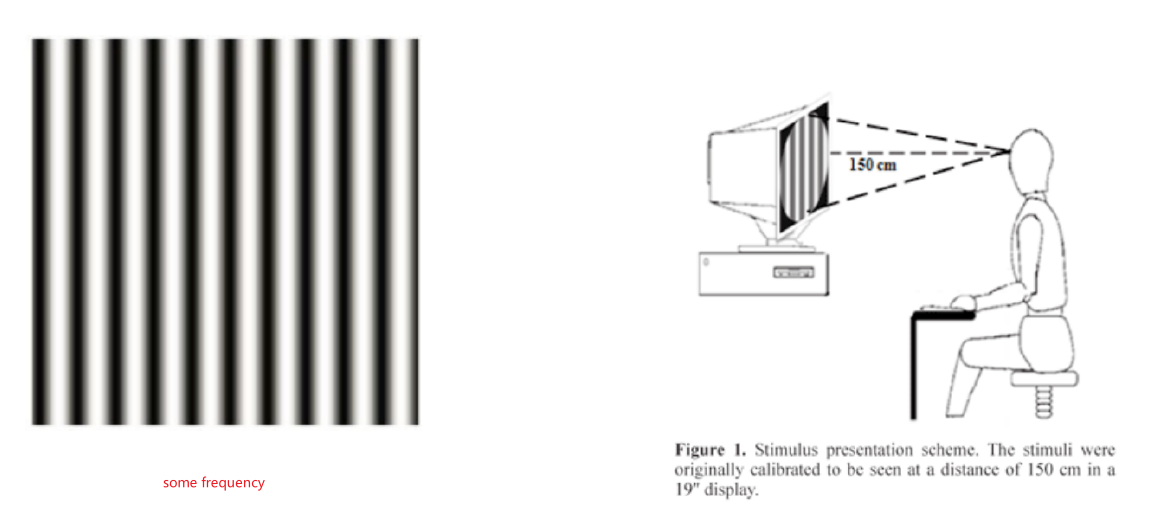

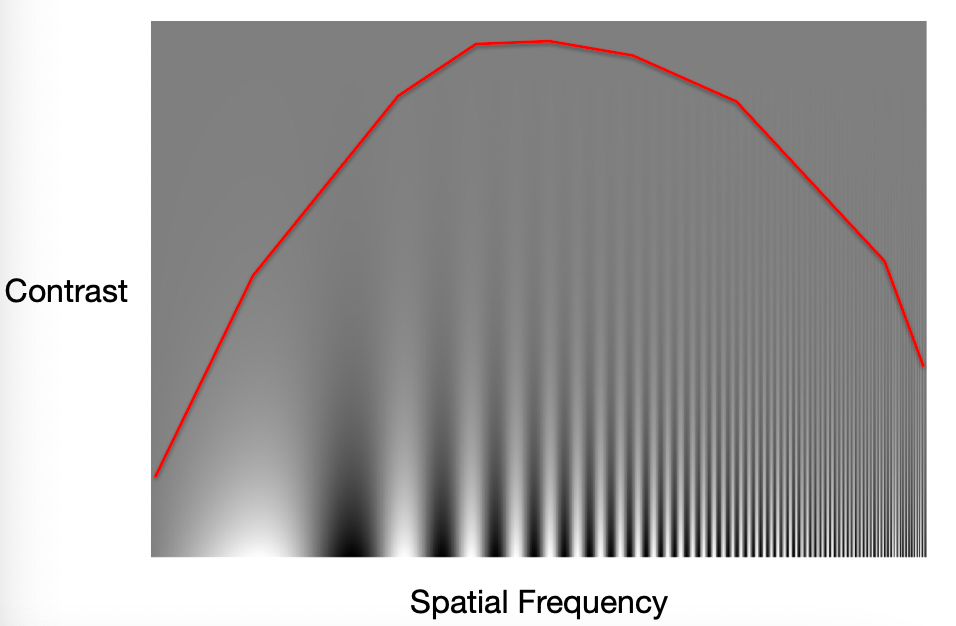

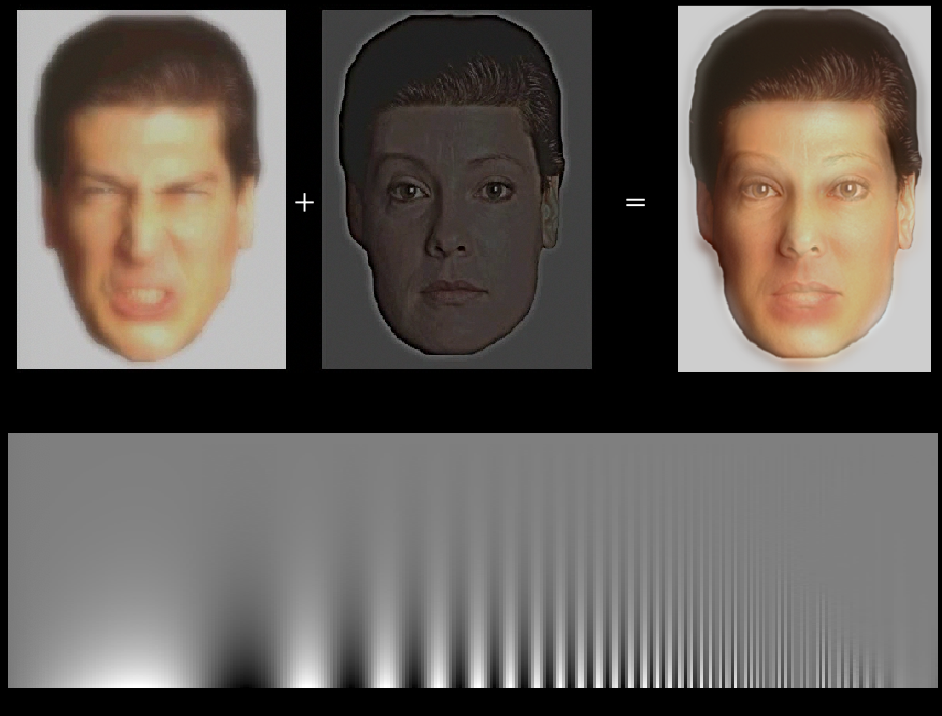

Hybrid Image

This is more of a interesting application of Fourier transform. Consider the question: What frequency waves can we see from a monitor if you are exactly 150cm away?

where the key idea is that you will not be able to perceive certain frequencies well.

The result shows that:

hence, any wave with configuration above the red line, people cannot see the wave/they see just grey stuff

-

contrast is brightness/amplitude

-

then maybe you can hide data above the red line

For example:

Consider keeping only low frequency data of a man’s face with high frequency data of a women’s face:

so that:

- depend on how far away you are, the red line is at different position.

- when you are far, the high frequency details you will not be able to discern. But when you are close, you will be able to see the high frequency

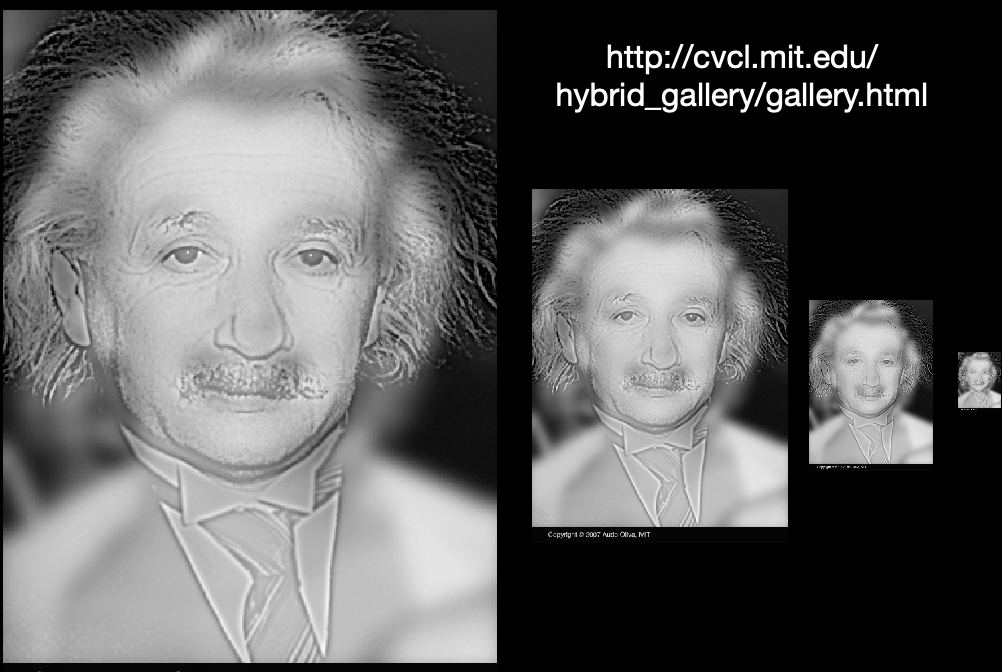

Then another example:

where Einstein will be encoded in the high frequency data.

- here we scaled them so you can experience see the image “from afar”

Machine Learning

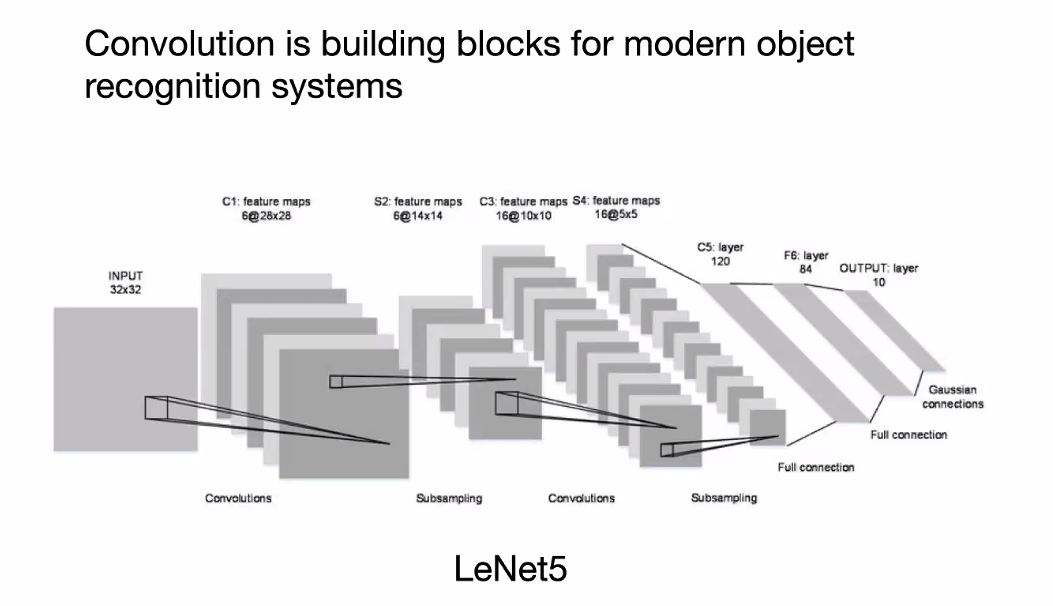

If you take this class 10 years ago, you would be majorly doing maths to design filters, such that properties such as shift invariance is satified. However, it turns out that those filters/kernels can be learnt by ML/DL architectures.

- specifty the constraints, such as Toeplitz matrix, then let the machine learn it

Regression Review

Checkout the ML notes on reviewing the basics of regression

\[\hat{y}=f(x;\theta)\]where:

- $\theta$ willl be our parameters to learn

- the difference between regression/classification is basically the loss you are trying to assign

Objective function is essentially what drives the algorithm to update the parameters:

\[\min \mathcal{L}(\hat{y},y)\]Some notes you should read on:

- Linear Regression and Logistic Regression

- checkout how to prove that XOR problem is not solvable by linear models

- Convolutional Neural Network

- Backpropagation

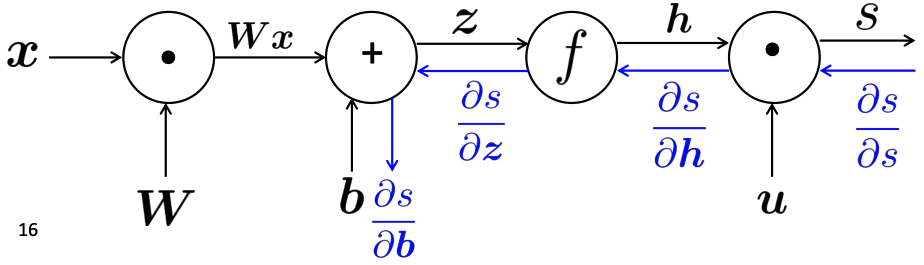

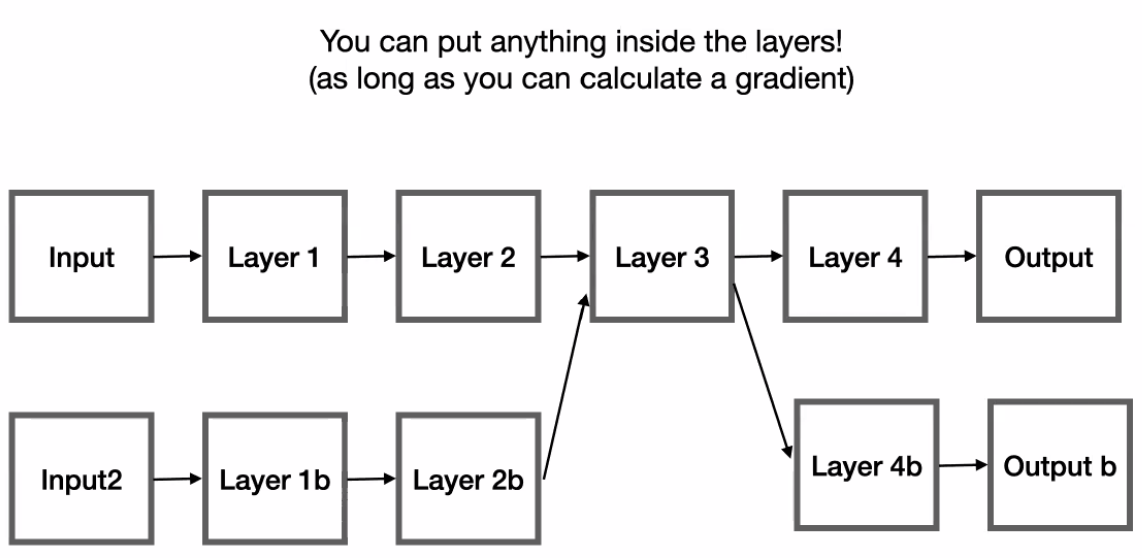

Some key take-aways:

-

Essentially we are having computation graphs

then your network architecture eventually is about what operation you want for each block.

Then, essentially you will have a loss that is a nested function:

\[\mathcal{L} = f(W^3f(W^2f(W^1x)))\]then I ask you to compute $\partial L / \partial W^1$? You realize that computing this needs:

- $\partial L / \partial W^3$

- $\partial L / \partial W^2$

Hence you realize that you can

- compute everything in one go by backpropagation.

- you have a dependency tree, where the latest layer $\partial L / \partial W^3$ will get used by all other children nodes. So it makes sense to do backpropagation.

Note:

A good trick you can use to compute derivative would be the following. Consider:

\[y = W^{(2)}h+b^{(2)}\\ L = \frac{1}{2}||y-t||^2\]And we need $dL/dh$:

-

consider scalar derivatives:

\[\frac{dL}{dh} = \frac{dL}{dy}\frac{dy}{dh} = \frac{dL}{dy}W^{(2)}\] -

Convert this to vector and check dimension:

\[\frac{dL}{dh} \to \nabla_h L\]hence:

\[\nabla_hL = (\nabla_y L) W^{(2)},\quad \mathbb{R}^{|h| \times 1}=\mathbb{R}^{|y| \times 1}\times \mathbb{R}^{|y| \times h}\] -

Correct the dimension to:

\[\mathbb{R}^{|h| \times 1}=\mathbb{R}^{h \times|y|}\times \mathbb{R}^{|y| \times 1}\]which means:

\[\nabla_h L = W^{(2)^T}(\nabla_y L)\]

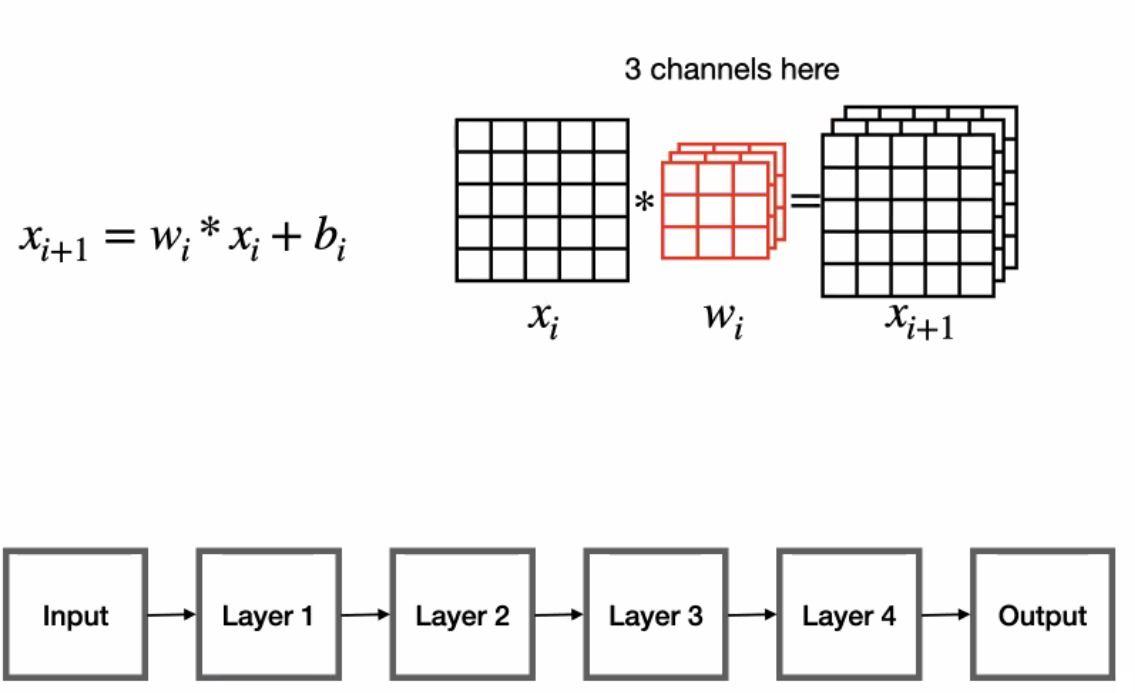

Convolution Layer Review

Review the CNN chapter of DL

-

Instead of linear layers that does $W^Tx + b$, consider doing convolution operation $*$:

Separated Compact Overview

then question is then, what is the gradient of this operation?

-

another frequently used layer is max-pooling. For instance, $2 \times 2$ with stride $2$ does:

why would you want to do this?

- e.g. when you are detecting cats in an image, and certain neurons get triggered, you can use max pooling to only focus on those activated values (easier for classification head as you ignore low value ones)

- cheap resize operation which can cut down the number of neurons/connections for further layers

- the gradient defined here would be:

- $1$ for the pixel that is the max

- $0$ otherwise.

-

batch normalization also very important

\[x_{i+1} = a_i \frac{x_i - \mathbb{E}[x_i]}{\text{Var}(x_i)} + b_i\]where:

- $a_i$, $b_i$ is the scaling and shift parameter

- this is called batch normalization as this operation will be applied the same way to the entire batch.

-

dropout: a layer where with some probability we output $0$

\[x_{i+1}^j = \begin{cases} x_{i+1}^j & \text{with probability $p$}\\ 0 & \text{otherwise} \end{cases}\]which is pretty helpful for preventing overfitting.

-

Softmax: we are doing some kind of max, but also making sure we can compute the gradient

\[x_{i+1}^j = \frac{\exp(x_i^j)}{\sum_k \exp(x_i^k)}\]which can also be interpreted as a probability distribution

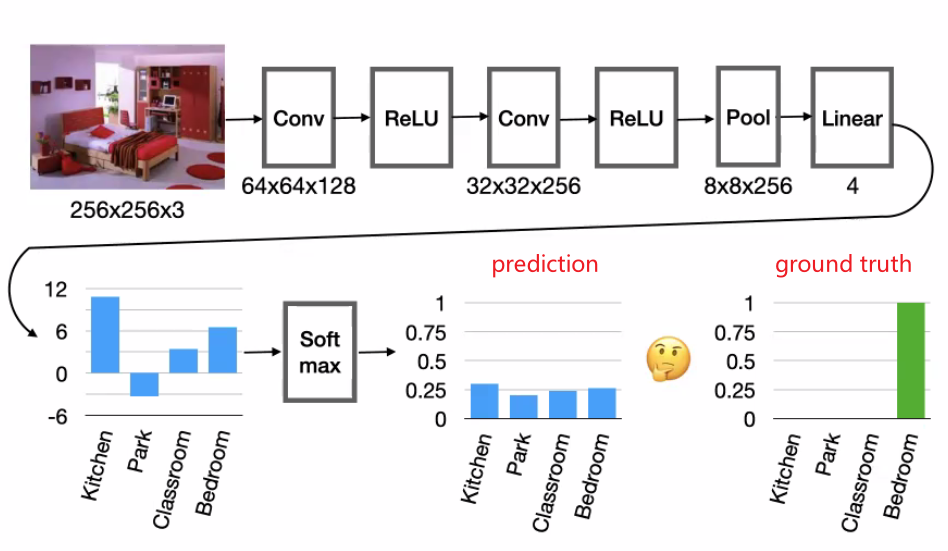

Then an example CNN looks like

then in order to train your network/take gradient, you would need to define $\mathcal{L}$.

-

typical loss function would be cross entropy loss: Average number of bits loss/needed to encode $y$ if the coding schema from $\hat{y}$ is used instead.

\[\mathcal{L}(y,\hat{y}) = - \sum_{i} y_i \log(\hat{y}_i)\] -

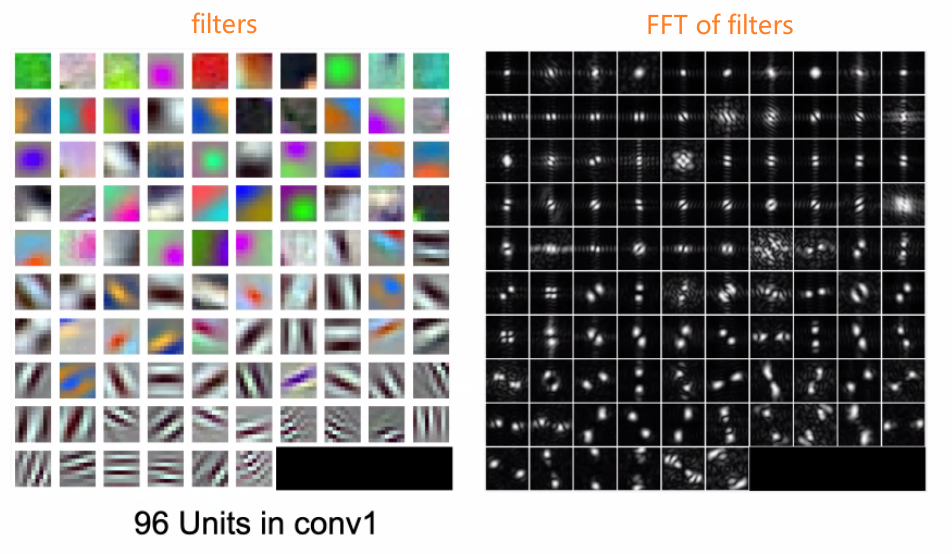

once done, you can also look at the filters/weights learnt and visualize them

where notice that:

- the top FFT means that we are concentrating on low frequency data

- the bottom FFT shows that they look at top frequency data

Note: Why ReLU?

\[\text{ReLU}(a)=\max(0,a),\quad a = Wx+b\]Then

- One major benefit is the reduced likelihood of the gradient to vanish. This arises when $a>0$. In this regime the gradient has a constant value. In contrast, the gradient of sigmoids becomes increasingly small as the absolute value of x increases. The constant gradient of ReLUs results in faster learning.

- The other benefit of ReLUs is sparsity. Sparsity arises when a≤0a≤0. The more such units that exist in a layer the more sparse the resulting representation. Sigmoids on the other hand are always likely to generate some non-zero value resulting in dense representations.

However, there is a Dying ReLU problem - if too many activations get below zero then most of the units(neurons) in network with ReLU will simply output zero, in other words, die and thereby prohibiting learning.

Width vs Depth

We consider:

- width = how many neurons? (i.e. size of weight matrix $W$)

- depth = how many layers? (i.e. how many of those weights to learn)

In reality, there is a interesting theoretical result which is rarely used in reality

Universal approximation theorem: With sufficiently wide network and just one (hidden) layer, you can approximate any continuous function with arbitrary approximation error.

The problem is that

-

it doesn’t specify “how wide we need”, which could be extremely wide hence not computational efficient.

-

but if we go deep, we can backprop and it is in general quite fast

Object Recognition

Why is it so hard for a machine to do object recognitions?

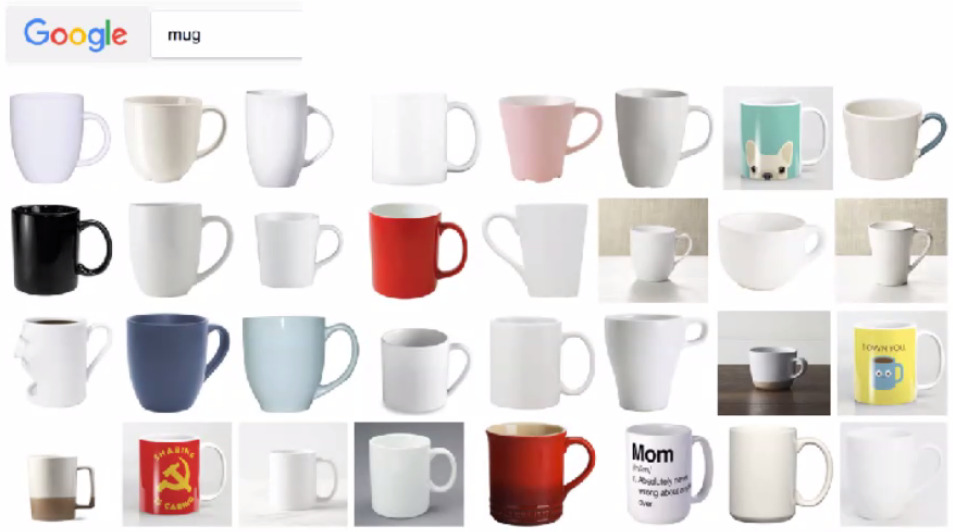

Canonical Perspective: the best and most easily recognized view of an object

- e.g. a perspective so that you can recognize this object very fast

An example would be:

where you should feel that the top row is easier to recognize

-

how can you train a network that works regardless of the perspective?

-

model will also learn the bias

e.g. all handles are almost all on the right!

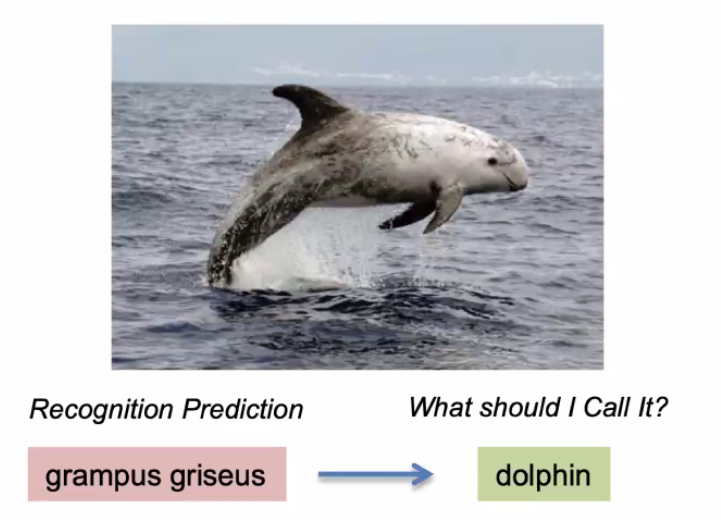

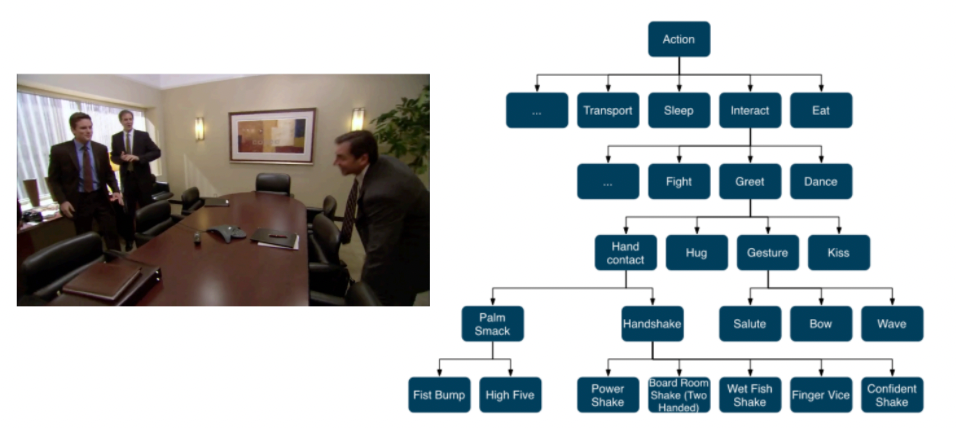

Entry Level Categories: The first category a human pick when classifying an object, among potentially a tree of categories that corresponds to an object.

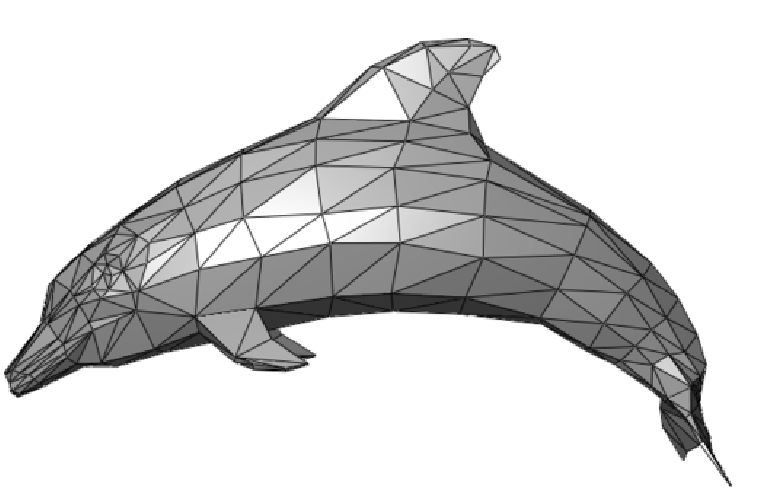

An example would be:

the question is, why did you think of this as a dolphin, but not saying it is “an animal”? A “living being”?

Other problems involve:

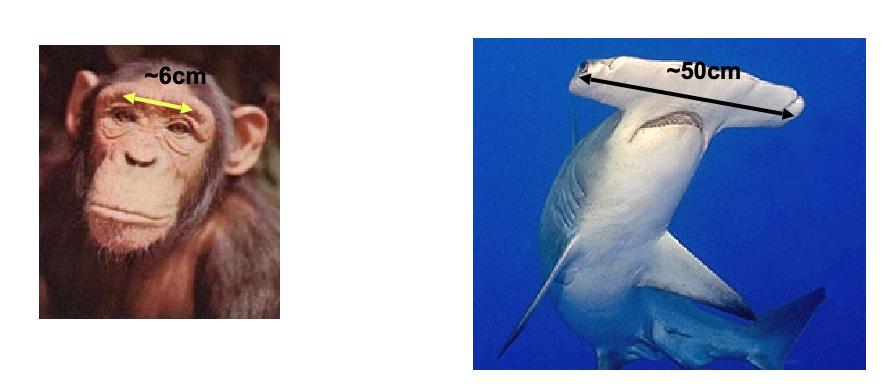

- scale problem

-

illumination problem

-

within-class variation

Note

- In reality, many massive models are trained with data coming from crowdsourcing: paying people around the world to label data (e.g. Amazon Mechanical Turk)

- one large image dataset commonly used is ImageNet - often used as a benchmark for testing your model performance.

Classical View of Categories

One big problem is “what is xxx”? Hot Dog or a Sandwich?

Some natural ways a human think about categorizing an object:

- A category is formed by defining properties

- Anything that matches all/enough of the properties is part of the category

- Anything else is outside of the category

But even this idea could vary, in different people/culture.

-

e.g. in some indigenous people in Australia, people have a single word for “Women, Fire, and Dangerous Things”

-

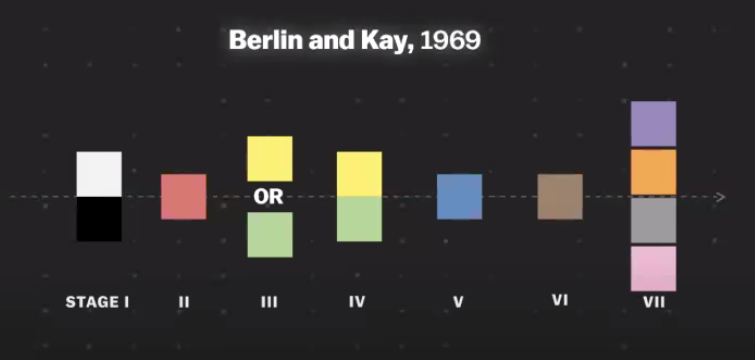

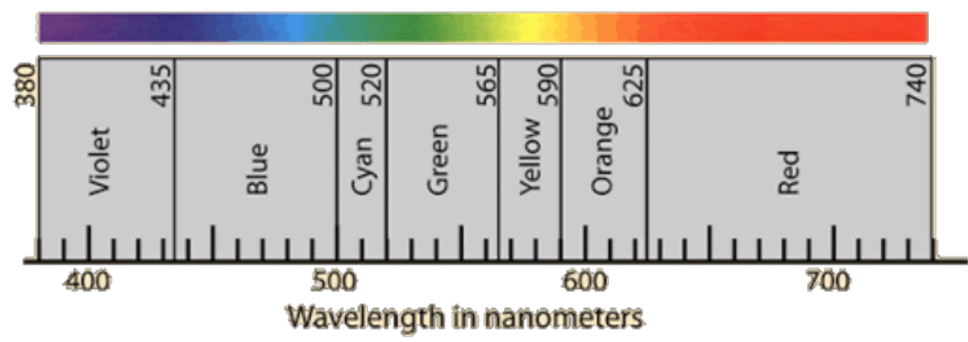

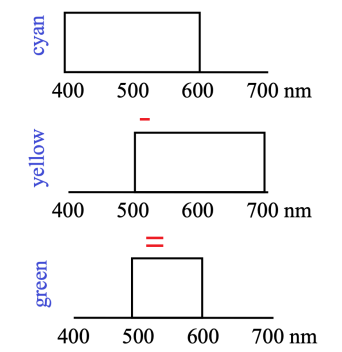

e.g. in a culture, what are the words you use to represent colors?

where:

- if you only have two words for color, which colors will you pick? Black and White

- for three colors, most people gives Red

- the take-away message is that you can think of things even if you don’t have language for it. Yet for machine models, we are categorizing objects based on language (i.e. language label for category)

Another way to define category would be:

Affordance: An object is defined by what action it affords

- e.g. what we can do with it

- e.g. a laptop is a laptop for us, but could be a chair for a pet

A theory of him is that when we see an object, we automatically think about affordance of it, i.e. what we can do with it.

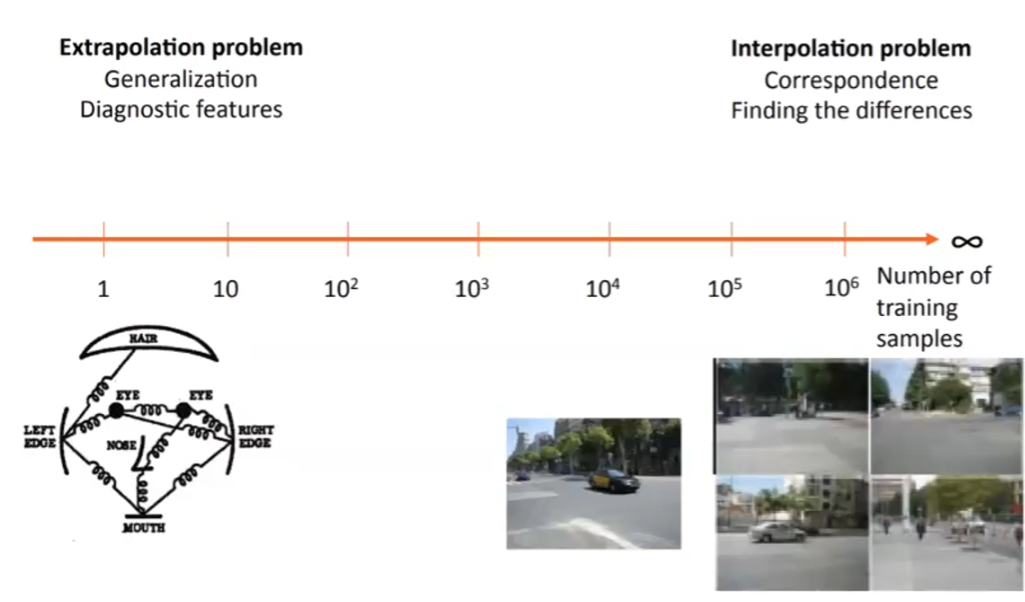

Two Extremes of Vision

In reality, we are always dealing with either of the two occasions:

- we don’t have much data, we need extrapolation to predict things

- we have much data, we need to interpolate and find differences between existing objects

where:

- the latter end of the spectrum would be captured more by NN types of model, which tends to be poor at generalization, so we care a lot of few-shot training/zero-shot training

- for huge training dataset, one reason for test accuracy to be high is that the training dataset distribution does model the true distribution, hence “overfitting” will not really damage performance.

Exemplar SVM

In reality approaches that uses big data to do basically lookup function for classification.

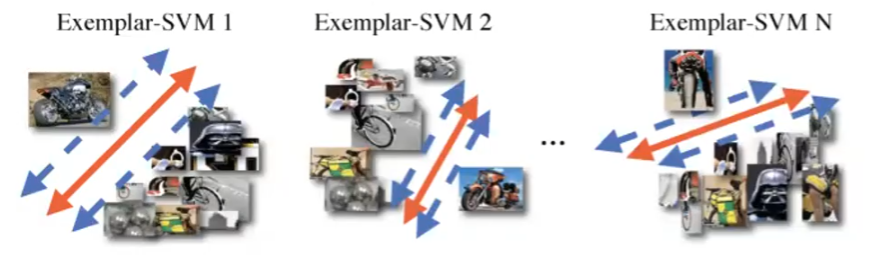

One example is the Exemplar-SVM

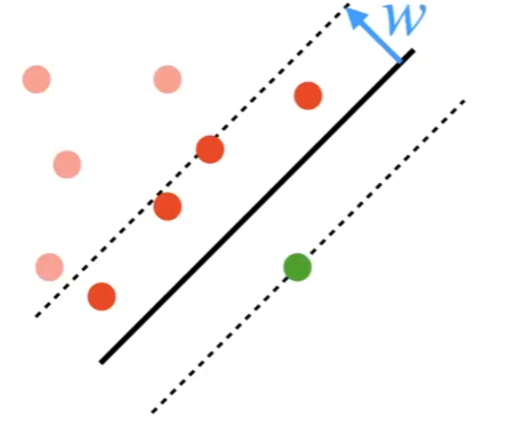

this idea can be seen as a new way to do classification. For example data in the training set, train a SVM where that single data point is a positive example, whereas all the others are negative. Graphically:

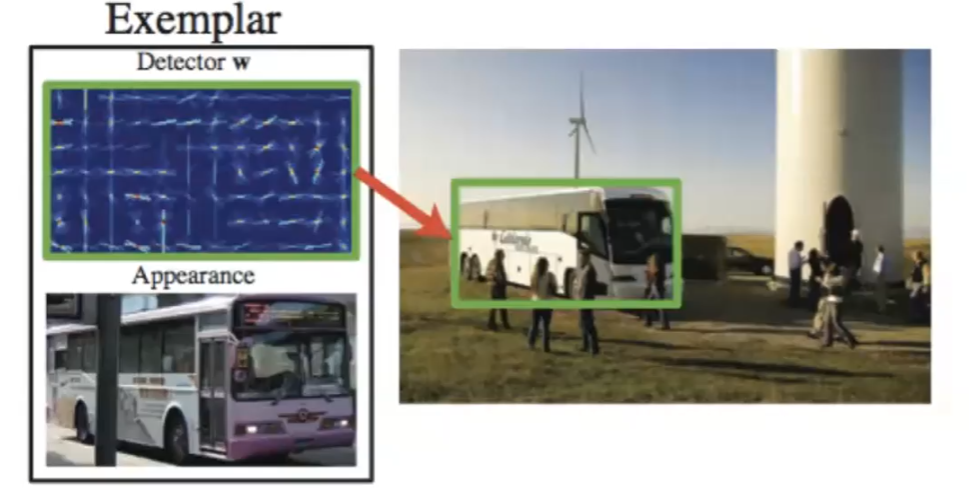

Therefore, you learn $N$ SVM, if there are $N$ data points. With this, when you are classifying an input $x$, all you need to do is to ask is: to which of the $N$ data point is it most similar to w.r.t the SVM? (hence it is like a k-NN). Then, when giving an image, you do:

- for each possible window in an image

- try all the $N$-SVM and pick out the SVM that fires the most (hence it is like a lookup table)

- Since each SVM trained corresponds to an object, this can be used for object recognition

Graphically

where notice that:

- since SVM gives some degree of extrapolation/robustness, it works even if the bus has a different color.

This works essentially based on the idea that, instead of definition what is a car, we consider what is this object similar to (something we already know)?

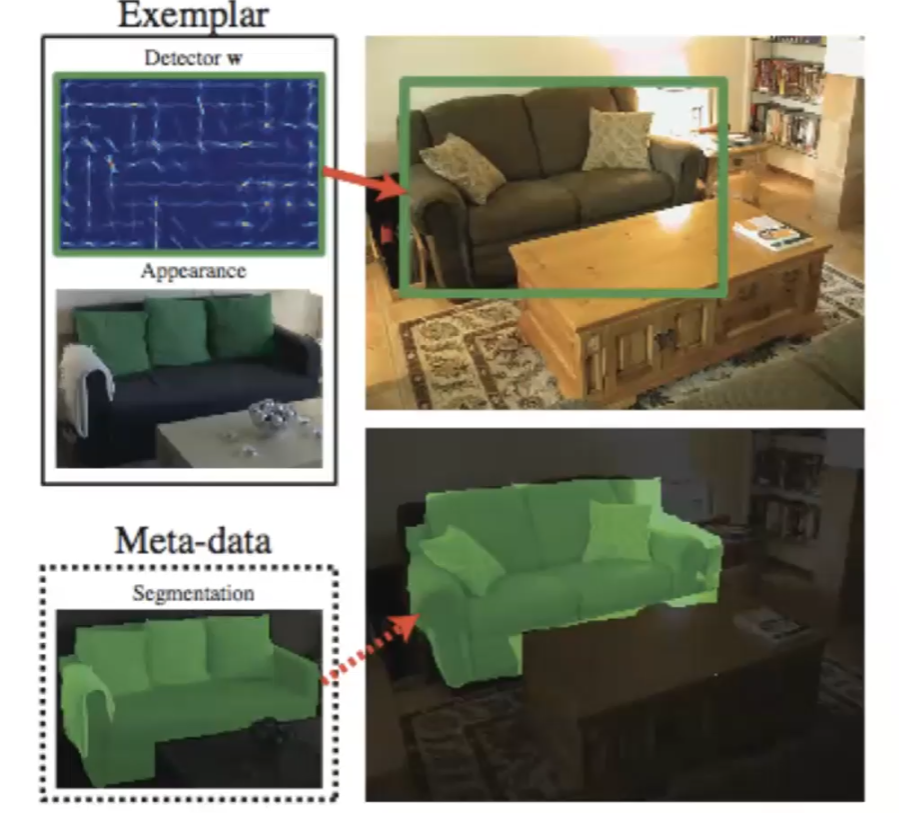

This setup in the end can also do segmentation and occlusion, just because there are many repetitions in our real world.

where the above would be an example of segmentation

What might not work:

- there is a view-point bias for photos, so that technically if you change the view point, the SVM might not work. However, again, assuming we have huge data, there could be essentially many images taken from many viewpoints. Then it still works.

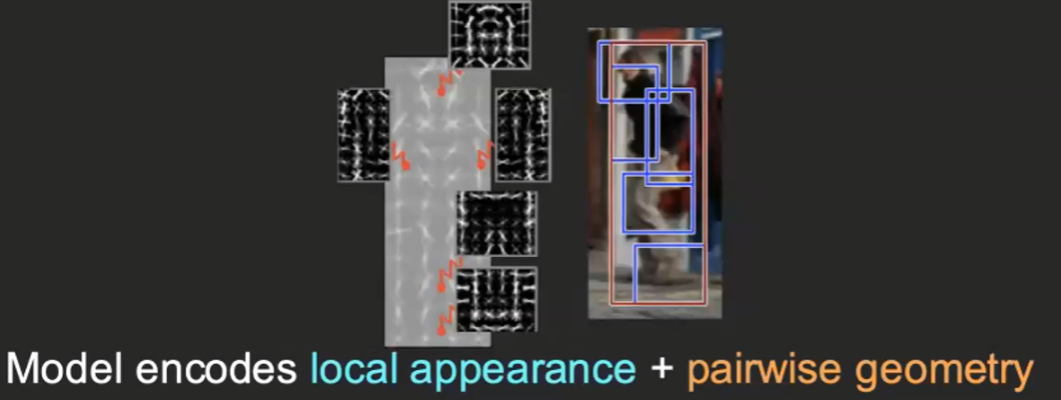

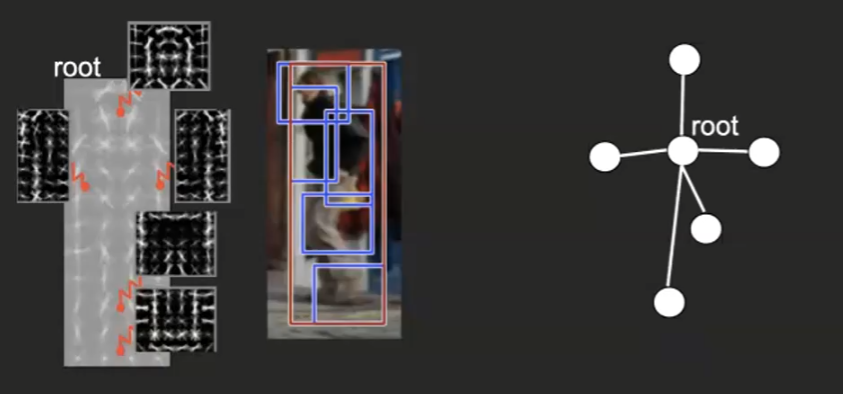

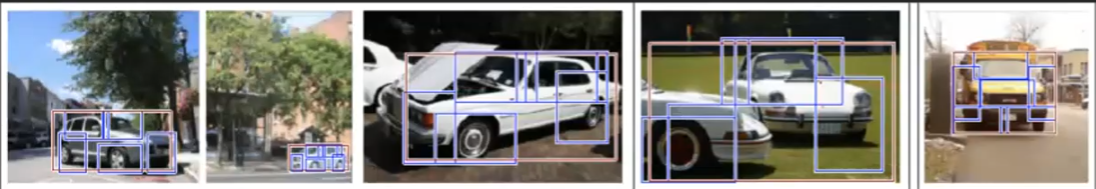

Deformable Part Models

This idea is then to learn each component of the objects + learn the connections. This would work extremely well at detecting poses, for instance, where all we changed is the connection between components of the object (human).

Specifically, you would build a tree that connects the

where:

- nodes encode the component we recognized, e.g. the

rootwould be the torso, and etc. - edges encode the relationship we found, e.g. relative relationship between leg and torso.

Therefore, as it can recognize individual parts + connections, it can work with different view points.

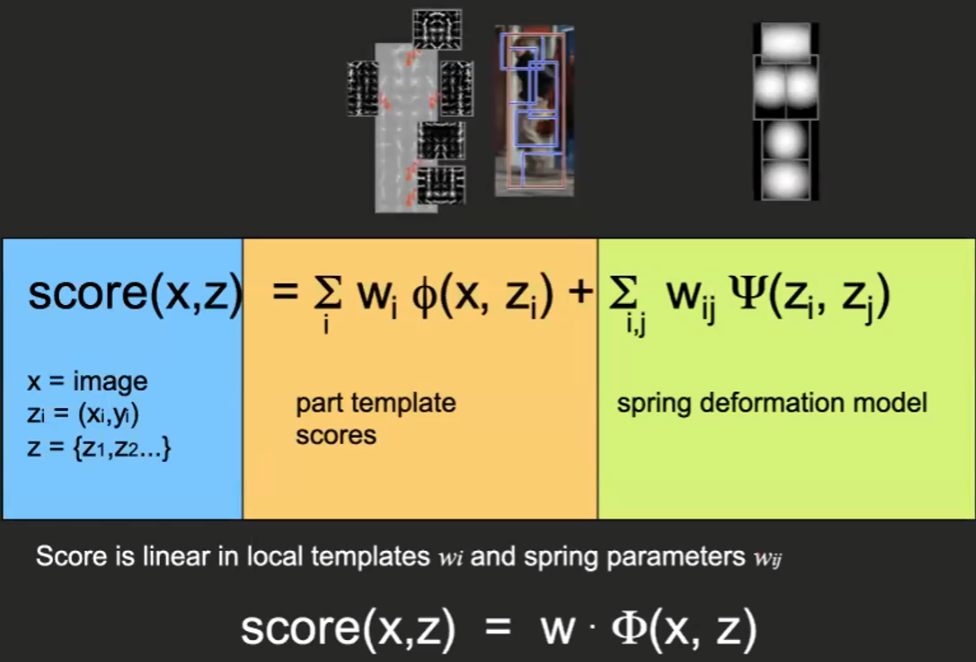

Specifically, this model does the following as the objective for similarity:

where:

-

$z_i$ is the location of the different parts/components

-

part template refers the score for the position nodes w.r.t the large image

-

deformation model refers to the score for the edges w.r.t the pair of node, e.g. answering the question: what is the score if a leg is below a torso?

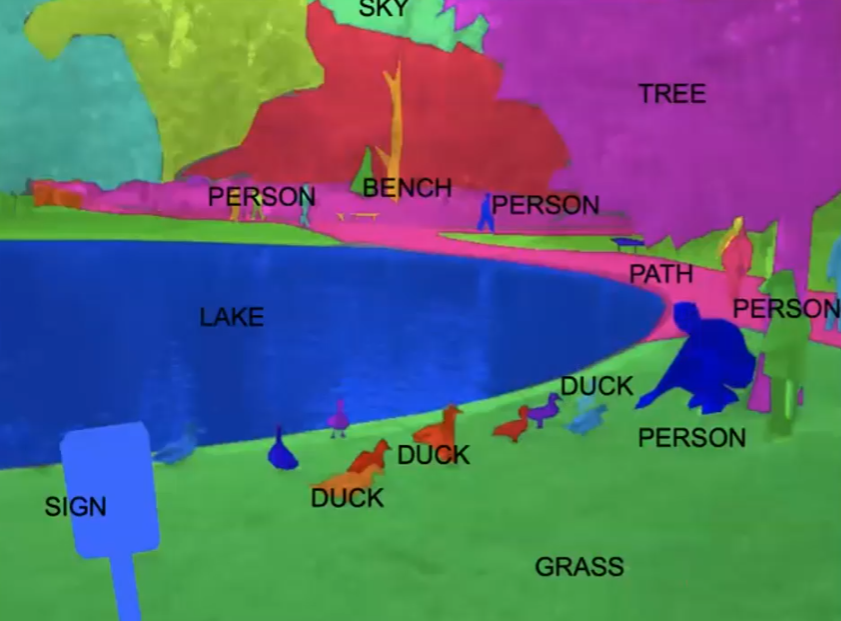

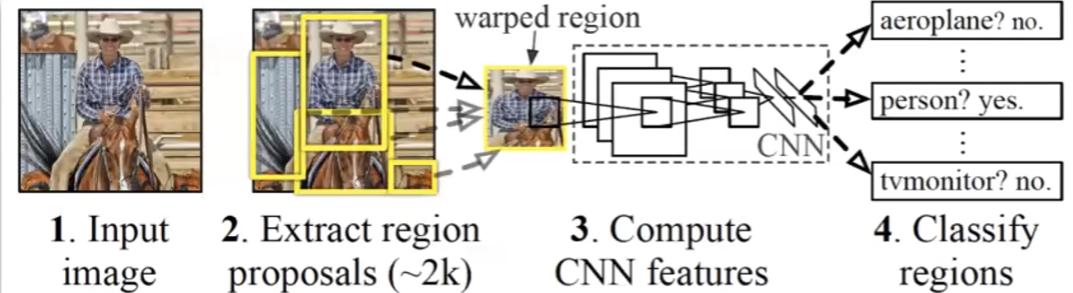

R-CNN

Consider a task that tries to assign a category to each pixel:

The idea is basically to:

- consider all possible windows (of various sizes) in an image

- for each window:

- in each of the window, classify if we should continue processing it

- if yes, put it into CNN and classify the window

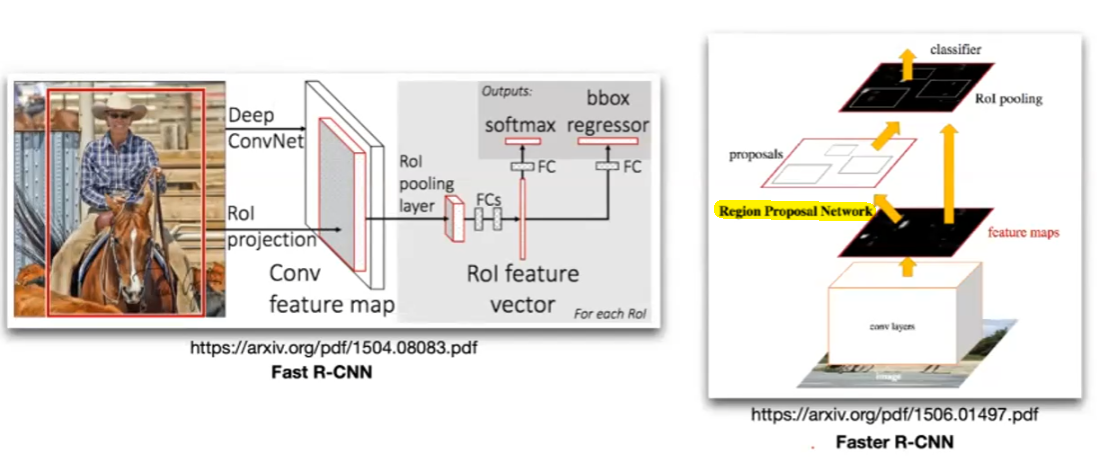

Graphically, we are doing

and it works pretty well in reality. However, the problem is that it is slow. Therefore we also had models such as Faster CNN, by learning the window proposition step, i.e. which windows are plausible, hence reduce the time.

then you basically just backpropagate to update the weights:

- initially the convolutional layer at the bottom of right image would consider all possible windows

- the Region of Interest feature vector would encode the proposed window, then you compute loss to the window proposed as you know the bounding box

- in faster RNN, the feature maps are used two fold: used for proposal and being passed on as encoding what is inside the window

Segmentation Examples

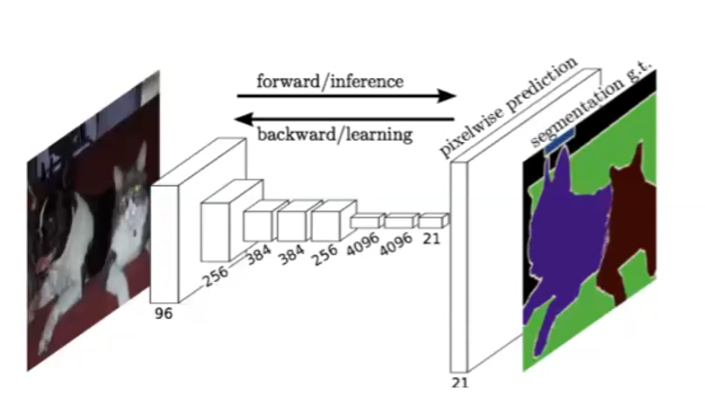

Consider the task to assign each pixel of the image a label: either a category, or whether if it is a new instance. This task is commonly referred to as segmentation.

Some architecture that aims to solve this include Fully Convolutional Network

Essentially you can just keep doing convolution, so the output is still an image

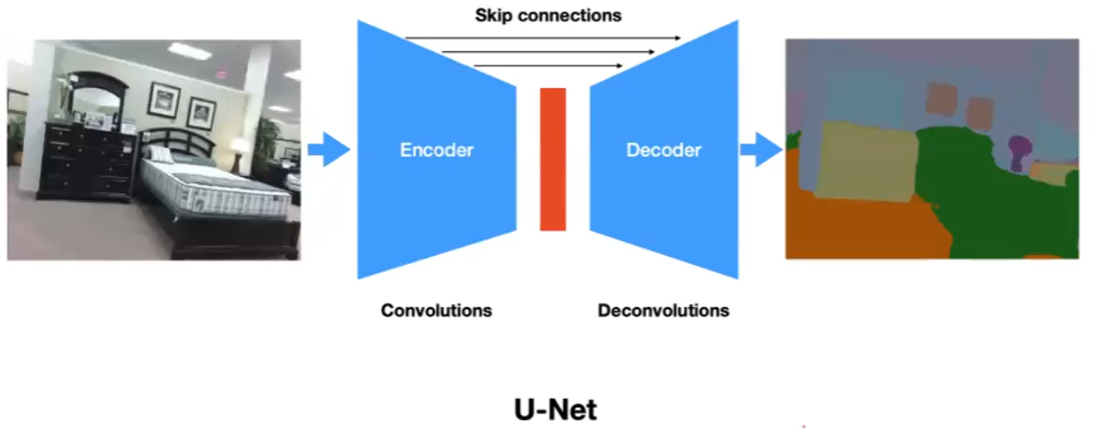

Encoder-Decoder type.

Here the idea is that, in order to be able to recognize a “bed”, you need to somehow encode all the related pixels into a group and recognize this group of pixels is a bed.

where essentially the latent feature space would be able to encode/compress pixels. However, this does mean resolution loss in the output image, hence we also have skip confections added.

Residual Networks

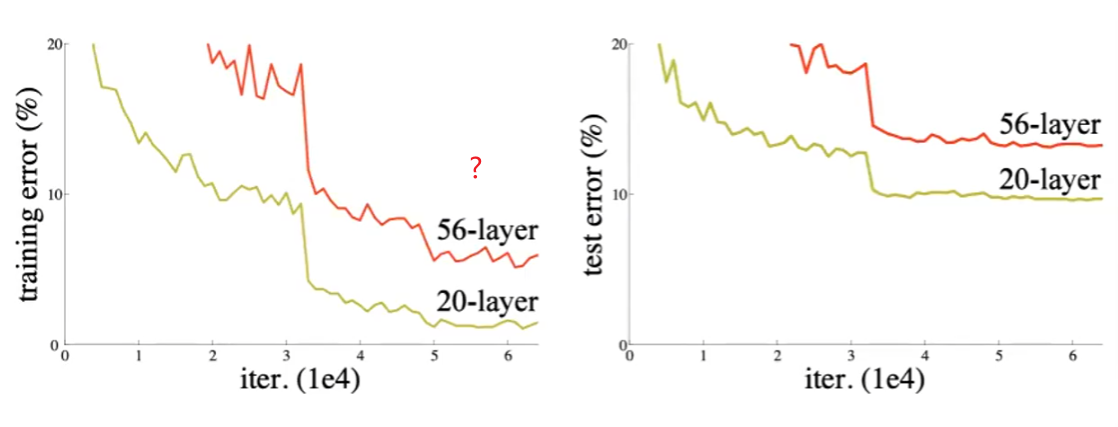

The observation comes from the abnormal behavior that, increasing the layers actually caused a decrease in performance for both train and test:

this is abnormal because, if the 20-layer solution is optimal, then the other 36-layers should be able to learn to do nothing, or doing identity operation.

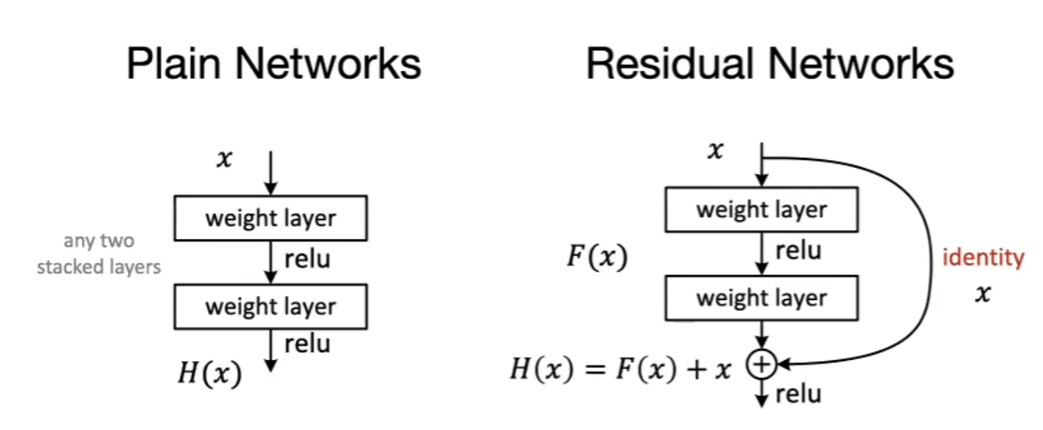

Then, the intuition is to make learning nothing an easy thing to do for the network. Hence:

where essentially we can have $F(x)=0$ being pretty easy to do (v.s. $F(x)=x$ with nonlinear operation is pretty hard).

- This is also helpful for solving vanishing gradient

- essentially enabled us to train very deep networks!

Again, the key reason behind all the idea of training deeper network is that you have big data for training.

Video Recognition

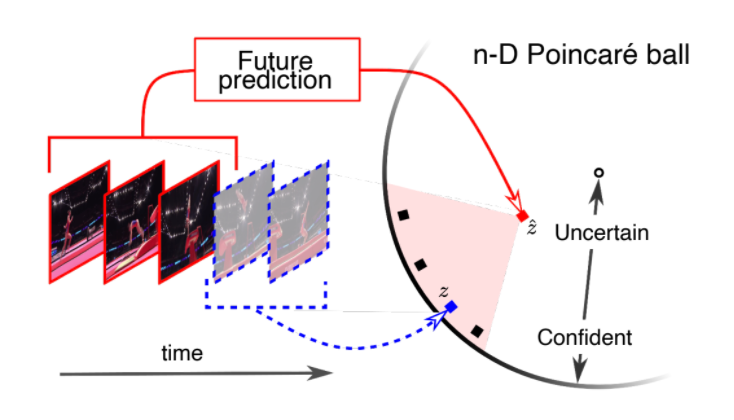

Theory of mind refers to the capacity to understand other people by ascribing mental states to them. In terms of CS, it is like you have the same program, but different memory. Yet you still can more of less know what the program will do.

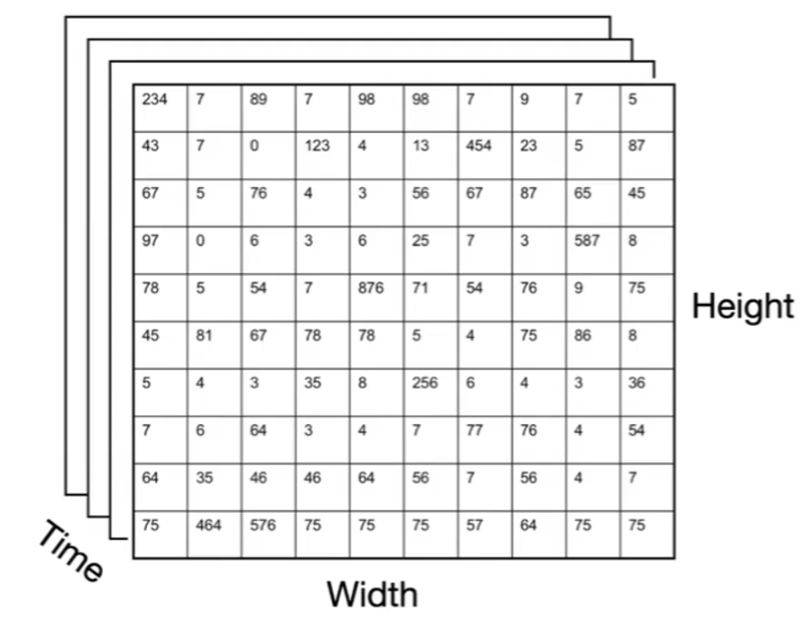

First of all, we need to represent video as some kind of numbers. Consider videos as a series of pictures:

Then essentially you just have a cube of pictures/matrix.

Accordingly, convolution operation thus involve a third dimension

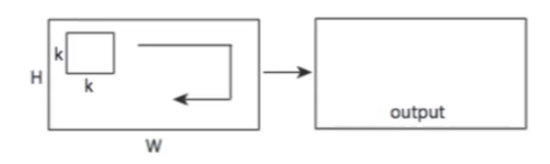

| 2D Convolution | 3D Convolution |

|---|---|

|

|

where now essentially you have an increased dimension in kernel + another dimension of time for the kernel to move around (convolution).

-

first imagine the video as a grey scale image, then essentially from image convolution (2D kernel) we now have video convolution (3D kernel)

-

note that because the filters basically also have a time dimension (stacks of 2D kernel), so they can be represented as a video as well.

Human Behaviors

Before we consider how machines should solve the problem, we should first understand and look around how human solve those problems such as:

- action classification: what is he doing (given a video)? Is his action intentional/unintentional?

- action prediction: what will happen next?

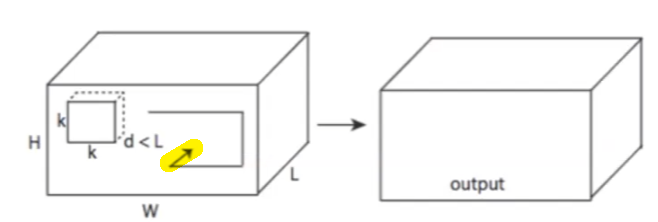

Behavior Continuum

Consider the case when a children goes to school, an continuous set of events that he/she would do involve:

for example, doing $A-G$ would have included doing $A-B$, etc.

- this poses the question of how to quantitatively represent an action hard, as it’s no longer discrete

- this then relates to how we perhaps want to design video recognition

Human Brain Video Recognition

Essentially a video is a stack of images, such that if flipped through fast enough, we have the illusion that things are moving. How does a human brain understand videos?

where essentially:

-

we are doing two separate systems: one that performs object recognition and the other recognizes motion/location.

-

an example would be the stepping feet illusion: our dorsal stream regonizes dots moving around as a person walking

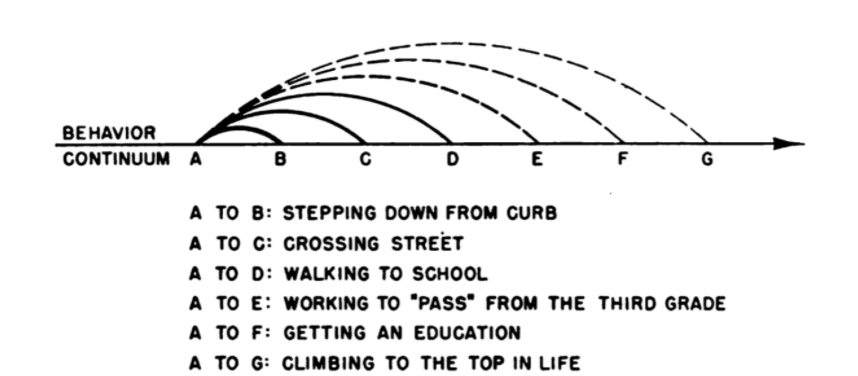

Therefore, one idea is to build a network also with two visual passways:

where:

- the spatial stream is basically the normal convolutional net

- the temporal stream basically is the convolutional net but the input is optical flows, how each pixel in an image moves

Recurrent Neural Network

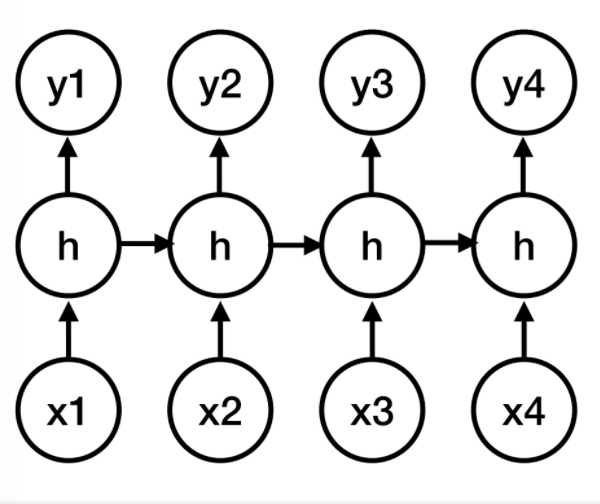

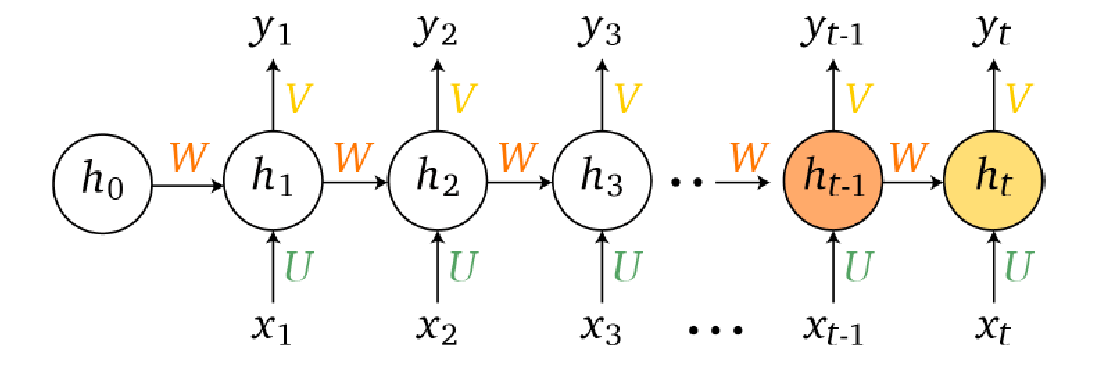

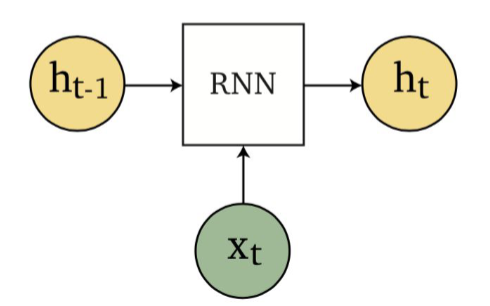

Another way to represent time would be naturally the recurrent neural networks. When unrolled, basically does:

where the “forward” formulas becomes:

\[h_i = f(w_x^T x_i + w_h^t h_{i-1})\\ y_i = g(w_y^T h_i)\]where interestingly:

-

with the additional of time, another way to see this is that we now can do loops in FFNN.

-

basically now we have a state machine:

Though this network is sound, the problem is that it has a problem of vanishing/exploding gradient. Because when you backpropagation, you would be doing backpropagation through time: (TODO replace $z_i$ with $h_i$)

At time $i$, we have the forward pass being

\[z_i = h_i = f(w_x^T x_i + w_h^T h_{i-1})\]then the gradient being:

\[\frac{d\mathcal{L}(\hat{y} , y)}{dw} =\frac{d\mathcal{L}}{dz_{i+1}} \frac{dz_{i+1}}{dz_i}\frac{dz_{i}}{dw} = \frac{d \mathcal{L}}{dz_T}\left( \prod_{j=1}^{T-1}\frac{dz_{j+1}}{dz_j} \right)\frac{dz_i}{dw}\]being the general form.

- e.g. let $w = w_h$. (recall that only three weights). Then the update/gradient at the end of the sequence at time $T$ will be products of gradients, which would either explode or vanish if it is large or small.

- to solve those problem, we have GRU/LSTMs.

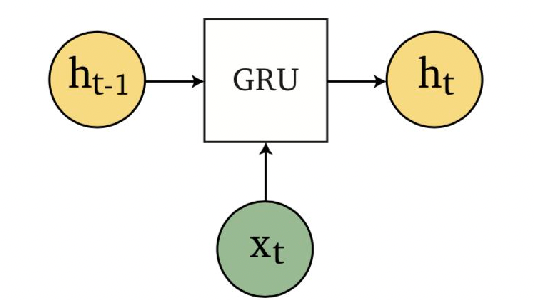

GRU and LSTM

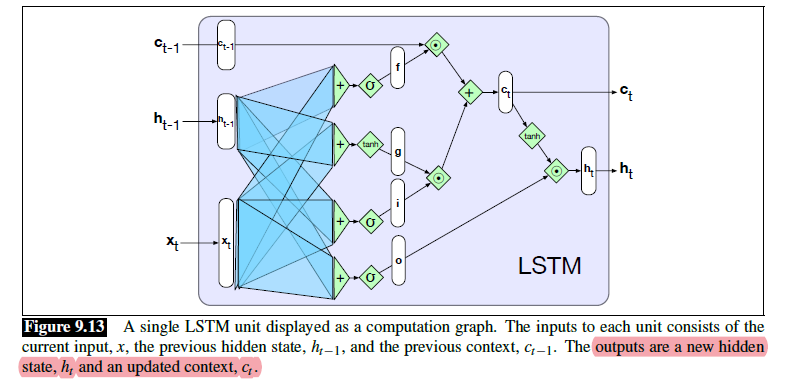

Schematically, GRU does the following change:

| RNN Encapsulation | GRU Encapsulation |

|---|---|

|

|

Specifically:

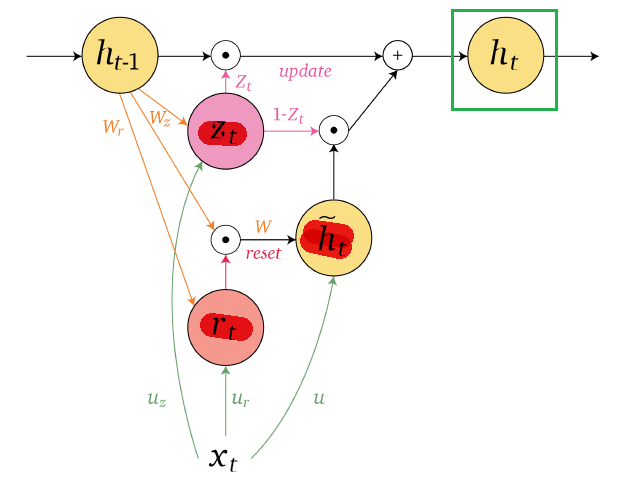

| GRU Schematic | Equations |

|---|---|

|

|

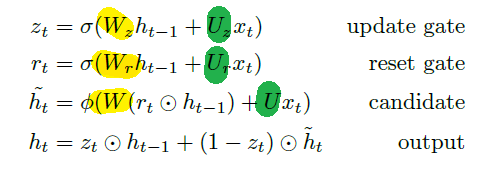

Similarly, the LSTM architecture looks like:

note that you have an additional memory cell, $C_{t}$, as compared to the GRU and RNN we had.

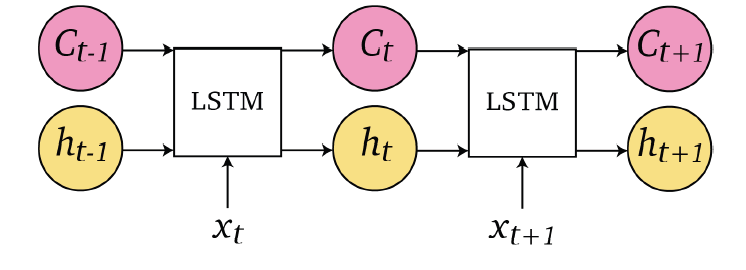

Each unit of LSTM look like:

| LSTM Schematic | Another View |

|---|---|

|

|

where the highlighted part is clear, same as RNN.

(a good blog that discuss LSTM would be: https://colah.github.io/posts/2015-08-Understanding-LSTMs/)

In both cases, the backpropagation through time would now involve addition instead of products. Hence this aims to solve the exploding/vanishing gradient problem.

Action Classification

The basic approach used here is to learn motion Features

- e.g. elapsed time feature

Key aspects of motion/video that we seem to care about:

- how long does each action take? i.e. normally, what would be the elapsed time for a normal motion.

- what are the main objects/what will happen next?

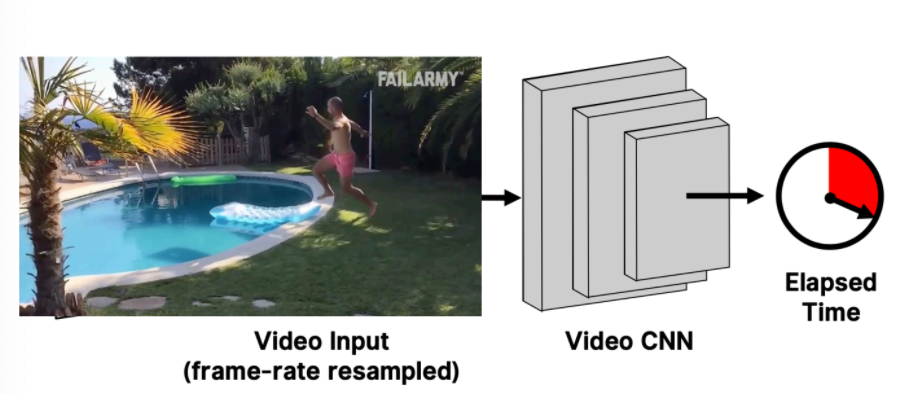

One way to learn this in NN is that we can resample a video, and then ask the NN to predict elapsed time:

This feature can be helpful for:

- deciding whether if an action is intentional/unintentional: speed of action alters perception

Action Prediction

It turns out that all our mind cares is about the future/actions, i.e. for things that seem irrelevant in the future, we kind of just ignores it.

- correlates to the idea before that categorization of an object is related to intention/action we can do with it

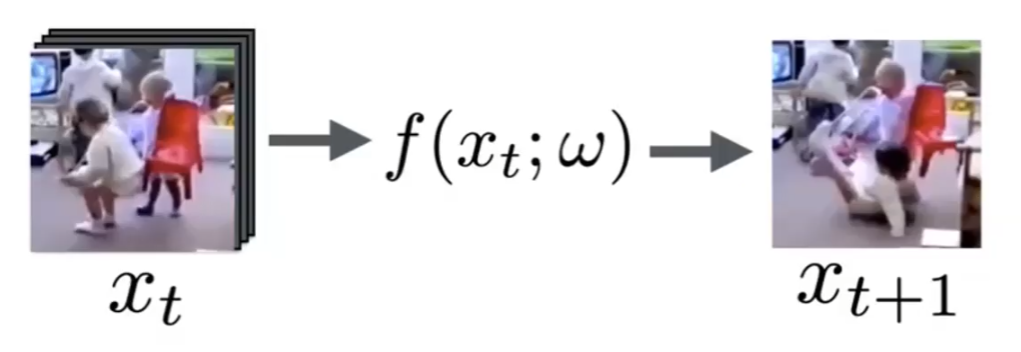

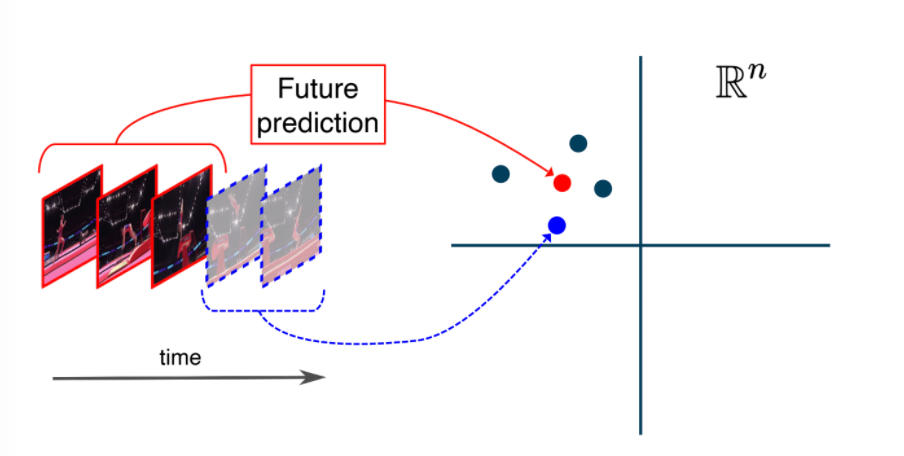

An example to stress how to predict the future would be:

this will be called future geneation:

- given data up to $x_t$

- predict $x_{t+1}$

Then for each video you collected in your dataset:

with loss being

\[\min_w \sum_i ||f(x^i_t; w) - x_{t+1}^i||_2^2\]which basically is a Euclidean loss:

- each vector $x^{i}_t$ represents the flattened vector representation of video at time $t$ (hence an image), for the $i$-th video in your dataset

But consider $x_{t+1}^i$ being the $i$-th possible future of the video up to $x_{t}$. Now you want to output, say, all possible futures, and perhaps among them, pick the most probable future.

- note that our brain can do this pretty easily!

Then, we see a problem that with this is that you can let:

\[f^*(x_t;w) = \frac{1}{m}\sum_i x^i_{t+1}\]to regress to the mean, i.e. your predicted future would be a mean of possible futures. This is bad! But how do we build models that is capable of predicting possible/likely future?

One problem is that there are multiple possible outcomes (i.e. we have uncertainties in what will happen next), but the reality we have in the video has only one future. How do we build this?

Intuition:

When a child gets near a candy store, and right before he/she goes inside, what will he/she predict to happen inside?

- instead of saying how many candies, and their color, he/she might predict his/her own sensation: they are going to taste like xxx, smell like xxx, and etc.

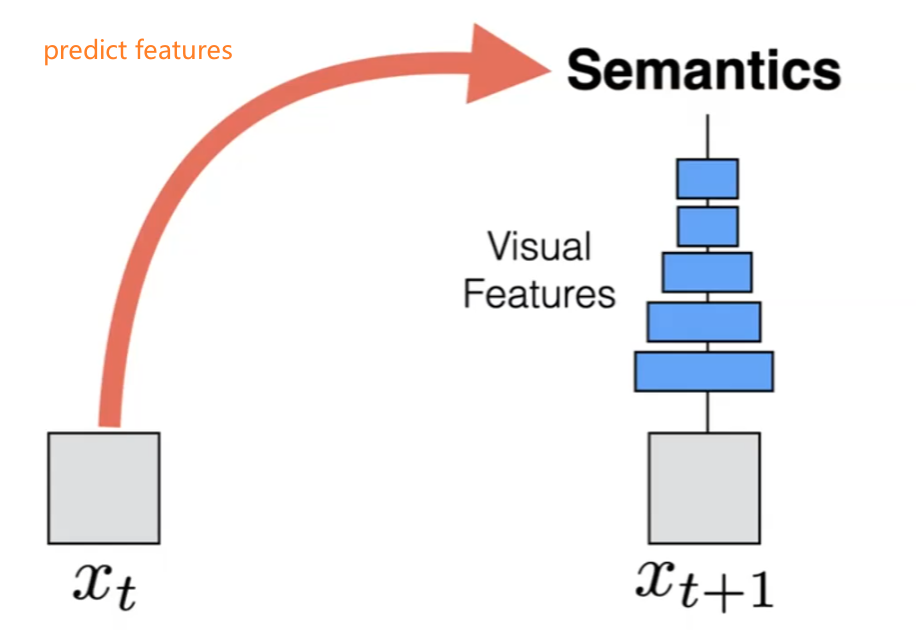

Therefore, the idea here is to build a NN with:

- input $x_t$, e.g. a picture

- predict the features of the future picture $x_{t+1}$. (the feature could come from an encoder that encodes $x_{t+1}$ for example)

Graphically, we are doing:

which is an easier prediction problem, because the output space is much smaller.

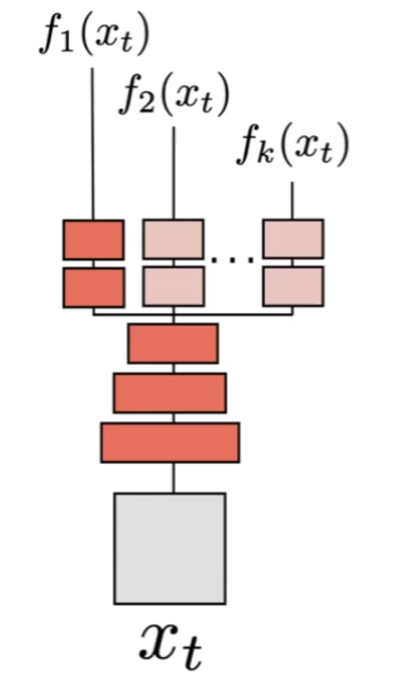

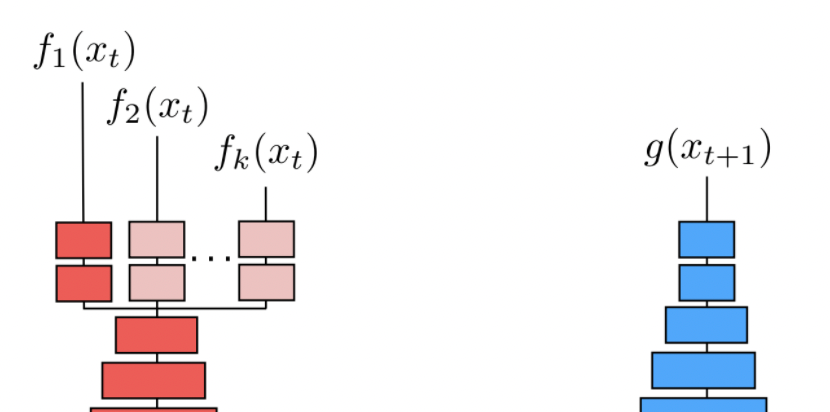

Then, since there are multiple possible futures, we could have each multiple predictions of the feature:

which we can do by basically having $k$-learnable activation functions/NN attached after. But then, to train this multiple prediction model, notice that we only have one output/future in the video data, hence only “labeled feature” $g(x_{t+1})$:

so then the problem is how to figure out the whole distribution ${f_1(x_1),f_2(x_1),…,f_k(x_1)}$ while you only have one label/ground truth $g(x_{t+1})$. Then, the idea is:

-

We know that if we only have one prediction, then we can do:

\[\min_f \sum_i ||f(x_t^i) - g(x_{t+1}^i)||_2^2\]for the $i$ data points you have in your training set.

-

If we have only one of them correct, but I do not know which one, then it means we have some latent variable to estimate.

For a single data point $x_t$, the loss would be:

\[\sum_k \delta_k ||f_k(x_t) - g(x_{t+1})|| _2^2\]for $\delta_k \in {0,1}$ being a latent variable, so that $\vert \vert \delta\vert \vert _1 =1$.

Then for all those data points, we have a different $\delta_k$ to learn:

\[\min_{f,\delta} \sum_i \sum_k^K \delta_k^i ||f_k(x^i_t) - g(x^i_{t+1})|| _2^2,\quad \text{s.t. } ||\delta^i||_1=1\]for basically $\delta^i$ being like a one-hot vector to learn.

Now we have the entire problem setup, lastly we need to train this.

- this using backprop does not work, because $\vert \vert \delta^i\vert \vert _1=1$ makes this a discrete variable, which we cannot take derivative of.

- but since it is a latent variable, use EM algorithm

- E-step: Fill in the missing variable ($\delta$) by hallucinating (if at initialization) or estimating it by MLE (when you have some $f$)

- M-step: Fit the model with known latent variable ($\delta$), and do backpropagation on $f$ to maximize the parameters for $f$.

- repeat

where essentially it solves the loop by “hallucinating”:

- to solve/optimize for $f$, we need $\delta$; but to solve/optimize for $\delta$, we need $f$.

- therefore, we just assume/hallucinate some $\delta$ to start with, then iteratively update

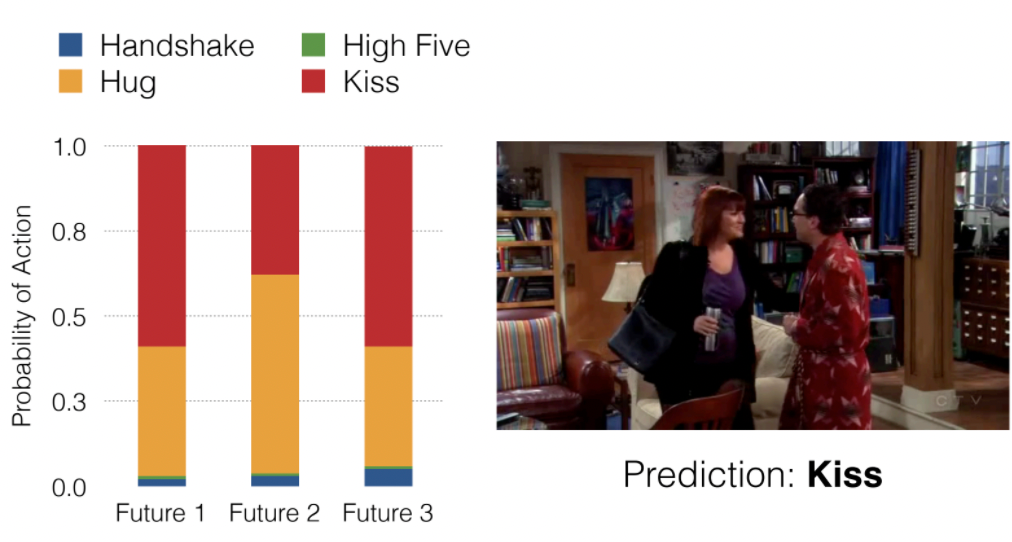

Examples: Then we can use this to do action prediction, with $k=3$ and predicting four features (handshake, high five, hug, kiss):

For prediction, we then use $\delta^i$ to tell which future is taking place, and then spit out the feature that has the highest score as the prediction.

Another idea is that, since someimtes we have uncertainty in actions (even if we do it by ourselves)

Predicting in Eucliean Space

Last time we saw that the objective we used results in the problem of regression to the mean:

where basically

- you imagine the four possible futures, indicated by the three black points and the blue point

- the “possible futures” are obtained by having similar videos and claiming their “past” are the same even though there are some variations

- one idea of how we “fix” this is to represent this perhaps not in the input feature space

First, we need to recap what properties eucliean geometry have.

Hyperbolic Geometry

Axioms of Eucliean Geometry: (i.e. we can derive all euclidean stuff from those five axioms)

-

There is one and only one line segment between any two given points.

-

Any line segment can be extended continuously to a line.

-

There is one and only one circle with any given center and any given radius.

-

All right angles are congruent to one another.

-

Given any straight line and a point not on it, there exists one and only one straight line which passes through that point and never intersects the first line.

basically related to what it means being parallel.

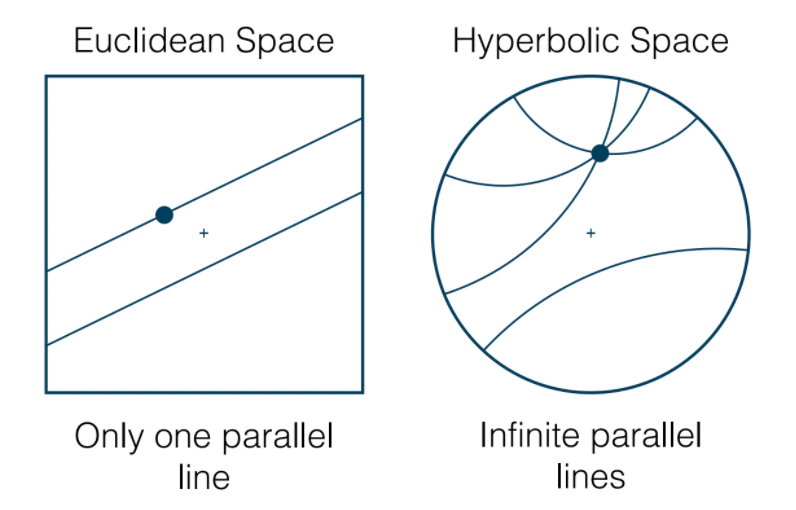

For hyperbolic geometry, we only chage the fifth rule and we will have a different geometry:

- Given any straight line and a point not on it, there exists

one and only oneinfinitely many straight line which passes through that point and never intersects the first line.

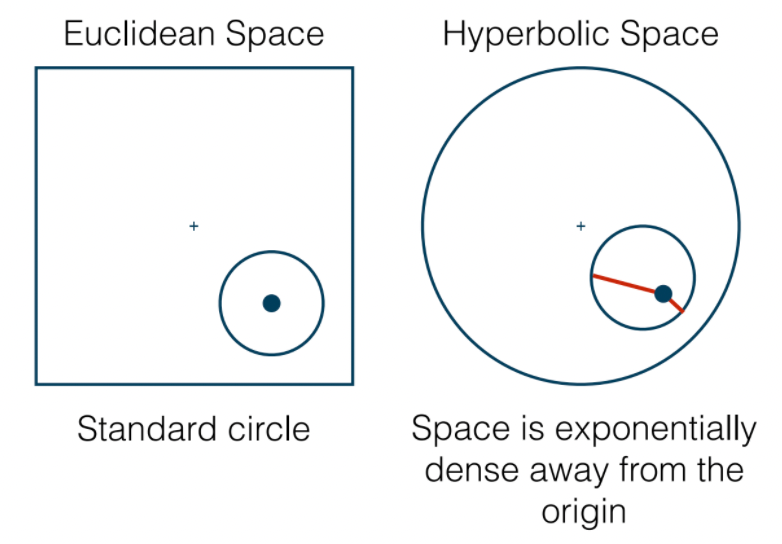

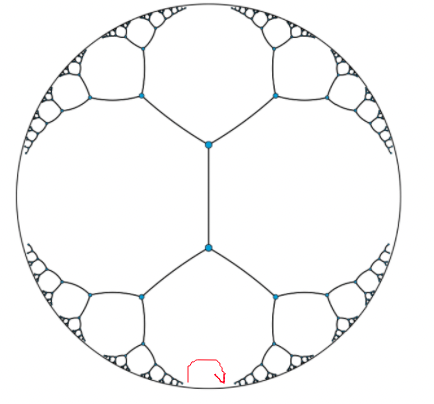

Some graphical comparision would be

where

- the plus sign represents the origin.

- for hyperbolic space, the infinity of the space is the circular boundary

- the straight line in hyperbolic space is drawn by doing the shortest path in the manifold (see below).

- This line is also called the geodesic line, which in cartesian would be a straight line.

- one intition here is that the density of space is high near the boundary of the hyperbolic space.

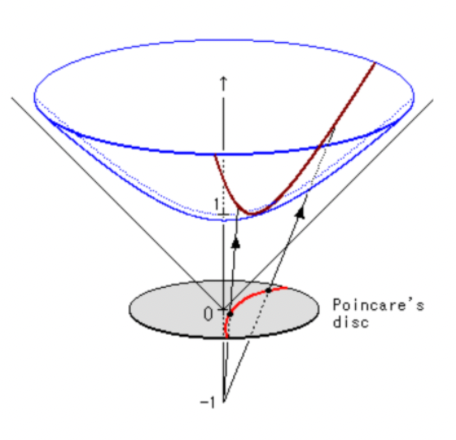

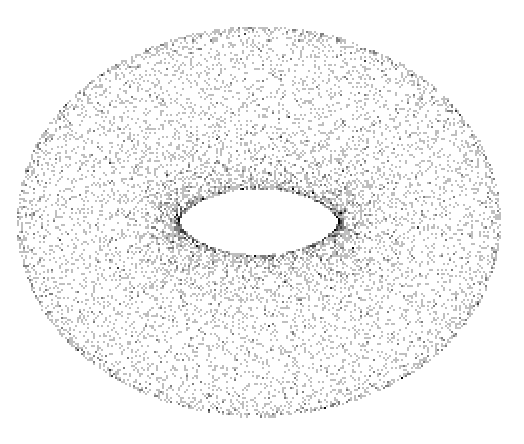

All the points live oin a manifold, where the manifold is the hyperbolic surface in this case (the blue region above, generated by rotating a hyperbole)

Then the formula for distance between points on hyperole (the blue surface), becomes:

\[d(a,b) = \cosh^{-1}\left( 1+ 2 \frac{||a-b||^2}{(1-||a||^2)(1-||b||^2)} \right)\]for $a,b$ being vectors to the points. Some other properties of space include:

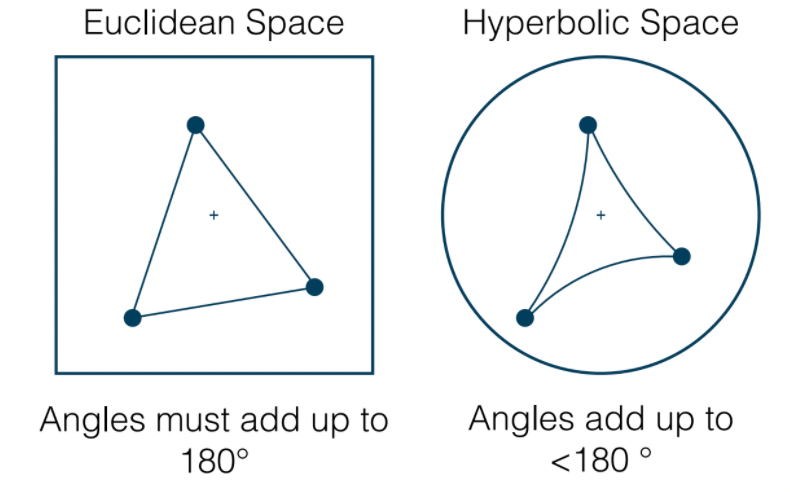

| Shapes in Hyperbolic Space | Center of Circles |

|---|---|

|

|

where:

- on the left, it is significant as the area of triangle will be solely determined from angles. And the shape of “square” does not exist (though there exists four sides shapes)

- on the right, the center of circle shears more towrads the boundart, because the density is higher near boundary (i.e. the red curves, technically it sohuld be, should have the same length!)

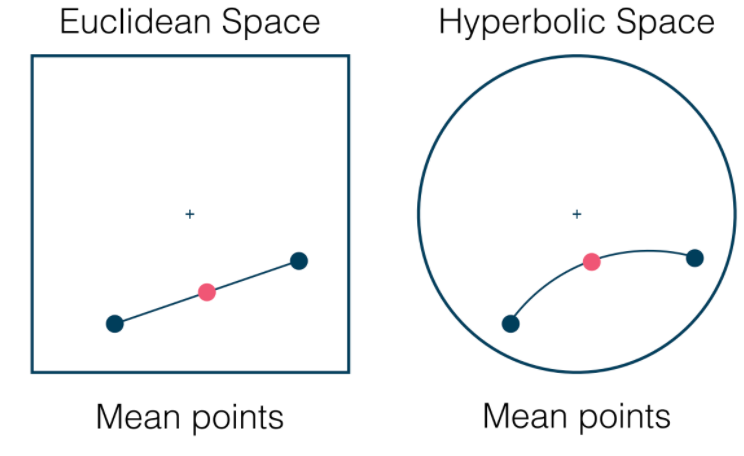

Additionally, you can also find the mean (which now relates to regression!)

Distortoin of Space

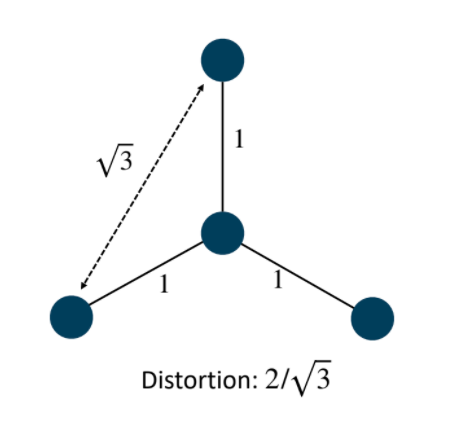

Why do we want to use eucliean space? We want to embed a hierarchy tree in to the space.

I want distance defined by a line joining the nodes should be the sum of distancce between between node-node in the tree.

Consider doing this in eucliean space, this does not work and we have distortion:

where this comes from $2=1+1$ is the correct distance we want, and $\sqrt{3}$ is the actual distance we got.

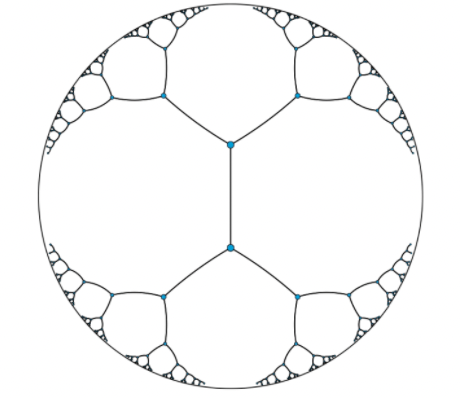

Yet, hyperbolic spaces can naturally embed trees

| Trees in Hyperbolic Space | Example | Example |

|---|---|---|

|

|

|

where the

- second figure shows an example of “straight line”/shortest path that defines the distance between the two nodes.

- third figure shows bats that have the same area in hyperbolic space

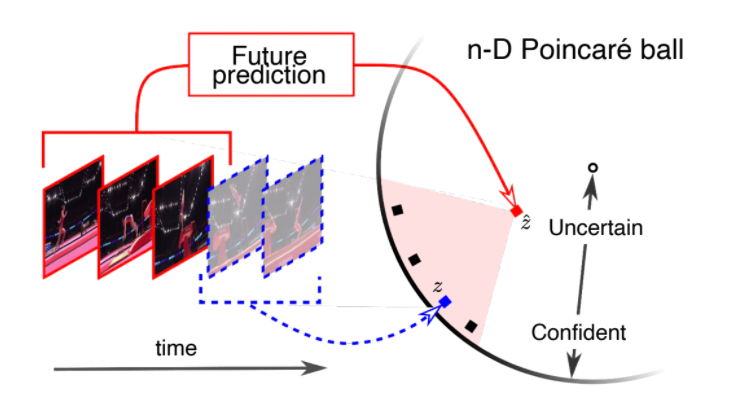

Predicting in Hyperbolic Space

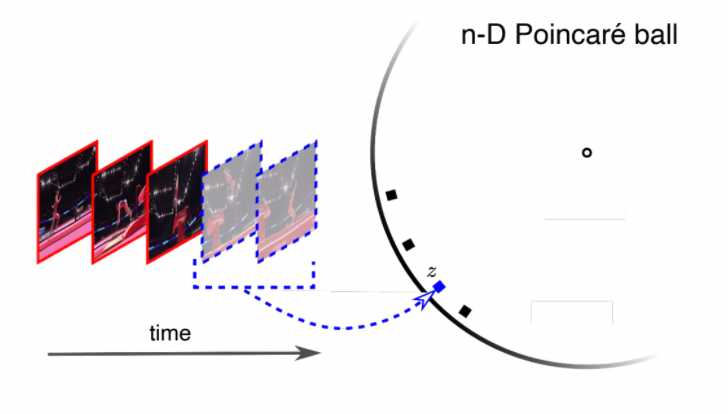

Then we consider 4 possible futures, shown as the three black points and a single blue point. Our task is to predict $\hat{z}$ given the three past images, and the 4 true labels such as $f_\theta(\text{past images}) = \hat{z}$ represents the mean of the future = minimize the distance to the all the possible futures:

| Regressoin Task | Interpretation |

|---|---|

|

|

where:

-

regression to mean in hyperbolic space means having the point $\hat{z}$ which is closer to origin, which corresponds to uncertainty in or prediction being in higher parts in the hierarchy tree!

-

Then, the objective function would be defined by regression using hyperbolic distance

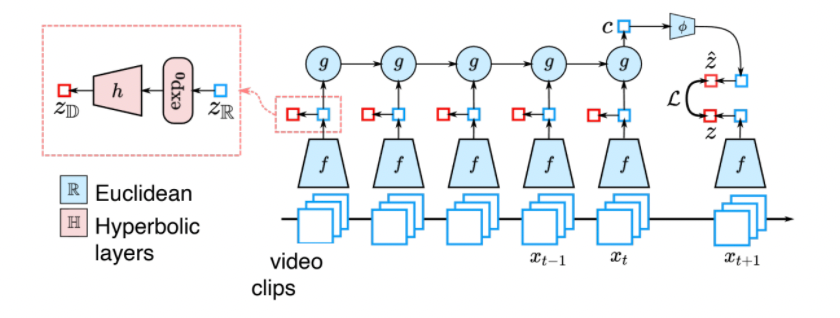

\[\min \sum_i\left[ d^2 (\hat{z}_i,z_i) + \log \sum_j \exp (-d^2 (\hat{z}_i,z_j)) \right]\]such that we essentially have two neurnets, $z_i$ from the blue neural net and the $\hat{z}_i$ from red for future prediction:

- the first term minimizes the distance between $z_i$ and $\hat{z}_i$, for $z_i$ being the one past, and $\hat{z}_i$ being its future

- technically we are predicting one $\hat{z}_i$ per past, but eventually we converge to the same future $\hat{z}$ if the past are similar

- the second term wants $\hat{z}_i$ to be far away from other non-related examples $z_j$ in the dataset (without this term $z,\hat{z}$ collapse to origin)

Graphically:

where the blue latent point can be interpreted as “what features in the future image”

- the first term minimizes the distance between $z_i$ and $\hat{z}_i$, for $z_i$ being the one past, and $\hat{z}_i$ being its future

Last but not least, given those points in the latent space, you finally map it back to features such as “probability of hugging”, and etc:

where the classifer you attached from the output of latent space vector $z$ could be a linear one in hyperbolic space.

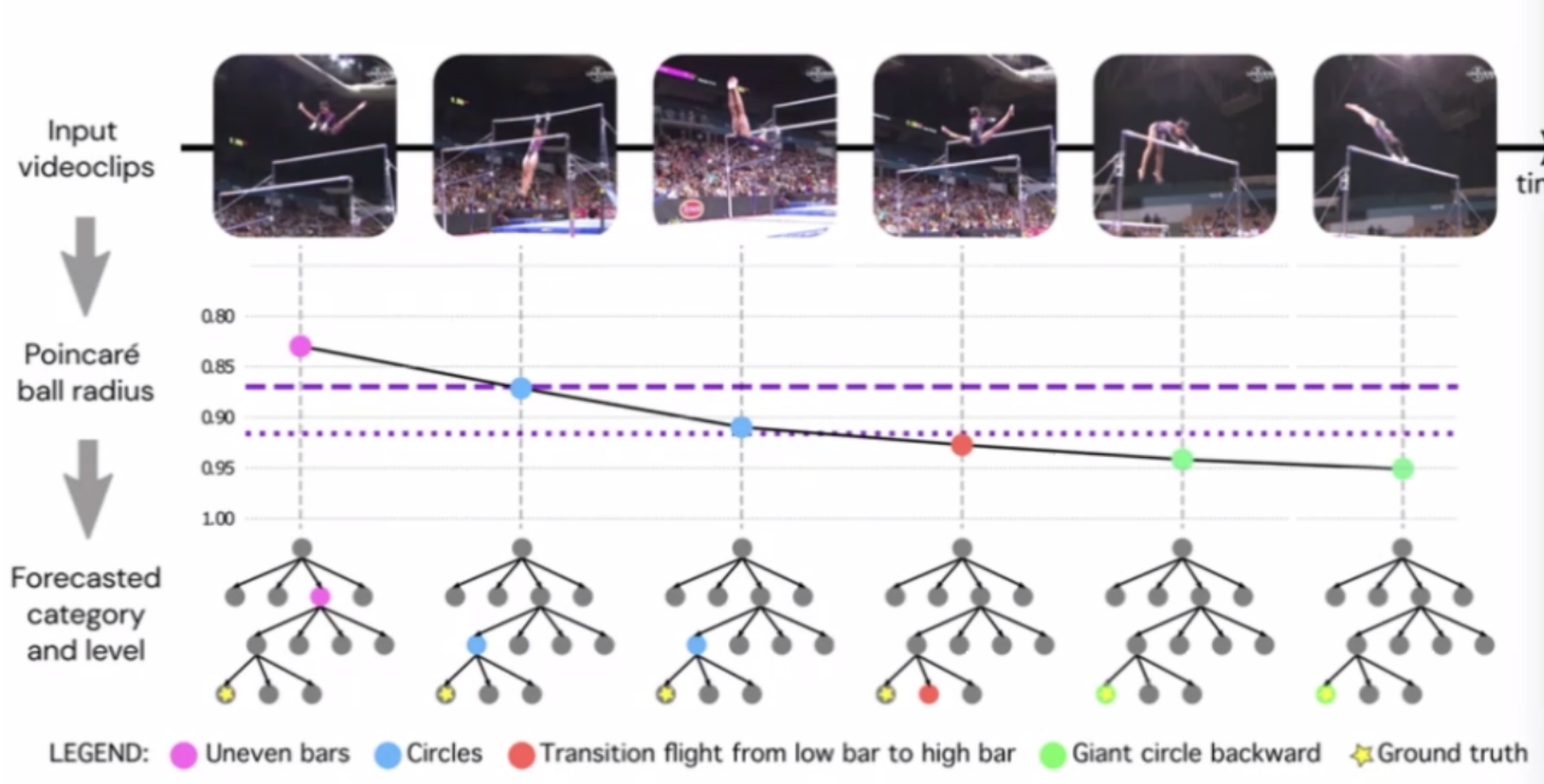

Predicing Action

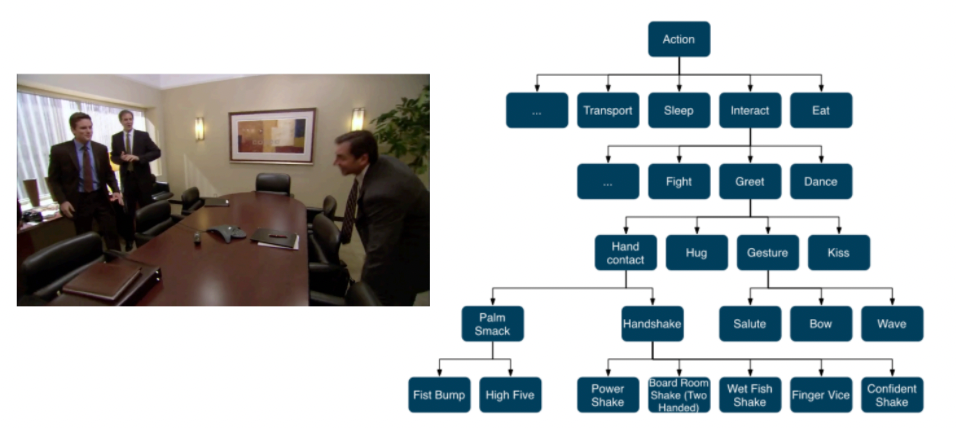

notice that:

- essentially as more future is revealed, the less uncertainty you have by moving down the action hierarchy tree

- the purple dash lines would represent the levels of the tree you are at

Action Regression

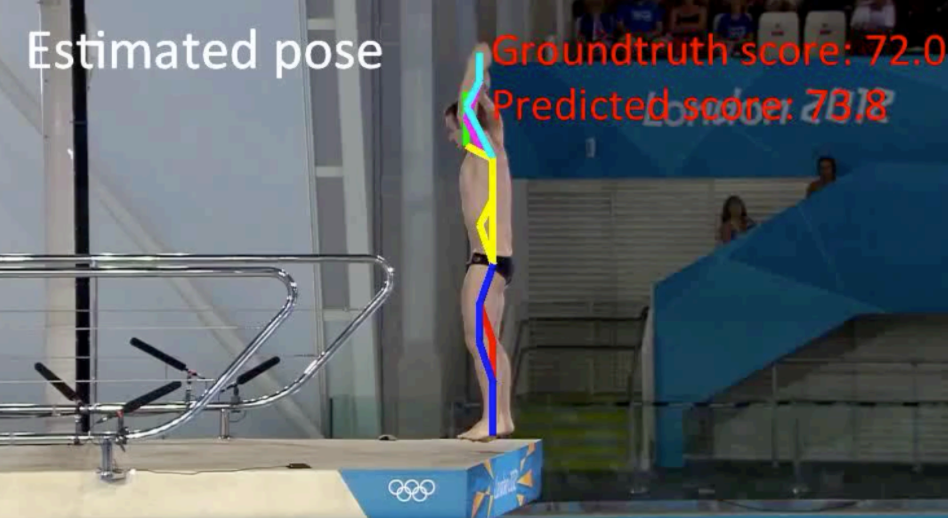

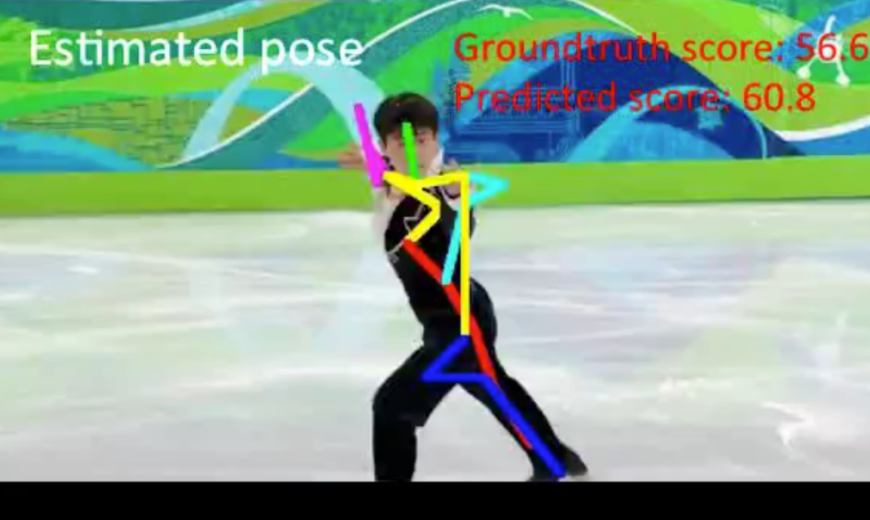

Other related applications include regression on actions to predict a score.

For example: How well are they diving?

- Track and compute human pose

- Extract temporal features

- normalize pose

- convert to frequency space

- use histogram as descriptor

- Train regression model to predict expert quality score

Additionally, this can also be applied reversely by answering the question: how should the post change to get a higher score?

where

- essentially compute gradients

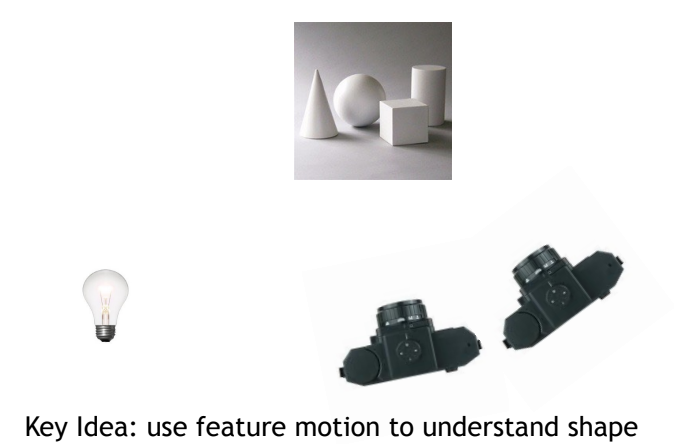

Object Tracking

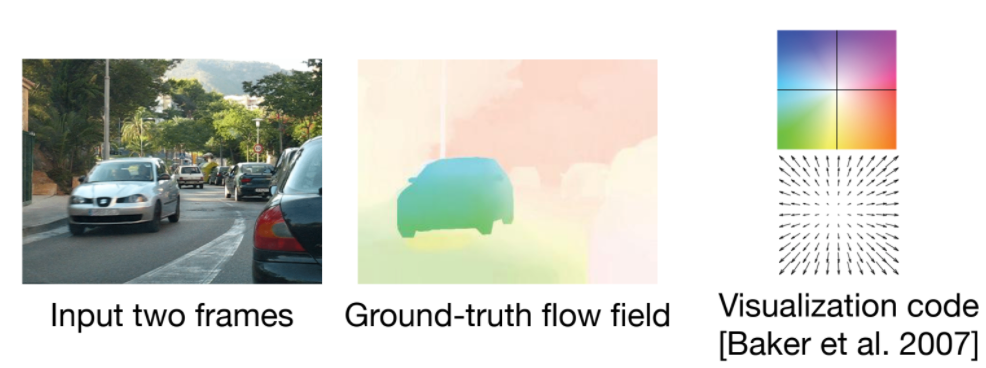

The first and foremost useful representation of motion is the optical flow.

Optical flow field: assign a flow vector to each pixel

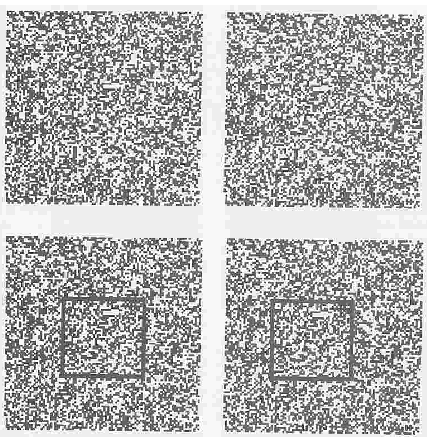

However, there is a problem with computing optical flow, e.g:

| Start | End |

|---|---|

|

|

which is ambiguous how the line moved, as it could have go up/right/top right, all yielding the same result.

- another example would bte the barber pole illusion, where

- e.g. if you put an aperture near the car, then how it moves become ambiguous. Hence this where machine learning becomes useful, which can learn the priors. But the problem is where can we get the correct labels if we have those ambiguities?

Learning Optic Flow

The idea is to training use game engines, so that we can:

- generate dataset with labelled/ground truth optic flow using game engines

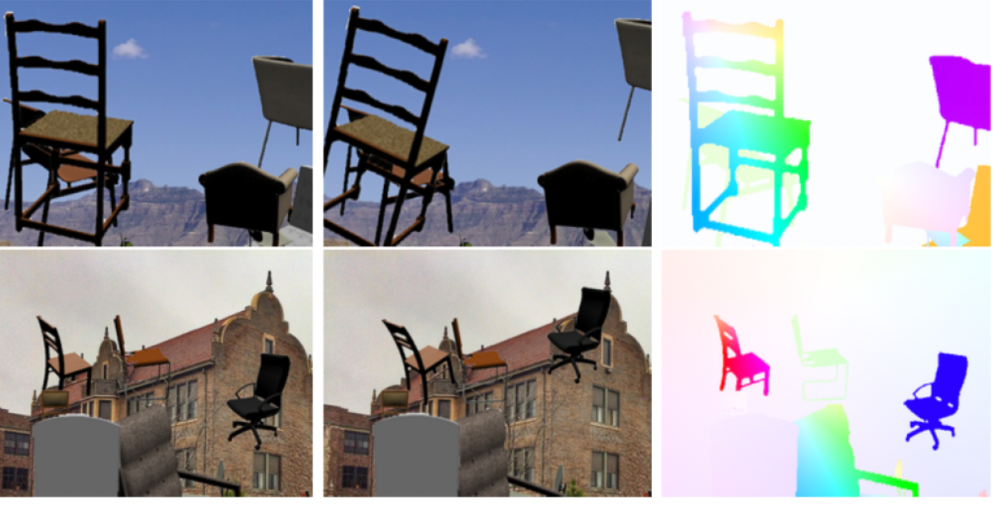

An example dataset that comes out for this is the falling chairs

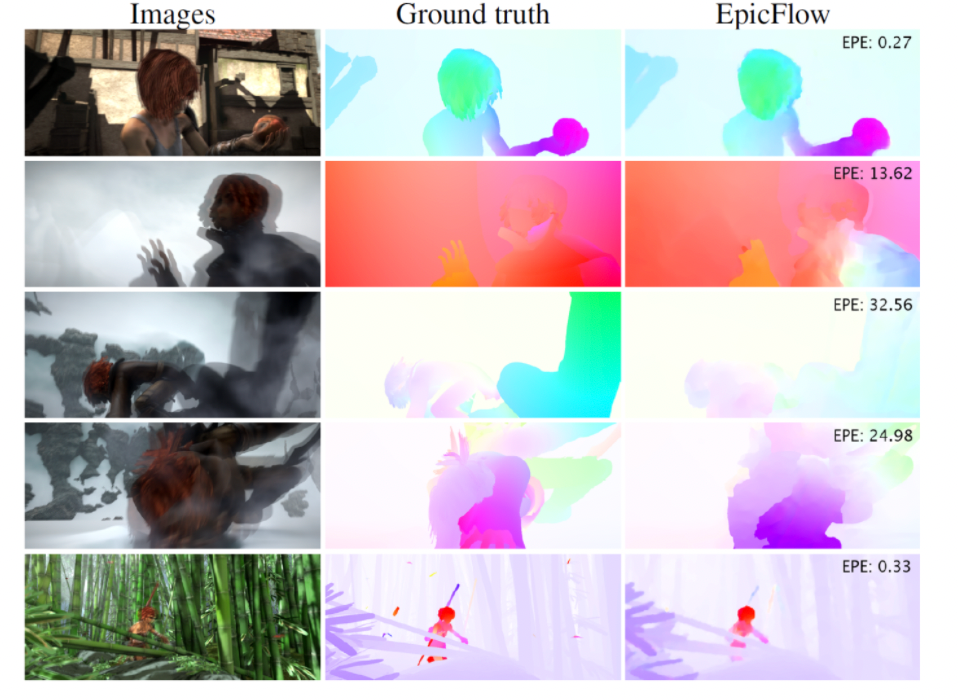

And one model that worded well is the EpicFlow

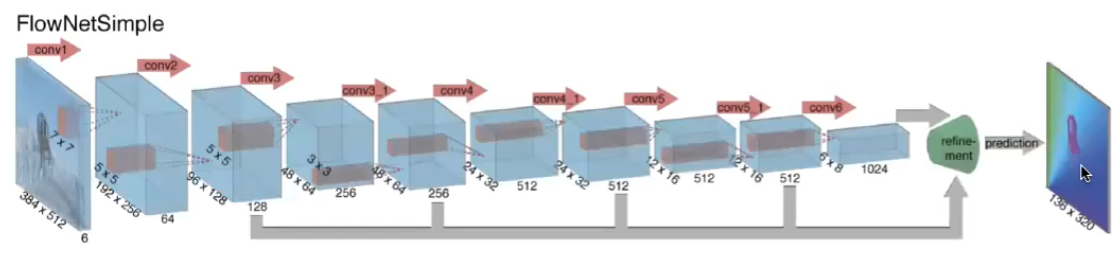

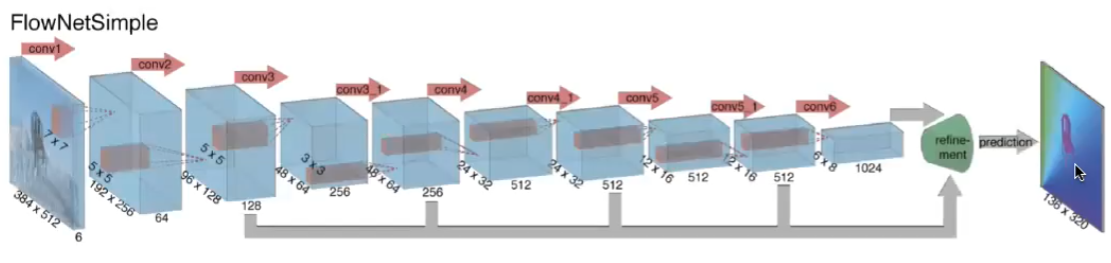

The general setup would looklike:

- input image pairs, output which pixel moves to where (i.e. flow vector for each pixel)

- sample architecture with CNN looks like

Then this can be used to to predict motion by using the motion field

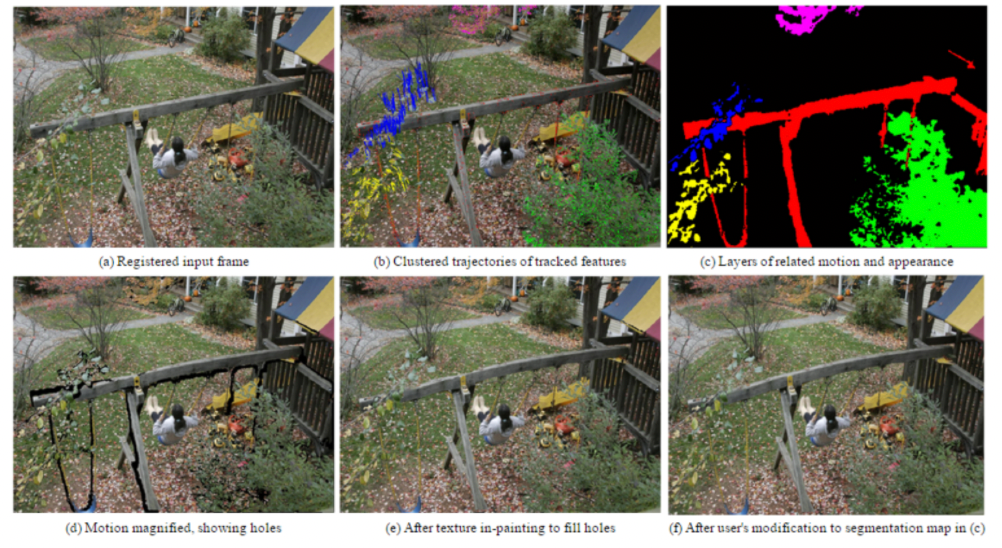

-

Motion Magnification: since machines can see more subtle motions, we can create videos with those magnified

- find the motion field

- cluster similar trajectores

- magnify the motion

Tracking Dynamics

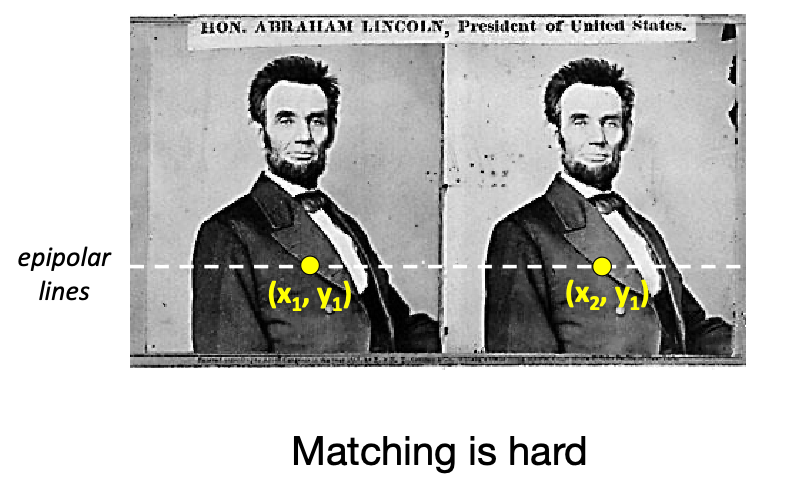

Moving from knowing how each pixel is moving, we would like to consider how each object is moving. Hence we end up in the task of how to track an object.

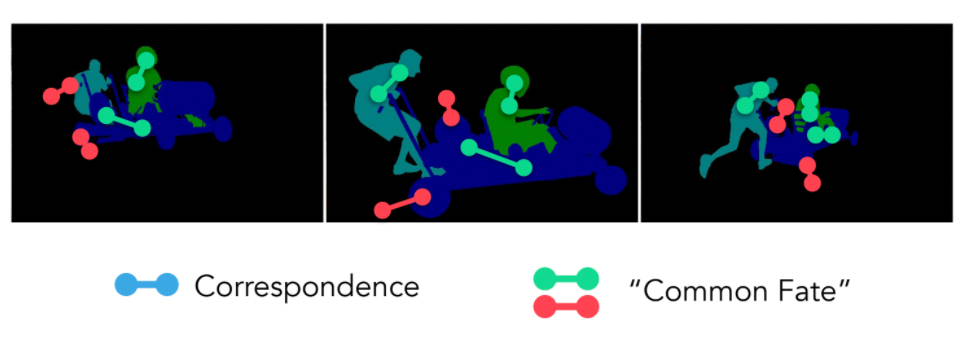

When tracking an object, we generally consider how to answer the following two questions:

- common fate: connected parts so that they should move together

- correpondance: how do you know those are the same thing after some time?

Example:

The common approach is to solve this by learning an optical flow field using supervised approach. Similar to how we learnt optimal flow:

- given some input video with ground truth labelled object trajectory, for instance

- learnt the tracking

Then you would end up using similar architecture for learning optical flow. For instance:

while this does work great, but the problem would be collecting those labeled data, and that:

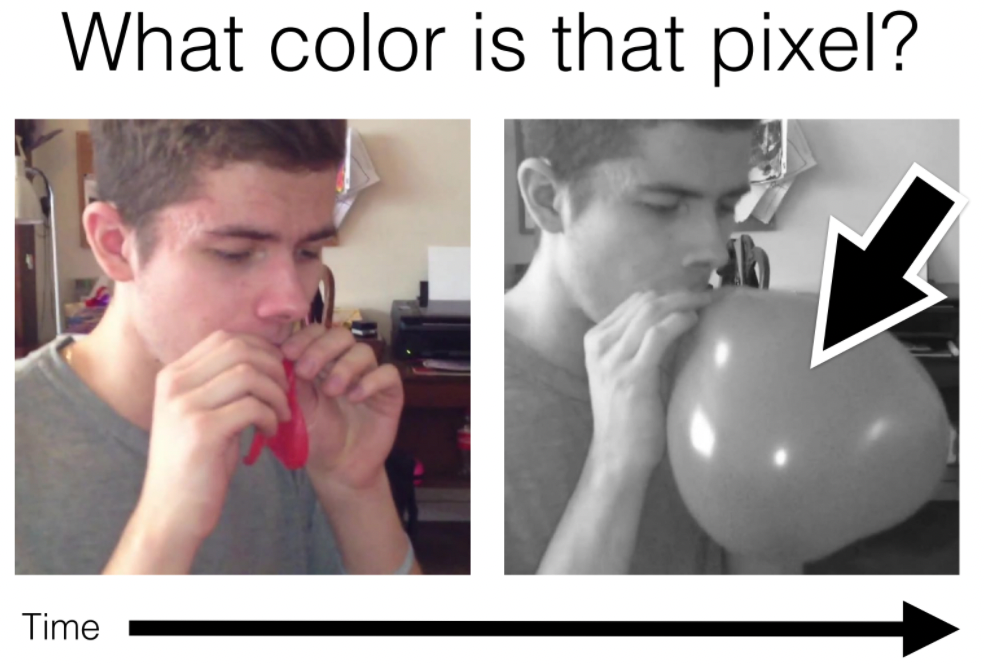

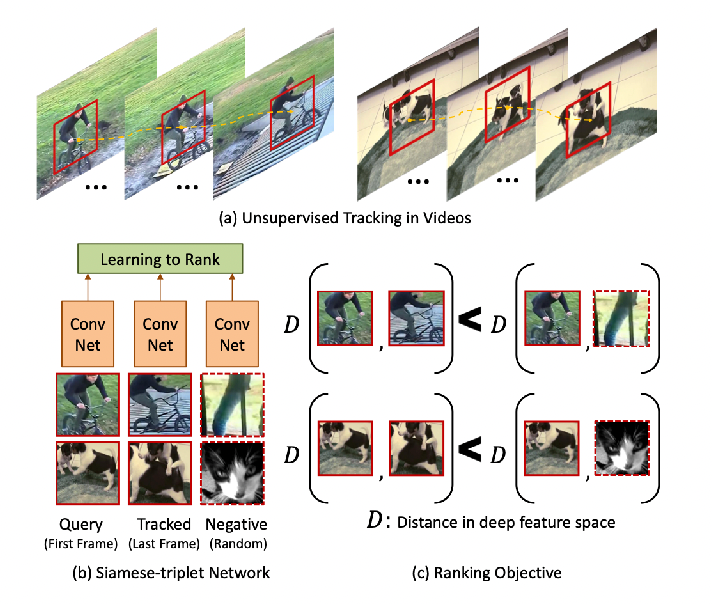

Is there an approach where we can solve this without having a supervised approach? It feels that every living being in existence should be able to track without a “teacher”.

- for most problems, if you have a big enough dataset, then they can usually be solved by many architectures

- can we come up with a unsupervised problem that tricks the machine and actually solves the actual problem?

An example would be:

where notice that to answer this question, you would have logically tracked the image!

Then we can have a system such that, we are given a colored video:

- only take the first image as colored

- the rest we process to grey scale and feed into network to predict color for each pixel

- notice that we have all the labels already!

note that this won’t solve the tracking problem conpletely, but is a good approach.

- exceptions inclued an object changing color over time, perhaps due to lighting, e.g. at party house

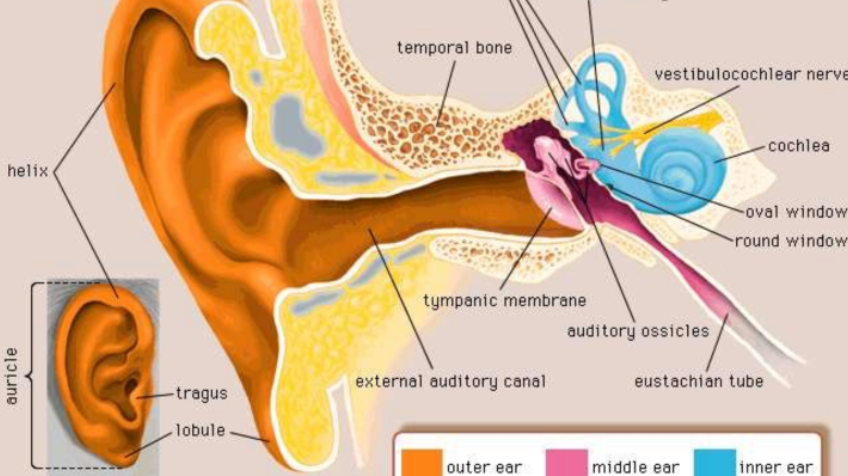

Human Perception of Color

Recall that colors we perceive essentially is determined by wavelength in light

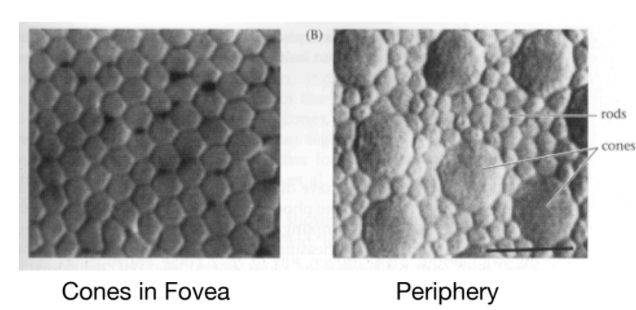

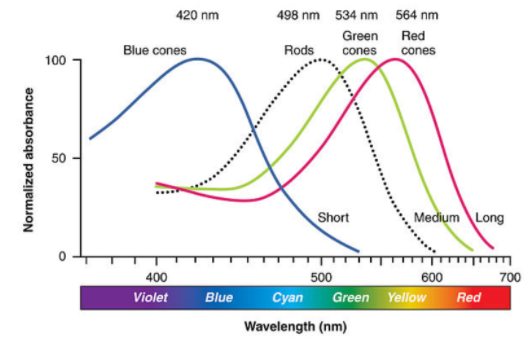

And we have in brain rods that perceive brightness and cones that perceive those colors

| Cones and Rods in Human | Absorbance Spectrum |

|---|---|

|

|

where in human,

- we have only three types of cones: one for blue, one for green, and one for red. But combinatinos of the three gives us perception of a spectrum of colors. This is also why we have RGB scale in computer images.

- we have only few cones in periphery, so we are actually not that good at detecting colors at periphery

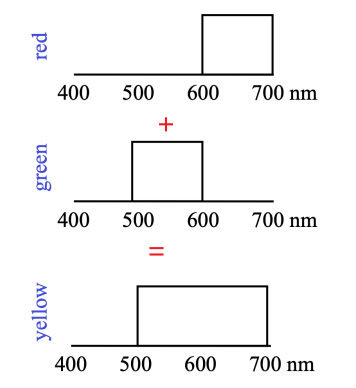

Then from this, you also get modern applications in how to arrive at different colors:

| Additive | Subtractive |

|---|---|

|

|

- additive color mixing: adding RGB to get more colors

- subtractive color mixing: multiplying/intersection of color

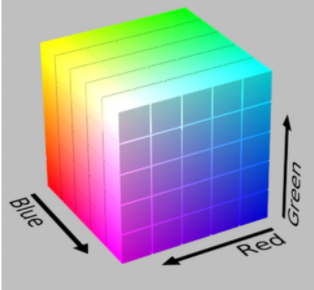

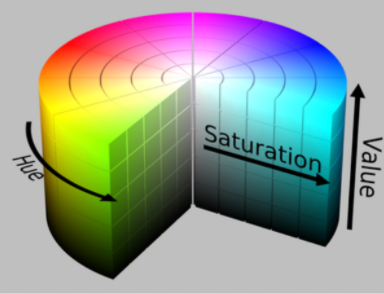

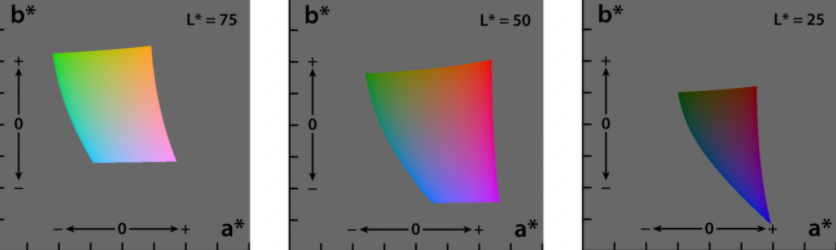

And we have different representation of color spaces

| RGB | HSV | Lab Space |

|---|---|---|

|

|

|

where:

- HSV: hue saturation value

- notice that we get an illusino of magenta which comes from mixing of red and blue, which if you look at the wavelength scale, it should not happen

- $L$ in lab space means intensity. This is a non-Euclidean space that seems to correspond the best with human vision (the idea is color spectrum could be a function of intensity as well)

- so essentially $L,a,b$ would be the values for color

- in practice $L$ is often represented as the pixel value when in grey scale

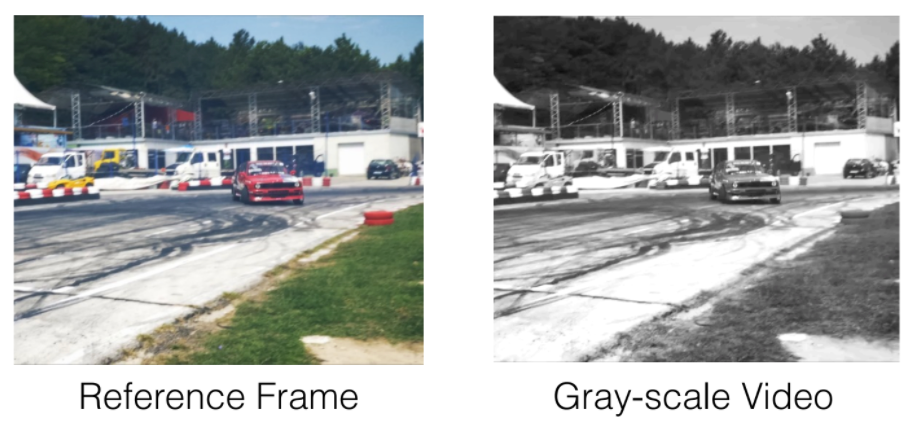

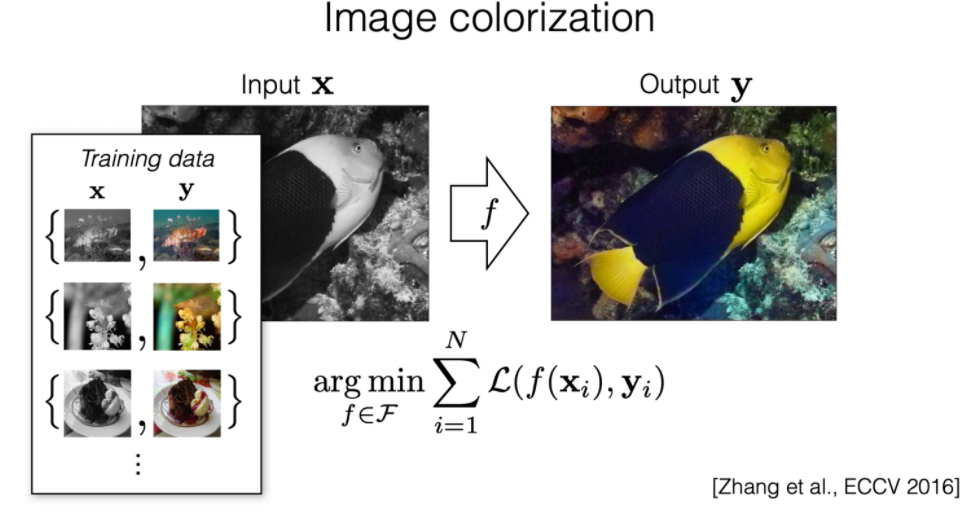

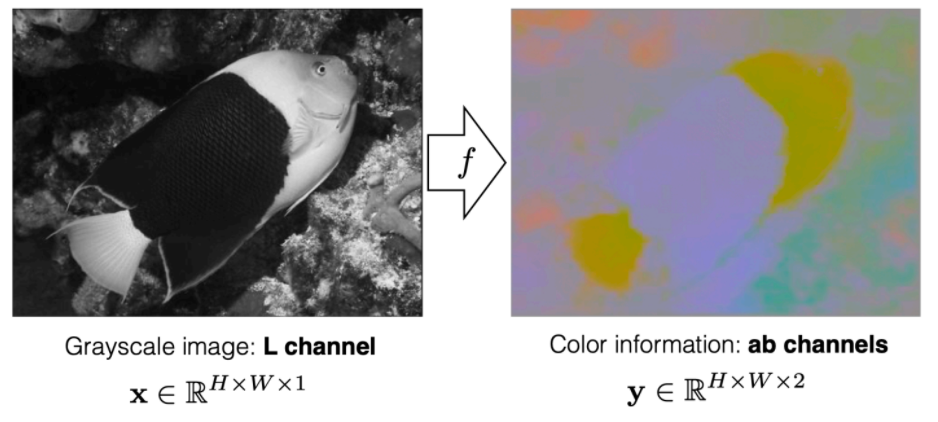

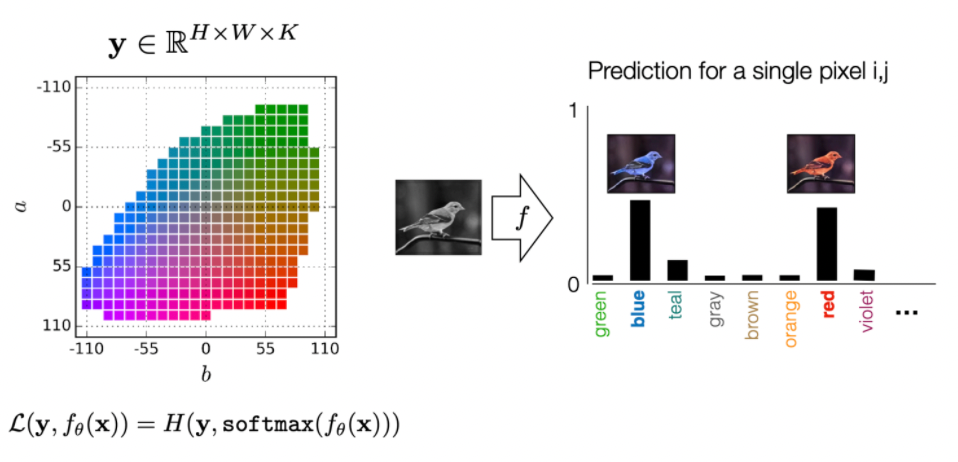

Then using Lab space could be used very commonly in for the task of image colorization

where the:

-

the grey scale image could already be the $L$ values

-

then the task is just to predict $a,b$ values of the lab

We can also only look at the predicted $a,b$ values:

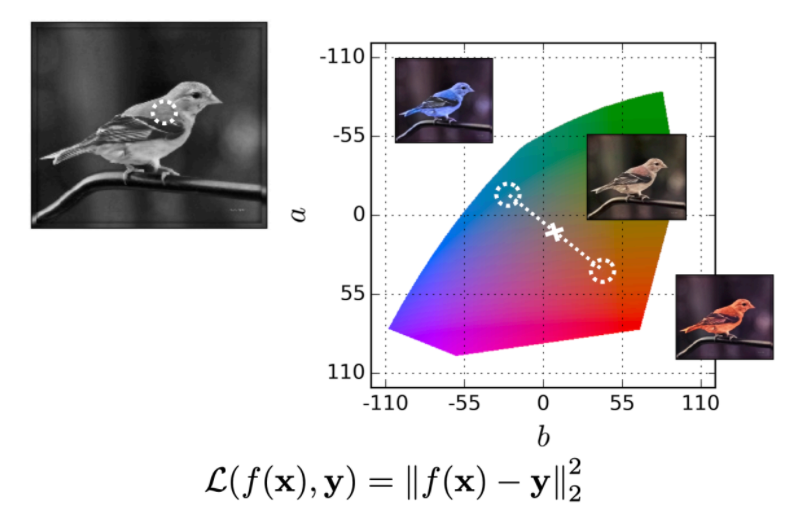

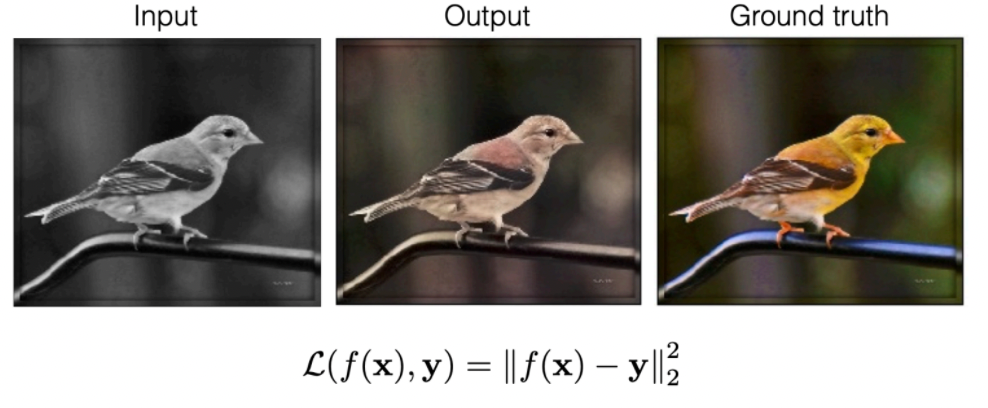

But since we are learning via regression, we could have averaging problem where if we have red/blue/green birds, then

| Given Data | Output |

|---|---|

|

|

One way to deal with it is to predict a distribution of discrete colors, so that we allow for more than one answer!

then basically we can output a distribution of possible for color for each pixel.

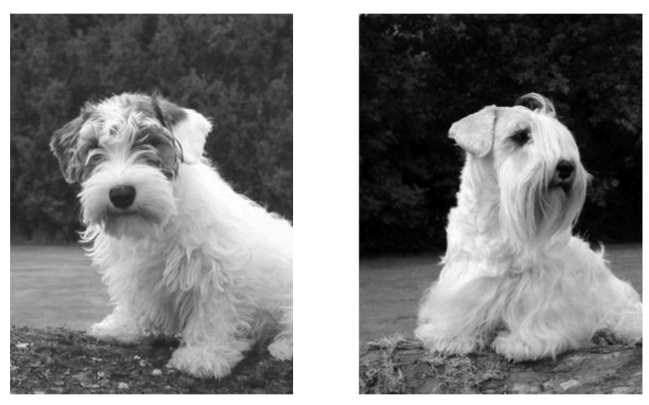

But still this type of model still have problems in biases:

| Training Data | Input | Color Prediction |

|---|---|---|

|

|

|

where:

- because many training data had dogs sticking tongues out, it paints a tongue as well on the input

Color Mapping for Tracking

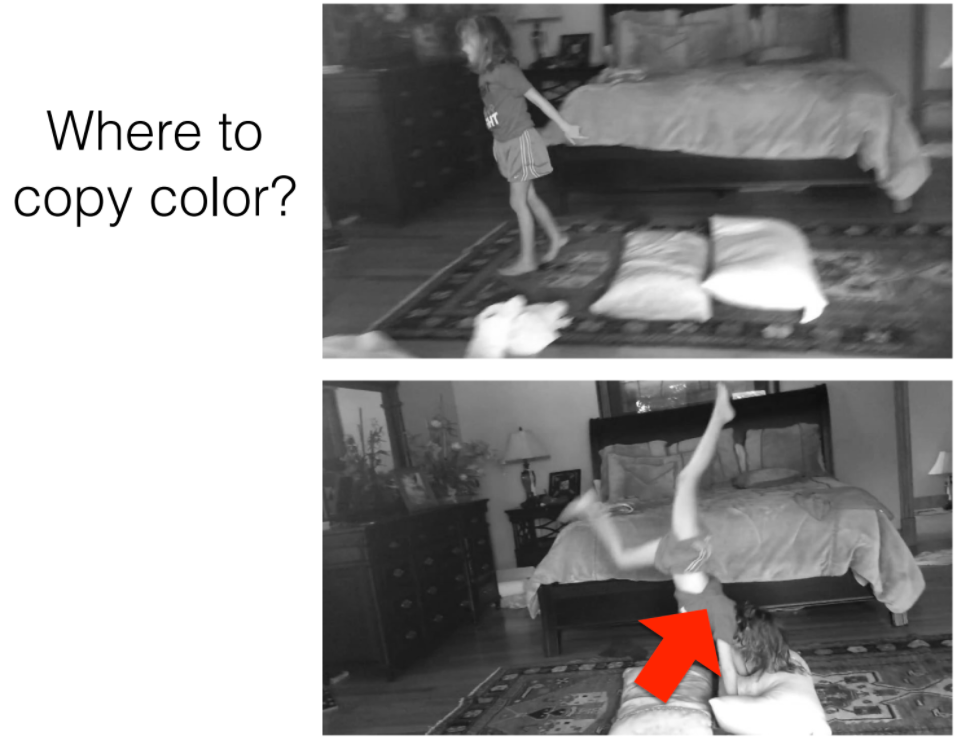

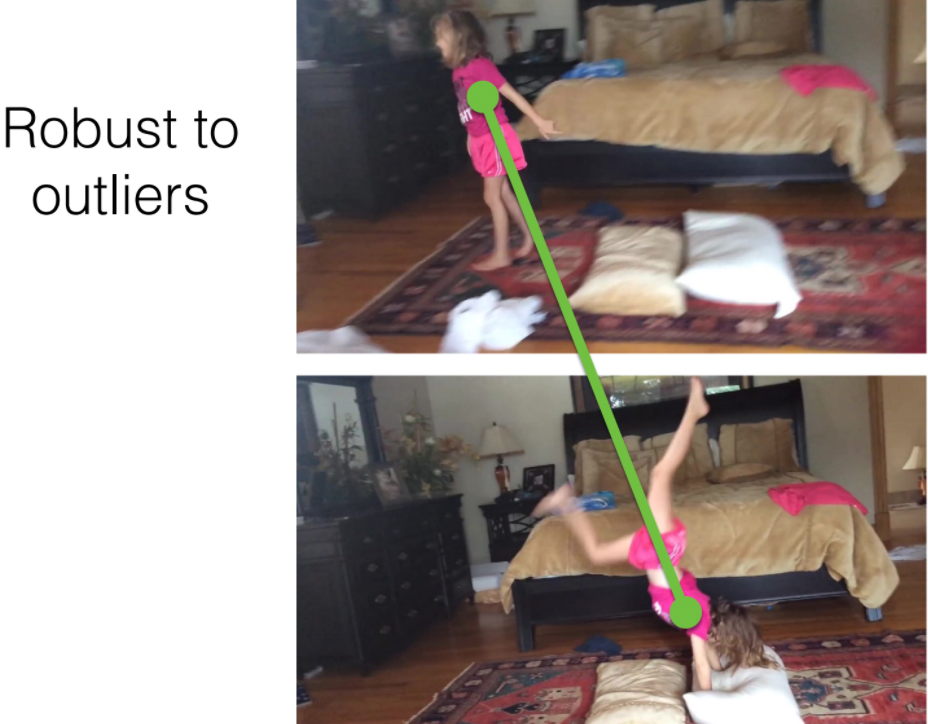

For image colorization, we ask the question:

However, in video, recall that we would want to consider coloring for the hidden purpose of tracking. Hence your question would be:

Where should I copy this color from?

| Learning Task | Label |

|---|---|

|

|

where notice that the solution to this colorization problem is tracking (hence we achieve our goal)

- we do not want to say that all objects of the same color are the same object, which is kind of what image colorization do

- here we learn color for tracking, hence this reformulation.

How do we color the video such that it learns where to map?

- essentially what the NN learn is a pointer, but the loss is on the color

For each pixel, we have some embedding.

- $i,j$ would represent the location of the pixel in each image

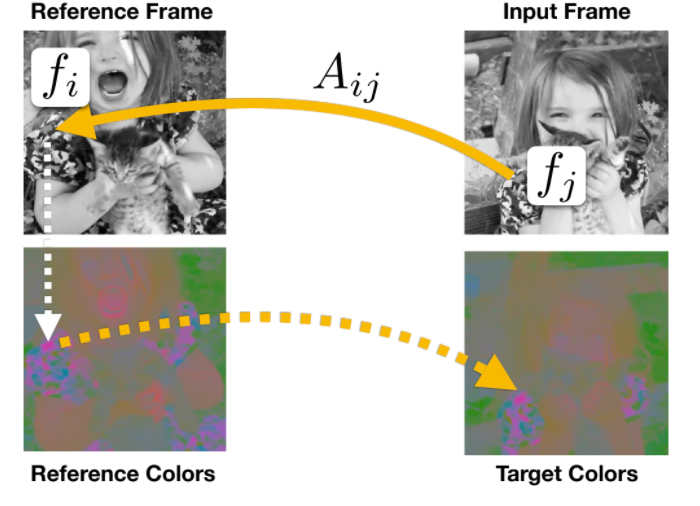

- for every pixel $i$ in frame 1, we want to know how similar is it (i.e. if same object) to pixel $j$ in frame 2, e.g. at a later time.

- Hence we get a matrix $A_{ij}$ for measuring similarity between every pair of pixel

- then, we want to assign same color to “similar” pixels by having a weighted sum

Therefore, the whether if a pointer exist between pixel $i$ and $j$ would be represented by similarity between $f_i$ and $f_j$.

Graphically, we are doing:

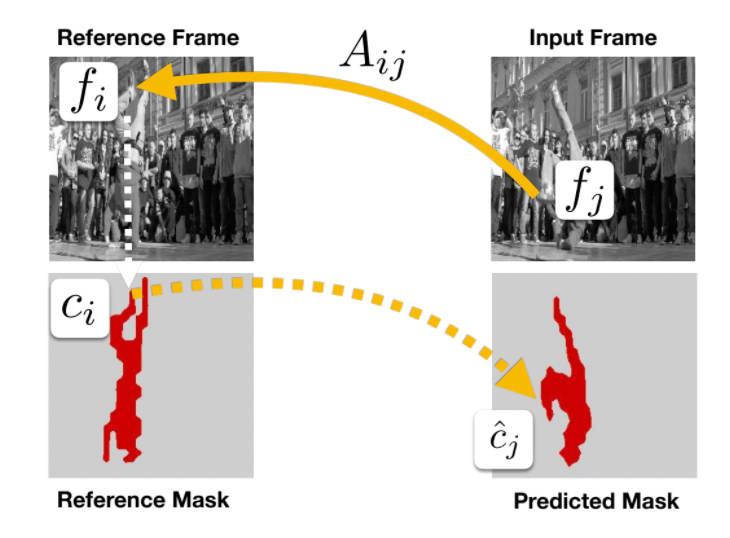

In more details: given color $c_i$ from reference and (learnt) embedding $f_i$ from refernce, and a input to predict, what is the color at each position $j$? We do this by:

\[\hat{c}_j = \sum_i A_{ij}c_i,\quad A_{ij} = \frac{\exp(f_i^T f_j)}{\sum_{k} \exp(f_k^T f_j)}\]essentially a weighted sum based on similarity of the embedding of each pixel. (note the analogy to self-attention mechanisms)

Then since we have the label already:

\[\min_\theta \mathcal{L}\left( c_j, \hat{c}_j | f_\theta \right) = \min_\theta \mathcal{L}\left( c_j, \sum_{i}A_{ij}c_i \,\, |f_\theta \right)\]so that

- for a particular video, our NN would be able to produce a pixel-wise embedding $f$ from its learnt parameters $\theta$

- once we have the embedding, we can color the image or we find object correspondance hence tracking by measuing similarity between $f_i,f_j$ between any two locations of between two frames!

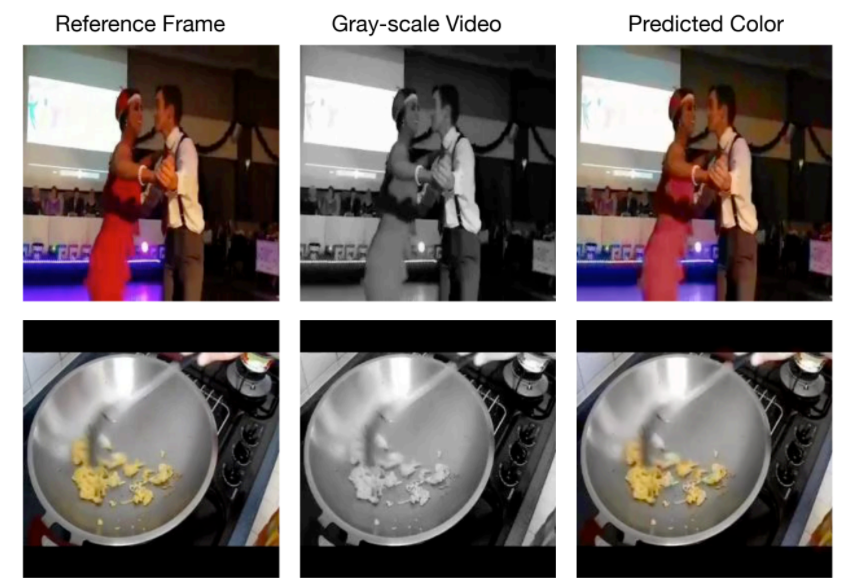

Example: using it to predict color

which implicitly learns object tracking. Therefore, if you need tracking information, you just keep a pointer by:

-

compute the $\arg\max_{i} f_i^T f_j$ so we know which pixel $i$ the pixel $j$ corresponded to

-

then convert an entire group of it as a mask

and let the mask propagate in your network to do other things. Some more result examples

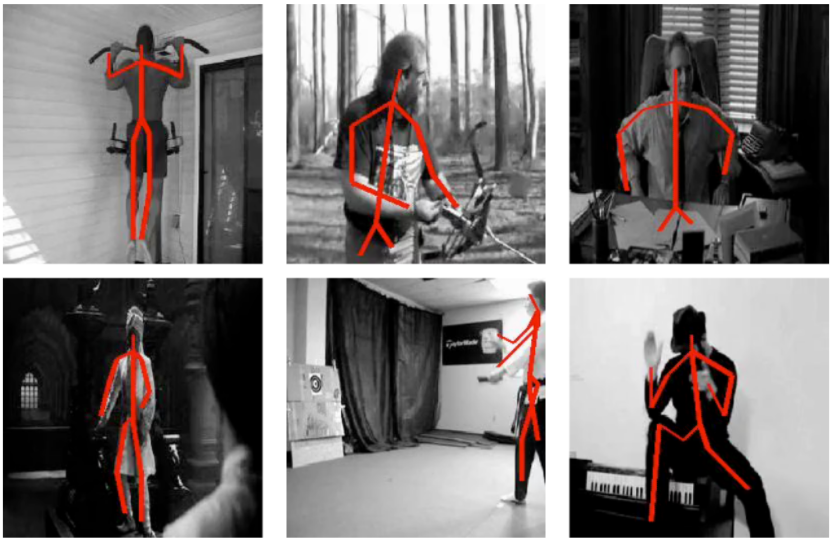

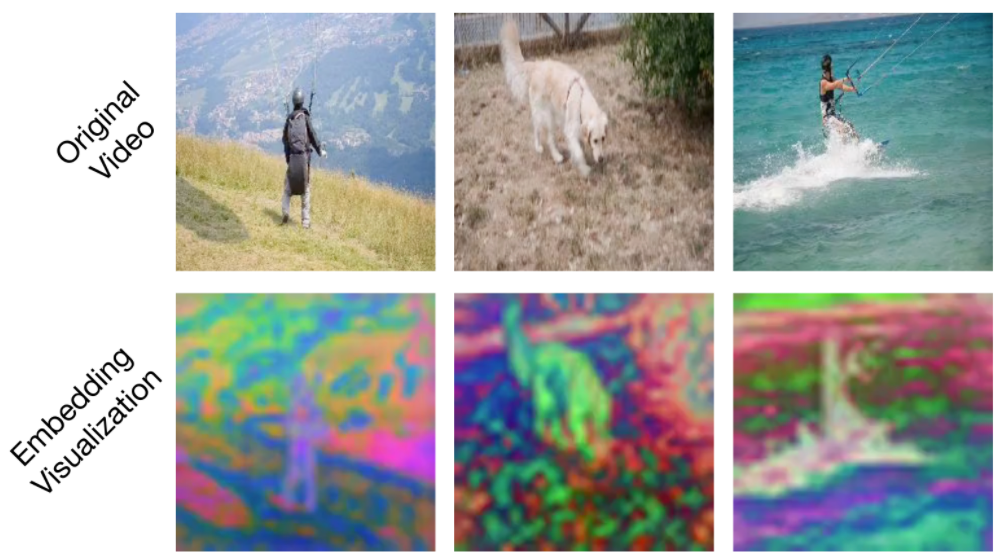

| Tracking Segments | Tracking Poses | Visualization of Embeddings |

|---|---|---|

|

|

|

where

- embedding in the third example refers to the $f_i$ for each pixel. Since $f_i$ is high dimensional, we needed to use PCA to reduce it to 3 dimension to superimpose on the original image. Note that this could also be useful for drawing a segmentation for objects in a video.

- note that the above notion of $\arg\max_{i} f_i^T f_j$ makes sense as the colors we found is dependent on the similarity between $f_i$ in input/reference image and $f_j$ of another frame

Interpretability

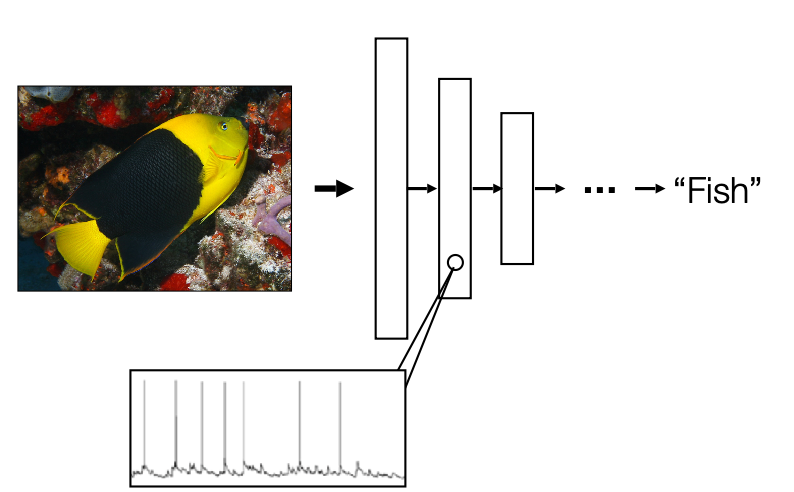

How to interpret deep learning architectures? Consider the simple example of

What are neurons in the network learning? What should it learn?

- those techniques below could also be useful for debugging your model.

This is an important chapter that covers many common technique used in real life to visualize what is happening in your model.

Grandmother Neurons in Human

It turns out that research shows there are specific neuron in your brain that represents your grandmother, a neuron in your brain that represents your friends, etc.

- done by inserting electrodes into brain and letting patients look at certain images. Hence recording neuron activities.

- recall that brain sends electrical signal around. Here it is sticked in visual system, so it responds to what people see and activates certain neurons.

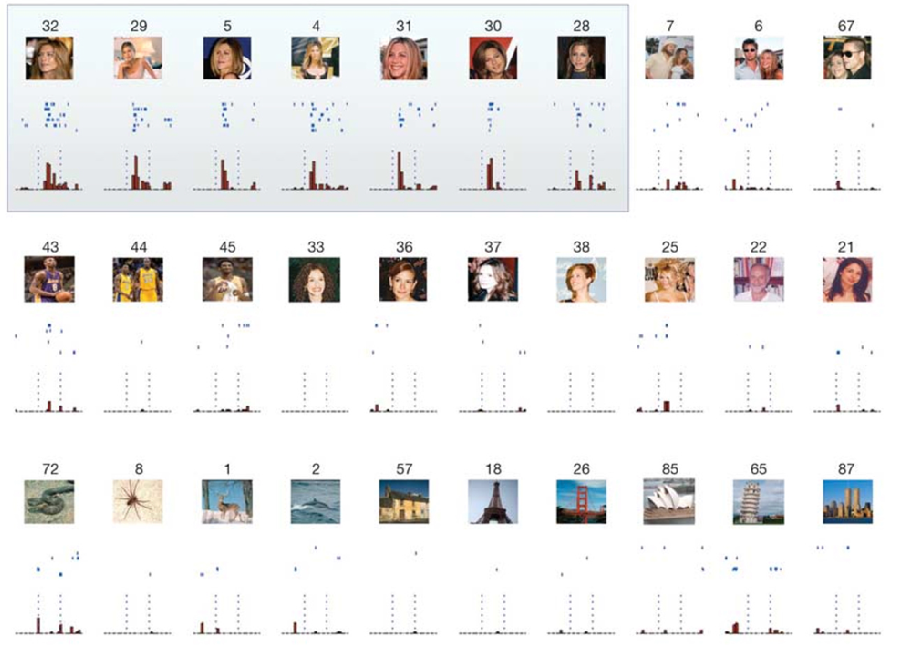

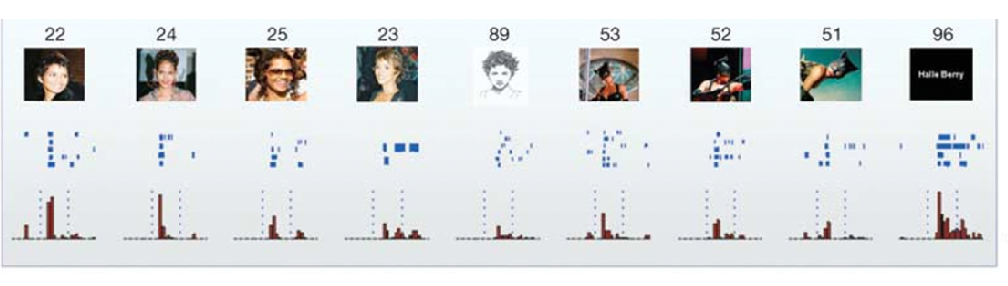

When flashing pictures of celebrities, there are neurons that would only fire for them:

where we see there are high activations for only a few neurons.

More interestingly, they are firing for the concept of a person:

so that it also fires for things like “sketches” Halle Barry.

- but the question is, if I take out that neuron, would I forget about Helle Barry? It is highly plausible that there would be redundancies in brain so that we don’t forget easily.

- but still the concept of a few/specific neurons being able to fire/activate for a certain class is important.

A grandmother neuron is a neuron that responds selectively to an activation of a high level concept corresponding with an idea in the mind.

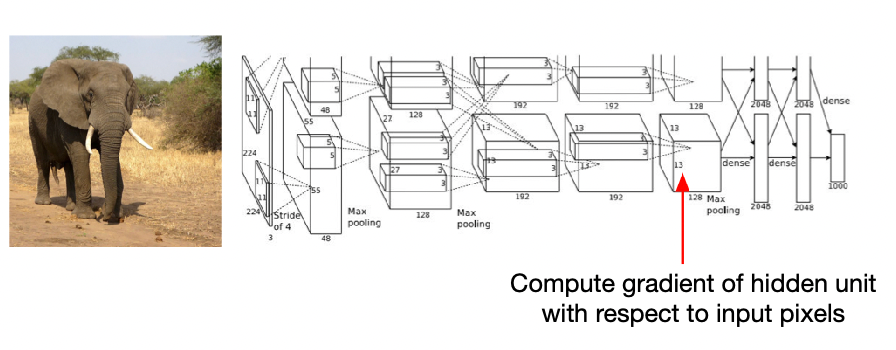

Deep Net Electrophysiology

Following from the above search, this hint on one way how we can interpret deep learning networks, by looking at what kind of image patch would cause the neuron to fire.

- other interpretation methods include Similarity Analysis, Saliency by Occlusion, etc.

First, we consider the activation values for each neuron:

then you can also get a graph like the above for a certain layer.

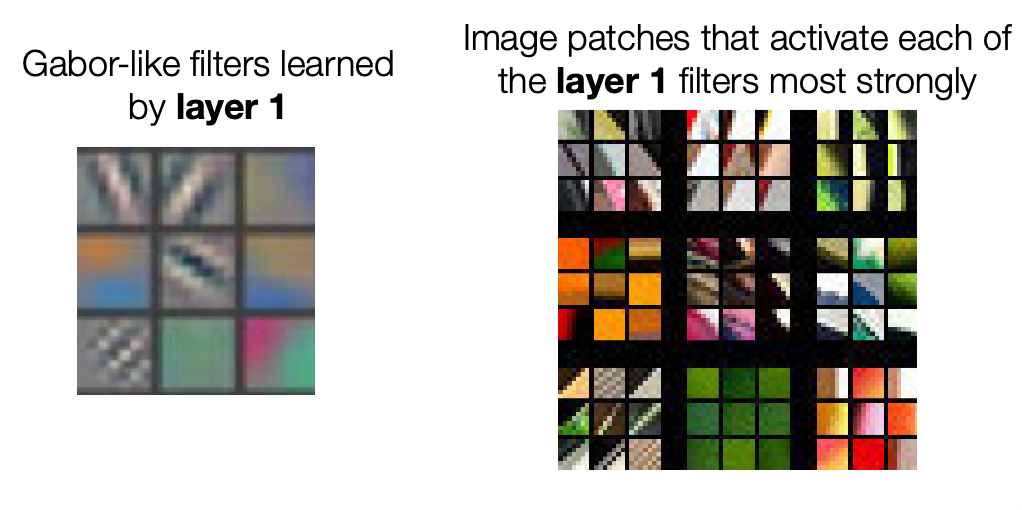

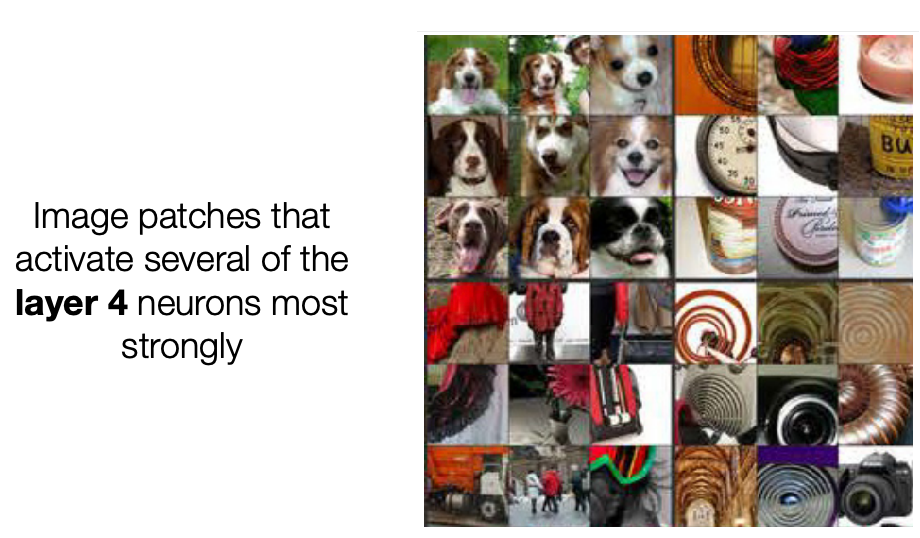

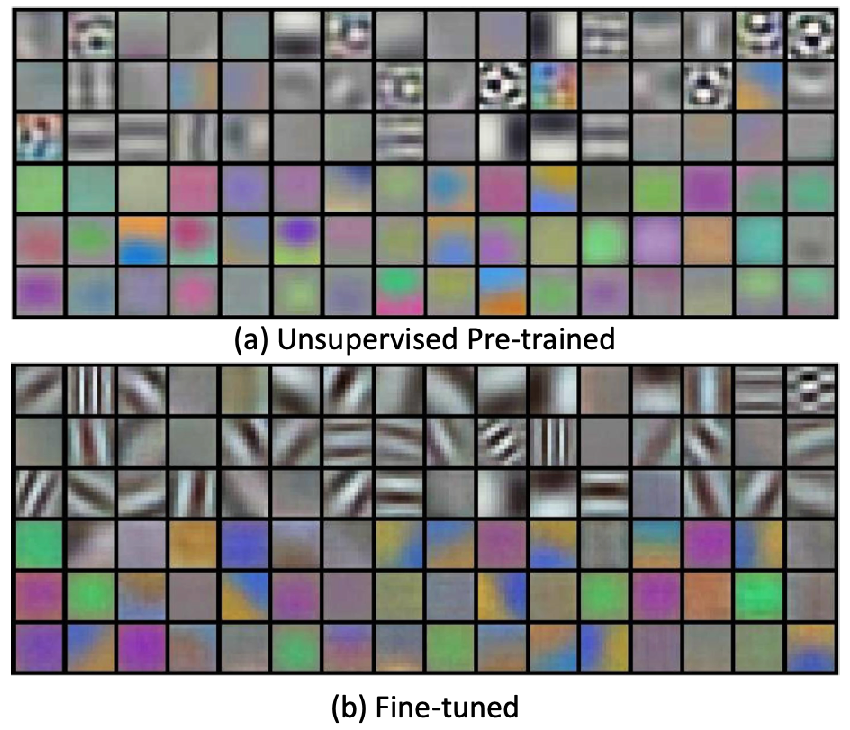

A more detailed example is visualizing the CNNs. Here we have each layer being a bunch of Convolutions, and we treat the kernel as neurons.

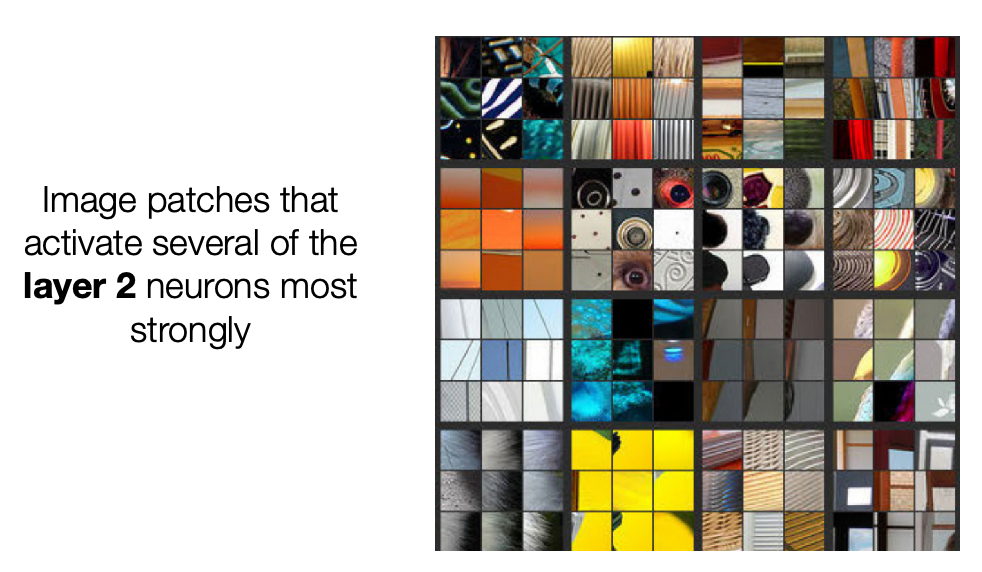

where essentially we record what image batches activate the first layer most strongly, and it seems that we are detecting edges. If you also do it for layer 2 in the network:

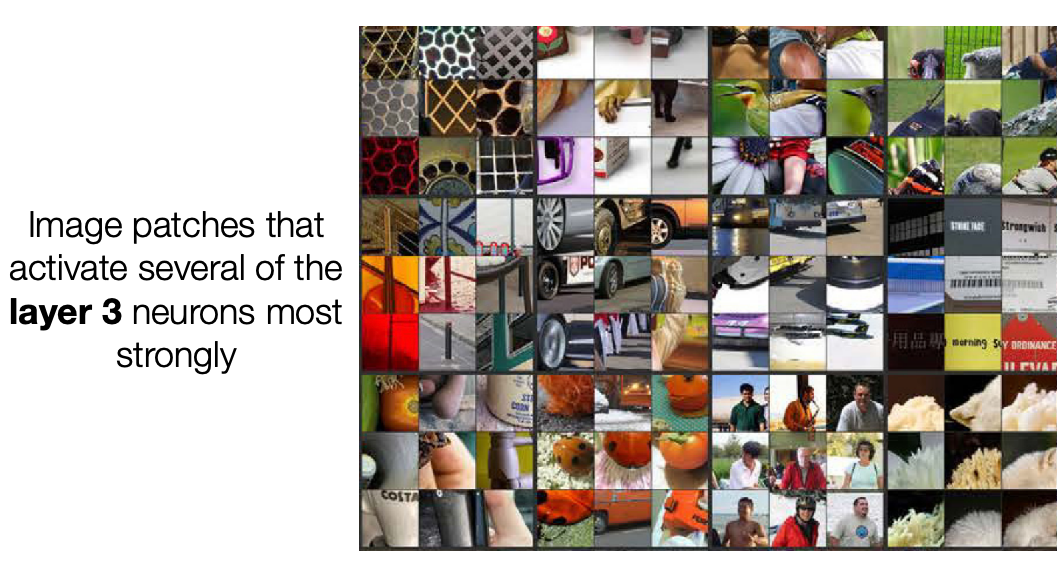

where it seems that those neurons are firing for patterns/shapes, and finally at layer 3:

where here we seem to be able to put shapes together and detect objects!

and etc. But notice that the image activated are axis aligned

Since rotations are linear transformation, then we should imagine that to not change any information hence learnt representation should have an arbitrary aligned axis?

- rotation can be performed by a linear transformation, so then a NN could have rotated and those representations. Then why are we still have the vertical alignment for maximal activation? i.e. the activation is lower if we rotated the image, which shouldn’t happen.

Therefore, this also motivates another view that instead of having a grandmother neuron specialized for a concept, could it be that we have a distributed view of a concept across neurons, so that the combination gives us the classification?

- then we can perhaps recover the extra degree of freedom carried in by transformation such as rotation?

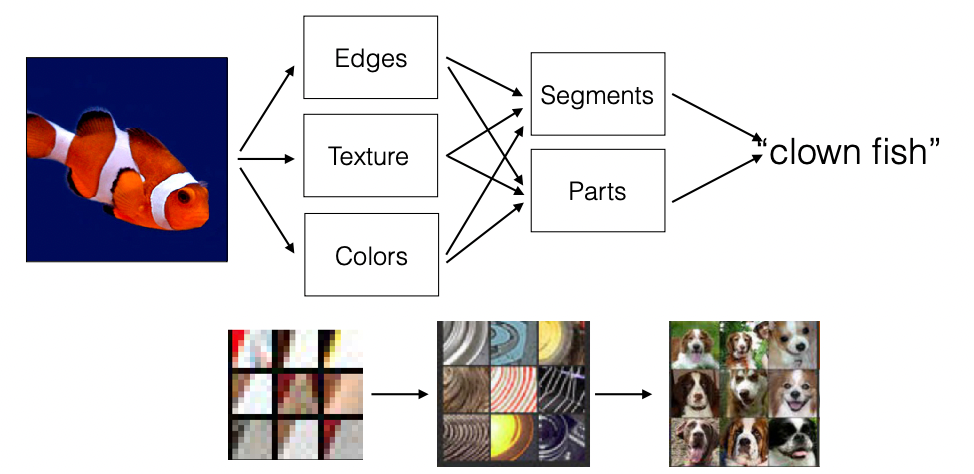

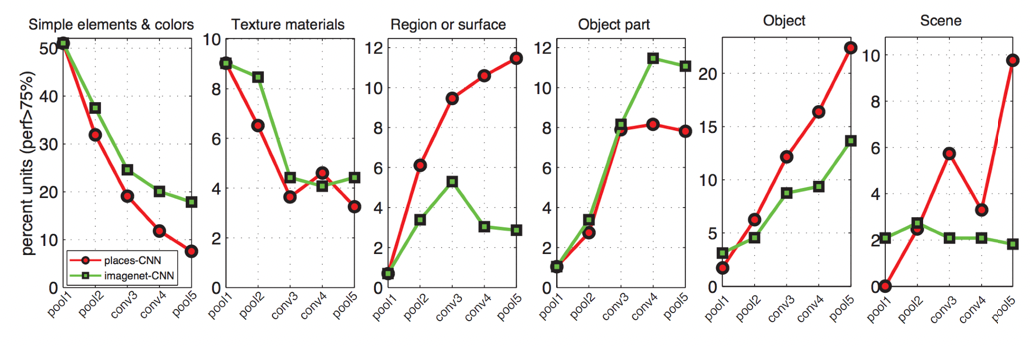

In summary, it seems that CNNs learned the classical visual recognition pipeline

We can also quantity this at each level:

where vertical is percent of neurons that activated when pictures described in the title is fed in. So here we see that:

- the deep layer we are in the model, the more higher layer concepts we are leanring.

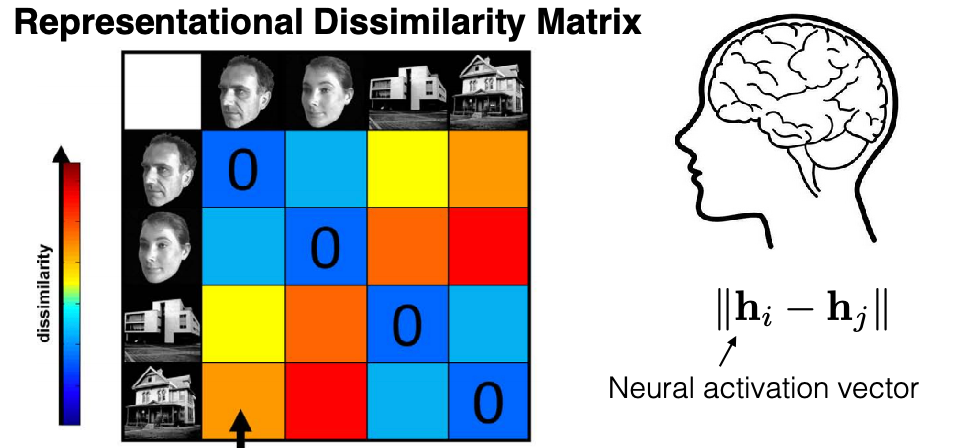

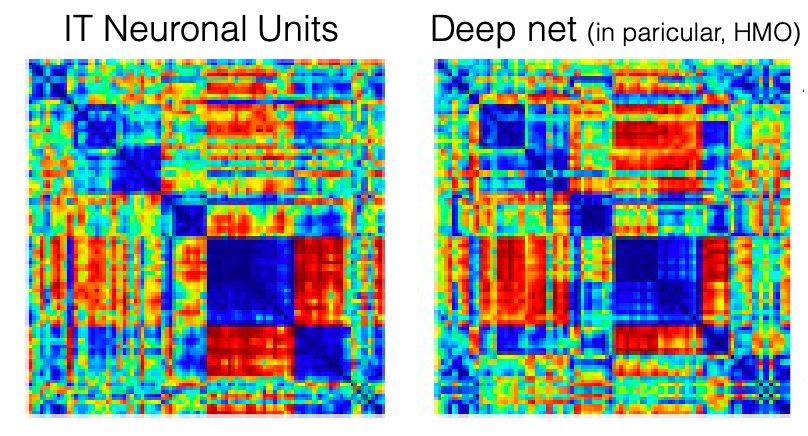

Similarity Analysis

Then if we take the embedding vector/hidden state of those images, we can also compare those vectors between images of different classes:

where we expect that similar images should have similar representations. Then we can use this to conpare compare thi

where here we can see what DNN thinks are similar or different objects. The correspondence (left is from people) is high!

- in some ways, this is surprising that machine is learning a similar way as human does

- but it could be reasoned that as humans are labelling those images. of course machines learnt a similar way.

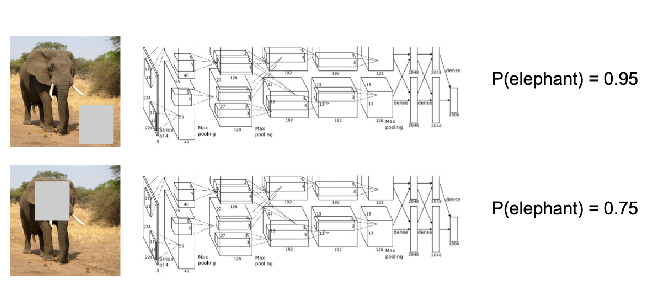

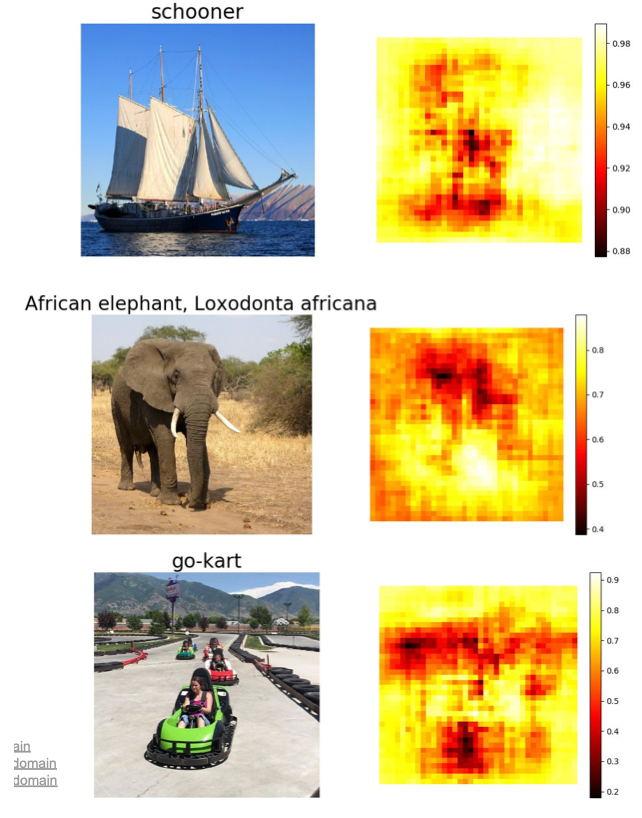

Saliency by Occlusion

What part of the image does the neural net make decisions on? Which part of the elephant did the neural net use to determine?

One simple idea is to blocking of several regions in the image, and consider how much does the score go down when each region is blocked

Then doing it over all regions:

where we can basically identify:

- which part of the image blocked out, still has high confidence

- then the inverse of the number would represent importance

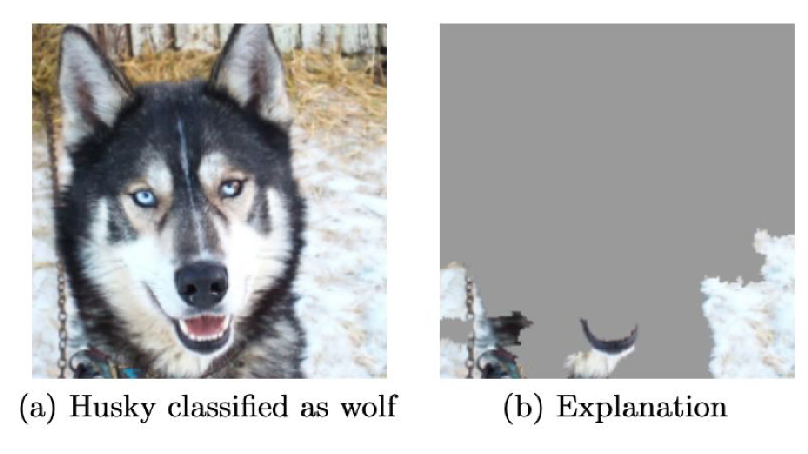

Another intuitive approach would be to answer the following question.

What is the maximum number of pixels I can mask out so that the machine can still classify the image?

An example of answering the above question would be:

so in this case the neural net is not learning the correct thing.

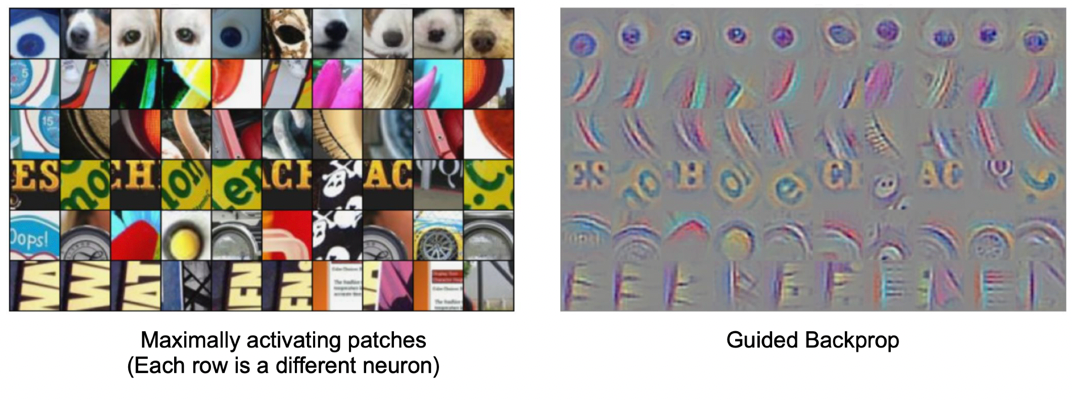

Guided Backpropagation

What pixles can I twiddle such that the resulting clasification is no longer correct?

Then this results in

Guided backprop: Only propagate pixel if it has a positive gradient backwards, i.e. activation increases if this pixel changed. Truncate negative gradients to zero.

- the reason why we truncate negative gradients is because we want to find which regions cause the object/find causation relationship, not the regions that do not cause it.

Visual examples of what we are doing:

Results:

where in this result, we are doing:

- patches found using the “Grandmother” neuron procedure, i.e. maximum activating patches

- from those patches, we perform a guided backpropagation to know what aspects of those patches that caused the maximum activation

You could also do only a guided backprop on the whole picture.

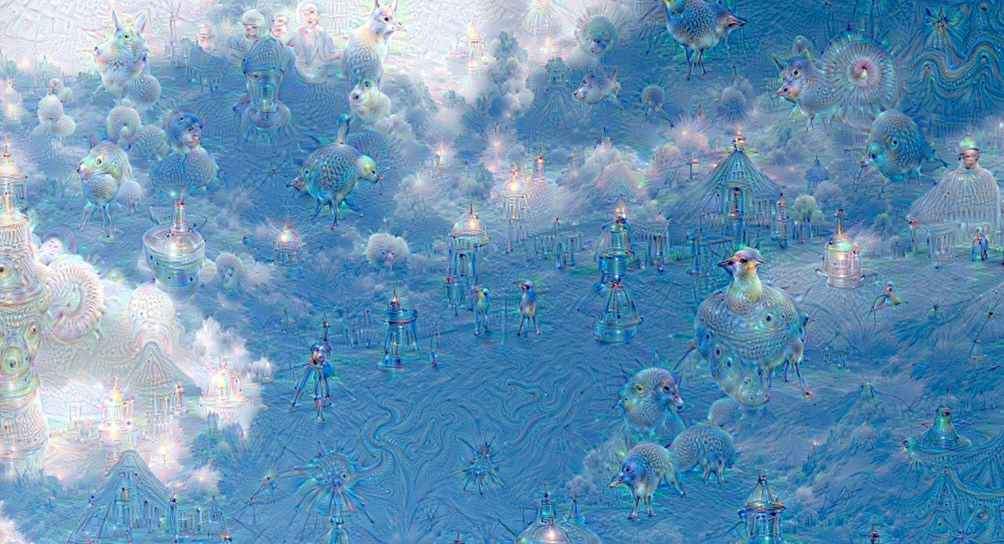

Gradient Ascent/Adversarial Attack

Given a trained model, what image does the network think is the most representative/likely of class $k$?

Then we consider:

\[\max_x f_{\text{neuorn}_i}(x) - \lambda ||x||^2_2\]where $f$ would be the activation function for each neuron

-

$x$ would be input to each neuron, which corresponds to certain pixles of the image

-

the regularizatoin is needed so that $x$ would be at least in the visible range, as otherwise we can go towards infinity

Then eventually we do a gradient ascent to find the “best representation for each class”. Results look like:

Then the “fun” things people could do is that we can try to modify an image such that some class $k$ would be activated for a neuron:

| Original Image | Modified Image using Gradient Ascent |

|---|---|

|

|

where in the right we are modifying images so that the model would have triggered activations of many classes you like.

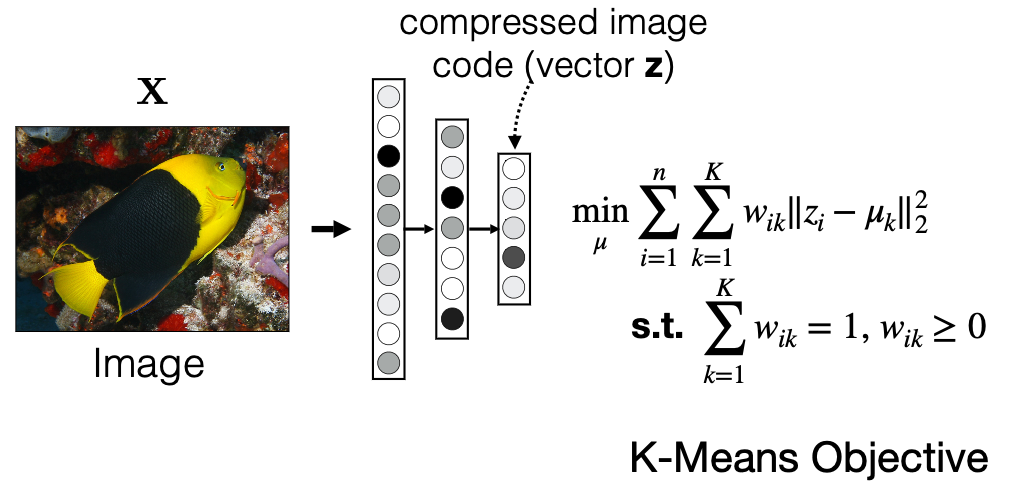

Self-Supervised Learning

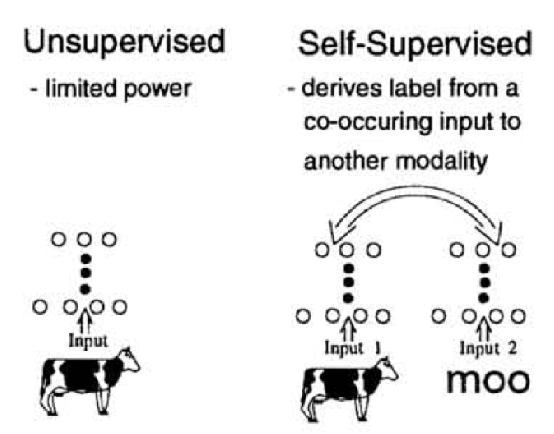

One example we have seen before would be how to use color for tracking, which turned the task into a self-supervised/unsupervised task. Here we see some other generic unsupervised methods used for downstream tasks.

-

such as unsupervised segmentation $\to$ object detection.

-

e.g. representations learnt can then be used for clustering. We can use the learnt $h=z$ hidden vector for k-means

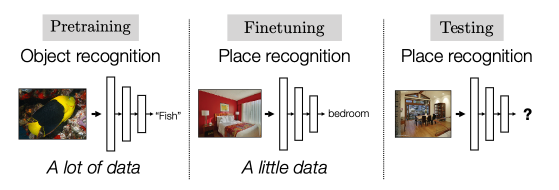

One simple architecture used would be similar to the process of fine-tune a pretrained model:

where the key point is that finetuning starts with some representation learnt from a previous task hence:

- we aim to construct a network that can learn useful representation $h$ of images $x$ in an unsupervised way

- then use that representation $h$ as a “pretrained network” for fine-tuning on other tasks

hence here we are mostly concerned with:

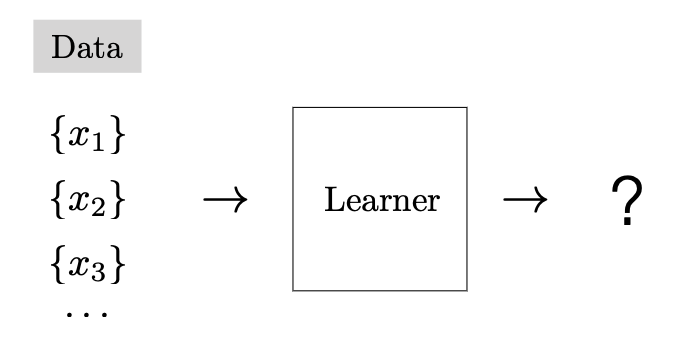

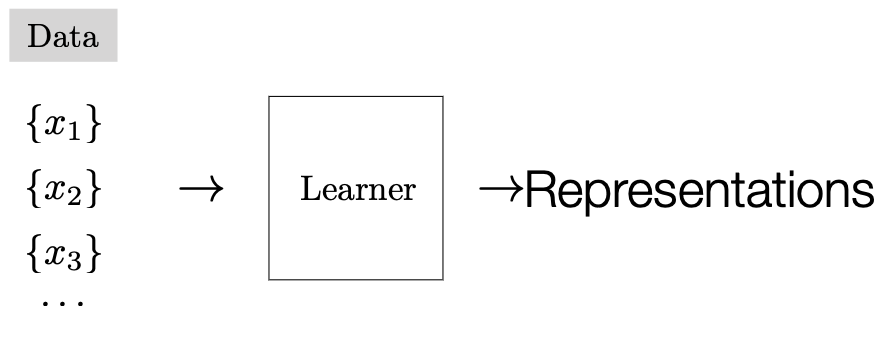

| General Self-Supervised | Self-Supervised Representation Learning |

|---|---|

|

|

Why is having some representation $h$ useful?

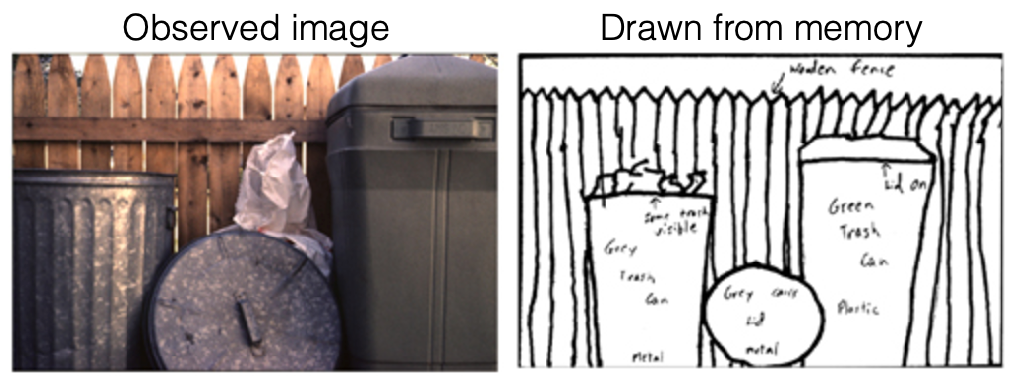

Consider the example of remembering the observed image and then drawing from scratch

notice that:

- when most people draw it, we automatically extrapolated: we drew the entire rubbish bin when we only observed part of it

- the same happened for videos, when we are only show part of a video and were asked to describe it, we extrapolate unseen scenes.

Our mind is constantly predicting and extrapolating. Self-supervised learning aim is to be able to extrapolate information/representation from the given data.

Common Self-Supervised Tasks

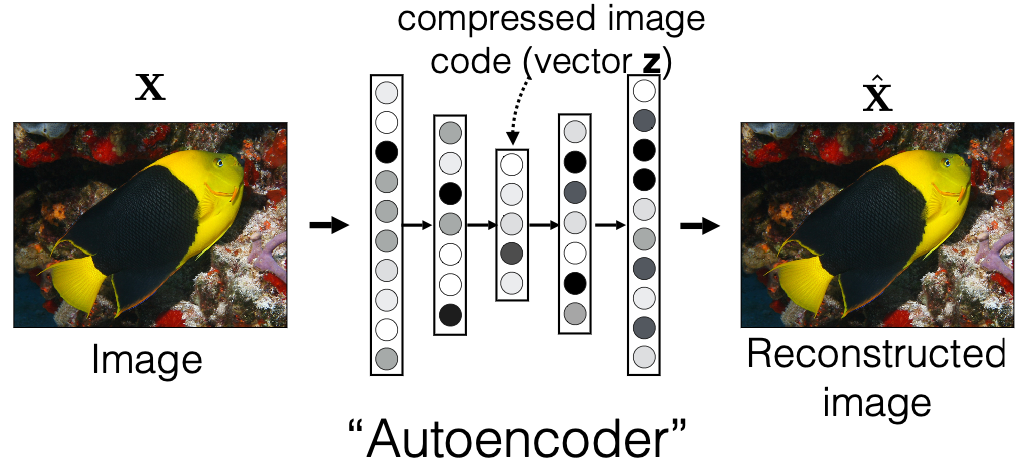

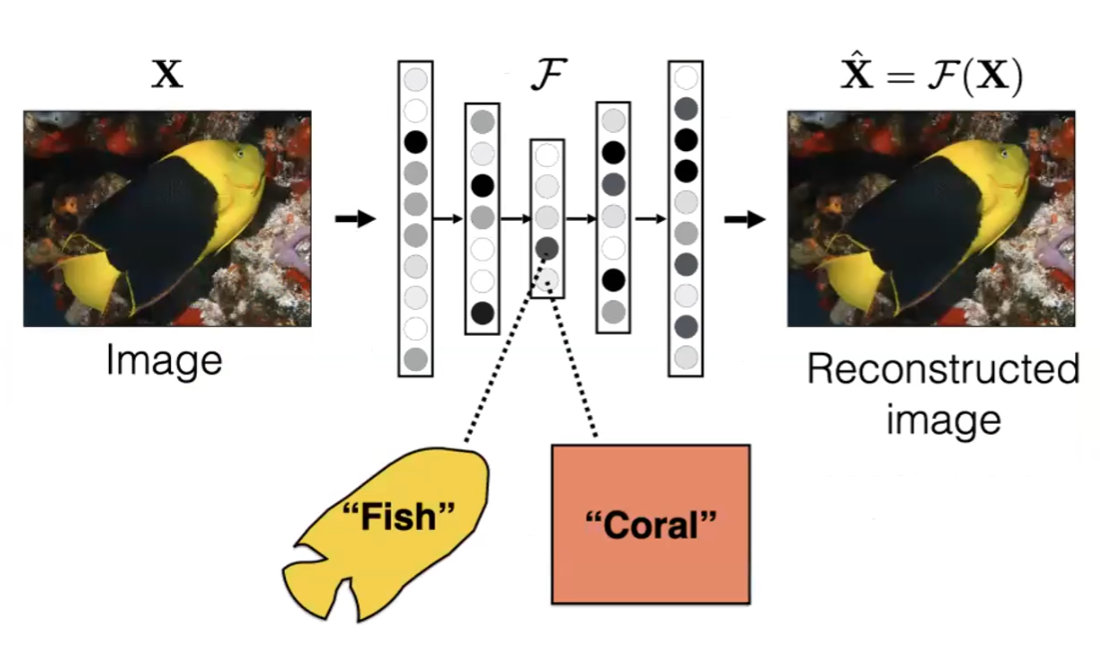

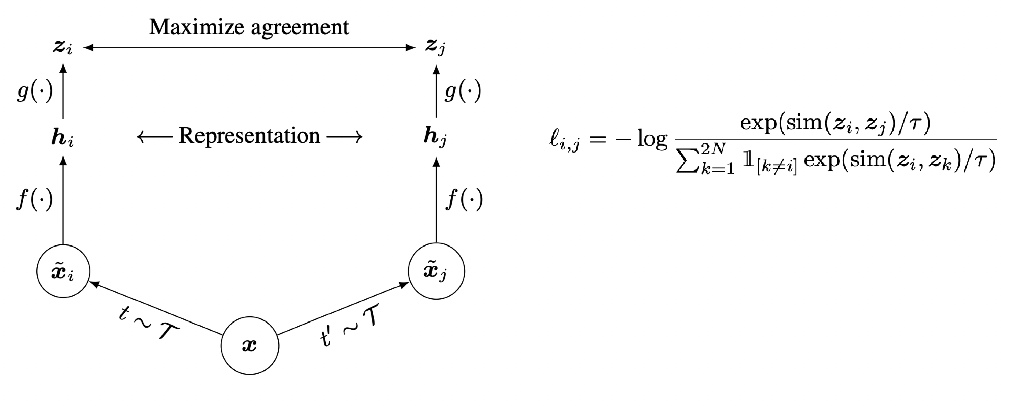

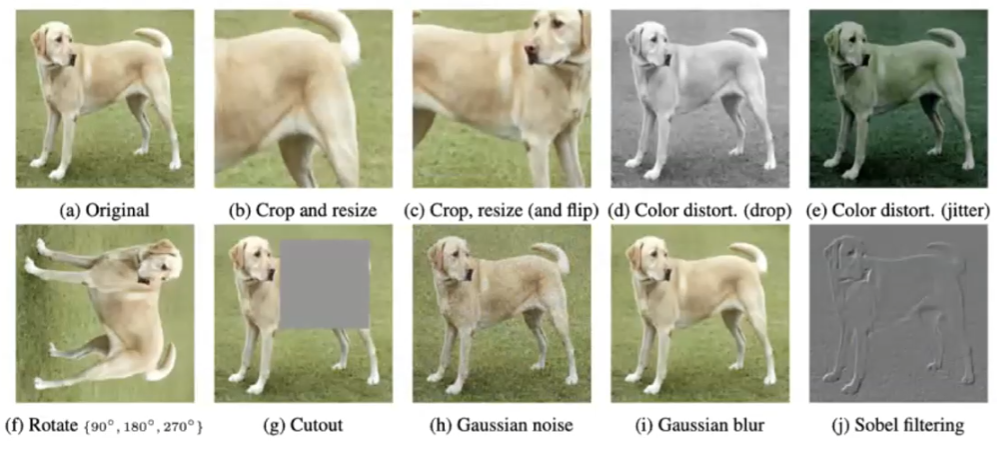

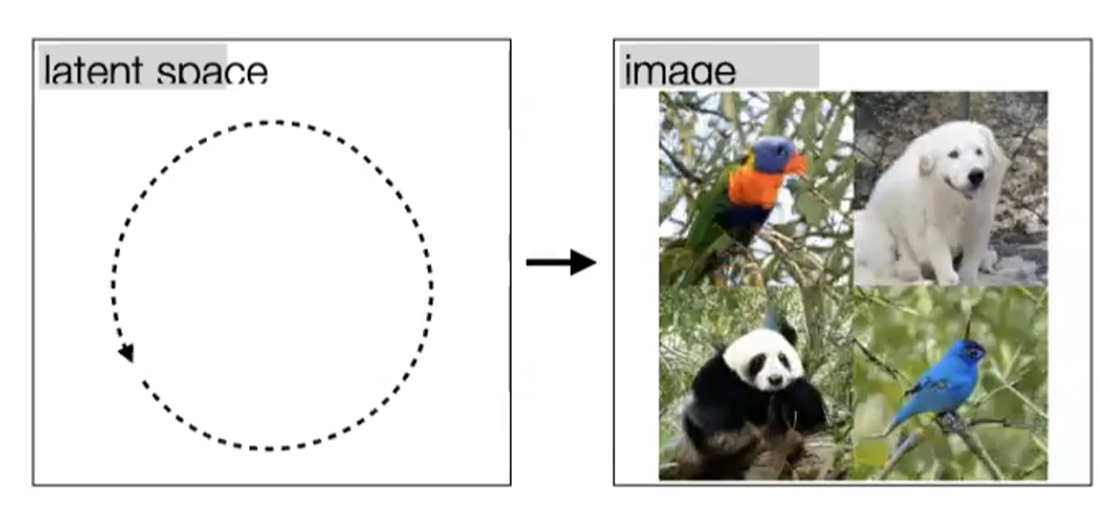

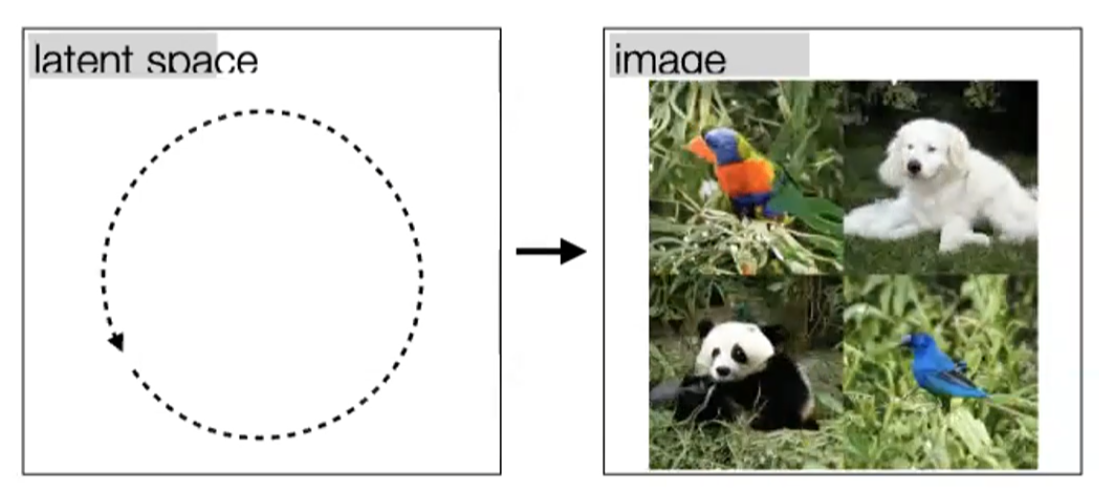

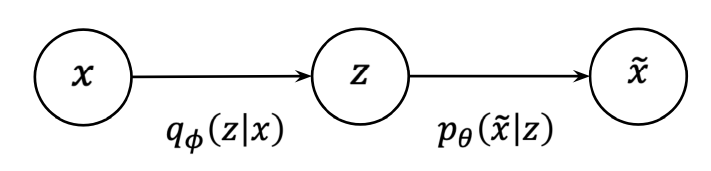

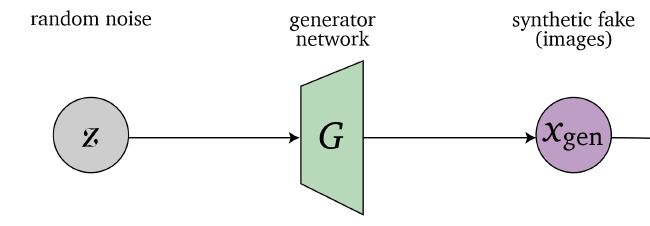

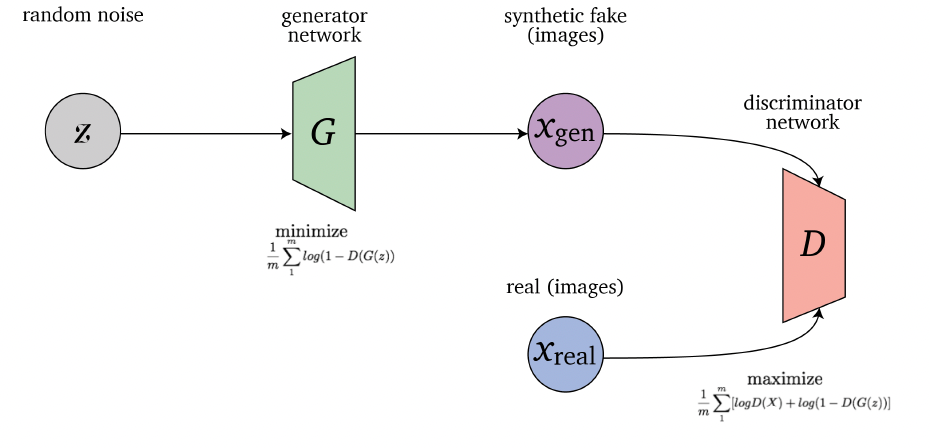

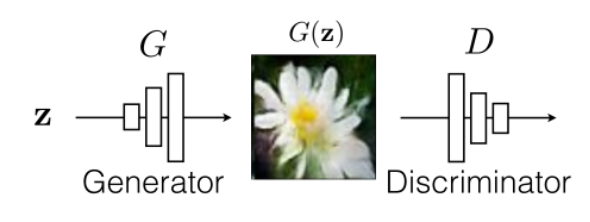

How do we get that representation $z$ or $h$? Here we will present a few:

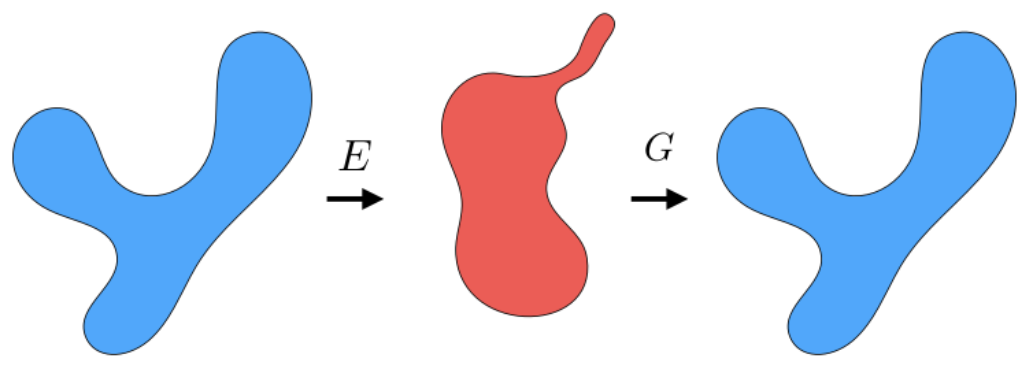

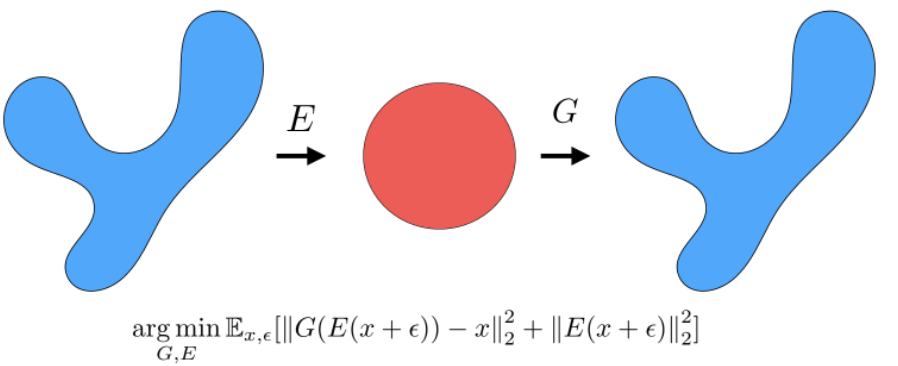

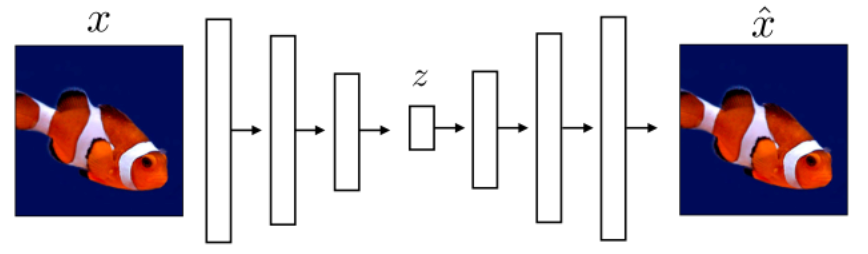

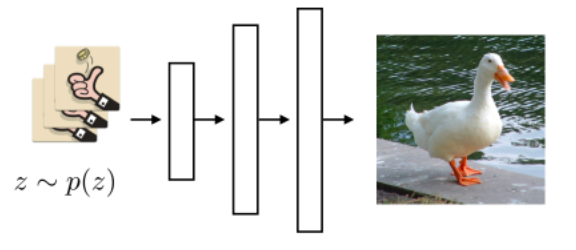

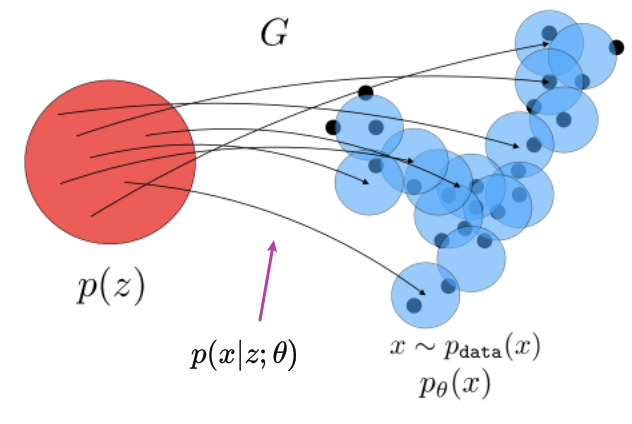

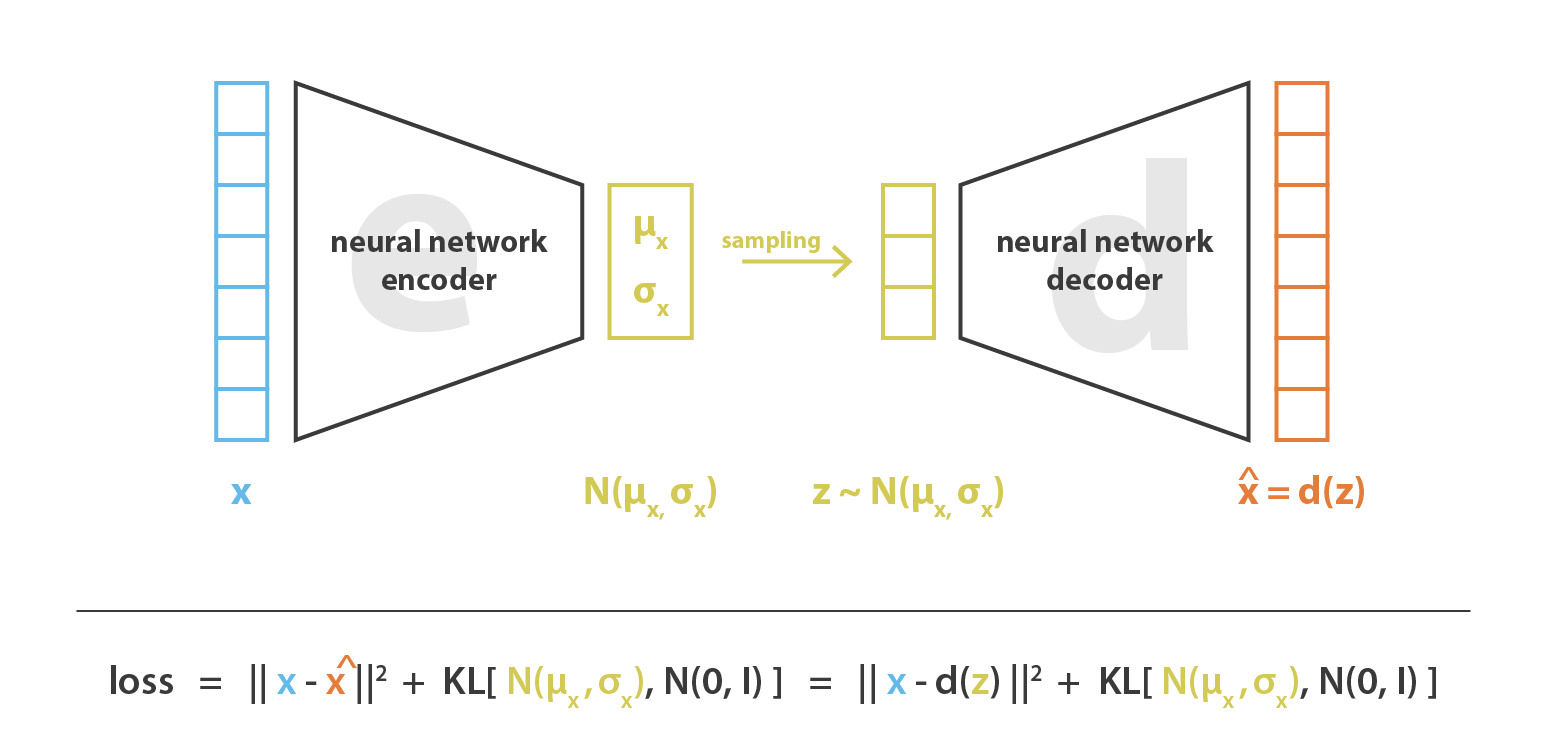

- find a low dimension $h$ such that reconstruction is the best: autoencoder

- find a network $f_\theta$ that outputs representation of both image and audio of the same video, and maximize correlation

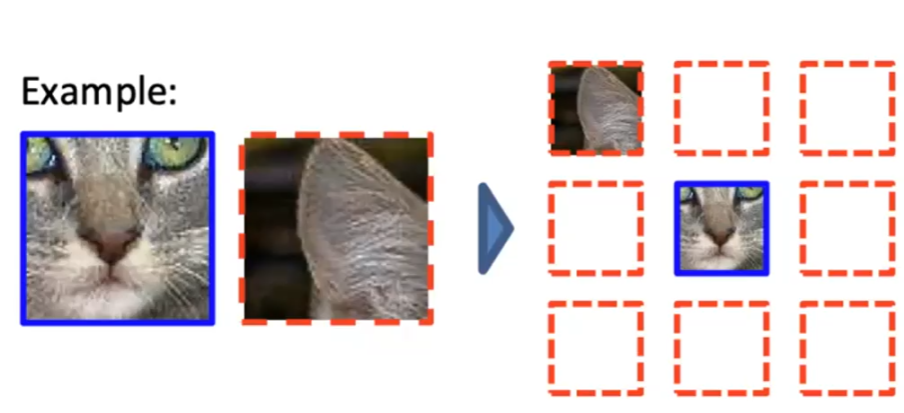

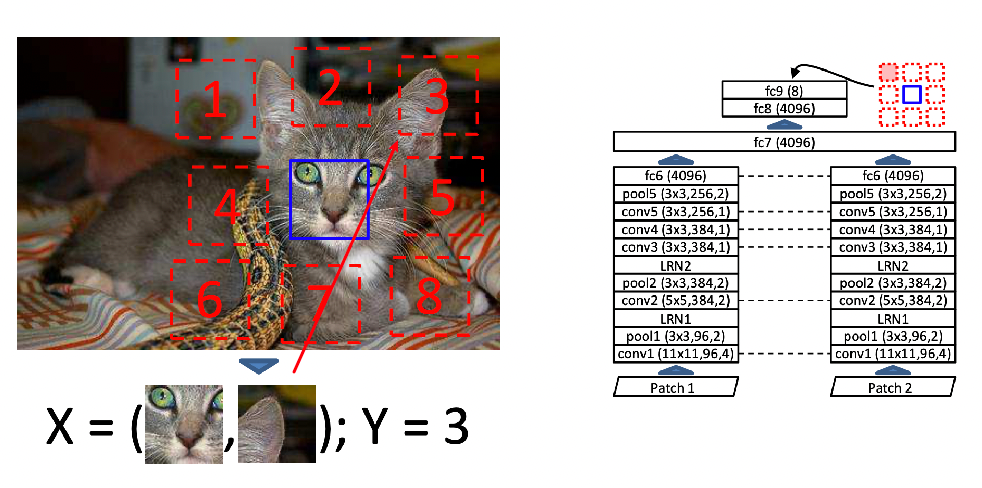

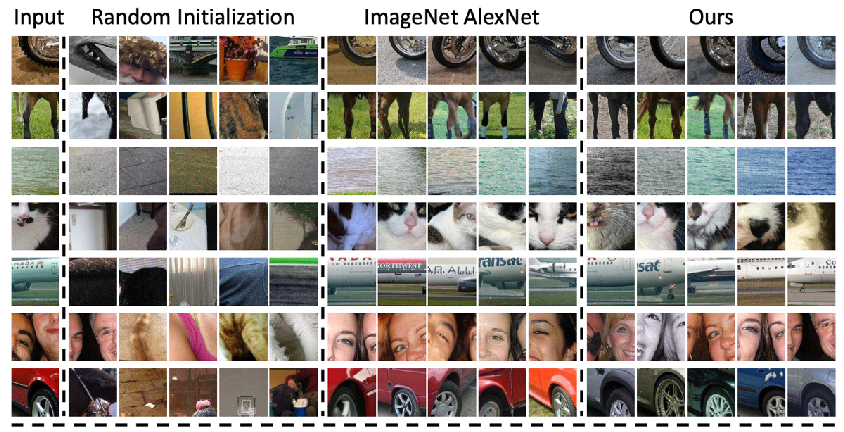

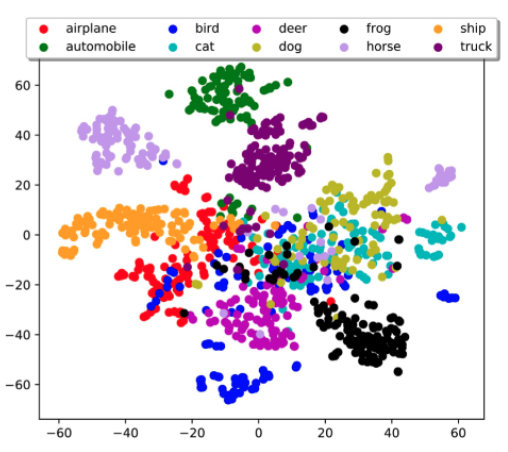

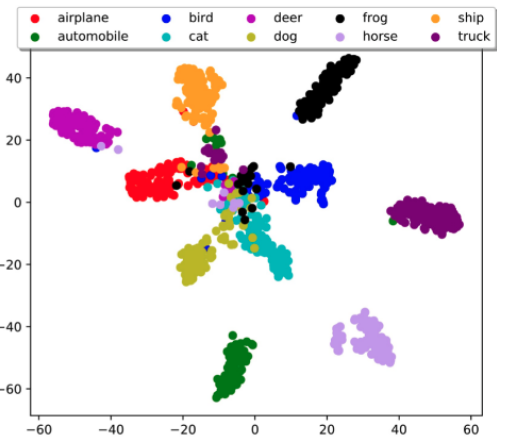

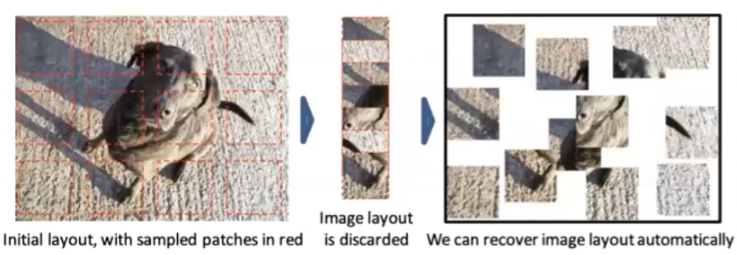

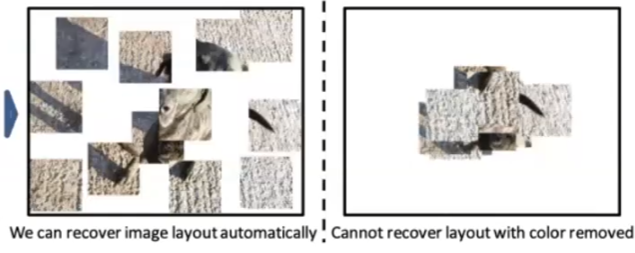

- find a network $f_\theta$ that outputs representation for context prediction, i.e. predicting relative location of patches of an image

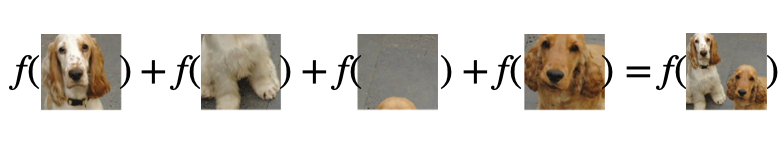

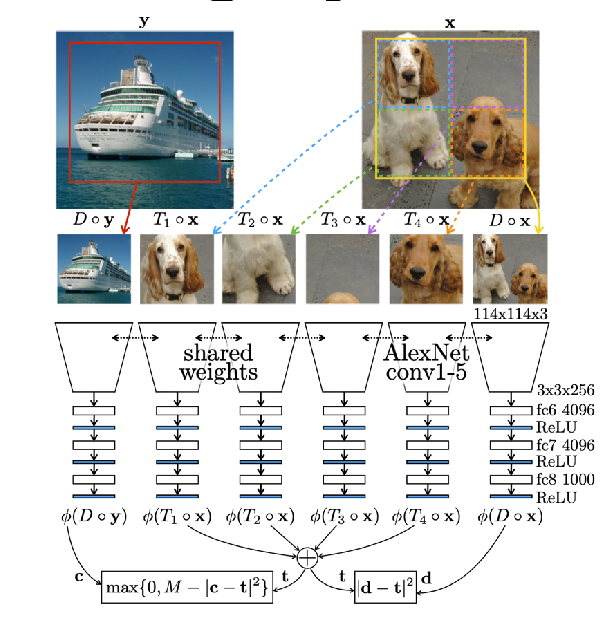

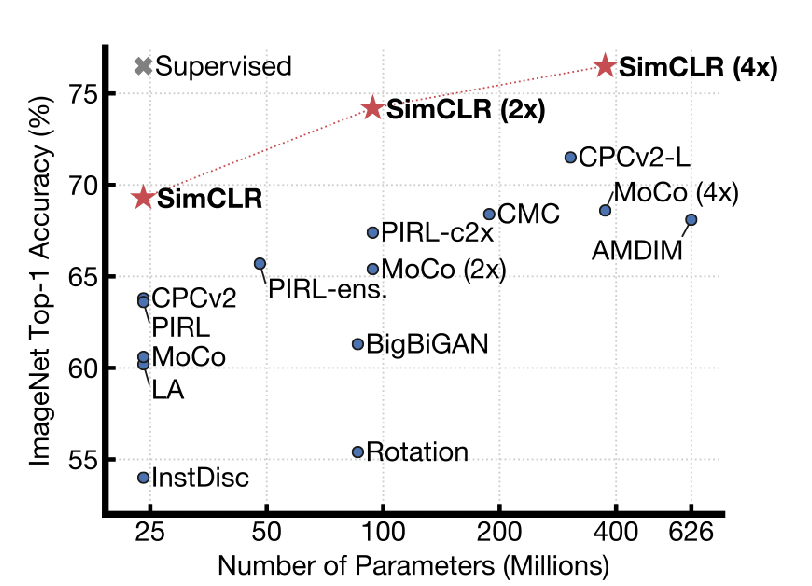

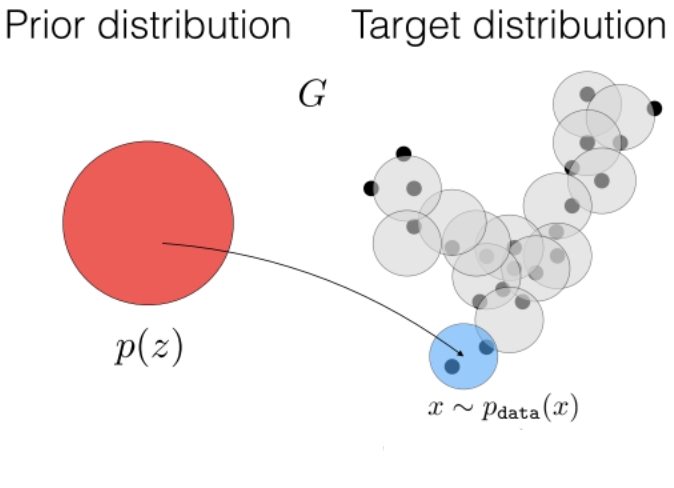

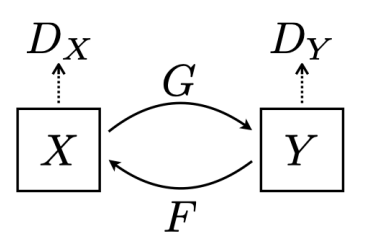

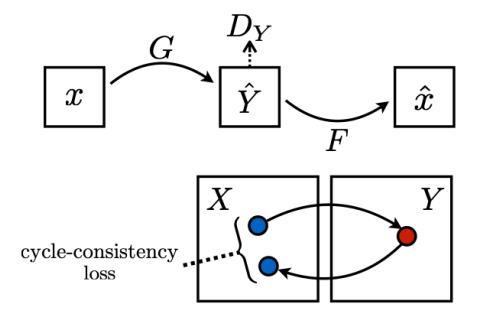

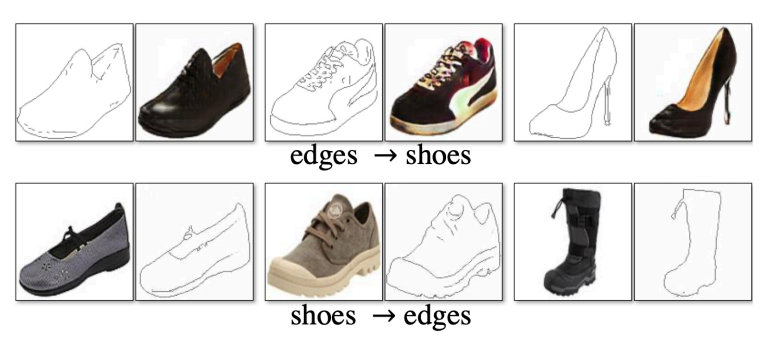

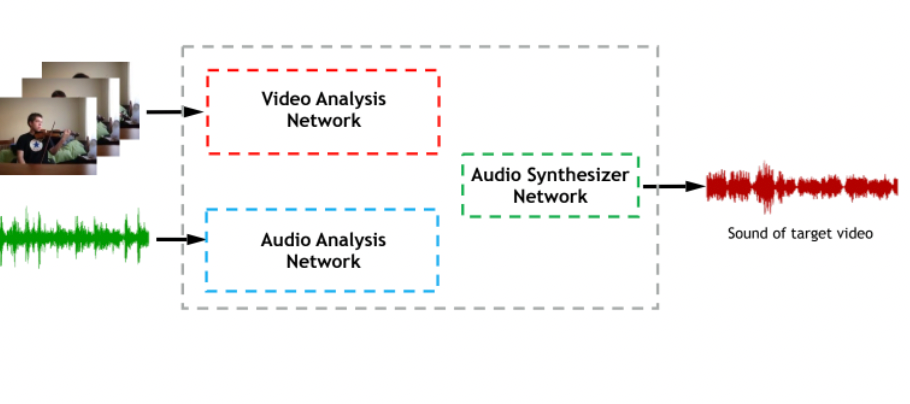

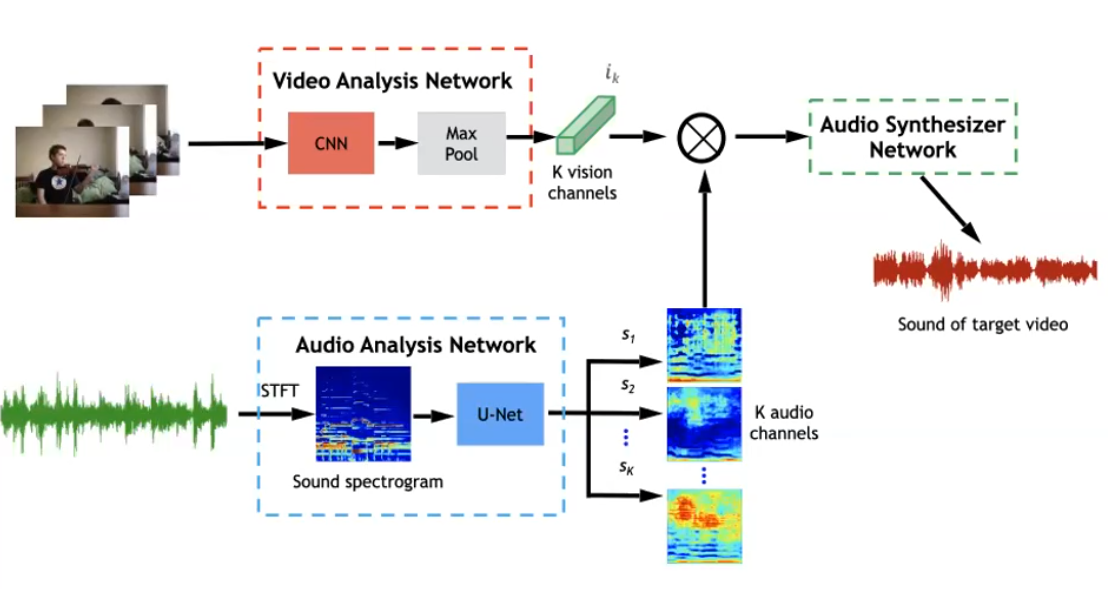

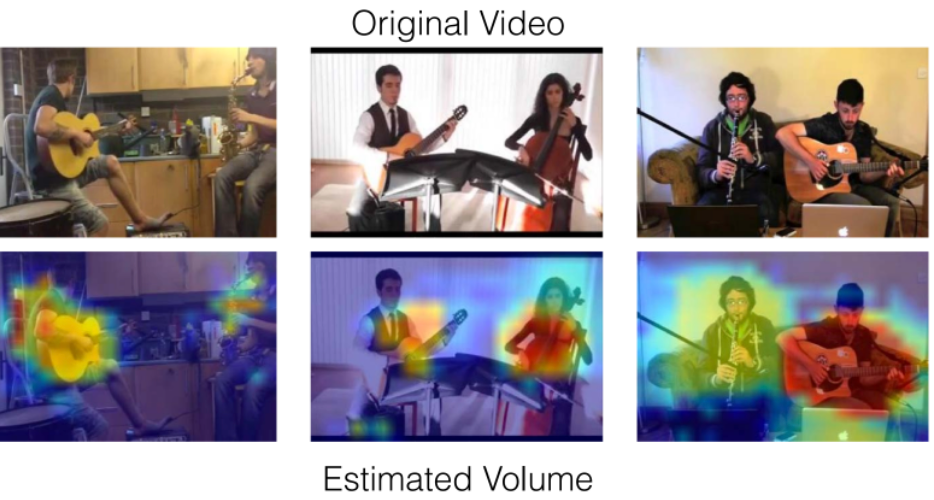

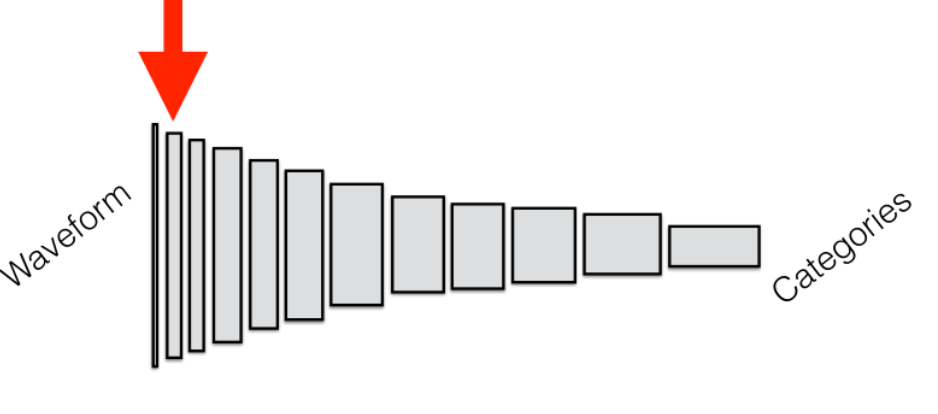

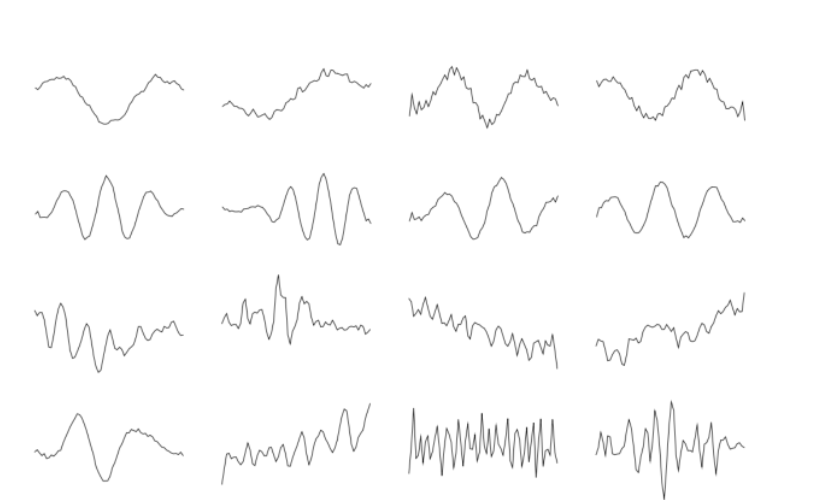

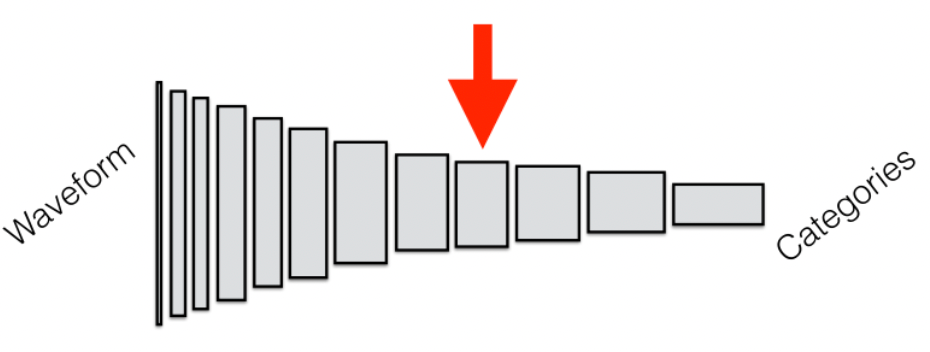

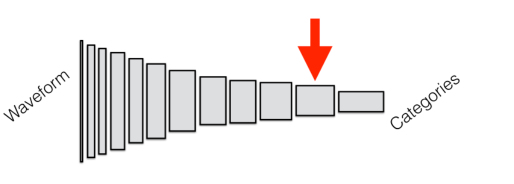

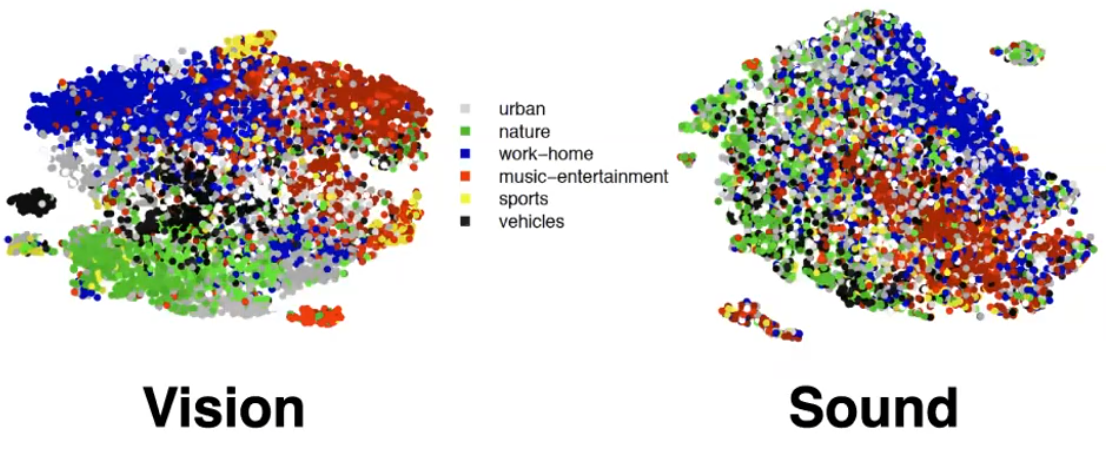

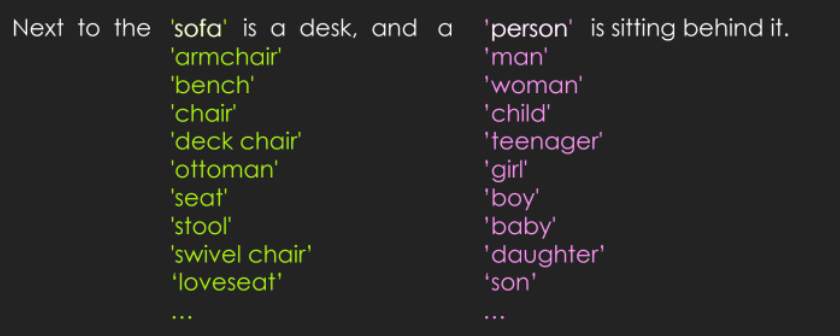

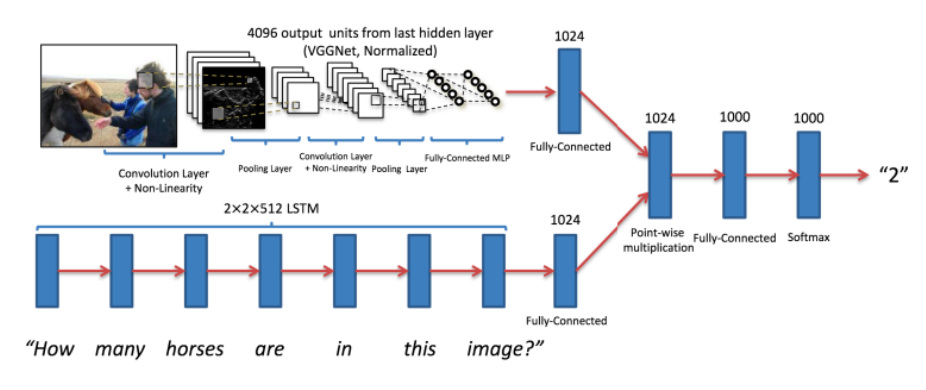

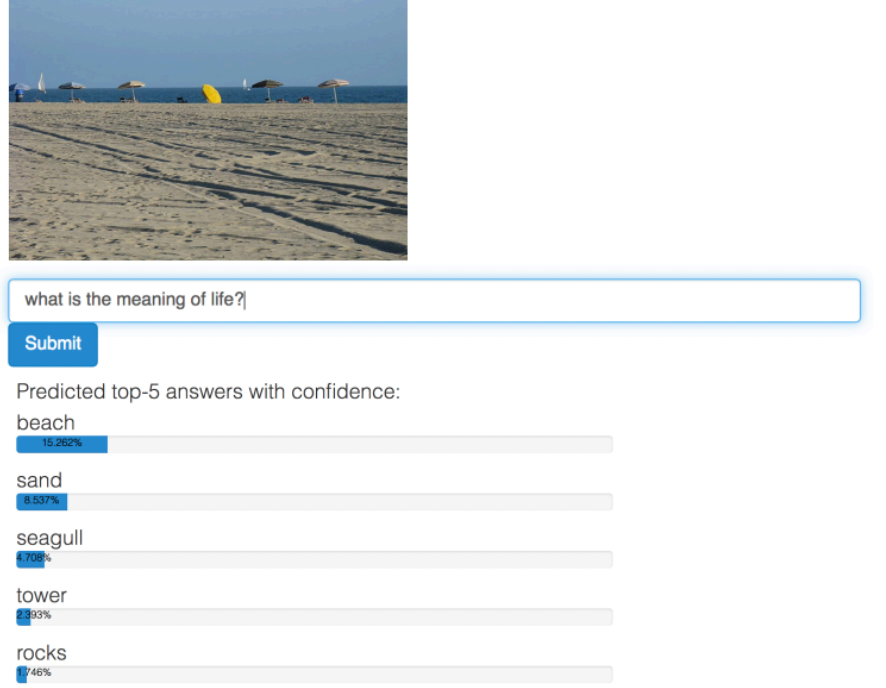

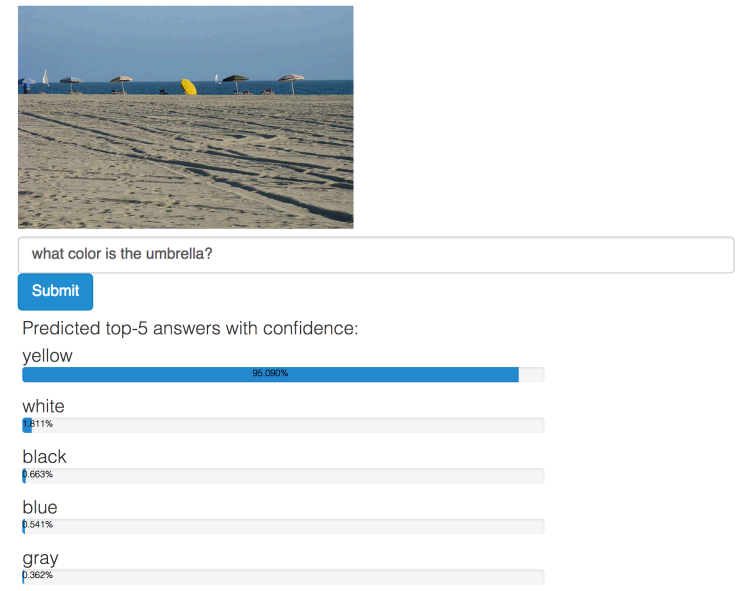

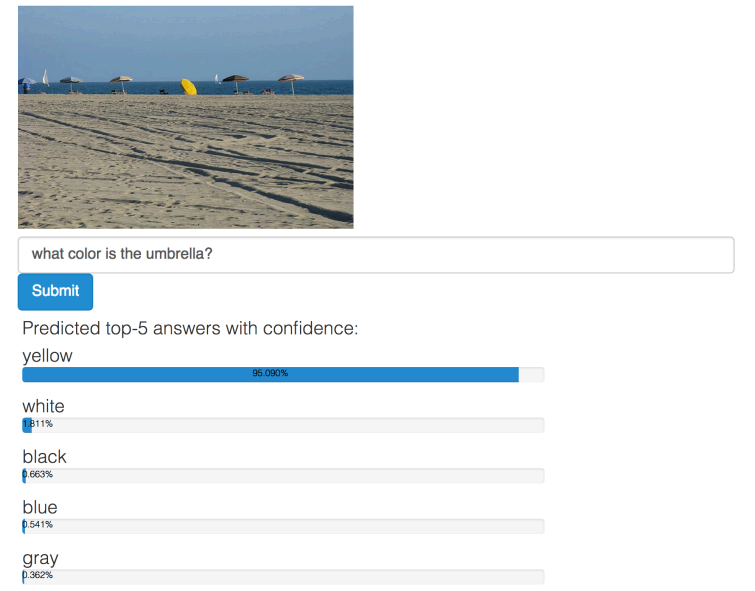

- find a network $f_\theta$ that outputs representation that can be added, i.e. sum of representation of parts of an image = representation of an image