STFCS234 Reinforcement Learning

- Introductions

- Intro to Sequential Decision Making

- MDPs and MRPs

- Policy Evaluation with Unknown World

- Model Free Control

- Value Function Approximation

- Deep Reinforcement Learning

- Imitation Learning

- Policy Gradient

- Monte Carlo Tree Search

- General Offline RL Algorithms

Stanford Reinforcement Learning:

- course video: https://www.youtube.com/watch?v=FgzM3zpZ55o&list=PLoROMvodv4rOSOPzutgyCTapiGlY2Nd8u&index=1

- assignments: https://github.com/Huixxi/CS234-Reinforcement-Learning-Winter-2019

- slides: https://web.stanford.edu/class/cs234/CS234Win2019/schedule.html

Introductions

Reinforcement Learning involves

- Optimization: find an optimal way to make decisions

- Delayed consequences: Decisions now can impact things much later

- When planning: decisions involve reasoning about not just immediate benefit of a decision but also its longer term ramifications

- When learning: temporal credit assignment is hard (what caused later high or low rewards?)

- Exploration: Learning about the world by making decisions and trying

- censored data: Only get a reward (label) for decision made. i.e. only have one reality

- in a sense that we need to collect our own training data

- Generalization

- learn a policy which can do interpolation/extrapolation, i.e. handle cases when not met in training data

Examples:

| Example/Comment | Strategies Involved | |

|---|---|---|

| Go as RL |  |

Optimization Delayed Consequences Generalization |

| Supervised ML ad RL | Optimization Generalization |

|

| Imitation Learning as RL | Learns from experience…of others; Assumes input demos of good policies Reduces RL to supervised learning |

Optimization Delayed Consequences Generalization |

Intro to Sequential Decision Making

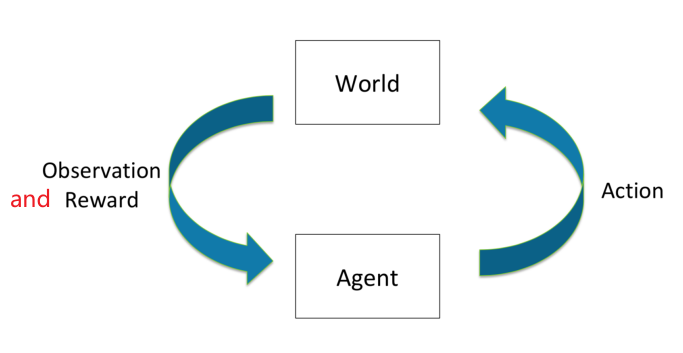

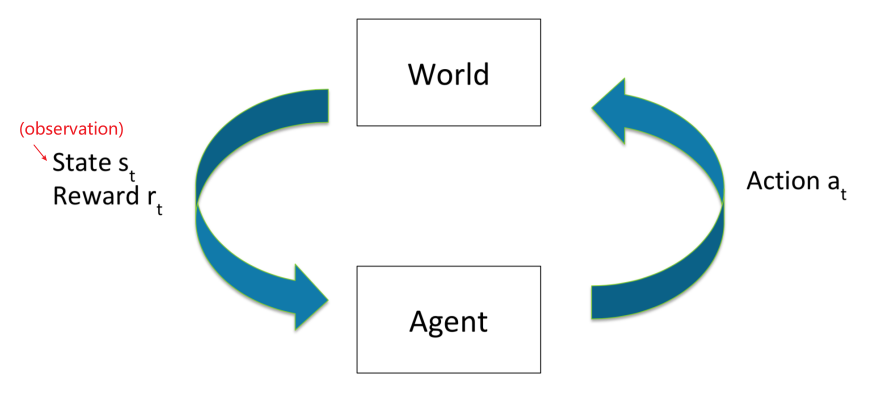

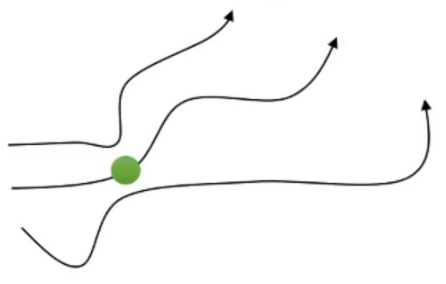

The problem basically looks like

-

Goal: Select actions to maximize total expected future reward

-

May require balancing immediate & long term rewards

Note that it is critical to pick a good reward function. Consider the following RL system:

- Agent: AI Teacher

- Action: pick a addition or subtraction problem for student to do

- World: some student doing the problem

- Observation: whether if student did it correctly or not

- Reward: +1 if correct, -1 if not

What would happen in this case? The agent could learn to give easy problems.

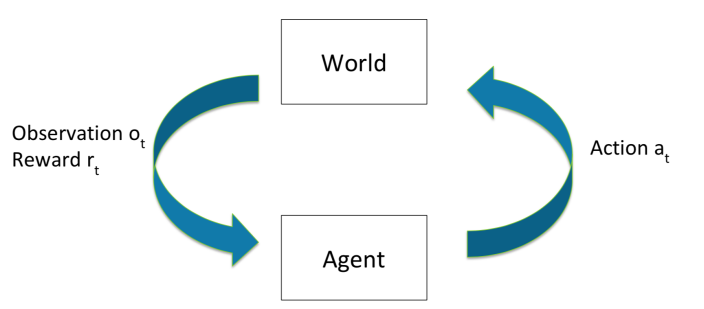

A more formal formulation of the task looks like

where we have:

- at each time $t$

- agent takes some action $a_t$

- world updates given action $a_t$, emits observation $o_t$ and reward $r_t$

- agent receives observation $o_t$ and reward $r_t$

- therefore, we essentially have a history $h_t=(a_1,o_1,r_1,…,a_t,o_t,r_t)$

- State is information assumed to determine what happens next

- usually the agent uses (some function of) the history to determine what happens next, so $s_t = f(h_t)$

- This is true state of the world is hidden from agent

Markov Assumption and MDP

Why is Markov assumption so popular? It turns out that such an assumption can always be satisfied if we set state as history:

\[p(s_{t+1}|s_t, a_t) = p(s_{t+1}|h_t,a_t)\]where RHS is like the true model, and LHS is what we are modelling.

However, in practice we often assume the most recent observation being sufficient to model the state

\[p(s_{t+1}|s_t, a_t) = p(s_{t+1}|o_t,a_t)\]So that:

-

If we consider most recent observation as your state, so $s_t = o_t$, then the agent is modelling the world as MDP

-

If agent state is not the same as the world state (e.g. Use history $s_t = h_t$ , or beliefs of world state, or RNN), then it is a Partially Observable MDP, or POMDP

- e.g. playing a poker game, where agent only sees its own card

Or other state representation (e.g. past 4 states). This choice affects how big our state space is, which has big implications for:

- Computational complexity

- Data required

- Resulting performance

An example of this would be:

- a state include all history, e.g. our entire life trajectory = have only a single data sample

- a state include only most recent life experience = a lot of data samples

Types of Sequential Decision Process

In reality, we may face different types of problems, each with different properties:

- Bandits: actions have no influence on next observations

- e.g. whether if I clicked on the advertisement does not affect who the next customer (coming to the website) is

- MDP and POMDP: Actions influence future observations

- Deterministic World: given the same state and action, we will have the same/only one possible observation and reward

- Common assumption in robotics and controls

- Stochastic: given the same state and action, we can have multiple possible observation and reward

- Common assumption for customers, patients, hard to model domains

- can think of the case that we don’t have good enough model for deterministic coin flipping, hence we model it as stochastic

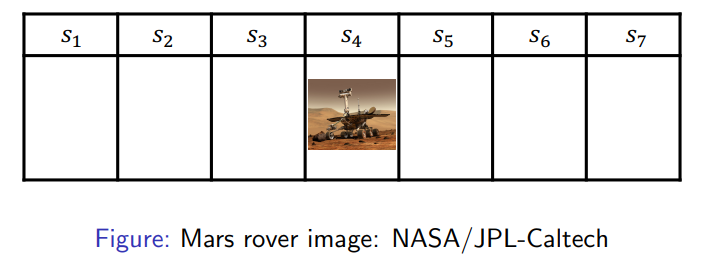

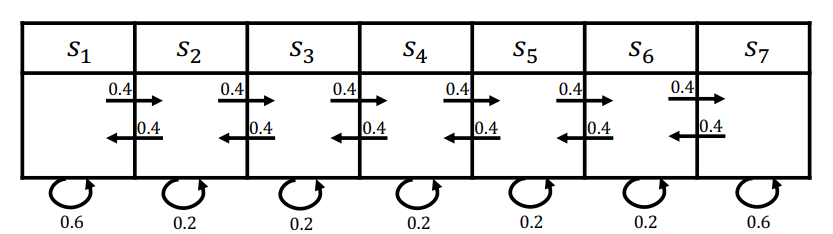

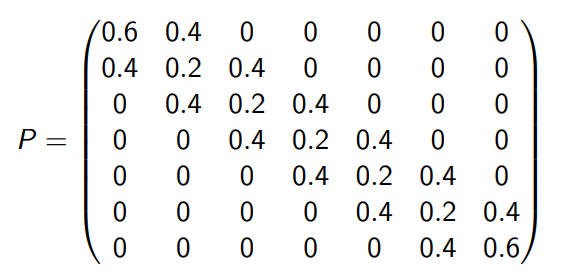

Example of MDP

Consider the case of having a Mars Rover exploring, which has seven possible states/locations to explore. We start at state $s_4$

so we have

- States: Location of rover $(s_1, …,s_7)$

- Actions: Left or Right

- Rewards: $+1$ in state $s_1$, $10$ in state $s_7$, $0$ in all other states

We want to find a program that tells the rover what to do next. In general RL problems can be modeled in three ways

-

Model: Representation of how the world changes in response to agent’s action

-

in this case we need to model transition

\[p(s_{t+1}=s' | s_t = s,a_t=a)\]for any action state combination

-

and the reward

\[r(s_t=s,a_t=a) \equiv \mathbb{E}[r_t|s_t =s,a_t=a]\]for any action state combination

-

-

Policy: function mapping agent’s states to action (i.e. what to do next given current state)

-

hence we model, if deterministic

\[\pi(s)=a\]spits out an action given a state

-

if stochastic

\[\pi(a|s) = P(a_t=a|s_t=s)\]being a probability distribution given a state.

-

-

Value function: Future rewards from being in a state and/or action when following a particular policy

-

we consider the expected discounted sum of future rewards under/given a particular policy $\pi$

\[V_\pi(s_t=s) = \mathbb{E}_\pi [r_t + \gamma r_{t+1} + \gamma^2 r_{t+2}+...|s_t=s]\]for $\gamma \in [0,1)$ specifying how much we care about the future reward compared to current reward.

-

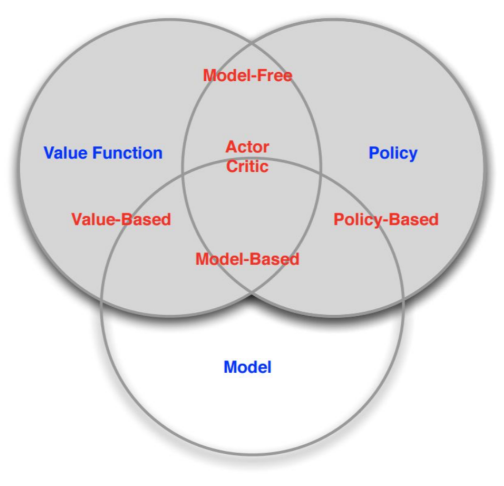

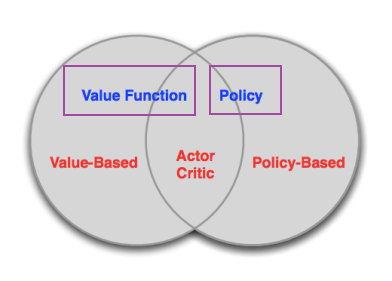

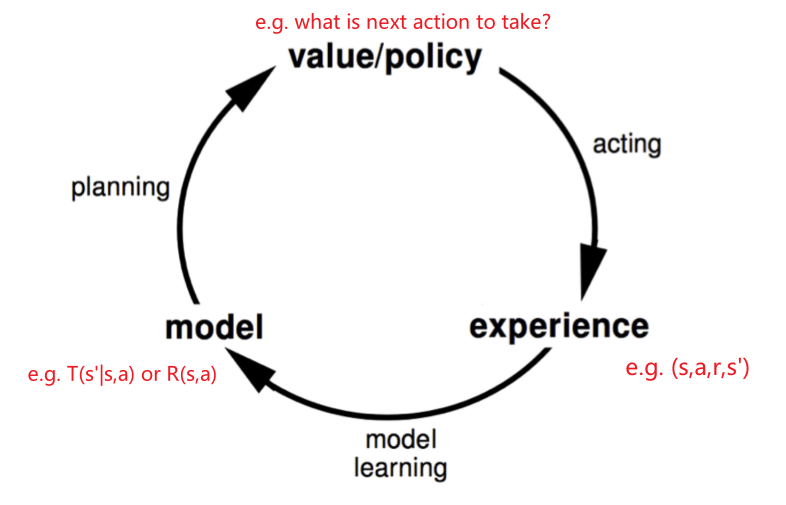

Types of RL Agents

Therefore, from the above discussion, we essentially have two types of RL algorithms:

- Model-based

- explicitly having a model of the world (e.g. models the transition function, reward)

- may or may not have an explicit policy and/or value function (e.g. if needed compute from the model)

- no longer needs interaction/additonal experience

- Model-free

- explicitly have a value function and/or policy function

- no model of the world

A more complete diagram would be

Key Challenges in Making Decisions

- Planning (Agent’s internal computation)

- Given model of how the world works

- i.e. you already know the transition and rewards

- need an algorithm to compute how to act in order to maximize expected reward

- With no interaction with real environment

- Given model of how the world works

- Reinforcement learning (we don’t even have the model)

- Agent doesn’t know how world works

- Interacts with world to implicitly/explicitly learn how world works

- Agent improves policy (may involve planning)

so we see that RL deals with more problems: we also need to decide what action to do for a) getting the necessary information of the world, and b) achieve high future rewards

For instance

- Planning: Chess game

- we already know all the possible moves, and rewards (who wins/losses)

- we need an algorithm to tell us what to do next based on this model

- doing a tree search, etc.

- Reinforcement Learning: Chess game with no rule book, i.e. don’t know the rule of chess

- first we need to directly learn by taking actions and see what happens

- Try to find a good policy over time

Important Components in RL

Agent only experiences what happens for the actions it tries. How should an RL agent balance its actions?

- Exploration: trying new things that might enable the agent to make better decisions in the future

- Exploitation: choosing actions that are expected to yield good reward given past experience

Often there may be an exploration-exploitation tradeoff, as you might not the correct model of the world (since you only have finite experience). May have to sacrifice reward in order to explore & learn about potentially better policy

Another two very important component you often see in RL is:

-

Evaluation: given some policy, we want to evaluate how good the policy is

-

Control: find the good policy

- e.g. do policy evaluation, and improve (iff the policy is stochastic)

- so does more than evaluation

MDPs and MRPs

Here we discuss how do we decide to take actions when given a world model. So here we will first discuss the problem of planning, instead of reinforcement learning (discussed later).

Specifically, we will cover

- Markov Decision Processes (MDP)

- Markov Reward Processes (MRP)

- Evaluation and Control in MDPs

Therefore, we basically consider

so mathematically we consider

\[p(s_{t+1}|s_t, a_t) = p(s_{t+1}|o_t, a_t)\]Markov Process/Chain

Before we discuss MDP, we first consider what is MP.

Markov Process

A Markov process has:

a set of finite states $s \in S$

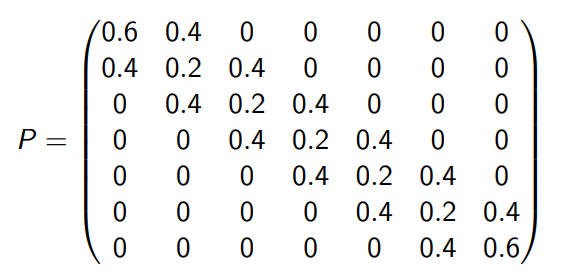

a dynamics/transition model $P$ that specifies $p(s_{t+1}=s’ \vert s_t=s)$. For a finite number of states, this can be modelled as

\[P = \begin{bmatrix} P(s_1|s_1) & P(s_2|s_1) & \dots & P(s_N|s_1)\\ P(s_1|s_2) & P(s_2|s_2) & \dots & P(s_N|s_2)\\ \vdots & \vdots & \ddots & \vdots\\ P(s_1|s_N) & P(s_2|s_N) & \dots & P(s_N|s_N)\\ \end{bmatrix}\]notice that we have no action nor rewards: so we basically just observe this process being a memoryless random process

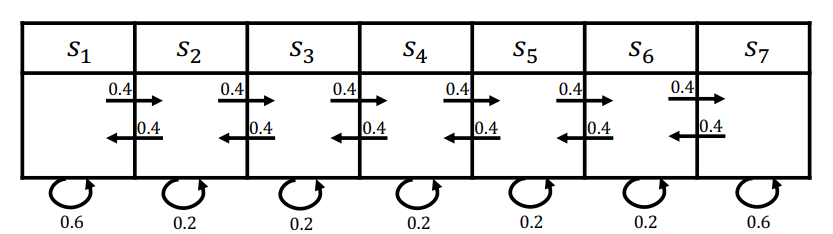

For instance, we can consider Mar’s Rover having the following transition dynamics

| Markov World | Transition Model |

|---|---|

|

|

where we see that $p(s_1\vert s_1)=0.6$, and $p(s_2\vert s_1)=0.4$, etc.

Using the transition model we can also mathematically compute the probability distribution of the next state given current state. For instance, if we are currently at $s_t=s_1$, then $p(s_{t+1}\vert s_t)$ is modelled by

\[p(s_{t+1}|s_t=s_1) = [1, 0, 0,0,0,0,0]P = \begin{bmatrix} 0.6\\ 0.4\\ 0\\ \vdots\\ 0 \end{bmatrix}\]Then, a sequence of states you would observe from the world would be sampled from the above distribution, so in the end you only see some deterministic observations/episodes such as

- $s_4 \to s_5 \to s_6 \to s_7 \to s_7, …$

- $s_4 \to s_5 \to s_4 \to s_5 \to s_7, …$

- $s_4 \to s_3 \to s_2 \to s_1 \to s_2, …$

- etc.

Markov Reward Process

Markov Reward Process is essentially Markov Chain + Reward

Markov Reward Process:

Markov reward process involves

- a set of finite states $s \in S$

- a dynamics/transition model $P$ that specifies $p(s_{t+1}=s’ \vert s_t=s)$.

- for a finite number of states, this can be expressed as a matrix.

- a reward function $R(s_t=s) \equiv \mathbb{E}[r_t \vert s_t=s]$, meaning the reward at a state is the expected reward of that state

- for a finite number of states, this can be expressed as a vector.

- allow for a discount factor $\gamma \in [0,1]$

- mainly for mathematical convenient, which can avoid infinite returns and values/converges

- if episodes are finite, then $\gamma = 1$ works

note that we still have no actions.

Once there is a reward, we can consider ideas such as returns and value functions

Horizon: Number of time steps in each episode

- Can be infinite

- if not, it is called finite Markov reward process

(MRP) Return: discounted sum of rewards from time step $t$ to Horizon

\[G_t = r_t + \gamma r_{t+1} + \gamma^2 r_{t+2} + ...\]note that

- this is like a reward for a particular episode starting from time $t$

- why geometric series? This is usually used for its nice mathematical properties.

(MRP) State Value Function $V(s)$: expected return from starting in state $s$

\[V(s) = \mathbb{E}[G_t|s_t=s] = \mathbb{E}[ r_t + \gamma r_{t+1} + \gamma^2 r_{t+2} + ... | s_t=s]\]

note that

- if the process is deterministic, then $\mathbb{E}[G_t\vert s_t=s]=G_t$

- if the process is stochastic, then it is usually different.

For instance, consider the previous example of

| Markov World | Transition Model |

|---|---|

|

|

but now we have:

- $R(s=s_1)=+1$, and $R(s=s_7)=+10$. Zero otherwise

Then consider sampling 2 episodes with 4-step length, using $\gamma=1/2$ we can compute the sample return

- $s_4,s_5,s_6,s_7$ having a return of $0+\frac{1}{2}\times 0+\frac{1}{4}\times 0+\frac{1}{8}\times 10=1.25$

- $s_4, s_4, s_5, s_4$ having a return of $0+\frac{1}{2}\times 0+\frac{1}{4}\times 0+\frac{1}{8}\times 0=0$

Finally, we can consider the state value function by averaging over all the possible trajectories for each state, and we would get

\[V= [1.53, 0.37, 0.13, 0.22, 0.85, 3.59, 15.31]\]in this particular example.

How do we compute the state function in reality?

-

Could estimate by simulation (i.e. generate a large number of episodes and take average)

- notice that this method assumes no Markov process, as we are just sampling and averaging

- we have theoretical bounds as well on how many episodes we need

-

Or we can utilize the Markov structure and know that MRP value function satisfies

\[V(s) = \underbrace{R(s)}_{\text{imemdiate reward}}+ \quad \underbrace{\gamma \sum_{s'\in S} P(s'|s)V(s')}_{\text{discounted sum of future rewards}}\]

Of course for computation we will use the latter case, which brings us to

Bellman’s Equation for MRP: for fintie state MRP, we can express $V(s)$ for each state using a matrix equation

\[\begin{bmatrix} V(s_1)\\ \vdots\\ V(s_N) \end{bmatrix} = \begin{bmatrix} R(s_1)\\ \vdots\\ R(s_N) \end{bmatrix} + \gamma \begin{bmatrix} P(s_1|s_1) & P(s_2|s_1) & \dots & P(s_N|s_1)\\ P(s_1|s_2) & P(s_2|s_2) & \dots & P(s_N|s_2)\\ \vdots & \vdots & \ddots & \vdots\\ P(s_1|s_N) & P(s_2|s_N) & \dots & P(s_N|s_N)\\ \end{bmatrix}\begin{bmatrix} V(s_1)\\ \vdots\\ V(s_N) \end{bmatrix}\]or more compactly

\[V = R + \gamma PV\]

Note that both $R, P$ is known. All we need is to solve for $V$. This can be solved in two ways:

- directly with liner algebra

- iterative using DP

First, solving it with linear algebra

\[\begin{align*} V &= R + \gamma PV\\ V - \gamma PV &= R\\ V &= (I-\gamma P)^{-1}R \end{align*}\]which requires solving matrix inverses, hence is $\sim O(N^3)$.

Another way to compute $V$ is by dynamic programming:

-

initialize $V_0(s)=0$ for all state $s$

-

For $k=1$ until convergence

- for all $s \in S$

As this is an iterative algorithm, the cost is $O(kN^2)$ for having $k$ iterations (so each iteration updates is only $O(N^2)$)

Markov Decision Process

Finally we add in action as well, so essentially MDPs are Markov Reward Process + actions

Markov Decision Process: MDP involves

- a set of finite states $s \in S$

- a finite set of actions $a \in A$

- a dynamics/transition model $P$ for each action that specifies $p(s_{t+1}=s’ \vert s_t=s, a_t=a)$.

- a reward function $R(s_t=s,a_t=a) \equiv \mathbb{E}[r_t \vert s_t=s,a_t=a]$

- allow for a discount factor $\gamma \in [0,1]$

note that know we mostly deal with the “joint probability” of $s_t,a_t$ together.

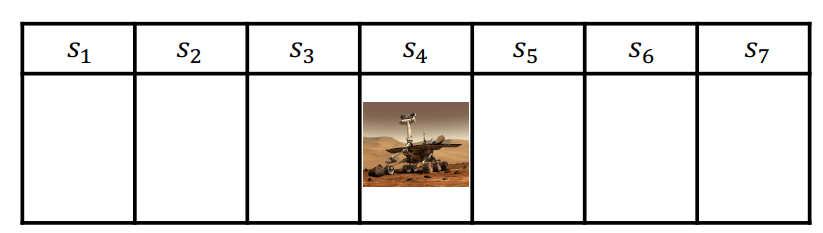

For instance, for the Mars Rover MDP case

| World Model | Transition Model |

|---|---|

|

|

where in this case we are having deterministic actions, which nonetheless can be modelled as a stochastic transition with probability of one.

Once we have actions in our model, we also have the following

Policy: specifies what action to take in each state

\[\pi (a|s) = P(a_t=a|s_t=s)\]Basically a conditional distribution of actions on current state

Note that once you specified a policy, you can convert MDP + policy to a Markov Reward Process, so that

\[R^\pi (s) = \sum_{a \in A} \pi(a|s)R(s,a)\]is again independent of action in MRP, and similarly

\[P^\pi(s'|s) = \sum_{a\in A}\pi(a|s) P(s'|s,a)\]which implies we can use same techniques to evaluate the value of a policy for a MDP as we could to compute the value of a MRP

MDP Policy Evaluation

For a deterministic policy $\pi(s)$, we can evaluate the value function $V^\pi(s)$ by, for example, the iterative algorithm mentioned before

-

initialize $V_0(s)=0$ for all state $s$

-

For $k=1$ until convergence

- for all $s \in S$

so essentially $V_k^\pi(s)$ is exact value of $k$-horizon value of state $s$ under policy $\pi$, i.e. the value function if we are allowed to act for $k$ steps. Therefore, as $k$ increases we converge to infinite horizon.

This is also called the Bellman backup for a particular (deterministic) policy.

- note that of course we could have also computed $V^\pi$ analytically, or with simulation.

For instance, consider the setup of Mars Rover

And in this case we have:

- Dynamics: $p(s_6 \vert s_6,a_1)=0.5, p(s_7 \vert s_6,a_1)=0.5, …$

- Reward: for all actions, +1 in state $s_1$, and +10 in state $s_7$. Zero otherwise

- Policy: $\pi(s)=a_1$ for all states.

- $\gamma$ set to $0.5$

Let we initialize with $V^\pi_k=[1,0,0,0,0,0,0,10]$, we want to compute $V^\pi_{k+1}(s_6)$. From the iterative formula

\[\begin{align*} V^\pi_{k+1}(s_6) &= r(s_6, a_1) + \gamma [0.5 * V_k(s_6) + 0.5 * V_k(s_7)]\\ &= 0 + 0.5*[0.5 * 0 + 0.5 * 10]\\ &= 2.5 \end{align*}\]Notice that we have propagated the reward information from $s_7$ to $s_6$ in this value function! If you do this for all states eventually such an information will be spread in all states.

MDP Control

Ultimately we want our agent to find an optimal policy

\[\pi^*(s) = \arg\max_\pi V^\pi(s)\]i.e. policy such that its value function is the maximum. Meaning that

A policy $\pi$ is defined to be better than or equal to a policy $\pi’$ if

\[\pi \ge \pi' \iff V_\pi(s) \ge V_{\pi'}(s),\quad \forall s\]which means its expected return is greater than or equal to that of $\pi’$ for all states. And there is always at least one policy that is better than or equal to all other policies as a policy is essentially a mapping.

Then, it turns out that

Theorem: MDP with infinite horizon:

- there exists a unique optimal value function

- the optimal policy for a MDP in an infinite horizon problem is

- deterministic

- stationary: does not depend on time step. (intuition would be that for infinite horizon, you have essentially infinite time/visits for each state, hence the optimal policy does not depend on time)

- not necessarily unique

Therefore, it suffices for us to focus on (improving) deterministic policies as our final optimal policy is also deterministic

For example: Consider the Mars Rover case again

which have 7 states and 2 actions, $a_1, a_2$. How many deterministic policies are there?

- since a policy is essentially a mapping from states to actions, there are $2^7$ possible policies.

So how do we search for the best policy?

- enumeration: compute for all $\vert A\vert ^{\vert S\vert }$ possible deterministic policy and pick best one

- policy iteration: which is more efficient by doing policy evaluation + improvement

MDP Policy Iteration

The goal is to improve a policy iteratively so we end up with an optimal policy:

- set $i=0$

- initialize $\pi_0(s)$ randomly for all $s$

- while $i==0$ or $\vert \vert \pi_i - \pi_{i-1}\vert \vert _1 > 0$ being the L1-norm

- $V^{\pi_i}$ being the MDP policy evaluation of $\pi_i$

- $\pi_{i+1}$ being the policy improvement for $\pi_i$ (discuss next)

- $i = i+1$

How do we improve a policy? First need to consider some new definition

State-Action Value Function: essentially a value function exploring what happens if you take some action $a$ (e.g. different than some given policy) in each state

\[Q^\pi(s,a) = R(s,a)+\gamma \sum_{s' \in S}P(s'|s,a)V^\pi(s')\]so essentially at each state $s$, we consider:

- take action $a$

- then follow policy $\pi$

Therefore, using this we can essentially improve a policy $\pi_i$ by:

-

compute the state-action value of a policy $\pi_i$

- for each state $s\in S$ and $a \in A$

-

compute the new policy $\pi_{i+1}$ by

\[\pi_{i+1}(s) = \arg\max_a Q^{\pi_i}(s,a),\quad \forall s\in S\]to prove that this is a better policy, we need to show that

\[V^{\pi_{i+1}}(s) \ge V^{\pi_i}(s)\]which we will prove below. (inequality becomes strict if $V^{\pi_i}$ is suboptimal)

Using this approach, we are guaranteed to arrive at the global optimum of best policy.

Proof: Monotonic Improvement in Policy.

We first know that:

\[\max_a Q^{\pi_i}(s,a) \ge V^{\pi_i}(s)=r(s,\pi_i(s)) + \gamma \sum_{s' \in S} p(s'|s, \pi_i(s))V^{\pi_i}(s')\]by definition of choosing a $\max_a$. Then, notice that

\[\begin{align*} V^{\pi_i}(s) &\le \max_a Q^{\pi_i}(s,a)\\ &= \max_a \{ R(s,a) + \gamma \sum_{s' \in S} P(s'|s,a)V^{\pi_i}(s') \}\\ &= R(s,\pi_{i+1}(s)) + \gamma \sum_{s' \in S} P(s'|s,\pi_{i+1}(s))V^{\pi_i}(s')\\ &\le R(s,\pi_{i+1}(s)) + \gamma \sum_{s' \in S} P(s'|s,\pi_{i+1}(s))\left( \max_{a'} Q^{\pi_i}(s',a') \right)\\\\ &= R(s,\pi_{i+1}(s)) + \gamma \sum_{s' \in S} P(s'|s,\pi_{i+1}(s))\left[ R(s',\pi_{i+1}(s')) + \gamma \sum_{s'' \in S} P(s''|s',\pi_{i+1}(s'))V^{\pi_i}(s'') \right]\\ &\le \dots\\ &= V^{\pi_{i+1}}(s) \end{align*}\]where:

-

notice that by definition of $Q^{\pi_{i}}$, we are using $\pi_i$ for future steps on the second and third equality

-

the third equality comes from the fact that we know $\pi_{i+1}(s) = \arg\max_a Q^{\pi_i}(s,a)$ which spits out the action for maximizing $Q$

- the fifth equality is basically doing the same as doing everything from the first to the third equality

- hence essentially we are expanding and pushing $\pi_{i+1}$ to a future step, until we are using $\pi_{i+1}$ for all steps

Note that: this is an monotonic improvement

- this mean that if the policy didn’t change on one iteration, can it change in future iteration? No, because if taking the max cannot improve it, then it have reached global optimum.

- Is there are maximum number of policy iteration? Yes, because there is only $\vert A\vert ^{\vert S\vert }$ number of policies, and since improvement step is monotonic, each policy can only appear once (unless we reached optimal)

MDP Value Iteration

Another approach to find an optimal policy is by value iteration.

- policy iteration: for each policy $\pi_i$, we get the value $V^{\pi_i}$ for the infinite horizon and improve it, until obtained best $V^{\pi^*}$

- value iteration: maintain the best $V(s)$ up to $k$ number of steps left in the episode, until $k \to \infty$ or converges

so we are computing a different thing here, but the final answer will be the same.

Recall that value of a policy has to satisfy the Bellman equation

\[\begin{align*} V^\pi(s) &= R^\pi(s) + \gamma \sum_{s' \in S} P^\pi(s'|s)V^\pi(s')\\ &=R(s,\pi(s)) + \gamma \sum_{s' \in S} P(s'|s,\pi(s))V^\pi(s') \end{align*}\]was the definition

Then we can consider a Bellman backup operator which operates on a value function:

\[\mathbb{B}V(s) \equiv \max_a \{ R(s,a) + \gamma \sum_{s'\in S}p(s'|s,a)V(s') \}\]so basically:

- $\mathbb{B}$ is like an operator, which will spit out a new value function

- it will improve the value if possible, as this is basically what policy iteration did

Then, with this, we define the algorithm for value iteration:

-

set $k=1$

-

Initialize $V_0(s)=0$ for all state $s$

-

loop until [finite horizon, convergence]

-

for each state $s$

\[V_{k+1}(s) = \max_a \{ R(s,a) + \gamma \sum_{s'\in S}p(s'|s,a)V_k(s') \}\]which can be views as just a Bellman backup operation on $V_k$

\[V_{k+1} = \mathbb{B}V_k\]

-

-

then policy for acting $k+1$ steps (best policy) can be easily derived by the action that leads to the best state given current state

\[\pi_{k+1}(s) = \arg\max_a \{ R(s,a) + \gamma \sum_{s'\in S}p(s'|s,a)V_k(s') \}\]so basically considering best action if only act for $k=1$ step, the use this to compute $k=2$ steps, and etc.

How do we know that this converges? This is because Bellman’s backup operator is a contraction operator (if $\gamma \le 1$)

Difference between Policy and Value iteration

- Value iteration

- Compute optimal value as if horizon $= k$ steps

- Note this can be used to compute optimal policy if horizon $= k$, i.e. finite horizon

- Increment $k$

- Policy iteration

- Compute infinite horizon value of a policy

- Use to select another (better) policy

- Closely related to a very popular method in RL: policy gradient

Policy Iteration as Bellman Operations

Essentially policy iteration also derives from the Bellman’s constraint, so we can express the policy iteration as Bellman operations as well.

First, we consider Bellman backup operator $\mathbb{B}^\pi$ for a particular policy which operates on some value function (which could have a different policy):

\[\mathbb{B}^\pi V(s) = R^\pi(s) + \gamma \sum_{s'\in S} P^\pi(s'|s)V(s')\]Therefore, this means that:

-

policy evaluation of $\pi_i$ is basically doing:

\[V^{\pi_i} = B^{\pi_i}B^{\pi_i}...B^{\pi_i}V\]for some randomly initialized $V$

-

policy improvement

\[\pi_{k+1}(s) = \arg\max_a \{ R(s,a) + \gamma \sum_{s'\in S}p(s'|s,a)V^{\pi_k}(s') \}\]

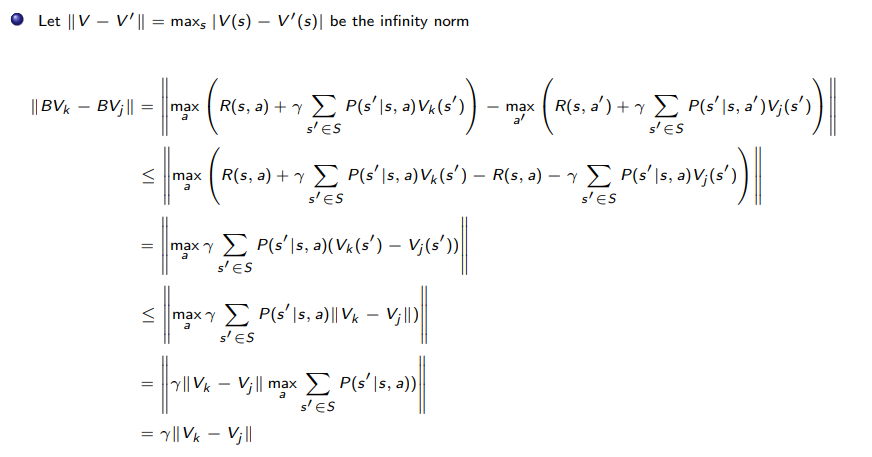

Contraction Operator

Contraction Operator:

Let $O$ be an operator, and $\vert x\vert$ denote any norm of $x$. If

\[|OV-OV'| \le |V-V'|\]then $O$ is an contraction operator.

so basically

-

distance between two value functions after applying Bellman’s operator must be less than or equal to distance they had before.

-

Given this property, it is straightforward to argue that distance between some value function $V$ to the optimal value function $V^*$ will decrease monotonically, hence convergence.

Proof

Policy Evaluation with Unknown World

Before in the MDPs and MRPs section we discussed the problem of planning: if we know how the world works, i.e. have some transition/reward function known, how do we find out the best policy.

However, what if we do not have a world model to begin with? This is what we will discuss in this section, including:

- Monte Carlo policy evaluation

- Temporal Difference (TD)

Monte Carlo Policy Evaluation

First, recall that with a world model, our algorithm for policy evaluation is:

-

initialize $V_0(s)=0$ for all state $s$

-

For $k=1$ until convergence

- for all $s \in S$

so essentially $V_k^\pi(s)$ is exact value of $k$-horizon value of state $s$ under policy $\pi$, i.e. the value function if we are allowed to act for $k$ steps. Therefore, as $k$ increases we converge to infinite horizon.

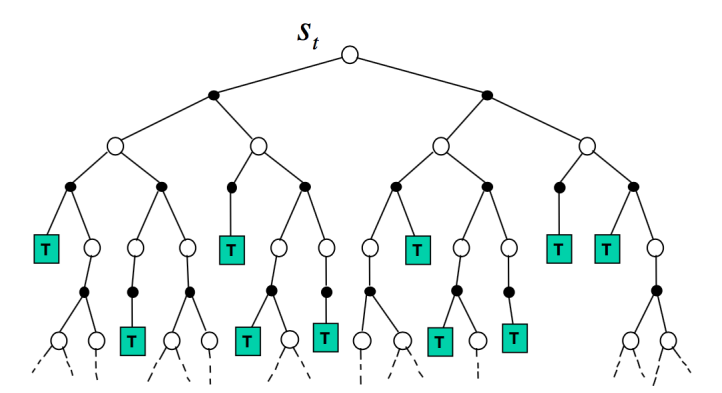

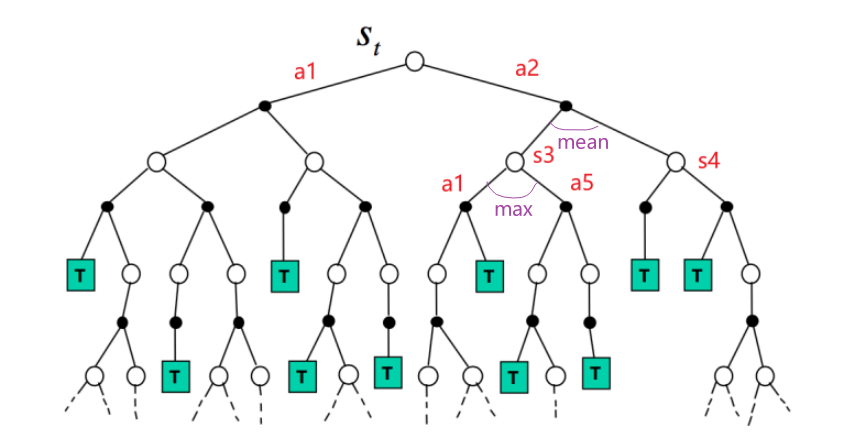

In other words, we are iteratively estimating $V^\pi(s)$ by $V_k^\pi(s)$ as:

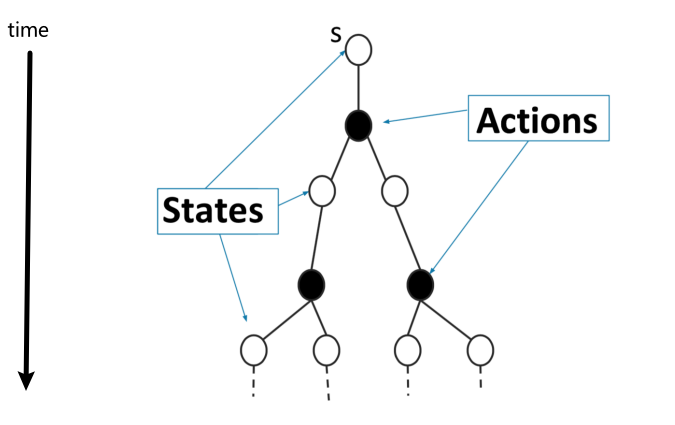

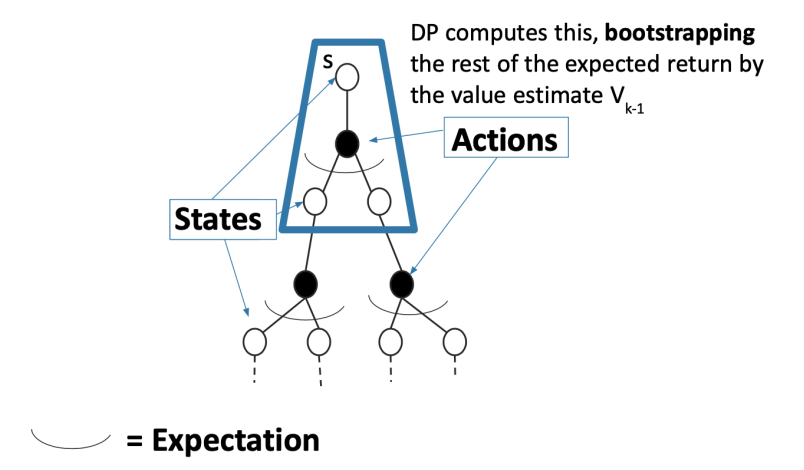

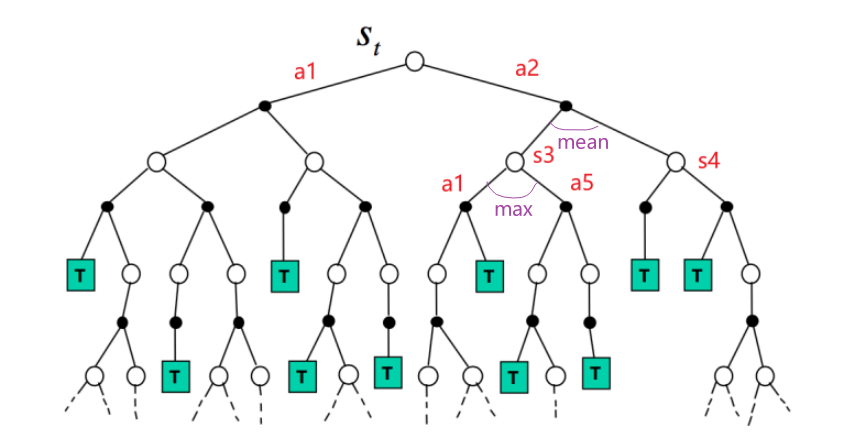

\[V^\pi(s) = \mathbb{E}_\pi[G_t|s_t=s] \approx V_k^\pi(s) = \mathbb{E}_\pi[r_t + \gamma V_{k-1}|s_t=s]\]we can think update tule graphhically as:

\[V^\pi(s) \leftarrow \mathbb{E}_\pi[r_t + \gamma V_{k-1}|s_t=s]\]| Tree of Possible Trajectories Following a Stochastic $\pi(a\vert s)$ | Dynamic Programming Algorithm |

|---|---|

|

|

where notice that:

-

essentially we are taking $V_{k-1}^\pi(s)$ for each state as the “true value functions”, and then computing the highlighted expectation by

\[V^\pi(s) \leftarrow r(s,\pi(s)) + \gamma \sum_{s' \in S} p(s'|s, \pi(s))V_{k-1}(s')\]to update the value functions. Notice that for this to make sense we need to assume infinite horizon, since we are treating $V^\pi(s)$ being stationary for each state, i.e. not a function of time step.

-

therefore we are bootstrapping as we are taking the value function from previous iteration.

-

notice that to compute this update we needed $P(s’\vert s,a)$ to average over all possible futures (and $R(s,a)$ as well)

Now, what happens if we do not know the world/model?

In the framework of MC Policy Evaluation, the idea is to notice that

\[V^\pi(s) = \mathbb{E}_{T \sim \pi}[G_t | s_t=s]\]basically averaging the $G_t$ of each possible trajectory $T$ following $\pi$, i.e. average over the branches of the tree

therefore, if trajectories are all finite, sample set of trajectories & average returns would give us some approximation of the value function. Hence, properties related to this approach include:

- Does not require a known MDP dynamics/rewards, as we just need sample trajectories as its reward

- Does not assume state is Markov (e.g. no notion of next state, etc.)

- Can only be applied to episodic MDPs

- Requires each episode to terminate

Aim: estimate $V^\pi(s)$ given sampled episodes $(s_1,a_1,r_1,s_2,a_2,r_2,…)$ generated under policy $\pi$

Using this idea of MC policy evaluation, we have the following algorithms that can do this:

- first visit MC on Policy Evaluation

- every visit MC on Policy Evaluation

- incremental MC on Policy Evaluation

First Visit MC On Policy Evaluation

Our aim is to estimate $V^\pi(s)$ by sampling trajectories and taking their mean (as being the MC method). In this case we consider the following algorithm:

-

Initialize $N(s)=0$, $G(s)=0, \forall s \in S$

-

loop

-

sample an episode $i=(s_{i,1},a_{i,1},r_{i,1},s_{i,2},a_{i,2},r_{i,2},….,s_{i,T_i})$

-

define $G_{i,t}$ being the return in this $i$-th episode from time $t$ onwards

\[G_{i,t}=r_{i,t}+\gamma r_{i,t+1} + \gamma^2 r_{i,t+2} + ... + \gamma^{T_i-1} r_{i,T_i}\] -

for each state $s$ visited in the episode $i$

- for the first time $t$ that state $s$ is visited

- increment counter of total first visits for that state $N(s)=N(s)+1$

- increment total return $G(s)=G(s)+G_{i,t}$

- Update estimate $V^\pi(s)=G(s)/N(s)$

- for the first time $t$ that state $s$ is visited

-

How does this algorithm work? How do we know that this way of estimating $V^\pi(s)$ being good (i.e. bias, variance, and consistent)?

Theorem:

- $V^\pi$ estimator in this case is an unbiased estimator of the true $\mathbb{E}_\pi[G_t\vert s_t=s]$

- $V^\pi$ estimator in this case is also a consistent estimator so that as $N(s)\to \infty$, $V^\pi(s)\to \mathbb{E}_\pi[G_t\vert s_t=s]$

However, those this is a good news, it might not be efficient as we are throwing away many other visits to the same state, which brings us to Every Visit MC On Policy Evaluation

Bias, Variance and MSE Recap

Recall that a way to see if certain estimators work is to compare against the ground truth. Let $\hat{\theta}=f(x)$ be our estimator function (a function of the observed data $x$) for some true parameter $\theta$.

- so basically $x \sim P(x\vert \theta)$ being generated from the true parameter

- we are constructing an estimator $\hat{\theta}=f(x)$ to estimate such a parameter.

- e.g. for $x \sim N(\mu, \sigma)$, we can estimate $\mu$ by $\hat{\mu} = \text{mean}(x)$ being our estimator

Bias: The bias of an estimator $\hat{\theta}$ is defined by

\[\text{Bias}_\theta(\hat{\theta}) \equiv \mathbb{E}_{x|\theta}[\hat{\theta}] - \theta\]so that over many sampled datasets, how far is the average $\hat{\theta}$ from the true $\theta$

note that since we don’t know what $\theta$ is in reality, we usually just bound it.

Variance: the variance of an estimator $\hat{\theta}$ is defined by:

\[\text{Var}(\hat{\theta}) \equiv \mathbb{E}_{x|\theta}[(\hat{\theta} - \mathbb{E}[\hat{\theta}])]\]which is basically the definition of variance itself and has nothing to do with $\theta$

In general different algorithms will have different trade-off between bias and variance.

MSE: Mean squared error of an estimator $\hat{\theta}$ is

\[\text{MSE}_\theta(\hat{\theta}) \equiv \text{Var}(\hat{\theta})+\text{Bias}_\theta(\hat{\theta})^2\]

Every Visit MC On Policy Evaluation

Here, the change is small

-

Initialize $N(s)=0$, $G(s)=0, \forall s \in S$

-

loop

-

sample an episode $i=(s_{i,1},a_{i,1},r_{i,1},s_{i,2},a_{i,2},r_{i,2},….,s_{i,T_i})$

-

define $G_{i,t}$ being the return in this $i$-th episode from time $t$ onwards

\[G_{i,t}=r_{i,t}+\gamma r_{i,t+1} + \gamma^2 r_{i,t+2} + ... + \gamma^{T_i-1} r_{i,T_i}\] -

for each state $s$ visited in the episode $i$

- for the every time $t$ that state $s$ is visited

- increment counter of total first visits for that state $N(s)=N(s)+1$

- increment total return $G(s)=G(s)+G_{i,t}$

- Update estimate $V^\pi(s)=G(s)/N(s)$

- for the every time $t$ that state $s$ is visited

-

Although this is more data efficient as we performed more updates

Theorem:

- $V^\pi$ estimator in this case is an biased estimator of the true $\mathbb{E}_\pi[G_t\vert s_t=s]$

- the intuition here is that because each state in an episode is not IID, the $G_{i,t}$ is not IID either. Therefore, we will get a biased estimator in this case as we use all encounters of state $s$

- $V^\pi$ estimator in this case is a consistent estimator so that as $N(s)\to \infty$, $V^\pi(s)\to \mathbb{E}_\pi[G_t\vert s_t=s]$

- Empirically, $V^\pi$ has a lower variance

Incremental MC On Policy Evaluation

In both previous algorithms we had to update the mean incrementally by

-

first doing $N(s)=N(s)+1$

-

increment total return $G(s)=G(s)+G_{i,t}$

-

then update

\[V^\pi(s)=\frac{G(s)}{N(s)}\]

We can also perform the same update incrementally by:

-

first doing $N(s)=N(s)+1$

-

update

\[V^\pi(s)=V^\pi(s)\frac{N(s)-1}{N(s)} + \frac{G_{i,t}}{N(s)}=V^\pi(s)+\frac{G_{i,t}-V^\pi(s)}{N(s)}\]for basically adding a "correction term" of $(G_{i,t}-V^\pi(s))/N(s)$

this idea of a correction term will be used later in TD algorithms, but in practice we can tweak this term so that, if we consider the following algorithm:

-

Initialize $N(s)=0$, $G(s)=0, \forall s \in S$

-

loop

-

sample an episode $i=(s_{i,1},a_{i,1},r_{i,1},s_{i,2},a_{i,2},r_{i,2},….,s_{i,T_i})$

-

define $G_{i,t}$ being the return in this $i$-th episode from time $t$ onwards

\[G_{i,t}=r_{i,t}+\gamma r_{i,t+1} + \gamma^2 r_{i,t+2} + ... + \gamma^{T_i-1} r_{i,T_i}\] -

for each state $s$ visited in the episode $i$

-

for the every time $t$ that state $s$ is visited

-

increment counter of total first visits for that state $N(s)=N(s)+1$

-

update

\[V^\pi(s)=V^\pi(s)+\alpha\left( G_{i,t}-V^\pi(s) \right)\]

-

-

-

Then if we used:

- $\alpha = 1/N(s)$ then it is the same as every visit MC

- $\alpha > 1/N(s)$ then we are placing emphasis on more recent ones/updates $G_{i,t}-V^\pi(s)$.

- this could be helpful if domains are non-stationary. For example in robotics some parts can be breaking down over time, so we want to focus more on more recently learnt data

MC On Policy Example

Consider a simple case of a Mars Rover again:

- reward being $R=[1,0,0,0,0,0,0,10]$

- initialize $V^\pi(s)=0$ for all $s$

- Our policy is $\pi(s)=a_1,\forall s$.

- Any action from state $s_1$ or $s_7$ gives termination

- take $\gamma = 1$

Suppose then we got a trajectory from this policy:

- $T = (s_3,a_1,0,s_2,a_1,0,s_2,a_1,0,s_1,a_1,1,\text)$

Question: What is the first and every visit MC estimate of $V^\pi$?

- first visit of $V^\pi(s)=[1,1,1,0,0,0,0]$

- every visit is the same even though $s_2$ has two updates

Notice that for all MC methods, we only was able to perform updates until the termination of the episode, since we need to know $G_{i,t}$.

MC On Policy Evaluation Key Limitations

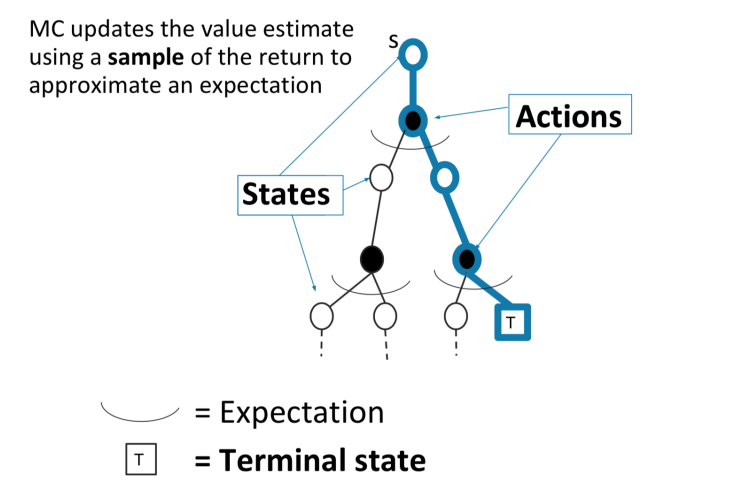

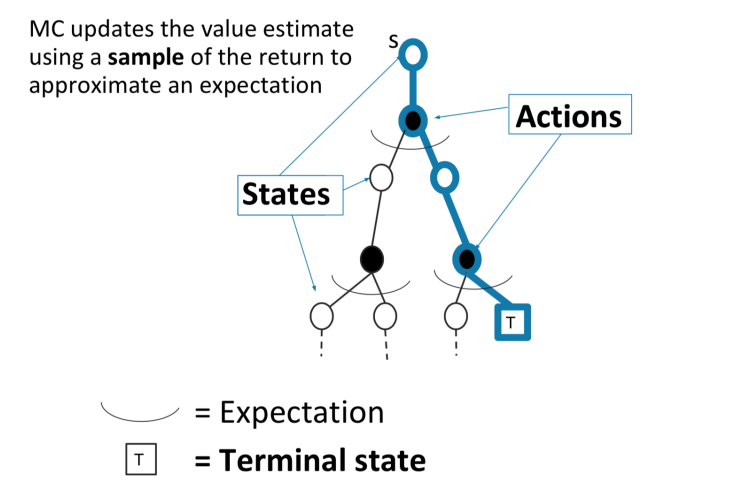

Graphically, what MC algorithms are doing is

| Tree of Possible Trajectories Following a Stochastic $\pi(a\vert s)$ | MC On Policy Algorithm |

|---|---|

|

|

So notice that we are averaging across all trajectories, meaning that:

- Generally high variance estimator. Reducing variance can require a lot of data

- Requires episodic settings (since we can only update once episode terminated)

Temporal Difference Learning

“If one had to identify one idea as central and novel to reinforcement learning, it would undoubtedly be temporal-difference (TD) learning.” – Sutton and Barto 2017

Some key attributes of this algorithm is:

- Combination of Monte Carlo & dynamic programming methods

- therefore, it Bootstraps (dynamic programming, reusing $V_{k-1}^\pi(s)$) and samples (MC)

- Model-free (does not need to know the transition/reward functions)

- Can be used in episodic or infinite-horizon non-episodic settings

- Can immediately updates estimate of $V$ after each $(s,a,r,s’)$ tuple, i.e. we do not need to wait until the end-of-episode like MC methods.

Aim: estimate $V^\pi(s)$ given sampled episodes $(s_1,a_1,r_1,s_2,a_2,r_2,…)$ generated under policy $\pi$

this is the same as MC on Policy Evaluation. But recall that if we are having a MDP model, the Bellman Operator can be used to perform an update of the $V(s)$:

\[\mathbb{B}^\pi V(s) = R^\pi(s) + \gamma \sum_{s'\in S} P^\pi(s'|s)V(s')\]which basically takes the current reward and averages over the value of next state. From this, we consider

Insight: given some current estimate of $V^\pi$, we can update using

\[V^\pi(s_t) =V^\pi(s_t)+\alpha( \underbrace{[r_t+\gamma V^\pi(s_{t+1})]}_{\text{TD target}}-V^\pi(s_t))\]for $r_t+\gamma V^\pi(s_{t+1})$ basically is our target (to approximate $r_t+\gamma \sum P^\pi(s’\vert s)V(s’)$) to improve $V^\pi(s)$, as compared to the MC On-policy method which used $G_{i,t}$.

Notice that:

this target is basically also takes the current reward and looks at the value of next state.

we are bootstrapping again, instead of waiting until end of episode, we use previous estimate $V^\pi(s)$ to compute our target $r_t+\gamma V^\pi(s_{t+1})$ and updates at each time step. Therefore we also don’t need episodic requirement.

TD Error: we can also view the above update rule as basically correcting $V^\pi(s)$ by the error term:

\[\delta_t \equiv [r_t+\gamma V^\pi(s_{t+1})]-V^\pi(s_t)\]

Under this framework of TD learning, we also have some variations:

- TD(0) Learning: only boostrap

- TD($\lambda$) Learning: MC update for $\lambda$ steps and then bootstrap

hence technically we have a continuum of algorithm between using bootstrap and using MC algorithm.

TD(0) Learning

The simplest TD algorithm is TD(0):

-

input $\alpha$

-

initialize $V^\pi(s)=0$, $\forall s \in S$

-

loop

-

sample tuple $(s_t,a_t,r_t,s_{t+1})$

-

update the state:

\[V^\pi(s_t) =V^\pi(s_t)+\alpha( \underbrace{[r_t+\gamma V^\pi(s_{t+1})]}_{\text{TD target}}-V^\pi(s_t))\]

-

which is a combination of MC sampling and bootstrapping:

- we needed sampling to get $s’$ for evaluating $V^\pi(s_{t+1})$

- we used the previous estimate of $V^\pi$ to calculate the values, which is boostrapping

TD(0) Learning Example

Consider a simple case of a Mars Rover again:

- reward being $R=[1,0,0,0,0,0,0,10]$

- initialize $V^\pi(s)=0$ for all $s$

- Our policy is $\pi(s)=a_1,\forall s$.

- Any action from state $s_1$ or $s_7$ gives termination

- take $\gamma = 1$

Suppose then we got a trajectory from this policy:

- $T = (s_3,a_1,0,s_2,a_1,0,s_2,a_1,0,s_1,a_1,1,\text)$

Question: What is the first and every visit MC estimate of $V^\pi$?

- first visit of $V^\pi(s)=[1,1,1,0,0,0,0]$

- every visit is the same even though $s_2$ has two updates

Question: What is the TD estimate of all states (init at 0, single pass) if we use $\alpha = 1$:

- $[1,0,0,0,0,0,0]$. Notice that here we have forgotten the previous history/did not propagate the information of reward at $s_1$ to other states in a single episode.

- to propagate this information to $s_2$, we need another episode which had the tuple $s_2 \to s_1$. To propagate to $s_3$, we need yet another episode that contains $s_3 \to s_2$, etc. So this propagation is slow

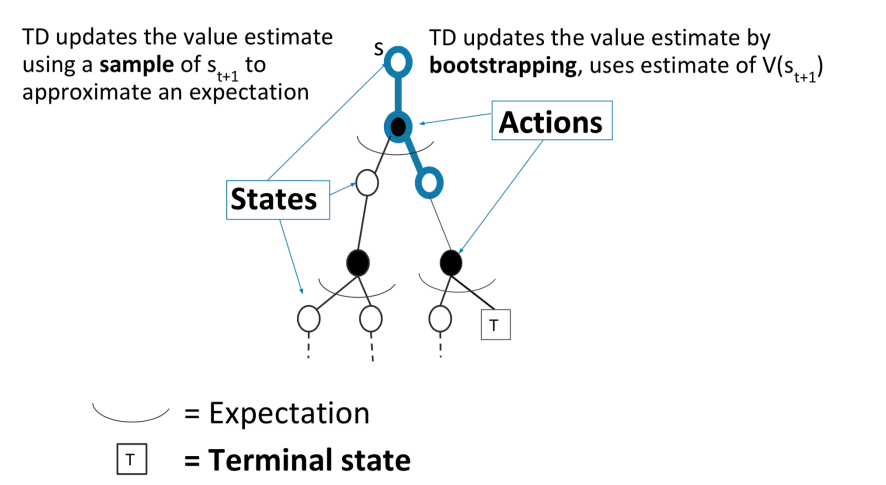

TD Learning Key Limitations

Graphically, temporal difference considers

| MC On Policy Algorithm | TD(0) On Policy Algorithm |

|---|---|

|

|

so we notice that TD(0):

- sits between MC and DP because we are still sampling stuff, but we are updating in a DP fashion

Although this algorithm allows us to update quickly:

- it is a biased estimator, since our update rules are using bootstrapping (i.e. $V^\pi(s)$ we used are estimates of the true $V^\pi(s)$, hence it will just be biased)

Comparison Between DP,MC and TD

| DP | MC | TD | |

|---|---|---|---|

| Usable when no models of current domain | Yes | Yes | |

| Handles continuing (non-episodic) domains | Yes | Yes | |

| Handles Non-Markovian domains | Yes | ||

| Converges to true value in limit (assume Markov) | Yes | Yes | Yes |

| Unbiased estimate of value | N/A | Yes |

note that

- for DP, it is the exact estimate.

- unbiased = for finite amount of data, on average it is $\theta$; consistent = for infinite amount of data it is $\theta$

Note that here we are still living in the world of tabular state space, i.e. our state space is discrete. Once we move on to the case of having continuous state space, we will cover function approximation methods and a lot of them does not guarantee convergence.

In addition:

- MC: updates until end of episode

- Unbiased

- High variance

- Consistent (converges to true) even with function approximation

- TD: updates per sample point $(s,a,r,s’)$ immediately

- Some bias

- Lower variance

- TD(0) converges to true value with tabular representation

- TD(0) does not always converge with function approximation

Batch MC and TD

The aim is to use the data more efficiently, hence we might consider:

Batch (Offline) solution for finite dataset:

- Given set of $K$ episodes

- Repeatedly sample an episode from $K$ (since we are offline, we can replay the episodes)

- Apply MC or TD(0) to the sampled episode

The question is, what will MC and TD(0) converge to?

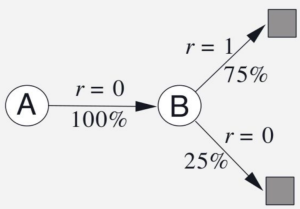

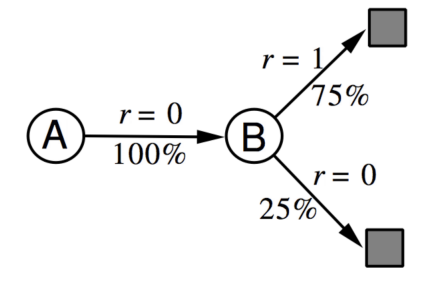

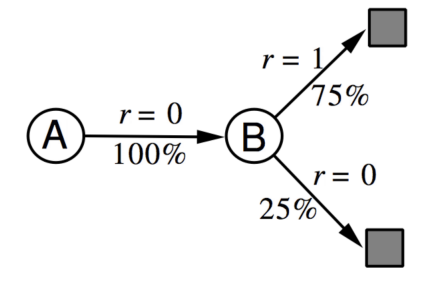

Consider the following example:

- Two states $A, B$ with $\gamma = 1$ and a small $\alpha < 1$

- Given 8 episodes of experience:

- $A, 0, B, 0$

- $B, 1$ (observed 6 times)

- $B, 0$

Graphically we have

Question: what is $V(A), V(B)$ under MC and TD(0) if we are sampling those data for infinite number of times until convergence?

- $V(B)=6/8$ for MC: because in general for 6 out of 8 episodes we get $B\to 1$ in the trajectory

- $V(B)=6/8$ for TD(0): because in general 6 out of 8 times we get $B \to \text{Terminate}$ we will have a reward of 1

- $V(A)=0$ for MC: in MC setting because updates are done per episode, and the only episode that contained state $A$ had a reward of zero

- $V(A)\neq 0$ for TD(0): in TD settings reward propagate back across episodes. Hence once $V(B)\neq 0$ and we sampled a tuple $A\to B$, we will obtain a non-zero value for $V(A)$

Properties of Batch MD and TD

- Monte Carlo in batch setting

- converges to min MSE (mean squared error) Minimize loss with respect to observed returns

- MC can be more data efficient than simple TD if markov assumption does not hold (i.e. we need to sample data in TD in order of the episode)

- TD(0) in batch setting

- converges to DP policy V π for the MDP with the maximum likelihood model estimates

- TD exploits Markov structure, if it holds then it is very data efficient

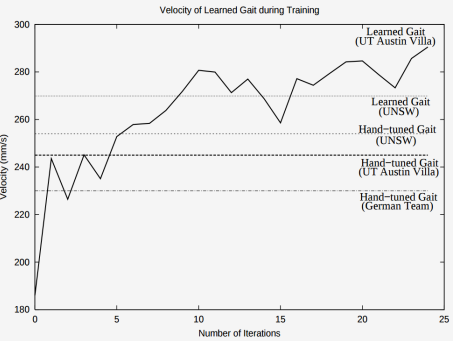

Model Free Control

How does an agent learn to act when it does not know how the world works, and does not aim to construct a model (model-free).

- Previous section: Policy evaluation with no knowledge of how the world works = how good is a specific policy?

- This section: Control (making decisions) without a model of how the world works = how can we learn a good policy?

Many applications can be modeled as a MDP:

- Backgammon, Go, Robot locomation, Helicopter flight, Robocup soccer, Autonomous driving, etc.

For many of these and other problems either:

- MDP model is unknown but can be sampled

- MDP model is known but it is computationally infeasible to use directly, except through sampling (e.g. climate simulation)

Optimization Goal: identify a policy with high expected rewards (without model of the world). Certain features we will encounter like all RL algorithms:

- Delayed consequences: May take many time steps to evaluate whether an earlier decision was good or not

- Exploration: Necessary to try different actions to learn what actions can lead to high rewards

In this section, we will discuss two types of algorithms:

- On-policy Learning

- as we had for policy evaluation

- Direct experience

- Learn to estimate and evaluate a policy from experience obtained from following that policy

- Off-policy Learning

- Learn to estimate and evaluate a policy using experience gathered from following a different policy

- e.g. given history $s_1,a_1,s_1,a_1$ and $s_1,a_2,s_1,a_2$, be able to extrapolate what happens if you do $s_1,a_1,s_1,a_2$

MC On-Policy Policy Iteration

Recall that when we know the model, we had the following algorithm for control:

-

set $i=0$

-

initialize $\pi_0(s)$ randomly for all $s$

-

while $i==0$ or $\vert \vert \pi_i - \pi_{i-1}\vert \vert _1 > 0$ being the L1-norm

-

$V^{\pi_i}$ being the MDP policy evaluation of $\pi_i$

-

$\pi_{i+1}$ being the policy improvement for $\pi_i$ (discuss next)

\[\pi_{i+1}(s) = \arg\max_a \left\{ R(s,a) + \gamma \sum_{s' \in S} P(s'|s,a)V^{\pi_i}(s') \right\} = \arg\max_a Q^{\pi_i}(s,a)\]

which monotonically improves (strict inequality) the policy until optimal policy

-

Now, we want to do the above two steps without access to the true dynamics and reward models. Notice that essentially policy improvement does $\arg \max_a Q^\pi$, so we want to find a way to estimate $Q^\pi$ directly without knowing world.

- previously we have only discussed how to do policy evaluation $V^\pi$ without the world, but not how to improve it

Therefore, we consider the following model-free policy iteration framework:

-

initialize $\pi_0(s)$ randomly for all $s$

-

repeat

-

policy evaluation: compute $Q^{\pi_i}(s,a)$

-

policy improvement: update $\pi_{i+1}$ by:

\[\pi_{i+1} = \arg\max_a Q^{\pi_i}(s,a)\]

-

But how do we estimate $Q^\pi$?

- MC for On Policy Q Evaluation (first visit and every visit)

MC for On Policy Q Evaluation

The idea is basically the same as $V^\pi$ evaluation, where we consider:

-

Initialize $N(s,a)=0$, $G(s,a)=0, Q^\pi(s,a)=0,\forall s \in S, \forall a \in A$

- in contrast to $V^\pi$ evaluation where we had $N(s)=0, G(s)=0$, etc.

-

loop

-

sample an episode $i=(s_{i,1},a_{i,1},r_{i,1},s_{i,2},a_{i,2},r_{i,2},….,s_{i,T_i})$

-

define $G_{i,t}$ being the return in this $i$-th episode from time $t$ onwards

\[G_{i,t}=r_{i,t}+\gamma r_{i,t+1} + \gamma^2 r_{i,t+2} + ... + \gamma^{T_i-1} r_{i,T_i}\] -

for each state-action pair $(s,a)$ visited in the episode $i$

- for the first time or every time $t$ that the pair $(s,a)$ is visited

- increment counter of total first visits for that state $N(s,a)=N(s,a)+1$

- increment total return $G(s,a)=G(s,a)+G_{i,t}$

- Update estimate $Q^\pi(s,a)=G(s,a)/N(s,a)$

- for the first time or every time $t$ that the pair $(s,a)$ is visited

-

Notice that a problem with this algorithm: we can only evaluate $Q^\pi(s,a)$ for state-action pairs that we have experienced. And if $\pi$ is deterministic, we can’t compute $Q^\pi(s,a)$ for any $a\neq \pi(s)$. This means that we cannot say anything about new actions.

The same problem goes with improvement, $\pi_{i+1} = \arg\max_a Q^{\pi_i}(s,a)$ since we have initialized $Q^\pi(s,a)=0,\forall a \in A,\forall s \in S$.

- one solution to deal with this is optimistic initialization so we initialize a high $Q^\pi(s,a)$ to promote exploration

This means that our policy improvement will only look at actions we have taken, i.e. it will not explore new state-action pairs so that

\[\pi_{i+1} = \arg\max_a Q^{\pi_i}(s,a)\]will only end up choosing actions visited by $s,\pi(s)$.

This means we may need to modify the policy evaluation algorithm to include non-deterministic state-action pairs for $Q^\pi(s,a)$

Policy Evaluation with Exploration

Recall that the definition of $Q^\pi(s,a)$ was:

\[Q^\pi(s,a) = R(s,a)+\gamma \sum_{s' \in S}P(s'|s,a)V^\pi(s')\]so to find a good evaluation of $Q^\pi(s,a)$, we need to:

- explore every possible action $a$ from state $s$, to record $R(s,a)$

- after that, follow policy $\pi$ to get $\gamma \sum_{s’ \in S}P(s’\vert s,a)V^\pi(s’)$

Insight: since we need to explore every possible action, consider the simple idea to balance exploration and exploitation

\[\pi(a|s) = \begin{cases} \pi(s),& p=1-\epsilon\\ a \in A, & p = \epsilon / |A| \end{cases}\]which is called the epsilon-greedy policy w.r.t a deterministic policy $\pi(s)$.

This also means we can also define $\epsilon$-greedy policy w.r.t. a state-action value $Q(s,a)$

\[\pi(a|s) = \begin{cases} \arg\max_a Q(s,a),& p=1-\epsilon\\ a \in A, & p = \epsilon / |A| \end{cases}\]so that you will see we can use this to change the update rule we had, which is $\pi_{i+1} = \arg\max_a Q^{\pi_i}(s,a)$.

For Example: Mars Rovers again, with 7 states:

-

Now we specify rewards for both actions as we need to compute $Q(s,a)$

\[r(\cdot, a_1) = [1,0,0,0,0,0,10]\\ r(\cdot, a_2) = [0,0,0,0,0,0,5]\] - assume current greedy policy $\pi(s)=a_1$

- take $\gamma =1, \epsilon = 0.5$

Then, using $\epsilon$-greedy w.r.t to $\pi(s)$, we got a sampled trajectory of

\[(s_3,a_1,0,s_2,a_2,0,s_3,a_1,0, s_2,a_2,0, s_1,a_1,1, \text{terminal})\]Question: What is the first visit MC estimate of $Q$ of each $(s,a)$ pair?

- $Q^{\epsilon-\pi}(\cdot,a_1)=[1,0,1,0,0,0,0]$ since we are doing MC, we propagates the end-of-episode reward to all states

- $Q^{\epsilon-\pi}(\cdot, a_2)=[0,1,0,0,0,0,0]$ same reason as above

- notice that without $\epsilon$-greedy, we would have never got $(s_2,a_2,0)$, hence we would have $Q^{\epsilon-\pi}(\cdot, a_2)=[0,0,0,0,0,0,0]$

$\epsilon$-greedy Policy Improvement

Recall that we previous thought of the following as the framework of Model free PI:

-

set $i=0$

-

initialize $\pi_0(s)$ randomly for all $s$

-

while $i==0$ or $\vert \vert \pi_i - \pi_{i-1}\vert \vert _1 > 0$ being the L1-norm

-

$V^{\pi_i}$ being the MDP policy evaluation of $\pi_i$

-

$\pi_{i+1}$ being the policy improvement for $\pi_i$ (discuss next)

\[\pi_{i+1}(s) = \arg\max_a \left\{ R(s,a) + \gamma \sum_{s' \in S} P(s'|s,a)V^{\pi_i}(s') \right\} = \arg\max_a Q^{\pi_i}(s,a)\]

which monotonically improves (strict inequality) the policy until optimal policy

-

And we had the problem of $Q^\pi(s,a)$ only containing updates for very small set of $(s,a)$ pairs that we followed a deterministic $\pi(s)$. This means that policy improvement will not try new actions.

Now, the idea is to replace the update step w.r.t the stochastic $\epsilon$-greedy policy $\pi_i$, so that we consider from a $Q^{\pi_i}$ that:

\[\pi_{i+1}(a|s) = \begin{cases} \arg\max_a Q^{\pi_{i}}(s,a),& p=1-\epsilon\\ a \in A, & p = \epsilon / |A| \end{cases}\]being the new update rule, which now can cover a wider range of $(s,a)$ pairs hence our updated $\pi_{i+1}$ will also contain new actions.

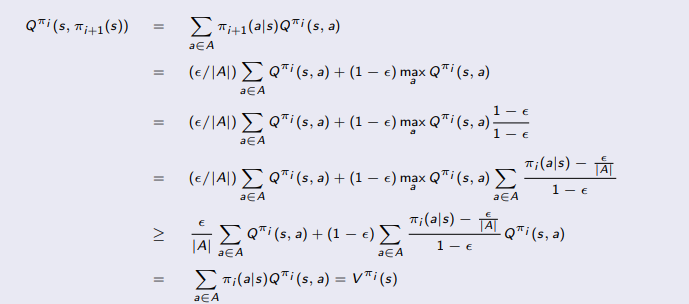

But does this actually provide a monotonic improvement as well (as in the determinstic case)?

Theorem: For any $\epsilon$-greedy policy $\pi_i$, the $\epsilon$-greedy policy $\pi_{i+1}$ w.r.t $Q^{\pi_i}$ is a monotonic improvement, such that $V^{\pi_{i+1}} \ge V^{\pi_i}$

- with this theorem, we can have the MC On Policy Improvement/Control algorithm which uses this to provide a better estimate of $Q^\pi(s,a)$ and improve $\pi$

Proof: We just need to show that the value for taking this new policy is higher:

where basically:

- on the fourth equality, we utilize the fact that $\sum_{a}\pi_i(a\vert s)=1$

- in the last equality, we basically cancelled out the $1-\epsilon$ again and obtained our result

- this of course assumes that you know exactly $Q^{\pi_i}$ for a policy $\pi_i$.

- in case when you have an estimate of $Q^{\pi_i}$, then there is no guarantee of monotonic policy improvement. This will happen a lot in function approximation when we have a continuous state space.

This shows that by following $\pi_{i+1}$ the first step and then following $\pi_i$, we get a higher value. Then, we can show $V^{\pi_{i+1}} \ge V^{\pi_i}$ in the similar fashion as we proved the policy improvement by pushing out the $\pi_{i+1}$ policy to future terms.

Finally, we need guarantees on convergence

**GLIE **(Greedy in the Limit of Infinite Exploration):

all state-action pairs are visitied an infintie number of times

\[\lim_{i\to \infty} N_i(s,a) = \infty\](satisfied as we have stochastic policy)

Behavior policy (the one we used to sample trajectory) converges to greedy policy (deterministic)

\[\lim_{i \to \infty} \pi(a|s) \to \arg\max Q^\pi(s,a)\]

A practice, instead of having $i \to \infty$, we can also reduce $\epsilon$ to have $\epsilon_i = 1/i$ to also satisfy GLIE (over time we have $0$ prob for exploration).

Theorem: GLIE Monte-Carlo control converges to the optimal state-action value function

\[Q(s,a) \to Q^*(s,a)\]from which we can find the optimal policy easily by taking $\arg\max_a Q^*(s,a)$

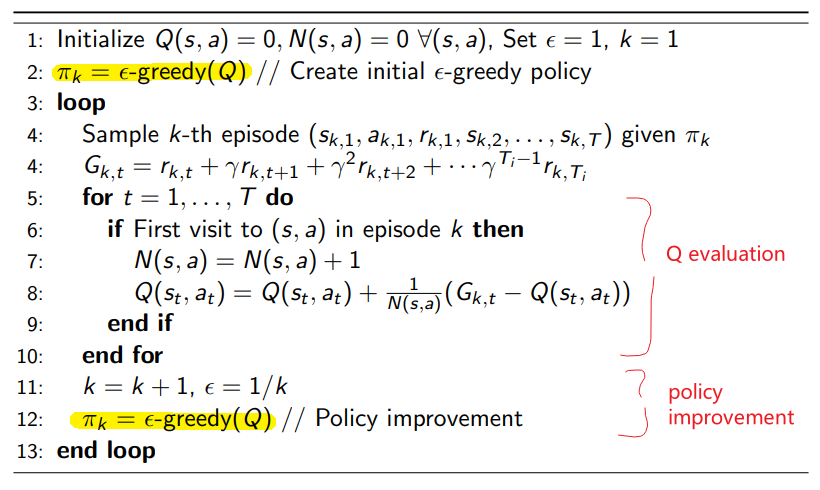

MC On Policy Improvement/Control

Finally, putting everything together, we have the MC On-policy Policy Improvement algorithm as:

which basically combines:

-

and $\epsilon$-Greedy$(Q)$ means that

\[\pi_{k}(a|s) = \begin{cases} \arg\max_a Q(s,a),& p=1-\epsilon\\ a \in A, & p = \epsilon / |A| \end{cases}\]

For Example: Mars Rovers again, with 7 states:

-

Now we specify rewards for both actions as we need to compute $Q(s,a)$

\[r(\cdot, a_1) = [1,0,0,0,0,0,10]\\ r(\cdot, a_2) = [0,0,0,0,0,0,5]\] - assume current greedy policy $\pi(s)=a_1$

- take $\gamma =1, \epsilon = 0.5$

Then, using $\epsilon$-greedy w.r.t to $\pi(s)$, we got a sampled trajectory of

\[(s_3,a_1,0,s_2,a_2,0,s_3,a_1,0, s_2,a_2,0, s_1,a_1,1, \text{terminal})\]So we know the first visit MC estimate of $Q$ of each $(s,a)$ pair gives:

- $Q^{\epsilon-\pi}(\cdot,a_1)=[1,0,1,0,0,0,0]$ since we are doing MC, we propagates the end-of-episode reward to all states

- $Q^{\epsilon-\pi}(\cdot, a_2)=[0,1,0,0,0,0,0]$ same reason as above

now, given the policy evaluation:

Question: what is the greedy policy $\pi(s)$ w.r.t this $Q^{\epsilon - \pi}$?

- simply $\pi(s) = [a_1,a_2,a_1,\text{tie},\text{tie},\text{tie},\text{tie}]$

Question: therefore, what is the new improved policy $\pi_{k+1}=\epsilon\text{-greedy}(Q)$ if $k=3$?

-

this means $\epsilon=1/3$

-

then, from the above result, this means

\[\pi_{k+1}(a|s) = \begin{cases} [a_1,a_2,a_1,\text{tie},\text{tie},\text{tie},\text{tie}], & p=2/3\\ a \in A, & p = 1/6 \end{cases}\]

TD On-Policy Policy Iteration

Recall that the general framework for policy iteration in a model-free case

- initialize $\pi_0(a\vert s)$ randomly for all $s$

- repeat

- policy evaluation: compute $Q^{\pi_i}(s,a)$

- policy improvement: update $\pi_{i+1}$ using $Q^{\pi_i}$

Recall that in the section Policy Evaluation with Unknown World, we had two ways of doing policy evaluation, either doing MC or doing TD. We have come up with a way to compute $Q^\pi(s,a)$ using MC method, therefore, another variant is to use TD method.

Hence, the general algorithm for TD based On-Policy Policy Iteration looks like

-

initialize $\pi_0(a\vert s)$ randomly for all $s$

-

repeat

-

policy evaluation using TD: compute $Q^{\pi_i}(s,a)$

-

policy improvement (same as MC): update $\pi_{i+1}$ using $Q^{\pi_i}$ by

\[\pi_{i+1} = \epsilon\text{-Greedy}(Q^{\pi_i})\]

-

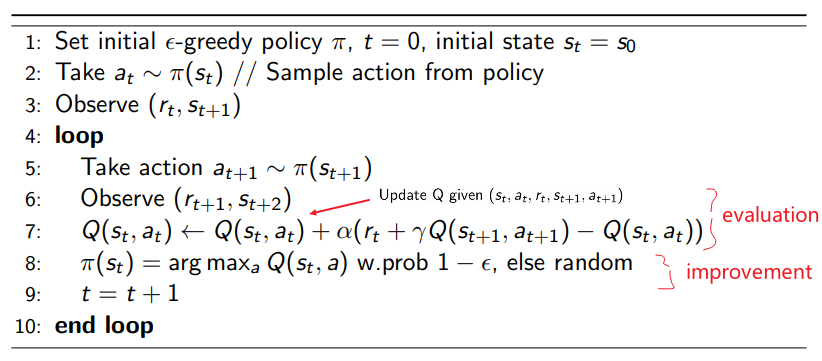

SARSA

Essentially using TD for policy evaluation:

where:

-

the key change is the update rule

\[Q(s_t,a_t) =Q(s_t,a_t)+\alpha( \underbrace{[r_t+\gamma Q(s_{t+1},a_{t+1})]}_{\text{TD target}}-Q(s_t,a_t))\]which can perform update per sample instead of waiting until end-of-episode (in MC case).

-

therefore, notice that the policy are updated much more frequently as well (as compared to the MC case)

-

the $\alpha$ used is also often called the learning rate in this context

Note that we discarded the notation of $Q^\pi$ here and used $Q$, as we are frequently changing the policy when computing this running estimate to converge to $Q^*(s,a)$

Convergence Properties of SARSA

Theorem: SARSA for finite-state and finite-action MDPs converges to the optimal action-value, $Q(s,a)\to Q^*(s,a)$ ,under the following conditions:

the policy sequence $\pi_t(a\vert s)$ satisfies GLIE

the step size/learning rate $\alpha_t$ satisfy the Robbins-Munro sequence such that

\[\sum_{t=1}^\infty \alpha_t =\infty\\ \sum_{t=1}^\infty \alpha_t^2 < \infty\]an example would be $\alpha_t = 1/t$

But as the above are conditions sufficient to guarantee convergence, in reality

- we could also simply use $\alpha$ being some small constants and empirically they often converge as well

- there are also some domains that is hard to satisfy GLIE (e.g. helicopter crashed, can no longer visit some other states). Some research has been working on how to deal with that, but for now we will not worry about it.

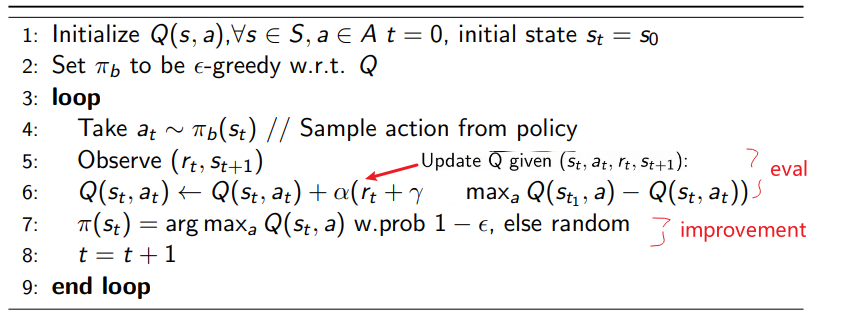

Q-Learning

The only change will be in SARSA

\[Q(s_t,a_t) =Q(s_t,a_t)+\alpha( \underbrace{[r_t+\gamma Q(s_{t+1},a_{t+1})]}_{\text{TD target}}-Q(s_t,a_t))\]but for Q-learning we consider the update rule:

\[Q(s_t,a_t) =Q(s_t,a_t)+\alpha( \underbrace{[r_t+\gamma \max_{a'} Q(s_{t+1},a')]}_{\text{TD target}}-Q(s_t,a_t))\]so that

-

instead of taking $a_{t+1}\sim \pi(a\vert s_{t+1})$, which is being realistic (in SARSA), we are being optimistic so that we update our $Q(s_t,a_t)$ by the best value we could possibly get using $\max_{a’} Q(s_{t+1},a’)$

-

this means that in cases where we have lots of negative reward/risks in early stages (e.g. cliff walking), SARSA would produce better policy whereas Q-Learning would produce more risky policy

Hence the entire algorithm looks like

where notice that:

- we no longer need the $a_{t+1}$ in the five tuple $(s_t, a_t, r_t, s_{t+1}, a_{t+1})$ in SARSA, since we will be taking the next best action

- since we are being optimistic, does how Q is initialized matter? Asymptotically no, under mild conditions, but at the beginning, yes

- finally, like SARSA it is a policy based on TD, this means that the final reward will only propagate slowly to other states.

Convergence Properties of Q-Learning

What conditions are sufficient to ensure that Q-learning with $\epsilon$-greedy exploration converges to optimal $Q^*$ ?

- Visit all $(s, a)$ pairs infinitely often

- and the step-sizes αt satisfy the Robbins-Munro sequence.

- Note: the algorithm does not have to be greedy in the limit of infinite exploration (GLIE) to satisfy this (could keep $\epsilon$ large).

What conditions are sufficient to ensure that Q-learning with $\epsilon$-greedy exploration converges to optimal $\pi^*$ ?

- The algorithm is GLIE (i.e. policy being more and more greedy over time), along with the above requirement to ensure the Q value estimates converge to the optimal Q.

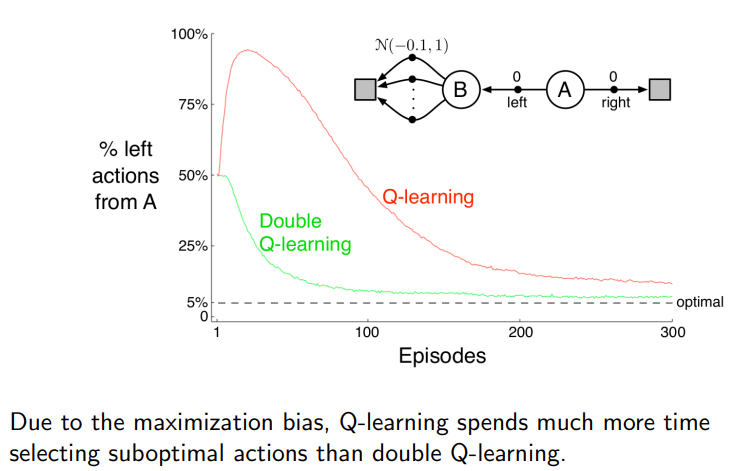

Maximization Bias

Because Q-Learning exploits the greedy action early on, we could have a maximization bias when estimating the value of a policy.

Consider the case of a single state MDP, so that $\vert S\vert =1$, with two actions. And suppose the two actions both have mean random rewards of zero: $\mathbb{E}[r\vert a=a_1]=\mathbb{E}[r\vert a=a_2]=0$

- then this means that $Q(s,a_1)=Q(s,a_2)=0=V(s)$ is the true estimate and is optimal.

In practice, we can only do finite samples taking action $a_1,a_2$. Let $\hat{Q}(s,a_1),\hat{Q}(s,a_2)$ be finite sample estimate of $Q$

-

suppose we used an unbiased estimate of $\hat{Q}(s,a)$ by taking the mean

\[\hat{Q}(s,a_1) = \frac{1}{N(s,a_1)}\sum_{i=1}^{N(s,a_1)}r_i(s,a_1)\] -

Let $\hat{\pi}=\arg\max \hat{Q}(s,a)$ be the greedy policy w.r.t the estimated $\hat{Q}$

-

then we notice that:

\[\begin{align*} \hat{V}^{\hat{\pi}} &=\mathbb{E}[\max (\hat{Q}(s,a_1),\hat{Q}(s,a_2))]\\ &\ge\max(\mathbb{E}[\hat{Q}(s,a_1)],\mathbb{E}[\hat{Q}(s,a_2)]\\ &= \max[0,0]\\ &= V^\pi \end{align*}\]meaning we have a biased estimator of $V^{\hat{\pi}}$ for the optimal policy.

To solve this, the idea is to instead split samples and use to create two independent unbiased estimates of $Q_1(s_1, a_i)$ and $Q_2(s_1, a_i),\forall a$.

- Use one estimate to select max action: $a^* = \arg\max_a Q_1(s_1,a)$

- Use other estimate to estimate value of $a^:Q_2(s,a^)$

- Yields unbiased estimate: $\mathbb{E}[Q_2(s,a^)]=Q(s,a^)$

This therefore gives birth to the double Q-Learning algorithm

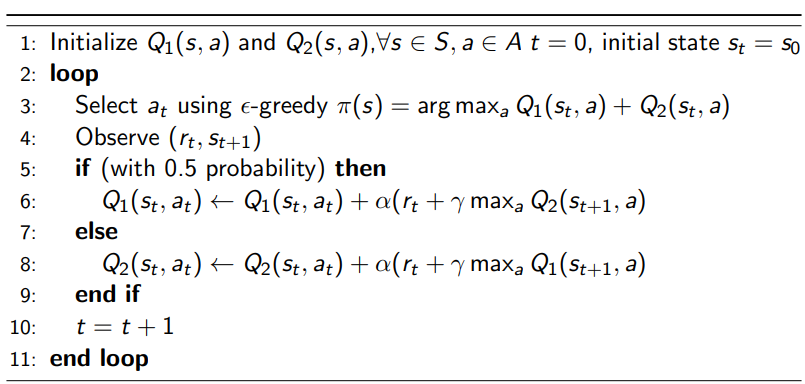

Double Q-Learning

So the only difference is now we have two $Q$ estimates:

where in practice compared to Q-Learning:

- doubles the memory

- same computation requirements

- data requirements are subtle– might reduce amount of exploration needed due to lower bias

Additionally

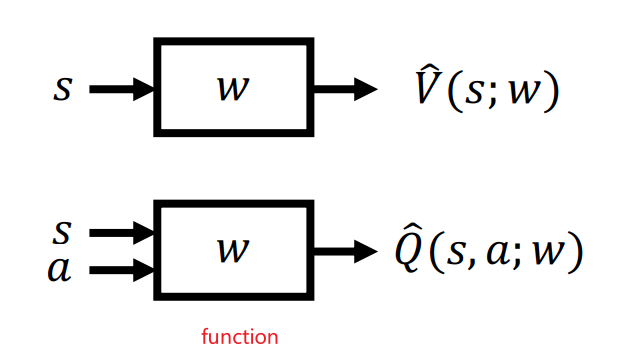

Value Function Approximation

In the previous section, we discussed how to do control (make decisions) without a known model of how the world works:

- learning a good policy from experience (sampled episodes)

- update $Q$ estimate using a tabular representation: finite number of state-action pair

However, as you can imagine many real world problems have enormous state and/or action space so that we cannot really tabulate all possible values. So we need to somehow generalize to those unknown state-actions.

Aim: even if we encounter state-action pairs not met before, we want to make good decisions by past experience.

Value Function Approximation: represent a (state-action/state) value function with a parametrized function instead of a table, so that even if we met an inexperienced state/state-action, we can get some values.

So we imagine

so that essentially we are parameterized the function with weights $W$ as in a deep neural network.

Using such an approach has the following benefits:

- Reduce memory needed to store $(P,R)/V/Q/\pi$

- Reduce computation needed to compute $(P,R)/V/Q/\pi$

- Reduce experience/data needed to find a good $(P,R)/V/Q/\pi$

but this will usually be a tradeoff:

- representational capacity (of the DNN to represent states) v.s. memory/computation/data needed

Then most importantly, we need to consider what class of functions do we consider for such an approximation? Many possible function approximators including

- Linear combinations of features

- Neural networks

- Decision trees (useful for being highly interpretable)

- Nearest neighbors

- Fourier/ wavelet bases

But here we will focus on function approximators that are differentiable, so that we can easily optimize for (e.g. gradient descent). Two popular classes of differentiable function approximators include:

- linear feature representations (this section)

- neural networks (next section)

Also notice that since we are now doing gradient descent, our approximator will be local optimas instead of the global optimas.

VFA for Policy Evaluation

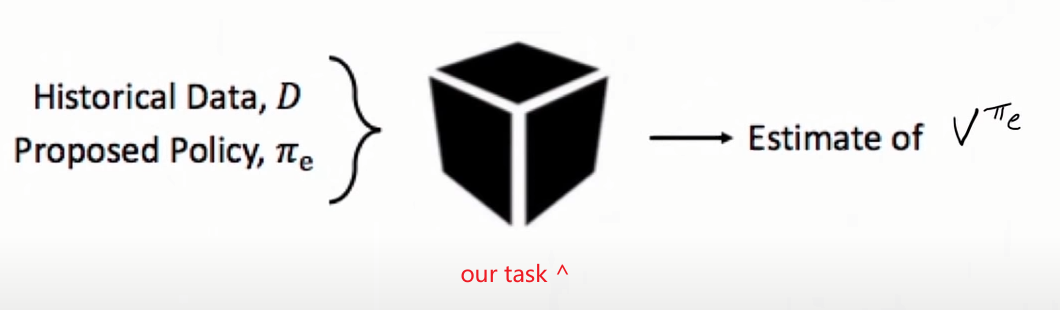

Aim: find the best approximate representation of $V^\pi$ using a parametrized function $\hat{V}$.

VFA for Policy Evaluation with Oracle

In this case, we consider:

- given a policy $\pi$ to evaluate

- assume that there is an oracle that returns the true value for $V^\pi(s)$

so we basically want our function approximation to look like the true $V^\pi(s)$ in our space.

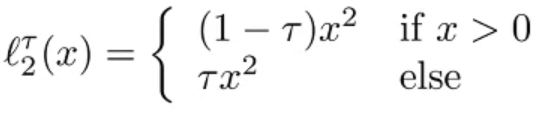

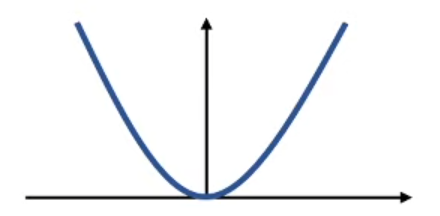

Then we can simply perform gradient descent type methods. For instance,

-

loss function

\[L(w) = \frac{1}{2} \mathbb{E}_\pi[(V^\pi(s) - \hat{V}(s;w))^2]\]where $\mathbb{E}_\pi$ means expected value over the distribution of states under current policy $\pi$

-

then gradient update is

\[w:=w - \alpha \left( \nabla_w L(w) \right)\]and

\[\nabla_w L(w) = \mathbb{E}_\pi[(V^\pi(s)- \hat{V}(s;w))\nabla_w \hat{V}(s)]\]

Then, for instance SGD considers using some batched/single sample to approximate the expected value:

\[\nabla_w L(w) \approx (V^\pi(s)- \hat{V}(s;w))\nabla_w \hat{V}(s)\]for some sampled $s,V^\pi(s)$.

If we are considering a Linear Value Function Approximation with an Oracle, then simply we consider

\[\hat{V}(s;w) = \vec{x}(s)^T \vec{w}\]for $\vec{x}(s)$ being a representation of our state. Then we acn also show the gradient to be:

\[\nabla_w L(w) = \mathbb{E}_\pi[(V^\pi(s)- \hat{V}(s;w)) \vec{x}(s)]\]since $\nabla_w \hat{V} = \vec{x}(s)$ in this case.

Model Free VFA Policy Evaluation

Obviously most of the time we do not have an oracle, which is like knowing the model of the world already.

Aim: do model-free value function approximation for prediction/evaluation/policy evaluation without a model/oracle

- recall that this means given a fixed policy $\pi$

- estimate its $V^\pi$ or $Q^\pi$

Recall that we have done model-free policy evaluation using tabular methods in:

- Monte Carlo Policy Evaluation: update estimate after each episode

- Temporal Difference Learning: update estimate after each step

both of which essentially does:

- maintain a look up table to store current estimates $V^\pi$ or $Q^\pi$

- Updated these estimates after each episode (Monte Carlo methods) or after each step (TD methods)

In VFA, we can basically change the update step to be fitting the function approximation (e.g. gradient descent)

This means that we need to have prepared:

-

a feature vector to represent a state $s$

\[x(s) = \begin{bmatrix} x_1(s)\\ x_2(s)\\ \dots\\ x_n(s) \end{bmatrix}\]for instance, for robot navigation, it can be a 180-dimensional vector, with each cell representing the distance to the first detected obstacle. However, notice that this representation also means that it is not markov, as in different hallways (true states) you could have the same feature vector. But it could be still a good representation to condition our decision on.

-

choose a class of function approximators to approximate the value function

- linear function

- neural networks

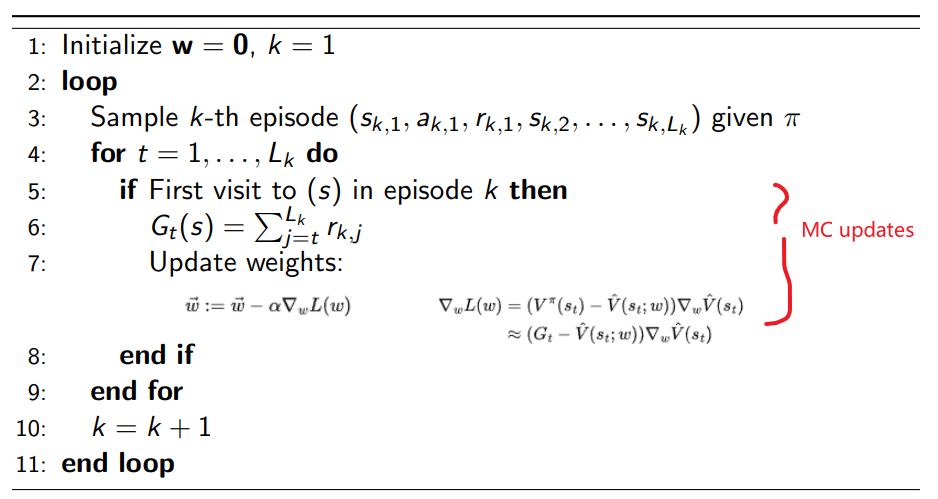

MC Value Function Approximation

Recall that for MC methods, we used the following update rule for value function updates

\[V^\pi(s)=V^\pi(s)+\alpha\left( G_{i,t}-V^\pi(s) \right)\]so that our target is $G_t$, which is an unbiased but noisy estimate of the true value of $V^\pi(s_t)$.

Idea: therere we treat $G_t$ being the “oracle” fo $V^\pi(s_t)$, from which we can get a loss and update our approximator.

This then means our gradient update (for SGD is):

\[\begin{align*} \nabla_w L(w) &=(V^\pi(s_t)- \hat{V}(s_t;w))\nabla_w \hat{V}(s_t)\\ &\approx (G_t- \hat{V}(s_t;w))\nabla_w \hat{V}(s_t) \end{align*}\]If we are using a linear function:

\[\nabla_w L(w) \approx (G_t- \vec{x}(s_t)^T \vec{w}) \vec{x}(s_t)\]and then just use $\vec{w} := \vec{w} - \alpha \nabla_w L(w)$ to do descent.

This means that we essentially reduce MC VFA to doing supervised learning on a set of (state,return) pairs: $(s_1, G_1), (s_2, G_2), …, (s_T, G_t)$

Therefore our algorithm with MC updates looks like

where notice that:

- of course you can also have an every-visit version by changing line 5

- since we have a finite episode, we used $\gamma=1$ in line 6

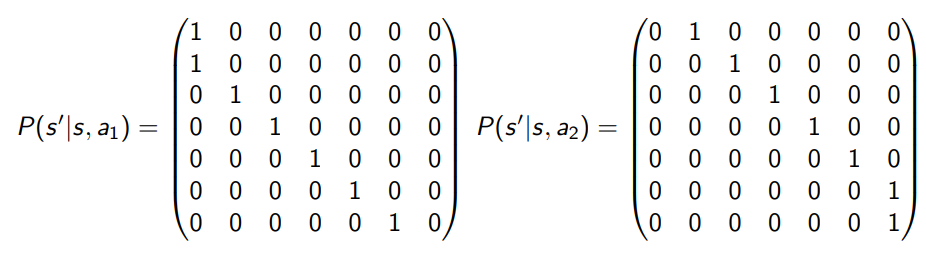

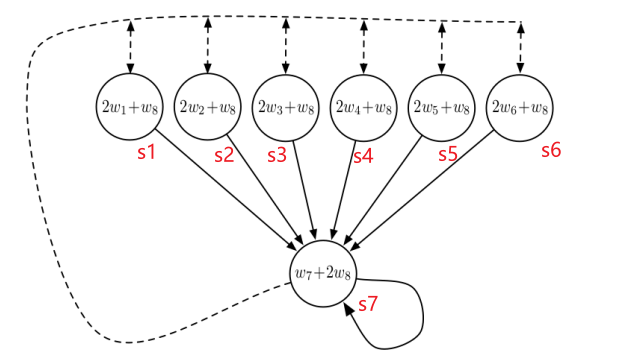

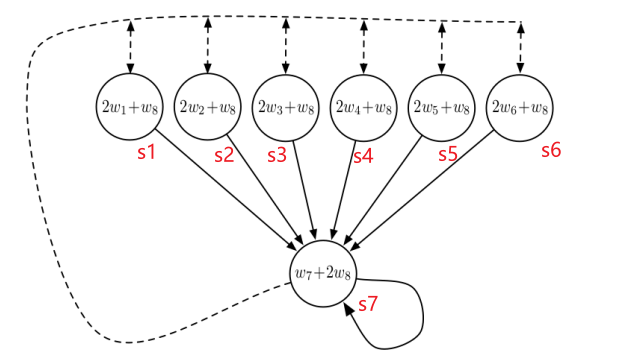

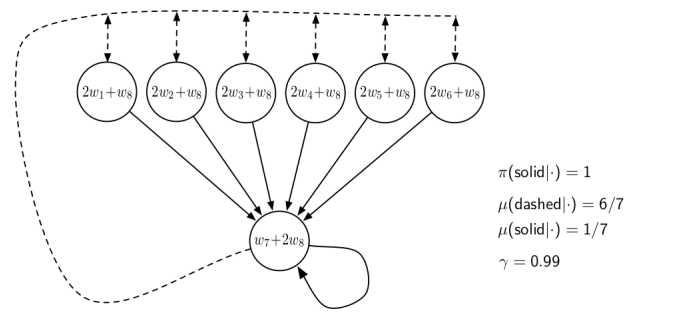

For instance: consider a 7 state space with the transition looking like:

so that:

- state $\vec{x}(s_1) = [2,0,0,0,0,0,1]$ so that $\vec{x}(s_1)^T \vec{w} = 2w_1+w_8$, etc.

- there are two states, $a_1$ being the solid lines and $a_2$ being the dashed lines.

- dashed transition means that here we have a uniform probability $1/6$ to be transitioned to any state $s_i$ for $i \in [1,6]$.

- we assume some small termination probability from state $s_7$, but not shown on the diagram

- all states have zero reward (so the optimal value function is $V^\pi = 0$)

Suppose we have sampled the following episode:

- $s_1, a_1, 0, s_7, a_1, 0, s_7, a_1, 0, \text{terminal}$

- we are using a linear function

- we have initialized all weights to one $\vec{w}_0 = [1,1,1,1,1,1,1]$

- $\alpha = 0.5$, take $\gamma = 1$

Question: what is the MC estimate of $V^\pi(s_1)$? $\vec{w}$ after one update?

- first we need $G_1$, which in this case is $0$

- our current estimate for $V^\pi(s_1)$ is $\vec{x}(s_1)^T \vec{w}_0 = 2+1=3$

-

therefore, our update for one step is:

\[\Delta w = -\alpha \cdot (0 - 3) \cdot \vec{x}(s_1) =-1.5\vec{x}(s_1)=[-3,0,0,0,0,0,-1.5]\] -

finally, our new weight is therefore $w + \Delta w$:

\[\vec{w}_1 = [-2,1,1,1,1,1,-0.5]\]so essentially we are doing SGD per state in the episode.

But does such an update converge to the right thing?

Convergence for MC Linear Value Function Approximation

Recall that if provided a policy, then MDP problem is reduced to a Markov Reward Process (by following that policy). Therefore, if we eventually sample many episodes, we get a probability distribution over states $d(s)$, such that:

- $d(s)$ is the stationary distribution over states following $\pi$

- then obviously $\sum_s d(s) = 1$

Since it is stationary, this means that the distribution after a single transition gives the same $d(s)$:

\[d(s') = \sum_s \sum_a \pi(a|s)p(s'|s,a)d(s)\]must hold, if the markov process has ran long enough.

Using this distribution, we can consider the mean square error of our estimators for a particular policy $\pi$:

\[MSVE(\vec{w}) = \sum_{s\in S} d(s)\cdot (V^\pi(s) - \hat{V}^\pi(s;\vec{w}))^2\]for a linear function, we use $\hat{V}^\pi(s;\vec{w}) = \vec{x}(s)^T \vec{w}$.

Theorem: MC policy evaluation with VFA converges to the weights $\vec{w}_{MC}$ which has the minimum mean squared error possible:

\[MSVE(\vec{w}_{MC}) = \min_w \sum_{s\in S} d(s)\cdot (V^\pi(s) - \hat{V}^\pi(s;\vec{w}))^2\]note that the error might not be zero, e.g. using a linear approximator has only a small capacity.

Batch MC Value Function Approximation

The SGD version basically performs an update per sample, which is suitable for online scenario. However, often we could get a set of episodes already sampled from using a policy $\pi$. Then we can perform a better weight update by considering:

\[\arg\min_\vec{w} \sum_{i=1}^N (G(s_i)- \vec{x}(s_i)^T \vec{w})^2\]to approximate the expected value version of the MSVE. Then the optimal weights can be solved directly to be:

\[\vec{w} = (X^TX)^{-1} X^T \vec{G}\]for:

- $\vec{G}$ is a vector of all $N$ returns

- $X$ is a matrix of the features of each of the $N$ states $\vec{x}(s_i)$

- but of course this would be memory intensive as we need to store all $N$ states and returns.

- finally, you can obviously have something in between SGD and this full batch.

TD Learning with Value Function Approximation

First recall that TD method considers bootstrapping and sampling to approximate $V^\pi$, so that the update rule is based on per sample:

\[V^\pi(s) = V^\pi(s) + \alpha (r + \gamma V^\pi(s') - V^\pi(s))\]with the target being $r+\gamma V^\pi(s’)$, which is a biased estimate of the true $V^\pi$.

Idea: use function to represent $V^\pi$, and use boostrapping + sampling in TD method, with the same update rule shown above.

(recall that we are still on-policy, we are evaluating the value of a given policy $\pi$ and all sampled data + estimation are on the same policy)

Therefore, essentially we now consider TD larning being a supervised learning on the set of data pairs:

\[(s_1, r_1 + \hat{V}^\pi(s_2;\vec{w})), (s_2, r_2 + \hat{V}^\pi(s_3;\vec{w})), ...\]then the MSE loss is simply:

\[L(\vec{w}) = \mathbb{E}_\pi [ (r_j + \gamma \hat{V}^\pi(s_{j+1},\vec{w})) -\hat{V}(s_j; \vec{w})]\]Hence our gradient step with a SGD update (replacing mean with a single sample) is:

\[\Delta w = \alpha \cdot (r + \hat{V}^\pi(s';\vec{w}) - V^\pi(s;\vec{w})) \cdot \nabla_w \hat{V}^\pi(s;\vec{w})\]again, with target being $r+\gamma \hat{V}^\pi(s’;\vec{w})$

In the case of a linear function, then we have:

\[\begin{align*} \Delta w &= \alpha \cdot (r + \hat{V}^\pi(s';\vec{w}) - V^\pi(s;\vec{w})) \cdot \nabla_w \hat{V}^\pi(s;\vec{w})\\ &= \alpha \cdot (r + \hat{V}^\pi(s';\vec{w}) - V^\pi(s;\vec{w})) \cdot \vec{x}(s)\\ &= \alpha \cdot (r + \vec{x}(s')^T \vec{w} - \vec{x}(s)^T \vec{w}) \cdot \vec{x}(s)\\ \end{align*}\]so we are boostrapping our target using our current estimate of $V^\pi(s)$.

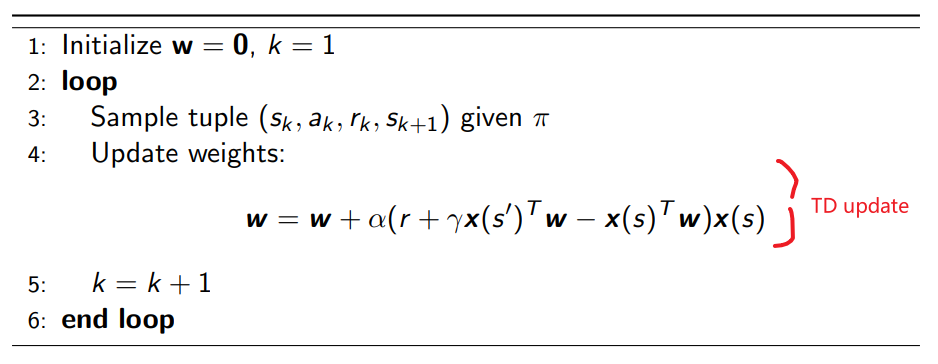

Finally, the algorithm therefore looks like

For instance: we can consider the same example with MC case to compare the difference:

so that:

- state $\vec{x}(s_1) = [2,0,0,0,0,0,1]$ so that $\vec{x}(s_1)^T \vec{w} = 2w_1+w_8$, etc.

- there are two states, $a_1$ being the solid lines and $a_2$ being the dashed lines.

- dashed transition means that here we have a uniform probability $1/6$ to be transitioned to any state $s_i$ for $i \in [1,6]$.

- we assume some small termination probability from state $s_7$, but not shown on the diagram

- all states have zero reward (so the optimal value function is $V^\pi = 0$)

Suppose we have sampled the following episode:

- $s_1, a_1, 0, s_7, a_1, 0, s_7, a_1, 0, \text{terminal}$

- we are using a linear function

- we have initialized all weights to one $\vec{w}_0 = [1,1,1,1,1,1,1]$

- $\alpha = 0.5$, take $\gamma = 0.9$

Question: what is the TD estimate of $V^\pi(s_1)$? $\vec{w}$ after one update of $(s_1,a_1,0,s_7)$?

-

using the update formula:

\[\Delta \vec{w} = \alpha (0+ 0.9 * 3 - 3)\vec{x}(s_1) = -0.3\alpha \vec{x}(s_1)\]which is a much smaller weight update than the MC update.

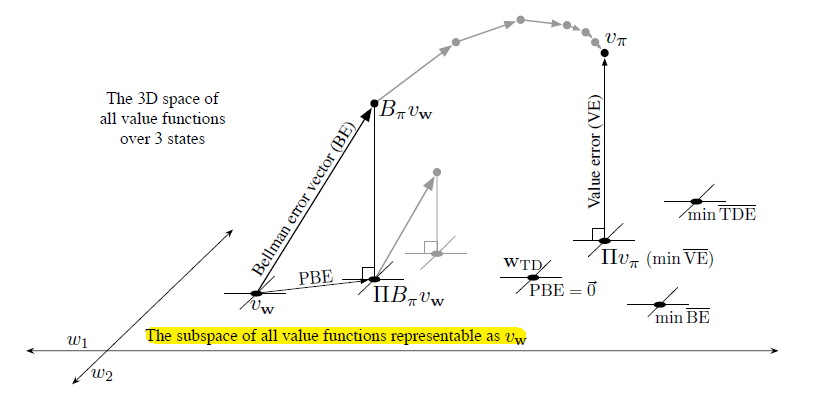

Convergence for TD Linear Value Function Approximation

As mentioned in the MC case, we consider the MSVE for our estimators for a particular policy $\pi$:

\[MSVE(\vec{w}) = \sum_{s\in S} d(s)\cdot (V^\pi(s) - \hat{V}^\pi(s;\vec{w}))^2\]for a linear function, we use $\hat{V}^\pi(s;\vec{w}) = \vec{x}(s)^T \vec{w}$.

Theorem: TD policy evaluation with VFA converges to the weights $\vec{w}_{TD}$ is within a constant factor of minmum mean squared error possible:

\[MSVE(\vec{w}_{TD}) \le \frac{1}{1-\gamma} \min_w \sum_{s\in S} d(s)\cdot (V^\pi(s) - \hat{V}^\pi(s;\vec{w}))^2\]so it is slightly worse than MC method as it is biased, but it updates/converges much faster.

As mentioned before, this happens also because we are using

- some feature representation for a state which might be a subspace of the true space of states

- in TD we are bootstrapping, which gives rise to bias and error

But this also means that if we have some one-hot encoded feature, one for each state, then:

\[\min_w \sum_{s\in S} d(s)\cdot (V^\pi(s) - \hat{V}^\pi(s;\vec{w}))^2 = 0\]as you can have a unique value per state, even with a linear function. Therefore:

-

if we used a MC method, then simply:

\[MSVE(\vec{w}_{MC})= \min_w \sum_{s\in S} d(s)\cdot (V^\pi(s) - \hat{V}^\pi(s;\vec{w}))^2 = 0\] -

if we used a TD method, then:

\[MSVE(\vec{w}_{TD})\le 0\]is also optimal, and MC v.s. TD has no difference.

(of course this also means we have enough data)

If our state representation is a subspace of the true state space, then using a TD might incur some error (due to bootstrapping error). But if the state representation is larger or equal to the true state space, then using a TD and MC has no difference.

Control using VFA

Now for control, essentially we consider moving from policy evaluation (previous sections) to policy iteration. This is basically achieved by:

- estimate $Q^\pi(s,a)$ instead, using MC or TD technique

- perform $\epsilon$-greedy policy improvement

However, this can get unstable because we had the folllowing components:

- function approximation (has uncertainty)

- bootstrapping (has uncertainty)

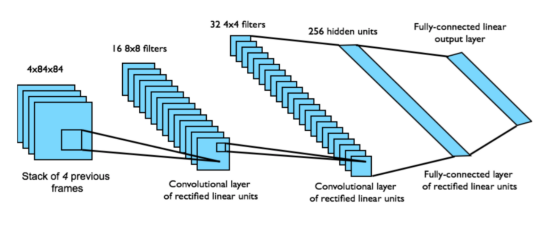

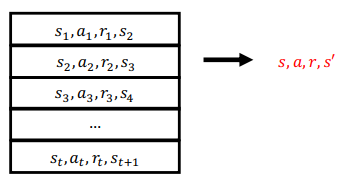

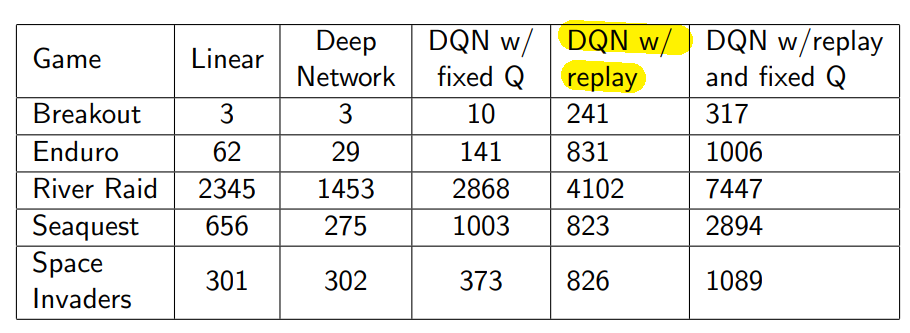

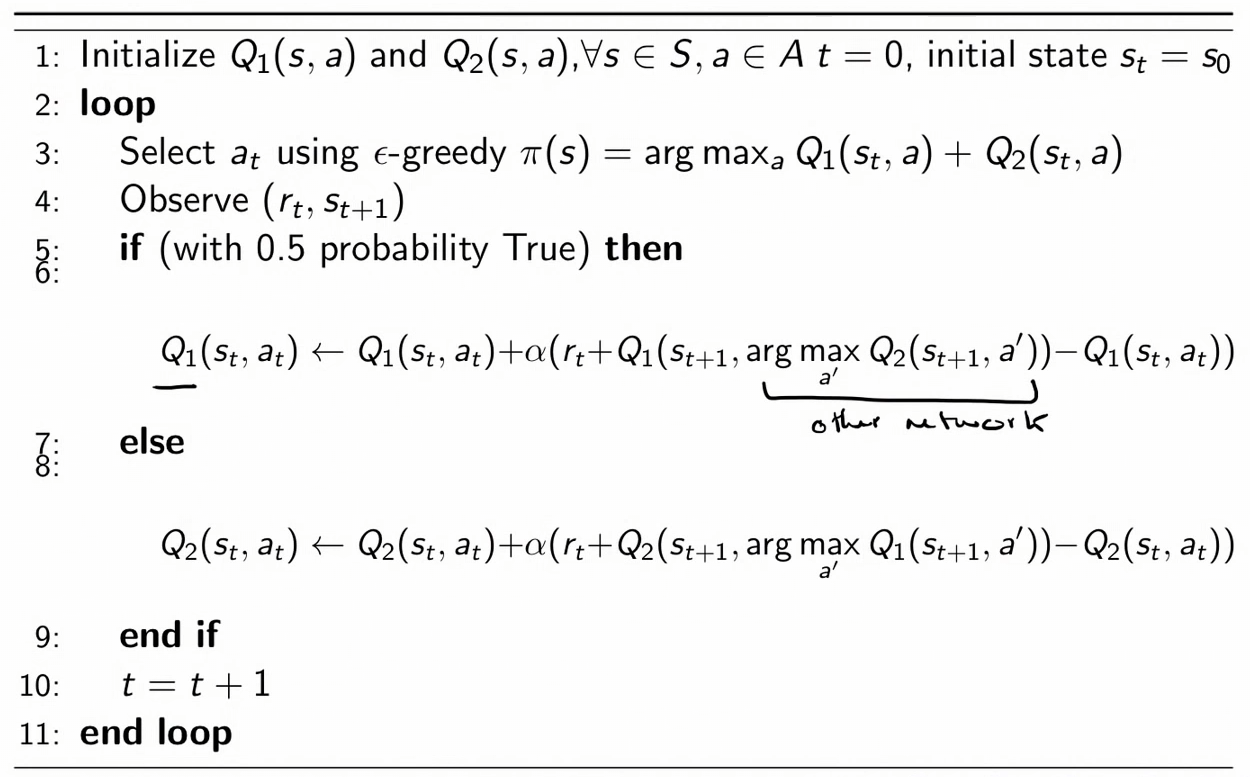

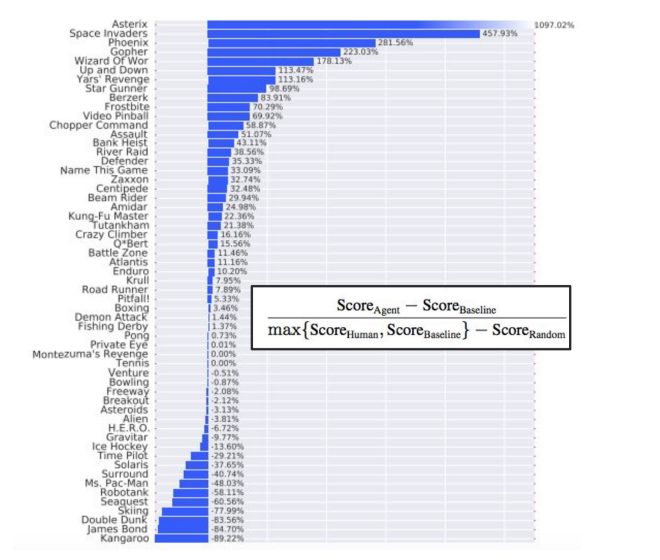

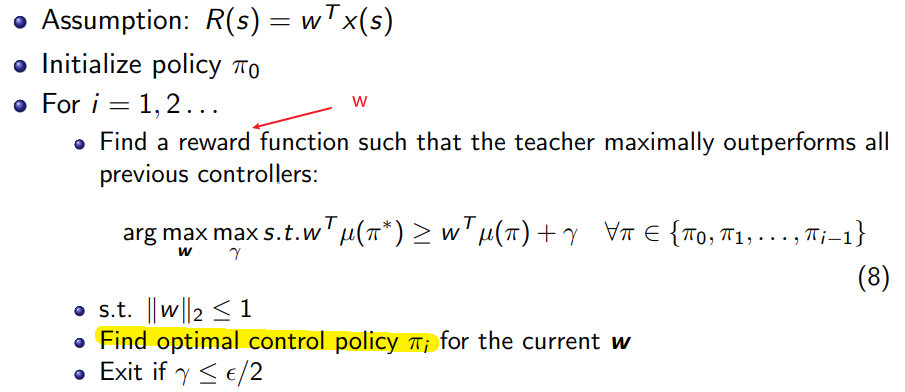

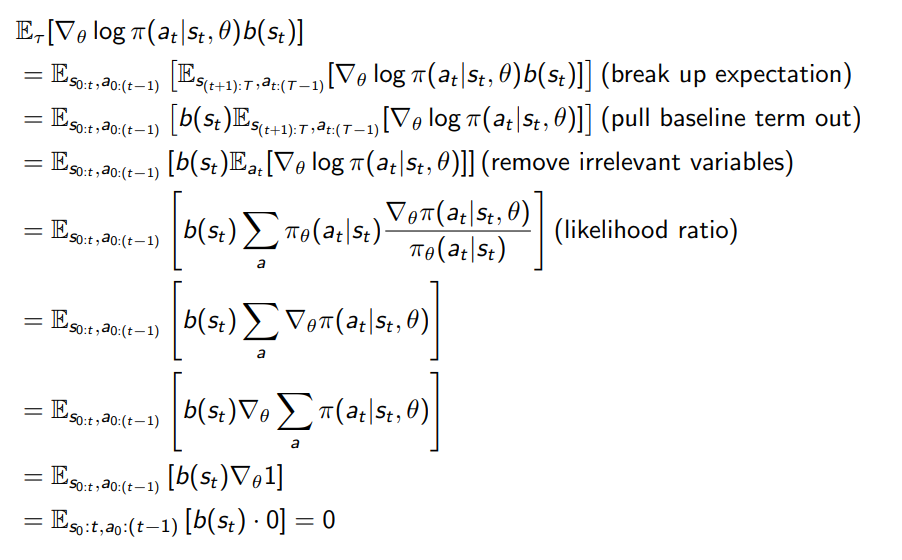

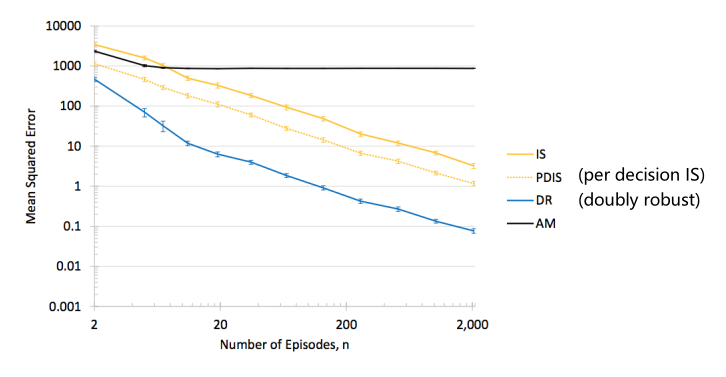

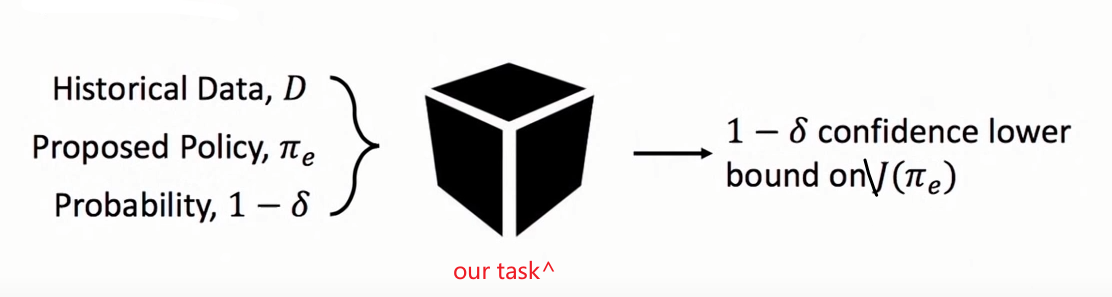

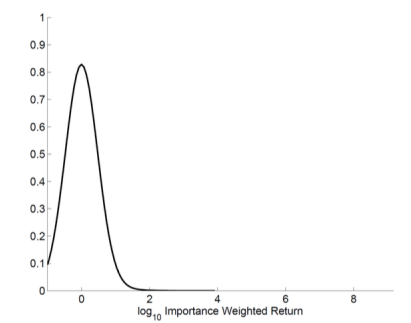

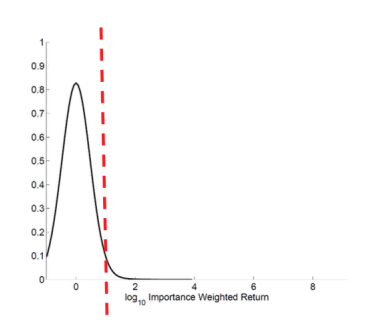

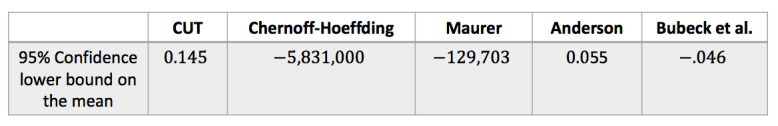

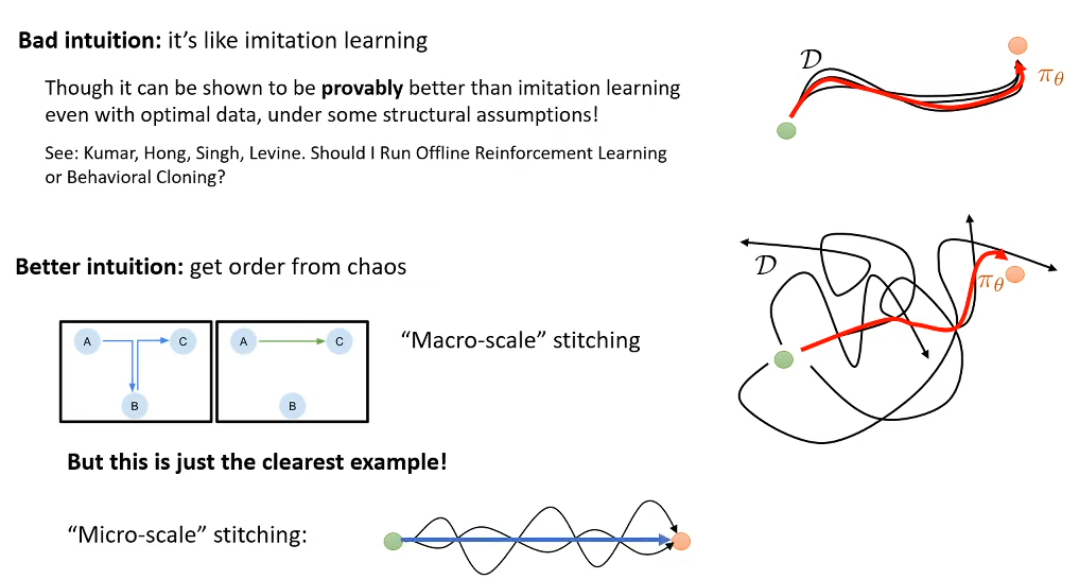

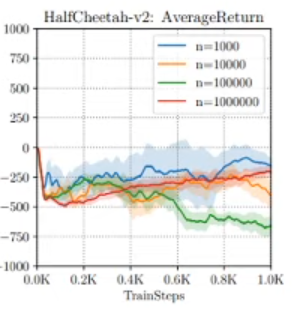

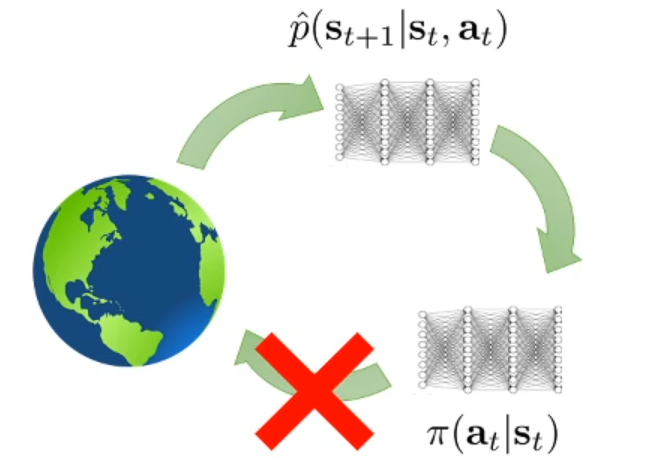

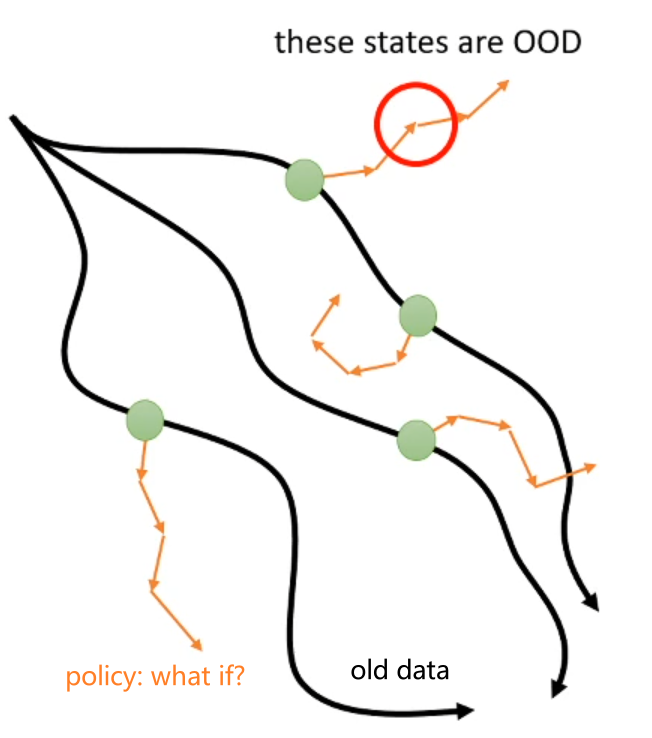

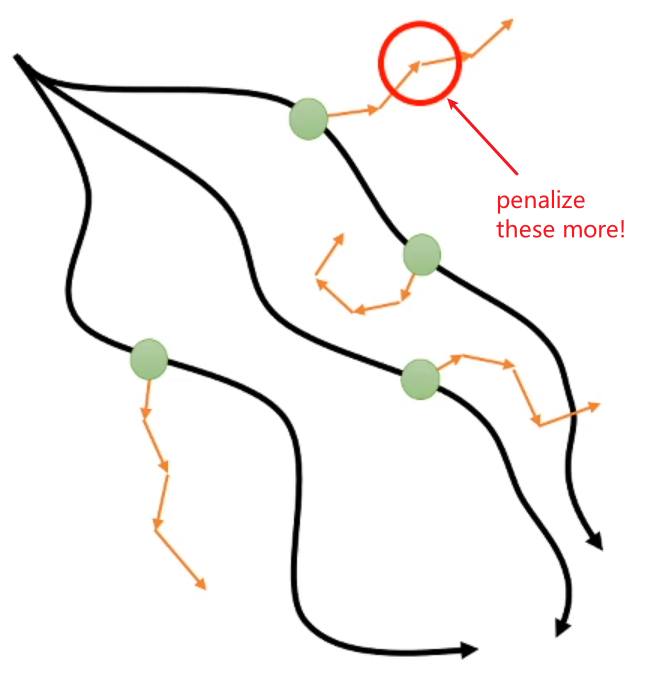

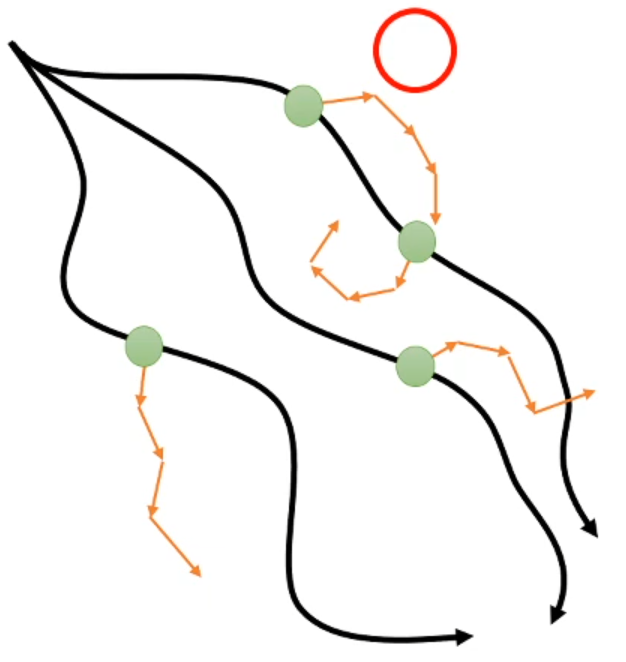

- off-policy learning for policy improvement (has the biggest uncertainty)