CSOR4231 Analysis of Algorithms

- Analysis of Algorithms

- Introduction

- Asymptotic Notation

- The Master Method

- Linear-Time Selection

- QuickSort

- Graphs: The Basics

- Dijkstra’s Shortest Path Algorithm

- Hash Tables and Bloom Filters

- Introduction to Greedy Algorithms

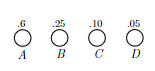

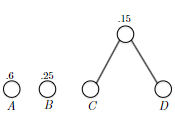

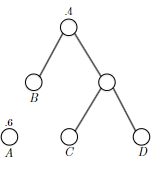

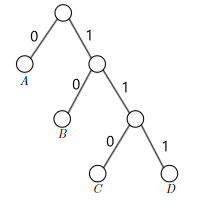

- Huffman Codes

- Prefix-Free Codes

- Huffman’s Greedy Algorithm

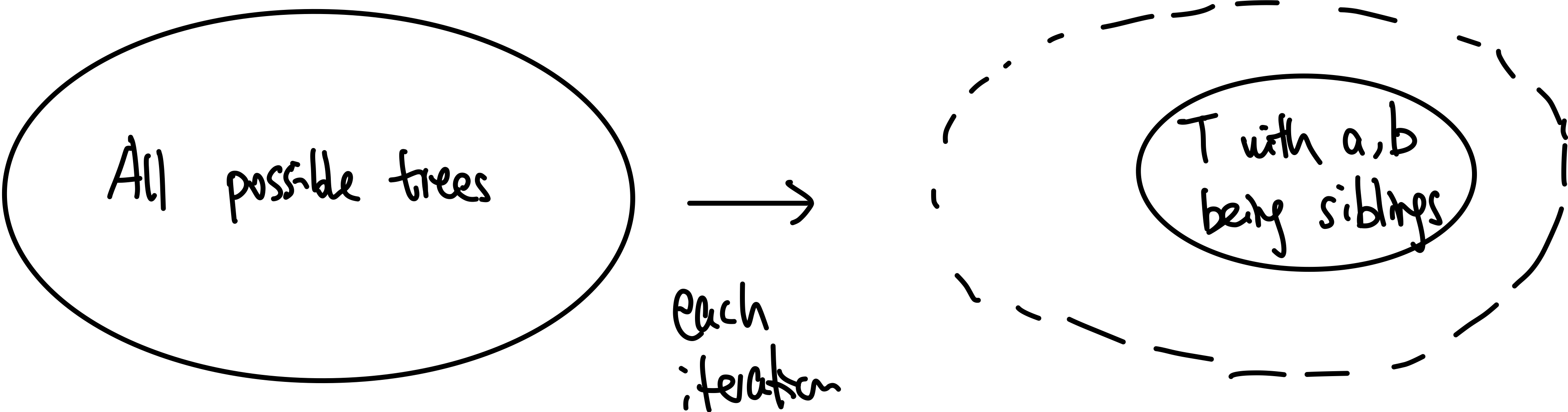

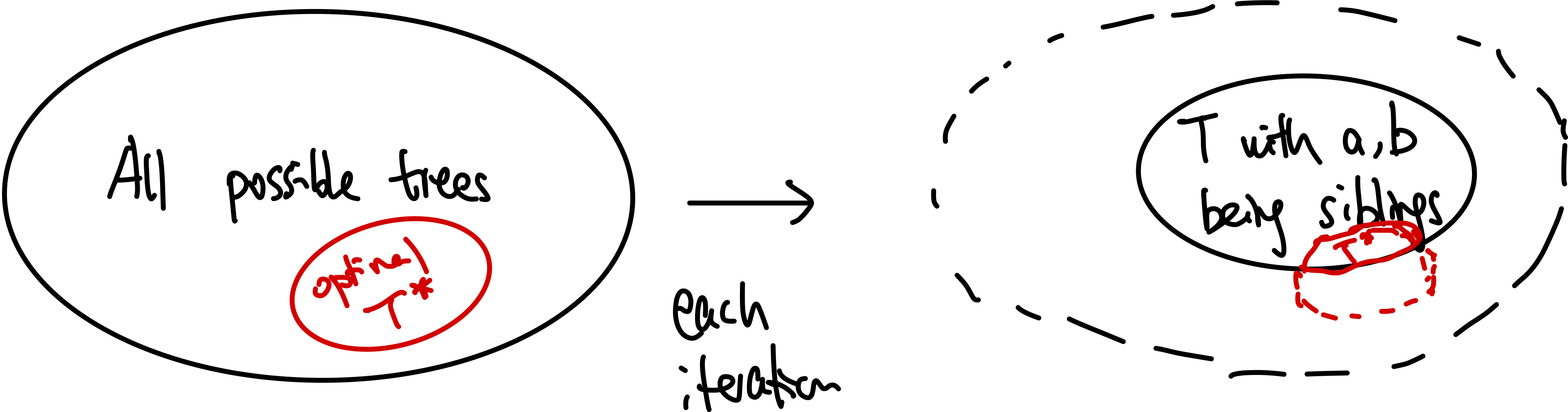

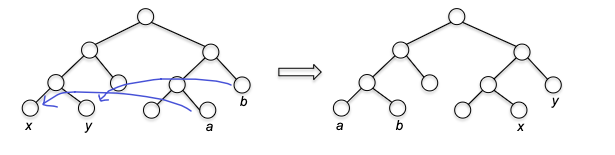

- Correctness of Huffman’s Algorithm

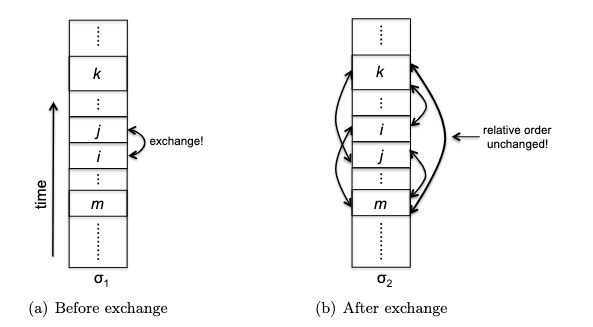

- inductive step: we need to show that $P(k)$ is true. Since each step of the algorithm we are merging two least frequent “symbols” (the root of the subtree), we want to show claim 1 and claim 2 to hold in order to prove that the output tree at this stage is optimal.

- Running Time of Huffman Algorithm

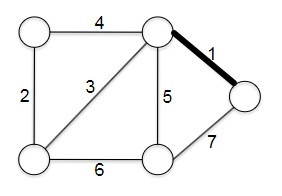

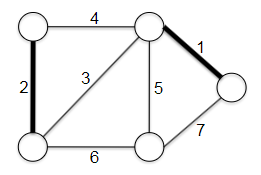

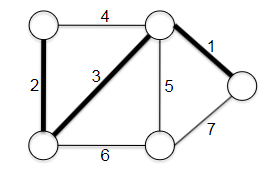

- Minimum Spanning Trees

- Introduction to Dynamic Programming

- Advanced Dynamic Programming

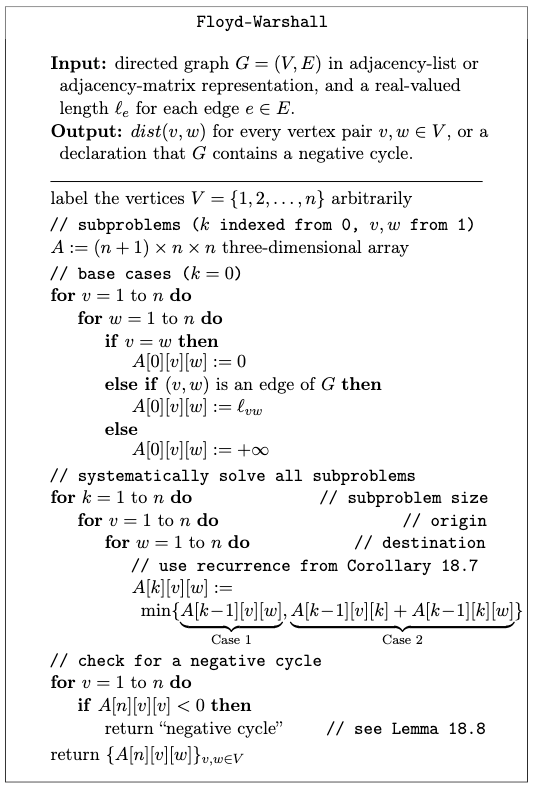

- Shortest Path Revisited

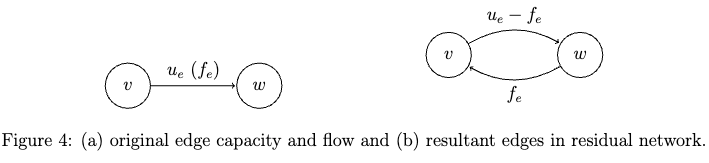

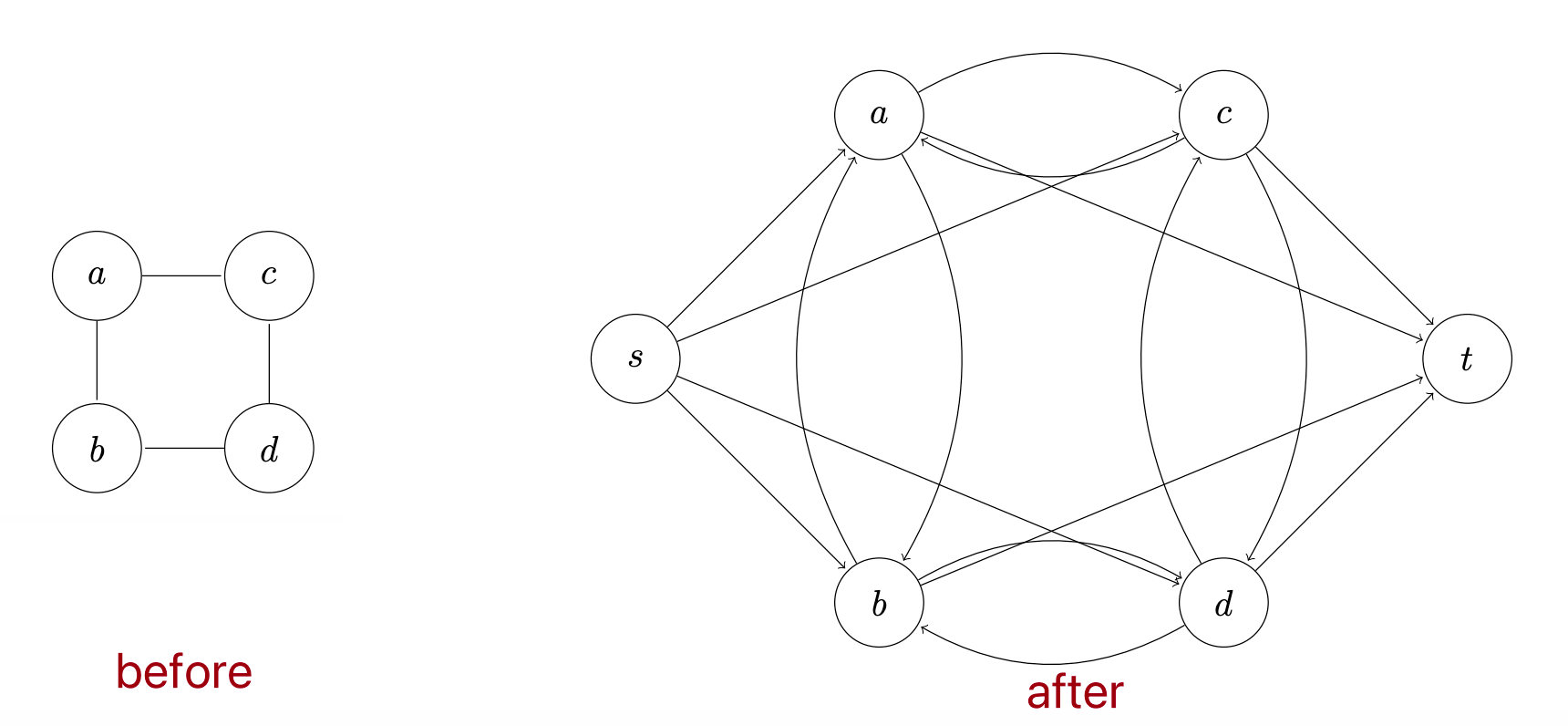

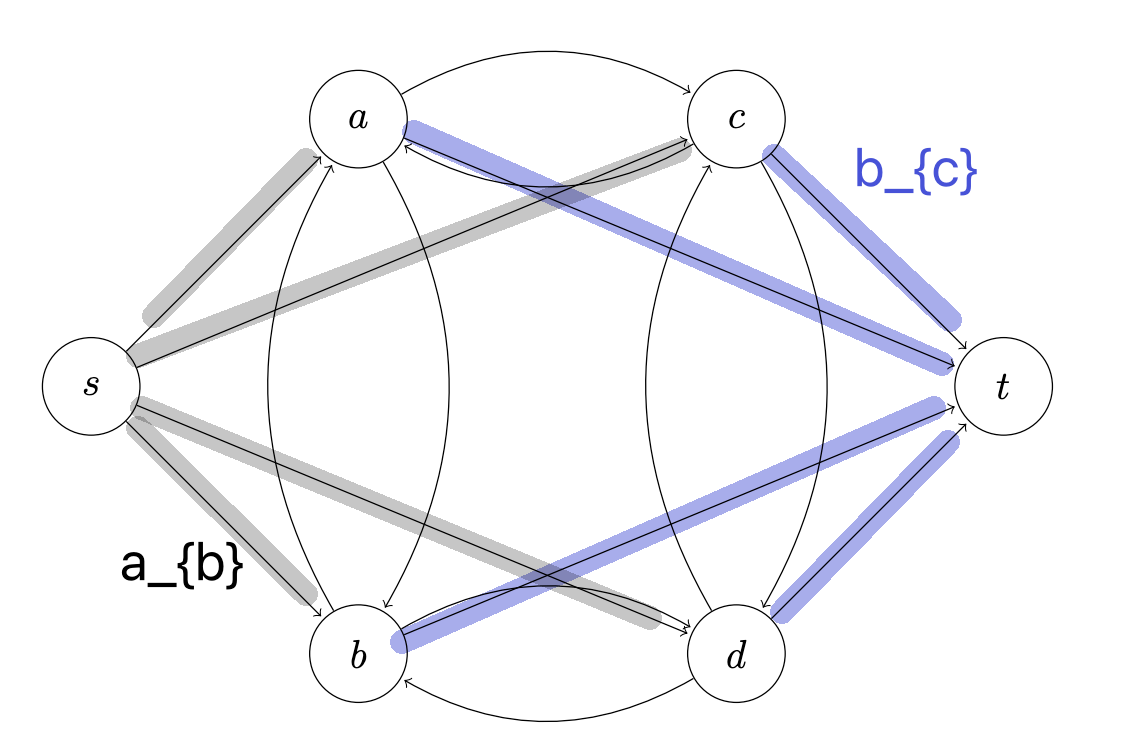

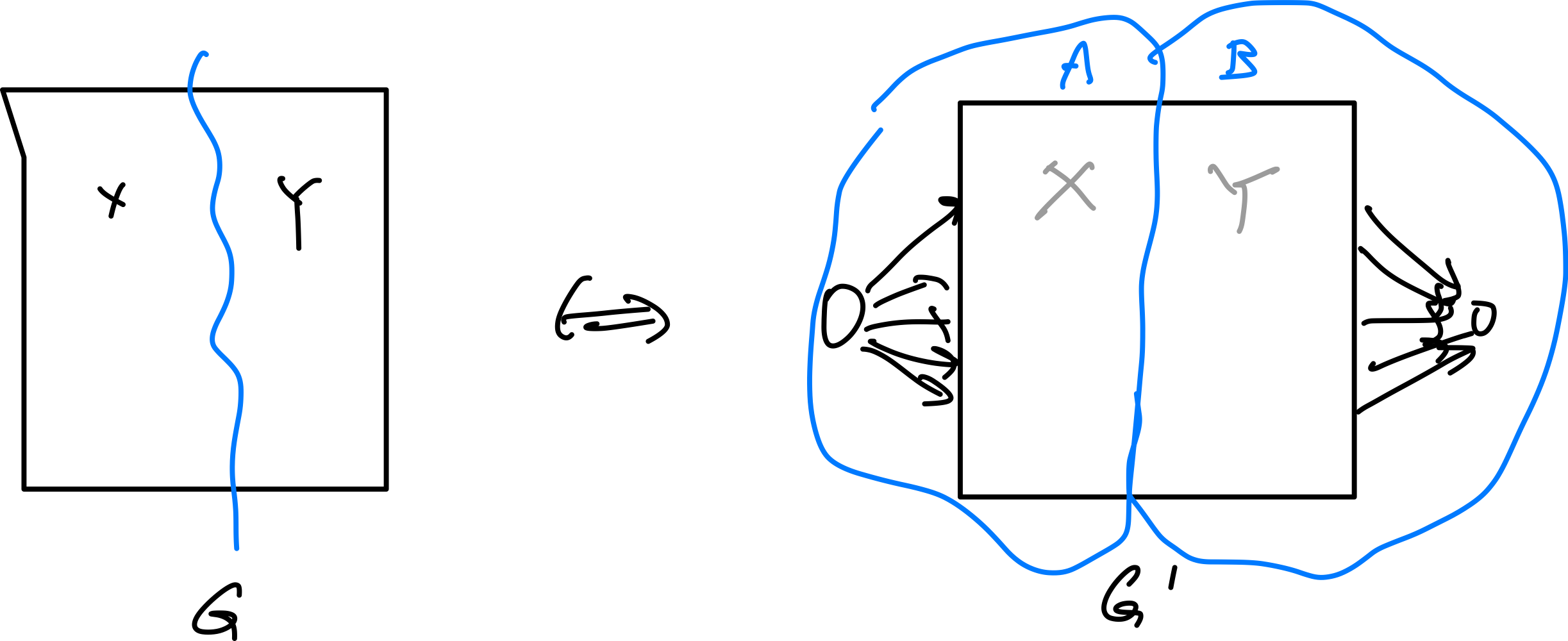

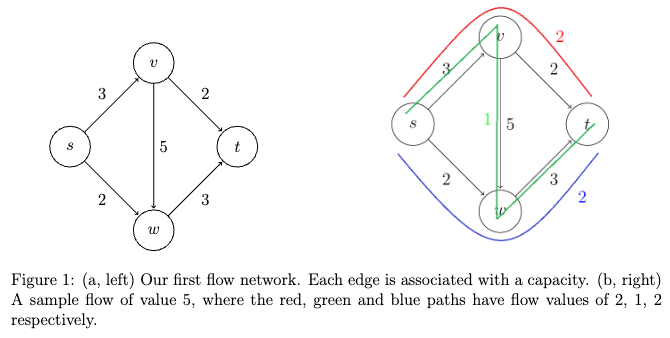

- Max Flows and Min Cuts

- Linear Programming

*Picture credits from the Algorithms Illuminated book

Analysis of Algorithms

Logistics: Mostly see Canvas, but just note that:

- 10 Psets, no late days, but 2 can be dropped. Each pset worth 10 points.

- 3 non-cumulative exams, each worth 60 points. Tentative dates: Oct 3, Oct 31, Dec 7.

- exams will have about 50% content verbatim from HW

- textbook: the split version of Algorithms Illuminated will have the same content as the Algorithms Illuminated Omnibus version, except that problem numbering might be different

- skim the readings before/after class, as they are the content you are responsible for

Example demo algorithmic questions:

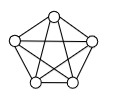

*For example*: Routing in Internet. Let nodes/vertices be hosts, and edges be the physical/wireless connections. Let the connections be bidirectional. How do you figure out the shortest path (least number of hops) between two given hosts?

- Dijkstra's algorithm: given a source host (e.g. node 0), we can find the shortest paths to all other hosts. The key insight is that a shortest path is composed of shortest paths, assuming all edge costs are non-negative. This means that if you have a shortest path from $v$ to $u$, and nodes $w,x,y,z$ are only connected to $u$, then any shortest path from $v$ to $w,x,y,z$ must go through $u$.

  The algorithm iteratively grows a “tree” of visited nodes. At each iteration, the unfinished node that has the smallest cost (e.g. node 1) is marked as done. Then we add all the neighbors of that marked node (because of the insight above) to the tree of visited nodes, and update the costs of those neighbors.

  However, the issue is that it needs to remember information about the entire internet during computation (i.e. keep an adjacency matrix and a visited flag for all nodes), which is not scalable.

-

Bellman-Ford algorithm: a differential version that converges to the shortest path.
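The Dijkstra idea described above can be sketched as follows. This is a minimal illustration, not the course's reference implementation: the names `dijkstra`, `graph` and `hosts` are made up for this example, the graph is assumed to be a small adjacency dictionary with non-negative edge costs, and the binary heap is just one common way to find the unfinished node with the smallest cost.

```python
import heapq


def dijkstra(graph, source):
    """Return a dict of shortest-path costs from source to every reachable node.

    graph: dict mapping node -> list of (neighbor, non-negative edge cost).
    """
    dist = {source: 0}
    done = set()              # nodes whose shortest-path cost is final
    frontier = [(0, source)]  # (cost so far, node), smallest cost first
    while frontier:
        cost, u = heapq.heappop(frontier)
        if u in done:
            continue          # stale heap entry, u was already finished
        done.add(u)           # u has the smallest cost among unfinished nodes
        for v, w in graph.get(u, []):
            new_cost = cost + w
            if v not in dist or new_cost < dist[v]:
                dist[v] = new_cost
                heapq.heappush(frontier, (new_cost, v))
    return dist


# Hop counts between hosts: every bidirectional link has cost 1.
hosts = {
    0: [(1, 1), (2, 1)],
    1: [(0, 1), (3, 1)],
    2: [(0, 1), (3, 1)],
    3: [(1, 1), (2, 1), (4, 1)],
    4: [(3, 1)],
}
print(dijkstra(hosts, 0))
```

Note that the function receives the whole graph up front, which is exactly the scalability concern raised above.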

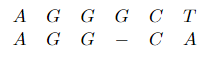

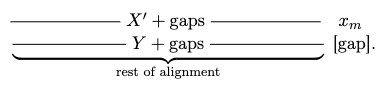

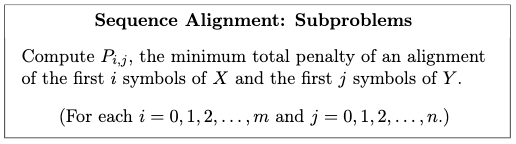

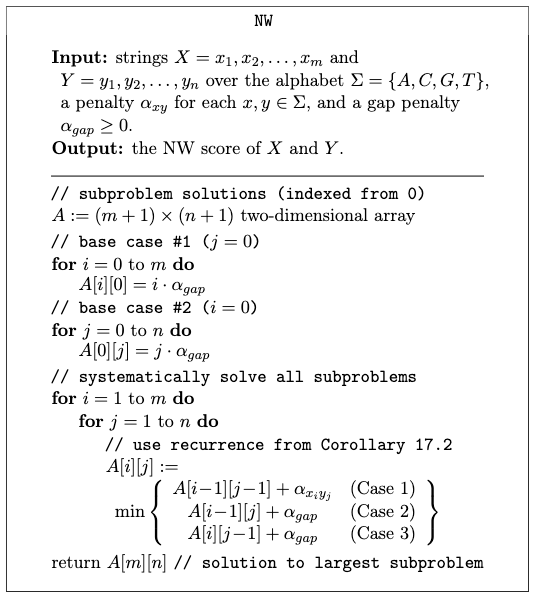

*For example*: Sequence alignment. Consider two strings composed of characters $\{ A,C,G,T \}$:

\[AGGGCT, AGGCA\]How similar (smallest edit distance, or lowest NW score) are the two strings?

- Brute force: try all possible alignments, and pick the one with the lowest score. Insanely expensive.

- Dynamic programming!

*For example:* Multiplying two numbers. Given two $n$-digit numbers (e.g. $x=1234$, $y=5678$), find their product.

-

Grade school approach: multiply each digit by each, get partial product, and sum.

Cost is $O(n^2)$, since each of the $n$ digits of one number must be multiplied by each of the $n$ digits of the other.

-

Recursive v1: We can break down multiplying numbers into multiplying parts of it and add back (divide and conquer). For instance, let $x=10^{n/2}a + b$ and $y=10^{n/2}c + d$ (e.g. $a=12, b=34$ for $x=1234$). Then realize that:

\[\begin{align*} x\cdot y &= (10^{n/2}a + b) (10^{n/2}c + d) \\ &= 10^n ac + 10^{n/2} (ad + bc) + bd \\ \end{align*}\]and then, for each of the 4 multiplication operation, we can further recurse into smaller components until we are at one digit.

Is this necessarily faster than grade school? We will analyze this in the course.

-

Recursive v2 (Karatsuba Algorithm): An improved version of the above where we only do 3 multiplications instead of 4. Notice that we can rewrite:

\[(a+b)(c+d) = ac + ad + bc + bd = ac + bd + (ad + bc)\]therefore, to equivalently compute the 4 multiplications in v1, we can do:

- compute $ac$

- compute $bd$

-

compute $(a+b)(c+d)$ and subtract $ac$ and $bd$ from it to get $ad+bc$
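The three-multiplication trick above can be sketched in Python as follows. This is a minimal illustration under a few assumptions of this example: inputs are non-negative Python integers, the split point `half` is derived from the longer decimal representation, and the function name `karatsuba` is chosen here for convenience.

```python
def karatsuba(x, y):
    """Multiply two non-negative integers using three recursive multiplications."""
    if x < 10 or y < 10:           # base case: one factor is a single digit
        return x * y
    n = max(len(str(x)), len(str(y)))
    half = n // 2
    a, b = divmod(x, 10 ** half)   # x = 10^half * a + b
    c, d = divmod(y, 10 ** half)   # y = 10^half * c + d
    ac = karatsuba(a, c)
    bd = karatsuba(b, d)
    # (a + b)(c + d) - ac - bd = ad + bc, so no separate ad and bc products
    ad_plus_bc = karatsuba(a + b, c + d) - ac - bd
    return 10 ** (2 * half) * ac + 10 ** half * ad_plus_bc + bd


print(karatsuba(1234, 5678) == 1234 * 5678)
```

Multiplying by a power of 10 is just a shift of digits, so the only expensive operations are the three recursive calls.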

Introduction

The basic idea of divide and conquer is to break your problem into smaller subproblems, solve the subproblems (often recursively), and finally combine the solutions to the subproblems into one for the original problem.

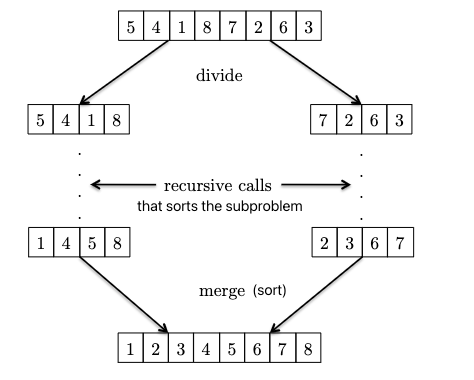

MergeSort: The Algorithm

MergeSort is a classical sorting algorithm using the divide and conquer paradigm. Recall that to sort an array, we could have done:

- SelectionSort: scan the array, find the smallest element, put it in the first position, and repeat on the rest.

- InsertionSort: keep two parts, sorted and unsorted. Take the next element of the unsorted part and insert it into its correct position in the sorted part.

- BubbleSort: repeatedly swap adjacent out-of-order elements so that the smallest ends up in the first position, then repeat on the rest.

The above three simple algorithms have a running time of $O(n^2)$, which is not great. We can do better with MergeSort. High level idea:

where:

- given two sorted subparts, we can combine them into a sorted array by merging them (use a pointer at each of the two subparts, and repeatedly move the smaller of the two pointed-to elements to the output array). You will see after the Master Method that the key to $O(n \log n)$ is that this merge only takes $O(n)$!

- from the above you see the recursive nature: the base case of just one element is already sorted! So we can use this base case AND the above merging operation to sort the entire array.

Specifically, the pseudocode is:

    # input: array A of n distinct numbers
    # output: the elements of A sorted from smallest to largest
    def merge_sort(A):
        n = len(A)
        # base case: an array of length 0 or 1 is already sorted
        if n <= 1:
            return A
        c = merge_sort(A[:n // 2])  # recursively sort the first half of A
        d = merge_sort(A[n // 2:])  # recursively sort the second half of A
        return merge(c, d)

So basically all the work is to merge the two sorted subarrays into one sorted array. The pseudocode for merge is:

    def merge(c, d):
        i = 0  # index of the first unused element of c
        j = 0  # index of the first unused element of d
        e = []
        for k in range(len(c) + len(d)):
            # take from c if d is exhausted, or if the first unused
            # element from c is smaller than the one from d
            if j == len(d) or (i < len(c) and c[i] < d[j]):
                e.append(c[i])
                i += 1
            # otherwise, add the first unused element from d to e
            else:
                e.append(d[j])
                j += 1
        return e

So what is its runtime? How fast is this compared to $O(n^{2})$?

MergeSort: The Analysis

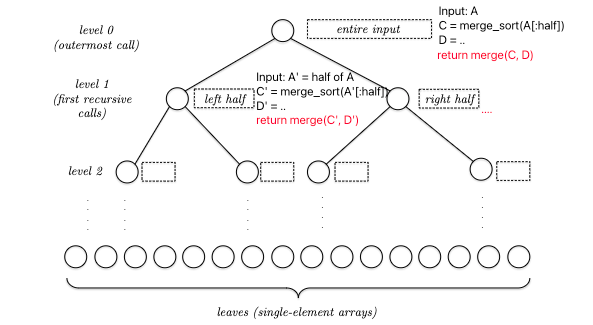

On a high level, we can imagine the runtime as “the total number of lines of code/operation we need to execute to run the implementation”. How do we approach this? In general, we should first visualize what the algorithm does.

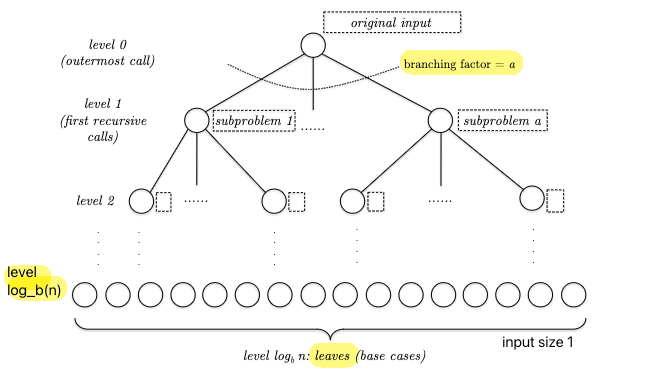

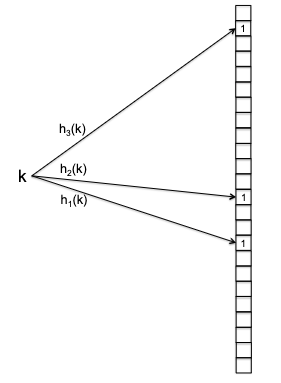

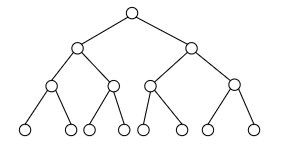

For recursive algorithms such as MergeSort, we can visualize it as a recursion tree:

So basically, at each node the real cost is the call to merge(C,D), assuming your implementation can slice arrays in half in $O(1)$ time. This means that the total runtime is the sum of the merge costs over all nodes of the recursion tree.

For a recursive algorithm, generally notice that each level will receive similar input (e.g. sizes). Therefore, we can instead consider:

\[\sum_{j \in \text{all levels}} \text{(\# of nodes at level $j$)} \times \text{(Cost of merge(C,D) at level $j$)}\]Hence we get, for an input of length $n$ (assuming it is a power of $2$):

- in total there are $\log_{2} n + 1$ levels, numbered $j = 0, 1, \dots, \log_{2} n$

- at level $j$, there are $2^{j}$ nodes (note that you can imagine growing a tree from top to bottom as an exponential operation, while collapsing it from bottom to top as a logarithmic operation)

- the input size at each node of level $j$ would therefore be $n/2^{j}$

- the cost of merge at a node with input size $m$ is about $4m+2$ using the pseudocode above ($4$ operations per loop iteration and $2$ initialization operations). For simplicity, bound it by $6m$.

The total cost at each level is therefore:

\[2^{j} \cdot 6 \left( \frac{n}{2^{j}} \right) = 6n\]and finally the total runtime cost is:

\[\sum_{j \in \text{all levels}} 6n = \left( \log_{2} n + 1 \right) \cdot 6n = 6n \log_{2} n + 6n = O(n \log n)\]Important notes:

- here we considered the worst case scenario. This would be appropriate for general purpose algorithms as we won’t know what the input is.

- What about “average-case analysis”? For example, in the sorting problem, we could assume that all input arrays are equally likely and then study the average running time of different sorting algorithms. A second alternative is to look only at the performance of an algorithm on a small collection of “benchmark instances” that are thought to be representative of “typical” or “real-world” inputs.

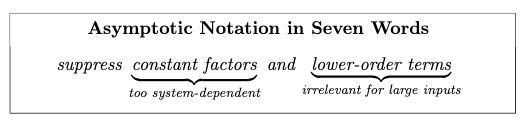

- we are sloppy for constant factors/coefficients. This is because:

- in real life these constants will be heavily implementation dependent

- will see how the Big O notation will not care about these constants

- related to above, we will focus on how runtime scales with input size $n$, especially when $n$ becomes large.

- yes, there are cases when a runtime of $0.5n^{2}$ is faster than $6n \log n$, for example when $n=2$. But a smart algorithm doesn’t really matter if $n$ is small!

- the holy grail is a linear-time algorithm (seeing each input ~once). For some problems we will not find a linear-time algorithm, and for some (e.g. binary search) we can even beat linear time by “cheating” (we are already given a sorted array)

Strassen’s Matrix Multiplication Algorithm

This section applies the divide-and-conquer algorithm design paradigm to the problem of multiplying matrices, culminating in Strassen’s amazing subcubic-time matrix multiplication algorithm.

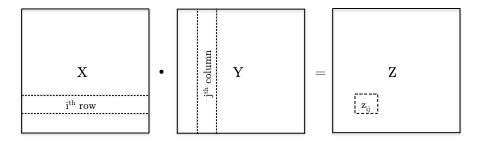

Recall that matrix multiplication considers

Therefore the very basic algorithm would be:

    def matrix_mut(X, Y):
        # X is n x n, Y is n x n
        n = len(X)
        Z = [[0] * n for _ in range(n)]  # output matrix, initialized to zeros
        for i in range(n):
            for j in range(n):
                for k in range(n):
                    Z[i][j] += X[i][k] * Y[k][j]
        return Z

which is obviously $\Theta(n^{3})$

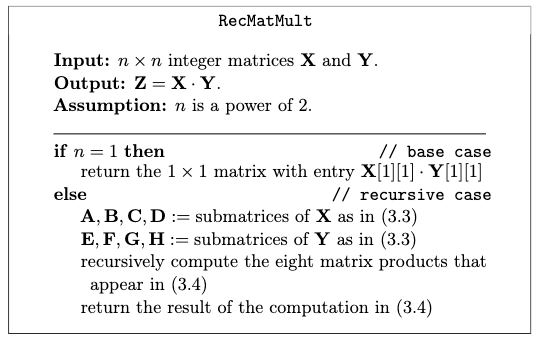

Since the input size is $O(n^{2})$, the best we can hope for would be $O(n^{2})$ runtime. So can we do better? Emboldened by the divide and conquer integer multiplication algorithm, we can try to divide the matrix into smaller submatrices and then recursively compute the product of these submatrices.

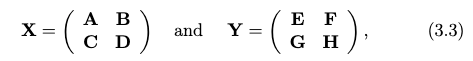

Consider breaking the matrix down to:

then we can write the product as:

where adding two matrices is just element-wise addition with cost $O(l^{2})$ for $l \times l$ matrices. Therefore, analogous to the integer multiplication algorithm, we can write the matrix multiplication algorithm

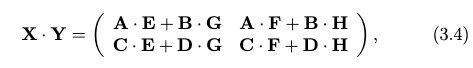

which has basically eight recursive calls. The problem is that this is still $\Theta(n^{3})$!

The key insight from the Karatsuba algorithm was that we can save one multiplication by using some tricks, and that can help us get sub-cubic time!

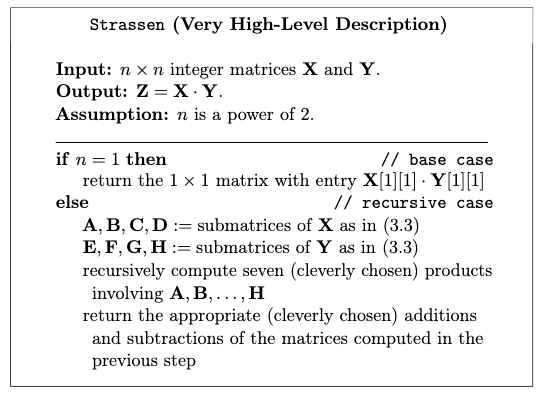

A high level description looks like:

Details of Strassen’s Algorithm

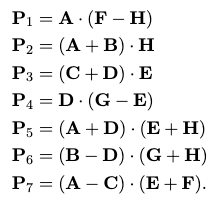

The key detail is how to save the extra multiplication. The idea is to use the following 7 auxiliary matrices:

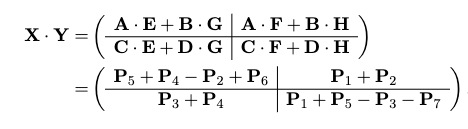

which involves $O(n^{2})$ time doing additional matrix additions, but just with these seven we can compute the matrix product as:

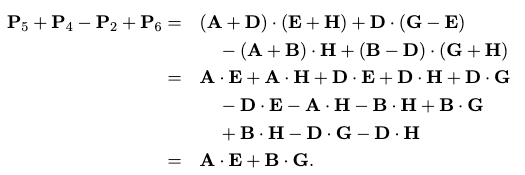

which works due to some crazy cancellation, for example in the upper left:

The rest you can check yourself, but with the work down to 7 recursive calls, we can compute the runtime (using the Master Method): $O(n^{\log_2 7}) \approx O(n^{2.807})$!
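For readers who prefer code to figures, here is a small sketch of the seven-product scheme. It follows one common textbook formulation of Strassen's algorithm, so the quadrant names `A` to `H` and the product labels `P1` to `P7` are assumptions of this example and may not match the labelling in the figures above. It also assumes square matrices stored as lists of lists whose size is a power of 2.

```python
def add(X, Y):
    return [[x + y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def sub(X, Y):
    return [[x - y for x, y in zip(rx, ry)] for rx, ry in zip(X, Y)]


def split(M):
    """Split an n x n matrix (n even) into its four n/2 x n/2 quadrants."""
    h = len(M) // 2
    return ([r[:h] for r in M[:h]], [r[h:] for r in M[:h]],
            [r[:h] for r in M[h:]], [r[h:] for r in M[h:]])


def strassen(X, Y):
    """Multiply two n x n matrices (n a power of 2) with 7 recursive calls."""
    if len(X) == 1:
        return [[X[0][0] * Y[0][0]]]
    A, B, C, D = split(X)   # X = [[A, B], [C, D]]
    E, F, G, H = split(Y)   # Y = [[E, F], [G, H]]
    P1 = strassen(A, sub(F, H))
    P2 = strassen(add(A, B), H)
    P3 = strassen(add(C, D), E)
    P4 = strassen(D, sub(G, E))
    P5 = strassen(add(A, D), add(E, H))
    P6 = strassen(sub(B, D), add(G, H))
    P7 = strassen(sub(A, C), add(E, F))
    top_left = add(sub(add(P5, P4), P2), P6)      # equals AE + BG
    top_right = add(P1, P2)                       # equals AF + BH
    bottom_left = add(P3, P4)                     # equals CE + DG
    bottom_right = sub(sub(add(P1, P5), P3), P7)  # equals CF + DH
    top = [l + r for l, r in zip(top_left, top_right)]
    bottom = [l + r for l, r in zip(bottom_left, bottom_right)]
    return top + bottom


print(strassen([[1, 2], [3, 4]], [[5, 6], [7, 8]]))
```

The extra additions and subtractions cost $O(n^2)$ per call, which is the clean up term in the recurrence.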

Asymptotic Notation

The high level idea of why to use Big O:

where saving one recursive call is a big win, because that will get saved over and over again as the recursion goes deeper.

Big-O Notation

Let $T(n)$ denote the worst-case running time of an algorithm we care about, where $n \in \{1,2,3, \dots\}$ is the size of the input. What does it mean to say something runs in $O(f(n))$ for some function $f(n)$?

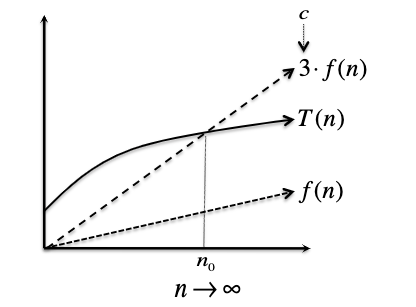

Big-O Notation: $T(n) = O(f(n))$ if and only if there exist positive constants $c$ and $n_0$ such that:

\[T(n) \leq c \cdot f(n),\quad \text{ for all } n \geq n_0\]i.e. eventually when input gets large enough, $T(n)$ is bounded by $c \cdot f(n)$. So all you need to show/prove is that you can construct a $c$ and $n_0$ such that it holds.

Pictorially, we can imagine:

For Example: degree-$k$ polynomials are $O(n^{k})$. Suppose:

\[T(n) = a_{k} n^{k} + a_{k-1} n^{k-1} + ... + a_{1} n + a_{0}\]for $k \ge 0$, where the $a_i$’s are real numbers. We can show that $T(n) = O(n^{k})$:

\[\begin{align*} T(n) &= a_{k} n^{k} + a_{k-1} n^{k-1} + ... + a_{1} n + a_{0} \\ &\le \left|a_k\right| n^{k} + \left|a_{k-1}\right| n^{k-1} + ... + \left|a_{1}\right| n + \left|a_{0}\right|\\ &\le \left|a_k\right| n^{k} + \left|a_{k-1}\right| n^{k} + ... + \left|a_{1}\right| n^k + \left|a_{0}\right| n^k\\ &= \left(\left|a_k\right| + \left|a_{k-1}\right| + ... + \left|a_{1}\right| + \left|a_{0}\right|\right) n^k\\ &\equiv c \cdot n^k \end{align*}\]holds for all $n \ge 1$, so we can take $n_{0} = 1$ and $c = \left\vert a_k\right\vert + \left\vert a_{k-1}\right\vert + \dots + \left\vert a_{1}\right\vert + \left\vert a_{0}\right\vert$.

For Example: a degree-$k$ polynomial is not $O(n^{k-1})$. Suppose for simplicity $T(n) = n^{k}$. We prove this by contradiction: if $n^{k} = O(n^{k-1})$, there would exist constants $c$ and $n_0$ such that:

\[n^{k} \le c \cdot n^{k-1}, \quad \text{ for all } n \ge n_0\]this means:

\[n \le c, \quad \text{ for all } n \ge n_0\]which is a contradiction as $n$ can be arbitrarily large.

Big-Omega and Big-Theta Notation

On a high level, if big-O is analogous to “less than or equal to ($\le$),” then big-omega and big-theta are analogous to “greater than or equal to ($\ge$),” and “equal to (=).”

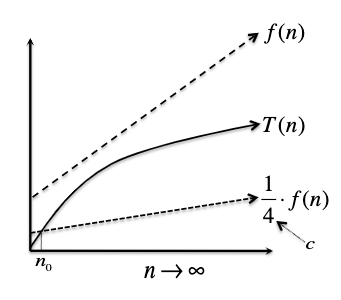

Big-Omega Notation: $T(n) = \Omega(f(n))$ if and only if there exist positive constants $c$ and $n_0$ such that:

\[T(n) \geq c \cdot f(n),\quad \text{ for all } n \geq n_0\]i.e. eventually when input gets large enough, $T(n)$ is bounded below by $c \cdot f(n)$.

Pictorially, bounding from below means:

and we would call $T(n) = \Omega(f(n))$.

Big-Theta Notation: $T(n) = \Theta(f(n))$ if and only if there exist positive constants $c_1, c_2$ and $n_0$ such that:

\[c_1 \cdot f(n) \leq T(n) \leq c_2 \cdot f(n),\quad \text{ for all } n \geq n_0\]i.e. eventually when input gets large enough, $T(n)$ is sandwiched by $c_1 \cdot f(n)$ and $c_2 \cdot f(n)$. Notice that this is equivalent to saying that both $T(n) = \Omega(f(n))$ and $T(n) = O(f(n))$.

For example, if $T(n) = \frac{1}{2} n^{2} + 3n$, then $T(n) = \Theta(n^{2})$.
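To make the sandwich concrete, here is one possible choice of constants for this example (a worked check; $c_1 = \frac{1}{2}$, $c_2 = \frac{7}{2}$ and $n_0 = 1$ are picked for convenience, and other choices work too). For all $n \ge 1$ we have $3n \le 3n^{2}$, so:

\[\frac{1}{2} n^{2} \le \frac{1}{2} n^{2} + 3n \le \frac{1}{2} n^{2} + 3n^{2} = \frac{7}{2} n^{2}\]which gives both $T(n) = \Omega(n^{2})$ and $T(n) = O(n^{2})$.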

Little-O Notation: $T(n) = o(f(n))$ if and only if for every positive constant $c>0$ there exists a constant $n_0$ such that:

\[T(n) \le c \cdot f(n),\quad \text{ for all } n \geq n_0\]i.e. eventually when input gets large enough, $T(n)$ is bounded above by $c \cdot f(n)$ for any $c$ you pick (note that you can pick $n_0$ based on $c$). Also note that this is much stronger than the Big O notation.

Intuitively, this is like saying we want:

\[\lim\limits_{n \to \infty} \frac{T(n)}{f(n)} = 0\]i.e. that $f(n)$ grows strictly faster than $T(n)$ (the bound in the definition must hold even for an arbitrarily small $c$). Also note that the intuitive way of converting between Big O and Little O is NOT just switching $\le$ to $<$, as that would actually not change anything (see HW1 Problem 2.7).

For example, $n^{k-1} = o(n^{k})$ is true for any $k \ge 1$.

The Master Method

This “master method” applies to analyzing most of the divide-and-conquer algorithms you’ll ever see, as their runtime typically follows a pattern of the form:

\[T(n) = \underbrace{a \cdot T\left(\frac{n}{b}\right)}_{\text{work done by recursive calls}} + \underbrace{O(n^{d})}_{\text{clean up}}\]where $a$ is the number of recursive calls you make, $b$ is the factor by which you shrink the input size, and $O(n^{d})$ is the time it takes to combine/clean up the results of the recursive calls (e.g. the cost for merge in merge_sort).

For Example, recall the multiplication problem. Grade-school algorithm would give us $O(n^{2})$. However,

-

the recursive algorithm v1 (calculate $10^{n}ac + 10^{n / 2}(ad + bc) + bd$) would then give us:

\[T(n) \le 4T(n / 2) + O(n)\]where $4$ comes from doing 4 more multiplications to calculate $ac, ad, bc, bd$, and $n / 2$ because we are chopping each original integer in half: $x = 10^{n / 2}a + b$ and $y = 10^{n / 2}c + d$.

-

the recursive algorithm v2 (Karatsuba Algorithm) saves the separate computation of $ad$ and $bc$ by computing only $(a+b)(c+d)$ and then subtracting $ac + bd$, which are already computed. Given that addition is done in linear time:

\[T(n) \le 3T(n / 2) + O(n)\]where now you only have to do 3 more multiplications to calculate $ac, bd, (a+b)(c+d)$, with extra work in addition but that is already in $O(n)$ term.

and that for all the above cases, the base case is $T(1) = O(1)$.

Formal Statement

We’ll discuss a version of the master method that handles what we’ll call “standard recurrences”, which have three free parameters and the following form:

Standard Recurrence Format. Let $T(n) = \mathrm{constant}$ for some small enough $n$ (i.e. base case). Then for large values of $n$, we have the runtime being:

\[T(n) \le a \cdot T\left(\frac{n}{b}\right) + O(n^{d})\]where:

- $a$ is the number of recursive calls you make

- $b$ is by how much you shrink the input size

- $O(n^{d})$ is the time it takes to combine/clean up the results of the recursive calls

Then, we have the master method:

Master Method. If $T(n)$ is defined by a standard recurrence of the form above with $a \ge 1, b > 1$ and $d \ge 0$, then:

\[T(n) = \begin{cases} O(n^{d} \log n) & \text{if } a = b^{d} \\ O(n^{d}) & \text{if } a < b^{d} \\ O(n^{\log_{b} a}) & \text{if } a > b^{d} \end{cases}\]note that

- intuitively, the second one indicates that your algorithm is “clean up step heavy”, and the third one indicates that your algorithm is “recursive call heavy”

- the last case specifies the base $b$ of the logarithm, whereas the first case does not. This is because any two logarithm bases differ by a constant multiple. This is harmless in the first case, because the logarithm only appears as a multiplicative factor and the constant is absorbed by the big-O, but not in the last case, because there the logarithm appears in the exponent of $n$, where a constant multiple changes the growth rate.

First for a sanity check, the merge sort algorithm takes the form of:

\[T(n) = 2T\left(\frac{n}{2}\right) + O(n)\]hence we have $a = 2, b = 2, d = 1$, and so $a = b^{d}$, which means that the first case applies and we have $T(n) = O(n^{d} \log n) = O(n \log n)$.

Next we answer the questions about the runtime of the Karatsuba algorithm and the recursive algorithm v1:

- multiplication algo v1 has $a=4, b=2, d=1$ so $a > b^{d}$, hence we have $T(n) = O(n^{\log_{b} a}) = O(n^{\log_{2} 4}) = O(n^{2})$, which is no better than the grade-school algorithm.

- Karatsuba algo has $a=3, b=2, d=1$ so $a > b^{d}$, but now we have $T(n) = O(n^{\log_{b} a}) = O(n^{\log_{2} 3}) \approx O(n^{1.59})$, which is better than the grade-school algorithm.

- Matrix Multiplication v1 (splitting into 8 smaller matrix multiplications) is still $O(n^{3})$. To see this, there are two ways: 1) measure the input by its number of entries $u = n^{2}$: then $T(u)=8 T(u / 4) + O(u)$, so $a=8, b=4, d=1$ and the runtime is $O(u^{\log_{4} 8}) = O(u^{3/2}) = O(n^{3})$; 2) measure the input by the dimension $n$: then $T(n) = 8T(n / 2) + O(n^{2})$, so $a=8, b=2, d=2$, which gives $O(n^{\log_{2} 8}) = O(n^{3})$.
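The case analysis above can be mechanized in a few lines. The sketch below is only illustrative: the function name `master_method` and its string output format are assumptions of this example, and it takes the three parameters $a, b, d$ of a standard recurrence as plain numbers.

```python
import math


def master_method(a, b, d):
    """Return the bound given by the master method for T(n) <= a T(n/b) + O(n^d)."""
    if a < 1 or b <= 1 or d < 0:
        raise ValueError("need a >= 1, b > 1 and d >= 0")
    if math.isclose(a, b ** d):       # work is the same at every level
        return f"O(n^{d} log n)"
    if a < b ** d:                    # clean up step heavy: the root dominates
        return f"O(n^{d})"
    return f"O(n^{math.log(a, b):.3f})"  # recursive call heavy: the leaves dominate


print(master_method(2, 2, 1))  # MergeSort
print(master_method(4, 2, 1))  # integer multiplication, recursive v1
print(master_method(3, 2, 1))  # Karatsuba
print(master_method(8, 2, 2))  # matrix multiplication with 8 recursive calls
print(master_method(7, 2, 2))  # Strassen
```

The exponent in the last case is printed to three decimals, so Karatsuba shows up as roughly $n^{1.585}$ and Strassen as roughly $n^{2.807}$.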

Proof of Master Method

Next we prove the master method.

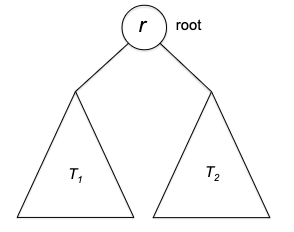

Proof: Suppose that $T(1) = \text{constant}$ and we have the standard recurrence for $n > 1$:

\[T(n) \le a \cdot T\left(\frac{n}{b}\right) + c \cdot n^d\]where we replaced $O(n^{d})$ with $c \cdot n^{d}$ for some constant $c$.

We can try to write a few terms out and see if we can find a pattern, but alternatively we can also draw a recursion tree and see if we can directly find a closed-form equation:

Our goal is to find the runtime of this entire tree. So we consider:

\[T(n) = \sum_{j \in \mathrm{level}} \sum \mathrm{cost}(\text{each node})\]from which we know that:

- there are $a^{j}$ subproblems/nodes at depth $j$

- each node at depth $j$ needs to solve problem with input size $n / b^{j}$

again, assuming splitting the input to $b$ subproblems takes constant time, we have the cost at each node being:

\[\mathrm{cost}(\text{each node}) = c \cdot \left( \frac{n}{b^j} \right)^d\]since at each node the only work is the clean up step, applied to an input of size $n / b^{j}$. Then since there are $a^{j}$ nodes at level $j$, and the levels run from $j = 0$ to $j = \log_b n$, we have:

\[\begin{align*} T(n) &= \sum_{j=0}^{\log_b n} a^j \cdot c \cdot \left( \frac{n}{b^j} \right)^d \\ &= c \cdot n^d \cdot \sum_{j=0}^{\log_b n} \underbrace{\left( \frac{a}{b^d} \right)^j}_{\text{this ratio!}} \\ \end{align*}\]where the ratio $a / b^{d}$ is the one that decides the case in the master method. This ratio also has a very important interpretation:

- $a$ is the rate of growth/proliferation

- $b^{d}$ is the rate of shrinkage of work. Each subproblem shrinks by a factor of $b$, and since the work per subproblem is $O(n^{d})$, the work per subproblem shrinks by a factor of $b^{d}$.

Then we have the following cases:

-

if $a = b^{d}$, then:

\[T(n) = c \cdot n^d \cdot \sum_{j=0}^{\log_b n} \left( \frac{a}{b^d} \right)^j = c \cdot n^d \cdot \sum_{j=0}^{\log_b n} 1 = c \cdot n^d \cdot (\log_b n + 1) = O(n^d \log n)\]note that we dropped the base of the logarithm in the last step, since changing the base only changes a constant factor.

-

if $a / b^{d} = r \neq 1$, then we have a finite geometric series where:

\[\sum\limits_{j=0}^{k} r^{j} = 1 + r + r^{2} + \cdots + r^{k} = \frac{r^{k+1} - 1}{r - 1}\]which means that:

-

if $r < 1$, then the first term $O(1)$ dominates. Hence we get

\[T(n) = c \cdot n^d \cdot O(1) = O(n^d)\]which is basically the work done by the root node!

-

if $r > 1$, then the last term $O(r^{k})$ dominates, where $k = \log_{b} n$ is the depth of the recursion tree. Hence we get

\[T(n) = c \cdot n^d \cdot O\left[ \left( \frac{a}{b^{d}} \right)^{\log_{b} n} \right]\]note that since $b^{-d \log_{b} n} = (b^{\log_b n})^{-d} = n^{-d}$, we get:

\[T(n) = O(a^{\log_{b} n}) = O(n^{\log_{b} a})\]where $a^{\log_{b} n}$ is basically the number of leaves! However we still use the $n^{\log_{b} a}$ because it’s easier to apply.


Linear-Time Selection

This section discusses another divide-and-conquer algorithm, but one whose recurrence does not fit the standard form of the master method (probably the only such case in this course).

Selection Problem. Given an array $A$ of $n$ distinct numbers and an index $i$, find the $i$th smallest element of $A$.

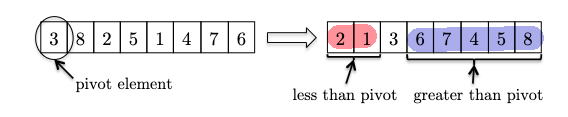

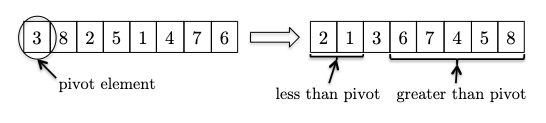

The naive solution would be a sort + indexing, which takes $O(n \log n)$ time. However, we can do better by using the divide-and-conquer strategy. The intuition is that we can select a pivot and partition the array in such a way that we will only need to recurse on one of the two partitions = reduced input size!

One very important observation is that after partitioning, the pivot ends up in its “rightful” position, which tells us two things:

- the pivot element is in the same position it would occupy if the array were sorted, such that:

- if we are looking for, say, the $5$-th smallest element, the entire left partition (red) is irrelevant

Thus a high level sketch of the algorithm is as follows:

    def select(A, n, i):  # n is the length of A, i means the i-th smallest (1-indexed)
        if n == 1:
            return A[0]
        else:
            # choose a pivot (pivot() is left unspecified in this sketch)
            p = pivot(A, n)
            # partition A around p in O(n): smaller elements on the left, larger on the right
            # j is p's position after partitioning, 1-indexed
            j = partition(A, p)
            if j == i:
                return p
            elif j > i:
                # the answer is among the j - 1 elements to the left of p
                return select(A[:j - 1], j - 1, i)
            else:
                # note the new length of RHS search is n - j
                # since the j elements up to and including p are smaller, the new i is i - j
                return select(A[j:], n - j, i - j)

But what’s the running time? We have not yet discussed how to find a “good” pivot, but we know that:

- worst pivot: we always pick the minimum/maximum element, so that each recursive call can only throw away one element. This is bad, as it results in $\Theta(n^2)$.

- best pivot: if we managed to pick the median (the $i= (n-1) / 2$-th smallest element, which we don’t know), then we can throw away half of the array each time. This is good, as it will result in $O(n)$ (try with the master method), but we don’t know how to find the median.

(we will discuss how to find a “good enough” pivot, such that we can still get $O(n)$)

Median of Medians

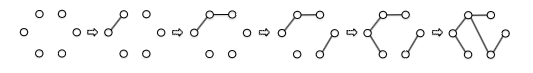

The trick to linear-time selection is to use the “median-of-medians” as a proxy for the true median. First we show the full select algorithm, and then we will discuss the intuition and runtime behind it.

    def select(A, n, i):  # n is the length of A, i means the i-th smallest (1-indexed)
        if n == 1:
            return A[0]
        else:
            ### choose the pivot
            C = []
            for h in range(0, n, 5):
                # sort each 5-element subarray (the last one may be shorter)
                B = sorted(A[h:h+5])
                # find/keep the median of each 5-element subarray
                C.append(B[len(B) // 2])  ### first round winners
            p = select(C, len(C), (len(C) + 1) // 2)  ### median of medians, recursively select 1
            # partition A around p in O(n): smaller elements on the left, larger on the right
            # j is p's position in the sorted array, 1-indexed
            j = partition(A, p)
            if j == i:
                return p
            elif j > i:  ### recursively select 2
                return select(A[:j - 1], j - 1, i)
            else:
                # note the new length of RHS search is n - j
                # since the j elements up to and including p are smaller, the new i is i - j
                return select(A[j:], n - j, i - j)

This raises several questions:

- Since there is a sort, how is this not $O(n \log n)$ runtime? This is because in this case we are sorting a constant number of elements, hence each sort costs $O(5 \log 5) = O(1)$. Since there are $n / 5$ groups of 5 elements, the total cost of this step is $O(n)$!

- why 5 elements in particular? A short answer is that this is the smallest odd number that gives this algorithm a linear runtime. As an exercise, try using $3$ and compute the runtime.

- how much smaller is the reduced input size after this median-of-medians pivot? The answer is that it guarantees throwing at least 30% of the array away.
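To see all the pieces working together, here is a self-contained sketch of the median-of-medians selection. It deviates from the pseudocode above in ways that are assumptions of this example: the function is called `dselect`, it takes only the list and `i`, it falls back to sorting once at most 5 elements remain, and it partitions by building two new lists instead of rearranging `A` in place (still $O(n)$ time, but not in place).

```python
def dselect(A, i):
    """Return the i-th smallest (1-indexed) element of a list of distinct numbers."""
    if len(A) <= 5:
        return sorted(A)[i - 1]                    # constant-size base case
    # first round winners: the median of every group of (at most) 5 elements
    medians = []
    for h in range(0, len(A), 5):
        group = sorted(A[h:h + 5])
        medians.append(group[len(group) // 2])
    p = dselect(medians, (len(medians) + 1) // 2)  # median of medians
    smaller = [x for x in A if x < p]              # O(n) partition around p
    larger = [x for x in A if x > p]
    j = len(smaller) + 1                           # p's 1-indexed sorted position
    if i == j:
        return p
    if i < j:
        return dselect(smaller, i)
    return dselect(larger, i - j)                  # skip the j elements up to p


numbers = [(37 * k) % 101 for k in range(101)]     # a scrambled copy of 0..100
print(dselect(numbers, 51))                        # the median of 0..100
```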

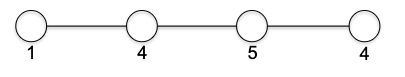

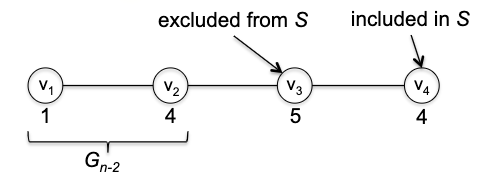

Lemma. The median-of-medians pivot $p$ is at least the $30$th percentile of the array $A$. To see this, let $k = n / 5$ be the number of groups you have split $A$ into, and let $x_i$ be the $i$th smallest element amongst the $k$ middle elements. Then we can arrange the full array $A$ in the following way:

where basically numbers are guaranteed to be bigger going from bottom to top, though not necessarily left to right. However, this still indicates that:

- the red highlighted bottom left corner is smaller than $x_{k / 2}$

- the red highlighted top right corner is larger than $x_{k / 2}$

Therefore, the median of medians $x_{k / 2}$ is bigger than the elements in 3/5 of the rows of about 50% of the columns, i.e. at least $\frac{3}{5} \cdot \frac{1}{2} = 30\%$ of all elements, hence it is at least the 30th percentile.

Runtime of Select Algorithm

Now given that the median-of-medians pivot is guaranteed to throw away at least 30% of the array, we can write the recurrence relation as follows:

\[T(n) \le \underbrace{T\left( \frac{n}{5} \right)}_{\text{recursive call by pivot()}} + \underbrace{T \left( \frac{7}{10}n \right)}_{\text{after pivot, search the other 70\%}} + \underbrace{O(n)}_{\text{sort the 5-element subarrays + partition}}\]note that we cannot use the master method here, as now we have two recursive calls on inputs of different sizes. In this case, we will need to “guess the solution and check” that this is $O(n)$ time.

Proof: We want to show that $T(n) \le c \cdot n$ for all $n \ge n_{0} = 1$ in this case. (Hindsight) let $c = 10a$, where $a \ge 1$ is the constant in the $O(n)$ term (sorting the 5-element subarrays plus partitioning), so that:

\[T(n) \le T\left( \frac{n}{5} \right) + T \left( \frac{7}{10}n \right) + an\]is already given. We can prove $T(n) \le c \cdot n$ by induction on $n$:

- base case: when $n=1$ this trivially holds as $T(1) = 1 \le c = 10a$ since $a \ge 1$.

- induction hypothesis: assume $T(k) \le c \cdot k$ for all $k < n$ (e.g. holds for $k= n-1$)

-

induction step: we show that it holds for $n$:

\[\begin{align*} T(n) &\le T\left( \frac{n}{5} \right) + T \left( \frac{7}{10}n \right) + an\\ &\le c \cdot \frac{n}{5} + c \cdot \frac{7}{10}n + an\\ &= \frac{9}{10}cn + an\\ &= n \cdot \left( \frac{9}{10}c + a \right)\\ &= n \cdot 10 a = c \cdot n \end{align*}\]where the second inequality comes from the induction hypothesis that $T(k)\le c \cdot k$ holds for all $k < n$, and the last line uses $c = 10a$.
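As a numerical sanity check of the guess-and-check argument, the sketch below evaluates the recurrence directly and compares it with $c \cdot n = 10n$. It is only an illustration under assumptions made for this example: $a = 1$, $T(1) = 1$, $T(0) = 0$, and the fractions $n/5$ and $7n/10$ are rounded down so that the arguments stay integers.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def T(n):
    """T(n) = T(n/5) + T(7n/10) + a*n with a = 1, rounding the fractions down."""
    if n <= 0:
        return 0
    if n == 1:
        return 1
    return T(n // 5) + T(7 * n // 10) + n


# the largest ratio T(n) / n over a range of input sizes
worst_ratio = max(T(n) / n for n in range(1, 20001))
print(worst_ratio)  # never exceeds c = 10 in this range
```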

QuickSort

This is perhaps the most famous sorting algorithm, and it is also based on the idea of divide-and-conquer. Although it also runs in $O(n \log n)$ time (given good pivots), in practice there are differences compared to merge_sort:

- The big win for QuickSort over MergeSort is that it CAN run in place. For this reason it needs to allocate only a minuscule amount of additional memory for intermediate computations. In contrast, merge_sort needs to allocate a whole new array for each merge step.

- On the aesthetic side, QuickSort is just a remarkably beautiful algorithm.

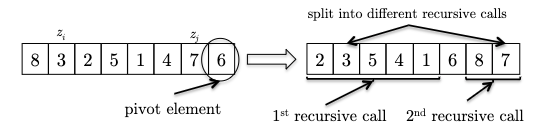

The implementation follows directly from the DSelect and partition algorithm:

how does this help sort the array?

- the pivot element already winds up in its rightful position,

- partitioning has reduced the size of this problem: sorting the elements less than the pivot (which conveniently occupy their own subarray) and the elements greater than the pivot (also in their own subarray).

After recursively sorting the elements in each of these two subarrays, the algorithm is done.

Therefore the algorithm is simply:

    def quick_sort(A, n):
        if n <= 1:
            return A
        else:
            p = pivot(A, n)
            A_1, A_2 = partition(A, p)  # elements smaller than p, elements larger than p
            A_1_sorted = quick_sort(A_1, len(A_1))
            A_2_sorted = quick_sort(A_2, len(A_2))
            # if you can sort in place, you don't need this
            return A_1_sorted + [p] + A_2_sorted

Even though the quick runtime analysis above gives the same result as merge_sort, the quick_sort algorithm feels a bit different. This is because the order of operations is different. In merge_sort, the recursive calls are performed first, followed by the combine step, merge. In quick_sort, the recursive calls occur after partitioning, and their results don’t need to be merged at all!

But first of all, is this even correct? Here we prove it formally using induction, which is very suitable for recursive algorithms.

Proof of Correctness: We will prove by induction. Let $P(n)$ denote the statement “for any array $A$ of length $n$, quick_sort(A, n) returns a correctly sorted array”.

- Base case: $P(0)$ and $P(1)$ are trivially true, as such an array is already sorted.

- Inductive hypothesis: assume $P(k)$ is true for all $k < n$, for any $n > 1$.

- Induction step. Now we need to imagine an input of length $n$. First, we note that after partitioning the pivot element $p$ is already in the right sorted position. Then, we note that since A_1 and A_2 are subarrays with size at most $n-1$, A_1_sorted and A_2_sorted are correctly sorted by the induction hypothesis. Therefore, the concatenation of A_1_sorted, p, and A_2_sorted is also correctly sorted.

In-Place Partition

To sort the array in place using quick_sort, we just need a routine that can partition the array in place. This is a bit tricky, but it is possible to do this in a single scan over the array, while doing it in-place.

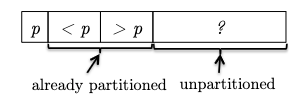

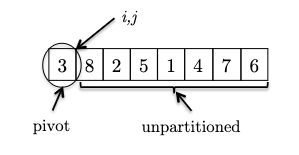

Key Idea: while scanning through the array, we maintain the following invariant:

where putting the pivot element in the first position is just an $O(1)$ swap during preprocessing. If we can check each new element in the unpartitioned part and swap it to the correct partition, then we can maintain this invariant = correctness!

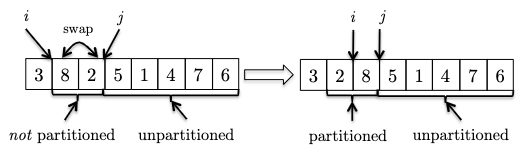

It is easiest to first go through an example. Consider an input:

where we consider the invariant formally to be:

Invariant: all elements between the pivot and $i$ are less than the pivot (the $< p$ partition), and all elements between $i$ and $j$ are greater than the pivot (the $> p$ partition). This means that:

- $i$ represents the boundary between the $< p$ and $> p$ partitions, and

- $j$ represents the boundary between the elements seen so far and the yet unseen elements.

- We initialize $i,j$ to be right next to the pivot (first) element. As there is no element between $i$ and $j$ or between the pivot and $i$, the invariant is trivially satisfied.

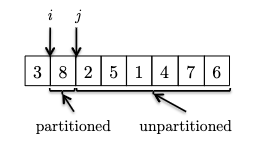

- At each iteration, we look at a new element and consider what to do to maintain the invariant:

in this case $8$ is larger than the pivot and the invariant is maintained.

- If the new element is smaller than the pivot, then to maintain the invariant we swap it with the leftmost element of the $> p$ partition (the element at the boundary $i$) and then increment $i$:

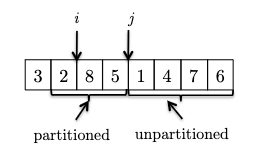

- We continue incrementing $j$ to look at the next element. If it is bigger than the pivot, we can just continue, as the invariant is maintained.

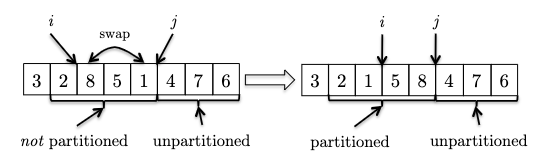

- One last example: now we see another element smaller than the pivot, so we need to swap and increment $i$:

Hence the pseudocode is:

    def partition_inplace(A):
        p = A[0]  # assuming pivot is the first element
        i = 1  # boundary between the < p and > p partitions
        for j in range(1, len(A)):  # j is the boundary of the unseen elements
            if A[j] < p:
                A[i], A[j] = A[j], A[i]
                i += 1
        A[0], A[i-1] = A[i-1], A[0]  # swap pivot to the correct position
        return A
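To make the scan concrete, here is a minimal runnable sketch of the same routine. The `lo`/`hi` parameters and the returned pivot index are additions for illustration (they are not in the pseudocode above) so that the routine can later be applied to a subarray; the input array is a hypothetical example.

```python
def partition_inplace(A, lo=0, hi=None):
    """Partition A[lo:hi] in place around the pivot A[lo].

    Returns the final index of the pivot. The lo/hi bounds are an
    illustrative extension of the pseudocode, not part of the notes.
    """
    if hi is None:
        hi = len(A)
    p = A[lo]
    i = lo + 1  # A[lo+1:i] holds the elements known to be < p
    for j in range(lo + 1, hi):  # A[i:j] holds the elements known to be >= p
        if A[j] < p:
            A[i], A[j] = A[j], A[i]
            i += 1
    A[lo], A[i - 1] = A[i - 1], A[lo]  # move the pivot between the two parts
    return i - 1


data = [3, 8, 2, 5, 1, 4, 7, 6]  # hypothetical input, pivot is 3
pivot_index = partition_inplace(data)
print(pivot_index, data)
```

Only one pass over the array is made and only a constant number of extra variables is used, which is what "in place" means here.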

Randomized QuickSort

Now, what about runtime? Again, the key step is how we choose the pivot element. Similar to DSelect, this will have a huge impact:

- if we choose min/max of the array, we get $\Omega(n^{2})$ runtime (technically also $O(n^{2})$, but just to emphasize the slowness).

- if we choose the median of the array, we get $T(n) = 2 T( n / 2) + O(n) = O(n \log n)$, where the $O(n)$ cost includes calling `DSelect` to find the median.

While the median is the best choice, it is also the most expensive to compute. Therefore, we will use a randomized version of quick_sort that chooses the pivot element uniformly at random. This is a very simple modification to the algorithm, and we will show that its expected running time is $O(n \log n)$ on every input!
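As a rough sketch of this modification (not necessarily the exact version used in class), the only change is to swap a uniformly random element into the first position before partitioning. The `lo`/`hi` bounds and the `rng` parameter are assumptions added here so the sort can run in place and be seeded for testing.

```python
import random


def quick_sort(A, lo=0, hi=None, rng=random):
    """Sort A[lo:hi] in place using a uniformly random pivot."""
    if hi is None:
        hi = len(A)
    if hi - lo <= 1:  # zero or one element: already sorted
        return A
    k = rng.randrange(lo, hi)  # random pivot index in [lo, hi)
    A[lo], A[k] = A[k], A[lo]  # preprocessing: move the pivot to the front
    p = A[lo]
    i = lo + 1
    for j in range(lo + 1, hi):  # same single-scan partition as before
        if A[j] < p:
            A[i], A[j] = A[j], A[i]
            i += 1
    A[lo], A[i - 1] = A[i - 1], A[lo]
    quick_sort(A, lo, i - 1, rng)  # elements smaller than the pivot
    quick_sort(A, i, hi, rng)      # elements at least as large as the pivot
    return A


sorted_example = quick_sort([3, 8, 2, 5, 1, 4, 7, 6])
print(sorted_example)
```

The output is always sorted regardless of the random choices; only the running time depends on them.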

Why on earth would you want to inject randomness into your algorithm? Aren’t algorithms just about the most deterministic thing you can think of? As it turns out, there are hundreds of computational problems for which randomized algorithms are faster, more effective, or easier to code than their deterministic counterparts.

To prove the average case runtime, let’s define some notations:

- sample space $\Omega$: the set of all possible outcomes of some random experiment

- random variable $X$: a (numerical) measurement of the outcome of a random process, so $X: \Omega \to \mathbb{R}$

- $P(\omega)$ is the probability of getting a particular outcome $\omega \in \Omega$.

In the case of quick_sort, we have:

- $\Omega$ is the set of all possible outcomes of the random (sequence of) pivot choices. (Note that it is not defined on the length of the array.)

- $X=RT$, the random variable we care about, is the runtime of randomized `quick_sort` given a sequence of pivot choices. I.e. given $\omega \in \Omega$, we get a deterministic runtime $RT(\omega)$.

So our goal is to find:

\[\mathbb{E}[RT] = \sum_{\omega \in \Omega} P(\omega) RT(\omega)\]

But this $RT(\omega)$ is hard to compute. Taking a look at the algorithm, we notice that we can bound this random variable by a simpler one:

Lemma: For every input array $A$ of length $n \ge 2$ and every pivot sequence $\omega$:

\[RT(\omega) \le a \cdot C(\omega)\]

for some constant $a > 0$, where $C$ denotes the random variable equal to the total number of comparisons between pairs of input elements performed by `quick_sort` with a given sequence of pivot choices.

See the book chapter 5.5 for more details, but on a high level: the main operation in quick_sort is the partition call, and that is where the comparisons are made.

But $C$ is still a difficult random variable. The trick is that we can decompose it further. Without loss of generality, let $z_i$ and $z_j$ be the $i$-th and $j$-th smallest elements in the array, and let $X_{ij}$ denote the total number of times the elements $z_i$ and $z_j$ get compared in quick_sort. Then:

\[C(\omega) = \sum\limits_{i=1}^{n-1} \sum\limits_{j=i+1}^{n} X_{ij}(\omega)\]

which literally represents the sum of all comparisons between pairs of elements in the array. Then, the question is: how many times do we compare $z_i$ and $z_j$?

\[X_{ij}(\omega) \in \{0,1\}\]

because comparisons only happen during partition, and always between the pivot and some other element:

- $z_i$ and $z_j$ can only be compared when one of them is the pivot. Since the pivot is excluded from both recursive calls, they are compared at most once.

- if some other pivot separates them into different partitions, they will never be compared afterwards.

Now the real trick is to use the linearity of expectation:

Linearity of Expectation: For any random variables $X_1, X_2, \dots, X_n$ and any constants $a_1, a_2, \dots, a_n$:

\[\mathbb{E}[a_1 X_1 + a_2 X_2 + \dots + a_n X_n] = a_1 \mathbb{E}[X_1] + a_2 \mathbb{E}[X_2] + \dots + a_n \mathbb{E}[X_n]\]

In other words, the expectation of a sum of random variables is the sum of their expectations. Note that this is true even if the random variables are not independent.

Then we compute the expectation of $C$:

\[\begin{aligned} \mathbb{E}[C] &= \mathbb{E} \left[ \sum\limits_{i=1}^{n-1} \sum\limits_{j=i+1}^{n} X_{ij} \right] \\ &= \sum\limits_{i=1}^{n-1} \sum\limits_{j=i+1}^{n} \mathbb{E}[X_{ij}] \\ &= \sum\limits_{i=1}^{n-1} \sum\limits_{j=i+1}^{n} P(X_{ij} = 1) \\ &= \sum\limits_{i=1}^{n-1} \sum\limits_{j=i+1}^{n} P(\text{did compare $z_i$ and $z_j$ in QuickSort}) \end{aligned}\]

Lemma: If $z_i$ and $z_j$ denote the $i$-th and $j$-th smallest elements in the array, with $i < j$, then the probability that they are compared in `quick_sort` is:

\[P(\text{did compare $z_i$ and $z_j$ in QuickSort}) = \frac{2}{j-i+1}\]

Proof Sketch: Consider fixing $z_i$, $z_j$ with $i < j$, and let some pivot element $z_k$ be chosen during the first recursive call to quick_sort. What happens next?

- if $z_k$ is smaller than $z_i$ or bigger than $z_j$, then $z_i, z_{i+1}, \dots, z_{j}$ all land in the same partition and are passed to the same recursive call. They might be compared in the future; we don’t know yet.

- if $z_k$ is strictly between $z_i$ and $z_j$, then $z_i$ and $z_j$ are separated by the pivot without having been compared, and they will never be compared.

- if $z_k$ happens to be $z_i$ or $z_j$, then they are compared once during this partition, and never again (the pivot is excluded from the recursive calls).

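Therefore, the first case is just a “placeholder” that postpones the decision to a later recursive call, and eventually leads to the second or third case. Each of the $j-i+1$ elements $z_i, \dots, z_j$ is equally likely to be the first one among them chosen as a pivot, and exactly two of these choices ($z_i$ or $z_j$) lead to a comparison. So the probability of $z_i$ and $z_j$ being compared is equivalent to:

\[P(\text{$z_i$ or $z_j$ is chosen as the pivot before any other element in $z_{i+1}, \dots, z_{j-1}$}) = \frac{2}{j-i+1}\]

Finally we can compute the expectation of $C$ (hence the average runtime):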

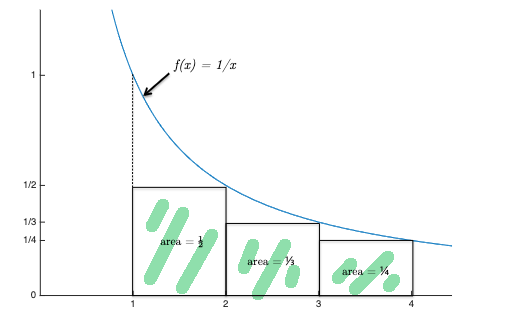

\[\begin{aligned} \mathbb{E}[C] &= \sum\limits_{i=1}^{n-1} \sum\limits_{j=i+1}^{n} P(\text{did compare $z_i$ and $z_j$ in QuickSort}) \\ &= \sum\limits_{i=1}^{n-1} \sum\limits_{j=i+1}^{n} \frac{2}{j-i+1} \\ &\le \sum\limits_{i=1}^{n-1} \left( 2 \sum\limits_{k=2}^{n} \frac{1}{k} \right) \\ &\le 2n \sum\limits_{k=2}^{n} \frac{1}{k} \\ &\le 2n \log n = O(n \log n) \end{aligned}\]

where

- the first inequality (third line) comes from the fact that the largest inner sum we can get is when $i=1$:

\[\sum\limits_{j=2}^{n} \frac{1}{j-1+1} = \frac{1}{2} + \frac{1}{3} + \dots + \frac{1}{n}\]

- the last inequality, $\sum_{k=2}^{n} \frac{1}{k} \le \int_{1}^{n} \frac{1}{x} \, dx = \ln n$, can be proven graphically:

where the sum covers the green highlighted rectangles, and the integral covers the entire area under the blue curve.
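As a quick numerical sanity check of this bound (a sketch, not part of the proof), we can evaluate the exact double sum $\sum_{i<j} \frac{2}{j-i+1}$ and compare it with $2n \ln n$. The helper name `expected_comparisons` is made up for this illustration; it groups pairs by their gap $d = j - i$, of which there are $n - d$.

```python
import math


def expected_comparisons(n):
    """Exact value of sum over i < j of 2 / (j - i + 1) for an array of length n."""
    # There are (n - d) pairs (i, j) with gap d = j - i, each contributing 2 / (d + 1).
    return sum((n - d) * 2.0 / (d + 1) for d in range(1, n))


for n in (10, 100, 1000):
    exact = expected_comparisons(n)
    bound = 2 * n * math.log(n)  # natural log, matching the integral argument
    print(n, round(exact, 1), round(bound, 1), exact <= bound)
```

For $n = 2$ the sum is exactly 1, which matches the fact that two elements are always compared exactly once.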

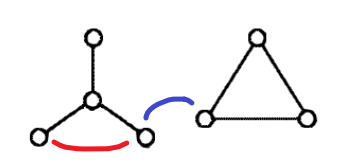

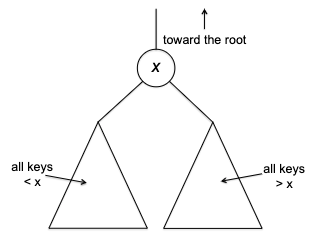

Graphs: The Basics

Here we just go over some basics and notations, so that we are consistent for the rest of the course.

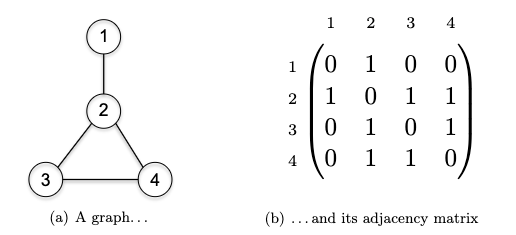

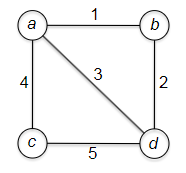

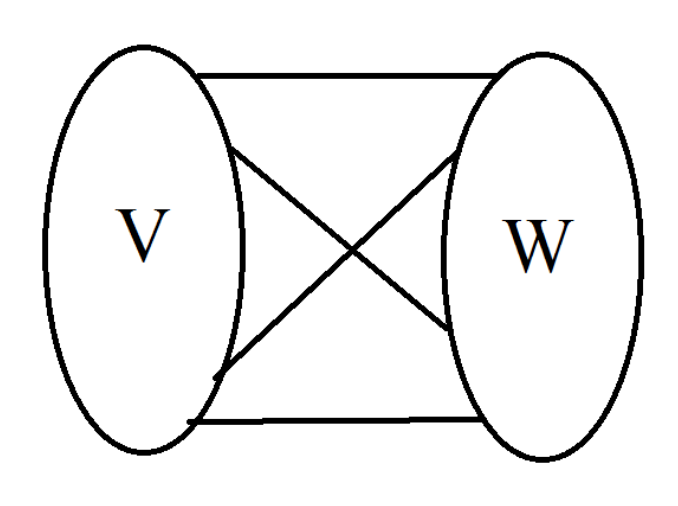

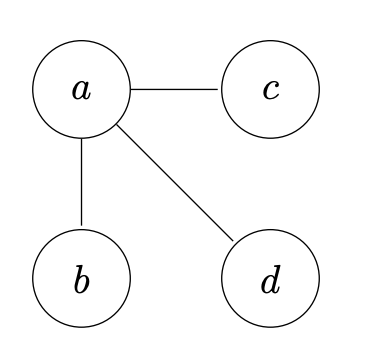

Representing Graphs: Let $G=(V,E)$ consist of vertices and edges. Let $n$ be the number of vertices and $m$ be the number of edges.

- note that if $G$ is undirected, then you will have $m \le { n \choose 2 }$

- note that if $G$ is directed, then you will have $m \le 2 { n \choose 2 } = n(n-1)$

Note that in either case, we have $m \le n^{2}$. Therefore in some proofs you will see, we might just swap $\log m$ with $\log n$ since:

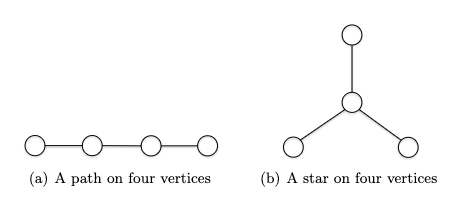

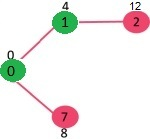

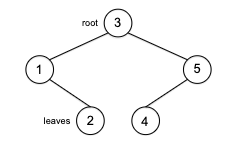

\[\log m \le \log n^{2} = 2 \log n \implies \log m = O(\log n)\]

Tree: a connected graph that has no cycles. Equivalently, a connected graph with $n$ vertices and exactly $n-1$ edges. For example:

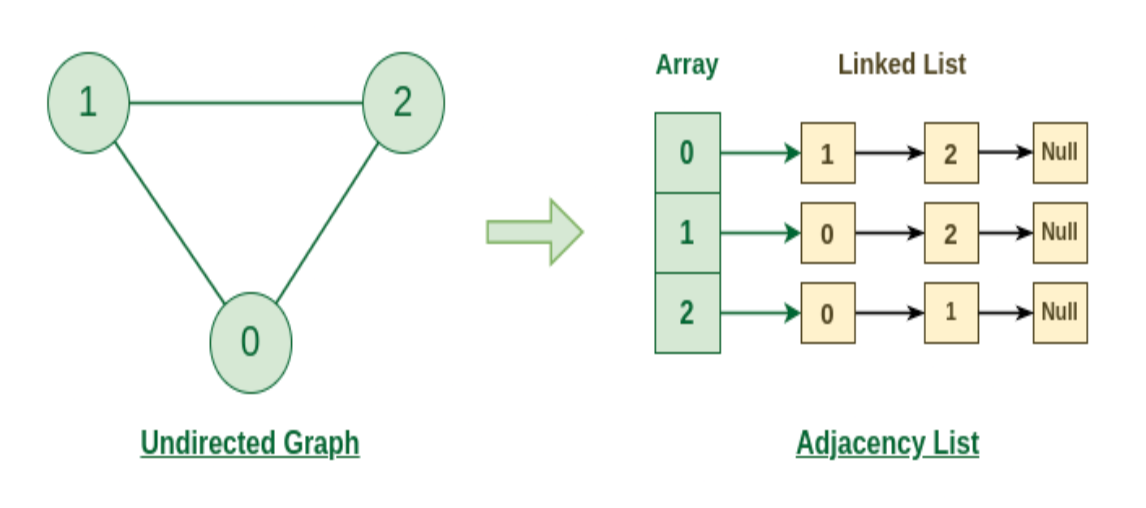

Ingredients for Adjacency List: the adjacency list representation of graphs is the dominant one we will use in this course. The main ingredients for representing such a list include:

- an array containing all the vertices

- an array containing all the edges

- for each edge, a pointer to each of its two endpoints

- for each vertex, a pointer to each of its incident edges

So visually, something like this:

where essentially we have $V_0$ having neighbors [2,3,4], and $V_2$ having neighbors [1,8], etc. Or alternatively, you can think of it as:

But how much memory do we need to represent an adjacency list? Well, we need:

- $O(n)$ space for the array of vertices

- $O(m)$ space for the array of edges

- $2m = O(m)$ space for the pointers to the edges’ endpoints. This is because each edge has two endpoints, hence $2m$.

- $2m = O(m)$ space for the pointers to the vertices’ incident edges. If you think about this, this is the same as the previous point, as the pointers to the edges’ endpoints are the same as (reversing) the pointers to the vertices’ incident edges.

Therefore we need in total $O(n+m)$ space to represent a graph using an adjacency list. Note that for sparse graphs this is more efficient than an adjacency matrix, which needs $O(n^2)$ space.
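In code, a common simplification of these ingredients is a dictionary mapping each vertex to the list of its neighbors. The sketch below uses that simplified form (it does not store separate edge objects with pointers), and the function name and the small example graph are hypothetical.

```python
def build_adjacency_list(n, edges, directed=False):
    """Build {vertex: [neighbors]} for vertices 0..n-1 from a list of (u, v) pairs."""
    adj = {v: [] for v in range(n)}  # O(n) space for the vertices
    for u, v in edges:
        adj[u].append(v)  # u -> v
        if not directed:
            adj[v].append(u)  # undirected edges are stored at both endpoints
    return adj


edges = [(0, 1), (0, 2), (1, 2), (2, 3)]
adj = build_adjacency_list(4, edges)
total_entries = sum(len(neighbors) for neighbors in adj.values())
print(adj)
print(total_entries, 2 * len(edges))  # each undirected edge appears twice
```

The total number of stored neighbor entries is $2m$ for an undirected graph, which is where the $O(n + m)$ space bound comes from.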

Breadth-First Search and Depth-First Search

Both BFS and DFS work whether the graph is directed or undirected. At a high level, recall that

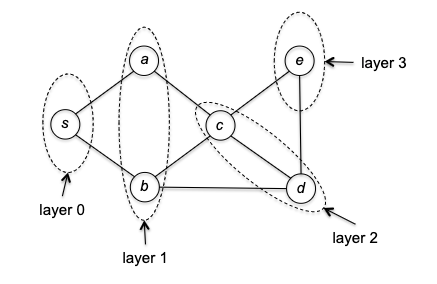

BFS Idea: starting from a vertex $s$ (layer 0), we explore all of its neighbors (layer 1), and repeat this process (reaching layer 2, layer 3, etc.) until we have explored all reachable vertices.

So how do we implement it? The trick is to use a queue (FIFO) to keep track of the vertices we still need to visit, in the order of their discovery. The pseudocode is as follows:

    def bfs(G, s):
        # let G be the adjacency list representation of the graph
        # let s be the starting vertex
        # all vertices other than s start as unexplored
        explored[s] = True
        Q = Queue()
        Q.enqueue(s)  # initialize the queue with the starting vertex
        while Q is not empty:
            v = Q.dequeue()  # dequeue the first vertex in the queue
            for each edge (v, w) in G.adjacentEdges(v):  # explore all neighbors of v
                if not explored[w]:
                    explored[w] = True
                    Q.enqueue(w)  # enqueue the neighbor at the end
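A runnable sketch of the same idea, assuming the graph is given as a dictionary `{vertex: [neighbors]}` (a simplified adjacency list). Recording the layer number of each vertex is an addition for illustration; the example graph is hypothetical.

```python
from collections import deque


def bfs(adj, s):
    """Return {vertex: layer} for every vertex reachable from s."""
    layer = {s: 0}  # doubles as the 'explored' marker
    queue = deque([s])
    while queue:
        v = queue.popleft()  # FIFO: oldest discovered vertex first
        for w in adj[v]:
            if w not in layer:
                layer[w] = layer[v] + 1
                queue.append(w)
    return layer


adj = {
    "s": ["a", "b"],
    "a": ["s", "c"],
    "b": ["s", "c"],
    "c": ["a", "b", "d"],
    "d": ["c"],
    "e": [],  # not reachable from s
}
layers = bfs(adj, "s")
print(layers)
```

Vertices that are not reachable from `s` (here `"e"`) never enter the queue and therefore do not appear in the result.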

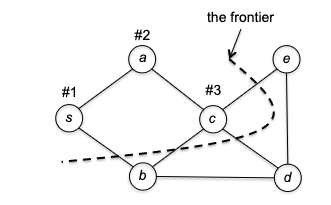

DFS Idea: when you reach a vertex, immediately start exploring its (not yet visited) neighbors and backtracking only when necessary (i.e. when you have no more neighbors to explore).

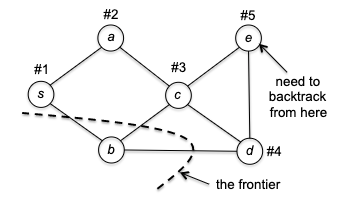

The most intuitive explanation is to walk through an example. Suppose we are given a graph whose adjacency list happens to give $s \to a$ before $s \to b$. Then starting from $s$ we would go:

where the “frontier” marks the “borderline” between the explored and unexplored vertices. To make things interesting, let’s say the adjacency list had $c \to d$ before $c\to e$. This would then give us:

Now there is a problem: at vertex $e$ we have no unvisited neighbors. So DFS is forced to retreat to its previous vertex $d$: and now, it discovered one unexplored vertex $b$.

After this, DFS unwinds quickly, as each vertex has no unvisited neighbors, and DFS will eventually retreat all the way to $s$. If all neighbors of $s$ are visited, then DFS is done.

Therefore the pseudocode is as follows:

    def dfs(G, s):
        # let G be the adjacency list representation of the graph
        # let s be the starting vertex
        explored = {}  # mark all vertices as unexplored
        S = Stack()
        S.push(s)  # initialize the stack with the starting vertex
        while S is not empty:
            v = S.pop()  # pop the vertex on top of the stack
            if not explored[v]:
                explored[v] = True
                for each edge (v, w) in G.adjacentEdges(v):  # explore all neighbors of v
                    S.push(w)  # push the neighbor on top

Why stack? If you think about the more “natural” recursive implementation, you will realize that essentially you are using a call stack. So the iterative implementation is just a “translation” of the recursive implementation.
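Here is a minimal runnable sketch of the iterative version, again assuming a `{vertex: [neighbors]}` dictionary. Pushing neighbors in reverse order is a choice made here so that the first neighbor in the list is explored first, mimicking the recursive version; the small directed graph is a hypothetical example, not the one from the figures.

```python
def dfs(adj, s):
    """Return the vertices reachable from s in the order DFS first explores them."""
    explored = set()
    order = []
    stack = [s]
    while stack:
        v = stack.pop()  # LIFO: most recently discovered vertex first
        if v in explored:
            continue
        explored.add(v)
        order.append(v)
        for w in reversed(adj[v]):  # reversed so adj[v][0] ends up on top
            stack.append(w)
    return order


adj = {
    "s": ["a", "b"],
    "a": ["c"],
    "b": [],
    "c": ["d", "e"],
    "d": ["e", "b"],
    "e": [],
}
order = dfs(adj, "s")
print(order)
```

Note that a vertex can sit on the stack several times, which is why the explored check happens when a vertex is popped rather than when it is pushed.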

Runtime of BFS/DFS: The running time for both algorithms is $O(n + m)$, i.e. linear in the size of the graph. This is because both BFS/DFS will:

- examine each vertex at most once

- examine each edge at most twice (once for each endpoint)

Therefore the total number of operations is $O(n + 2m) = O(n + m)$.

Generic Search

Are BFS and DFS correct? How do we prove that their runtime is $O(n+m)$? The answer is to realize that they both fall into the pattern of a generic search algorithm:

    def generic_search(G, s):
        # let G=(V, E) be the adjacency list representation of the graph
        # let s be the starting vertex
        explored = {s}  # mark s as explored, others as unexplored
        while there is an edge (v,w) in E with v explored and w unexplored:
            choose some such edge (v,w)
            explored.add(w)

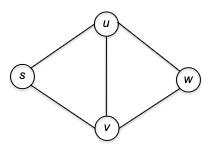

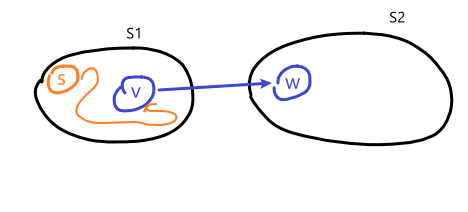

To see why this could contain both BFS and DFS, consider the following example graph:

- initially only our home base $s$ is marked as explored.

- In the first iteration of the while loop, two edges meet the loop condition: $( s, u )$ and $( s, v )$. The GenericSearch algorithm chooses one of these edges, say $(s, u)$, and marks $u$ as explored.

- In the second iteration of the loop, there are again two choices: $( s, v )$ and $( u, w )$. The algorithm might choose $( u, w )$, which is DFS-like, or $( s, v )$, which is BFS-like.

Correctness of Generic Graph Search: At the conclusion of `generic_search`, a vertex $v \in V$ is explored if and only if there is a path from $s$ to $v$ in $G$.

- this also means that every vertex $v$ reachable from $s$ is explored

- for BFS/DFS, it is then easy to see that each vertex is also explored only once (if reachable)

Proof: This is an if-and-only-if statement, so we need to argue both directions.

\[\text{$v$ is explored in generic\_search} \implies \text{there is a path from $s$ to $v$ in $G$}\]

This is true because the only way the algorithm discovers a new vertex is by following an edge out of an already explored vertex, so every explored vertex is connected to $s$ by a path.

\[\text{there is a path from $s$ to $v$ in $G$} \implies \text{$v$ is explored in generic\_search}\]

This basically says that the generic_search algorithm didn’t miss any vertex. We can prove this by contradiction: suppose there is a path $s\leadsto v$, but `generic_search` halted without exploring $v$. Let $S \subseteq V$ be the set of vertices marked as explored when the algorithm halts. Then $s \in S$ and, by assumption, $v \notin S$. Since the path starts inside $S$ and ends outside $S$, some edge $(x, y)$ on it has $x \in S$ and $y \notin S$. But then the condition of the while loop still holds, so the algorithm would have explored at least one more vertex instead of halting. This is a contradiction, as we assumed that the algorithm halted.

Computing Connected Components

This is one typical application of BFS/DFS. Let’s use BFS here.

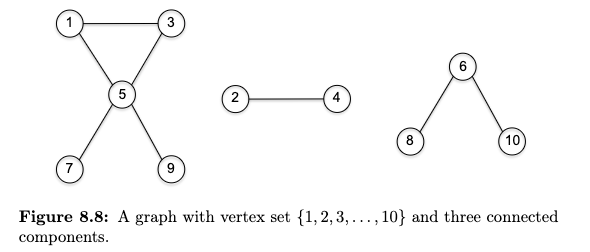

Recall that

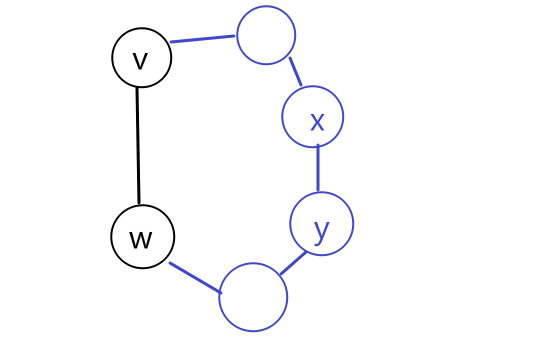

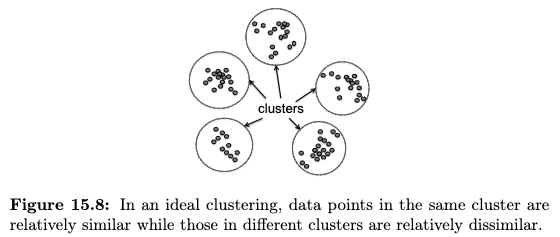

Connected Component is a maximal set of vertices $S \subseteq V$ such that for every pair of vertices $u,v \in S$, there is a path from $u$ to $v$ in $G$. Visually:

Consider $G=(V,E)$ being an undirected graph, and consider the task being to identify all connected components of $G$ (e.g. assign each vertex v a label cc(v) indicating which connected component it belongs to).

The idea is simple: we can use an outer loop to make a single pass over the vertices, and invoke BFS as a subroutine whenever we encounter an unexplored vertex. The pseudocode is as follows:

    def ucc(G):
        # let G be the adjacency list representation of the graph
        explored = {}  # mark all vertices as unexplored
        cc = {}  # cc[v] = label of the connected component containing v
        num_cc = 0  # number of connected components
        for each vertex v in G.vertices:
            if not explored[v]:
                explored[v] = True
                cc[v] = v  # label the connected component by its first vertex
                num_cc += 1  # increment the number of connected components
                ### BFS subroutine
                Q = Queue()
                Q.enqueue(v)  # initialize the queue with the starting vertex
                while Q is not empty:
                    w = Q.dequeue()  # dequeue the first vertex in the queue
                    for each edge (w, x) in G.adjacentEdges(w):  # explore all neighbors of w
                        if not explored[x]:
                            explored[x] = True
                            cc[x] = v  # assign the same connected component to x
                            Q.enqueue(x)  # enqueue the neighbor at the end
        return cc
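A runnable sketch of the same outer loop plus BFS, assuming an undirected graph stored as `{vertex: [neighbors]}`. Unlike the pseudocode, this version labels components with the numbers 1, 2, ... instead of the first vertex found, and uses the `cc` dictionary itself as the explored marker; both are choices made for this illustration.

```python
from collections import deque


def connected_components(adj):
    """Return ({vertex: component number}, number of components)."""
    cc = {}  # a vertex is explored exactly when it has a label
    num_cc = 0
    for v in adj:  # single outer pass over all vertices
        if v in cc:
            continue
        num_cc += 1
        cc[v] = num_cc
        queue = deque([v])  # BFS restricted to v's component
        while queue:
            w = queue.popleft()
            for x in adj[w]:
                if x not in cc:
                    cc[x] = num_cc
                    queue.append(x)
    return cc, num_cc


adj = {
    1: [3, 5], 3: [1, 5], 5: [1, 3, 7, 9], 7: [5], 9: [5],
    2: [4], 4: [2],
    6: [8, 10], 8: [6, 10], 10: [6, 8],
}
cc, num_cc = connected_components(adj)
print(num_cc, cc)
```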

What’s the runtime? It may look like we pay the $O(m+n)$ cost of BFS once per vertex, but in fact BFS/DFS$(G,s)$ is linear in the size of the connected component of $s$, and each connected component is searched only once. Therefore the runtime is:

\[\underbrace{O(n)}_{\text{looping over every vertex}} + O\left( \sum \text{connected component's size} \right) = O(n) + O(n + m) = O(n + m)\]

Topological Sort

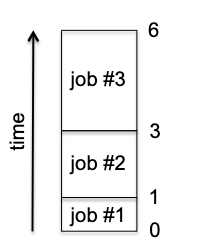

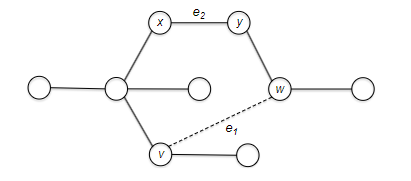

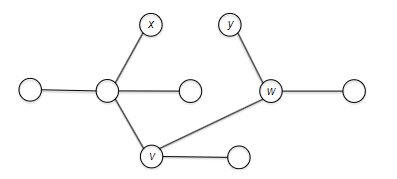

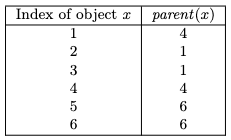

Here is another classic application of DFS. Imagine that you have a bunch of tasks to complete, and there are precedence constraints, meaning that you cannot start some of the tasks until you have completed others. One application of topological orderings is sequencing tasks so that all precedence constraints are respected. More formally:

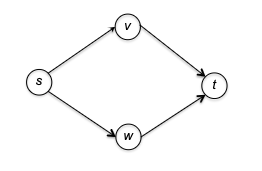

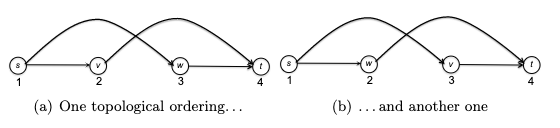

Topological Orderings: let $G=(V,E)$ be a directed graph. A topological ordering of $G$ is an assignment $f(v)$ of every vertex $v \in V$ to a different number such that:

\[\text{for every edge } v\to w, \quad f(v) < f(w)\]

i.e. all of $G$’s directed edges should travel forward, with the arrowhead pointing to a vertex with a higher number.

Visually, consider the following example:

and there are two ways to topologically sort this graph:

You can visualize a topological ordering by plotting the vertices in order of their f-values. In a topological ordering, all edges of the graph are directed from left to right.

Note that this means, it is impossible to topologically order the vertices of a graph that contains a directed cycle. Therefore, in general a topological order exists only for a directed graph without any directed cycles - a directed acyclic graph (DAG).

In fact

Theorem 8.6: Every DAG has a topological ordering.

To show this, first realize that

Theorem 8.7: Every DAG has a source.

- proof: if you keep following incoming edges backward out of an arbitrary vertex of a directed acyclic graph, you’re bound to eventually reach a source vertex. Otherwise, you would eventually revisit some vertex, and there would be a directed cycle.

- i.e. if this backward walk never gets stuck at a source, then there is a cycle in your graph.

where:

- A source vertex of a directed graph is a vertex with no incoming edges.

- Analogously, a sink vertex is one with no outgoing edges.

Then, we can prove Theorem 8.6 very easily. Let $G$ be a directed acyclic graph with $n$ vertices. The task is to assign $f$-values to vertices in an increasing order:

- pick a source vertex (one exists by Theorem 8.7) and assign it the smallest unused $f$-value, starting with 1

- obtain $G'$ by removing the source vertex and all its outgoing edges. Note that this cannot produce a directed cycle, as deleting stuff can’t create new cycles

- repeat from step 1 on $G'$

So how do we compute a topological sort, i.e. output a topological ordering of the vertices of a DAG $G$?

- the proof above naturally leads to an algorithm: a loop ($O(n)$) over each vertex where we find the source ($O(n)$), and then deleting it to repeat. This gives us $O(n^2)$.

- next up: a slicker solution via DFS resulting in $O(n+m)$.

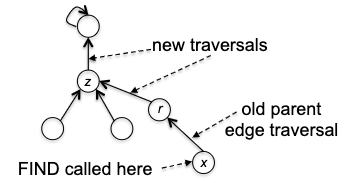

First we show the algorithm. The high-level idea is simple: we use DFS to dive to a sink and assign it the highest remaining label, and we also assign labels during backtracking. We then mark the vertex as explored (i.e. as if we removed the vertex from $G$) and repeat.

    curLabel = |V|  # tracks the ordering
    f = {}  # topological ordering

    def topo_sort(G):
        # let G = (V, E) be the adjacency list representation of the graph
        explored = {}  # mark all vertices as unexplored
        for each vertex v in G.vertices:
            if not explored[v]:
                dfs_topo(G, v, explored)
        return

    def dfs_topo(G, s, explored):
        global curLabel, f
        explored[s] = True
        for each edge (s, w) in s's outgoing adjacency list:
            if not explored[w]:
                dfs_topo(G, w, explored)
        f[s] = curLabel  # assign the ordering
        curLabel -= 1  # decrement the ordering
        return

Note that topo_sort doesn’t need us to start at a particular vertex. We can start at any vertex, and the algorithm will still work. (Basically the algorithm dives as deep as possible, and assigns the first vertex it finishes (regardless of which starting vertex you choose) the label $f(\cdot) = \vert V\vert$.)
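A runnable sketch of the two routines above, assuming a DAG given as `{vertex: [out-neighbors]}` in which every vertex appears as a key. A nested function with `nonlocal` replaces the global counter of the pseudocode; this is an implementation choice for the example. Being recursive, it can hit Python's recursion limit on very deep graphs.

```python
def topo_sort(adj):
    """Return {vertex: position} with f[v] < f[w] for every edge v -> w of a DAG."""
    f = {}
    explored = set()
    cur_label = len(adj)  # labels are handed out from |V| down to 1

    def dfs_topo(s):
        nonlocal cur_label
        explored.add(s)
        for w in adj[s]:
            if w not in explored:
                dfs_topo(w)
        f[s] = cur_label  # s finishes only after everything reachable from it
        cur_label -= 1

    for v in adj:  # any starting order works
        if v not in explored:
            dfs_topo(v)
    return f


adj = {"s": ["v", "w"], "v": ["t"], "w": ["t"], "t": []}
f = topo_sort(adj)
print(f)
```

In this example the sink `t` is finished first and receives the largest label, while the source `s` receives label 1.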

Correctness: first of all, it is easy to see that:

- every vertex $v$ is assigned a unique number $f(v)$, as `dfs_topo` is called on each vertex only once.

- to argue why the returned `f` must be a topological order, we need to show that for any arbitrary edge $v\to w$, we have $f(v) < f(w)$.

Proof: if there is an edge $(v,w)$, then there are two cases in running topo_sort:

- if $v$ is explored before $w$, i.e. `dfs_topo` is invoked with vertex $v$ before $w$ is explored. Then as $w$ is reachable from $v$, `dfs_topo(w)` is invoked before `dfs_topo(v)` returns, giving a call stack of [`dfs_topo(v)` -> `dfs_topo(w)`]. By the nature of recursive calls, `dfs_topo(w)` will terminate first, so $w$ will be assigned a higher label than $v$. Therefore $f(v) < f(w)$.

- if $w$ is explored before $v$, then there cannot be a path from $w$ back to $v$ (because we already have $v \to w$ and we know the graph is a DAG). Therefore, the call `dfs_topo(w)` will terminate without calling `dfs_topo(v)`. Then, as `topo_sort` will eventually call `dfs_topo(v)` later, $v$ will get a lower label. Hence $f(v) < f(w)$.

Runtime: runs in linear time $O(n+m)$, as it is just DFS with a little extra bookkeeping:

- it explores each edge only once (from its tail), only performs a constant amount of work per edge/vertex

- therefore, the runtime is $O(n+m)$

Computing Strongly Connected Components

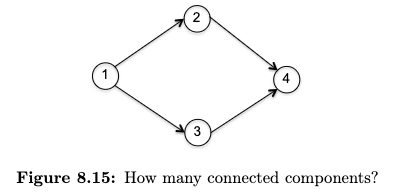

In short, while it didn’t matter much for undirected graphs, for directed graphs the notion of a connected component is more complicated. First, recall that a connected component of an undirected graph is defined as a maximal region within which you can get from anywhere to anywhere else in the region. For a directed graph, consider

Following the logic, the answer should be zero.

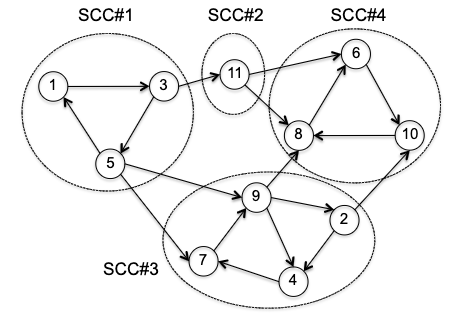

Strongly Connected Component (SCC) a maximal set of vertices $S \subseteq V$ such that there is a directed path from any vertex in $S$ to any other vertex in $S$.

For example

First of all, why would ucc not work? Consider invoking BFS on the following vertices

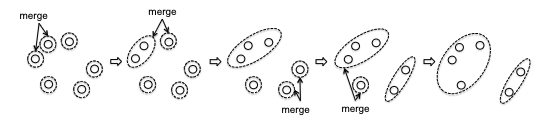

first, notice all the edges are organized to go mainly from left to right. In the case on the left, we would get a wrong result: BFS will discover every vertex and mark them as the same connected component. In the case on the right, we would get a correct result. This indicates that graph search can uncover strongly connected components, provided you start from the right place. But how? The key observation is that we want to first start from a sink SCC, i.e. the SCC with no outgoing edges to other SCCs. Then, we can go in a reverse topological order, plucking off sink SCCs one by one.

Key Lemma: the SCC Meta-Graph is directed acyclic. Visually this is simple to see:

and the argument is also simple. If the meta-graph had a directed cycle through two SCCs, then every vertex in one could reach every vertex in the other and vice versa, so you would have collapsed them into one, i.e. the two SCCs were not maximal in the first place.

- this lemma is actually very important. This means that all we need to do is to find one vertex in a sink SCC, run BFS/DFS to label all vertices it can reach (and mark them as explored), and repeat

- the above works because for a directed acyclic graph + using a sink SCC, you cannot get out of that sink SCC.

At a high level, the argument in the lemma above is pretty much what we will do, except you might be a bit confused by the graph reversing step:

    # sketch of Kosaraju’s algorithm
    def kosaraju_idea(G):
        G_rev = reverse(G)  # reverse the graph, i.e. reverse all edges
        dfs_loop(G_rev), let f[v] = "finishing time" of DFS on a vertex v
        dfs_loop(G), using f to process vertices in decreasing order  # i.e. start from a vertex in a sink SCC
        return vertices with their labels

Yes, the second step relates to some kind of topological order, and the third step relates to us wanting a reverse topological order before diving into DFS to label the vertices. But there are a few caveats:

- the second step did something on a reversed graph. Why?

- we thought about the `topo_sort` algorithm only for DAGs, and here we have a general directed graph.

- the second and third steps are not equivalent to running `topo_sort` on the original `G` and then starting from the sink vertex.

So what’s going on? First we show the full algorithm, and then we will illustrate how it works, and why it is correct.

    numSCC = 0  # number of SCCs, global variable

    def Kosaraju(G):
        global numSCC
        # let G = (V, E) be the adjacency list representation of the graph
        G_rev = reverse(G)  # reverse the graph, i.e. reverse all edges
        mark all vertices in G_rev as unexplored
        # first pass of DFS
        # computes f(v), the magical ordering
        TopoSort(G_rev)
        # second pass of DFS
        # finds SCCs in reverse topological order
        mark all vertices in G as unexplored
        numSCC = 0
        for each v in V, in increasing order of f(v):
            if not explored[v]:
                numSCC += 1
                # assign scc-values for all vertices in the SCC
                dfs_scc(G, v)

    def dfs_scc(G, s):
        global numSCC
        explored[s] = True
        scc[s] = numSCC
        for each edge (s, w) in s's outgoing adjacency list:
            if not explored[w]:
                dfs_scc(G, w)
        return
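A compact runnable sketch of the two passes, assuming the graph is a dictionary `{vertex: [out-neighbors]}` in which every vertex appears as a key. Instead of storing $f(v)$ explicitly, it records the order in which vertices finish during the first pass on the reversed graph; processing that list backwards is the same as processing vertices in increasing order of $f$. The example graph is hypothetical, and the recursion can hit Python's recursion limit on very deep graphs.

```python
def kosaraju(adj):
    """Return ({vertex: scc number}, number of SCCs) for a directed graph."""
    # Build the reversed graph.
    rev = {v: [] for v in adj}
    for v in adj:
        for w in adj[v]:
            rev[w].append(v)

    # First pass: DFS on the reversed graph, recording finishing order.
    explored = set()
    finish_order = []

    def dfs_topo(s):
        explored.add(s)
        for w in rev[s]:
            if w not in explored:
                dfs_topo(w)
        finish_order.append(s)  # last to finish would get f = 1

    for v in rev:
        if v not in explored:
            dfs_topo(v)

    # Second pass: DFS on the original graph in increasing order of f.
    scc = {}
    num_scc = 0

    def dfs_scc(s):
        scc[s] = num_scc  # reads the current component counter
        for w in adj[s]:
            if w not in scc:
                dfs_scc(w)

    for v in reversed(finish_order):
        if v not in scc:
            num_scc += 1
            dfs_scc(v)
    return scc, num_scc


adj = {1: [2], 2: [3], 3: [1, 4], 4: [5], 5: [6], 6: [4], 7: [6]}
scc, num_scc = kosaraju(adj)
print(num_scc, scc)
```

In this example the sink SCC $\{4, 5, 6\}$ is discovered first, consistent with the SCCs being found in reverse topological order of the meta-graph.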

So, it turns out all the concern above will be addressed after thinking about this.

Why reverse graph + topological sort?

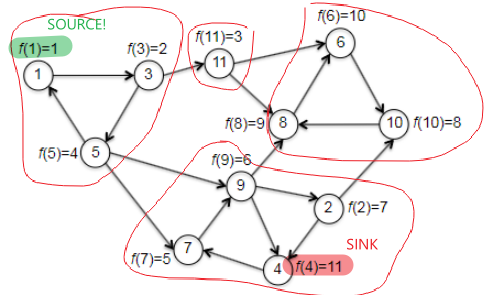

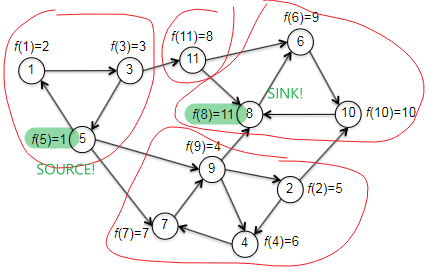

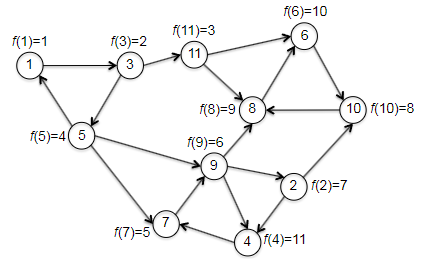

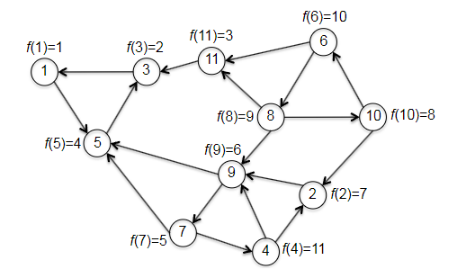

What we want, in the end, is to find a vertex in a sink SCC of $G$. The hope with topo_sort is that, as we recall, the vertex in the last position ($f(v)=\vert V\vert$) must be a sink vertex (hence inside a sink SCC) of $G$. However, that was for DAGs. So are we lucky enough that this would hold for a general graph? The answer is sadly no. Consider the following example where we started at vertex 1 (recall that topo_sort can start at an arbitrary vertex):

| correct source but wrong sink | correct source and correct sink |
|---|---|
| | |

where the left is produced by starting at vertex 1 and winding up at vertex 4 during the first iteration, while the right is produced by starting at vertex 1 and ending at vertex 8 during the first iteration of topo_sort. Although we couldn’t consistently find a vertex in a sink SCC, it turns out we can consistently find a vertex in a source SCC. In fact, the statement is even stronger: the order produced by topo_sort is also a topological ordering of the meta-graph, if we label each SCC with the smallest $f$-value among its vertices, i.e. formally

Theorem 8.10: Topological Ordering of the SCCs. Let $G$ be a directed graph, with vertices ordered arbitrarily, and for each vertex $v \in V$ let $f(v)$ be the position of $v$ computed by `topo_sort`. Let $S_1, S_2$ denote two SCCs of $G$, and suppose $G$ has an edge $(v,w)$ with $v \in S_1$ and $w \in S_2$, then:

\[\min_{x \in S_1} f(x) < \min_{y \in S_2} f(y)\]

i.e. even if `topo_sort` is incorrect in this case globally for every vertex, it is still correct locally for the SCCs!

The proof is similar to the correctness of topo_sort. If we consider the following illustration:

then there are two cases that can happen while topo_sort labels all the vertices:

- `topo_sort` explored and initialized a DFS from a vertex $s \in S_1$ before any vertex in $S_2$. Because vertices in an SCC can reach each other, $s \leadsto v$, and since $v \to w$, we can reach from $s$ any vertex in $S_2$. Therefore, because `topo_sort` is a recursive DFS, the call `dfs_topo(s)` will not terminate until the calls on all the vertices in $S_2$ have terminated. Therefore, $f(s)$ is smaller than $f(y)$ for every $y \in S_2$.

- `topo_sort` explored and initialized a DFS from a vertex $s \in S_2$ before any vertex in $S_1$. Then, because the meta-graph is directed acyclic, there is no path from $S_2$ back to $S_1$, so the search finishes all of $S_2$ while $S_1$ stays unscathed. Since the counter for $f$ is a global variable that only decreases, every vertex in $S_1$ will later get a smaller $f$ than every vertex in $S_2$.

The end result? If we run topo_sort, we are sure that the vertex in the first position resides in a source SCC. So to find a vertex in a sink SCC, all we need is to reverse the graph first.

Finally, an example before going to the correctness and runtime discussion. We want to check that the magical reverse graph + topo_sort will indeed help us get vertices in a sink SCC, and so that the second pass of depth-first search discovers the SCCs in reverse topological order.

| $G_{rev}$ and compute $f(v)$ | $G$ with computed $f(v)$ |
|---|---|
| | |

Then, given the progress above (by reversing the graph and calling topo_sort), we continue to the second pass to DFS, which checks through vertices in increasing order:

- the first call to `dfs_scc` is initiated at vertex 1, which has the smallest $f$ (a vertex in a sink SCC!)

- it then labels all of the vertices $1,3,5$ and marks them as explored.

- the vertices that come next in the ordering but were already explored by that call (such as vertex 3) are skipped.

- the next unexplored vertex in the ordering is vertex 11, which is (a vertex in) a sink SCC of the remaining graph.

- and so on.

Correctness and Runtime of Kosaraju’s Algorithm

The argument will be short as the proofs are mostly covered in the previous section.

Correctness: each time we initiate a new call to dfs_scc, the algorithm discovers exactly one new SCC: the sink SCC relative to the not-yet-explored part of the graph.

Runtime: each of the two passes of DFS does a constant number of operations per vertex or edge. Therefore, the runtime is $O(n+m)$.

Dijkstra’s Shortest Path Algorithm

We’ve arrived at another one of computer science’s greatest hits: Dijkstra’s shortest-path algorithm.

Assumptions with Dijkstra’s Algorithm: this algorithm works in any directed graph with nonnegative edge length.

- can we extend it to undirected graph? (Yes, by changing the ‘frontier’ condition)

- can we extend it to negative edge length? (No, but Bellman-Ford can)

Problem definition: consider a directed graph $G=(V,E)$, a starting vertex $s \in V$, and a nonnegative length $l_e$ for each edge $e \in E$. The goal is to compute the length of a shortest path $D(v)$ from $s$ to every other vertex $v$ in $G$.

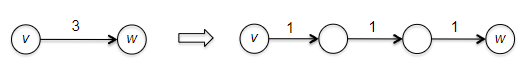

Why can’t we use BFS? Remember that breadth-first search computes the minimum number of edges in a path from the starting vertex to every other vertex (i.e. we continue layer by layer). This is the special case of the single-source shortest path problem in which every edge has length 1.

- but then can’t we just think of an edge with a longer length $l>1$ as a path of edges that each have length 1?

- Yes, in principle, you can solve the single-source shortest path problem by expanding + using BFS

- However, the problem with this is that it blows up the size of the graph

Dijkstra’s Algorithm

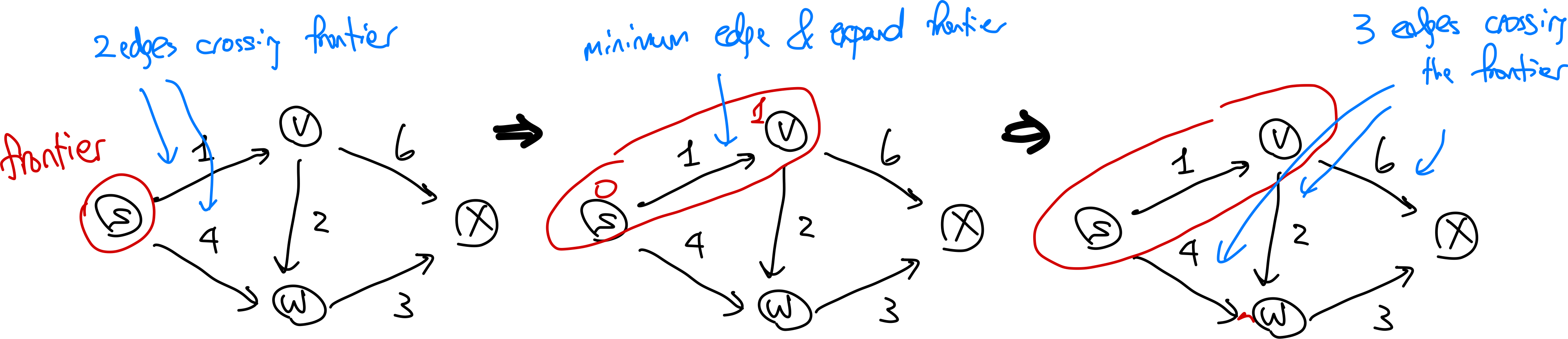

The idea of Dijkstra’s algorithm is to use a greedy approach: at each step, we grow the set of processed vertices by exactly one vertex across the frontier. Overall it looks similar to BFS/DFS in that it iterates over newly reached vertices, but the clever part is how we choose which vertex to process (and mark as done) next.

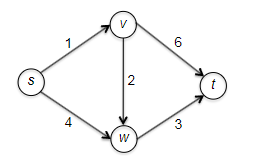

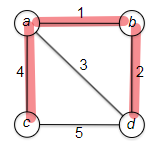

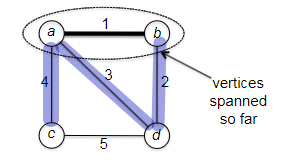

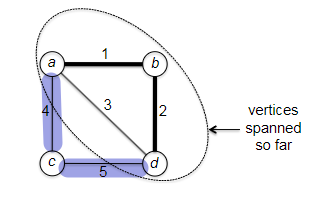

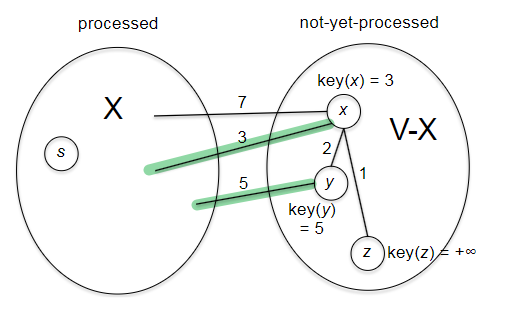

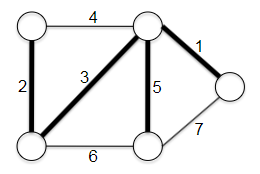

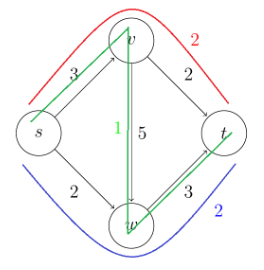

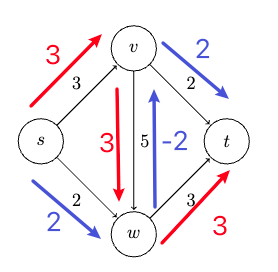

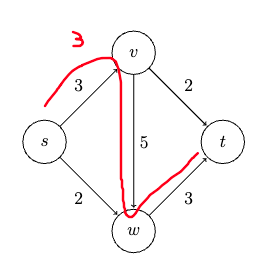

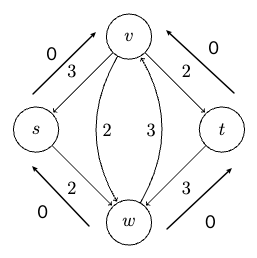

Consider some example graph, where each edge $e$ has a length of $l_e$:

Consider the pseudo-code

    def dijkstra(G, s):
        # let G = (V, E) be the adjacency list representation of the graph
        # let s be the starting vertex
        X = {s}          # set of vertices processed so far
        dist[s] = 0      # tracks the shortest-path distance from s
        path[s] = []     # tracks the shortest path, empty for s
        # check edges that cross the "frontier"
        while there is an edge (v, w) with v in X and w not in X:
            (v*, w*) = such an edge minimizing dist[v] + l_vw
            X.add(w*)    # grow X by exactly one vertex
            dist[w*] = dist[v*] + l_{v*w*}
            path[w*] = path[v*] + [w*]
        return dist, path
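The pseudo-code can be turned into runnable Python almost line by line. The following is a minimal sketch of the straightforward version (no heap), assuming the graph is a dict mapping each vertex to a list of `(neighbor, length)` pairs. The input format and the example graph are assumptions for illustration, and unlike the pseudo-code, `path[s]` here includes `s` itself.

```python
def dijkstra(graph, s):
    """graph: dict mapping each vertex to a list of (neighbor, length) pairs,
    with all lengths nonnegative.
    Returns (dist, path) for every vertex reachable from s."""
    processed = {s}   # the set X
    dist = {s: 0}
    path = {s: [s]}
    while True:
        best = None   # (score, v, w) of the best frontier edge found so far
        for v in processed:
            for w, length in graph.get(v, []):
                if w not in processed:
                    score = dist[v] + length
                    if best is None or score < best[0]:
                        best = (score, v, w)
        if best is None:
            break     # no edge crosses the frontier any more
        score, v_star, w_star = best
        processed.add(w_star)
        dist[w_star] = score
        path[w_star] = path[v_star] + [w_star]
    return dist, path

graph = {
    "s": [("v", 1), ("w", 4)],
    "v": [("w", 2), ("t", 6)],
    "w": [("t", 3)],
    "t": [],
}
dist, path = dijkstra(graph, "s")
print(dist)       # {'s': 0, 'v': 1, 'w': 3, 't': 6}
print(path["t"])  # ['s', 'v', 'w', 't']
```

Each iteration scans all edges leaving the processed set, so this version takes $O(mn)$ time.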

Visually, this works like:

Is this algorithm correct? What Dijkstra’s algorithm is implicitly claiming is the following. Consider the edge $(v,w)$ with $v \in X$ and $w \notin X$ that minimizes $\mathrm{dist}[v] + l_{vw}$. Then a shortest path from $s$ to $w$ consists of a shortest path from $s$ to $v$ (or $s \leadsto v$) with the edge $(v,w)$ tacked on at the end. So is this correct?

Correctness of Dijkstra’s Algorithm

Essentially, Dijkstra’s algorithm adds the vertices of $V$ to $X$ one at a time, so we can use induction on the number of vertices processed so far.

Proof: Let $P(n)$ denote that “the Dijkstra algorithm correctly computes the shortest-path distance of the $n$th vertex added to the processed set $X$”. We will prove this by induction on $n$:

- Base case: $P(1)$ is trivially true, as the starting vertex $s$ is the first vertex in $X$ and the shortest path distance from $s$ to $s$ is indeed $0$.

- Inductive hypothesis: Let $P(k)$ be true for all $k=1,2,3…, n-1$. This means that we have $d(v)=\mathrm{shortest}(s,v)$ correctly computed for the first $n-1$ vertices added by Dijkstra to $X$.

-

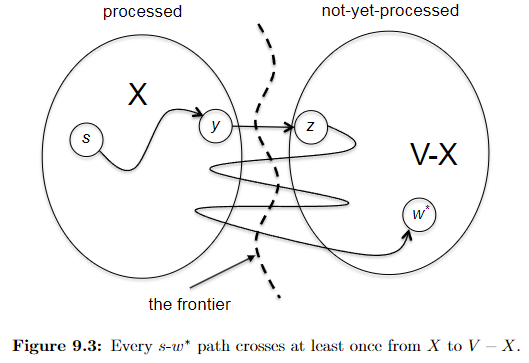

Inductive step: now we need to show $P(n)$. Let $w^{}$ be the $n$th vertex being added, and let Dijkstra to have selected the $(v^*, w^*)$ edge for that to happen (i.e. $v^{}\to w^{*}$ is the shortest edge at the frontier). We need to show that:

\[d(v^*) + l_{v^{*}w^{*}} = \mathrm{shortest}(s, w^*)\]We can show this by:

- showing $d(v^{*}) + l_{v^{*}w^{*}} \ge \mathrm{shortest}(s, w^{*})$, i.e. that the shortest-path distance can only be less than or equal to the length of the path $s \leadsto v^{*}$ followed by the edge $(v^{*}, w^{*})$. This is trivially true by the definition of a shortest path, since that path is one particular $s \leadsto w^{*}$ path.

- showing $d(v^{*}) + l_{v^{*}w^{*}} \le \mathrm{shortest}(s, w^{*})$, namely that every competitor path $s \leadsto w^{*}$ must have length at least $d(v^{*}) + l_{v^{*}w^{*}}$. To show this, consider a “crazily short” path:

Because this path starts at $s \in X$ and ends at $w^{*}$, which is outside $X$, it must cross the frontier at some point. Let $(y,z)$ be the first edge of the path that crosses the frontier, so that $y\in X$ and $z \notin X$. So in essence we can consider any competitor path $P'$ as taking the form:

\[P' = \underbrace{s \leadsto y}_{\text{inside X}} \to z \leadsto w^{*}\]We note that the length of this path must be at least:

\[\text{len}(P') \ge \text{shortest}(s,y) + l_{yz} + 0 = d(y) + l_{yz}\]where we used $+0$ for the $z \leadsto w^{*}$ portion because no edge length is negative, and $d(y) = \text{shortest}(s,y)$ by the inductive hypothesis. But notice that $d(y) + l_{yz}$ is exactly the score of an edge crossing the frontier. Since Dijkstra’s algorithm picked the edge $(v^{*}, w^{*})$ as the one minimizing this score:

\[d(y) + l_{yz} \ge d(v^{*}) + l_{v^{*}w^{*}}\]Therefore every competitor path $P'$ must have length at least $d(v^{*}) + l_{v^{*}w^{*}}$, and we are done. (Intuitively, *any path $P'$* leaving $X$ must use a path inside $X$, which is at least as long as a shortest one, plus something outside $X$; since Dijkstra minimizes exactly this quantity, it works.)

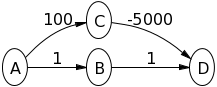

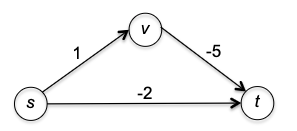

Q: What if we are dealing with a directed graph that has negative edges, but let’s say, no negative cycles?

The main insight here is that the algorithm only looks at the edges crossing the frontier and commits to the one with the smallest score. The algorithm does not look ahead, and it never revisits a vertex once it is processed.

Therefore, you can consider the following example (from Stack Overflow)

where Dijkstra would report the shortest-path distance from $A$ to $D$ as 2, but in reality it is $-4900$.
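The same failure shows up on an even smaller graph. The sketch below uses a made-up three-vertex graph (not the Stack Overflow one) and compares a straightforward Dijkstra against Bellman-Ford, which handles negative edges. The function names and the input format are assumptions for illustration.

```python
def dijkstra_dist(graph, s):
    """Straightforward Dijkstra: processed vertices are never revisited."""
    processed, dist = {s}, {s: 0}
    while True:
        frontier = [(dist[v] + length, w)
                    for v in processed
                    for w, length in graph[v]
                    if w not in processed]
        if not frontier:
            return dist
        score, w_star = min(frontier)
        processed.add(w_star)
        dist[w_star] = score

def bellman_ford_dist(graph, s):
    """Bellman-Ford: relax every edge n - 1 times (assumes no negative cycle)."""
    dist = {v: float("inf") for v in graph}
    dist[s] = 0
    for _ in range(len(graph) - 1):
        for v in graph:
            for w, length in graph[v]:
                if dist[v] + length < dist[w]:
                    dist[w] = dist[v] + length
    return dist

# The edge b -> a is negative; there is no cycle at all.
graph = {"s": [("a", 2), ("b", 3)], "a": [], "b": [("a", -2)]}
wrong = dijkstra_dist(graph, "s")
right = bellman_ford_dist(graph, "s")
print(wrong["a"])  # 2: a is finalized before the edge b -> a is ever seen
print(right["a"])  # 1: the path s -> b -> a
```

Dijkstra commits to `a` at distance 2 in its first step, and by the time it sees the edge from `b` to `a`, `a` is already processed.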

Q: is there a case where Dijkstra’s algorithm is still correct even though there are negative edges?

Yes. Consider a directed graph $G$ and a starting vertex $s$ with the following properties: no edges enter the starting vertex $s$; edges that leave $s$ have arbitrary (possibly negative) lengths; and all other edge lengths are nonnegative. Then Dijkstra’s algorithm correctly solves the single-source shortest path problem. You can see this in two ways:

- notice that adding the same positive constant $M$ to each of $s$’s outgoing edges preserves all shortest paths: since no edge enters $s$, every $s \leadsto v$ path uses exactly one edge leaving $s$, so the lengths of all the $s \leadsto v$ paths go up by precisely $M$. Choosing $M$ large enough makes every edge length nonnegative.

- go back to the formal correctness proof of Dijkstra, and realize that the induction step would still work.

Hash Tables and Bloom Filters

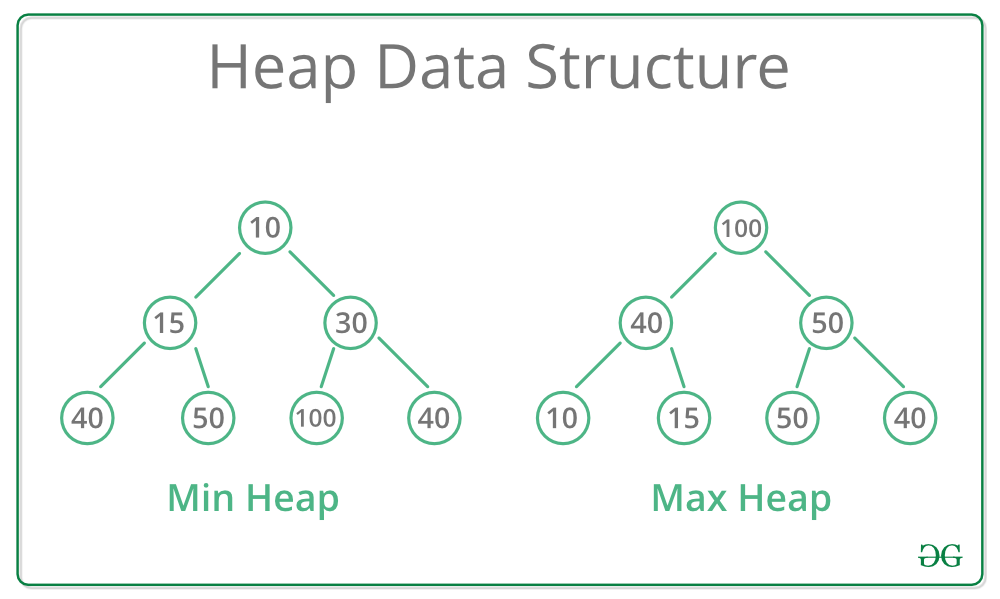

The goal of a hash table is to facilitate super-fast searches, which are also called lookups in this context. Compared to other data structures we will discuss later (e.g. heaps and search trees), hash tables do not maintain any ordering information.

A hash table keeps track of an evolving set of objects with keys while supporting fast lookups (by key).

e.g. if your company manages an e-commerce site, you might use one hash table to keep track of employees (perhaps using names as keys).

Hash Table Operations: a hash table needs to support the following operations:

- insert: given a new object $x$, add it to the hash table

- lookup: given a key $k$, return a pointer to an object in the hash table with key $k$ (or null if no such object exists)

- delete: given a key $k$, remove the object with key $k$ from the hash table, if it exists

(for programming purposes you can just think of the key as a numerical representation of the object (e.g. the memory address of the object); the hash function $h$ then operates on the key, giving $h(k)$)

and to really be a hash table, these operations should run in:

| Operation | Typical/Average Runtime |
|---|---|
| Lookup | O(1)* |
| Insert | O(1) |
| Delete | O(1)* |

where:

- the asterisk (*) indicates that the running time bound holds if and only if the hash table is implemented properly (with a good hash function and an appropriate table size) and the data is non-pathological (see later).

- insert is technically always $O(1)$, not average runtime.

Since this is really for fast lookups, example applications of hash tables include:

- 2-SUM problem: given a target $t$ and a list of integers $A$, find two distinct integers $x,y \in A$ such that $x+y=t$.

  - realize that given an $x$, there is only one possible $y$ that can achieve this, so just look up $t-x$!

- deduplication: given a list of $n$ items, remove all duplicates in $O(n)$ time

  - first check if the hash table already has the key; if not, insert the key and store the object
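Both applications are a few lines each with Python’s built-in `set`, which is itself a hash table. This is a minimal sketch; the function names `two_sum` and `deduplicate` are made up for illustration.

```python
def two_sum(nums, target):
    """Return a pair (x, y) of distinct integers from nums with x + y == target,
    or None if no such pair exists. One pass, one hash lookup per element."""
    seen = set()
    for x in nums:
        y = target - x           # the only value that can pair with x
        if y != x and y in seen:
            return (y, x)
        seen.add(x)
    return None

def deduplicate(items):
    """Return the items in their original order with duplicates removed."""
    seen = set()
    result = []
    for item in items:
        if item not in seen:     # lookup
            seen.add(item)       # insert
            result.append(item)
    return result

pair = two_sum([3, 9, 4, 7, 1], 10)
unique = deduplicate([1, 2, 1, 3, 2])
print(pair)    # (3, 7)
print(unique)  # [1, 2, 3]
```

Each element costs one lookup and at most one insert, so both run in $O(n)$ time on average.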

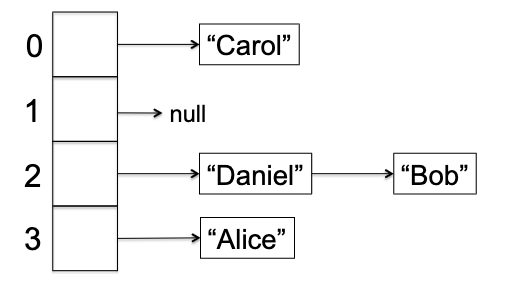

Implementation: Separate Chaining

So how do we get a (near) constant lookup time? There are two popular implementations of hash tables: separate chaining and open addressing. We will first discuss separate chaining.

Visually, this is very easy to understand:

so that:

Separate Chaining: Let there be an array $A$ of $n$ buckets, and for each bucket you have a linked list so that given a key $k$, lookup/insert/delete essentially all operate on the linked list $A[h(k)]$.

- note that this means your hash function needs to map $h: U \to \{0,1,2,…,n-1\}$, where $U$ is the set of all possible objects/keys; this can be achieved, for example, by taking (a numerical representation of) the key modulo $n$.
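Here is a minimal sketch of separate chaining. It makes a few assumptions: Python lists stand in for the linked lists, the built-in `hash` modulo $n$ plays the role of $h$, and the caller never inserts the same key twice (so that insert stays $O(1)$). The class name `ChainedHashTable` is made up.

```python
class ChainedHashTable:
    def __init__(self, num_buckets=8):
        # One chain per bucket; a Python list stands in for a linked list.
        self.buckets = [[] for _ in range(num_buckets)]

    def _index(self, key):
        # h(k): map the key to a bucket in {0, ..., n-1}.
        return hash(key) % len(self.buckets)

    def insert(self, key, value):
        # O(1): append to the chain without scanning it.
        self.buckets[self._index(key)].append((key, value))

    def lookup(self, key):
        # O(length of chain): scan only the chain A[h(k)].
        for k, v in self.buckets[self._index(key)]:
            if k == key:
                return v
        return None

    def delete(self, key):
        # O(length of chain): find the key in its chain and remove it.
        chain = self.buckets[self._index(key)]
        for i, (k, _) in enumerate(chain):
            if k == key:
                del chain[i]
                return True
        return False

table = ChainedHashTable(num_buckets=4)
for name, dept in [("alice", "ops"), ("bob", "dev"), ("carol", "sales")]:
    table.insert(name, dept)
print(table.lookup("bob"))    # dev
print(table.delete("bob"))    # True
print(table.lookup("bob"))    # None
```

Note that every operation only ever touches the one chain `A[h(k)]`, which is why the chain length is the quantity to control.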

But now we get some problems:

- insert is surely constant time

- but lookup and delete technically need:

\[\text{Runtime(lookup/delete)} = O(\text{length of linked list})\]

So our goal is to keep the linked list in each bucket short. First of all, in what cases can we get bad performance?

1. The number of buckets $n$ is too small, so that we have too many collisions.

   - This is easy to fix: just increase $n$. But then you also need to rehash everything.

2. Using a bad hash function (e.g. $h(k) = 0$, a constant function).

   - So then what is a good hash function? One that mimics a random function by spreading non-pathological datasets (see below) roughly evenly across the positions of the hash table.

3. The data is pathological, i.e. it is always possible to adversarially come up with data that all hashes to the same bucket: for every hash function $h: U \to \{0,1,2,…,n-1\}$, there exists a large set $S$ of keys (of size at least $\vert U\vert / n$, by the pigeonhole principle) such that $h(k_1) = h(k_2)$ for every $k_{1}, k_{2} \in S$.

   - This is unfixable. The best we can do is to assume that the data is not pathological.

Takeaway: while 1 and 2 are somewhat avoidable, condition 3 means that hash tables cannot guarantee $O(1)$ for lookup or delete.

However, we can guarantee $O(1)$ average runtime under some conditions (all three of the issues above need to be addressed)

Claim: let there be a dataset $S$. If our hash table has a reasonably big size $\vert S\vert =O(n)$ (condition 1), and $h(x)$ is like a random function (condition 2), and $S$ is not pathological (condition 3), then the average runtime of lookup and delete is $O(1)$.

Proof: since we are discussing average time, the procedure will be similar to the QuickSort analysis. We begin by identifying the random variable we want to analyze, then decompose it into simpler random variables, and finally use linearity of expectation to get the result.

Suppose you have inserted all $s \in S$ into the hash table. Consider a lookup for a new random object $x$ with key $k$, which might or might not be in $S$. Then the runtime is:

\[\mathrm{Runtime} = O(\text{list length of }A[h(k)]) = O(l)\]where $l$ is a random variable, as the list could be long or short. But notice that

\[O(l) = O(\# \text{of collisions $x$ is making})\]This means that:

\[\begin{align*} l \le 1 + \sum\limits_{y \in S, y \neq x} z_{y} \end{align*}\]where $z_y$ is (again) an indicator random variable indicating whether $y$ collides with $x$, and the leading 1 accounts for the case where $x$ itself is already stored in the list (i.e. $x$ is an object of $S$):

\[z_{y} = \begin{cases} 1, & \text{if $h(x)=h(y)$ } \\ 0, & \text{otherwise} \end{cases}, \quad \forall y \in S\]Now that the decomposition is complete, we realize that:

\[\begin{align*} \mathbb{E}[l] &\le \mathbb{E}\left[1 + \sum\limits_{y \in S, y \neq x} z_{y}\right] \\ &= 1 + \sum\limits_{y \in S, y \neq x} \mathbb{E}[z_{y}] \\ &= 1 + \sum\limits_{y \in S, y \neq x} \Pr[h(x)=h(y)] \end{align*}\]But we realize that if $h$ is a perfectly random hash function, then $\Pr[h(x)=h(y)]$ given an $x$ is like throwing the $y$ dart randomly at the $n$ buckets, and hoping that it lands on the same bucket as $x$. Therefore, the probability is $1/n$. Hence we get:

\[\begin{align*} \mathbb{E}[l] &\le 1 + \sum\limits_{y \in S, y \neq x} \Pr[h(x)=h(y)] \\ &= 1 + \sum\limits_{y \in S, y \neq x} \frac{1}{n} \\ &= 1 + \frac{|S|-1}{n} \\ &= O(1) \end{align*}\]where the last equality is because we had said $\vert S\vert =O(n)$, i.e. our hash table is reasonably big.
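As a sanity check on the $\frac{\vert S\vert - 1}{n}$ term, we can simulate a perfectly random hash function by throwing each key into a uniformly random bucket. This is only a sketch under that modeling assumption; `average_collisions`, the sizes, and the seed are arbitrary choices for illustration.

```python
import random

def average_collisions(num_keys, num_buckets, trials=2000, seed=0):
    """Estimate the expected number of keys of S that land in the same
    bucket as a new key x, with h modeled as a uniformly random function."""
    rng = random.Random(seed)
    total = 0
    for _ in range(trials):
        bucket_of_x = rng.randrange(num_buckets)
        # Each key y in S collides with x with probability 1 / num_buckets.
        total += sum(1 for _ in range(num_keys)
                     if rng.randrange(num_buckets) == bucket_of_x)
    return total / trials

estimate = average_collisions(num_keys=200, num_buckets=100)
print(estimate)  # should be close to |S| / n = 2
```

Doubling the number of buckets should roughly halve the estimate, which is the "reasonably big table" condition at work.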

Implementation: Open Addressing

Why did we use linked lists before (i.e. separate chaining)? In the end, it was to resolve collisions by letting the colliding objects live in the same bucket. Note that collisions are inevitable, since the table has a fixed number of buckets while the set of possible keys is huge.

The idea of open addressing is, instead of letting the collision live in the same bucket, we will try to find another bucket for the colliding object, assuming $n \ge \vert S\vert$. In this implementation, each bucket stores only 0 or 1 object.

Open Addressing: given an object $x$ with key $k$, its preferred position is $h(k)$. If bucket $h(k)$ is occupied, we follow some probe sequence (i.e. a sequence of buckets to check).

- so for insert, we continue this sequence until the first empty bucket, and insert the object there

- for lookup, we continue this sequence until we find the bucket storing $x$, or an empty bucket (return not found)

- we will not support delete.

So the trick is this “probe sequence”. One simple choice is linear probing: $h(k), h(k)+1, …, n-1, 0, 1, …, h(k)-1$ (i.e. wrap around at the end of the array).
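Here is a minimal sketch of open addressing with linear probing, supporting only insert and lookup as described above. The class name `LinearProbingTable` is made up, and the built-in `hash` modulo $n$ stands in for $h$. Small integer keys are used in the example because Python hashes them to themselves, which makes the collisions easy to follow.

```python
class LinearProbingTable:
    def __init__(self, num_buckets=8):
        # Each slot holds at most one (key, value) pair, or None if empty.
        self.slots = [None] * num_buckets

    def _probe(self, key):
        # Probe sequence: h(k), h(k)+1, ..., wrapping around to h(k)-1.
        start = hash(key) % len(self.slots)
        for offset in range(len(self.slots)):
            yield (start + offset) % len(self.slots)

    def insert(self, key, value):
        # Walk the probe sequence until the first empty slot (or the same key).
        for i in self._probe(key):
            if self.slots[i] is None or self.slots[i][0] == key:
                self.slots[i] = (key, value)
                return
        raise RuntimeError("hash table is full")

    def lookup(self, key):
        # Walk the probe sequence until we find the key or hit an empty slot.
        for i in self._probe(key):
            if self.slots[i] is None:
                return None
            if self.slots[i][0] == key:
                return self.slots[i][1]
        return None

table = LinearProbingTable(num_buckets=8)
# Keys 1, 9 and 17 all hash to bucket 1, so they occupy slots 1, 2 and 3.
table.insert(1, "a")
table.insert(9, "b")
table.insert(17, "c")
print(table.lookup(9))    # b
print(table.lookup(25))   # None (probes slots 1, 2, 3, then hits empty slot 4)
```

Stopping a lookup at the first empty slot is only safe because nothing is ever deleted, which is one reason delete is left out here.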

We skip other details here, but discuss its performance compared to separate chaining.

- with chaining, the lookup time is affected by the linked list length; with open addressing, it’s the number of probes required to either hit an empty slot or find the object.

- in both implementations, when the hash table gets increasingly full, performance degrades.

- however, with an appropriate hash table size and hash function, open addressing achieves the same running time bound as chaining.

Bloom Filters

Bloom filters have become a very popular data structure since their use in Internet routers. They are based on a very similar idea to hash tables, but:

- it is more space efficient

- it can only return `true`/`false` for lookup (i.e. whether the object is in the set or not)

- it can return false positives (i.e. even if the object is not in the set, it might return `true`)

- it guarantees constant-time operations for every dataset.

Bloom Filter Supported Operations: a bloom filter supports the following operations:

- lookup: given a key $k$, return `true` if $k$ is in the bloom filter, and `false` if $k$ is not in the bloom filter

- insert: add a new key $k$ to the bloom filter (since we are only returning `true`/`false` for lookup, we don’t need to store the key/object itself)

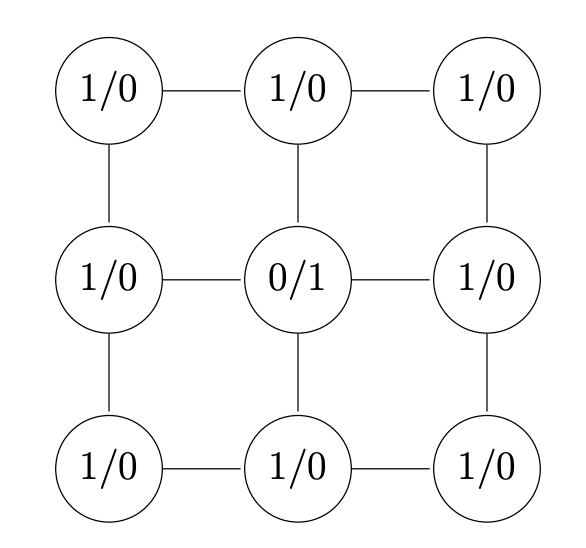

The idea of a Bloom filter is simple. Consider an array of $n$ bits, all initially zero. The data structure uses $m$ hash functions $h_1, h_{2}, …, h_m$, each mapping $h_{i} : U \to \{ 0,1,2, … , n-1 \}$.

- you can create $m$ hash functions *using one real hash function $h_{*}$*, by doing $h_{1}(k) = m \cdot h_{*}(k), h_{2}(k) = m \cdot h_{*}(k) + 1$, etc.

- typically $m$ is quite small, like $m=5$.

Then the operations are simply:

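    # A is the array of n bits (all initially 0); h is the list of m hash functions
    def insert(k):
        # set every bit A[h_i(k)] to 1
        for i in range(m):
            A[h[i](k)] = 1

    def lookup(k):
        # report True only if every bit A[h_i(k)] is 1
        for i in range(m):
            if A[h[i](k)] == 0:
                return False
        return True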
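A self-contained version of the two operations might look like the sketch below. How the $m$ hash functions are obtained is an assumption made here for illustration: each one is SHA-256 applied to the key prefixed with the index $i$, which is not the construction mentioned above. The class name `BloomFilter` and the parameter defaults are also made up.

```python
import hashlib

class BloomFilter:
    def __init__(self, num_bits=64, num_hashes=5):
        self.num_bits = num_bits        # n
        self.num_hashes = num_hashes    # m
        self.bits = [0] * num_bits      # the array A, all zeros initially

    def _positions(self, key):
        # Assumed construction: h_i(k) = SHA-256("i:k") mod n.
        for i in range(self.num_hashes):
            digest = hashlib.sha256(f"{i}:{key}".encode()).digest()
            yield int.from_bytes(digest[:8], "big") % self.num_bits

    def insert(self, key):
        # Set all m bits A[h_i(k)] to 1; the key itself is never stored.
        for pos in self._positions(key):
            self.bits[pos] = 1

    def lookup(self, key):
        # True only if all m bits are set. A key that was never inserted
        # can still return True if other keys happened to set its bits.
        return all(self.bits[pos] == 1 for pos in self._positions(key))

bloom = BloomFilter()
bloom.insert("alice")
bloom.insert("bob")
print(bloom.lookup("alice"))  # True
print(bloom.lookup("bob"))    # True
print(bloom.lookup("carol"))  # usually False, but a false positive is possible
```

An inserted key always looks up as `True` because bits are only ever set, never cleared, so there are no false negatives.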

Visually:

hence it is obvious that: